Sensing the Inside Out: An Embodied Perspective on Digital Animation Through Motion Capture and Wearables

Abstract

1. Introduction

2. The Embodied Perspective in Animation

3. Embodied Character Design

3.1. Nonverbal Behavior and Communication Definitions and Types

3.1.1. Cues vs. Signals

3.1.2. Nonverbal Communication Types

4. Character Personality as a Response Mechanism

4.1. Definitions

4.2. Personality Traits Through Nonverbal Reactions

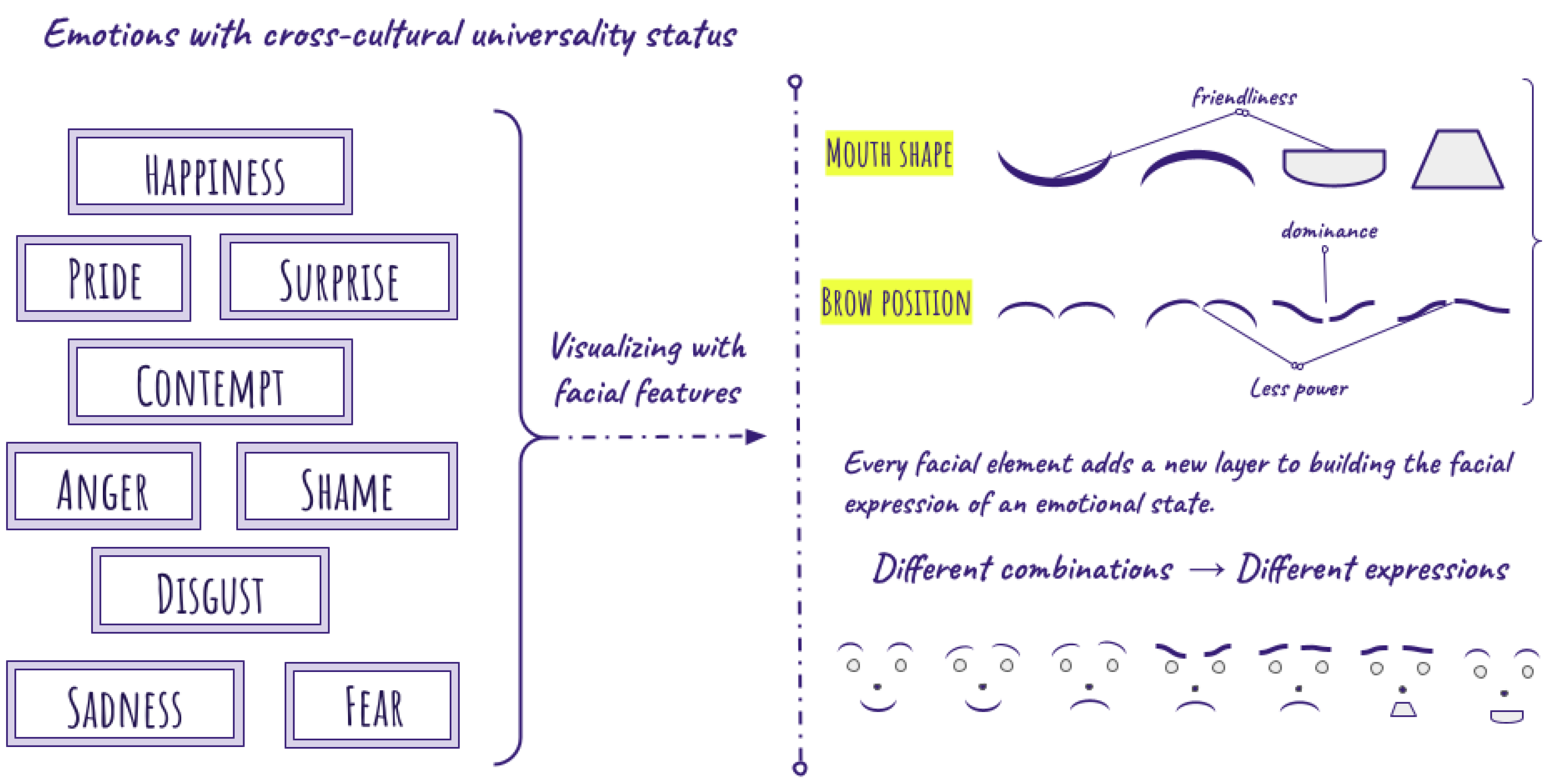

4.2.1. Facial Expressions

4.2.2. Eye Gaze

4.2.3. Body Language → Movement → Position-Posture-Gestures

Movement Analysis and LMA

Movement Qualities

Position and Posture

4.2.4. Gestures

4.2.5. Paralinguistics

4.2.6. Haptics

4.2.7. Appearance

5. Sensor-Based Animation

- Multisensory integration and egocentric frames of reference;

- Proprioception, position sense, and the perception of limb movement;

- Visual capture and visual processing of the human body;

- Motor systems: planning, preparation, and execution of motor schemas.

- Motion Capture and Motion Sensing: Acted sensing by the performer (the actor acts as if they feel or react and their behavior is captured);

- Biosensors: Captured sensing of the performer (the actor’s biosignals are captured as indices of what the performer, and therefore the animated character, feels);

- Virtual sensors in Agents: Movement-generation-programmed sensing (agents are programmed through AI to act as if they feel a stimulus);

- Physical sensors (morphological computing).

5.1. Motion Capture and Motion Sensing

5.2. Biosensors: Visualising the Inside Out

5.3. Virtual Sensors in Agents (Autonomous Digital Characters)

5.4. Morphological Computation

6. Integrating Sensors in Animation Workflow

6.1. Sensor-Based Emotion Capture in Animation

6.2. Contextual and Narrative Integration of Biosignals

6.3. Challenges and Considerations in Sensor-Driven Animation

- Noise and Variability: Biosignals are inherently noisy and sensitive to movement artifacts, making real-time animation challenging [115]. Filtering techniques and machine learning models must be implemented to distinguish genuine emotional signals from environmental interference.

- Individual Differences: Emotional responses and baseline biosignals vary across individuals, meaning that one-size-fits-all emotion models are ineffective [126]. Instead, personalised calibration may be required to adapt the animation system to each performer’s unique physiological patterns.

- Interpretation Complexity: Unlike direct motion capture, biosignals do not correspond to explicit movements or expressions, making their integration into animation workflows less straightforward. Instead of focusing on emotion detection, the emphasis should be on using biosignals as modulation parameters that influence motion curves, shaders, and secondary animations dynamically.
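The three considerations above can be illustrated with a minimal Python sketch. It assumes a one-dimensional biosignal stream (for example, skin conductance) sampled at a fixed rate. The function names `smooth`, `calibrate`, and `modulation`, the smoothing factor, the clamping range, and the sample values are all illustrative assumptions, not part of the workflow described in this article. An exponential moving average stands in for the filtering step, a resting-state recording provides the per-performer baseline, and the output is a 0 to 1 modulation parameter rather than an emotion label.

```python
from statistics import mean, stdev


def smooth(samples, alpha=0.2):
    """Exponential moving average: a simple low-pass filter for a noisy signal."""
    if not samples:
        return []
    out = [samples[0]]
    for x in samples[1:]:
        # Blend the new sample with the previous smoothed value.
        out.append(alpha * x + (1 - alpha) * out[-1])
    return out


def calibrate(baseline_samples):
    """Return (mean, std) of one performer's resting-state recording."""
    mu = mean(baseline_samples)
    sigma = stdev(baseline_samples) if len(baseline_samples) > 1 else 0.0
    # Fall back to 1.0 so a flat baseline does not cause division by zero.
    return mu, sigma or 1.0


def modulation(value, baseline, max_z=3.0):
    """Map a sample to a 0..1 parameter relative to the performer's baseline."""
    mu, sigma = baseline
    z = (value - mu) / sigma
    z = max(-max_z, min(max_z, z))      # clamp outliers
    return (z + max_z) / (2 * max_z)    # rescale [-max_z, max_z] to [0, 1]


# Hypothetical values, for illustration only.
rest = [0.50, 0.52, 0.49, 0.51, 0.50]   # resting recording used for calibration
live = [0.50, 0.90, 0.55, 0.58, 0.60]   # live recording with one spike
baseline = calibrate(rest)
params = [modulation(v, baseline) for v in smooth(live)]
```

Each value in `params` could then scale, for instance, the amplitude of a breathing cycle or the intensity of a shader. A production system would likely need stronger artifact rejection than a single low-pass filter.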

7. Proposed Workflow and Discussion

7.1. Methodology: A Sensor-Based Approach to Animation

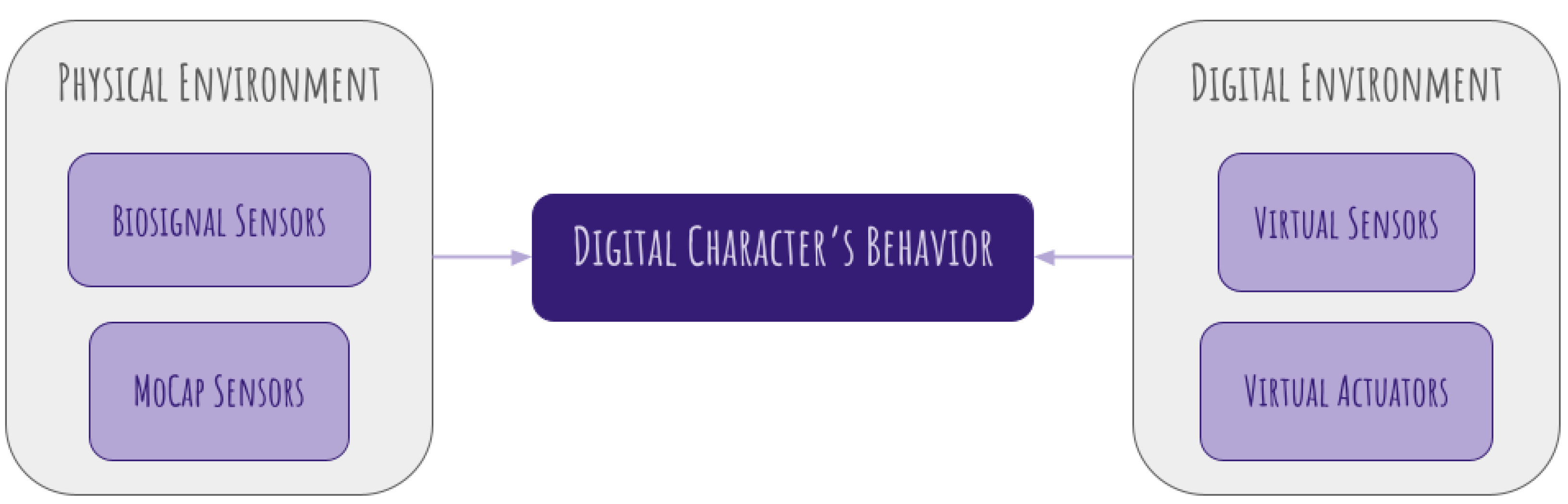

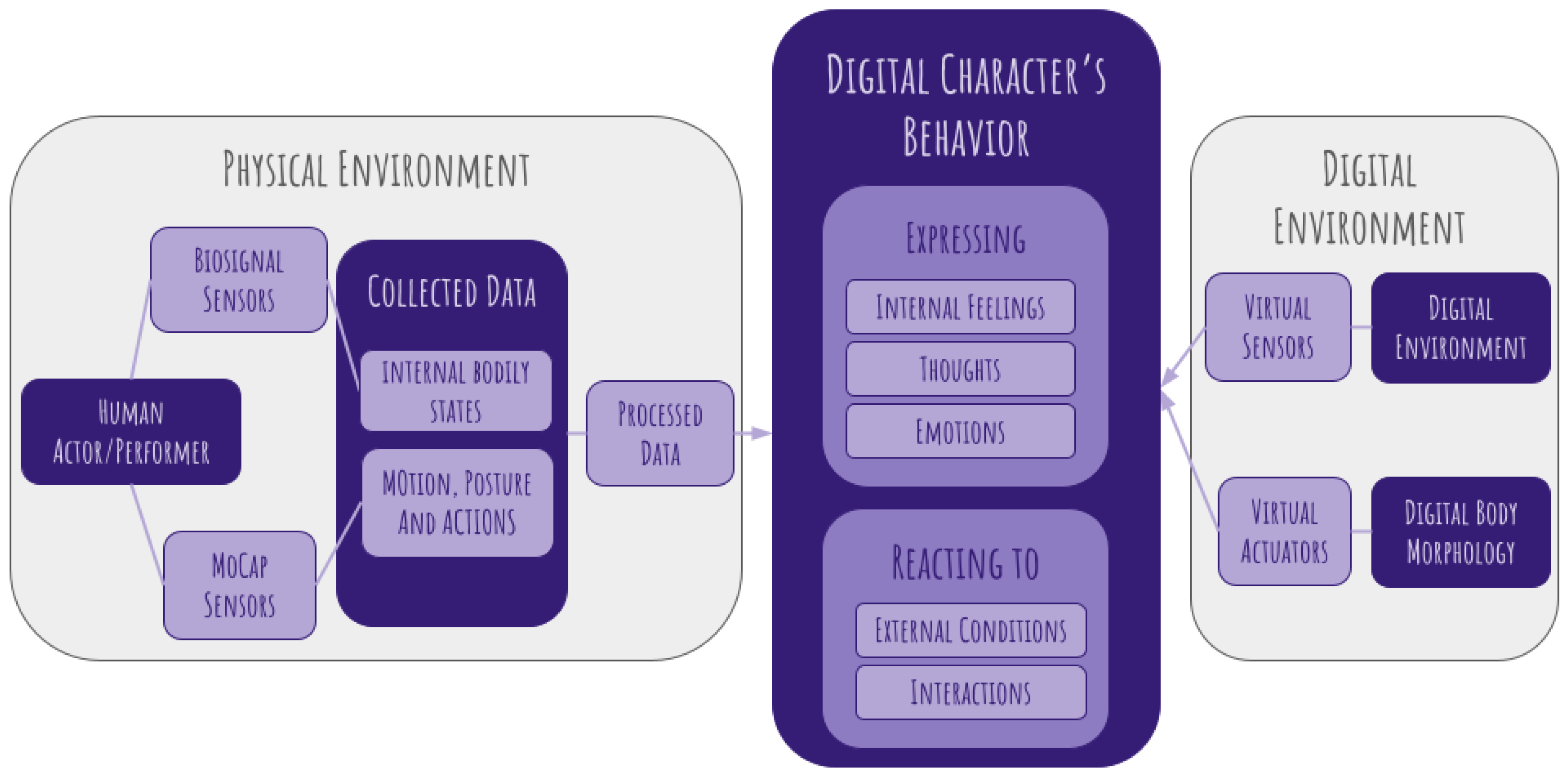

- Physical Environment: A human performer is equipped with biosignal sensors (e.g., EMG, HRV, and GSR) and motion capture sensors through wearables to track both internal bodily states and external movements. This combination allows for the real-time extraction of physiological and kinematic data, which are processed and used to create the digital character’s behavior.

- Digital Character Integration: The collected data inform digital character behavior:

  - Characters express internal states such as emotions and thoughts based on biosignal data.

  - Characters react to external conditions, dynamically adjusting responses within the space of the digital environment.

- Digital Environment Interaction: Virtual sensors and actuators allow the character to perceive and modify its digital surroundings. The digital body morphology adapts based on sensor inputs, ensuring a cohesive interaction between the character and its environment.
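One way to picture the data flow between these three components is the following Python sketch. It is an assumption-laden illustration, not the authors' implementation: the class names (`PerformerFrame`, `Environment`, `CharacterState`), the field names, the breathing-rate range, and the `personal_space` threshold are all hypothetical. The sketch assumes the biosignal has already been reduced to a single arousal value between 0 and 1 and that a virtual sensor reports the distance to the nearest obstacle.

```python
from dataclasses import dataclass, field


@dataclass
class PerformerFrame:
    """One time step of data from the physical environment (hypothetical schema)."""
    joint_rotations: dict   # motion capture: joint name -> (x, y, z) angles in degrees
    arousal: float          # biosignal-derived value, assumed normalised to 0..1


@dataclass
class Environment:
    """What the character's virtual sensor reports about the digital environment."""
    obstacle_distance: float  # metres to the nearest obstacle


@dataclass
class CharacterState:
    """Parameters handed to the animation layer for one frame."""
    pose: dict = field(default_factory=dict)
    breathing_rate: float = 0.0     # breaths per minute
    posture_openness: float = 1.0   # 1.0 = fully open, 0.0 = fully closed


def update_character(frame, env, rest_breaths=12.0, max_breaths=30.0,
                     personal_space=1.0):
    """Combine performer data and environment sensing into one character state."""
    state = CharacterState()
    # External movement: the captured pose is applied directly.
    state.pose = dict(frame.joint_rotations)
    # Internal state: arousal modulates a secondary animation (breathing).
    arousal = min(1.0, max(0.0, frame.arousal))
    state.breathing_rate = rest_breaths + arousal * (max_breaths - rest_breaths)
    # Environment: obstacles inside personal space close the posture.
    closeness = max(0.0, 1.0 - env.obstacle_distance / personal_space)
    state.posture_openness = 1.0 - closeness
    return state


# Hypothetical single frame, for illustration only.
frame = PerformerFrame(joint_rotations={"spine": (5.0, 0.0, 0.0)}, arousal=0.5)
state = update_character(frame, Environment(obstacle_distance=0.5))
```

Keeping the performer data, the environment reading, and the resulting character state as separate structures mirrors the separation between physical environment, digital character, and digital environment described above.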

7.2. Discussion: Implications and Challenges

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Correction Statement

Abbreviations

| Abbreviation | Definition |
|---|---|
| AR | Augmented Reality |
| BESS | Body Effort Shape System |
| CGI | Computer-generated Imagery |
| ECG | Electrocardiogram |
| LMA | Laban Movement Analysis |
| VR | Virtual Reality |

References

- Kilteni, K.; Groten, R.; Slater, M. The sense of embodiment in virtual reality. Presence Teleoperators Virtual Environ. 2012, 21, 373–387.

- Otono, R.; Shikanai, Y.; Nakano, K.; Isoyama, N.; Uchiyama, H.; Kiyokawa, K. The Proteus Effect in Augmented Reality: Impact of Avatar Age and User Perspective on Walking Behaviors; The Virtual Reality Society of Japan: Tokyo, Japan, 2021.

- Oberdörfer, S.; Birnstiel, S.; Latoschik, M.E. Proteus effect or bodily affordance? The influence of virtual high-heels on gait behavior. Virtual Real. 2024, 28, 81.

- Merleau-Ponty, M.; Landes, D.; Carman, T.; Lefort, C. Phenomenology of Perception; Routledge: Abingdon, UK, 2013.

- Clark, A. Re-inventing ourselves: The plasticity of embodiment, sensing, and mind. J. Med. Philos. 2007, 32, 263–282.

- Johnston, O.; Thomas, F. The Illusion of Life: Disney Animation; Disney Editions New York: New York, NY, USA, 1981.

- Lakoff, G.; Johnson, M.; Sowa, J.F. Review of Philosophy in the Flesh: The embodied mind and its challenge to Western thought. Comput. Linguist. 1999, 25, 631–634.

- Glenberg, A.M. Embodiment as a unifying perspective for psychology. Wiley Interdiscip. Rev. Cogn. Sci. 2010, 1, 586–596.

- Smith, L.B. Cognition as a dynamic system: Principles from embodiment. Dev. Rev. 2005, 25, 278–298.

- Pustejovsky, J.; Krishnaswamy, N. Embodied human computer interaction. KI-Künstliche Intell. 2021, 35, 307–327.

- Serim, B.; Spapé, M.; Jacucci, G. Revisiting embodiment for brain–computer interfaces. Hum.–Comput. Interact. 2024, 39, 417–443.

- Kiverstein, J. The meaning of embodiment. Top. Cogn. Sci. 2012, 4, 740–758.

- Müller, U.; Newman, J.L. The body in action: Perspectives on embodiment and development. In Developmental Perspectives on Embodiment and Consciousness; Lawrence Erlbaum Associates: London, UK, 2008; pp. 313–342.

- Lakoff, G.; Johnson, M. Metaphors We Live by; University of Chicago Press: Chicago, IL, USA, 2008.

- Shiratori, T.; Mahler, M.; Trezevant, W.; Hodgins, J.K. Expressing animated performances through puppeteering. In Proceedings of the 2013 IEEE Symposium on 3D User Interfaces (3DUI), Orlando, FL, USA, 16–17 March 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 59–66.

- Mou, T.Y. Keyframe or motion capture? Reflections on education of character animation. EURASIA J. Math. Sci. Technol. Educ. 2018, 14, em1649.

- Sultana, N.; Peng, L.Y.; Meissner, N. Exploring believable character animation based on principles of animation and acting. In Proceedings of the 2013 International Conference on Informatics and Creative Multimedia, Kuala Lumpur, Malaysia, 4–6 September 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 321–324.

- Sheets-Johnstone, M. Embodied minds or mindful bodies? A question of fundamental, inherently inter-related aspects of animation. Subjectivity 2011, 4, 451–466.

- Thalmann, D. Physical, behavioral, and sensor-based animation. In Proceedings of the Graphicon 96, St. Petersburg, Russia, 1–5 July 1996; pp. 214–221.

- De Koning, B.B.; Tabbers, H.K. Facilitating understanding of movements in dynamic visualizations: An embodied perspective. Educ. Psychol. Rev. 2011, 23, 501–521.

- Mehrabian, A. Silent Messages; Wadsworth: Belmont, CA, USA, 1971; Volume 8.

- Mehrabian, A. Nonverbal Communication, 3rd ed.; Aldine Transaction: New Brunswick, NJ, USA, 2009.

- Birdwhistell, R.L. Introduction to Kinesics: (An Annotation System for Analysis of Body Motion and Gesture); Department of State, Foreign Service Institute: Washington, DC, USA, 1952.

- Hasson, O. Towards a general theory of biological signaling. J. Theor. Biol. 1997, 185, 139–156.

- American Psychological Association. APA Dictionary of Psychology; American Psychological Association: Washington, DC, USA, 2007.

- Wikimedia Foundation. Nonverbal Communication. 2024. Available online: https://en.wikipedia.org/wiki/Nonverbal_communication (accessed on 14 May 2024).

- Frank, M.G. Facial Expressions. Int. Encycl. Soc. Behav. Sci. 2001, 5230–5234.

- Gesture|English Meaning-Cambridge Dictionary. Available online: https://dictionary.cambridge.org/dictionary/english/gesture (accessed on 14 August 2024).

- Nordquist, R. What Is Paralinguistics? (Paralanguage). 2019. Available online: https://www.thoughtco.com/paralinguistics-paralanguage-term-1691568 (accessed on 6 June 2024).

- Battle, D.E. Communication Disorders in a Multicultural and Global Society. In Communication Disorders in Multicultural and International Populations; Mosby: St. Louis, MO, USA, 2012; pp. 1–19.

- Cherry, K. What Are the 9 Types of Nonverbal Communication? 2023. Available online: https://www.verywellmind.com/types-of-nonverbal-communication-2795397 (accessed on 14 August 2024).

- Hinde, R.A.; Royal Society (Great Britain) (Eds.) Non-Verbal Communication; Cambridge University Press: Cambridge, UK, 1972.

- Parmar, D.; Olafsson, S.; Utami, D.; Murali, P.; Bickmore, T. Designing empathic virtual agents: Manipulating animation, voice, rendering, and empathy to create persuasive agents. Auton. Agents Multi-Agent Syst. 2022, 36, 17.

- Ekman, P. Facial Expression and Emotion. Am. Psychol. 1993, 48, 384.

- Wiggins, J.S.; Trapnell, P.; Phillips, N. Psychometric and Geometric Characteristics of the Revised Interpersonal Adjective Scales (IAS-R). Multivar. Behav. Res. 1988, 23, 517–530.

- Waude, A. Five-Factor Model of Personality. 2017. Available online: https://www.psychologistworld.com/personality/five-factor-model-big-five-personality (accessed on 6 August 2024).

- Korn, O.; Stamm, L.; Moeckl, G. Designing authentic emotions for non-human characters: A study evaluating virtual affective behavior. In Proceedings of the 2017 Conference on Designing Interactive Systems, Edinburgh, UK, 10–14 June 2017; pp. 477–487.

- Pervin, L.A.; John, O.P. Handbook of Personality: Theory and Research, 2nd ed.; Guilford Press: New York, NY, USA, 1999.

- Bergner, R.M. What Is Personality? Two Myths and a Definition. New Ideas Psychol. 2020, 57, 100759.

- Hoffner, C.; Cantor, J. Perceiving and responding to mass media characters. In Responding to the Screen; Routledge: Abingdon, UK, 2013; pp. 63–101.

- Field, S. Screenplay: The Foundations of Screenwriting; Delta: London, UK, 2005.

- Laurel, B. Computers as Theater; Addison-Wesley: Reading, MA, USA, 1993.

- Higgins, T.E.; Scholer, A.A. When Is Personality Revealed? A Motivated Cognition Approach. In Advances in Experimental Social Psychology; The Guilford Press: New York, NY, USA, 2008.

- Mehrabian, A.; Ferris, S.R. Inference of Attitudes from Nonverbal Communication in Two Channels. J. Consult. Psychol. 1967, 31, 248.

- Allan, P. Body Language: How to Read Others’ Thoughts by Their Gestures; Sheldon Press: London, UK, 1995.

- Darwin, C. The Expression of the Emotions in Man and Animals; Murray: London, UK, 1872.

- Knutson, B. Facial Expressions of Emotion Influence Interpersonal Trait Inferences. J. Nonverbal Behav. 1996, 20, 165–182.

- Shariff, A.F.; Tracy, J.L. What Are Emotion Expressions For? Curr. Dir. Psychol. Sci. 2011, 20, 395–399.

- Tracy, J.L.; Randles, D.; Steckler, C.M. The Nonverbal Communication of Emotions. Curr. Opin. Behav. Sci. 2015, 3, 25–30.

- Keating, C.F.; Mazur, A.; Segall, M.H. Facial Gestures Which Influence the Perception of Status. Sociometry 1977, 40, 374–378.

- Keating, C.F.; Mazur, A.; Segall, M.H.; Cysneiros, P.G.; Kilbride, J.E.; Leahy, P.; Wirsing, R. Culture and the Perception of Social Dominance from Facial Expression. J. Personal. Soc. Psychol. 1981, 40, 615.

- Matsumoto, D.; Kudoh, T. American-Japanese Cultural Differences in Attributions of Personality Based on Smiles. J. Nonverbal Behav. 1993, 17, 231–243.

- Poggi, I.; Pelachaud, C. Emotional Meaning and Expression in Animated Faces. In Affective Interactions; Springer: Berlin/Heidelberg, Germany, 1999; pp. 182–195.

- Ellyson, S.L.; Dovidio, J.F.; Fehr, B.J. Visual Behavior and Dominance in Women and Men. In Gender and Nonverbal Behavior; Mayo, C., Henley, N.M., Eds.; Springer: New York, NY, USA, 1981; pp. 63–81.

- Emery, N.J. The Eyes Have It: The Neuroethology, Function and Evolution of Social Gaze. Neurosci. Biobehav. Rev. 2000, 24, 581–604.

- Argyle, M.; Dean, J. Eye-Contact, Distance and Affiliation. Sociometry 1965, 28, 289–304.

- Georgescu, A.L.; Kuzmanovic, B.; Schilbach, L.; Tepest, R.; Kulbida, R.; Bente, G.; Vogeley, K. Neural Correlates of “Social Gaze” Processing in High-Functioning Autism under Systematic Variation of Gaze Duration. NeuroImage Clin. 2013, 3, 340–351.

- Efran, J.S.; Broughton, A. Effect of Expectancies for Social Approval on Visual Behavior. J. Personal. Soc. Psychol. 1966, 4, 103–107.

- Efran, J.S. Looking for Approval: Effects on Visual Behavior of Approbation from Persons Differing in Importance. J. Personal. Soc. Psychol. 1968, 10, 21–25.

- Ho, S.; Foulsham, T.; Kingstone, A. Speaking and Listening with the Eyes: Gaze Signaling During Dyadic Interactions. PLoS ONE 2015, 10, e0136905.

- Laban, R.; Lawrence, F. Effort; MacDonald and Evans: London, UK, 1947.

- Bartenieff, I.; Lewis, D. Body Movement: Coping with the Environment; Routledge: London, UK, 2013.

- Alaoui, S.F.; Carlson, K.; Cuykendall, S.; Bradley, K.; Studd, K.; Schiphorst, T. How Do Experts Observe Movement? In Proceedings of the 2nd International Workshop on Movement and Computing, MOCO’15, New York, NY, USA, 14–15 August 2015; pp. 84–91.

- Hackney, P. Making Connections: Total Body Integration Through Bartenieff Fundamentals; Routledge: London, UK, 2003.

- Wahl, C. Laban/Bartenieff Movement Studies: Contemporary Applications; Human Kinetics: Champaign, IL, USA, 2019.

- Davis, J. Laban Movement Analysis: A Key to Individualizing Children’s Dance. J. Phys. Educ. Recreat. Danc. 1995, 66, 31–33.

- Hankin, T. Laban Movement Analysis: In Dance Education. J. Phys. Educ. Recreat. Danc. 1984, 55, 65–67.

- Bacula, A.; LaViers, A. Character synthesis of ballet archetypes on robots using laban movement analysis: Comparison between a humanoid and an aerial robot platform with lay and expert observation. Int. J. Soc. Robot. 2021, 13, 1047–1062.

- Kougioumtzian, L.; El Raheb, K.; Katifori, A.; Roussou, M. Blazing fire or breezy wind? A story-driven playful experience for annotating dance movement. Front. Comput. Sci. 2022, 4, 957274.

- Bishko, L. Animation Principles and Laban Movement Analysis: Movement Frameworks for Creating Empathic Character Performances. In Nonverbal Communication in Virtual Worlds; Tanenbaum, J.T., El-Nasr, M.S., Nixon, M., Eds.; ETC Press: Pittsburgh, PA, USA, 2014; pp. 177–203.

- Blom, L.A.; Chaplin, L.T. The Intimate Act of Choreography; University of Pittsburgh Press: Pittsburgh, PA, USA, 1982.

- Alaoui, S.F.; Caramiaux, B.; Serrano, M.; Bevilacqua, F. Movement Qualities as Interaction Modality. In Proceedings of the Designing Interactive Systems Conference, DIS’12, Newcastle Upon Tyne, UK, 11–15 June 2012.

- Newlove, J.; Dalby, J. Laban for All; Taylor & Francis: New York, NY, USA, 2004.

- Camurri, A.; Volpe, G.; Piana, S.; Mancini, M.; Niewiadomski, R.; Ferrari, N.; Canepa, C. The Dancer in the Eye: Towards a Multi-Layered Computational Framework of Qualities in Movement. In Proceedings of the MOCO’16: 3rd International Symposium on Movement and Computing, Thessaloniki, Greece, 5–6 July 2016.

- Barakova, E.I.; van Berkel, R.; Hiah, L.; Teh, Y.F.; Werts, C. Observation Scheme for Interaction with Embodied Intelligent Agents Based on Laban Notation. In Proceedings of the 2015 IEEE International Conference on Robotics and Biomimetics, Zhuhai, China, 6–9 December 2015.

- Melzer, A.; Shafir, T.; Tsachor, R.P. How do we recognise emotion from movement? Specific motor components contribute to the recognition of each emotion. Front. Psychol. 2019, 10, 392097.

- Shafir, T. Modeling Emotion Perception from Body Movements for Human-Machine Interactions Using Laban Movement Analysis. In Modeling Visual Aesthetics, Emotion, and Artistic Style; Springer: Berlin/Heidelberg, Germany, 2023; pp. 313–330.

- Guo, W.; Craig, O.; Difato, T.; Oliverio, J.; Santoso, M.; Sonke, J.; Barmpoutis, A. AI-Driven human motion classification and analysis using laban movement system. In Proceedings of the International Conference on Human-Computer Interaction, Virtual, 26 June–1 July 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 201–210.

- Dash, B.; Davis, K. Significance of Nonverbal Communication and Paralinguistic Features in Communication: A Critical Analysis. Int. J. Innov. Res. Multidiscip. Field 2022, 8, 172–179.

- Ball, G.; Breese, J. Relating Personality and Behavior: Posture and Gestures. In Affective Interactions; Paiva, A., Ed.; Springer: Berlin/Heidelberg, Germany, 2000; pp. 196–203.

- Pollick, F.E.; Paterson, H.M.; Bruderlin, A.; Sanford, A.J. Perceiving Affect from Arm Movement. Cognition 2001, 82, B51–B61.

- Castellano, G.; Villalba, S.D.; Camurri, A. Recognising Human Emotions from Body Movement and Gesture Dynamics. In Proceedings of the International Conference on Affective Computing and Intelligent Interaction, Lisbon, Portugal, 12–14 September 2007; pp. 71–82.

- Ziegelmaier, R.S.; Correia, W.; Teixeira, J.M.; Simões, F.P. Components of the LMA as a Design Tool for Expressive Movement and Gesture Construction. In Proceedings of the 2020 22nd Symposium on Virtual and Augmented Reality (SVR), Porto de Galinhas, Brazil, 7–10 November 2020.

- Novick, D.; Gris, I. Building Rapport Between Human and ECA: A Pilot Study. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2014; Volume 8511 LNCS (PART 2), pp. 472–480.

- Brixey, J.; Novick, D. Building Rapport with Extraverted and Introverted Agents: 8th International Workshop on Spoken Dialog Systems; Springer: Berlin/Heidelberg, Germany, 2019.

- Hall, J.A.; Coats, E.J.; LeBeau, L.S. Nonverbal Behavior and the Vertical Dimension of Social Relations: A Meta-Analysis. Psychol. Bull. 2005, 131, 898–924.

- Chopik, W.J.; Edelstein, R.S.; van Anders, S.M.; Wardecker, B.M.; Shipman, E.L.; Samples-Steele, C.R. Too Close for Comfort? Adult Attachment and Cuddling in Romantic and Parent–Child Relationships. Personal. Individ. Differ. 2014, 69, 212–216.

- Simão, C.; Seibt, B. Friendly Touch Increases Gratitude by Inducing Communal Feelings. Front. Psychol. 2015, 6, 815.

- Sekerdej, M.; Simão, C.; Waldzus, S.; Brito, R. Keeping in Touch with Context: Non-Verbal Behavior as a Manifestation of Communality and Dominance. J. Nonverbal Behav. 2018, 42, 311–326.

- Cherry, K. Color Psychology: Does It Affect How You Feel? 2024. Available online: https://www.verywellmind.com/color-psychology-2795824 (accessed on 14 May 2024).

- Giummarra, M.J.; Gibson, S.J.; Georgiou-Karistianis, N.; Bradshaw, J.L. Mechanisms underlying embodiment, disembodiment and loss of embodiment. Neurosci. Biobehav. Rev. 2008, 32, 143–160.

- Vicon Motion Systems. Vicon Motion Capture System. 2024. Available online: https://www.vicon.com (accessed on 14 February 2024).

- OptiTrack. OptiTrack Motion Capture System. 2024. Available online: https://www.optitrack.com (accessed on 14 February 2024).

- Qualisys Motion Capture. Qualisys Motion Capture System. 2024. Available online: https://www.qualisys.com (accessed on 14 February 2024).

- Xsens Technologies B.V. Xsens Motion Capture System. 2024. Available online: https://www.xsens.com (accessed on 14 February 2024).

- Rokoko Electronics. Rokoko Motion Capture System. 2024. Available online: https://www.rokoko.com (accessed on 14 February 2024).

- Cannavò, A.; Bottino, F.; Lamberti, F. Supporting motion-capture acting with collaborative Mixed Reality. Comput. Graph. 2024, 124, 104090.

- Theodoropoulos, A.; El Raheb, K.; Kyriakoulakos, P.; Kougioumtzian, L.; Kalampratsidou, V.; Nikopoulos, G.; Stergiou, M.; Baltas, D.; Kolokotroni, A.; Malisova, K.; et al. Performing Personality in Game Characters and Digital Narrative. 2024. Available online: https://www.researchgate.net/publication/388631249_Editorial_Performing_Personality_in_Game_Characters_and_Digital_Narrative (accessed on 10 February 2025).

- Sharma, S.; Verma, S.; Kumar, M.; Sharma, L. Use of motion capture in 3D animation: Motion capture systems, challenges, and recent trends. In Proceedings of the 2019 International Conference on Machine Learning, Big Data, Cloud and Parallel Computing (COMITCon), Faridabad, India, 14–16 February 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 289–294.

- Wibowo, M.C.; Nugroho, S.; Wibowo, A. The use of motion capture technology in 3D animation. Int. J. Comput. Digit. Syst. 2024, 15, 975–987.

- Menache, A. Understanding Motion Capture for Computer Animation, 2nd ed.; Morgan Kaufmann: San Francisco, CA, USA, 2010.

- Thomas, F.; Johnston, O. The Illusion of Life: Disney Animation; Hyperion: New York, NY, USA, 1995.

- Hanna-Barbera. Jerry’s Heartbeat. 1940. Available online: https://www.youtube.com/watch?v=QHuBdrCOpv8 (accessed on 14 February 2024).

- Liu, F.; Park, C.; Tham, Y.J.; Tsai, T.Y.; Dabbish, L.; Kaufman, G.; Monroy-Hernández, A. Significant otter: Understanding the role of biosignals in communication. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; pp. 1–15.

- Birringer, J.; Danjoux, M. Wearable technology for the performing arts. In Smart Clothes and Wearable Technology; Elsevier: Amsterdam, The Netherlands, 2023; pp. 529–571.

- Nelson, E.C.; Verhagen, T.; Vollenbroek-Hutten, M.; Noordzij, M.L. Is wearable technology becoming part of us? Developing and validating a measurement scale for wearable technology embodiment. JMIR mHealth uHealth 2019, 7, e12771.

- El-Raheb, K.; Kalampratsidou, V.; Issari, P.; Georgaca, E.; Koliouli, F.; Karydi, E.; Ioannidis, Y. Wearables in sociodrama: An embodied mixed-methods study of expressiveness in social interactions. Wearable Technol. 2022, 3, e10.

- Ugur, S.; Bordegoni, M.; Wensveen, S.G.A.; Mangiarotti, R.; Carulli, M. Embodiment of emotions through wearable technology. In Proceedings of the International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, Washington, DC, USA, 28–31 August 2011; Volume 54792, pp. 839–847.

- El Raheb, K.; Stergiou, M.; Koutiva, G.; Kalampratsidou, V.; Diamantides, P.; Katifori, A.; Giokas, P. Data and Artistic Creation: Challenges and opportunities of online mediation. In Proceedings of the 2nd International Conference of the ACM Greek SIGCHI Chapter, Athens, Greece, 27–28 September 2023; pp. 1–8.

- Kalampratsidou, V.; El Raheb, K. Body signals as digital narratives: From social issues to digital characters. In Proceedings of the Performing Personality in Game Characters and Digital Narratives Workshop @ CEEGS 2024, Nafplio, Greece, 10–12 October 2024.

- Starke, S.; Zhang, H.; Komura, T.; Saito, J. Neural state machine for character-scene interactions. ACM Trans. Graph. 2019, 38, 178.

- Yoshida, N.; Yonemura, S.; Emoto, M.; Kawai, K.; Numaguchi, N.; Nakazato, H.; Hayashi, K. Production of character animation in a home robot: A case study of Lovot. Int. J. Soc. Robot. 2022, 14, 39–54.

- Rosas, J.; Palma, L.B.; Antunes, R.A. An Approach for Modeling and Simulation of Virtual Sensors in Automatic Control Systems Using Game Engines and Machine Learning. Sensors 2024, 24, 7610.

- Feldotto, B.; Morin, F.O.; Knoll, A. The neurorobotics platform robot designer: Modeling morphologies for embodied learning experiments. Front. Neurorobotics 2022, 16, 856727.

- Egger, M.; Ley, M.; Hanke, S. Emotion recognition from physiological signal analysis: A review. Electron. Notes Theor. Comput. Sci. 2019, 343, 35–55.

- Kim, J.; André, E. Multi-channel biosignal analysis for automatic emotion recognition. In Proceedings of the BIOSIGNALS 2008-International Conference on Bio-inspired Systems and Signal Processing, INSTICC, Funchal, Portugal, 28–31 January 2008; pp. 241–247.

- Guo, H.W.; Huang, Y.S.; Lin, C.H.; Chien, J.C.; Haraikawa, K.; Shieh, J.S. Heart rate variability signal features for emotion recognition by using principal component analysis and support vectors machine. In Proceedings of the 2016 IEEE 16th International Conference on Bioinformatics and Bioengineering (BIBE), Taichung, Taiwan, 31 October–2 November 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 274–277.

- Valenza, G.; Lanatà, A.; Scilingo, E.P.; De Rossi, D. Towards a smart glove: Arousal recognition based on textile electrodermal response. In Proceedings of the 2010 Annual International Conference of the IEEE Engineering in Medicine and Biology, Buenos Aires, Argentina, 31 August–4 September 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 3598–3601.

- Villarejo, M.V.; Zapirain, B.G.; Zorrilla, A.M. A stress sensor based on Galvanic Skin Response (GSR) controlled by ZigBee. Sensors 2012, 12, 6075–6101.

- Giomi, A. A Phenomenological Approach to Wearable Technologies and Viscerality: From embodied interaction to biophysical music performance. Organised Sound 2024, 29, 64–78.

- Wei, L.; Wang, S.J. Motion tracking of daily living and physical activities in health care: Systematic review from designers’ perspective. JMIR mHealth uHealth 2024, 12, e46282.

- Kulic, D.; Croft, E.A. Affective state estimation for human–robot interaction. IEEE Trans. Robot. 2007, 23, 991–1000.

- Ekman, P. The directed facial action task. In Handbook of Emotion Elicitation and Assessment; Oxford University Press: Oxford, UK, 2007; Volume 47, p. 53.

- Damasio, A.R. The somatic marker hypothesis and the possible functions of the prefrontal cortex. Philos. Trans. R. Soc. Lond. Ser. B Biol. Sci. 1996, 351, 1413–1420.

- Zhang, Q.; Chen, X.; Zhan, Q.; Yang, T.; Xia, S. Respiration-based emotion recognition with deep learning. Comput. Ind. 2017, 92, 84–90.

- Barrett, L.F. Solving the emotion paradox: Categorization and the experience of emotion. Personal. Soc. Psychol. Rev. 2006, 10, 20–46.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

El-Raheb, K.; Kougioumtzian, L.; Kalampratsidou, V.; Theodoropoulos, A.; Kyriakoulakos, P.; Vosinakis, S. Sensing the Inside Out: An Embodied Perspective on Digital Animation Through Motion Capture and Wearables. Sensors 2025, 25, 2314. https://doi.org/10.3390/s25072314
