Driver Head–Hand Cooperative Action Recognition Based on FMCW Millimeter-Wave Radar and Deep Learning

Abstract

1. Introduction

2. Related Theories

2.1. Millimeter-Wave Radar Echo Model

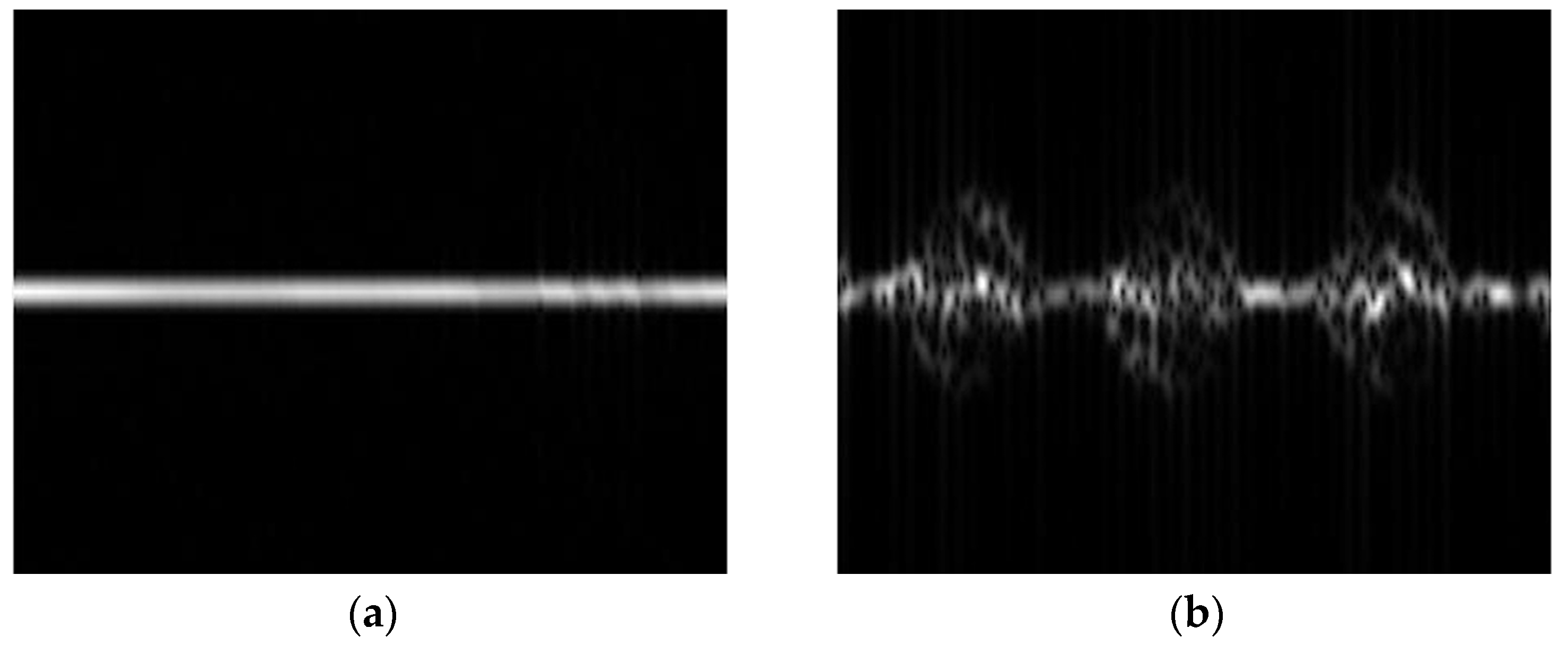

2.2. Micro-Doppler Spectrogram Acquisition

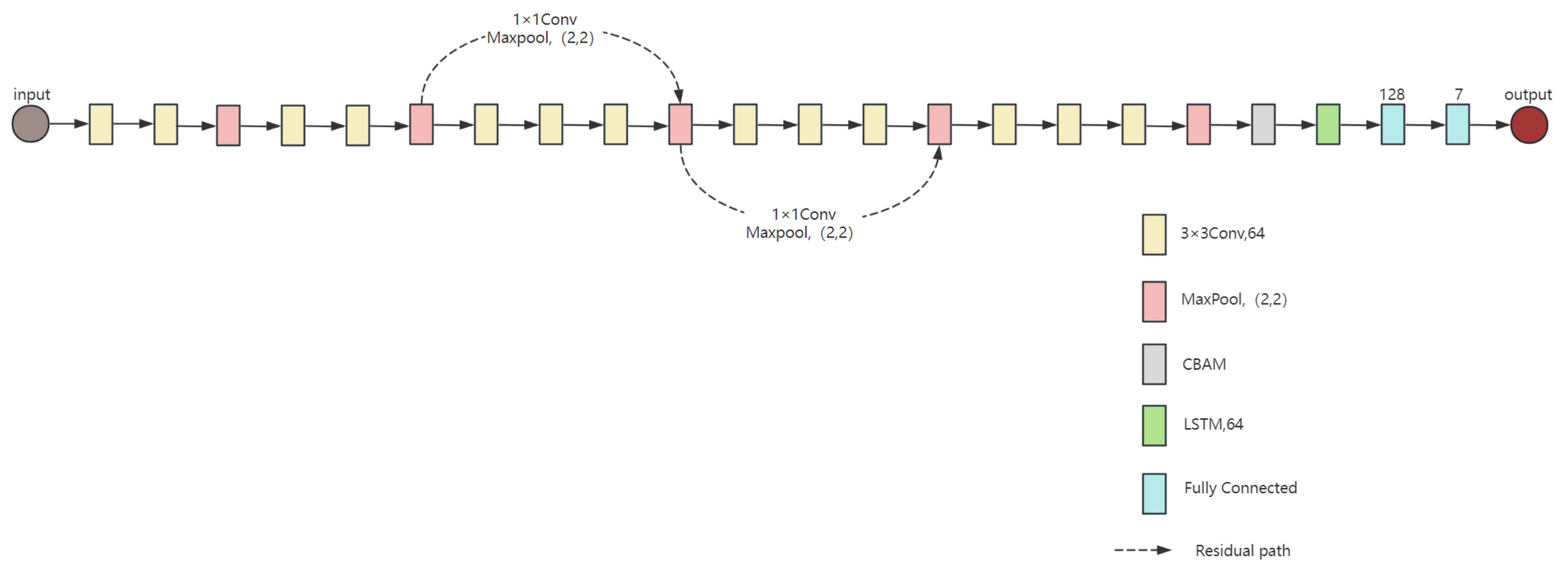

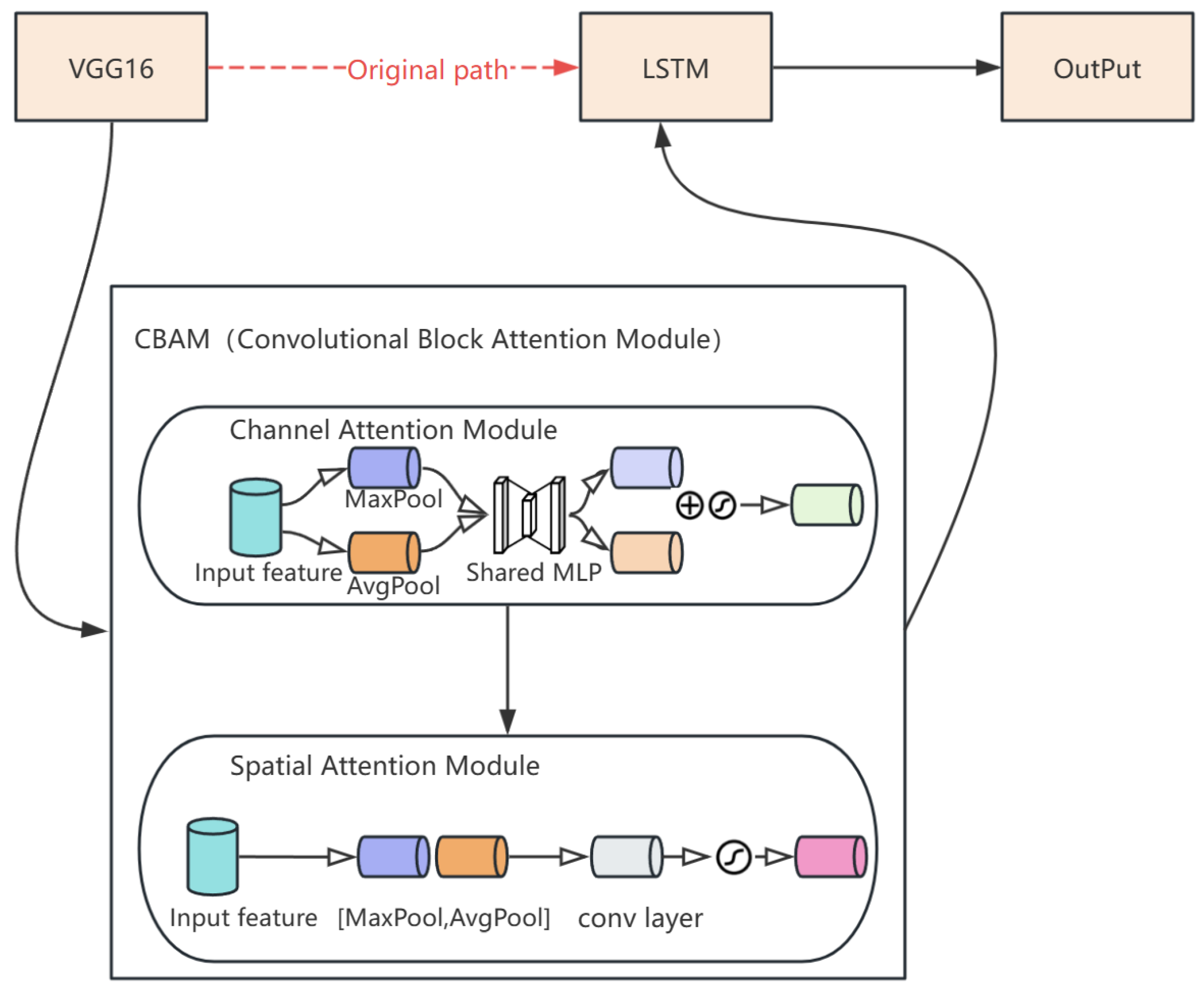

3. VGG16-LSTM-CBAM Network

3.1. VGG16-LSTM

3.2. CBAM

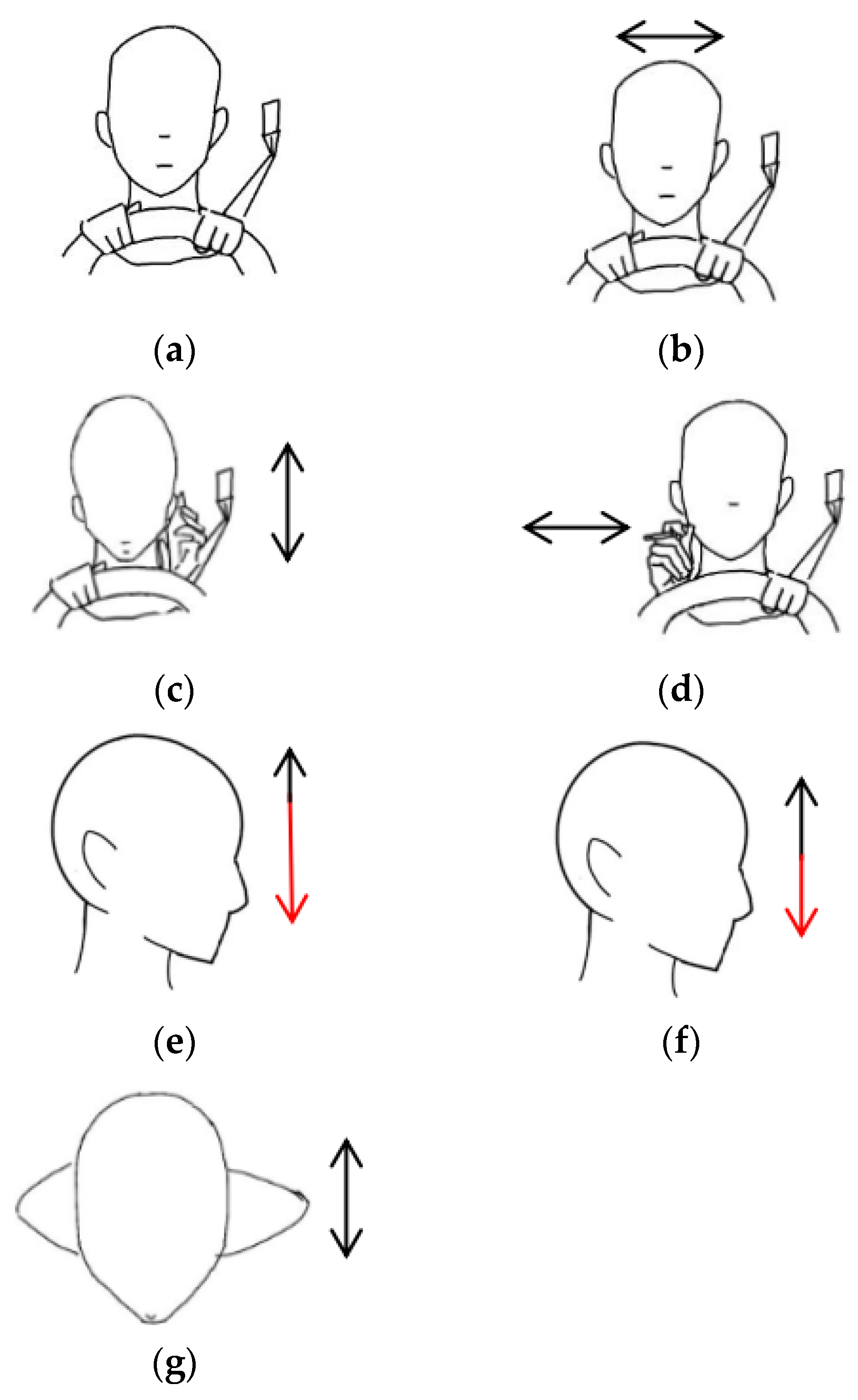

4. Dataset

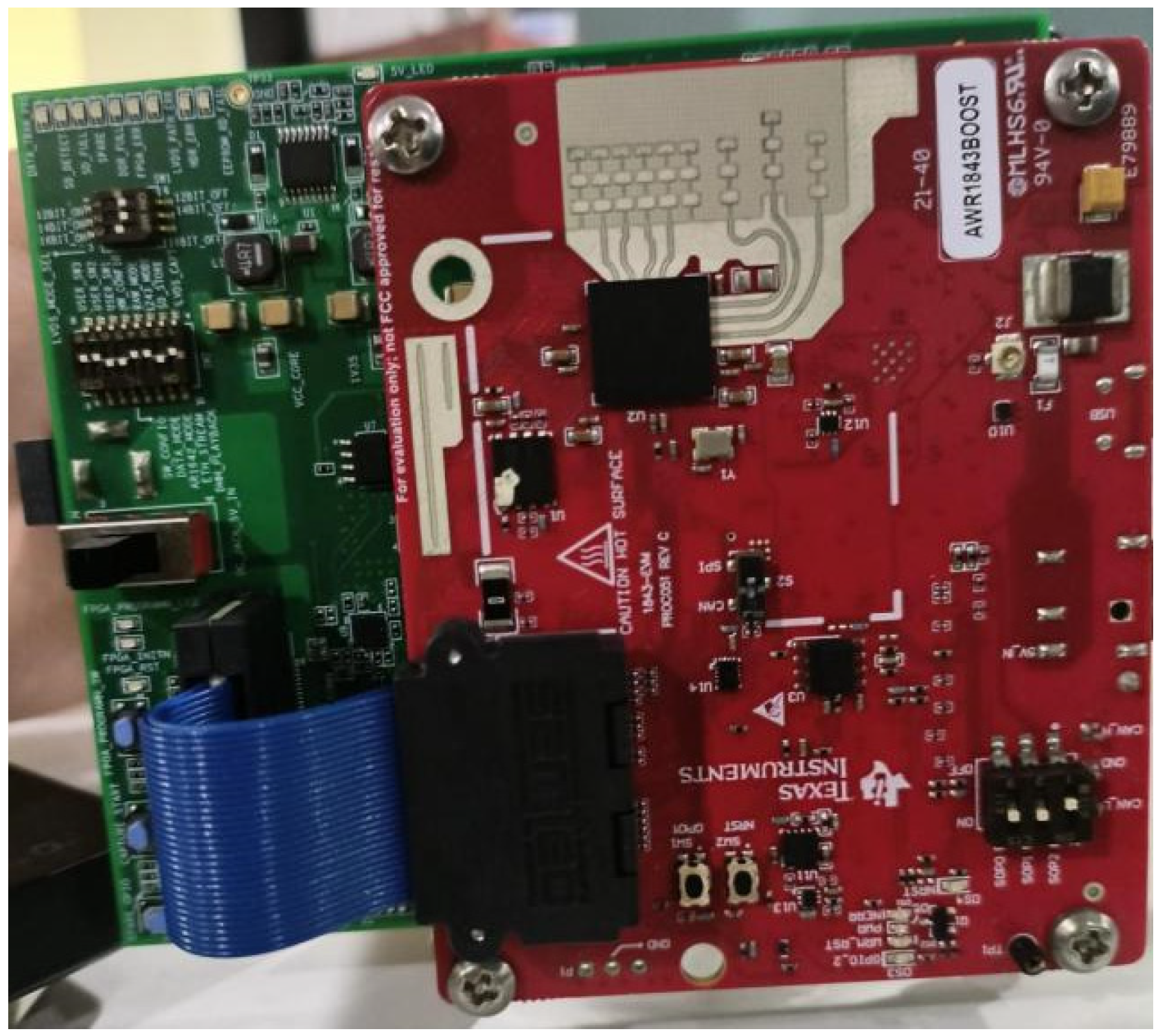

4.1. Experimental Setup

4.2. Safety of Millimeter-Wave Radar

4.3. Data Acquisition

5. Experimental Results and Analysis

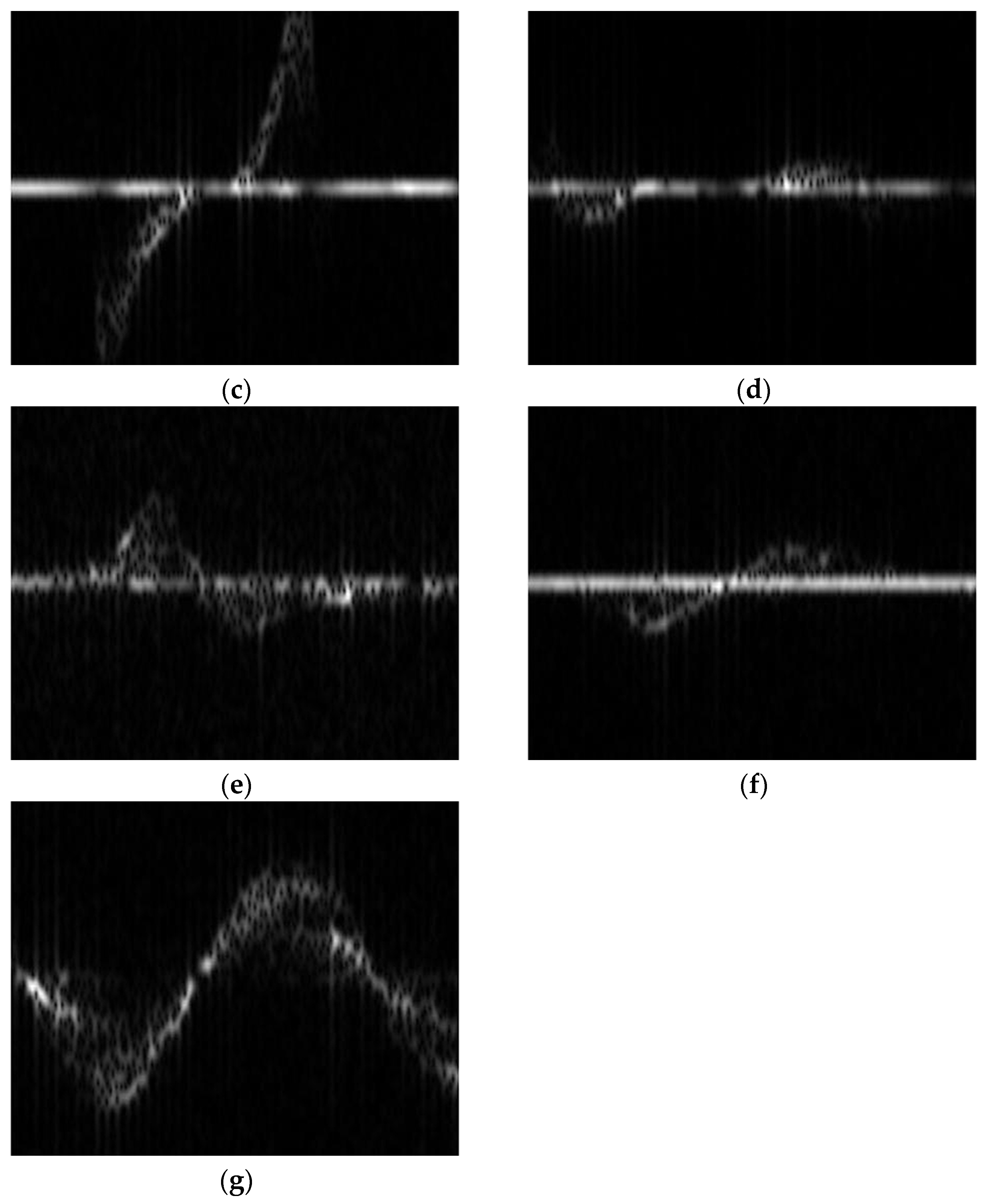

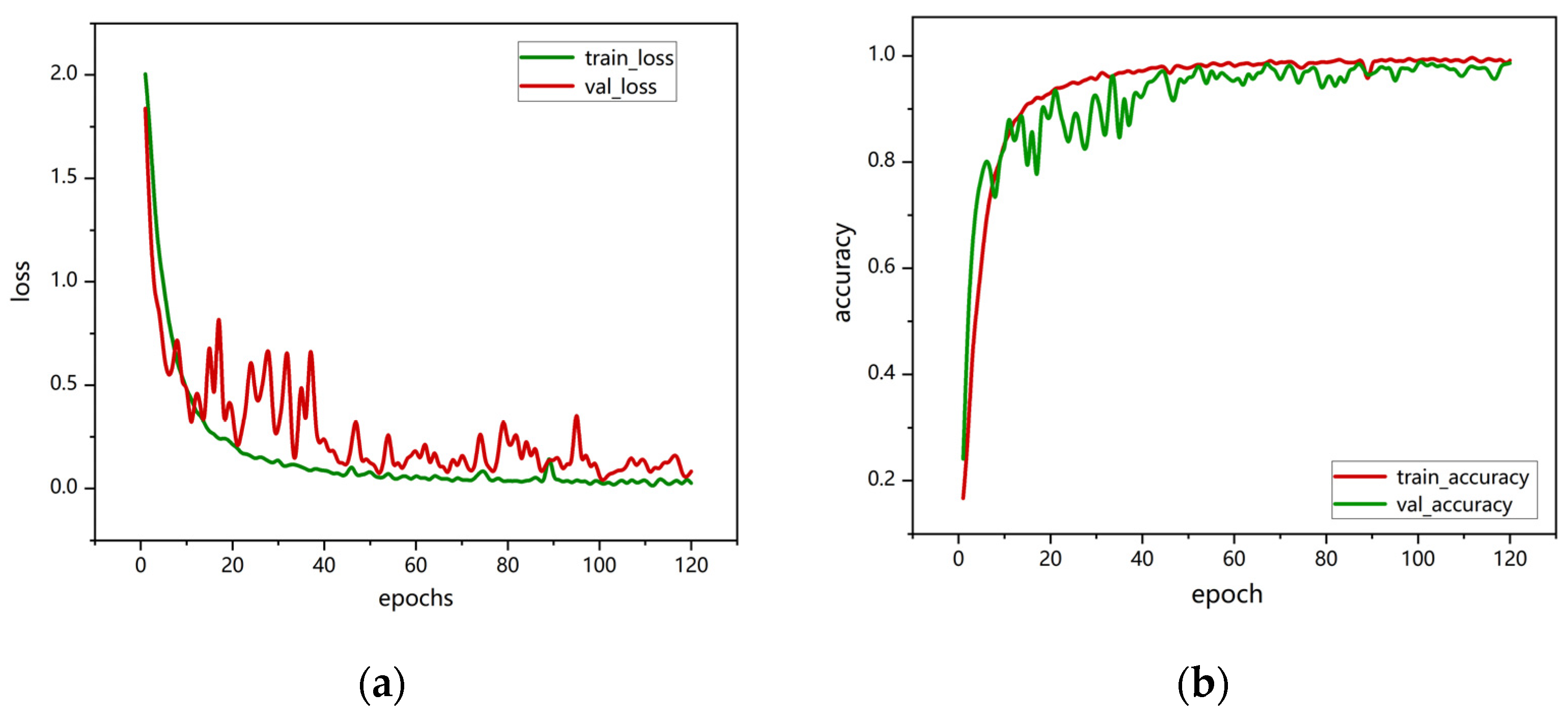

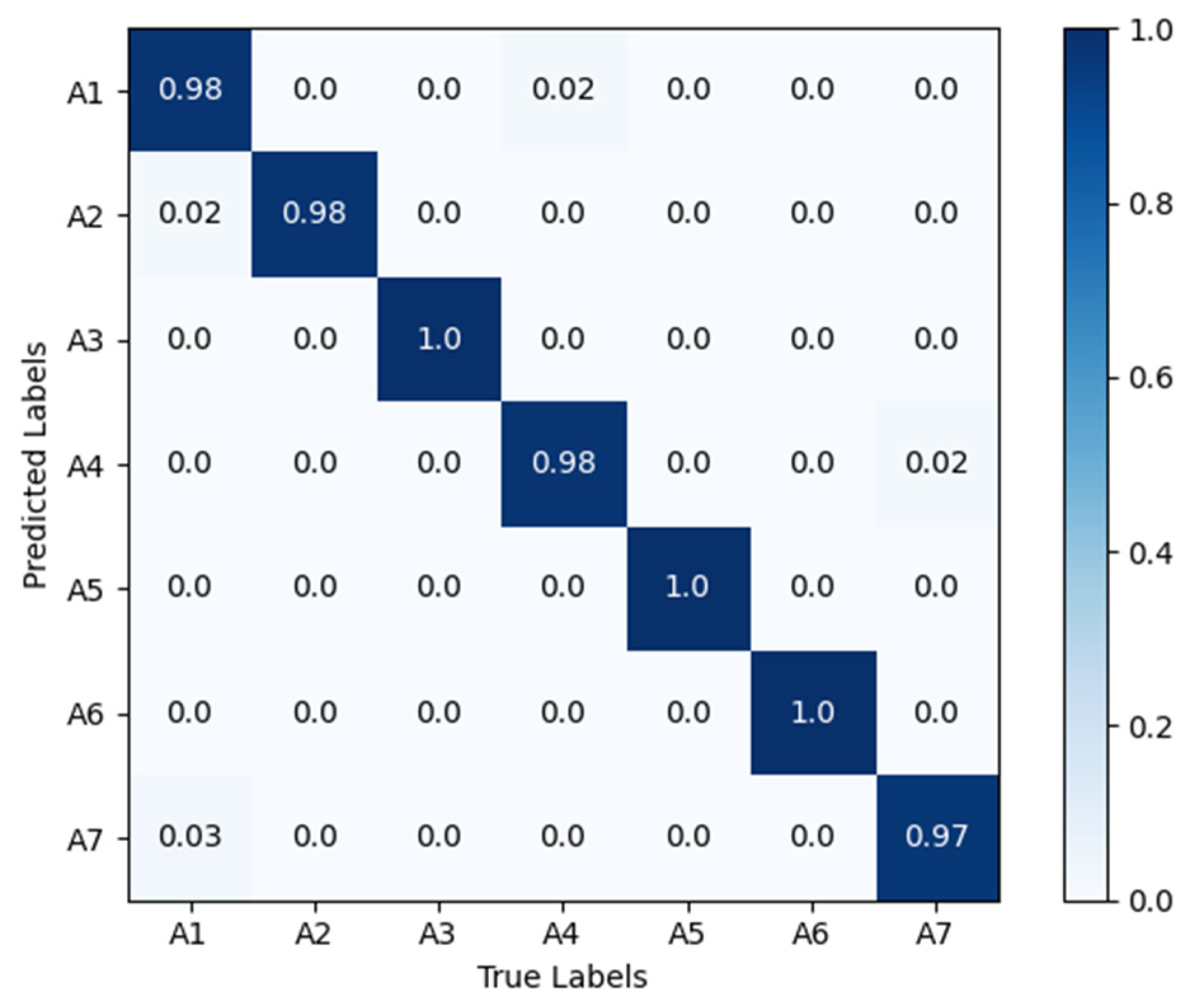

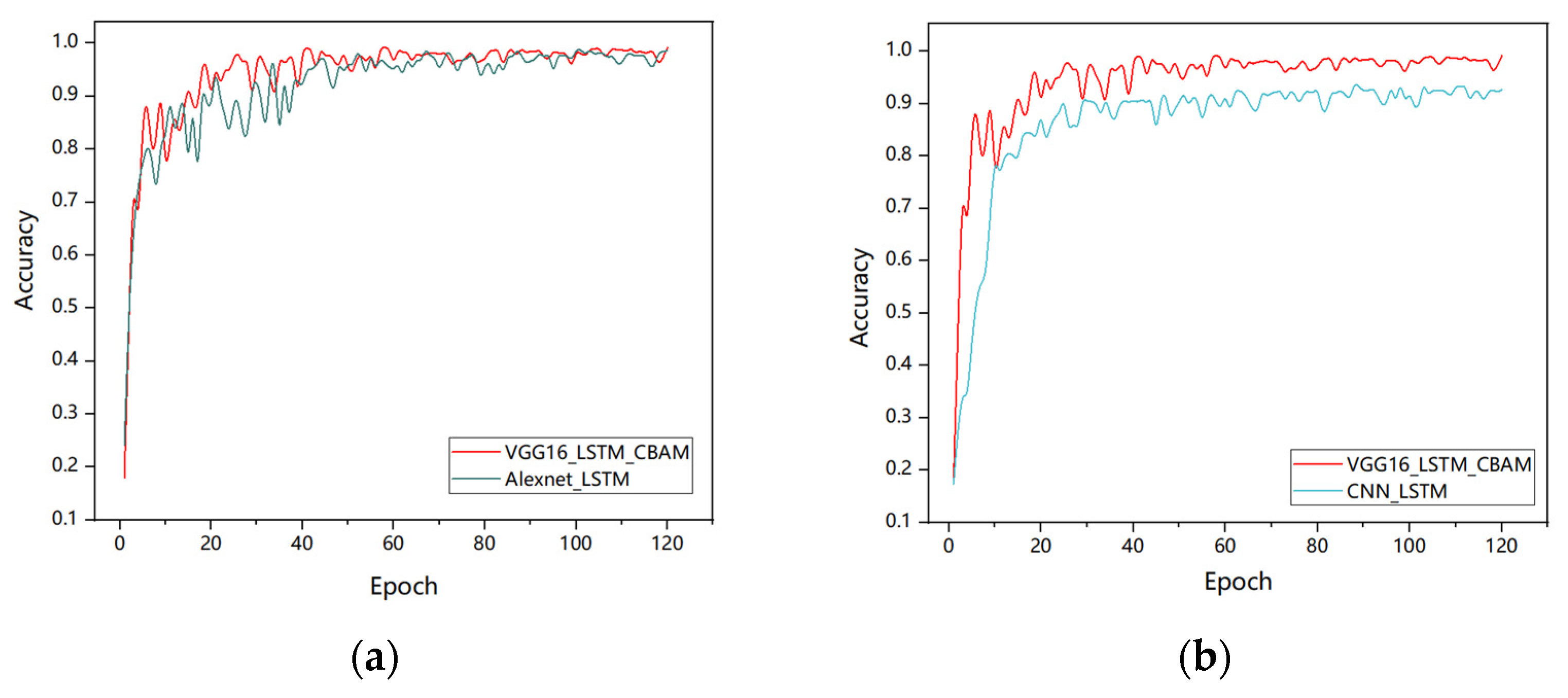

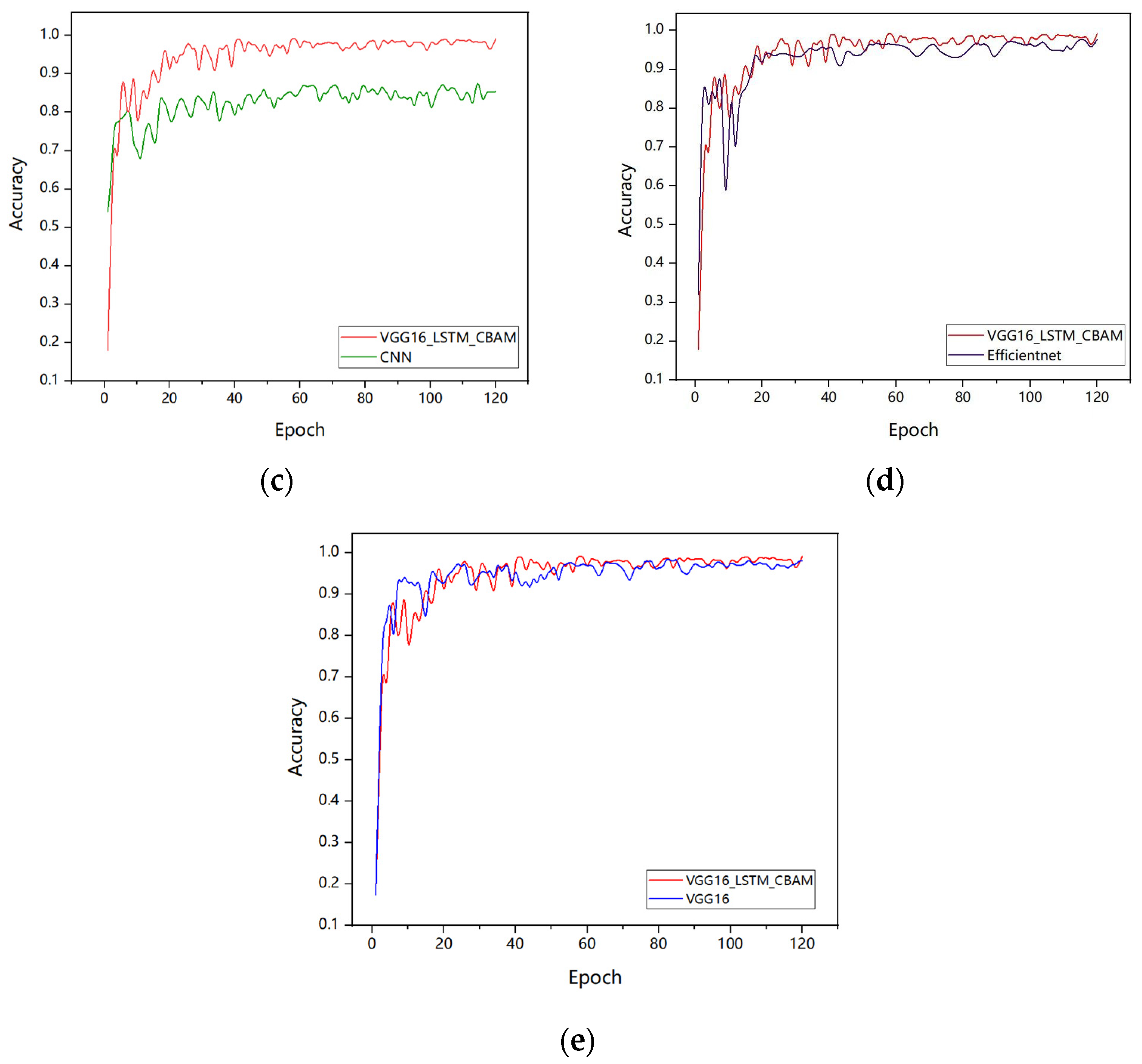

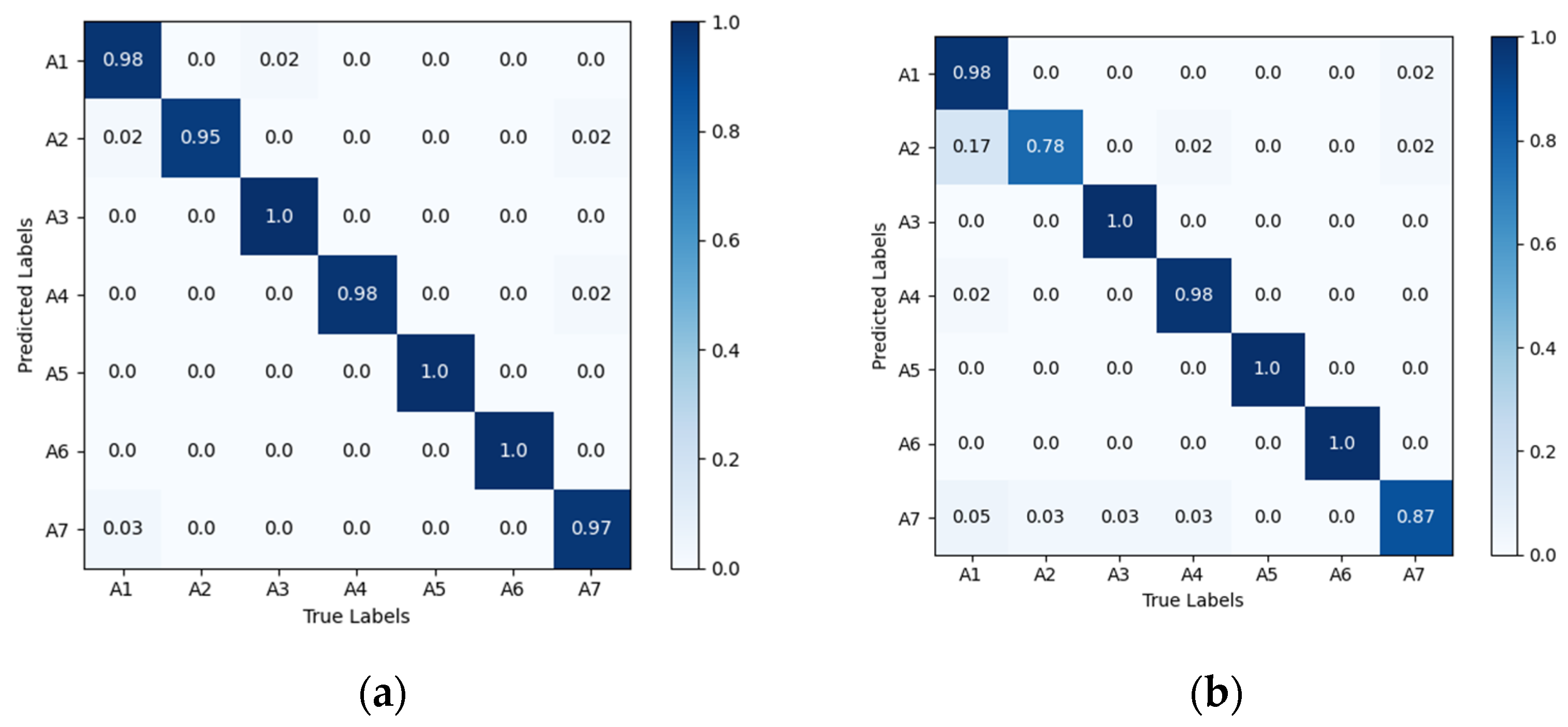

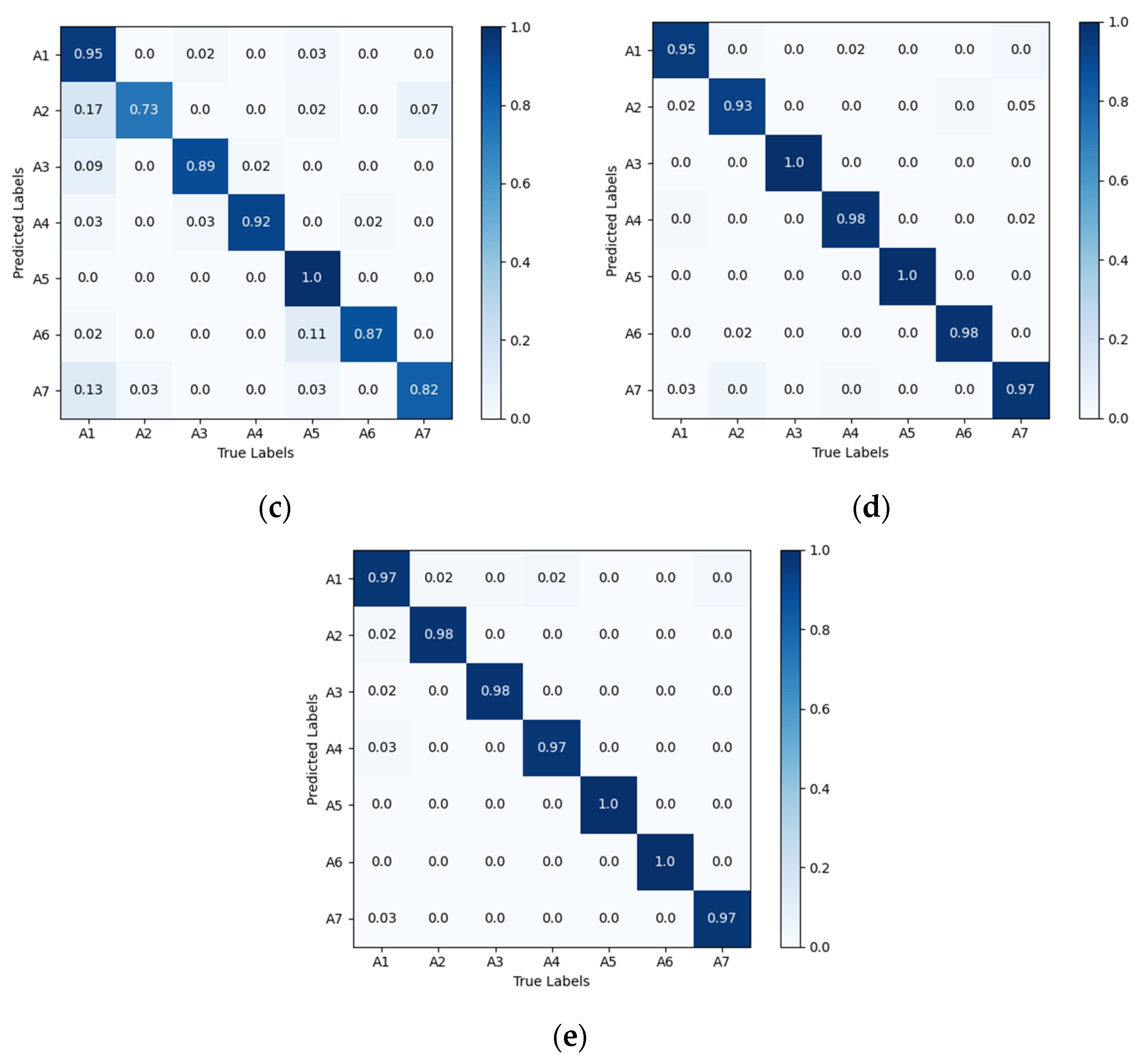

5.1. Performance Analysis

5.2. Comparison with Other Networks

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Parameter | Value |
|---|---|
| Starting frequency (GHz) | 77 |
| Effective bandwidth (MHz) | 2667 |
| Samples per chirp | 128 |
| Sampling frequency (ksps) | 4000 |
| Slope (MHz/μs) | 21 |
| Chirps per frame | 255 |
| Frame period (ms) | 270 |
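As a rough illustration of what the radar parameters above imply, the following sketch derives a few quantities with the standard FMCW relations: range resolution ΔR = c/(2B), carrier wavelength λ = c/f, and frame rate 1/T. The function and constant names are hypothetical and do not come from the paper. Velocity resolution is left out because the table does not list the chirp repetition interval.

```python
# Derived quantities from the radar parameter table (illustrative sketch).
# All names below are hypothetical; only the numeric inputs come from the table.

C = 299_792_458.0  # speed of light in m/s

START_FREQ_HZ = 77e9      # starting frequency: 77 GHz
BANDWIDTH_HZ = 2667e6     # effective bandwidth: 2667 MHz
FRAME_PERIOD_S = 270e-3   # frame period: 270 ms


def range_resolution(bandwidth_hz: float) -> float:
    """Standard FMCW range resolution, c / (2 * B), in metres."""
    return C / (2.0 * bandwidth_hz)


def wavelength(freq_hz: float) -> float:
    """Free-space wavelength at the given frequency, in metres."""
    return C / freq_hz


def frame_rate(frame_period_s: float) -> float:
    """Number of frames per second for a given frame period."""
    return 1.0 / frame_period_s


if __name__ == "__main__":
    print(f"Range resolution: {range_resolution(BANDWIDTH_HZ) * 100:.2f} cm")
    print(f"Wavelength at start frequency: {wavelength(START_FREQ_HZ) * 1000:.2f} mm")
    print(f"Frame rate: {frame_rate(FRAME_PERIOD_S):.2f} frames/s")
```

Under these assumptions the listed bandwidth corresponds to a range resolution of roughly 5.6 cm, a wavelength of about 3.9 mm at the starting frequency, and about 3.7 frames per second.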

| Network | Accuracy | Recall | F1 Score | Parameter Count (M) | FLOPs (G) | Model Size (MB) |
|---|---|---|---|---|---|---|
| VGG16-LSTM-CBAM | 99.16% | 99.13% | 99.15% | 16.25 | 91.00 | 61.99 |
| VGG16 | 98.04% | 97.94% | 97.99% | 304.17 | 90.37 | 1160.33 |
| Alexnet-LSTM | 98.03% | 98.00% | 98.02% | 4.99 | 6.10 | 19.03 |
| EfficientNetB0 | 97.76% | 98.12% | 97.86% | 4.13 | 0.78 | 15.76 |
| CNN-LSTM | 92.71% | 92.50% | 92.61% | 0.25 | 0.08 | 0.94 |
| CNN | 85.43% | 84.80% | 85.10% | 0.30 | 0.03 | 1.16 |
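The parameter-count and model-size columns above can be cross-checked against each other. The sketch below assumes each parameter is stored as a 32-bit float and that 1 MB means 2^20 bytes; neither assumption is stated in the source, and the helper name `estimated_size_mib` is hypothetical.

```python
# Cross-check: model size estimated from parameter count (illustrative sketch).
# Assumptions (not stated in the source): float32 weights, 1 MB = 2**20 bytes.

BYTES_PER_FLOAT32 = 4
MIB = 1024 ** 2

# (parameter count in millions, reported model size in MB), copied from the table
REPORTED = {
    "VGG16-LSTM-CBAM": (16.25, 61.99),
    "VGG16": (304.17, 1160.33),
    "Alexnet-LSTM": (4.99, 19.03),
    "EfficientNetB0": (4.13, 15.76),
    "CNN-LSTM": (0.25, 0.94),
    "CNN": (0.30, 1.16),
}


def estimated_size_mib(params_millions: float) -> float:
    """Estimate the on-disk size of float32 weights, in units of 2**20 bytes."""
    return params_millions * 1e6 * BYTES_PER_FLOAT32 / MIB


if __name__ == "__main__":
    for name, (params_m, reported_mb) in REPORTED.items():
        est = estimated_size_mib(params_m)
        print(f"{name:16s} estimated {est:8.2f}  reported {reported_mb:8.2f}")
```

With these assumptions the estimates agree with the reported sizes to within about 0.02 MB, the residual being consistent with rounding of the parameter counts. This suggests the size column mostly reflects the weights themselves.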

| Network | Accuracy | F1 Score | Model Size (MB) |
|---|---|---|---|
| VGG16 only | 98.04% | 97.99% | 1160.33 |
| +LSTM | 98.12% | 98.05% | 1160.89 |
| Modified FC | 98.60% | 98.63% | 61.46 |
| VGG16-LSTM-CBAM | 99.16% | 99.15% | 61.99 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, L.; Chen, X.; Chen, Z.; Zheng, J.; Diao, Y. Driver Head–Hand Cooperative Action Recognition Based on FMCW Millimeter-Wave Radar and Deep Learning. Sensors 2025, 25, 2399. https://doi.org/10.3390/s25082399
