The Design of a Vision-Assisted Dynamic Antenna Positioning Radio Frequency Identification-Based Inventory Robot Utilizing a 3-Degree-of-Freedom Manipulator

Abstract

1. Introduction

2. Related Work

3. Robot Design

3.1. RFID-HAND Robot Hardware Description

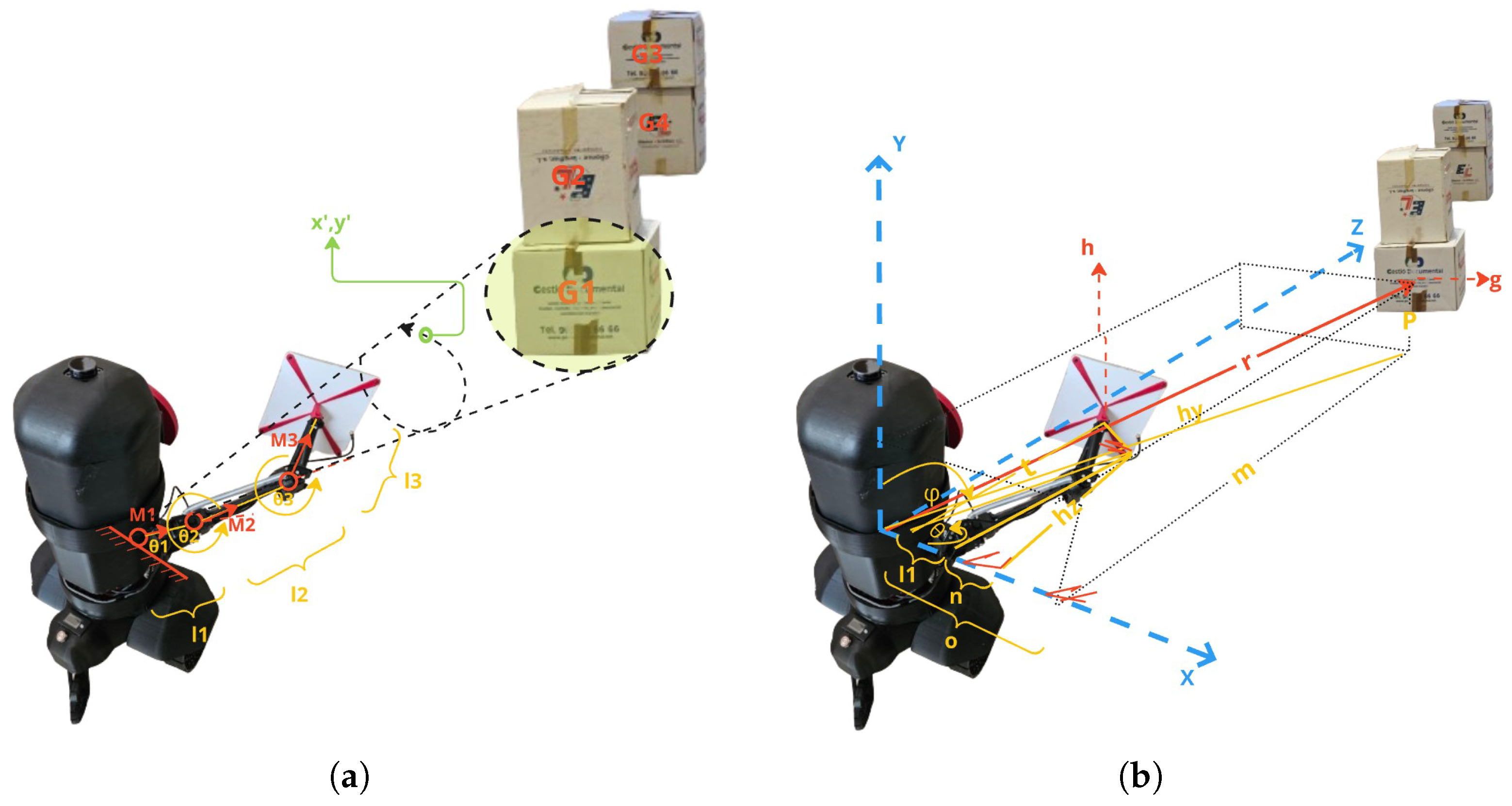

- Robot arm block: The robot carries a 3-DOF robotic arm driven by three low-cost servo motors with position feedback for precise, accurate movement. An RFID antenna is mounted on the end effector, so the arm can reposition it in space. The joints are linked by an elastic rubber band rated for loads up to 30 kg. The band offsets part of the gravitational load on the arm, which reduces the torque each servo must sustain and keeps the joints further from their stall-torque limit.

- Sensors block: A 2D LiDAR sensor mounted on top of the chassis provides proximity detection and 2D environment mapping. An RGB-D camera captures both color images and depth information, allowing the robot to estimate the shape, size, and distance of nearby objects. Computation runs on a low-cost, energy-efficient single-board computer (SBC) based on the Intel N100 processor, which processes sensor data, executes the control algorithms, and supports neural network-based object recognition, navigation, and localization.

- Mobility block: The robot is driven by two 24 V high-torque motors, each fitted with Hall sensors and a motion controller, giving precise control over the robot’s movement, velocity, and acceleration. Power comes from either a rechargeable battery pack or an external supply, allowing sustained, continuous operation.
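The arm block describes a 3-DOF arm whose end effector carries the RFID antenna. As a minimal sketch of how the antenna position follows from the three joint angles, the forward kinematics below chain standard Denavit–Hartenberg link transforms. The joint layout (a base yaw joint followed by two pitch joints) and the link lengths `BASE_HEIGHT`, `UPPER_ARM`, and `FOREARM` are hypothetical assumptions for illustration, not the RFID-HAND robot's actual geometry.

```python
import math

def dh_transform(theta, d, a, alpha):
    """4x4 homogeneous transform for one link, standard Denavit-Hartenberg convention."""
    ct, st = math.cos(theta), math.sin(theta)
    ca, sa = math.cos(alpha), math.sin(alpha)
    return [
        [ct, -st * ca, st * sa, a * ct],
        [st, ct * ca, -ct * sa, a * st],
        [0.0, sa, ca, d],
        [0.0, 0.0, 0.0, 1.0],
    ]

def matmul(A, B):
    """Product of two 4x4 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)] for i in range(4)]

# Hypothetical link geometry in metres (assumed, not taken from the paper).
BASE_HEIGHT = 0.30  # height of the shoulder above the arm base
UPPER_ARM = 0.40    # shoulder-to-elbow length
FOREARM = 0.30      # elbow-to-antenna-mount length

def antenna_position(q1, q2, q3):
    """Return (x, y, z) of the antenna mount in the arm base frame; angles in radians."""
    dh_rows = [
        (q1, BASE_HEIGHT, 0.0, math.pi / 2),  # joint 1: yaw about the vertical axis
        (q2, 0.0, UPPER_ARM, 0.0),            # joint 2: shoulder pitch
        (q3, 0.0, FOREARM, 0.0),              # joint 3: elbow pitch
    ]
    T = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]  # identity
    for row in dh_rows:
        T = matmul(T, dh_transform(*row))
    return T[0][3], T[1][3], T[2][3]
```

For example, `antenna_position(0.0, math.radians(60), math.radians(-60))` raises the shoulder while keeping the forearm horizontal, lifting the antenna toward a higher shelf tier. Inverting this mapping (inverse kinematics) is what a planner would need to place the antenna at a target chosen from camera data.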

3.2. RFID-HAND Robot Software Description

4. Technical Overview

5. Experiments

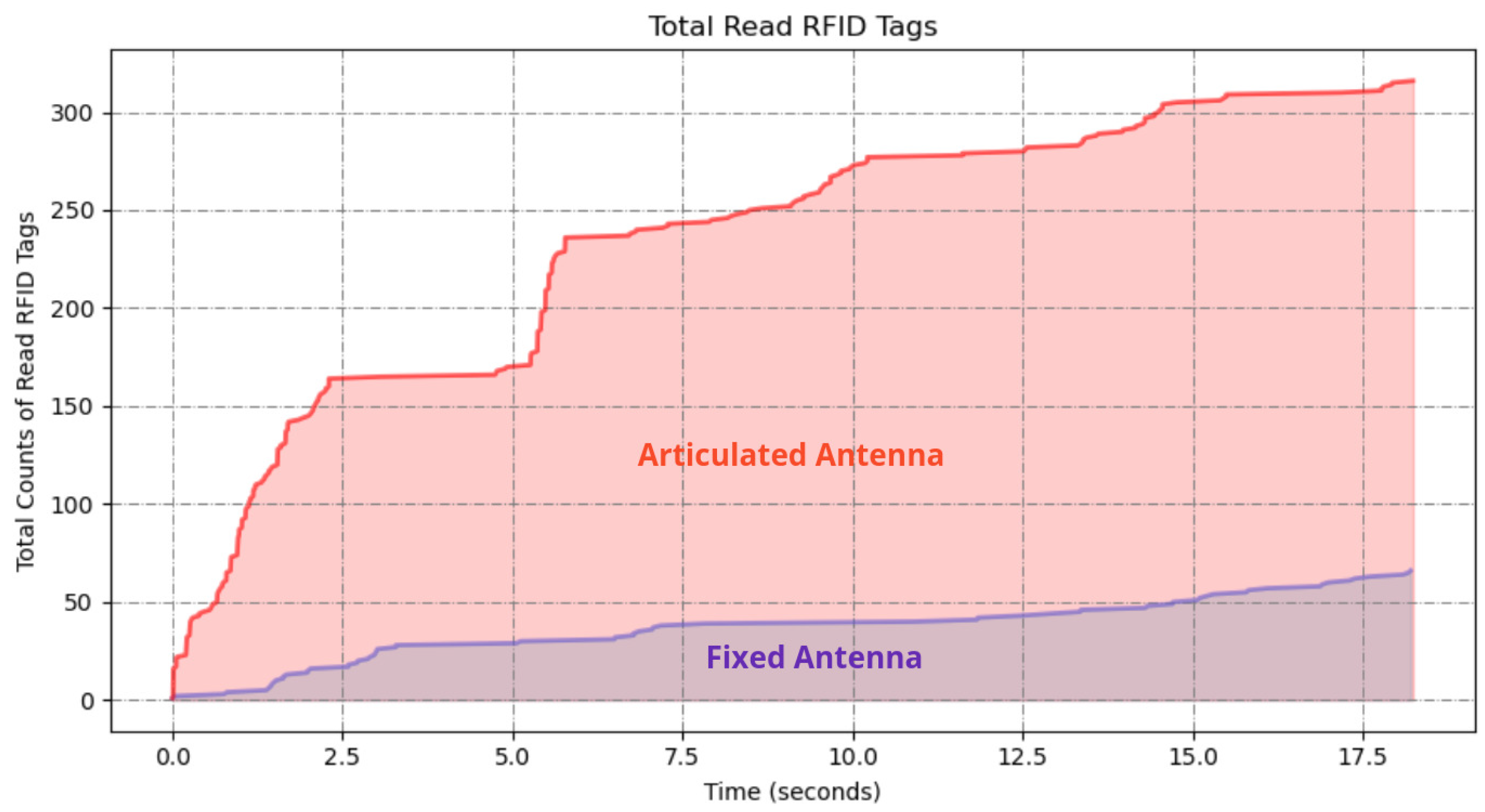

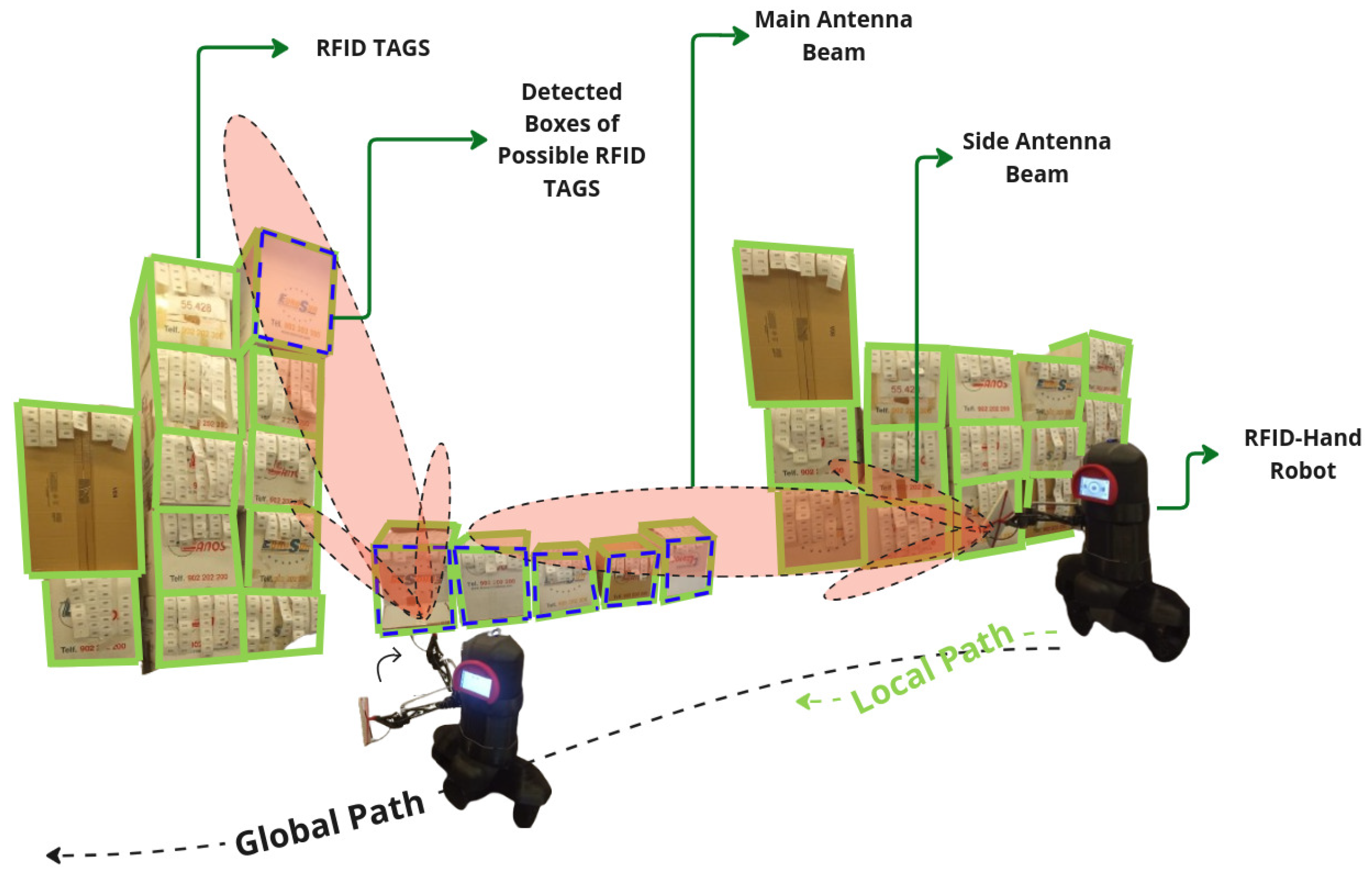

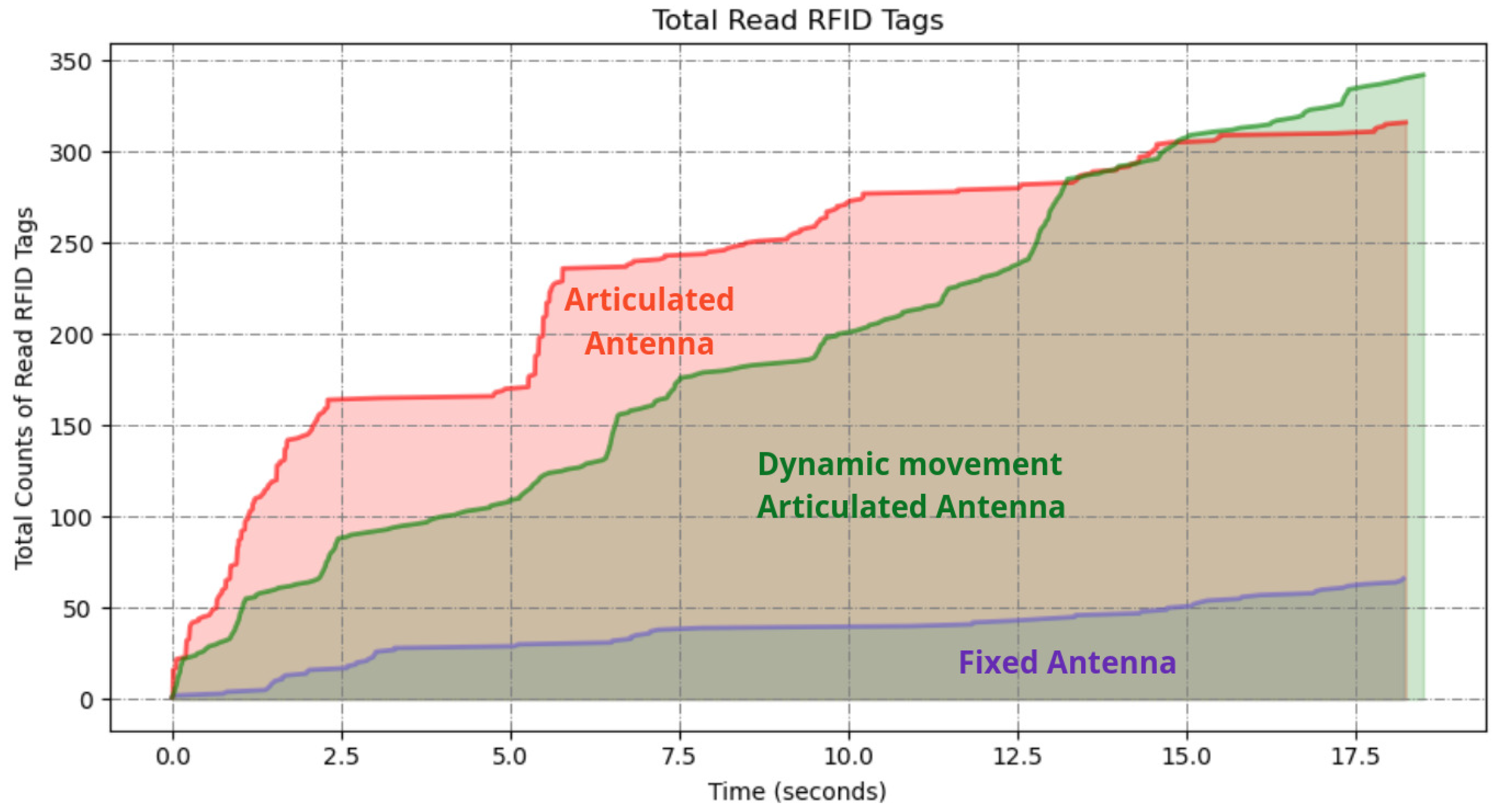

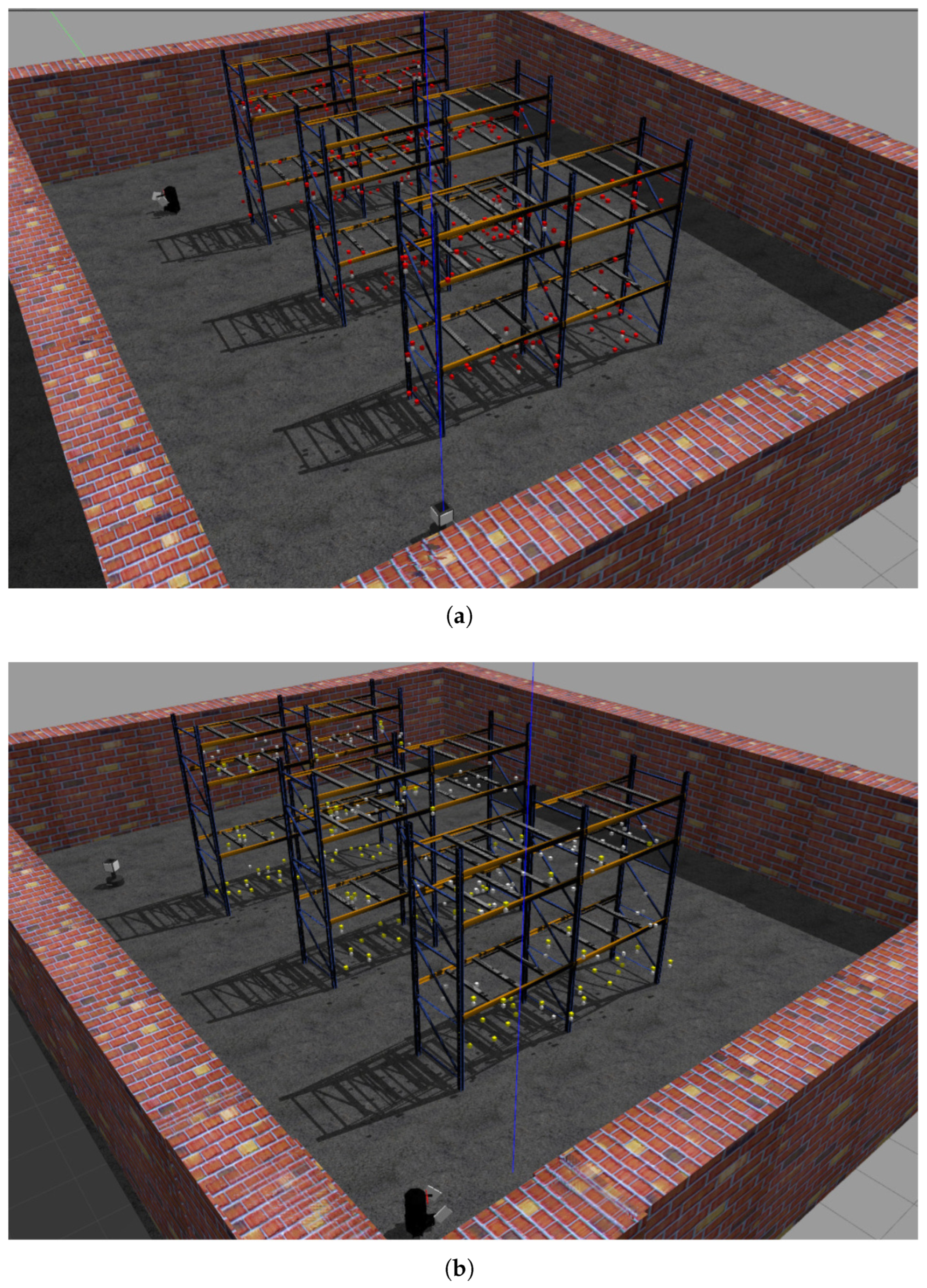

5.1. Short-Aisle Low-Shelf Scanning

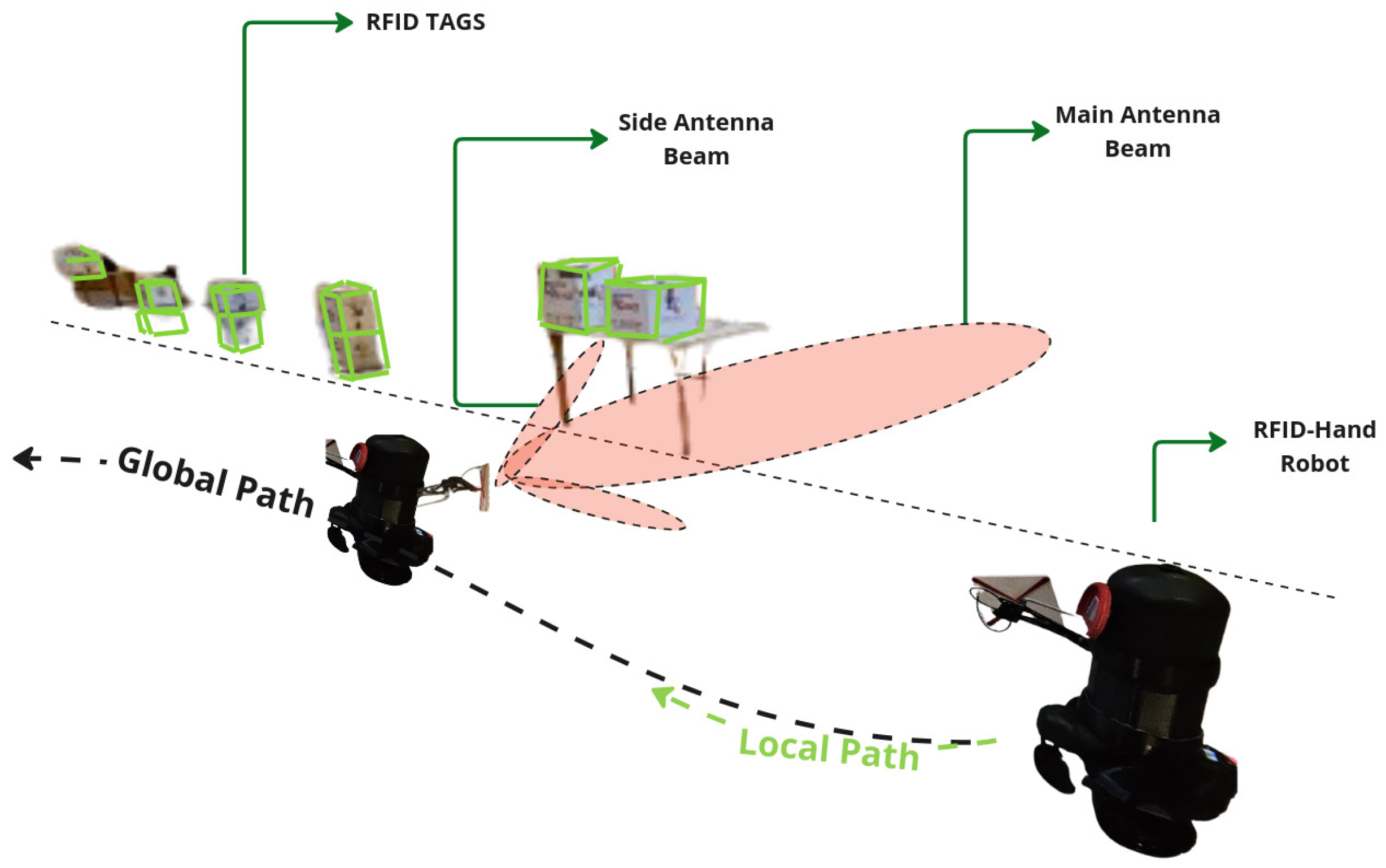

5.1.1. Fixed Antenna

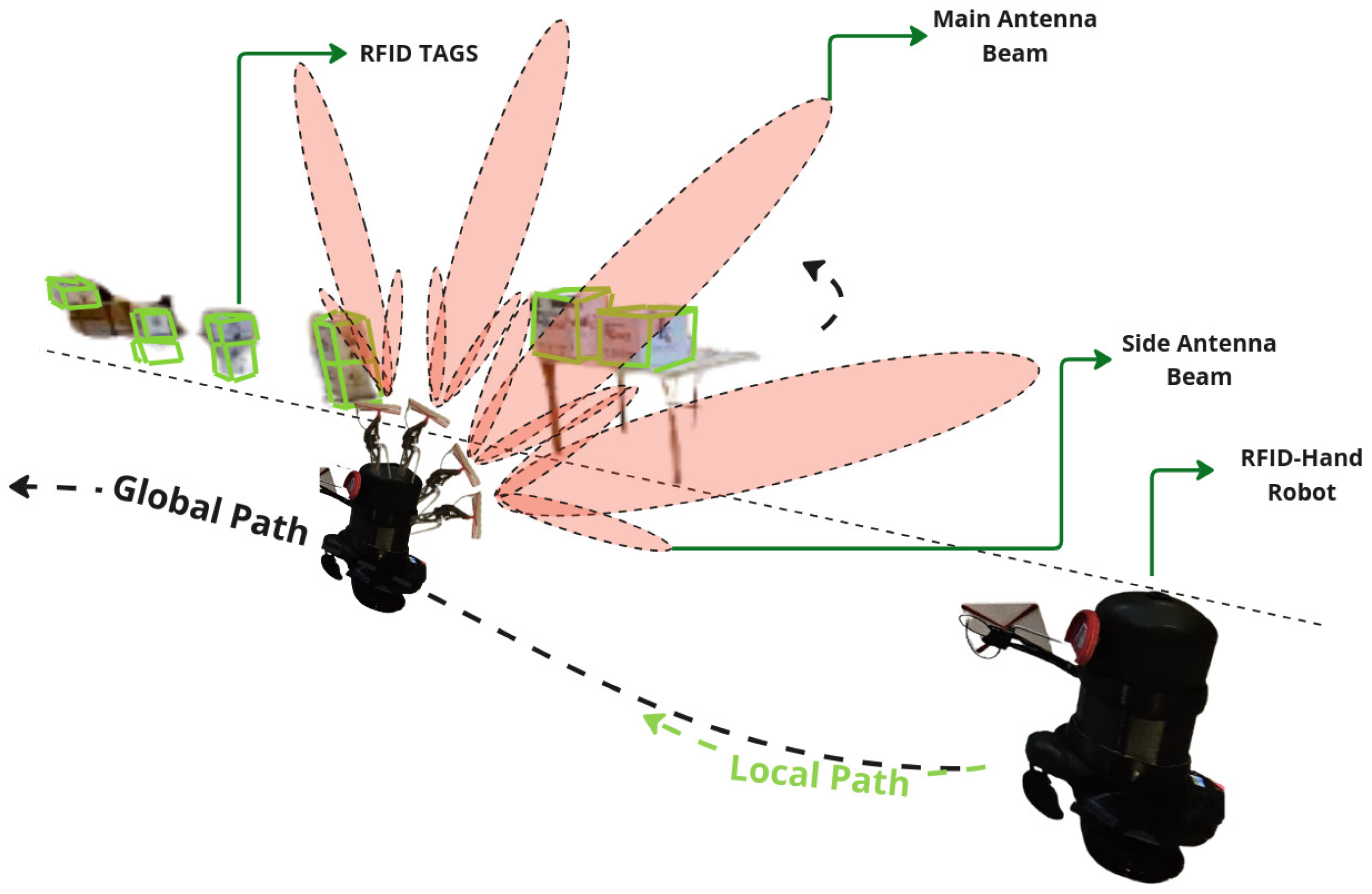

5.1.2. Articulated Antenna

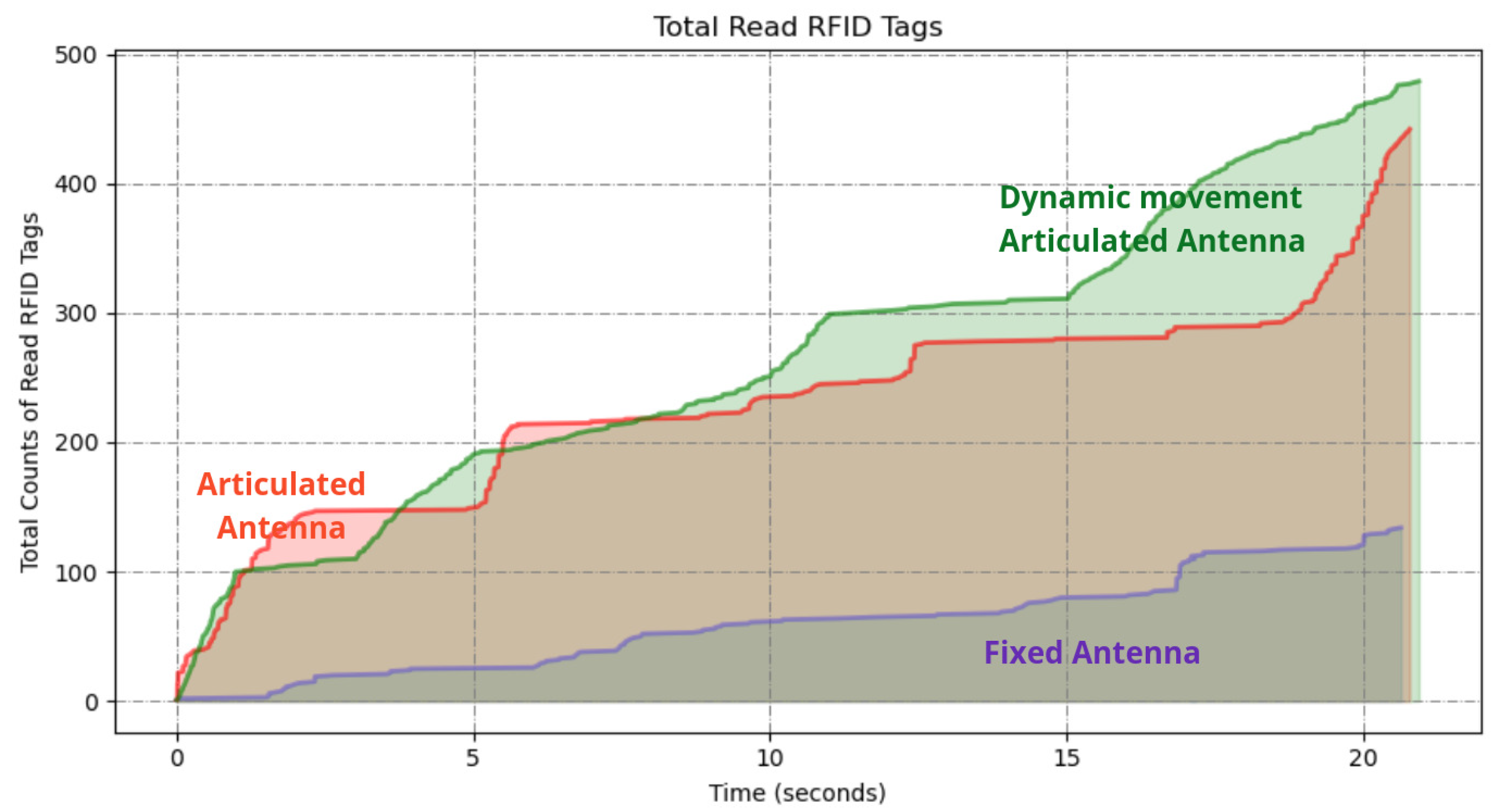

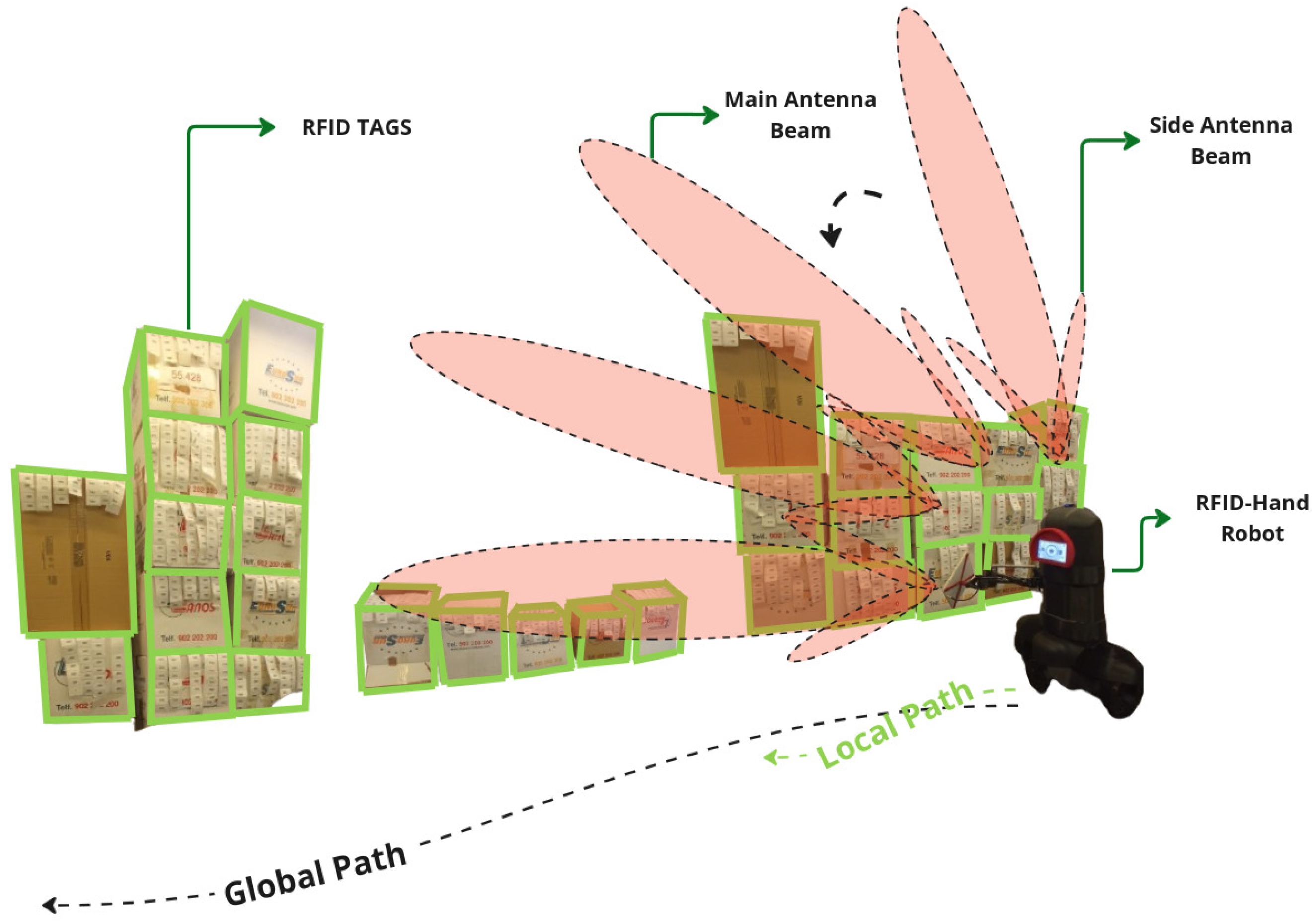

5.1.3. Dynamic Movement Articulated Antenna

5.2. Tall-Aisle High-Shelf Scanning

5.2.1. Fixed Antenna

5.2.2. Articulated Antenna

5.2.3. Dynamic Movement Articulated Antenna

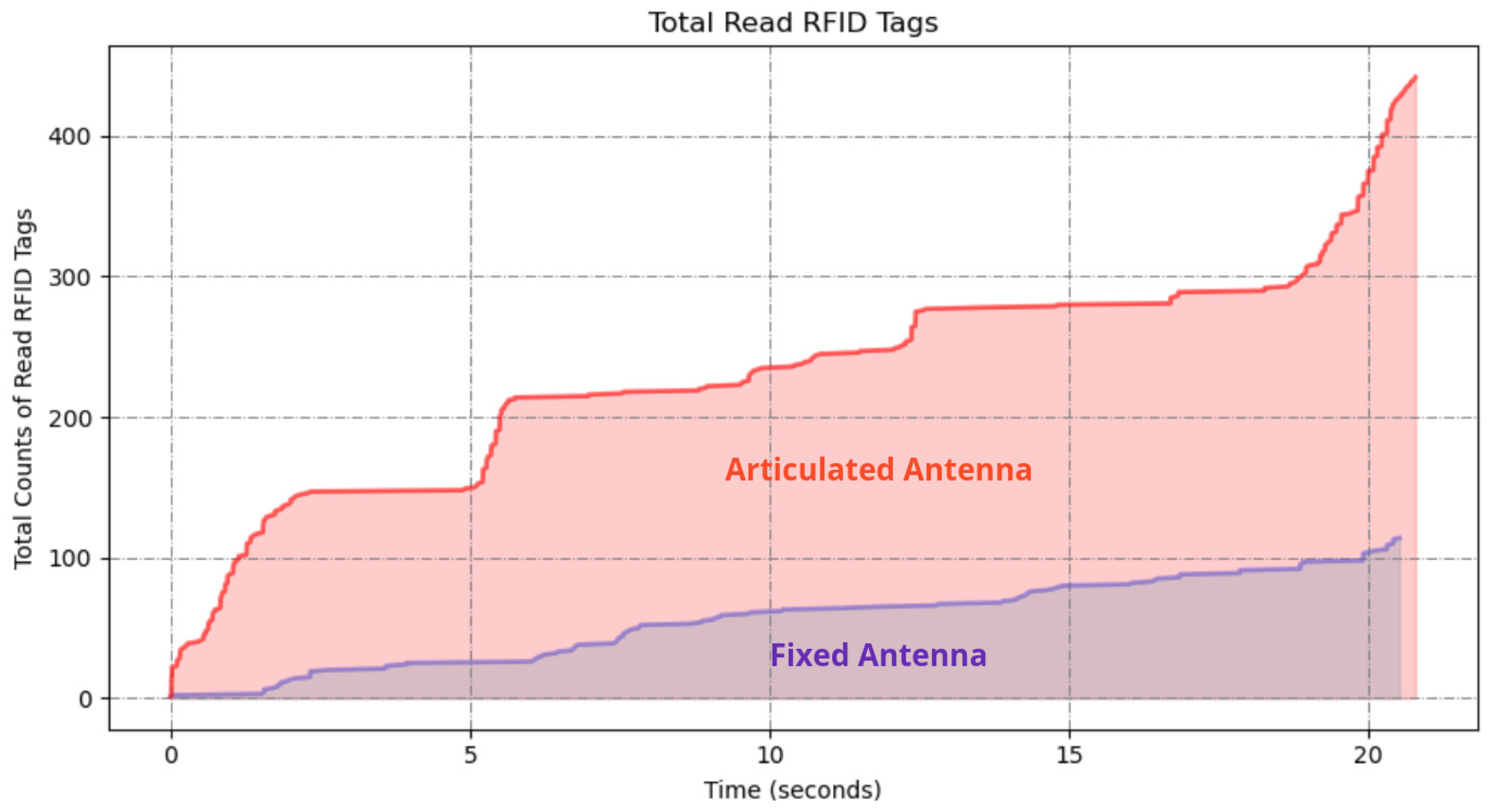

6. Simulation Experiment: Dynamic vs. Fixed Antenna Performance

- Vertical Coverage Constraints: Static antennas could not keep upper-tier tags (1.6–2.4 m high) within their optimal read zone, achieving a 58.2% detection rate for tags above 1.2 m versus 96.4% for RFID-HAND.

- Occlusion Sensitivity: Fixed beam patterns could not circumvent pallet obstructions, whereas the manipulator actively reoriented the antenna to exploit alternative RF propagation paths around them.

- Angular Coverage Limitations: The circular scanning motion increased the opportunities for antenna–tag polarization alignment, raising the read likelihood by about 26.5% compared with fixed orientations.
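The angular-coverage result attributes part of the gain to polarization alignment. A toy 2D model, offered only as an illustration and not as the authors' simulation, makes the mechanism concrete: for a linearly polarized reader antenna and a linearly polarized tag dipole, the polarization loss factor is the squared cosine of the angle between their axes. The sketch assumes that a sweep visits several antenna orientations, and it counts a tag as readable when the best loss factor over those orientations reaches a hypothetical threshold of 0.5 (about 3 dB of loss). The tag-orientation grid, sweep angles, and threshold are all assumptions; path loss, beam pattern, and multipath are ignored, so the numbers do not reproduce the 26.5% figure.

```python
import math

def polarization_loss_factor(antenna_deg, tag_deg):
    """cos^2 of the angle between two linear polarization axes (1.0 aligned, 0.0 crossed)."""
    return math.cos(math.radians(antenna_deg - tag_deg)) ** 2

def readable_fraction(antenna_angles, tag_angles, threshold=0.5):
    """Fraction of tags whose best loss factor over all antenna orientations meets the threshold."""
    readable = 0
    for tag in tag_angles:
        best = max(polarization_loss_factor(a, tag) for a in antenna_angles)
        if best >= threshold:
            readable += 1
    return readable / len(tag_angles)

# Hypothetical tag orientations: 36 angles from 2 to 177 degrees in 5-degree steps.
TAG_ANGLES = list(range(2, 180, 5))

fixed = readable_fraction([0], TAG_ANGLES)                 # a single fixed orientation
swept = readable_fraction(range(0, 180, 30), TAG_ANGLES)   # six orientations visited by a sweep
print(f"fixed: {fixed:.2f}, swept: {swept:.2f}")
```

Under these assumptions the script prints `fixed: 0.50, swept: 1.00`: one orientation clears the threshold only for tags within 45 degrees of alignment, while six orientations 30 degrees apart leave every tag within 15 degrees of some antenna pose.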

7. Future Work

8. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Scanning Method | Tags Read, Low Shelves | Tags Read, High Shelves | Total Read (%) | Energy Used (Wh) |
|---|---|---|---|---|
| Fixed Antenna | 117/500 (23.4%) | 67/350 (19.1%) | 21.6 | 18.2 |
| Predefined Path | 438/500 (87.6%) | 314/350 (89.7%) | 88.5 | 24.5 |
| Dynamic Positioning | 479/500 (95.8%) | 343/350 (98.0%) | 96.7 | 22.1 |
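The Total Read (%) column is consistent with pooling both shelf types, that is, all tags read divided by all 850 tags. The sketch below recomputes that column from the per-shelf counts and, as an extra derived figure that the table itself does not report, the number of tags read per watt-hour. The names `RESULTS` and `summarize` are illustrative, and treating the energy column as the total for each run is an assumption.

```python
# Per-method results copied from the table: (low-shelf reads, low-shelf tags,
# high-shelf reads, high-shelf tags, energy used in Wh).
RESULTS = {
    "Fixed Antenna": (117, 500, 67, 350, 18.2),
    "Predefined Path": (438, 500, 314, 350, 24.5),
    "Dynamic Positioning": (479, 500, 343, 350, 22.1),
}

def summarize(low_read, low_total, high_read, high_total, energy_wh):
    """Return (pooled read rate in %, tags read per Wh), each rounded to one decimal."""
    reads = low_read + high_read
    total_pct = 100.0 * reads / (low_total + high_total)
    tags_per_wh = reads / energy_wh
    return round(total_pct, 1), round(tags_per_wh, 1)

for method, row in RESULTS.items():
    pct, per_wh = summarize(*row)
    print(f"{method}: {pct}% of tags read, {per_wh} tags/Wh")
```

The pooled rates come out as 21.6, 88.5, and 96.7%, matching the table; the derived efficiency is about 10.1, 30.7, and 37.2 tags/Wh, so dynamic positioning reads the most tags while using less energy than the predefined path.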

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alajami, A.A.; Pous, R. The Design of a Vision-Assisted Dynamic Antenna Positioning Radio Frequency Identification-Based Inventory Robot Utilizing a 3-Degree-of-Freedom Manipulator. Sensors 2025, 25, 2418. https://doi.org/10.3390/s25082418
