A Mamba U-Net Model for Reconstruction of Extremely Dark RGGB Images

Abstract

1. Introduction

2. Related Works

2.1. Traditional Algorithms

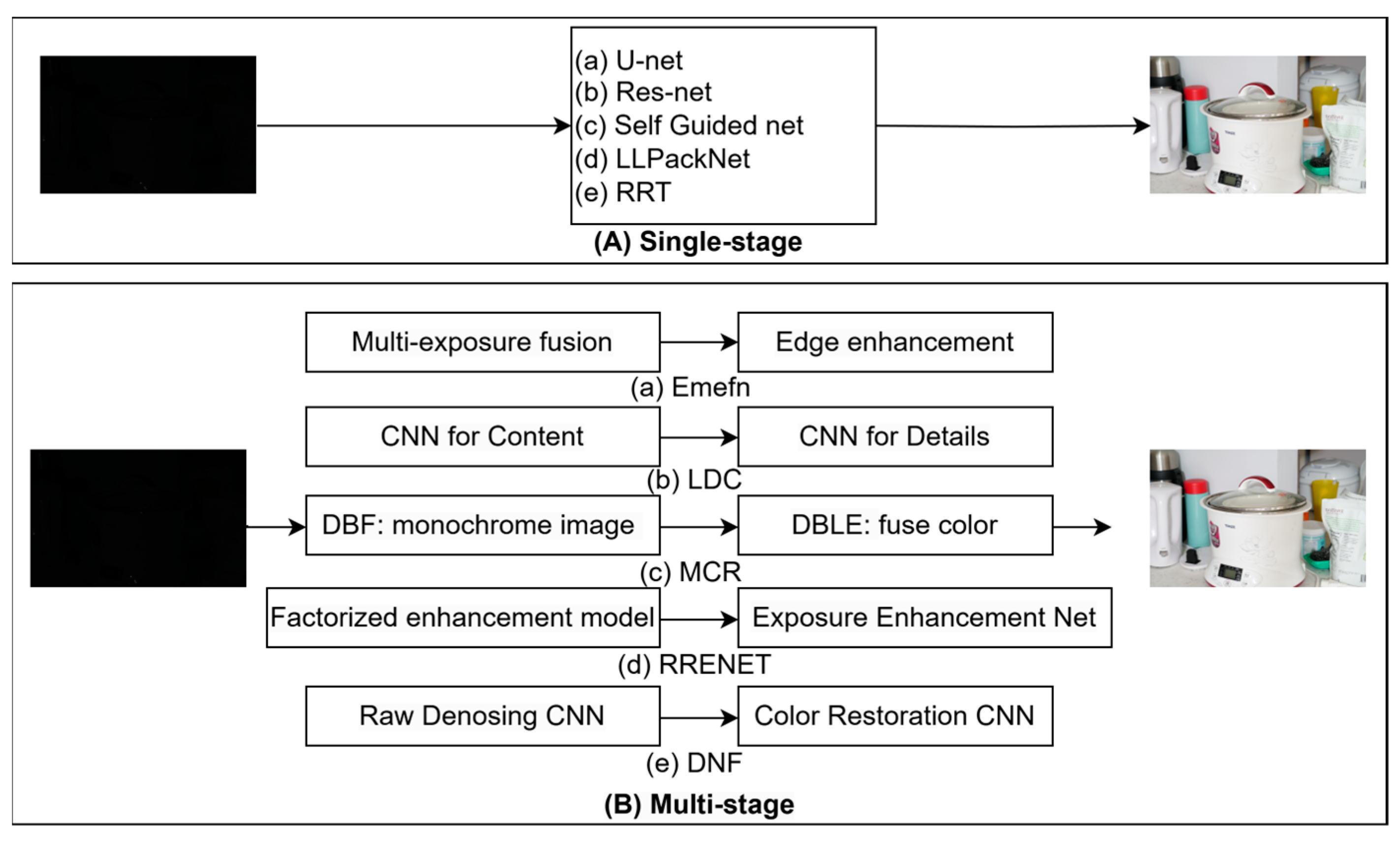

2.2. Single-Stage Learning-Based Algorithms

2.3. Multi-Stage Learning-Based Algorithms

2.4. Datasets Used in Literature

3. Methodology

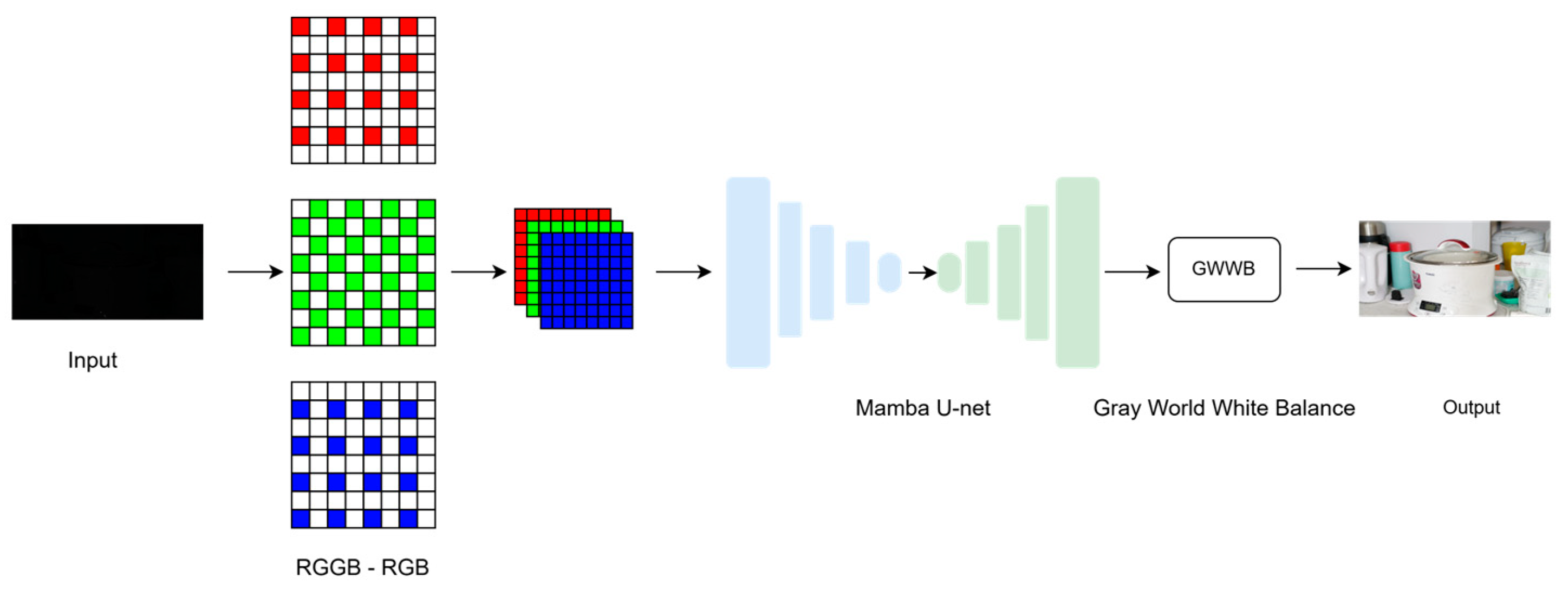

3.1. Proposed Pipeline Structure

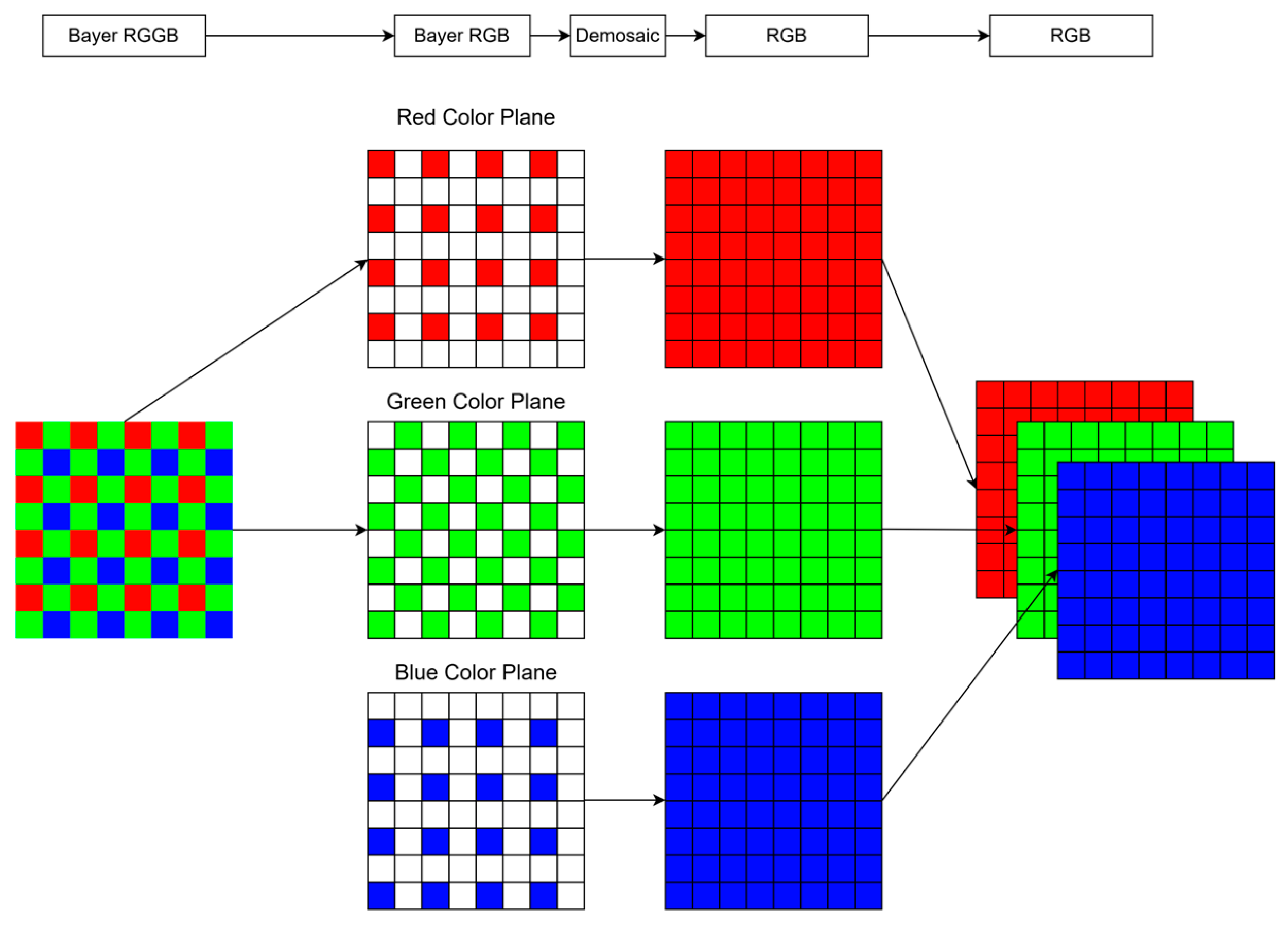

3.2. Image Preprocessing: RGGB to RGB

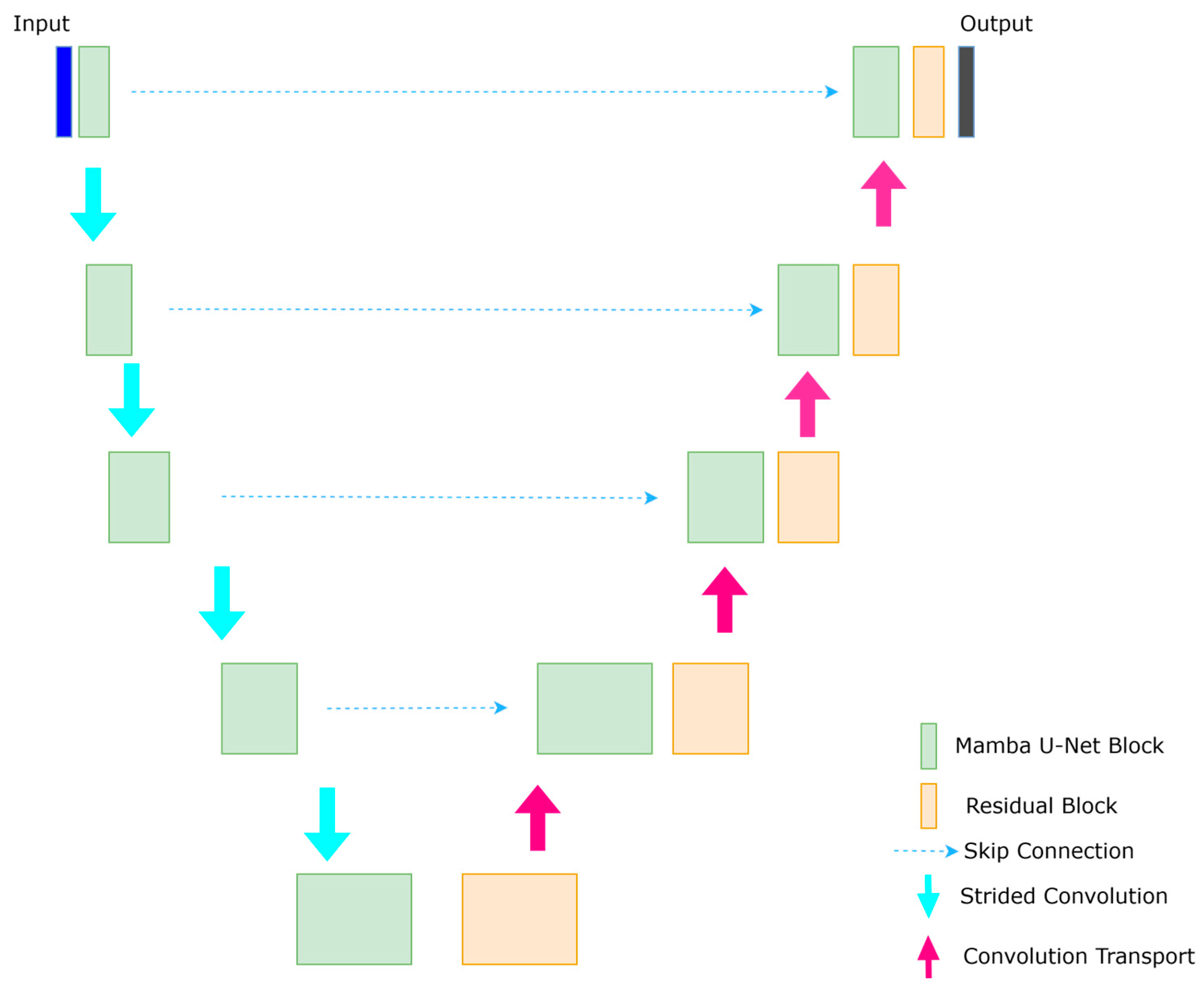

3.3. Mamba U-Net Network

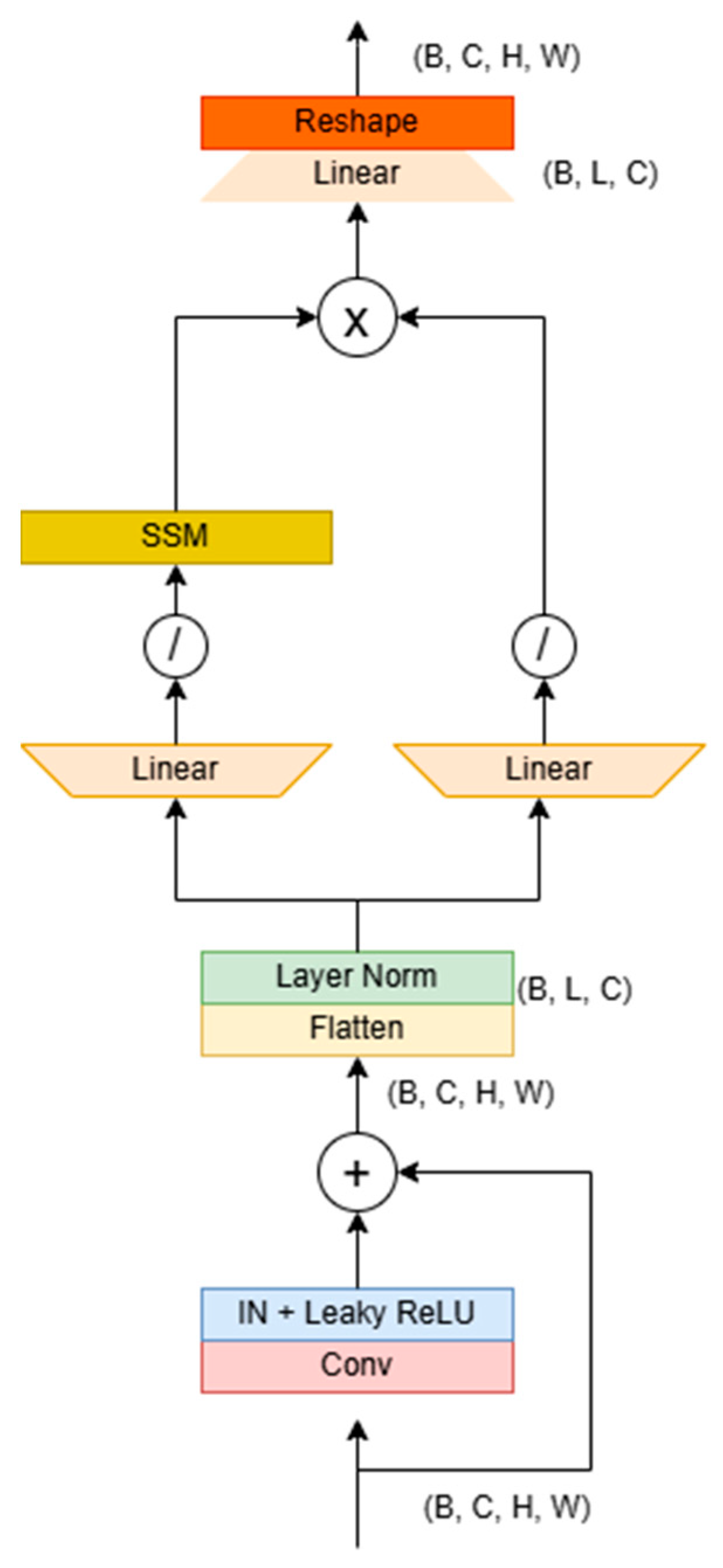

3.4. Mamba U-Net Block

3.5. Gray-World White-Balance Algorithm (GW-WB)
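
As a point of reference for the GW-WB step named in this heading, the classical gray-world assumption holds that the average color of a scene is neutral gray, so each channel is rescaled until its mean matches the overall mean. The sketch below is a generic, pure-Python illustration of that idea and not necessarily the exact variant used in the proposed pipeline; the function name `gray_world_white_balance`, the `max_value` clipping bound, and the tuple-based pixel layout are all assumptions made for illustration.

```python
def gray_world_white_balance(pixels, max_value=1.0):
    """Rescale each RGB channel so its mean equals the mean of the three channel means.

    `pixels` is a list of (r, g, b) tuples; this layout is a hypothetical choice
    made to keep the example dependency-free.
    """
    if not pixels:
        raise ValueError("pixels must be non-empty")
    n = len(pixels)
    # Per-channel means over the whole image
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    # Gray-world target: the average of the three channel means
    gray = sum(means) / 3.0
    # Per-channel gains; an all-zero channel keeps gain 1.0 to avoid division by zero
    gains = [gray / m if m > 0 else 1.0 for m in means]
    # Apply the gains and clip to the valid range
    return [
        tuple(min(max_value, p[c] * gains[c]) for c in range(3))
        for p in pixels
    ]


# Tiny example with a slight blue/green cast
pixels = [(0.2, 0.4, 0.6), (0.4, 0.4, 0.2)]
balanced = gray_world_white_balance(pixels)
```

In practice the same per-channel gains would typically be computed on an image array rather than a list of tuples, and the clipping bound would follow the bit depth of the image.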

4. Experiments and Analysis

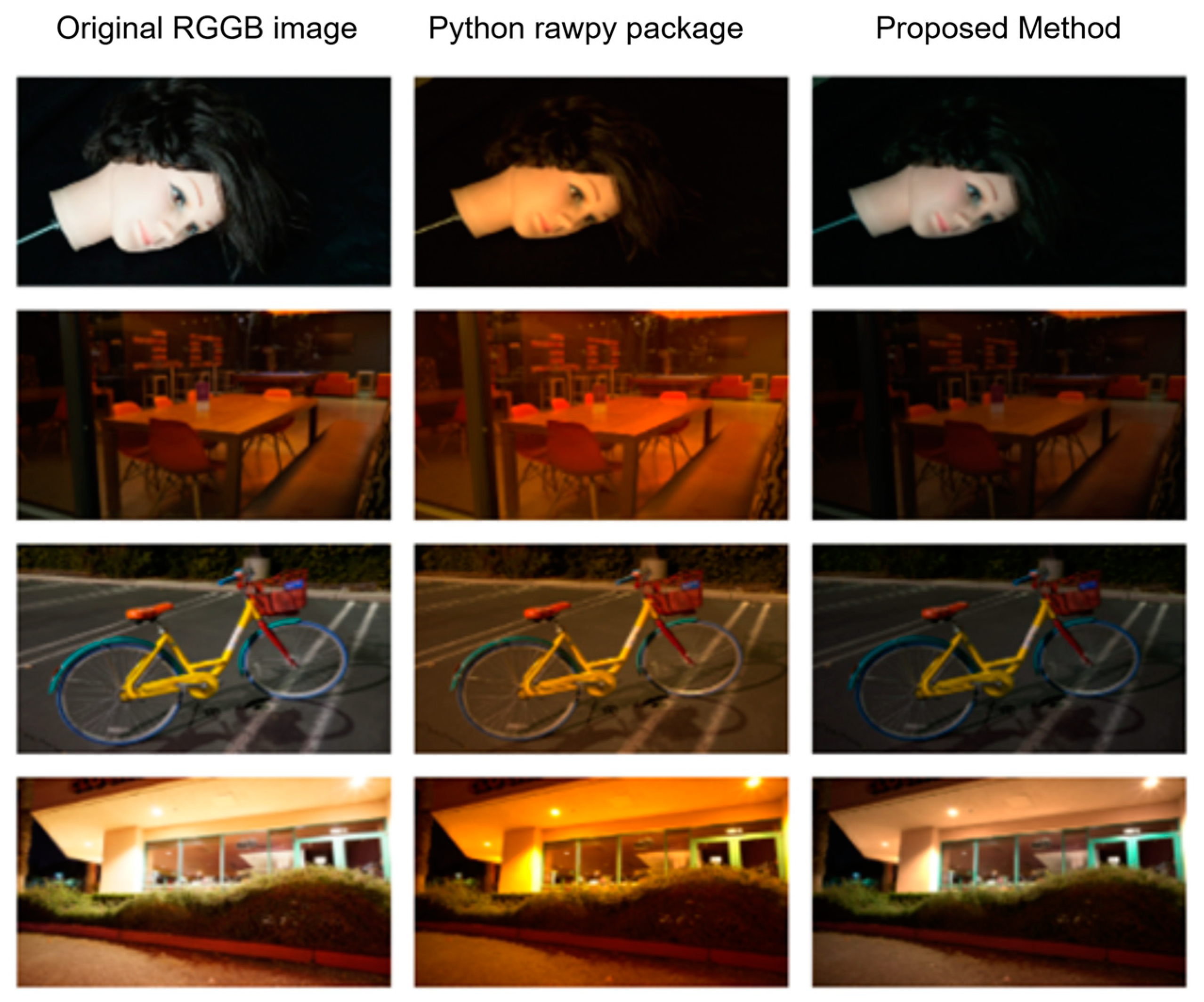

4.1. RGGB to RGB Experiments

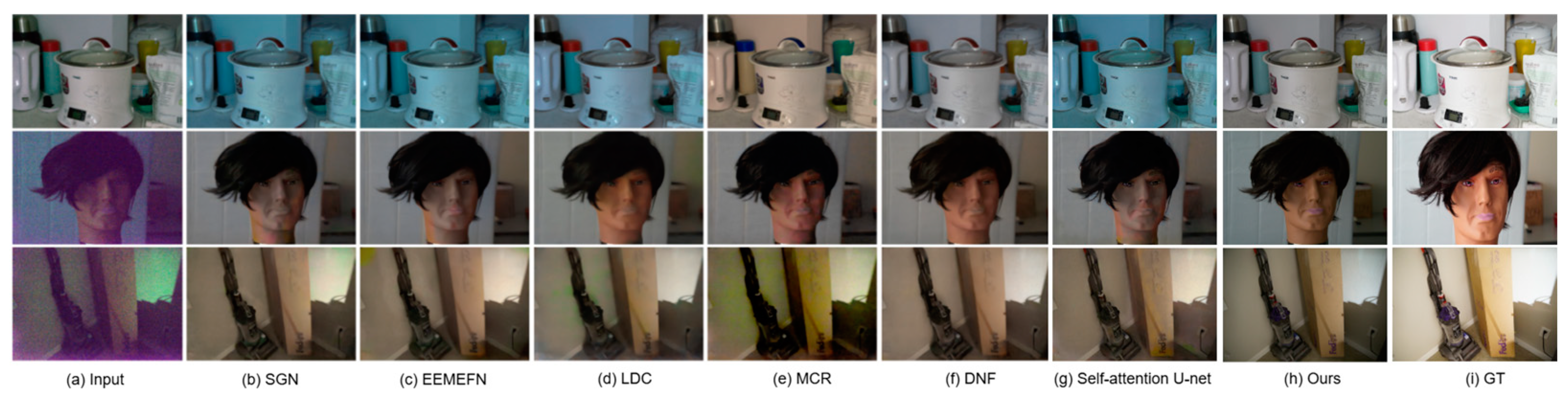

4.2. Comparison with Other State-of-the-Art Methods

4.3. Computational Complexity Analysis and Resource Efficiency Verification

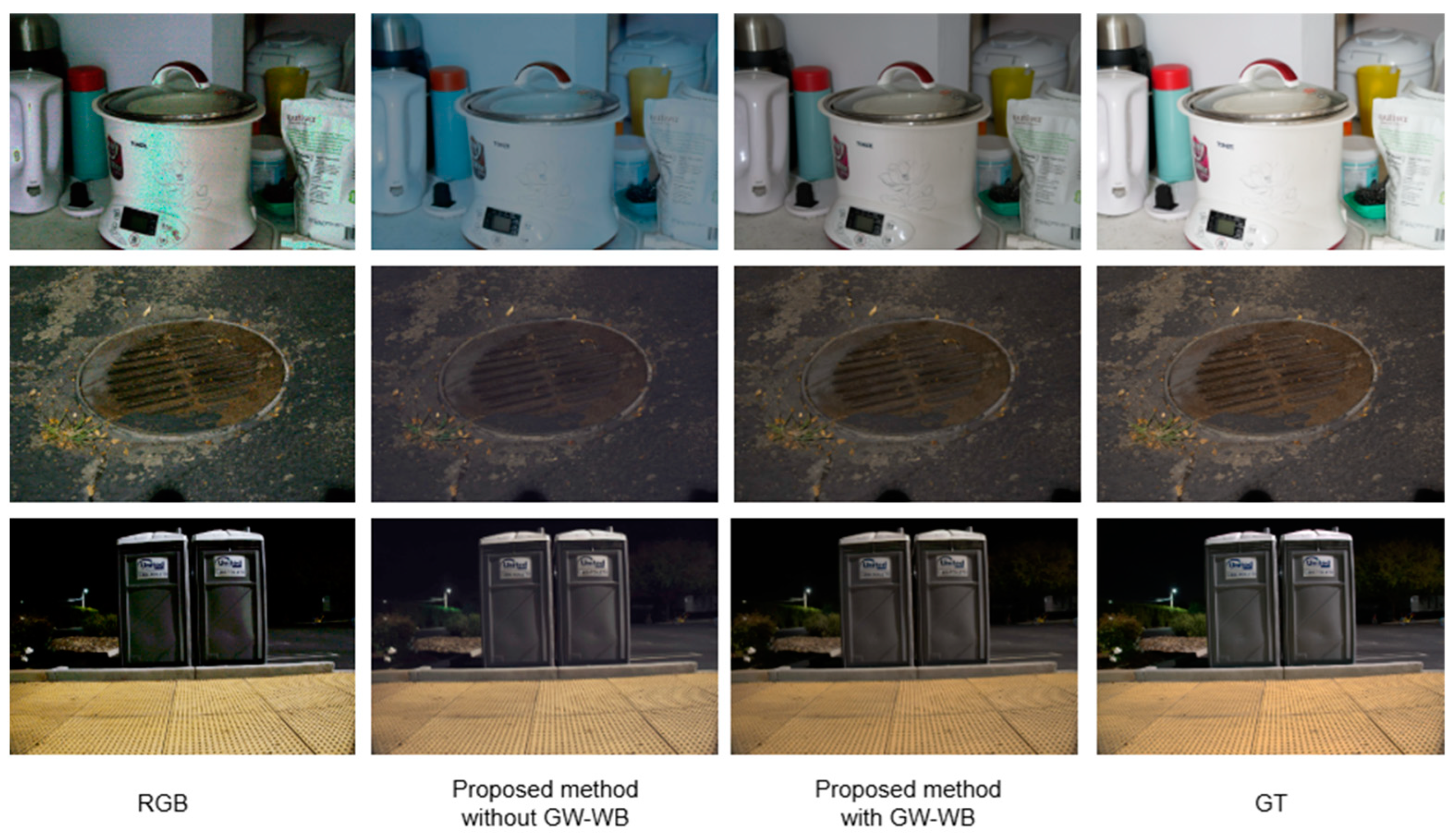

4.4. Ablation Studies

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Gu, A.; Dao, T. Mamba: Linear-time sequence modeling with selective state spaces. arXiv 2023, arXiv:2312.00752.

- Gu, A.; Goel, K.; Ré, C. Efficiently modeling long sequences with structured state spaces. arXiv 2021, arXiv:2111.00396.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Hai, J.; Xuan, Z.; Yang, R.; Hao, Y.; Zou, F.; Lin, F.; Han, S. R2rnet: Low-light image enhancement via real-low to real-normal network. J. Vis. Commun. Image Represent. 2023, 90, 103712.

- Huang, Y.; Zhu, X.; Yuan, F.; Shi, J.; Kintak, U.; Fu, J.; Peng, Y.; Deng, C. A two-stage HDR reconstruction pipeline for extreme dark-light RGGB images. Sci. Rep. 2025, 15, 2847.

- Zunair, H.; Hamza, A.B. Sharp U-Net: Depthwise convolutional network for biomedical image segmentation. Comput. Biol. Med. 2021, 136, 104699.

- Wang, W.; Wu, X.; Yuan, X.; Gao, Z. An experiment-based review of low-light image enhancement methods. IEEE Access 2020, 8, 87884–87917.

- Xu, Q.; Jiang, H.; Scopigno, R.; Sbert, M. A novel approach for enhancing very dark image sequences. Signal Process. 2014, 103, 309–330.

- Feng, Z.; Hao, S. Low-light image enhancement by refining illumination map with self-guided filtering. In Proceedings of the 2017 IEEE International Conference on Big Knowledge (ICBK), Hefei, China, 9–10 August 2017; pp. 183–187.

- Srinivas, K.; Bhandari, A.K. Low light image enhancement with adaptive sigmoid transfer function. IET Image Process. 2020, 14, 668–678.

- Kim, W.; Lee, R.; Park, M.; Lee, S.H. Low-light image enhancement based on maximal diffusion values. IEEE Access 2019, 7, 129150–129163.

- Lee, S.; Kim, N.; Paik, J. Adaptively partitioned block-based contrast enhancement and its application to low light-level video surveillance. SpringerPlus 2015, 4, 431.

- Land, E.H.; McCann, J.J. Lightness and retinex theory. JOSA 1971, 61, 1–11.

- Jobson, D.J.; Rahman, Z.; Woodell, G.A. Properties and performance of a center/surround retinex. IEEE Trans. Image Process. 1997, 6, 451–462.

- Rahman, Z.; Jobson, D.J.; Woodell, G.A. Multi-scale retinex for color image enhancement. In Proceedings of the 3rd IEEE International Conference on Image Processing, Lausanne, Switzerland, 19 September 1996; Volume 3, pp. 1003–1006.

- Jobson, D.J.; Rahman, Z.; Woodell, G.A. A multiscale retinex for bridging the gap between color images and the human observation of scenes. IEEE Trans. Image Process. 1997, 6, 965–976.

- Wang, M.; Tian, Z.; Gui, W.; Zhang, X.; Wang, W. Low-light image enhancement based on nonsubsampled shearlet transform. IEEE Access 2020, 8, 63162–63174.

- Chen, C.; Chen, Q.; Xu, J.; Koltun, V. Learning to see in the dark. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3291–3300.

- Chen, C.; Chen, Q.; Do, M.N.; Koltun, V. Seeing motion in the dark. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 3185–3194.

- Cai, Y.; Kintak, U. Low-light image enhancement based on modified U-Net. In Proceedings of the 2019 International Conference on Wavelet Analysis and Pattern Recognition (ICWAPR), Kobe, Japan, 7–10 July 2019; pp. 1–7.

- Maharjan, P.; Li, L.; Li, Z.; Xu, N.; Ma, C.; Li, Y. Improving extreme low-light image denoising via residual learning. In Proceedings of the 2019 IEEE International Conference on Multimedia and Expo (ICME), Shanghai, China, 8–12 July 2019; pp. 916–921.

- Gu, S.; Li, Y.; Gool, L.V.; Timofte, R. Self-guided network for fast image denoising. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 2511–2520.

- Lamba, M.; Balaji, A.; Mitra, K. Towards fast and light-weight restoration of dark images. arXiv 2020, arXiv:2011.14133.

- Lamba, M.; Mitra, K. Restoring extremely dark images in real time. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 3487–3497.

- Zhu, M.; Pan, P.; Chen, W.; Yang, Y. Eemefn: Low-light image enhancement via edge-enhanced multi-exposure fusion network. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 13106–13113.

- Xu, K.; Yang, X.; Yin, B.; Lau, R.W. Learning to restore low-light images via decomposition-and-enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 2281–2290.

- Dong, X.; Xu, W.; Miao, Z.; Ma, L.; Zhang, C.; Yang, J.; Jin, Z.; Jin Teoh, A.B.; Shen, J. Abandoning the bayer-filter to see in the dark. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 17431–17440.

- Huang, H.; Yang, W.; Hu, Y.; Liu, J.; Duan, L.-Y. Towards low light enhancement with raw images. IEEE Trans. Image Process. 2022, 31, 1391–1405.

- Jin, X.; Han, L.H.; Li, Z.; Guo, C.L.; Chai, Z.; Li, C. Dnf: Decouple and feedback network for seeing in the dark. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 18135–18144.

- Anaya, J.; Barbu, A. RENOIR - A dataset for real low-light image noise reduction. J. Vis. Commun. Image Represent. 2018, 51, 144–154.

- Plotz, T.; Roth, S. Benchmarking denoising algorithms with real photographs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1586–1595.

- Wei, C.; Wang, W.; Yang, W.; Liu, J. Deep retinex decomposition for low-light enhancement. arXiv 2018, arXiv:1808.04560.

- Loh, Y.P.; Chan, C.S. Getting to know low-light images with the exclusively dark dataset. Comput. Vis. Image Underst. 2019, 178, 30–42.

- Malvar, H.S.; He, L.; Cutler, R. High-quality linear interpolation for demosaicing of Bayer-patterned color images. In Proceedings of the 2004 IEEE International Conference on Acoustics, Speech, and Signal Processing, Montreal, QC, Canada, 17–21 May 2004.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015, Proceedings of the 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III 18; Springer International Publishing: Cham, Switzerland, 2015; pp. 234–241.

- Cao, H.; Wang, Y.; Chen, J.; Jiang, D.; Zhang, X.; Tian, Q.; Wang, M. Swin-Unet: Unet-like Pure Transformer for Medical Image Segmentation; European Conference on Computer Vision; Springer Nature: Cham, Switzerland, 2022; pp. 205–218.

- Ma, J.; Li, F.; Wang, B. U-mamba: Enhancing long-range dependency for biomedical image segmentation. arXiv 2024, arXiv:2401.04722.

- Wang, Z.; Zheng, J.Q.; Zhang, Y.; Cui, G.; Li, L. Mamba-unet: Unet-like pure visual mamba for medical image segmentation. arXiv 2024, arXiv:2402.05079.

- Zheng, Z.; Wu, C. U-shaped vision mamba for single image dehazing. arXiv 2024, arXiv:2402.04139.

- Ulyanov, D. Instance normalization: The missing ingredient for fast stylization. arXiv 2016, arXiv:1607.08022.

- Maas, A.L.; Hannun, A.Y.; Ng, A.Y. Rectifier nonlinearities improve neural network acoustic models. Proc. ICML 2013, 30, 3.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Hendrycks, D.; Gimpel, K. Gaussian error linear units (gelus). arXiv 2016, arXiv:1606.08415.

- Xu, R.; Yang, S.; Wang, Y.; Cai, Y.; Du, B.; Chen, H. Visual mamba: A survey and new outlooks. arXiv 2024, arXiv:2404.18861.

- Zhu, L.; Liao, B.; Zhang, Q.; Wang, X.; Liu, W.; Wang, X. Vision mamba: Efficient visual representation learning with bidirectional state space model. arXiv 2024, arXiv:2401.09417.

- Liu, Y.; Tian, Y.; Zhao, Y.; Yu, H.; Xie, L.; Wang, Y.; Ye, Q.; Jiao, J.; Liu, Y. Vmamba: Visual state space model. arXiv 2024, arXiv:2401.10166.

- Pei, X.; Huang, T.; Xu, C. Efficientvmamba: Atrous selective scan for light weight visual mamba. arXiv 2024, arXiv:2403.09977.

| Type | Original RGGB File Size | Python Rawpy Package RGB File Size | Bilinear Interpolation RGB File Size |
|---|---|---|---|
| Black Background Object | 23.57 MB | 19.37 MB | 1.21 MB |
| Indoor | 23.5 MB | 11.62 MB | 0.41 MB |
| Object | 23.5 MB | 14.81 MB | 0.88 MB |
| Outdoor | 23.55 MB | 15.63 MB | 1.13 MB |

| Type | Python Rawpy Package PSNR↑/SSIM↑ | Bilinear Interpolation PSNR↑/SSIM↑ |
|---|---|---|
| Black Background Object | 41.94/0.8805 | 45.70/0.9734 |
| Indoor | 44.47/0.9974 | 46.12/0.9993 |
| Object | 47.09/0.9943 | 48.93/0.9996 |
| Outdoor | 37.20/0.8758 | 48.93/0.9841 |
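
For readers unfamiliar with the PSNR figures reported above, the metric follows the standard definition 10·log10(MAX²/MSE), where MSE is the mean squared error between the reference and the reconstruction. A minimal pure-Python sketch is given below; the function name `psnr`, the flat pixel-sequence input, and the 8-bit peak value of 255 are assumptions for illustration, and the evaluation code actually used for the table may differ (for example in bit depth or color handling).

```python
import math


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel sequences."""
    if len(reference) != len(estimate) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error over all pixels
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical inputs: PSNR is unbounded
    return 10.0 * math.log10(max_value ** 2 / mse)


reference = [0, 64, 128, 255]
estimate = [1, 63, 129, 254]  # every pixel off by one level, so MSE = 1
print(round(psnr(reference, estimate), 2))  # prints 48.13
```

Higher values indicate a reconstruction closer to the reference, which is why the table marks PSNR with an upward arrow.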

| Category | Method | PSNR↑ | SSIM↑ |
|---|---|---|---|
| Single-Stage | DID [21] | 29.16 | 0.785 |
| Single-Stage | SGN [22] | 29.28 | 0.790 |
| Single-Stage | LLPackNet [23] | 27.83 | 0.755 |
| Single-Stage | RRT [24] | 28.66 | 0.790 |
| Single-Stage | Self-Attention U-Net [6] | 29.17 | 0.788 |
| Single-Stage | Ours | 29.34 | 0.793 |
| Multi-Stage | EEMEFN [25] | 29.60 | 0.795 |
| Multi-Stage | LDC [26] | 29.56 | 0.799 |
| Multi-Stage | MCR [27] | 29.65 | 0.797 |
| Multi-Stage | RRENet [28] | 29.17 | 0.792 |
| Multi-Stage | DNF [29] | 30.62 | 0.797 |
| Multi-Stage | Self-Attention + HDR [6] | 30.78 | 0.799 |

| Method | Parameters | FLOPs |
|---|---|---|
| SID [18] | 7.7 M | 48.5 G |
| Single Self-Attention [6] | 33.4 M | 148.7 G |
| Proposed Mamba U-Net | 2.3 M | 20.9 G |

| Method | PSNR↑ | SSIM↑ |
|---|---|---|
| Standard U-Net RGB [19] | 26.96 | 0.694 |
| Proposed without GW-WB | 29.12 | 0.789 |
| Proposed with GW-WB | 29.34 | 0.793 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Huang, Y.; Zhu, X.; Yuan, F.; Shi, J.; U, K.; Qin, J.; Kong, X.; Peng, Y. A Mamba U-Net Model for Reconstruction of Extremely Dark RGGB Images. Sensors 2025, 25, 2464. https://doi.org/10.3390/s25082464
