VariGAN: Enhancing Image Style Transfer via UNet Generator, Depthwise Discriminator, and LPIPS Loss in Adversarial Learning Framework

Abstract

1. Introduction

2. The Contributions of Our Work

- We use a UNet generator with a self-attention mechanism to enhance detail processing and a global ResNet block to fuse attention features (Q, K, V).

- We introduce a depthwise discriminator, built from depthwise and pointwise convolutions, to refine and stabilize training.

- We incorporate an LPIPS term into the loss function to improve perceptual similarity.

- Detailed qualitative and quantitative evaluations validate the efficacy and robustness of VariGAN.
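The first contribution refers to attention features (Q, K, V). As a rough illustration of what fusing such features involves, the sketch below implements generic scaled dot-product self-attention over a flattened feature map in plain Python. It is not the VariGAN block itself: the function names, the projection matrices `w_q`, `w_k`, `w_v`, and the toy input are all assumptions made for this example.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    return [list(col) for col in zip(*m)]


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, w_q, w_k, w_v):
    """Generic scaled dot-product self-attention (illustrative only).

    x is an N x C matrix: N spatial positions, C channels each.
    Returns the attended features and the N x N attention weights.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    scale = math.sqrt(len(q[0]))
    scores = [[s / scale for s in row] for row in matmul(q, transpose(k))]
    weights = [softmax(row) for row in scores]
    return matmul(weights, v), weights


# Toy input: 3 positions with 2 channels, identity projections (assumed values).
identity = [[1.0, 0.0], [0.0, 1.0]]
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended, attn = self_attention(features, identity, identity, identity)
```

Each row of `attn` sums to one, so every output position is a weighted average of the value vectors at all positions, which is how attention lets distant regions of an image influence one another.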

3. Related Work

3.1. Style Transfer of Images

3.2. Semantic Segmentation Model Based on Deep Learning

3.3. Encoder–Decoder Architecture

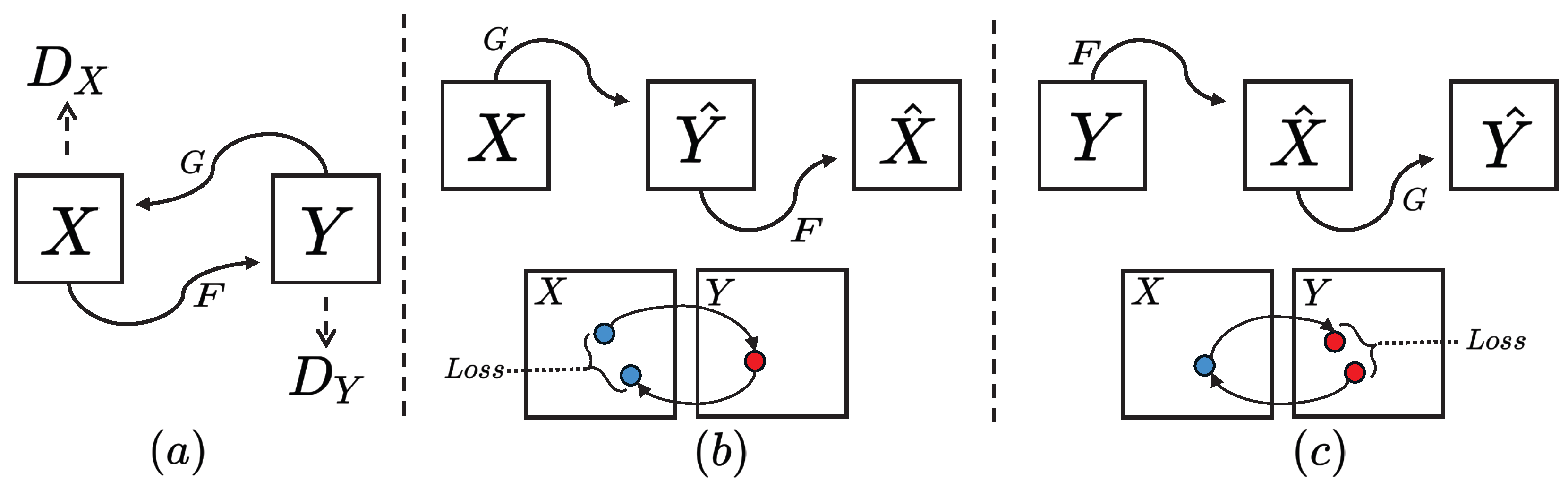

3.4. CycleGAN

4. Methodology

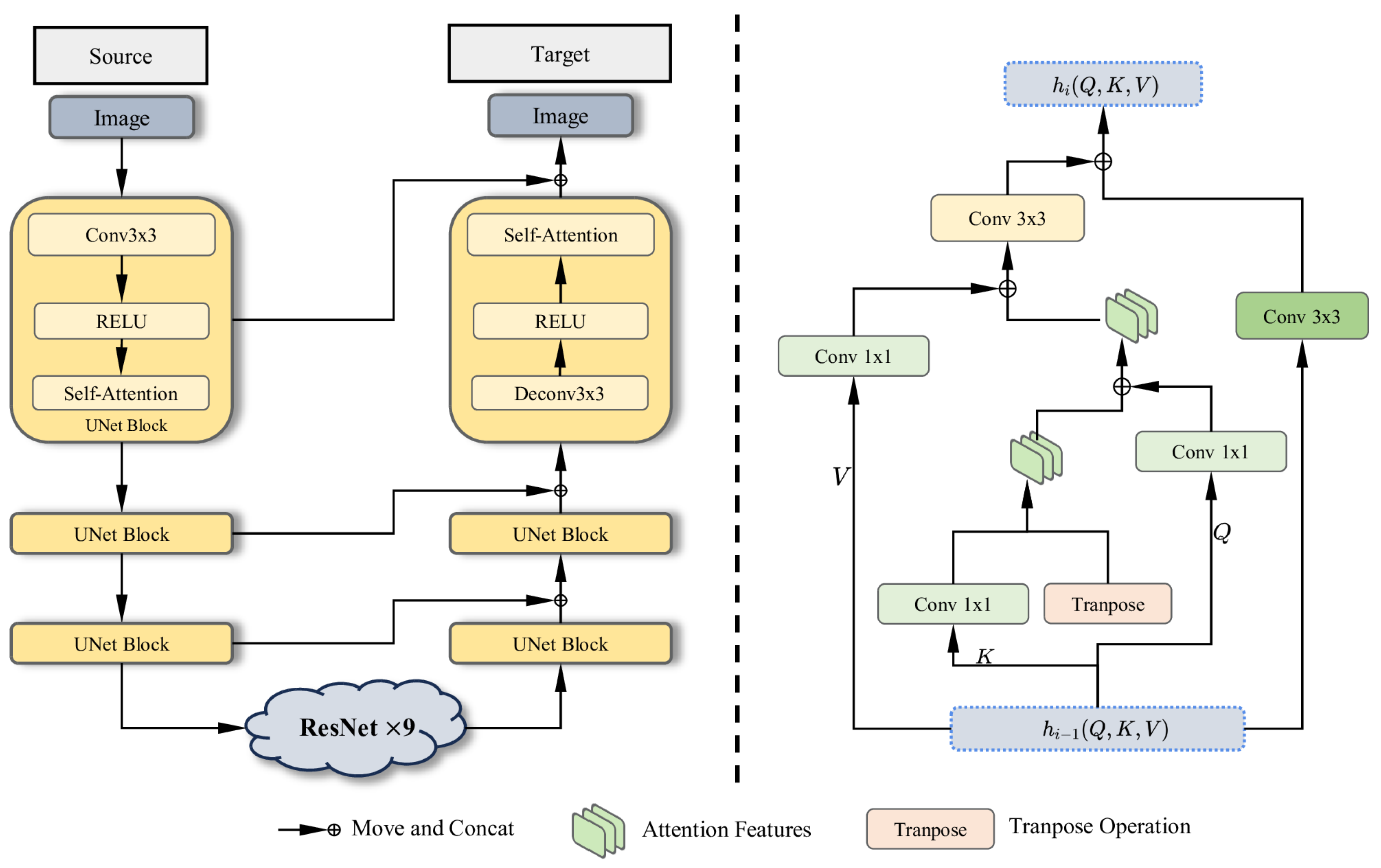

4.1. UNet-Like Generator with Self-Attention Block

4.2. Globally Connected ResNet Block

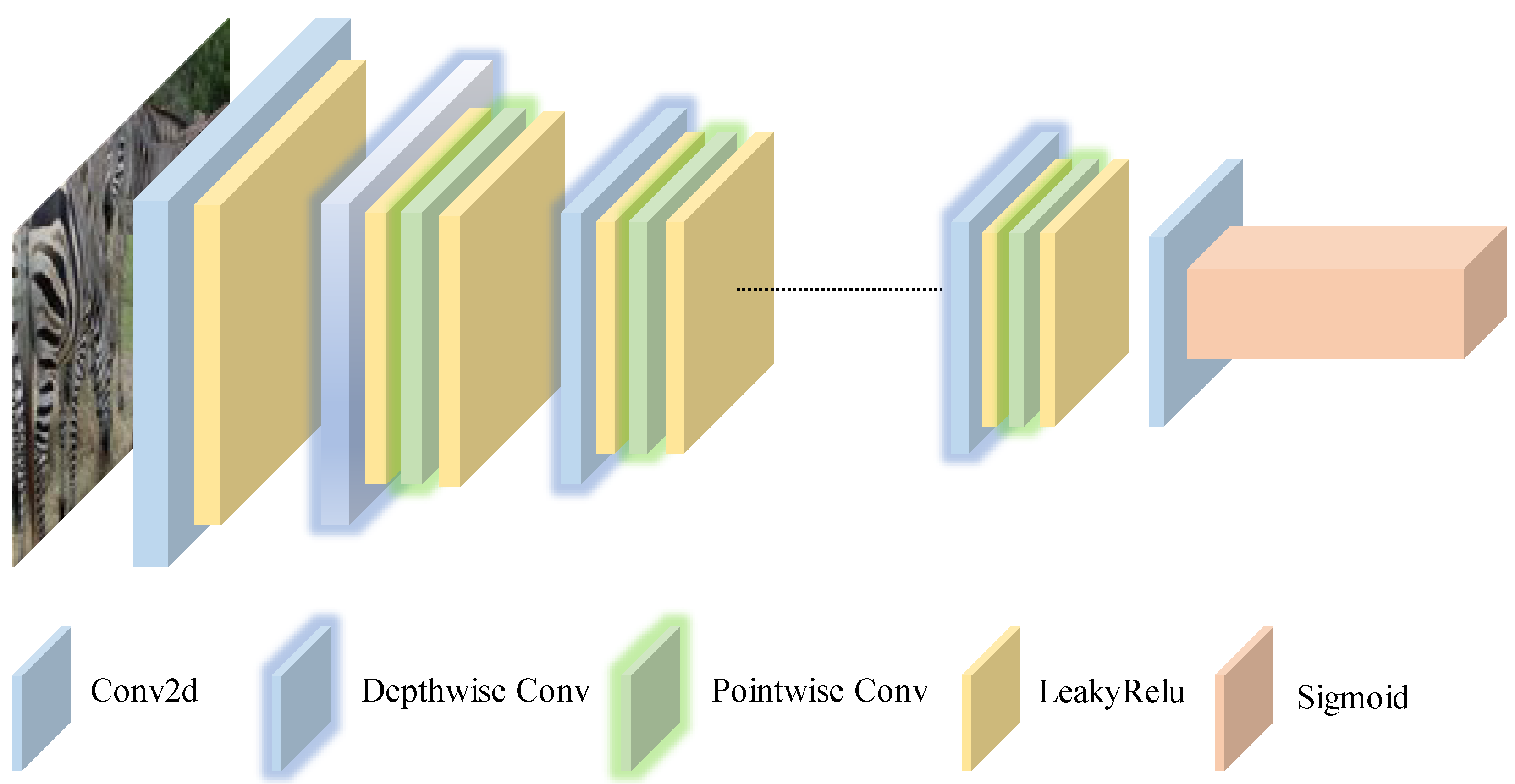

4.3. Depthwise Discriminator with Depthwise and Pointwise Convolution

4.4. Loss Function

5. Experiments

5.1. Implementation Details

5.2. Datasets and Evaluation Metrics

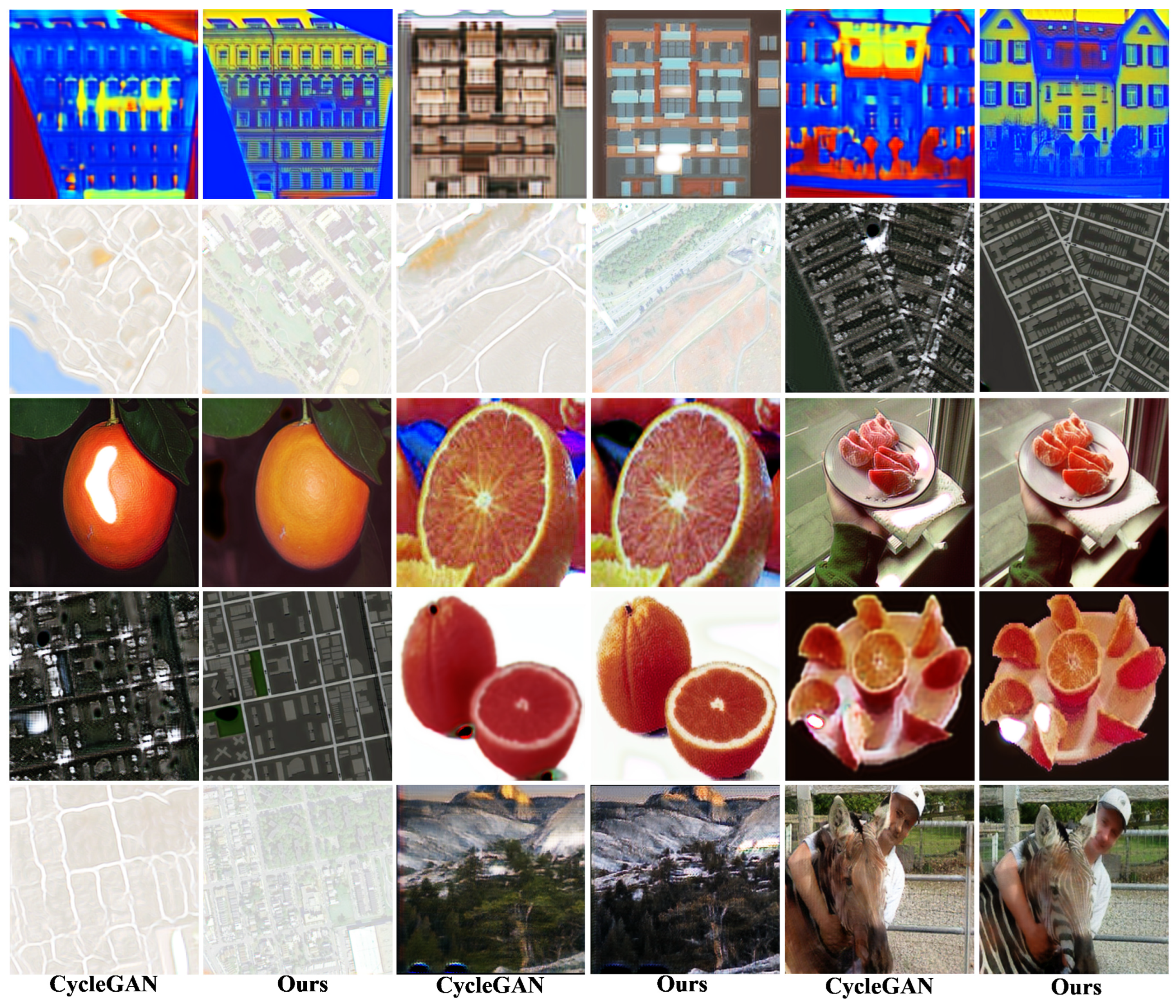

5.3. Qualitative Experiment

5.4. Quantitative Experiment

5.5. Ablation Experiment

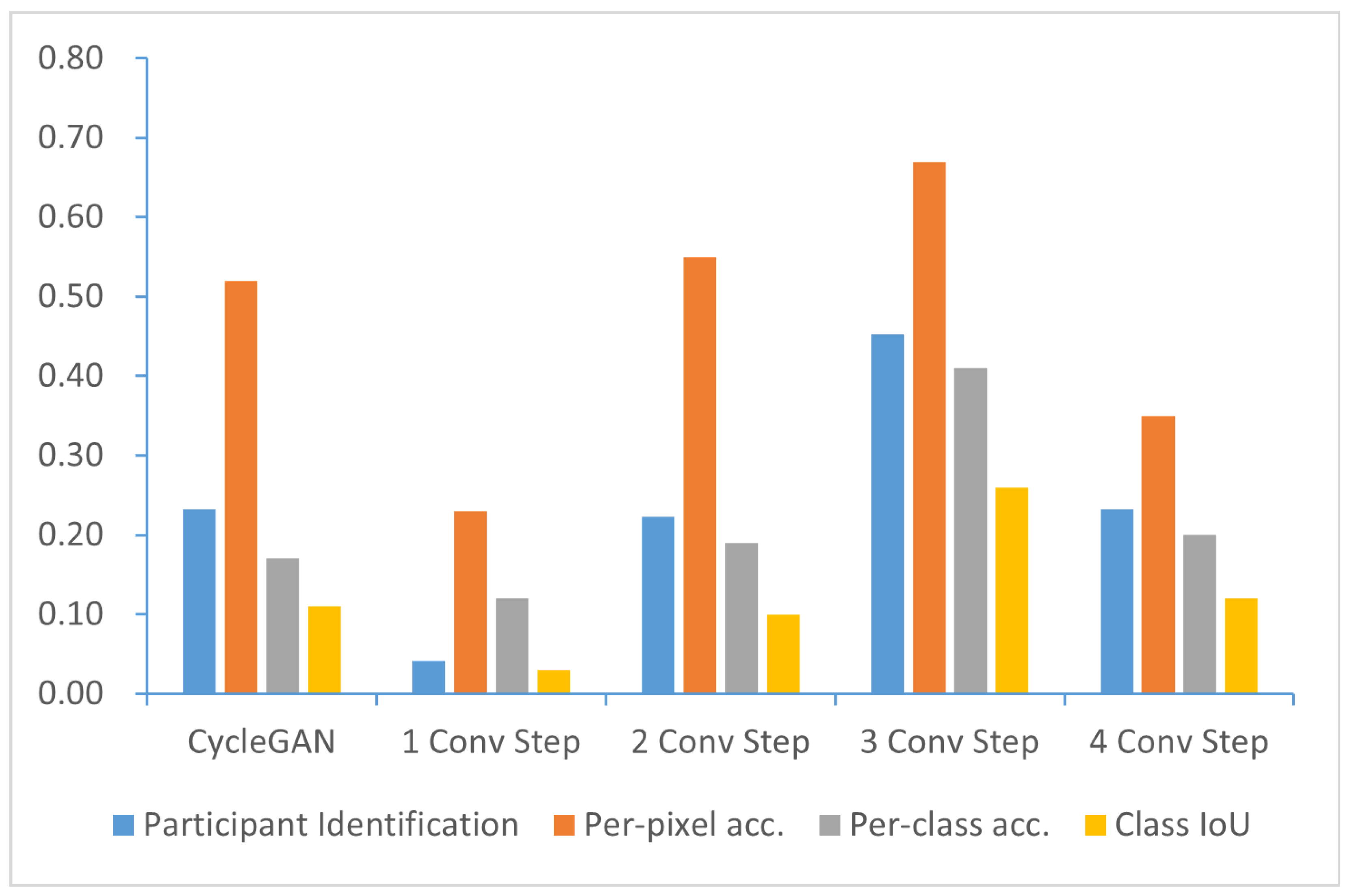

5.6. Layer Choice of UNet Generator

5.7. Qualitative Experiments on Training Efficiency of the Depthwise Discriminator

5.8. An Analysis of the VariGAN Error Cases

6. Conclusions

7. Limitation and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Hutson, J.; Plate, D.; Berry, K. Embracing AI in English composition: Insights and innovations in hybrid pedagogical practices. Int. J. Changes Educ. 2024, 1, 19–31.

- Nikolopoulou, K. Generative artificial intelligence in higher education: Exploring ways of harnessing pedagogical practices with the assistance of ChatGPT. Int. J. Changes Educ. 2024, 1, 103–111.

- Creely, E. Exploring the role of generative AI in enhancing language learning: Opportunities and challenges. Int. J. Changes Educ. 2024, 1, 158–167.

- Gatys, L.A.; Ecker, A.S.; Bethge, M. A Neural Algorithm of Artistic Style. arXiv 2015, arXiv:1508.06576.

- Huang, X.; Belongie, S. Arbitrary Style Transfer in Real-time with Adaptive Instance Normalization. arXiv 2017, arXiv:1703.06868.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144.

- Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-Image Translation with Conditional Adversarial Networks. arXiv 2018, arXiv:1611.07004.

- Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks. arXiv 2020, arXiv:1703.10593.

- He, B.; Gao, F.; Ma, D.; Shi, B.; Duan, L.Y. ChipGAN: A Generative Adversarial Network for Chinese Ink Wash Painting Style Transfer. In Proceedings of the 26th ACM International Conference on Multimedia (MM’18), New York, NY, USA, 10–16 October 2018; pp. 1172–1180.

- Eigen, D.; Fergus, R. Predicting Depth, Surface Normals and Semantic Labels with a Common Multi-Scale Convolutional Architecture. arXiv 2015, arXiv:1411.4734.

- Laffont, P.Y.; Ren, Z.; Tao, X.; Qian, C.; Hays, J. Transient attributes for high-level understanding and editing of outdoor scenes. ACM Trans. Graph. 2014, 33, 1–11.

- Shih, Y.; Paris, S.; Durand, F.; Freeman, W.T. Data-driven hallucination of different times of day from a single outdoor photo. ACM Trans. Graph. 2013, 32, 1–11.

- Karras, T.; Laine, S.; Aila, T. A Style-Based Generator Architecture for Generative Adversarial Networks. arXiv 2019, arXiv:1812.04948.

- Karras, T.; Laine, S.; Aittala, M.; Hellsten, J.; Lehtinen, J.; Aila, T. Analyzing and Improving the Image Quality of StyleGAN. arXiv 2020, arXiv:1912.04958.

- Karras, T.; Aittala, M.; Laine, S.; Härkönen, E.; Hellsten, J.; Lehtinen, J.; Aila, T. Alias-Free Generative Adversarial Networks. In Proceedings of the Advances in Neural Information Processing Systems; Ranzato, M., Beygelzimer, A., Dauphin, Y., Liang, P., Vaughan, J.W., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2021; Volume 34, pp. 852–863.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Yi, Z.; Zhang, H.; Tan, P.; Gong, M. DualGAN: Unsupervised Dual Learning for Image-to-Image Translation. arXiv 2018, arXiv:1704.02510.

- Kim, T.; Cha, M.; Kim, H.; Lee, J.K.; Kim, J. Learning to Discover Cross-Domain Relations with Generative Adversarial Networks. arXiv 2017, arXiv:1703.05192.

- Ho, J.; Jain, A.; Abbeel, P. Denoising Diffusion Probabilistic Models. arXiv 2020, arXiv:2006.11239.

- Hu, J.; Xing, X.; Zhang, J.; Yu, Q. VectorPainter: Advanced Stylized Vector Graphics Synthesis Using Stroke-Style Priors. arXiv 2025, arXiv:2405.02962.

- Xing, P.; Wang, H.; Sun, Y.; Wang, Q.; Bai, X.; Ai, H.; Huang, R.; Li, Z. CSGO: Content-Style Composition in Text-to-Image Generation. arXiv 2024, arXiv:2408.16766.

- Murugesan, S.; Naganathan, A. Edge Computing Based Cardiac Monitoring and Detection System in the Internet of Medical Things. Medinformatics 2024.

- Que, Y.; Dai, Y.; Ji, X.; Kwan Leung, A.; Chen, Z.; Jiang, Z.; Tang, Y. Automatic classification of asphalt pavement cracks using a novel integrated generative adversarial networks and improved VGG model. Eng. Struct. 2023, 277, 115406.

- Kodali, N.; Abernethy, J.; Hays, J.; Kira, Z. On Convergence and Stability of GANs. arXiv 2017, arXiv:1705.07215.

- Zheng, S.; Gao, P.; Zhou, P.; Qin, J. Puff-Net: Efficient Style Transfer with Pure Content and Style Feature Fusion Network. arXiv 2024, arXiv:2405.19775.

- Qi, T.; Fang, S.; Wu, Y.; Xie, H.; Liu, J.; Chen, L.; He, Q.; Zhang, Y. DEADiff: An Efficient Stylization Diffusion Model with Disentangled Representations. arXiv 2024, arXiv:2403.06951.

- Yu, X.Y.; Yu, J.X.; Zhou, L.B.; Wei, Y.; Ou, L.L. InstantStyleGaussian: Efficient Art Style Transfer with 3D Gaussian Splatting. arXiv 2024, arXiv:2408.04249.

- Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. arXiv 2015, arXiv:1411.4038.

- Hua, C.; Luan, S.; Zhang, Q.; Fu, J. Graph Neural Networks Intersect Probabilistic Graphical Models: A Survey. arXiv 2023, arXiv:2206.06089.

- Huang, H.; Lin, L.; Tong, R.; Hu, H.; Zhang, Q.; Iwamoto, Y.; Han, X.; Chen, Y.W.; Wu, J. UNet 3+: A Full-Scale Connected UNet for Medical Image Segmentation. arXiv 2020, arXiv:2004.08790.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. arXiv 2014, arXiv:1311.2524.

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Zunair, H.; Hamza, A.B. Masked Supervised Learning for Semantic Segmentation. arXiv 2022, arXiv:2210.00923.

- Ibtehaz, N.; Rahman, M.S. MultiResUNet: Rethinking the U-Net architecture for multimodal biomedical image segmentation. Neural Netw. 2020, 121, 74–87.

- Lan, L.; Cai, P.; Jiang, L.; Liu, X.; Li, Y.; Zhang, Y. BRAU-Net++: U-Shaped Hybrid CNN-Transformer Network for Medical Image Segmentation. arXiv 2024, arXiv:2401.00722.

- Zunair, H.; Ben Hamza, A. Sharp U-Net: Depthwise convolutional network for biomedical image segmentation. Comput. Biol. Med. 2021, 136, 104699.

- Vincent, P.; Larochelle, H.; Lajoie, I.; Bengio, Y.; Manzagol, P.A. Stacked Denoising Autoencoders: Learning Useful Representations in a Deep Network with a Local Denoising Criterion. J. Mach. Learn. Res. 2010, 11, 3371–3408.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. arXiv 2015, arXiv:1505.04597.

- Johnson, J.; Alahi, A.; Fei-Fei, L. Perceptual Losses for Real-Time Style Transfer and Super-Resolution. arXiv 2016, arXiv:1603.08155.

- Zhang, H.; Goodfellow, I.; Metaxas, D.; Odena, A. Self-Attention Generative Adversarial Networks. arXiv 2019, arXiv:1805.08318.

- Park, T.; Liu, M.Y.; Wang, T.C.; Zhu, J.Y. Semantic Image Synthesis with Spatially-Adaptive Normalization. arXiv 2019, arXiv:1903.07291.

- Dwibedi, D.; Aytar, Y.; Tompson, J.; Sermanet, P.; Zisserman, A. Temporal Cycle-Consistency Learning. arXiv 2019, arXiv:1904.07846.

- Davenport, J.L.; Potter, M.C. Scene Consistency in Object and Background Perception. Psychol. Sci. 2004, 15, 559–564.

- Chen, X.; Li, Y.; Yao, L.; Adeli, E.; Zhang, Y. Generative Adversarial U-Net for Domain-free Medical Image Augmentation. arXiv 2021, arXiv:2101.04793.

- Ji, Y.; Zhang, H.; Zhang, Z.; Liu, M. CNN-based encoder-decoder networks for salient object detection: A comprehensive review and recent advances. Inf. Sci. 2021, 546, 835–857.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st International Conference on Neural Information Processing Systems (NIPS’17), Long Beach, CA, USA, 4–9 December 2017; pp. 6000–6010.

- Targ, S.; Almeida, D.; Lyman, K. Resnet in Resnet: Generalizing Residual Architectures. arXiv 2016, arXiv:1603.08029.

- Orhan, A.E.; Pitkow, X. Skip Connections Eliminate Singularities. arXiv 2018, arXiv:1701.09175.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2021, arXiv:2010.11929.

- Liu, J.; Bhatti, U.A.; Zhang, J.; Zhang, Y.; Huang, M. EF-VPT-Net: Enhanced Feature-Based Vision Patch Transformer Network for Accurate Brain Tumor Segmentation in Magnetic Resonance Imaging. IEEE J. Biomed. Health Inform. 2025, 1–14.

- Chen, Y.; Dai, X.; Liu, M.; Chen, D.; Yuan, L.; Liu, Z. Dynamic ReLU. arXiv 2020, arXiv:2003.10027.

- Zhang, Y.; Tian, Y.; Kong, Y.; Zhong, B.; Fu, Y. Residual Dense Network for Image Super-Resolution. arXiv 2018, arXiv:1802.08797.

- Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The Unreasonable Effectiveness of Deep Features as a Perceptual Metric. arXiv 2018, arXiv:1801.03924.

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2017, arXiv:1412.6980.

- Wen, L.; Gao, L.; Li, X.; Zeng, B. Convolutional Neural Network With Automatic Learning Rate Scheduler for Fault Classification. IEEE Trans. Instrum. Meas. 2021, 70, 1–12.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Region-Based Convolutional Networks for Accurate Object Detection and Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 142–158.

- Siano, P.; Cecati, C.; Yu, H.; Kolbusz, J. Real Time Operation of Smart Grids via FCN Networks and Optimal Power Flow. IEEE Trans. Ind. Inform. 2012, 8, 944–952.

- Liu, J.; Bhatti, U.A.; Huang, M.; Tang, H.; Wu, Y.; Feng, S.; Zhang, Y. FF-UNET: An Enhanced Framework for Improved Segmentation of Brain Tumors in MRI Images. In Proceedings of the 2024 7th International Conference on Pattern Recognition and Artificial Intelligence (PRAI), Hangzhou, China, 15–17 August 2024; pp. 852–857.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

- Voulodimos, A.; Doulamis, N.; Doulamis, A.; Protopapadakis, E. Deep Learning for Computer Vision: A Brief Review. Comput. Intell. Neurosci. 2018, 2018, 7068349.

- Mittal, A.; Moorthy, A.K.; Bovik, A.C. Blind/Referenceless Image Spatial Quality Evaluator. In Proceedings of the 2011 Conference Record of the Forty Fifth Asilomar Conference on Signals, Systems and Computers (ASILOMAR), Pacific Grove, CA, USA, 6–9 November 2011; pp. 723–727.

- Liu, M.Y.; Tuzel, O. Coupled generative adversarial networks. In Proceedings of the 30th International Conference on Neural Information Processing Systems, NIPS’16, Barcelona, Spain, 5–10 December 2016; pp. 469–477.

- Dumoulin, V.; Belghazi, I.; Poole, B.; Mastropietro, O.; Lamb, A.; Arjovsky, M.; Courville, A. Adversarially Learned Inference. arXiv 2017, arXiv:1606.00704.

- Shrivastava, A.; Pfister, T.; Tuzel, O.; Susskind, J.; Wang, W.; Webb, R. Learning from Simulated and Unsupervised Images through Adversarial Training. arXiv 2017, arXiv:1612.07828.

- Xu, W.; Long, C.; Wang, R.; Wang, G. DRB-GAN: A Dynamic ResBlock Generative Adversarial Network for Artistic Style Transfer. arXiv 2021, arXiv:2108.07379.

- Zhang, Y.; Huang, N.; Tang, F.; Huang, H.; Ma, C.; Dong, W.; Xu, C. Inversion-Based Style Transfer with Diffusion Models. arXiv 2023, arXiv:2211.13203.

- Li, M.; Lin, J.; Ding, Y.; Liu, Z.; Zhu, J.Y.; Han, S. GAN Compression: Efficient Architectures for Interactive Conditional GANs. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 5283–5293.

- Li, M.; Lin, J.; Meng, C.; Ermon, S.; Han, S.; Zhu, J.Y. Efficient spatially sparse inference for conditional GANs and diffusion models. In Proceedings of the 36th International Conference on Neural Information Processing Systems (NIPS’22), New Orleans, LA, USA, 28 November–9 December 2022.

| Dataset | TrainA | TrainB | TestA | TestB | Image Size |
|---|---|---|---|---|---|
| apple2orange | 995 | 1019 | 266 | 248 | 256 × 256 |
| horse2zebra | 1067 | 1334 | 120 | 140 | 256 × 256 |
| maps | 1096 | 1096 | 1098 | 1098 | 600 × 600 |
| winter2summer | 1073 | 1073 | 210 | 210 | 256 × 256 |
| vangogh2photo | 400 | 6287 | 400 | 751 | 256 × 256 |
| monet2photo | 1072 | 6287 | 121 | 751 | 256 × 256 |
| facades | 400 | 400 | 106 | 106 | 256 × 256 |

| Method | Participant Identification↑: % Labeled Real (1 Image) | Participant Identification↑: % Labeled Real (100 Images) | FCN Score↑: Per-Pixel Acc. | FCN Score↑: Per-Class Acc. | FCN Score↑: Class IoU |
|---|---|---|---|---|---|
| CoGAN [62] | | | 0.40 | 0.10 | 0.06 |
| BiGAN/ALI [63] | | | 0.19 | 0.06 | 0.02 |
| SimGAN [64] | | | 0.20 | 0.10 | 0.04 |
| Feature loss + GAN | | | 0.06 | 0.04 | 0.01 |
| DualGAN [17] | | | 0.27 | 0.13 | 0.06 |
| cGAN [7] | | | 0.54 | 0.33 | 0.19 |
| DRB-GAN [65] | | | 0.33 | 0.20 | 0.12 |
| InST [66] | | | 0.49 | 0.20 | 0.03 |
| CycleGAN [8] | | | 0.52 | 0.17 | 0.11 |
| GAN Comp. [67] | | | 0.84 | 0.53 | 0.61 |
| GauGAN [68] | | | 0.77 | 0.49 | 0.62 |
| VariGAN (ours) | | | 0.67 | 0.41 | 0.26 |
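The three FCN-score columns follow the usual semantic-segmentation definitions. As a minimal sketch, assuming a confusion matrix whose rows are ground-truth classes and whose columns are predicted classes, they can be computed as below. The function name `fcn_scores` and the toy matrix are illustrative assumptions, not taken from the paper's evaluation code.

```python
def fcn_scores(confusion):
    """Return (per-pixel acc., per-class acc., class IoU) from a confusion matrix.

    confusion[i][j] counts pixels of ground-truth class i predicted as class j.
    Classes that never occur are skipped when averaging.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = [confusion[i][i] for i in range(n)]
    truth = [sum(confusion[i]) for i in range(n)]  # pixels per true class
    predicted = [sum(confusion[r][i] for r in range(n)) for i in range(n)]

    pixel_acc = sum(correct) / total

    class_accs = [correct[i] / truth[i] for i in range(n) if truth[i] > 0]

    ious = []
    for i in range(n):
        union = truth[i] + predicted[i] - correct[i]
        if union > 0:
            ious.append(correct[i] / union)

    return pixel_acc, sum(class_accs) / len(class_accs), sum(ious) / len(ious)


# Toy two-class example (assumed values, for illustration only).
pixel_acc, class_acc, class_iou = fcn_scores([[3, 1], [2, 4]])
```

Class IoU penalizes both missed and spurious pixels, which is why it is typically the lowest of the three numbers in each row.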

| Method | Per-Pixel Acc. | Per-Class Acc. | Class IoU | One-Tailed Test | BRISQUE↓ |
|---|---|---|---|---|---|
| CycleGAN | 0.52 | 0.17 | 0.11 | - | 37.1 |
| CycleGAN + UNet | 0.62 | 0.21 | 0.15 | 3.65 | 27.8 |
| CycleGAN + Depthwise | 0.52 | 0.18 | 0.11 | 0.18 * | 33.2 |
| CycleGAN + LPIPS loss | 0.58 | 0.23 | 0.17 | 3.56 | 30.8 |
| VariGAN (ours) | 0.67 | 0.41 | 0.26 | 7.78 | 24.2 |

| | Generator | Discriminator | Self-Attention | ResNet |
|---|---|---|---|---|
| Total parameters (VariGAN) | 39.23 M | 0.84 M | 0.10 M | 2.95 M |
| Total parameters (CycleGAN) | 11.38 M | 2.76 M | – | 1.18 M |
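The smaller discriminator in the table (0.84 M versus 2.76 M parameters) is in line with the general effect of replacing a standard convolution by a depthwise convolution followed by a pointwise (1 × 1) convolution. The sketch below counts weights for a single layer under both designs. The layer shape used (4 × 4 kernel, 64 to 128 channels) is a hypothetical example, not a layer reported for VariGAN.

```python
def standard_conv_params(kernel, c_in, c_out, bias=True):
    """Weights in a standard kernel x kernel convolution."""
    return kernel * kernel * c_in * c_out + (c_out if bias else 0)


def depthwise_separable_params(kernel, c_in, c_out, bias=True):
    """Weights in a depthwise conv (one filter per input channel)
    followed by a pointwise 1x1 conv that mixes channels."""
    depthwise = kernel * kernel * c_in + (c_in if bias else 0)
    pointwise = c_in * c_out + (c_out if bias else 0)
    return depthwise + pointwise


# Hypothetical layer shape, chosen only to illustrate the ratio.
std = standard_conv_params(4, 64, 128, bias=False)        # 16 * 64 * 128
sep = depthwise_separable_params(4, 64, 128, bias=False)  # 16 * 64 + 64 * 128
reduction = std / sep
```

For this shape the separable layer needs 9216 weights against 131072 for the standard one. The saving grows with kernel size and output width, so the whole-network ratio depends on the actual layer configuration.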

| Model | FLOPs | Parameters | apple2orange | horse2zebra | maps | monet2photo | vangogh2photo |
|---|---|---|---|---|---|---|---|
| CycleGAN | 58,541.74 M | 14.14 M | 167 s/556 min | 218 s/729 min | 179 s/598 min | 997 s/3323 min | 1003 s/3343 min |
| VariGAN-Depthwise | 70,478.47 M | 41.99 M | 279 s/930 min | 382 s/1273 min | 294 s/980 min | 1174 s/3913 min | 1189 s/3963 min |
| VariGAN | 69,009.22 M | 40.07 M | 257 s/857 min | 336 s/1120 min | 262 s/873 min | 1053 s/3510 min | 1066 s/3553 min |
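The timing cells pair a figure in seconds with a figure in minutes without stating what each one measures. One plausible reading, which is an assumption here, is time per epoch followed by total training time. Under that reading, the short check below computes the epoch count each cell would imply. The dictionary simply transcribes the table, and `implied_epochs` is a helper named for this example.

```python
# (seconds, minutes) pairs copied from the table, in column order:
# apple2orange, horse2zebra, maps, monet2photo, vangogh2photo.
timings = {
    "CycleGAN": [(167, 556), (218, 729), (179, 598), (997, 3323), (1003, 3343)],
    "VariGAN-Depthwise": [(279, 930), (382, 1273), (294, 980), (1174, 3913), (1189, 3963)],
    "VariGAN": [(257, 857), (336, 1120), (262, 873), (1053, 3510), (1066, 3553)],
}


def implied_epochs(seconds_per_epoch, total_minutes):
    """Epoch count implied if the first value is per-epoch time (assumed)
    and the second is total training time (assumed)."""
    return total_minutes * 60 / seconds_per_epoch


implied = {
    model: [implied_epochs(sec, minutes) for sec, minutes in cells]
    for model, cells in timings.items()
}

for model, epochs in implied.items():
    print(model, [round(e, 1) for e in epochs])
```

Every cell comes out within one epoch of 200, which supports the per-epoch/total interpretation and suggests a training schedule of roughly 200 epochs, although the section itself does not state the schedule.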

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Guan, D.; Lin, X.; Zhang, H.; Zhou, H. VariGAN: Enhancing Image Style Transfer via UNet Generator, Depthwise Discriminator, and LPIPS Loss in Adversarial Learning Framework. Sensors 2025, 25, 2671. https://doi.org/10.3390/s25092671
