Advances in Wearable Sensors for Learning Analytics: Trends, Challenges, and Prospects

Abstract

1. Introduction

2. Overview of Wearable Sensor Technologies

2.1. Wearable Sensor Technologies

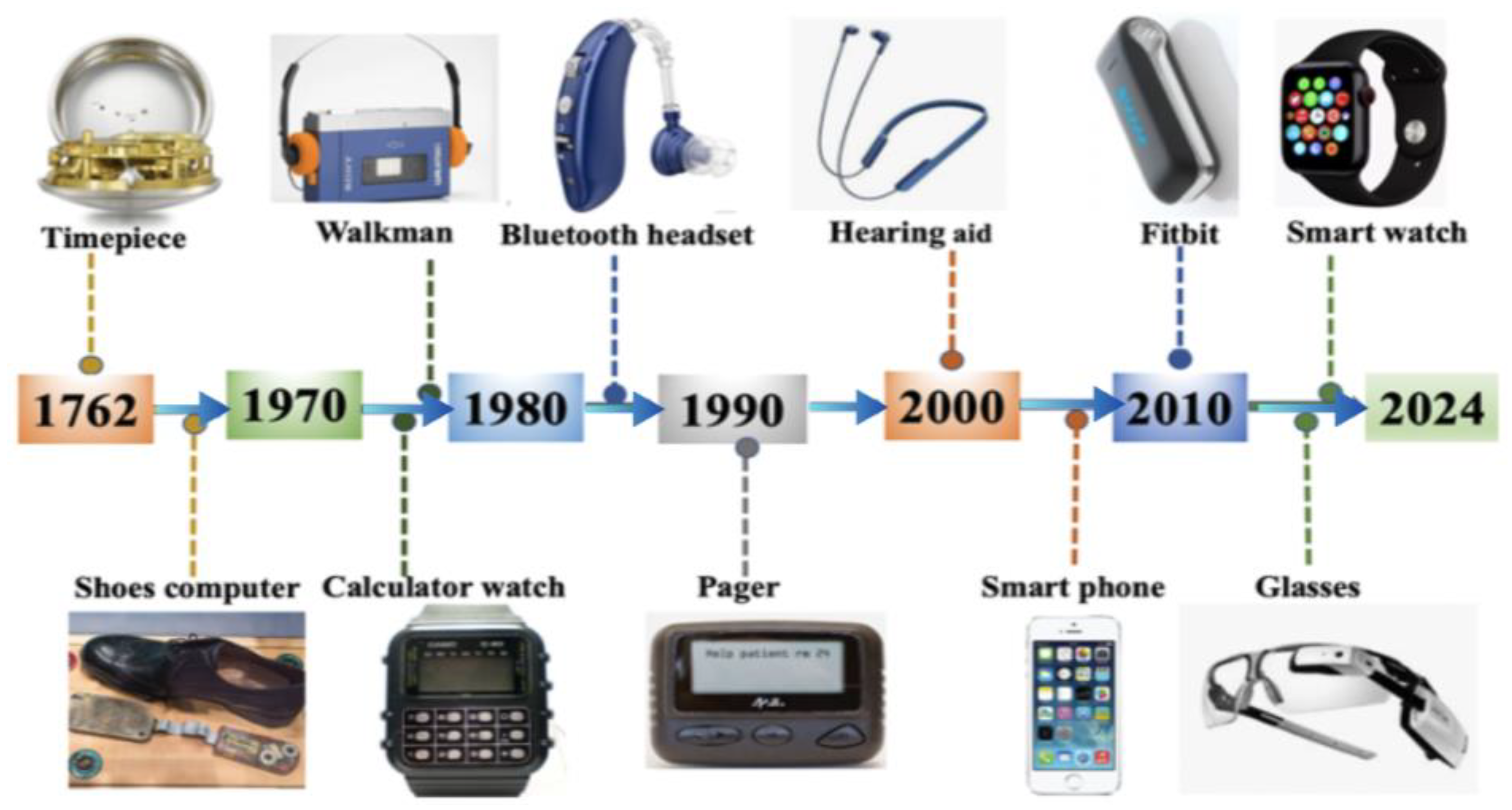

2.2. The History of Wearable Sensor Technologies

2.3. Sensors and Sensing Data

2.4. Multimode Sensor

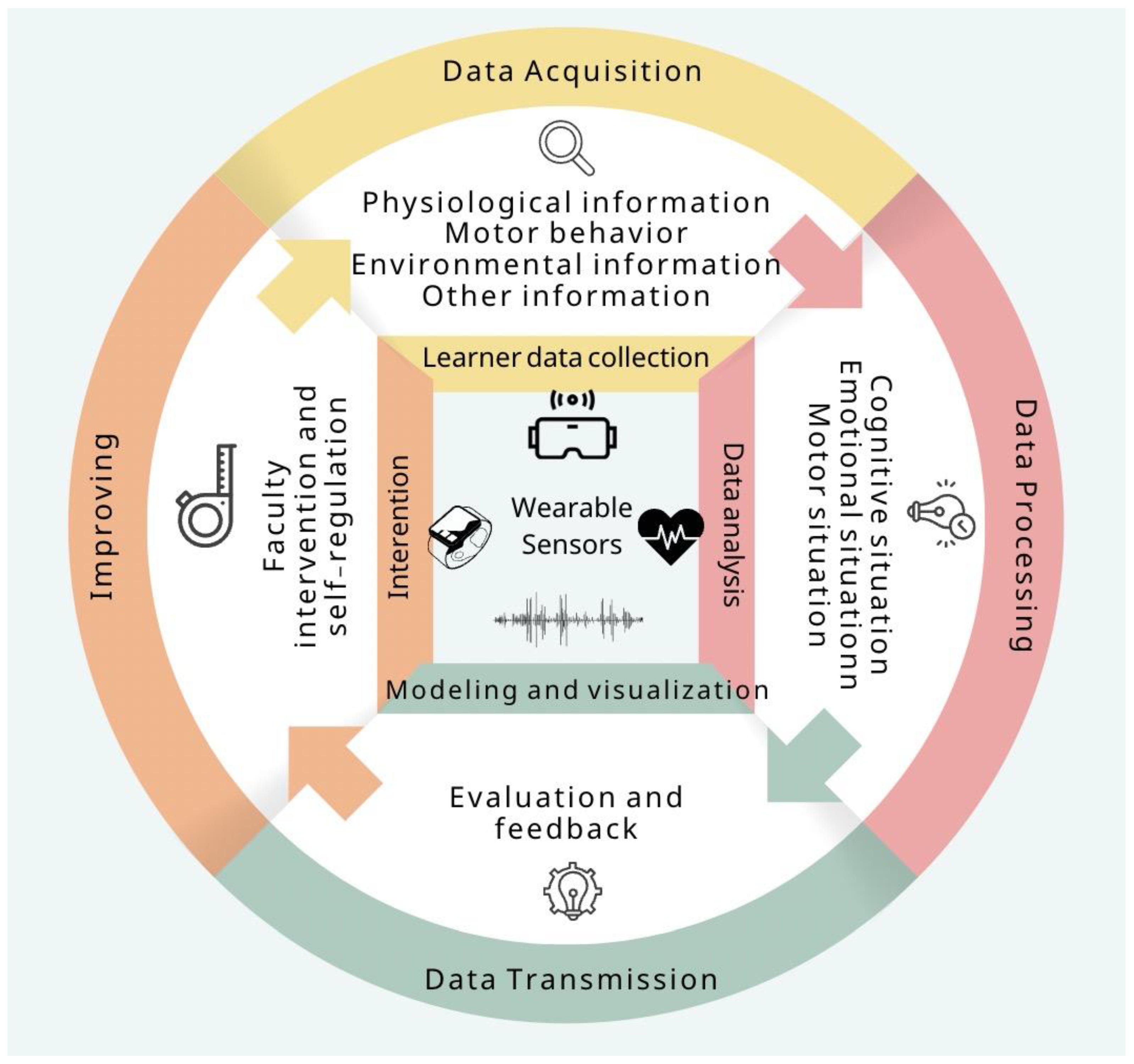

3. Wearable Sensors for Learning Analytics

3.1. Overview of Prior Research on Wearable Sensors in Learning Analytics

3.2. Learning Analytics

3.3. Sensor Data in Learning Analytics

3.4. The Advantage of Wearable Sensors for Learning Analytics

4. Trends

4.1. The Need for Educational Development

4.2. Research of Wearable Sensors for Learning Analytics

4.3. Application of Wearable Sensors for Learning Analytics

5. Challenges

5.1. Ethical Issues

5.2. Explainable Learning Analytics

5.3. Technological and Data Challenges

6. Future Directions

6.1. Safeguarding Data Privacy and Security

6.2. Establishing Comprehensive Biological Databases

6.3. Advancing Multidisciplinary Collaborative Research to Construct Systemic Theoretical Models

6.4. Identifying Perception Data Suitable for Learning Analytics

6.5. Enhancing the Accuracy of Emotional State Recognition

6.6. Immersive Learning Experiences and Non-Intrusive Sensing

6.7. Leveraging Data from the Learning Process to Create Meaningful Educational Contexts

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Liu, D.; Zhang, S.; Wang, Y. A wearable in-sensor computing platform based on stretchable organic electrochemical transistors. Nat. Electron. 2024, 7, 1176–1185. [Google Scholar] [CrossRef]

- Attallah, M.; Ilagure, M. Research on wearable technologies for learning: A systematic review. Front. Educ. 2023, 8, 127–389. [Google Scholar]

- Khosravi, S.; Bailey, S.G.; Parvizi, H.; Ghannam, R. Wearable sensors for learning enhancement in higher education. Sensors 2022, 22, 7633. [Google Scholar] [CrossRef]

- Al-Maroof, R.S.; Alfaisal, A.M.; Salloum, S.A. Google glass adoption in the educational environment: A case study in the Gulf area. Educ. Inf. Technol. 2021, 26, 2477–2500. [Google Scholar] [CrossRef]

- Buchem, I.; Klamma, R.; Wild, F. Introduction to wearable enhanced learning (WELL): Trends, opportunities, and challenges. In Perspectives on Wearable Enhanced Learning (WELL): Current Trends, Research, and Practice; Buchem, I., Klamma, R., Wild, F., Eds.; Springer: Cham, Switzerland, 2019; pp. 3–32. [Google Scholar]

- Jovanov, E. Wearables Meet IoT: Synergistic Personal Area Networks (SPANs). Sensors 2019, 19, 4295. [Google Scholar] [CrossRef] [PubMed]

- Wise, A.F.; Knight, S.; Ochoa, X. What makes learning analytics research matter. J. Learn. Anal. 2021, 8, 1–9. [Google Scholar] [CrossRef]

- Alvarez, V.; Bower, M.; de Freitas, S.; Gregory, S.; De Wit, B. The use of wearable technologies in Australian universities: Examples from environmental science, cognitive and brain sciences and teacher training. In Proceedings of the 15th World Conference on Mobile and Contextual Learning, mLearn 2016, Sydney, Australia, 24–26 October 2016. [Google Scholar]

- Cowperthwait, A.L.; Campagnola, N.; Doll, E.J.; Downs, R.G.; Hott, N.E.; Kelly, S.C.; Montoya, A.; Bucha, A.C.; Wang, L.; Buckley, J.M. Tracheostomy overlay system: An effective learning device using standardized patients. Clin. Simul. Nurs. 2015, 11, 253–258. [Google Scholar] [CrossRef]

- Laverde, R.; Rueda, C.; Amado, L.; Rojas, D.; Altuve, M. Artificial neural network for laparoscopic skills classification using motion signals from apple watch. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 18–22 July 2018; pp. 5434–5437. [Google Scholar]

- Shen, G.; Gao, Y.; Jian, H. Wearable sensors for learning enhancement in higher education. Sensors 2024, 24, 21–80. [Google Scholar]

- Ba, S.; Hu, X. Measuring emotions in education using wearable devices: A systematic review. Comput. Educ. 2023, 200, 104797. [Google Scholar] [CrossRef]

- Guk, K.; Han, G.; Lim, J.; Jeong, K.; Kang, T.; Lim, E.K.; Jung, J. Evolution of Wearable Devices with Real-time Disease Monitoring for Personalized Healthcare. Nanomaterials 2019, 9, 813. [Google Scholar] [CrossRef]

- Dong, Q.; Miao, R. Wearable Devices for Smart Education Based on Sensing Data: Methods and Applications. In International Conference on Web-Based Learning; Springer: Cham, Switzerland, 2022; pp. 270–281. [Google Scholar]

- Yuan, Z.; Li, H.; Duan, Z.; Huang, Q.; Zhang, M.; Zhang, H.; Tai, H. High Sensitivity, Wide Range and Waterproof Strain Sensor with Inner Surface Sensing Layer for Motion Detection and Gesture Reconstruction. Sens. Actuators A Phys. 2024, 369, 115–202. [Google Scholar] [CrossRef]

- Stöckli, S.; Schulte-Mecklenbeck, M.; Borer, S.; Samson, A.C. Facial Expression Analysis with AFFDEX and FACET: A Validation Study. Behav. Res. Methods 2018, 50, 1446–1460. [Google Scholar] [CrossRef] [PubMed]

- Chen, L.; Zeng, S.; Wang, W. The influence of emotion and learner control on multimedia learning. Learn. Motiv. 2021, 76, 101762. [Google Scholar] [CrossRef]

- Motti, V.G. Wearable Technologies in Education: A Design Space. In Proceedings of the Ubiquitous and Virtual Environments for Learning and Collaboration: 6th International Conference, Orlando, FL, USA, 26–31 July 2019; pp. 55–67. [Google Scholar]

- Shahrezaei, A.; Sohani, M.; Taherkhani, S.; Zarghami, S.Y. The impact of surgical simulation and training technologies on general surgery education. BMC Med. Educ. 2024, 24, 1297. [Google Scholar] [CrossRef]

- Yan, L.; Martinez-Maldonado, R.; Cordoba, B.G.; Deppeler, J.; Corrigan, D.; Nieto, G.F.; Gasevic, D. Footprints at School: Modelling In-class Social Dynamics from Students’ Physical Positioning Traces. In LAK21, Proceedings of the 11th International Learning Analytics and Knowledge Conference, Irvine, CA, USA, 12–16 April 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 43–54. [Google Scholar]

- Martinez-Maldonado, R.; Echeverria, V.; Fernandez-Nieto, G.; Yan, L.; Zhao, L.; Alfredo, R.; Li, X.; Dix, S.; Jaggard, H.; Wotherspoon, R.; et al. Lessons learnt from a multimodal learning analytics deployment in-the-wild. ACM Trans. Comput.-Hum. Interact. 2023, 31, 1–41. [Google Scholar] [CrossRef]

- Kolodzey, L.; Grantcharov, P.D.; Rivas, H.; Schijven, M.P.; Grantcharov, T.P. Wearable Technology in the Operating Room: A Systematic Review. BMJ Innov. 2016, 3, 55–63. [Google Scholar] [CrossRef]

- Ahrar, A.; Doroodian, M.; Hatala, M. Exploring Eye-tracking Features to Understand Students’ Sensemaking of Learning Analytics Dashboards. In LAK ’25, Proceedings of the 15th International Learning Analytics and Knowledge Conference, Dublin, Ireland, 3–7 March 2025; Association for Computing Machinery: New York, NY, USA, 2025; pp. 931–937. [Google Scholar]

- Aguilera, A.; Mellado, D.; Rojas, F. An assessment of in-the-wild datasets for multimodal emotion recognition. Sensors 2023, 23, 5184. [Google Scholar] [CrossRef]

- Yun, S.; Choi, J.; Yoo, Y.; Yun, K.; Choi, J.Y. Action-Driven Visual Object Tracking with Deep Reinforcement Learning. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 2239–2252. [Google Scholar] [CrossRef]

- Rouast, P.V.; Adam, M.T.; Chiong, R. Deep Learning for Human Affect Recognition: Insights and New Developments. IEEE Trans. Affect. Comput. 2019, 12, 524–543. [Google Scholar] [CrossRef]

- Bandara, D.; Song, S.; Hirshfield, L.; Velipasalar, S. A More Complete Picture of Emotion Using Electrocardiogram and Electrodermal Activity to Complement Cognitive Data. In Foundations of Augmented Cognition: Neuroergonomics and Operational Neuroscience; Springer: Cham, Switzerland, 2016; Volume 9743, pp. 287–298. [Google Scholar]

- Giannakos, M.N.; Papavlasopoulou, S.; Sharma, K. Monitoring Children’s Learning Through Wearable Eye-tracking: The Case of a Making-based Coding Activity. IEEE Pervasive Comput. 2020, 19, 10–21. [Google Scholar] [CrossRef]

- Chango, W.; Lara, J.A.; Cerezo, R.; Romero, C. A review on data fusion in multimodal learning analytics and educational data mining. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2022, 12, e1458. [Google Scholar] [CrossRef]

- Blikstein, P.; Worsley, M. Multimodal Learning Analytics and Education Data Mining: Using Computational Technologies to Measure Complex Learning Tasks. J. Learn. Anal. 2016, 3, 220–238. [Google Scholar] [CrossRef]

- Ochoa, X.; Worsley, M. Augmenting Learning Analytics with Multimodal Sensory Data. J. Learn. Anal. 2016, 3, 213–219. [Google Scholar] [CrossRef]

- Pijeira-Díaz, H.J.; Drachsler, H.; Kirschner, P.A.; Järvelä, S. Profiling Sympathetic Arousal in a Physics Course: How Active are Students. J. Comput. Assist. Learn. 2018, 34, 397–408. [Google Scholar] [CrossRef]

- Giannakos, M.N.; Sharma, K.; Papavlasopoulou, S.; Pappas, I.O.; Kostakos, V. Fitbit for Learning: Towards Capturing the Learning Experience Using Wearable Sensing. Int. J. Hum. Comput. Stud. 2020, 136, 102384. [Google Scholar] [CrossRef]

- Sharma, K.; Giannakos, M. Multimodal Data Capabilities for Learning: What can Multimodal Data Tell us about Learning. Br. J. Educ. Technol. 2020, 51, 1450–1484. [Google Scholar] [CrossRef]

- Pelánek, R.; Rihák, J.; Papoušek, J. Impact of Data Collection on Interpretation and Evaluation of Student Models. In Proceedings of the Sixth International Conference on Learning Analytics & Knowledge, Edinburgh, UK, 25–29 April 2016; pp. 40–47. [Google Scholar]

- Fidalgo-Blanco, A.; Sein-Echaluce, M.L.; García-Peñalvo, F.J.; Ángel Conde, M. Using Learning Analytics to Improve Teamwork Assessment. Comput. Hum. Behav. 2015, 47, 149–156. [Google Scholar] [CrossRef]

- Taelman, J.; Vandeput, S.; Spaepen, A.; Van Huffel, S. Influence of Mental Stress on Heart Rate and Heart Rate Variability. In Proceedings of the 4th European Conference of the International Federation for Medical and Biological Engineering, Antwerp, Belgium, 23–27 November 2008; Springer: Berlin/Heidelberg, Germany, 2008; pp. 1366–1369. [Google Scholar]

- Aftanas, L.I.; Golocheikine, S.A. Human Anterior and Frontal Midline Theta and Lower Alpha Reflect Emotionally Positive State and Internalized Attention: High-resolution EEG Investigation of Meditation. Neurosci. Lett. 2001, 310, 57–60. [Google Scholar] [CrossRef]

- Kaklauskas, A.; Kuzminske, A.; Zavadskas, E.K.; Daniunas, A.; Kaklauskas, G.; Seniut, M.; Cerkauskiene, R. Affective Tutoring System for Built Environment Management. Comput. Educ. 2015, 82, 202–216. [Google Scholar] [CrossRef]

- Larmuseau, C.; Cornelis, J.; Lancieri, L.; Desmet, P.; Depaepe, F. Multimodal Learning Analytics to Investigate Cognitive Load During Online Problem Solving. Br. J. Educ. Technol. 2020, 51, 1548–1562. [Google Scholar] [CrossRef]

- Tabuchi, K.; Kobayashi, S.; Fukuda, S.T.; Nakagawa, Y. A Case Study on Reducing Language Anxiety and Enhancing Speaking Skills through Online Conversation Lessons. Technol. Lang. Teach. Learn. 2024, 6, n3. [Google Scholar] [CrossRef]

- Standen, P.J.; Brown, D.J.; Taheri, M.; Trigo, M.J.G.; Boulton, H.; Burton, A.; Hallewell, M.J.; Lathe, J.G.; Shopland, N.; Gonzalez, M.A.B.; et al. An Evaluation of an Adaptive Learning System Based on Multimodal Affect Recognition for Learners with Intellectual Disabilities. Br. J. Educ. Technol. 2020, 51, 1748–1765. [Google Scholar] [CrossRef]

- Neffati, O.S.; Setiawan, R.; Jayanthi, P.; Vanithamani, S.; Sharma, D.K.; Regin, R.; Sengan, S. An educational tool for enhanced mobile e-Learning for technical higher education using mobile devices for augmented reality. Microprocess. Microsyst. 2021, 83, 104030. [Google Scholar] [CrossRef]

- Törmänen, T.; Järvenoja, H.; Saqr, M.; Malmberg, J.; Järvelä, S. Affective states and regulation of learning during socio-emotional interactions in secondary school collaborative groups. Br. J. Educ. Psychol. 2023, 93, 48–70. [Google Scholar] [CrossRef]

- Shakroum, M.; Wong, K.W.; Fung, C.C. The Influence of Gesture-based Learning System (GBLS) on Learning Outcomes. Comput. Educ. 2018, 117, 75–101. [Google Scholar] [CrossRef]

- Sung, B.; Mergelsberg, E.; Teah, M.; D’Silva, B.; Phau, I. The Effectiveness of a Marketing Virtual Reality Learning Simulation: A Quantitative Survey with Psychophysiological Measures. Br. J. Educ. Technol. 2021, 52, 196–213. [Google Scholar] [CrossRef]

- Arjomandi, A.; Paloyo, A.; Suardi, S. Active learning and academic performance: The case of real-time interactive student polling. Stat. Educ. Res. J. 2023, 22, 3. [Google Scholar] [CrossRef]

- Chen, G.; Zhang, Y.; Chen, N.S.; Fan, Z. Context-aware ubiquitous learning in science museum with ibeacon technology. In Learning, Design, and Technology: An International Compendium of Theory, Research, Practice, and Policy; Springer: Cham, Switzerland, 2023; pp. 3369–3392. [Google Scholar]

- Malmberg, J.; Järvelä, S.; Holappa, J.; Haataja, E.; Huang, X.; Siipo, A. Going Beyond What is Visible: What Multichannel Data Can Reveal about Interaction in the Context of Collaborative Learning. Comput. Hum. Behav. 2019, 96, 235–245. [Google Scholar] [CrossRef]

- Hsiao, H.S.; Chen, J.C. Using a Gesture Interactive Game-based Learning Approach to Improve Preschool Children’s Learning Performance and Motor Skills. Comput. Educ. 2016, 95, 151–162. [Google Scholar] [CrossRef]

- Oppici, L.; Stell, F.M.; Utesch, T.; Woods, C.T.; Foweather, L.; Rudd, J.R. A skill acquisition perspective on the impact of exergaming technology on foundational movement skill development in children 3–12 years: A systematic review and meta-analysis. Sports Med. Open 2022, 8, 148. [Google Scholar] [CrossRef]

- Wong, R.M.; Adesope, O.O. Meta-analysis of emotional designs in multimedia learning: A replication and extension study. Educ. Psychol. Rev. 2021, 33, 357–385. [Google Scholar] [CrossRef]

- Weiland, S.; Hickmann, T.; Lederer, M.; Marquardt, J.; Schwindenhammer, S. The 2030 agenda for sustainable development: Transformative change through the sustainable development goals? Politics Gov. 2021, 9, 90–95. [Google Scholar] [CrossRef]

- Rani, N.; Chu, S.L. Wearables can help me learn: A survey of user perception of wearable technologies for learning in everyday life. Educ. Inf. Technol. 2022, 27, 3381–3401. [Google Scholar] [CrossRef]

- Cardona, M.A.; Rodríguez, R.J.; Ishmael, K. Artificial Intelligence and the Future of Teaching and Learning: Insights and Recommendations. 2023. Available online: https://policycommons.net/artifacts/3854312/ai-report/4660267 (accessed on 1 January 2025).

- Unesco, P. Reimagining Our Futures Together: A New Social Contract for Education. Comp. Educ. 2021, 58, 568–569. [Google Scholar]

- Heilala, V.; Saarela, M.; Jääskelä, P.; Kärkkäinen, T. Course Satisfaction in Engineering Education Through the Lens of Student Agency Analytics. In Proceedings of the 2020 IEEE Frontiers in Education Conference (FIE), Uppsala, Sweden, 21–24 October 2020; pp. 1–9. [Google Scholar]

- Ochoa, X.; Lang, A.C.; Siemens, G. Multimodal Learning Analytics. Handb. Learn. Anal. 2017, 1, 129–141. [Google Scholar]

- Sergis, S.; Sampson, D.G.; Rodríguez-Triana, M.J.; Gillet, D.; Pelliccione, L.; de Jong, T. Using Educational Data from Teaching and Learning to Inform Teachers’ Reflective Educational Design in Inquiry-based STEM Education. Comput. Hum. Behav. 2019, 92, 724–738. [Google Scholar] [CrossRef]

- Prieto, L.P.; Sharma, K.; Dillenbourg, P.; Jesús, M. Teaching Analytics: Towards Automatic Extraction of Orchestration Graphs Using Wearable Sensors. In LAK ’16, Proceedings of the Sixth International Conference on Learning Analytics & Knowledge, Edinburgh, UK, 25–29 April 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 148–157. [Google Scholar]

- Xu, Z.; Woodruff, E. Person-centred Approach to Explore Learner’s Emotionality in Learning Within a 3D Narrative Game. In LAK ’17, Proceedings of the Seventh International Learning Analytics & Knowledge Conference, Vancouver, Canada, 13–17 March 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 439–443. [Google Scholar]

- Hernández-Mustieles, M.A.; Lima-Carmona, Y.E.; Pacheco-Ramírez, M.A.; Mendoza-Armenta, A.A.; Romero-Gómez, J.E.; Cruz-Gómez, C.F.; Rodríguez-Alvarado, D.C.; Arceo, A.; Cruz-Garza, J.G.; Ramírez-Moreno, M.A.; et al. Wearable biosensor technology in education: A systematic review. Sensors 2024, 24, 2437. [Google Scholar] [CrossRef]

- McFadden, C.; Li, Q. Motivational Readiness to Change Exercise Behaviors: An Analysis of the Differences in Exercise, Wearable Exercise Tracking Technology, and Exercise Frequency, Intensity, and Time (FIT) Values and BMI Scores in University Students. Am. J. Health Educ. 2019, 50, 67–79. [Google Scholar] [CrossRef]

- Jia, L.; Sun, H.; Jiang, J.; Yang, X. High-Quality Classroom Dialogue Automatic Analysis System. Appl. Sci. 2025, 15, 2076–3417. [Google Scholar] [CrossRef]

- Almusawi, H.A.; Durugbo, C.M.; Bugawa, A.M. Wearable technology in education: A systematic review. IEEE Trans. Learn. Technol. 2021, 14, 540–554. [Google Scholar] [CrossRef]

- Havard, B.; Podsiad, M. A Meta-analysis of Wearables Research in Educational Settings Published 2016–2019. Educ. Technol. Res. Dev. 2020, 68, 1829–1854. [Google Scholar] [CrossRef]

- Cristina, O.P.; Jorge, P.B.; Eva, R.L.; Mario, A.O. From wearable to insideable: Is ethical judgment key to the acceptance of human capacity-enhancing intelligent technologies? Comput. Hum. Behav. 2021, 114, 106559. [Google Scholar] [CrossRef]

- Canali, S.; De Marchi, B.; Aliverti, A. Wearable technologies and stress: Toward an ethically grounded approach. Int. J. Environ. Res. Public Health 2023, 20, 6737. [Google Scholar] [CrossRef] [PubMed]

- Sivakumar, C.L.V.; Mone, V.; Abdumukhtor, R. Addressing privacy concerns with wearable health monitoring technology. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2024, 14, e1535. [Google Scholar] [CrossRef]

- Yan, L.; Zhao, L.; Gasevic, D.; Martinez-Maldonado, R. Scalability, Sustainability, and Ethicality of Multimodal Learning Analytics. In LAK22, Proceedings of the 12th International Learning Analytics and Knowledge Conference, Newport Beach, CA, USA, 21–25 March 2022; Association for Computing Machinery: New York, NY, USA, 2022; pp. 13–23. [Google Scholar]

- Bassiou, N.; Tsiartas, A.; Smith, J.; Bratt, H.; Richey, C.; Shriberg, E.; Alozie, N. Privacy-Preserving Speech Analytics for Automatic Assessment of Student Collaboration. Interspeech 2016, 5, 888–892. [Google Scholar]

- Praharaj, S.; Scheffel, M.; Drachsler, H.; Specht, M. Multimodal Analytics for Real-time Feedback in Co-located Collaboration. In European Conference on Technology Enhanced Learning; Pammer-Schindler, V., Pérez-Sanagustín, M., Drachsler, H., Elferink, R., Scheffel, M., Eds.; Springer: Cham, Switzerland, 2018; pp. 187–201. [Google Scholar]

- Yu, B.; Zadorozhnyy, A. Developing students’ linguistic and digital literacy skills through the use of multimedia presentations. ReCALL 2022, 34, 95–109. [Google Scholar] [CrossRef]

- Turnock, A.; Langley, K.; Jones, C.R. Understanding stigma in autism: A narrative review and theoretical model. Autism Adulthood 2022, 4, 76–91. [Google Scholar] [CrossRef]

- Guaman-Quintanilla, S.; Everaert, P.; Chiluiza, K.; Valcke, M. Impact of design thinking in higher education: A multi-actor perspective on problem solving and creativity. Int. J. Technol. Des. Educ. 2023, 33, 217–240. [Google Scholar] [CrossRef]

- Pope, Z.C.; Barr-Anderson, D.J.; Lewis, B.A.; Pereira, M.A.; Gao, Z. Use of Wearable Technology and Social Media to Improve Physical Activity and Dietary Behaviors Among College Students: A 12-week Randomized Pilot Study. Int. J. Environ. Res. Public Health 2019, 16, 3579. [Google Scholar] [CrossRef]

- Sharma, K.; Papamitsiou, Z.; Giannakos, M. Building Pipelines for Educational Data Using AI and Multimodal Analytics: A “grey-box” approach. Br. J. Educ. Technol. 2019, 50, 3004–3031. [Google Scholar] [CrossRef]

- Buolamwini, J.; Gebru, T. Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification. In Proceedings of the 1st Conference on Fairness, Accountability and Transparency, New York, NY, USA, 23–24 February 2018; pp. 77–91. [Google Scholar]

- Hill, V.; Lee, H.J. Libraries and Immersive Learning Environments Unite in Second Life. Libr. Hi Tech 2009, 27, 338–356. [Google Scholar] [CrossRef]

- Callan, G.L.; Rubenstein, L.D.; Ridgley, L.M.; McCall, J.R. Measuring self-regulated learning during creative problem-solving with SRL microanalysis. Psychol. Aesthet. Creat. Arts 2021, 15, 136. [Google Scholar] [CrossRef]

- Lv, Z.; Qiao, L.; Verma, S.; Kavita. AI-enabled IoT-edge data analytics for connected living. ACM Trans. Internet Technol. 2021, 21, 1–20. [Google Scholar] [CrossRef]

- DaCosta, B.; Kinsell, C. Serious games in cultural heritage: A review of practices and considerations in the design of location-based games. Educ. Sci. 2022, 13, 47. [Google Scholar] [CrossRef]

- Enyedy, N.; Yoon, S. Immersive environments: Learning in augmented+ virtual reality. In International Handbook of Computer-Supported Collaborative Learning; Cress, U., Rosé, C., Wise, A.F., Oshima, J., Eds.; Springer: Cham, Switzerland, 2021; pp. 389–405. [Google Scholar]

- Zhu, Z.T.; Yu, M.H.; Riezebos, P. A research framework of smart education. Smart Learn. Environ. 2016, 3, 4. [Google Scholar] [CrossRef]

| Classification | Specific Type | Measured Data | Application Example |
|---|---|---|---|
| Motion sensor | Accelerometer | Acceleration | Motion recognition and fall monitoring [13,14] |
| | Gyroscope | Angular velocity | |
| | Compass | Magnetic declination | |
| | Dynamometer | Force | |
| Biosensor | EEG | Brain waves | Expression recognition, pressure perception, attention change, and psychological health monitoring [15,16,17,18] |
| | Glucometer | Blood sugar content | |
| | ECG | Cardiac electrical activity | |
| | EDA | Electrodermal activity | |
| | Eye tracker | Blinks and pupil focus | |
| Environmental sensor | GPS | Location information | Environmental information, scenario inference, and smart home [19,20,21] |
| | Air quality sensor | Air quality | |
| | Thermometer | Temperature | |
| | Hygrometer | Humidity | |
| | Barometer | Air pressure | |
| Others | Optical camera | Optical imaging | Motion recognition and emotional judgment [22,23] |
| | Infrared camera | Thermal imaging | |
| | Microphone | Acoustic signal | |
| | Software sensor | Human-computer interaction data | |

| Author(s) & Year | Focus | Strength | Weakness |
|---|---|---|---|
| Bandara et al., 2016, [27] | Explored the integration of physiological signals into affective learning analytics. | Demonstrated the complementary value of multimodal physiological data in emotion modeling. | Limited ecological validity and insufficient consideration of longitudinal effects. |
| Blikstein & Worsley, 2016, [30] | Proposed a framework for integrating multimodal sensor data into learning analytics. | Provided a theoretical foundation for computational sensing in complex learning tasks. | Lacked empirical implementation and omitted ethical and technical constraints. |
| Ochoa & Worsley, 2016, [31] | Discussed the role of sensor-based data in enhancing traditional learning analytics. | Emphasized the analytic potential of multimodal wearable data for real-time learning support. | Absence of validated models and underdeveloped pedagogical interpretation strategies. |
| Pijeira-Díaz et al., 2018, [32] | Applied biometric indicators to infer cognitive-affective states in learning contexts. | Demonstrated the feasibility of using biosignals for in-situ engagement detection. | Data reliability affected by environmental confounds and signal ambiguity. |
| Buchem et al., 2019, [5] | Conceptualized wearable-enhanced learning and its pedagogical affordances. | Mapped theoretical linkages between wearables, personalization, and feedback in education. | Lacked empirical evidence and specific sensor-level analysis. |
| Giannakos et al., 2020, [33] | Investigated the use of commercial wearables for tracking learner engagement. | Highlighted the practicality and institutional viability of wearable-based data collection. | Limited signal resolution and insufficient representation of cognitive processes. |
| Sharma & Giannakos, 2020, [34] | Examined the theoretical integration of multimodal data into educational analytics. | Advanced a multidimensional framework for interpreting learning signals. | No empirical validation and limited contextual applicability. |
| Khosravi et al., 2022, [3] | Studied biosensor-based methods to monitor learning dynamics in higher education. | Provided structured insights into sensor-informed adaptive feedback mechanisms. | Narrow participant scope and insufficient exploration of interoperability issues. |
| Ba & Hu, 2023, [12] | Reviewed emotional sensing via wearable devices in educational settings. | Synthesized sensor types, application scenarios, and signal modalities across studies. | Lacked methodological critique and discussion of deployment feasibility. |
| Shen et al., 2024, [11] | Empirically analyzed multimodal sensor integration for real-time learner modeling. | Validated a full sensor-based framework for continuous monitoring in education. | Underdeveloped treatment of ethical risks and explainability in learning analytics. |

| Application | Utilized Technology | Function |
|---|---|---|
| Intelligent Tutoring Systems (ITS) | Intelligent Tutoring Systems | ITS determines students’ emotional states in real time and provides adaptive support, resulting in enhanced learning outcomes through long-term use. |
| Virtual Learning Companion (VLC) | Smart Monitor sensors and “Learning Companion” system | VLC provides personalized learning feedback to students and activates specific motivational mechanisms to encourage learners to complete difficult tasks. |
| Emotional Interaction Support (EIS) | Wireless networks and sensor technologies | EIS enhances students’ social interaction skills, promotes self-awareness and reflection on emotions, and assists teachers in organizing classroom activities and adjusting teaching strategies. |
| Self-Regulation Skills Assessment (SSA) | Learning Management Systems | SSA offers an effective means to assess learners’ self-regulatory abilities. |
| Academic Situation Analysis and Monitoring (ASAM) | Real-time monitoring | ASAM facilitates real-time emotional feedback and intervention for students potentially at risk of academic difficulties. |

| Challenge Category | Description | Implications for Learning Analytics |
|---|---|---|
| Ethical Issues (5.1) | Includes privacy breaches, lack of informed consent, demographic bias, and unequal access to sensor technologies. | Undermines trust, excludes marginalized populations, and introduces systemic bias in learner classification and feedback models. |
| Interpretability and Validity (5.2) | Sensor-derived data often lack explainability and are affected by non-learning-related variables, limiting their educational interpretability. | Reduces the transparency of learning analytics outputs, impeding teacher adoption and potentially leading to misinformed interventions. |
| Limited Predictive Accuracy (5.2) | Physiological signals show weak and inconsistent correlations with learning outcomes, and sensor outputs are susceptible to error and device variation. | Challenges the reliability of engagement or performance predictions, making data suitable only as secondary indicators. |
| Data Integration and Technical Alignment (5.2–5.3) | Current systems face difficulties in combining multimodal sensor streams, aligning them with learning models, and maintaining coherence with digital platforms. | Hinders the implementation of real-time adaptive systems and comprehensive learner profiling. |
| Sensor Reliability and Environmental Robustness (5.3) | Signal distortion due to classroom noise, device misalignment, and learner movement reduces data quality in dynamic learning settings. | Compromises the generalizability and robustness of sensor-based learning analytics in authentic educational environments. |
| Computational and Implementation Barriers (5.3) | Real-time data processing requires significant computational power and storage; cost and system complexity hinder large-scale deployment. | Limits practical scalability, especially in under-resourced institutions, and increases reliance on edge-computing architectures. |
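
The data integration challenge listed above (combining multimodal sensor streams) can be illustrated with a minimal sketch. It is not a method taken from the reviewed studies. It assumes two hypothetical streams sampled at different times and pairs each sample of a reference stream with the nearest sample of the other stream within a tolerance. The function name `align_streams`, the parameter `tolerance_s`, and all readings are illustrative assumptions.

```python
from bisect import bisect_left


def align_streams(reference, other, tolerance_s=0.5):
    """Pair each reference sample with the nearest sample of another stream.

    Both inputs are lists of (timestamp_seconds, value) sorted by timestamp.
    Returns (timestamp, reference_value, other_value_or_None) tuples; None
    marks reference samples with no partner within tolerance_s.
    """
    other_times = [t for t, _ in other]
    aligned = []
    for t, value in reference:
        i = bisect_left(other_times, t)
        # Candidates are the neighbours just before and just after t.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(other)]
        best = min(candidates, key=lambda j: abs(other_times[j] - t), default=None)
        if best is not None and abs(other_times[best] - t) <= tolerance_s:
            aligned.append((t, value, other[best][1]))
        else:
            aligned.append((t, value, None))
    return aligned


# Hypothetical readings: heart rate (bpm) at 1 Hz, EDA (microsiemens) at irregular times.
heart_rate = [(0.0, 72), (1.0, 74), (2.0, 75), (3.0, 80)]
eda = [(0.1, 0.41), (0.9, 0.43), (2.8, 0.50)]

for row in align_streams(heart_rate, eda):
    print(row)
```

Samples without a close partner are kept with `None` rather than interpolated, so gaps caused by sensor dropout stay visible to later analysis. Whether interpolation or resampling is more appropriate depends on the signal and is left open here.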

| Future Direction | Core Focus | Development Outlook |
|---|---|---|
| Analyzing High-Order Physiological and Bodily Signals (6.1–6.2) | Investigate the links between learner posture, gestures, and internal states by integrating embodied cognition with neuroscience-informed approaches. | Medium-term: Requires interdisciplinary methods and interactive experimental paradigms. |
| Developing Inclusive Biological Databases (6.2) | Build open, granular, and culturally diverse databases of physiological and behavioral data for modeling cognitive and affective engagement. | Medium-term: Technically feasible but demands ethical oversight, international collaboration, and standardization. |
| Constructing Multidisciplinary Theoretical Frameworks (6.3) | Integrate theories from education, psychology, neuroscience, and computing to systematize multimodal sensor data interpretation. | Medium- to long-term: Calls for iterative model development and sustained cross-disciplinary research. |
| Identifying and Optimizing Perception Data (6.4) | Determine which types of sensor-derived perception data are most effective for assessing teaching quality and learner states. | Short- to medium-term: Experimental validation needed under authentic and complex classroom conditions. |
| Enhancing Emotional State Recognition (6.5) | Improve accuracy and reliability of emotion detection through advanced machine learning and signal filtering, with contextual awareness. | Medium-term: Requires large-scale datasets, explainable AI models, and bias reduction strategies. |
| Integrating Immersive Experiences with Non-Intrusive Sensing (6.6) | Combine virtual and augmented reality with continuous physiological sensing to create adaptive and authentic learning environments. | Long-term: Demands technological innovation, real-time response systems, and learner-centered design. |
| Creating Intelligent, Context-Rich Learning Environments (6.7) | Leverage data from the learning process to construct personalized and seamless instructional contexts guided by intelligent systems. | Medium-term: Requires new frameworks to align sensor analytics with pedagogical goals. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hong, H.; Dai, L.; Zheng, X. Advances in Wearable Sensors for Learning Analytics: Trends, Challenges, and Prospects. Sensors 2025, 25, 2714. https://doi.org/10.3390/s25092714