Pride, Love, and Twitter Rants: Combining Machine Learning and Qualitative Techniques to Understand What Our Tweets Reveal about Race in the US

Abstract

1. Introduction

2. Methods

2.1. Social Media Data Collection and Processing

2.2. Computer Modeling: Sentiment Analysis of Twitter Data

2.3. Content Analysis

3. Results

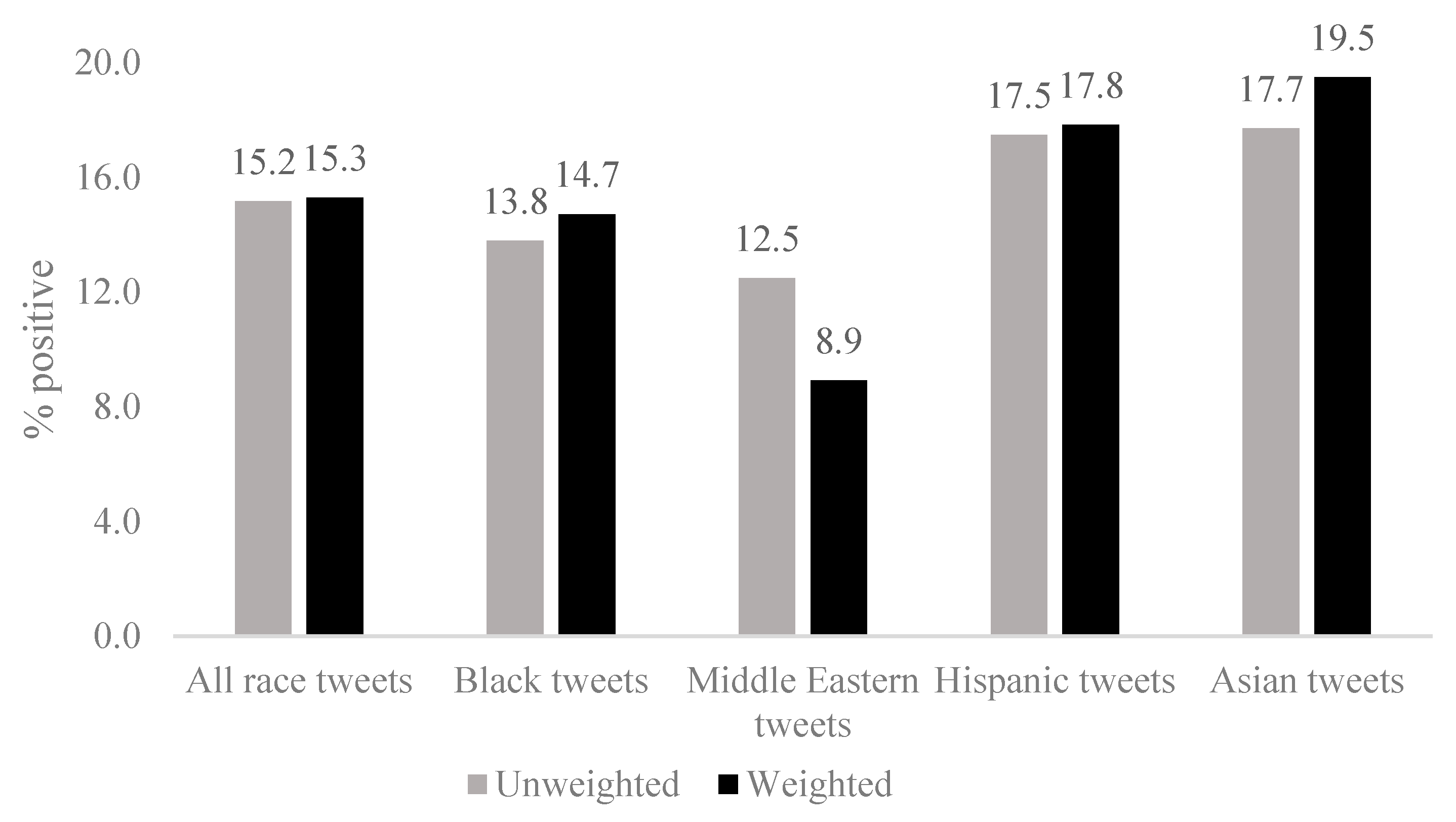

3.1. Quantitative Analyses: Descriptive Characteristics of Tweets

3.2. Qualitative Content Analysis: Themes

3.2.1. Spectrum of Negativity

3.2.2. Pride

3.2.3. Intimate Relationships

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

| Keyword | Category |
|---|---|
| afghanistan | Middle Eastern |
| afghanistani | Middle Eastern |
| afghans | Middle Eastern |
| african american | Black |
| african americans | Black |
| african’t | Black |
| africoon | Black |
| afro caribbean | Black |
| afro-caribbean | Black |
| aid refugees | refugees |
| alaska native | Alaskan Native |
| american indian | Native American |
| apache indian | Native American |
| apache nation | Native American |
| apache tribe | Native American |
| arab | Middle Eastern |
| arabs | Middle Eastern |
| arabic | Middle Eastern |
| arabush | Arab |
| asian | Asian |
| asians | Asian |
| asian indian | Asian |
| bahamian | Black |
| bahamians | Black |
| bamboo coon | Asian |
| ban islam | anti-Islamic |
| ban muslim | anti-Islamic |
| ban on mulsims | anti-Islamic |
| bangalees | Asian |
| bangladeshi | Asian |
| banislam | anti-Islamic |
| banjo lip | Black |
| banmuslim | anti-Islamic |
| banonmulsims | anti-Islamic |
| bantu | Black |
| beaner | Mexican |
| beaner shnitzel | Multi-race |
| beanershnitzel | Multi-race |
| bengalis | Asian |
| bhutanese | Asian |
| biscuit lip | Black |
| bix nood | Black |
| black boy | Black |
| black boys | Black |
| black female | Black |
| black girl | Black |
| black girls | Black |
| black male | Black |
| black men | Black |
| black women | Black |
| blacks | Black |
| bootlip | Black |
| borde jumper | Hispanic |
| border bandit | Hispanic |
| border control | immigrant |
| border fence | immigrant |
| border hopper | Hispanic |
| border nigger | Hispanic |
| border security | immigrant |
| border surveillance | immigrant |
| border wall | immigrant |
| bow bender | Native American |
| brazilians | Hispanic |
| buffalo jockey | Native American |
| build a wall | immigrant |
| buildawall | immigrant |
| bumper lip | Black |
| burmese | Asian |
| burnt cracker | Black |
| burundi | Black |
| bush-boogie | Black |
| bushnigger | Native American |
| cairo coon | Middle Eastern |
| cambodian | Asian |
| cambodians | Asian |
| camel cowboy | Middle Eastern |
| camel fucker | Middle Eastern |
| camel jacker | Middle Eastern |
| camelfucker | Middle Eastern |
| camel-fucker | Middle Eastern |
| cameljacker | Middle Eastern |
| camel-jacker | Middle Eastern |
| carpet pilot | Middle Eastern |
| carpetpilot | anti-Islamic |
| carribean people | Black |
| caublasian | Multi-race |
| central american | Hispanic |
| chain dragger | Black |
| chamorro | Asian |
| cherokee indian | Native American |
| cherokee nation | Native American |
| cherokee tribe | Native American |
| cherry nigger | Native American |
| chexican | Multi-race |
| chicano | Hispanic |
| chicanos | Hispanic |
| chiegro | Asian |
| chinaman | Asian |
| chinese | Asian |
| ching-chong | Asian |
| chink | Asian |
| chinks | Asian |
| chippewa indian | Native American |
| chippewa nation | Native American |
| chippewa tribe | Native American |
| choctaw indian | Native American |
| choctaw nation | Native American |
| choctaw tribe | Native American |
| clit chopper | Middle Eastern |
| clit-chopper | Middle Eastern |
| clitless | Middle Eastern |
| clit-swiper | Middle Eastern |
| coconut nigger | Asian |
| colombian | Hispanic |
| columbians | Hispanic |
| congo lip | Black |
| congolese | Black |
| coonass | Black |
| coon-ass | Black |
| coontang | Black |
| costa rican | Hispanic |
| cracker jap | Asian |
| cuban | Hispanic |
| cubans | Hispanic |
| dampback | Hispanic |
| darkey | Black |
| darkie | Black |
| darky | Black |
| deport | immigrant |
| deportation | immigrant |
| deported | immigrant |
| deporting | immigrant |
| deports | immigrant |
| derka derka | anti-Islamic |
| derkaderka | anti-Islamic |
| diaper head | Middle Eastern |
| diaperhead | Middle Eastern |
| diaper-head | Middle Eastern |
| dog muncher | Asian |
| dog-muncher | Asian |
| dominican | Hispanic |
| dominicans | Hispanic |
| dothead | South Asians |
| dune coon | Middle Eastern |
| dune nigger | anti-Islamic |
| dunecoon | anti-Islamic |
| dunenigger | anti-Islamic |
| durka durka | Middle Eastern |
| durka-durka | Middle Eastern |
| east asian | Asian |
| ecuadorian | Hispanic |
| egyptian | Black |
| egyptians | Black |
| end sanctuary | immigrant |
| ethiopian | Black |
| ethiopians | Black |
| fence fairy | Hispanic |
| fence hopper | Hispanic |
| fence-hopper | Hispanic |
| fesskin | Hispanic |
| field nigger | Black |
| filipino | Asian |
| filipinos | Asian |
| fingernail rancher | Asian |
| fob | Asian |
| fuckmuslims | anti-Islamic |
| ghanaian | Black |
| ghetto | Black |
| go back where | immigrant |
| gobackwhere | immigrant |
| golliwog | Black |
| gook | Asian |
| gookaniese | Asian |
| gookemon | Asian |
| gooky | Asian |
| groid | Black |
| guamanian | Asian |
| guatemalans | Hispanic |
| haitian | Black |
| haitians | Black |
| half breed | Multi-race |
| half cast | Multi-race |
| half-breed | Multi-race |
| half-cast | Multi-race |
| hatchet-packer | Native American |
| help refugees | refugees |
| hijab | anti-Islamic |
| hijabs | anti-Islamic |
| hindu | |
| hindus | |
| hispandex | Hispanic |
| hispanic | Hispanic |
| hispanics | Hispanic |
| house nigger | Black |
| illegal alien | immigrant |
| illegal aliens | immigrant |
| illegal immigrant | immigrant |
| illegal immigrants | immigrant |
| immigrant | immigrant |
| immigrants | immigrant |
| immigration | immigrant |
| indian | |
| indonesian | Asian |
| iranian | Middle Eastern |
| iraqi | Middle Eastern |
| iroquois indian | Native American |
| iroquois nation | Native American |
| iroquois tribe | Native American |
| islam | Middle Eastern |
| islamic | Middle Eastern |
| israeli | Middle Eastern |
| israelis | Middle Eastern |
| jamaican | Black |
| jamaicans | Black |
| japanese | Asian |
| jewish | |
| jews | |
| jig-abdul | anti-Islamic |
| jigaboo | Black |
| jigga | Black |
| jiggabo | Black |
| jihad | Middle Eastern |
| jihads | Middle Eastern |
| jihadi | Middle Eastern |
| jihadis | Middle Eastern |
| jordanian | Black |
| kafeir | anti-Islamic |
| karen people | Asian |
| kenyan | Black |
| knuckle-dragger | Black |
| korean | Asian |
| koreans | Asian |
| kuffar | anti-Islamic |
| laotian | Asian |
| latin american | Hispanic |
| latina | Hispanic |
| latinas | Hispanic |
| latino | Hispanic |
| latinos | Hispanic |
| lebanese | Middle Eastern |
| liberian | Black |
| little hiroshima | Asian |
| malayali | Asian |
| malaysian | Asian |
| mexcrement | Hispanic |
| mexican | Hispanic |
| mexicans | Hispanic |
| Mexican’t | Hispanic |
| mexico border | immigrant |
| mexicoborder | immigrant |
| mexicoon | Multi-race |
| mexihos | Hispanic |
| middle eastern | Middle Eastern |
| mongolian | Asian |
| mongolians | Asian |
| moroccan | Black |
| moroccans | Black |
| mozambican | Black |
| mud people | Black |
| mudshark | anti-Islamic |
| muslim | Middle Eastern |
| muslimban | Middle Eastern |
| muslims | Middle Eastern |
| muzrat | anti-Islamic |
| muzzie | Middle Eastern |
| native american | Native American |
| native americans | Native American |
| native hawaiian | Native Hawaiian |
| navajo | Native American |
| negro | Black |
| nepalese | Asian |
| nigerian | Black |
| nigerians | Black |
| nigga | Black |
| nigger | Black |
| niggers | Black |
| nigglet | Black |
| nigglets | Black |
| niglet | Black |
| noodle nigger | Asian |
| north korean | Asian |
| oriental | Asian |
| orientals | Asian |
| our country back | immigrant |
| ourcountryback | immigrant |
| pacific islander | Pacific Islander |
| paki | Middle Eastern/South Asian |
| pakistani | Middle Eastern |
| palestinian | Middle Eastern |
| panamanian | Hispanic |
| paraguayan | Hispanic |
| pashtun | Middle Eastern |
| pegida | anti-Islamic |
| peruvian | Hispanic |
| pickaninny | Black |
| pisslam | anti-Islamic |
| polynesian | Pacific Islander |
| porch monkey | Black |
| prairie nigger | Native American |
| pueblo indians | Native American |
| pueblo nation | Native American |
| pueblo tribe | Native American |
| puerto rican | Hispanic |
| puerto ricans | Hispanic |
| qtip head | anti-Islamic |
| race traitor | Multi-race |
| raghead | anti-Islamic |
| rag head | anti-Islamic |
| rapefugee | anti-Islamic |
| red nigger | Native American |
| refugee | refugees |
| refugees | refugees |
| resettlement | refugees |
| rice burner | Asian |
| rice nigger | Asian |
| rice rocket | Asian |
| rice-nigger | Asian |
| river nigger | Native American |
| rivernigger | Native American |
| rug pilot | Middle Eastern |
| rugpilot | anti-Islamic |
| rug rider | Middle Eastern |
| rwandan people | Black |
| salvadoreans | Hispanic |
| samoan | Pacific Islander |
| sanctuary cities | immigrant |
| sanctuary city | immigrant |
| sanctuarycities | immigrant |
| sanctuarycity | immigrant |
| sand flea | anti-Islamic |
| sand monkey | Middle Eastern |
| sand moolie | anti-Islamic |
| sand nigger | Middle Eastern |
| sand rat | anti-Islamic |
| sandflea | anti-Islamic |
| sandmonkey | anti-Islamic |
| sandmoolie | anti-Islamic |
| sandnigger | anti-Islamic |
| sandrat | anti-Islamic |
| secure our border | immigrant |
| secureourborder | immigrant |
| shiptar | Middle Eastern |
| sioux indian | Native American |
| sioux nation | Native American |
| sioux tribe | Native American |
| slurpee nigger | anti-Islamic |
| slurpeenigger | anti-Islamic |
| somali | Black |
| somalian | Black |
| south african | Black |
| south american | Hispanic |
| south asian | Asian |
| sudanese | Black |
| sun goblin | Middle Eastern |
| syria | refugees |
| syrian | refugees |
| syrians | refugees |
| syrianrefugee | refugees |
| taco nigger | Hispanic |
| taiwanese | Asian |
| tanzanian | Black |
| tar baby | Black |
| tar-baby | Black |
| teepee creeper | Native American |
| tee-pee creeper | Native American |
| thai | Asian |
| thais | Asian |
| thin eyed | Asian |
| thin-eyed | Asian |
| tibetan | Asian |
| timber nigger | Native American |
| timbernigger | Native American |
| tomahawk chucker | Native American |
| tomahawk-chucker | Native American |
| tomahonky | Native American |
| towel head | anti-Islamic |
| towelhead | anti-Islamic |
| towel-head | Middle Eastern |
| undocumented | immigrant |
| unhcr | refugees |
| vietnamese | Asian |
| we welcome refugees | refugees |
| welcome refugee | refugees |
| welcomerefugee | refugees |
| wetback | Hispanic |
| whacky iraqi | Middle Eastern |
| whitegenocide | anti-Islamic |
| wog | dark-skinned foreigner |
| zambian | Black |
| zimbabwean | Black |
| zipperhead | Asian |
| @artistsandfleas | exclude |
| negroni | exclude |
| new mexico border | exclude |
| deportes | exclude |
| deportiva | exclude |
| indiana | exclude |
| indianapolis | exclude |
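Appendix A does not say how the keyword list or its "exclude" rows were applied. Each excluded term contains a listed keyword as a substring (for example, "indiana" contains "indian", "deportes" contains "deport", and "new mexico border" contains "mexico border"), which is consistent with case-insensitive substring matching where the exclusions suppress false positives. The Python sketch below illustrates that assumed approach; the `categorize` function and the small `KEYWORDS` and `EXCLUDE` subsets are hypothetical and are not the authors' code.

```python
from __future__ import annotations

# Hypothetical subset of the Appendix A keyword table (keyword -> category).
KEYWORDS = {
    "deport": "immigrant",
    "mexico border": "immigrant",
    "refugees": "refugees",
    "latino": "Hispanic",
}

# Subset of the terms marked "exclude" in Appendix A.
EXCLUDE = ["new mexico border", "deportes", "deportiva", "indiana", "indianapolis"]


def categorize(tweet: str) -> set[str]:
    """Return the categories whose keywords appear in the tweet.

    Assumes case-insensitive substring matching, applied after every
    excluded term has been blanked out of the text.
    """
    text = tweet.lower()
    # Remove longer excluded terms first so a shorter one ("indiana")
    # cannot break up a longer one ("indianapolis") before it is removed.
    for term in sorted(EXCLUDE, key=len, reverse=True):
        text = text.replace(term, " ")
    return {category for keyword, category in KEYWORDS.items() if keyword in text}


print(sorted(categorize("Los deportes de hoy")))                 # []
print(sorted(categorize("Plans to deport refugees")))            # ['immigrant', 'refugees']
print(sorted(categorize("Road trip to the New Mexico border")))  # []
```

Blanking excluded terms before matching, rather than discarding any tweet that contains one, keeps a tweet that mentions both an excluded term and a genuine keyword; which of the two behaviors the study used is not stated.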

References

- Williams, D.R.; Yan, Y.; Jackson, J.S.; Anderson, N.B. Racial Differences in Physical and Mental Health: Socio-economic Status, Stress and Discrimination. J. Health Psychol. 1997, 2, 335–351.

- Krieger, N.; Smith, K.; Naishadham, D.; Hartman, C.; Barbeau, E.M. Experiences of discrimination: Validity and reliability of a self-report measure for population health research on racism and health. Soc. Sci. Med. 2005, 61, 1576–1596.

- Stocké, V. Determinants and Consequences of Survey Respondents’ Social Desirability Beliefs about Racial Attitudes. Methodology 2007, 3, 125–138.

- An, B.P. The role of social desirability bias and racial/ethnic composition on the relation between education and attitude toward immigration restrictionism. Soc. Sci. J. 2015, 52, 459–467.

- Nuru-Jeter, A.M.; Michaels, E.K.; Thomas, M.D.; Reeves, A.N.; Thorpe, R.J., Jr.; LaVeist, T.A. Relative Roles of Race Versus Socioeconomic Position in Studies of Health Inequalities: A Matter of Interpretation. Annu. Rev. Public Health 2018, 39, 169–188.

- Krieger, N.; Sidney, S. Racial discrimination and blood pressure: The CARDIA Study of young black and white adults. Am. J. Public Health 1996, 86, 1370–1378.

- Ito, T.A.; Friedman, N.P.; Bartholow, B.D.; Correll, J.; Loersch, C.; Altamirano, L.J.; Miyake, A. Toward a comprehensive understanding of executive cognitive function in implicit racial bias. J. Personal. Soc. Psychol. 2015, 108, 187–218.

- Hahn, A.; Judd, C.M.; Hirsh, H.K.; Blair, I.V. Awareness of implicit attitudes. J. Exp. Psychol. Gen. 2014, 143, 1369–1392.

- Turner, M.A.; Skidmore, F. Mortgage Lending Discrimination: A Review of Existing Evidence; Urban Inst: Washington, DC, USA, 1999.

- Pager, D. The Use of Field Experiments for Studies of Employment Discrimination: Contributions, Critiques, and Directions for the Future. Ann. Am. Acad. Polit. Soc. Sci. 2007, 609, 104–133.

- Lauderdale, D.S. Birth outcomes for Arabic-named women in California before and after September 11. Demography 2006, 43, 185–201.

- Quillian, L. New Approaches to Understanding Racial Prejudice and Discrimination. Annu. Rev. Sociol. 2006, 32, 299–328.

- Lee, Y.; Muennig, P.; Kawachi, I.; Hatzenbuehler, M.L. Effects of Racial Prejudice on the Health of Communities: A Multilevel Survival Analysis. Am. J. Public Health 2015, 105, 2349–2355.

- Mislove, A.; Lehmann, S.; Ahn, Y.; Onnela, J.P.; Rosenquist, J.N. Understanding the Demographics of Twitter Users. In Proceedings of the Fifth International AAAI Conference on Weblogs and Social Media, Catalonia, Spain, 17–21 July 2011; pp. 554–557.

- Suler, J. The Online Disinhibition Effect. CyberPsychol. Behav. 2004, 7, 321–326.

- Mondal, M.; Silva, A.; Benevenuto, F. Measurement Study of Hate Speech in Social Media. In Proceedings of the HT’17 28th ACM Conference on Hypertext and Social Media, Prague, Czech Republic, 4–7 July 2017; pp. 85–94.

- Pinsonneault, A.; Heppel, N. Anonymity in Group Support Systems Research: A New Conceptualization, Measure, and Contingency Framework. J. Manag. Inf. Syst. 1997, 14, 89–108.

- Nguyen, Q.C.; Li, D.; Meng, H.W.; Kath, S.; Nsoesie, E.; Li, F.; Wen, M. Building a National Neighborhood Dataset From Geotagged Twitter Data for Indicators of Happiness, Diet, and Physical Activity. JMIR Public Health Surveill. 2016, 2, e158.

- Nguyen, Q.C.; Meng, H.; Li, D.; Kath, S.; McCullough, M.; Paul, D.; Kanokvimankul, P.; Nguyen, T.X.; Li, F. Social media indicators of the food environment and state health outcomes. Public Health 2017, 148, 120–128.

- Bahk, C.Y.; Cumming, M.; Paushter, L.; Madoff, L.C.; Thomson, A.; Brownstein, J.S. Publicly available online tool facilitates real-time monitoring of vaccine conversations and sentiments. Health Aff. 2016, 35, 341–347.

- Nsoesie, E.O.; Brownstein, J.S. Computational Approaches to Influenza Surveillance: Beyond Timeliness. Cell Host Microbe 2015, 17, 275–278.

- Hawkins, J.B.; Brownstein, J.S.; Tuli, G.; Runels, T.; Broecker, K.; Nsoesie, E.O.; McIver, D.J.; Rozenblum, R.; Wright, A.; Bourgeois, F.T.; et al. Measuring patient-perceived quality of care in US hospitals using Twitter. BMJ Qual. Saf. 2016, 25, 404–413.

- Chae, D.H.; Clouston, S.; Hatzenbuehler, M.L.; Kramer, M.R.; Cooper, H.L.; Wilson, S.M.; Stephens-Davidowitz, S.I.; Gold, R.S.; Link, B.G. Association between an Internet-Based Measure of Area Racism and Black Mortality. PLoS ONE 2015, 10, e0122963.

- Bartlett, J.; Reffin, J.; Rumball, N.; Wiliamson, S. Anti-Social Media; Demos: London, UK, 2014.

- Stephens, M. Geography of Hate. Available online: https://users.humboldt.edu/mstephens/hate/hate_map.html# (accessed on 8 August 2018).

- The Racial Slur Database. Available online: http://www.rsdb.org/ (accessed on 7 August 2018).

- Guttman, A. R-trees: A dynamic index structure for spatial searching. In Proceedings of the 1984 ACM SIGMOD International Conference on Management of Data, Boston, MA, USA, 18–21 June 1984; pp. 47–57.

- Nguyen, Q.C.; Kath, S.; Meng, H.-W.; Li, D.; Smith, K.R.; VanDerslice, J.A.; Wen, M.; Li, F. Leveraging geotagged Twitter data to examine neighborhood happiness, diet, and physical activity. Appl. Geogr. 2016, 73, 77–88.

- Stanford Natural Language Processing Group. Stanford Tokenizer. Available online: http://nlp.stanford.edu/software/tokenizer.shtml (accessed on 12 November 2018).

- Gunther, T.; Furrer, L. GU-MLT-LT: Sentiment Analysis of Short Messages using Linguistic Features and Stochastic Gradient Descent. In Proceedings of the Second Joint Conference on Lexical and Computational Semantics (SemEval@NAACL-HLT), Atlanta, GA, USA, 13–14 June 2013; pp. 328–332.

- Stochastic Gradient Descent. Available online: http://scikit-learn.org/stable/modules/sgd.html (accessed on 2 October 2018).

- Go, A.; Bhayani, R.; Huang, L. Twitter Sentiment Classification Using Distant Supervision; CS224N Project Report; Stanford University: Stanford, CA, USA, 2009; Volume 1.

- Sanders Analytics. Twitter Sentiment Corpus. Available online: http://www.sananalytics.com/lab/twitter-sentiment/ (accessed on 11 November 2018).

- Kaggle in Class. Sentiment classification. Available online: https://inclass.kaggle.com/c/si650winter11/ (accessed on 3 January 2019).

- Sentiment140. For Academics. Available online: http://help.sentiment140.com/for-students (accessed on 11 November 2018).

- Kwok, I.; Wang, Y. Locate the Hate: Detecting Tweets against Blacks. In Proceedings of the Twenty-Seventh AAAI Conference on Artificial Intelligence, Bellevue, WA, USA, 14–18 July 2013.

- Nguyen, T.T.; Meng, H.-W.; Sandeep, S.; McCullough, M.; Yu, W.; Lau, Y.; Huang, D.; Nguyen, Q.C. Twitter-derived measures of sentiment towards minorities (2015–2016) and associations with low birth weight and preterm birth in the United States. Comput. Hum. Behav. 2018, 89, 308–315.

- Eichstaedt, J.C.; Schwartz, H.A.; Kern, M.L.; Park, G.; Labarthe, D.R.; Merchant, R.M.; Jha, S.; Agrawal, M.; Dziurzynski, L.A.; Sap, M.; et al. Psychological Language on Twitter Predicts County-Level Heart Disease Mortality. Psychol. Sci. 2015, 26, 159–169.

- Morstatter, F.; Pfeffer, J.; Liu, H.; Carley, K.M. Is the Sample Good Enough? Comparing Data from Twitter’s Streaming API with Twitter’s Firehose. In Proceedings of the 7th International AAAI Conference on Web Blogs and Social Media, Palo Alto, CA, USA, 25–28 June 2018.

- Greenwood, S.; Perrin, A.; Duggan, M. Social Media Update; Pew Research Center: Washington, DC, USA, 2016.

Percent of Tweets That Are Positive

| Month | All races | Black | Middle Eastern | Hispanic | Asian |
|---|---|---|---|---|---|
| Jan | 15.7% | 15.6% | 11.4% | 17.0% | 16.2% |
| Feb | 18.1% | 16.2% | 12.4% | 17.8% | 20.9% |
| Mar | 16.0% | 15.0% | 11.5% | 18.0% | 16.4% |
| Apr | 14.7% | 13.3% | 13.4% | 18.0% | 19.3% |
| May | 15.5% | 13.7% | 14.0% | 17.8% | 18.3% |
| Jun | 15.4% | 13.9% | 12.4% | 16.5% | 18.3% |
| Jul | 15.0% | 14.1% | 11.5% | 15.1% | 17.2% |
| Aug | 14.7% | 14.1% | 11.8% | 14.8% | 15.8% |
| Sep | 15.0% | 13.5% | 10.8% | 15.6% | 17.6% |
| Oct | 15.6% | 14.7% | 11.0% | 16.6% | 16.8% |
| Nov | 15.2% | 15.6% | 9.6% | 15.7% | 16.7% |
| Dec | 14.9% | 14.7% | 9.7% | 16.1% | 16.0% |
| Maximum difference (percentage points) | 3.4 | 2.8 | 4.4 | 3.2 | 5.1 |
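The "Maximum difference" row is the gap between the highest and lowest monthly value in each column. The short Python check below recomputes it from the rounded monthly figures transcribed from the table; the `MONTHLY` dictionary and `max_difference` helper are illustrative names, not from the paper. It reproduces the reported spreads for all columns except Black, where the rounded values give 2.9 rather than 2.8; the one-tenth gap presumably arises because the published row was computed from unrounded percentages.

```python
from __future__ import annotations

# Monthly "% positive" values (Jan to Dec) transcribed from the table above.
MONTHLY = {
    "All races": [15.7, 18.1, 16.0, 14.7, 15.5, 15.4, 15.0, 14.7, 15.0, 15.6, 15.2, 14.9],
    "Black": [15.6, 16.2, 15.0, 13.3, 13.7, 13.9, 14.1, 14.1, 13.5, 14.7, 15.6, 14.7],
    "Middle Eastern": [11.4, 12.4, 11.5, 13.4, 14.0, 12.4, 11.5, 11.8, 10.8, 11.0, 9.6, 9.7],
    "Hispanic": [17.0, 17.8, 18.0, 18.0, 17.8, 16.5, 15.1, 14.8, 15.6, 16.6, 15.7, 16.1],
    "Asian": [16.2, 20.9, 16.4, 19.3, 18.3, 18.3, 17.2, 15.8, 17.6, 16.8, 16.7, 16.0],
}


def max_difference(values: list[float]) -> float:
    """Highest minus lowest monthly value, in percentage points (1 decimal)."""
    # Rounding to one decimal hides floating-point noise such as 3.4000000000000004.
    return round(max(values) - min(values), 1)


# Prints one line per group, e.g. "All races: 3.4".
for group, values in MONTHLY.items():
    print(f"{group}: {max_difference(values)}")
```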

| Themes | Example Tweets |
|---|---|
| Negative Sentiment | |
| Innocuous | |
| Complaints | |
| Insults using derogatory language | |
| Generalizations, use of racial slurs in derogatory ways | |
| Hostile tweets, some mentioning violence | |
| Positive Sentiment | |
| Cultural pride | |
| Food | |
| Loyalty within friendships | |
| Denying stereotypes | |
| Intimate Relationships | |
| Appearance | |
| Affinity to a particular race | |
| Cheating | |
| Frustration over behavior of men or women | |
| Sex | |
| Introspection about own behavior | |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Nguyen, T.T.; Criss, S.; Allen, A.M.; Glymour, M.M.; Phan, L.; Trevino, R.; Dasari, S.; Nguyen, Q.C. Pride, Love, and Twitter Rants: Combining Machine Learning and Qualitative Techniques to Understand What Our Tweets Reveal about Race in the US. Int. J. Environ. Res. Public Health 2019, 16, 1766. https://doi.org/10.3390/ijerph16101766
