Abstract

The increasing use of three-dimensional (3D) imaging techniques in dental medicine has boosted the development and use of artificial intelligence (AI) systems for various clinical problems. Cone beam computed tomography (CBCT) and intraoral/facial scans are potential sources of image data to develop 3D image-based AI systems for automated diagnosis, treatment planning, and prediction of treatment outcome. This review focuses on current developments and performance of AI for 3D imaging in dentomaxillofacial radiology (DMFR) as well as intraoral and facial scanning. In DMFR, machine learning-based algorithms proposed in the literature focus on three main applications, including automated diagnosis of dental and maxillofacial diseases, localization of anatomical landmarks for orthodontic and orthognathic treatment planning, and general improvement of image quality. Automatic recognition of teeth and diagnosis of facial deformations using AI systems based on intraoral and facial scanning will very likely be a field of increased interest in the future. The review is aimed at providing dental practitioners and interested colleagues in healthcare with a comprehensive understanding of the current trend of AI developments in the field of 3D imaging in dental medicine.

1. Introduction

Artificial intelligence (AI) is generally defined as intelligent computer programs capable of learning and applying knowledge to accomplish complex tasks such as predicting treatment outcomes, recognizing objects, and answering questions [1]. Nowadays, AI technologies are widespread and permeate many applications of our daily life, such as Amazon’s online shopping recommendations, Facebook’s image recognition, Netflix’s streaming videos, and the smartphone’s voice assistant [2]. Characteristically, such daily life applications initially give a more generalized outcome based on big data and, after repeated use by the individual, gradually present a more adapted and personalized outcome in accordance with the user’s characteristics. The remarkable success of AI in various fields of our daily life has inspired and is stimulating the development of AI systems in the field of medicine and, more specifically, dental medicine [3,4].

Radiology is deemed to be the front door for AI into medicine as digitally coded diagnostic images are more easily translated into computer language [5]. Thus, diagnostic images are seen as one of the primary sources of data used to develop AI systems for the purpose of an automated prediction of disease risk (such as osteoporotic bone fractures [6]), detection of pathologies (such as coronary artery calcification as a predictor for atherosclerosis [7]), and diagnosis of disease (such as skin cancers in dermatology [8]). Machine learning is a key component of AI, and commonly applied to develop image-based AI systems. Through a synergism between radiologists and the medical AI system used, increased work efficiency and more precise outcomes regarding the final diagnosis of various diseases are expected to be achieved [9,10].

In the field of dental and maxillofacial radiology (DMFR), reports on AI models used for diagnostic purposes and treatment planning cover a wide range of clinical applications, including automated localization of craniofacial anatomical structures/pathological changes, classification of maxillofacial cysts and/or tumors, and diagnosis of caries and periodontal lesions [11]. According to the literature related to clinical applications of AI in DMFR, most of the proposed machine learning algorithms were developed using two-dimensional (2D) diagnostic images, such as periapical, panoramic, and cephalometric radiographs [11]. However, 2D images have several limitations, including image magnification and distortion, superimposition of anatomical structures, and the lack of three-dimensional information for relevant landmarks/pathological changes. These may lower the diagnostic accuracy of the AI models trained using only 2D images [12]. For example, a 2D image-based AI model built for the detection of periodontal bone defects might not be able to detect three-walled bony defects, loss of buccal/oral cortical bone plates, or bone defects around overlapping teeth. Three-dimensional (3D) imaging techniques, including cone beam computed tomography (CBCT), as well as intraoral and facial scanning systems, are increasingly used in dental practice. CBCT imaging allows for the visualization and assessment of bony anatomic structures and/or pathological changes in 3D with high diagnostic accuracy and precision. The use of CBCT is of great help when conventional 2D imaging techniques do not provide sufficient information for diagnosis and treatment planning purposes [13]. Intraoral and facial scanning systems are reported to be reproducible and reliable to capture 3D soft-tissue images that can be used for digital treatment planning systems [14,15]. 
CBCT and intraoral/facial scans are considered an ideal data source for developing AI models to overcome the limitations of 2D image-based algorithms [12,15]. Thus, the aim of this review is to describe current developments and to assess the performance of AI models for 3D imaging in DMFR, as well as intraoral and facial scanning.

2. Current Use of AI for 3D Imaging in DMFR

A literature search was conducted using PubMed to identify all existing studies of AI applications for 3D imaging in DMFR and intraoral/facial scanning. The search was conducted without restriction on the publication period but was limited to studies in English. The keywords used for the search were combinations of terms including “artificial intelligence”, “AI”, “machine learning”, “deep learning”, “convolutional neural networks”, “automatic”, “automated”, “three-dimensional imaging”, “3D imaging”, “cone beam computed tomography”, “CBCT”, “three-dimensional scan”, “3D scan”, “intraoral scan”, “intraoral scanning”, “facial scan”, “facial scanning”, and/or “dentistry”. Reviews, conference papers, and studies using clinical/nonclinical image data were eligible for the initial screening process. Initially, titles of the identified studies were manually screened, and subsequently, abstracts of the relevant studies were read to identify studies for further full-text reading. Furthermore, references of included articles were examined to identify further relevant articles. As a result, approximately 650 publications were initially screened, and 23 publications were eventually included in the present review for data extraction (details provided in Table 1 and Table 2).

Table 1.

Characteristics of studies describing machine learning-based artificial intelligence (AI) models applied in dentomaxillofacial radiology (DMFR).

Table 2.

Characteristics of the machine learning-based AI models based on intraoral and facial scanning.

The methodological quality of the included studies was evaluated using the assessment criteria proposed by Hung et al. [11]. Among the AI models proposed for diagnosis/classification of a certain condition, four studies [16,17,18,19] were rated as having a “high” or an “unclear” risk of concern in the domain of subject selection because the testing dataset only consisted of images from subjects with the condition of interest. With regard to the selection of reference standards, all studies were considered as “low” risk of concern because expert judgment and clinical or pathological examination were applied as the reference standard. Concerns regarding the risk of bias were relatively high in the domain of index test, as ten [16,17,20,21,22,23,24,25,26,27] of the included studies did not test their AI models on independent images unused for developing the algorithms.

Table 1 lists the included studies regarding the use of AI for 3D imaging in DMFR. These studies focused on three main applications: automated diagnosis of dental and maxillofacial diseases [16,17,18,19,20,28,29,30,31,32], localization of anatomical landmarks for orthodontic and orthognathic treatment planning [21,22,33,34,35], and improvement of image quality [23,36].

2.1. Automated Diagnosis of Dental and Maxillofacial Diseases

The basic principle of the learning algorithms for diagnostic purposes is to explore associations between the input image and output diagnosis. Theoretically, a machine learning algorithm is initially built using hand-crafted detectors of image features in a predefined framework, subsequently trained with the training data, iteratively adapted to minimize the error at the output, and eventually tested with the unseen testing data to verify its validity [37]. Deep learning, a subset of machine learning, can automatically learn to extract relevant image features without manually designed feature detectors and is currently considered the most suitable method for developing image-based diagnostic AI models [12].

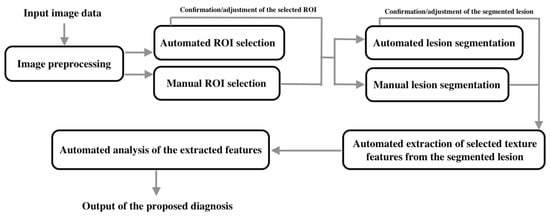

The workflow of the proposed machine learning algorithms for diagnostic purpose can be mainly categorized as (see Figure 1).

Figure 1.

The workflow of the proposed machine learning algorithms for diagnostic purposes.

- Input image data;

- Image preprocessing;

- Selection of the region of interest (ROI);

- Segmentation of lesions;

- Extraction of selected texture features in the segmented lesions;

- Analysis of the extracted features;

- Output of the diagnosis or classification.
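
The steps listed above can be sketched as a chain of small functions. The following is a minimal illustration only and does not reproduce the method of any study reviewed here: the function names, the fixed intensity threshold, the toy volume, and the voxel-count decision rule are all hypothetical stand-ins for the far more elaborate components used in practice.

```python
from statistics import mean, pstdev


def preprocess(volume):
    """Rescale voxel intensities to [0, 1] (stand-in for real preprocessing)."""
    flat = [v for sl in volume for row in sl for v in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1  # avoid division by zero for a constant volume
    return [[[(v - lo) / span for v in row] for row in sl] for sl in volume]


def select_roi(volume, z, y, x):
    """Crop a box; z, y, x are (start, stop) index pairs."""
    return [[row[x[0]:x[1]] for row in sl[y[0]:y[1]]] for sl in volume[z[0]:z[1]]]


def segment_lesion(roi, threshold=0.5):
    """Hypothetical rule: voxels darker than the threshold count as lesion."""
    return [[[v < threshold for v in row] for row in sl] for sl in roi]


def extract_features(roi, mask):
    """Collect simple intensity statistics inside the segmented region."""
    vals = [
        v
        for sl, msl in zip(roi, mask)
        for row, mrow in zip(sl, msl)
        for v, m in zip(row, mrow)
        if m
    ]
    return {
        "voxels": len(vals),
        "mean": mean(vals) if vals else 0.0,
        "std": pstdev(vals) if vals else 0.0,
    }


def classify(features, min_voxels=4):
    """Hypothetical decision rule based on lesion size alone."""
    return "lesion suspected" if features["voxels"] >= min_voxels else "no lesion"


# Toy input: a 3 x 4 x 4 bright volume containing a dark 2 x 2 x 2 pocket.
volume = [[[1000.0] * 4 for _ in range(4)] for _ in range(3)]
for zi in (0, 1):
    for yi in (1, 2):
        for xi in (1, 2):
            volume[zi][yi][xi] = 100.0

roi = select_roi(preprocess(volume), z=(0, 3), y=(0, 4), x=(0, 4))
mask = segment_lesion(roi)
features = extract_features(roi, mask)
diagnosis = classify(features)
print(features["voxels"], diagnosis)
```

In a semiautomatic algorithm, `select_roi` or `segment_lesion` would be replaced by user input, which is where the manual effort discussed below arises.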

Some of the proposed machine learning algorithms were not fully automated and required manual operation/adjustment for the ROI selection or lesion segmentation. Okada et al. proposed a semiautomatic machine learning algorithm using CBCT images to classify periapical cysts and granulomas [16]. This algorithm requires users to segment the target lesion before it proceeds to the next step (feature extraction). Yilmaz et al. proposed a semiautomatic algorithm using CBCT images to classify periapical cysts and keratocysts [18]. In this algorithm, detection and segmentation of lesions must be performed manually: users need to mark the lesion on different cross-sectional planes to predefine the volume of interest containing the lesion. Manual segmentation of cystic lesions on multiple CBCT slices is time-consuming, which limits the efficiency of the algorithms and also their implementation for routine clinical use. Lee et al. proposed deep learning algorithms using panoramic radiographs and CBCT images, respectively, for the detection and diagnosis of periapical cysts, dentigerous cysts, and keratocysts [19]. It was reported that automatic edge detection techniques can segment cystic lesions more efficiently and accurately than manual segmentation. This can shorten the execution time for the segmentation step and improve the usability of the proposed algorithms for clinical practice. Moreover, higher diagnostic accuracy was reported for CBCT image-based algorithms in comparison with panoramic image-based ones. This may result from a higher accuracy in detecting the lesion boundary in 3D and more quantitative features extracted from the voxel units. Abdolali et al. proposed an algorithm based on asymmetry analysis using CBCT images to automatically segment cystic lesions, including dentigerous cysts, radicular cysts, and keratocysts [39]. The algorithm exhibited promising performance with many true positives and few false positives. 
However, its limitations include a relatively low detection rate for small cysts, imperfect segmentation of keratocysts without well-defined boundaries, and the incapability of dealing with symmetric cysts crossing the midsagittal plane. Based on the proposed segmentation algorithm, Abdolali et al. developed another AI model using CBCT images to automatically classify dentigerous cysts, radicular cysts, and keratocysts [17]. This model exhibited high classification accuracies ranging from 94.29% to 96.48%. Subsequently, Abdolali et al. further proposed a fully automated medical-content-based image retrieval system for the diagnosis of four maxillofacial lesions/conditions, including radiolucent lesions, maxillary sinus perforation, unerupted teeth, and root fractures [29]. In this novel system, an improved version of a previously proposed segmentation algorithm [39] was incorporated. The diagnostic accuracy of the proposed system was 90%, with a significantly reduced segmentation time of three minutes per case. It was stated that this system is more effective than previous models proposed in the literature, and is promising for introduction into clinical practice in the near future.
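
The idea behind asymmetry analysis, and the reason it struggles with symmetric lesions crossing the midsagittal plane, can be illustrated with a toy example. This is a simplified sketch and not the algorithm of Abdolali et al.: it assumes that the midsagittal plane coincides with the vertical center line of a 2D slice, and the function names, intensity values, and threshold are hypothetical.

```python
def darker_than_mirror(slice_2d, threshold):
    """Flag pixels darker than their left-right mirrored counterpart by more than threshold."""
    return [
        [mirrored - value > threshold for value, mirrored in zip(row, reversed(row))]
        for row in slice_2d
    ]


def count_flagged(mask):
    """Count the flagged pixels in a 2D boolean mask."""
    return sum(flag for row in mask for flag in row)


def make_slice(lesion_columns):
    """Build a 4 x 6 toy slice: bright bone (1000) with a dark lesion (100) in rows 1 and 2."""
    slice_2d = [[1000] * 6 for _ in range(4)]
    for y in (1, 2):
        for x in lesion_columns:
            slice_2d[y][x] = 100
    return slice_2d


# Lesion on one side only: it differs from its mirror image and is flagged.
one_sided = count_flagged(darker_than_mirror(make_slice((0, 1)), threshold=300))

# Lesion straddling the center line symmetrically: it matches its own mirror image.
midline = count_flagged(darker_than_mirror(make_slice((2, 3)), threshold=300))

print(one_sided, midline)
```

In this toy setting the one-sided lesion is fully flagged, whereas the symmetric midline lesion produces no asymmetry signal at all.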

Orhan et al. verified the performance of a deep learning algorithm using CBCT images to detect and volumetrically measure periapical lesions [28]. A detection rate of 92.8% and a significant positive correlation between the automated and manual measurements were reported. The differences between manual and automated measurements are mainly due to inaccurate lesion segmentation. Because of low soft-tissue contrast in CBCT images, the deep learning algorithm has difficulty distinguishing the lesion area from neighboring soft tissue when buccal/oral cortical perforations or endo-perio lesions occur. Johari et al. proposed deep learning algorithms using periapical and CBCT images to detect vertical root fractures [30]. The results showed that the proposed model achieved higher diagnostic performance for CBCT images than for periapical radiographs. Furthermore, some studies have reported on the application of deep learning algorithms for the diagnosis of Sjögren’s syndrome or lymph node metastasis. Kise et al. proposed a deep learning algorithm using CT images to assist inexperienced radiologists in semiautomatically diagnosing Sjögren’s syndrome [32]. The results showed that the diagnostic performance of the deep learning algorithm is comparable to that of experienced radiologists and significantly higher than that of inexperienced radiologists. The main limitation of the proposed algorithm is its semiautomatic nature, requiring manual image segmentation prior to performing automated diagnosis. To ease implementation in daily routine, a completely automated segmentation of the region of the parotid gland should be developed and incorporated into a fully automated diagnostic system. Kann et al. and Ariji et al. each proposed deep learning algorithms using contrast-enhanced CT images to semiautomatically identify nodal metastasis in patients with oral/head and neck cancer [20,31]. 
The user of the respective programs is required to manually segment the contour of lymph nodes on multiple CT slices. Excellent performance was reported for both algorithms proposed, which was close to or even surpassed the diagnostic accuracy of experienced radiologists. Therefore, these deep learning algorithms have the potential to help guide oral/head and neck cancer patient management. Future investigations should focus on the development of a fully automated identification system to avoid manual segmentation of lymph nodes. This can significantly improve the efficiency of the AI system used and could enable wider use of this system in community clinics.

2.2. Automated Localization of Anatomical Landmarks for Orthodontic and Orthognathic Treatment Planning

The correct analysis of craniofacial anatomy and facial proportions is the basis of successful orthodontic and orthognathic treatment. Traditional orthodontic analysis is generally conducted on 2D cephalometric radiographs, which can be less accurate due to image magnification, superimposition of structures, inappropriate X-ray projection angle, and patient position. Since CBCT was introduced in dental medicine, 3D diagnosis and virtual treatment planning have been assessed as a more accurate option for orthodontic and orthognathic treatment [40]. Although 3D orthodontic analysis can be performed by a computer-aided digital tracing approach, it still requires orthodontists to manually locate anatomical landmarks on multiple CBCT slices. The manual localization process is tedious and time-consuming, which may currently discourage orthodontists from switching to a fully digital workflow. Cheng et al. proposed the first machine learning algorithm to automatically localize one key landmark on CBCT images and reported promising results [33]. Subsequently, a series of machine learning algorithms were developed for automated localization of several anatomical landmarks and analysis of dentofacial deformity. Shahidi et al. proposed a machine learning algorithm to automatically locate 14 craniofacial landmarks on CBCT images; however, the mean deviation (3.40 mm) for all of the automatically identified landmarks was higher than the mean deviation (1.41 mm) for the manually detected ones [34]. Montufar et al. proposed two different automatic landmark localization systems, one based on active shape models and the other on a hybrid approach using active shape models followed by a 3D knowledge-based searching algorithm [21,22]. The mean deviation (2.51 mm) for all of the automatically identified landmarks in the hybrid system was lower than that of the system only using active shape models (3.64 mm). 
Despite the lower localization deviation of the hybrid system, automated localization in the proposed systems is still not accurate enough to meet clinical requirements. Therefore, the existing AI systems can only be recommended for preliminary localization of the orthodontic landmarks, and manual correction is still necessary prior to further orthodontic analyses. This may be the main limitation of these AI systems and needs to be improved for future clinical dissemination and use.
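
The mean deviation figures quoted above are averages of the distance between each automatically located landmark and its reference position. A minimal sketch of such a computation is given below, assuming 3D coordinates in millimeters and Euclidean distance; the landmark names and coordinates are invented for illustration, and the individual studies may have defined or aggregated the deviation differently.

```python
import math


def mean_localization_error(predicted, reference):
    """Mean Euclidean distance between predicted and reference landmarks.

    Both arguments map a landmark name to an (x, y, z) coordinate in mm.
    """
    if predicted.keys() != reference.keys():
        raise ValueError("both landmark sets must contain the same names")
    distances = [math.dist(predicted[name], reference[name]) for name in reference]
    return sum(distances) / len(distances)


# Hypothetical coordinates, chosen so that the distances are easy to verify.
reference = {"nasion": (0.0, 0.0, 0.0), "sella": (10.0, 10.0, 10.0)}
predicted = {"nasion": (3.0, 4.0, 0.0), "sella": (10.0, 10.0, 11.0)}

error_mm = mean_localization_error(predicted, reference)
print(f"mean deviation: {error_mm:.2f} mm")
```

Here the two landmarks deviate by 5 mm and 1 mm, giving a mean deviation of 3 mm.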

Orthodontic and orthognathic treatments in patients with craniofacial deformities are challenging. The aforementioned AI systems may not be able to effectively deal with such patients. Torosdagli et al. proposed a novel deep learning algorithm applied for fully automated mandible segmentation and landmarking in craniofacial anomalies on CBCT images [35]. The proposed algorithm allows for orthodontic analysis in patients with craniofacial deformities and showed excellent performance with a sensitivity of 93.42% and specificity of 99.97%. Future studies should consider widening the field of applications for AI systems, especially for different patient populations.
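
For a segmentation task, sensitivity and specificity can be computed voxel by voxel against a reference segmentation. The sketch below shows the standard definitions on a flattened toy mask; the data are invented, and it is an assumption that the values reported by Torosdagli et al. were computed per voxel in this way.

```python
def sensitivity_specificity(predicted, reference):
    """Return (sensitivity, specificity) for two equally long binary masks."""
    if len(predicted) != len(reference):
        raise ValueError("masks must have the same length")
    tp = fp = tn = fn = 0
    for p, r in zip(predicted, reference):
        if p and r:
            tp += 1  # structure voxel correctly labeled
        elif p and not r:
            fp += 1  # background voxel labeled as structure
        elif not p and r:
            fn += 1  # structure voxel missed
        else:
            tn += 1  # background voxel correctly labeled
    return tp / (tp + fn), tn / (tn + fp)


# Toy flattened masks: 1 = mandible voxel, 0 = background voxel.
reference = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
predicted = [1, 1, 1, 0, 0, 0, 0, 0, 0, 1]

sensitivity, specificity = sensitivity_specificity(predicted, reference)
print(f"sensitivity={sensitivity:.2f}, specificity={specificity:.2f}")
```

When background voxels greatly outnumber the voxels of the segmented structure, specificity tends to be very high, so it is usually read together with sensitivity.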

2.3. Automated Improvement of Image Quality

Radiation protection is of paramount importance in medicine, including DMFR. It is reported that medical radiation exposure is the largest artificial radiation source and represents approximately 14% of the total annual dose of ionizing radiation for individuals [41]. Computed tomography (CT) imaging is widely used to assist clinical diagnosis in various fields of medicine. Reducing the scanning slice thickness is the general option to enhance the resolution of CT images. However, this will increase the noise level as well as the radiation dose to the patient. High-resolution CT images are recommended only when low-resolution CT images do not provide sufficient information for diagnosis and treatment planning purposes in individual cases [42]. The balance between the radiation dose and CT image resolution is the biggest concern for radiologists. To address this issue, Park et al. proposed a deep learning algorithm to enhance the resolution of thick-slice CT images to a level similar to that of thin-slice images [36]. It is reported that the noise level of the enhanced CT images is even lower than that of the original images. Therefore, this algorithm has the potential to be a useful tool for enhancing the image resolution for CT scans as well as reducing the radiation dose and noise level. It is expected that such an algorithm can further be developed for CBCT scans.
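
The relationship between slice thickness and noise can be illustrated with a simplified simulation. The sketch below assumes that a thick slice behaves like the average of several adjacent thin slices and that the noise in each thin slice is independent and Gaussian; both are idealizations, the constants are arbitrary, and the code does not represent the algorithm of Park et al.

```python
import random
from statistics import pstdev

rng = random.Random(0)  # fixed seed so that the run is repeatable

SIGMA = 20.0        # assumed noise standard deviation of one thin-slice voxel
THIN_PER_THICK = 4  # assumed number of thin slices averaged into one thick slice
N_THICK = 2000      # number of simulated thick-slice voxels

# Noise of the thin-slice voxels (the true signal is taken as 0 everywhere).
thin = [rng.gauss(0.0, SIGMA) for _ in range(N_THICK * THIN_PER_THICK)]

# Each thick-slice voxel is the average of THIN_PER_THICK thin-slice voxels.
thick = [
    sum(thin[i:i + THIN_PER_THICK]) / THIN_PER_THICK
    for i in range(0, len(thin), THIN_PER_THICK)
]

thin_noise = pstdev(thin)
thick_noise = pstdev(thick)
print(f"thin-slice noise:  {thin_noise:.1f}")
print(f"thick-slice noise: {thick_noise:.1f}")
```

Under these assumptions, averaging four thin slices roughly halves the noise, which is the price paid in through-plane resolution that resolution-enhancement algorithms try to recover.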

The presence of metal artifacts in CT/CBCT images is another critical issue that can obscure neighboring anatomical structures and interfere with disease diagnosis. In dental medicine, metal artifacts are not uncommon in CBCT images due to materials used for dental restorations or orthodontic purposes. These metal artifacts not only interfere with disease diagnosis but, in some cases, impede the image segmentation of the teeth and bony structures in the maxilla and mandible for computer-guided treatment. Minnema et al. proposed a deep learning algorithm based on a mixed-scale dense convolutional neural network for the segmentation of teeth and bone on CBCT images affected by metal artifacts [23]. It is reported that the proposed algorithm can accurately classify metal artifacts as background and segment teeth and bony structures. The promising results suggest that a convolutional neural network is capable of extracting characteristic features in CBCT voxel units that cannot be distinguished by the human eye.

2.4. Other Applications

In addition to the above AI applications, automated tooth detection, classification, and numbering are also fields of great interest, and they have the potential to simplify the process of filling out digital dental charts [43]. Miki et al. developed a deep learning algorithm based on a convolutional neural network to automatically classify tooth types based on CBCT images [38]. Although this algorithm was designed for automated filling of dental charts for forensic identification purposes, it may also be valuable to incorporate it into the digital treatment planning system, especially for use in implantology and prosthetics. For example, such an application may contribute to the automated identification of missing teeth for the diagnosis and planning of implants or other prosthetic treatments.

3. Current Use of AI for Intraoral 3D Imaging and Facial Scanning

In recent years, computer-aided design and manufacturing (CAD/CAM) technology has been widely used in various fields of dentistry, especially in implantology, prosthetics, orthodontics, and maxillofacial surgery. For example, CAD/CAM technology can be used for the fabrication of surgical implant guides, provisional/definitive restorations, orthodontic appliances, and maxillofacial surgical templates. Most of these applications are based on 3D hard and soft tissue images generated by CBCT and optical scanning (such as intraoral/facial scanning and scanning of dental casts/impressions). Intraoral scanning is the most accurate method of digitalizing the 3D contour of teeth and gingiva [44]. As a result, the intraoral scanning technique is now gradually replacing the scanning of dental casts or impressions and is also frequently used in CAD/CAM systems. Tooth segmentation is a critical step, which is usually performed manually by trained dental practitioners in a digital workflow to design and fabricate restorations and orthodontic appliances. However, manual segmentation is time-consuming, poorly reproducible, and prone to human error, which may eventually have a negative influence on treatment outcome. Ghazvinian Zanjani et al. and Kim et al. each developed deep learning algorithms for automated tooth segmentation on digitalized 3D dental surface models, resulting in high segmentation precision (Table 2) [24,45]. These algorithms can speed up the digital workflow and reduce human error. Furthermore, Lian et al. proposed an automated tooth labeling algorithm based on intraoral scanning [25]. This algorithm can simplify the process of tooth position rearrangements in orthodontic treatment planning.

Currently, only a few studies have reported on the use of machine learning techniques based on facial scanning (Table 2). Knoops et al. proposed an AI 3D-morphable model based on facial scanning to automatically analyze facial shape features for diagnosis and planning in plastic and reconstructive surgery [26]. In addition, this model is also able to predict patient-specific postoperative outcomes. The proposed model may improve the efficiency and accuracy in diagnosis and treatment planning, and help preoperative communication with the patient. However, this model can only perform an analysis based on 3D facial scanning alone. As facial scanning is unable to acquire volumetric bone data, the information about the underlying skeletal structures cannot be analyzed by this model. An updated model that can perform the analysis simultaneously on facial soft tissue and skeletal structures will be more realistic and probably more effective for clinical use.

Interestingly, facial scanning techniques in combination with AI can also be used for the diagnosis of neurodevelopmental disorders, such as autism spectrum disorder (ASD). Liu et al. explored the possibility of using a machine learning algorithm based on facial scanning to identify ASD and showed promising results with an accuracy of 88.51% (Table 2) [27]. This algorithm could be a supportive tool for the screening and diagnosis of ASD in clinical practice.

4. Limitations of the Included Studies

While the AI models proposed in the included studies have shown promising performance, several limitations are worth noting, which may affect the reliability of the proposed models. First, most of the proposed AI models were developed using a small number of images collected from the same institution over one defined time period (see details in Table 1, Table 2 and Table 3). Additionally, some classification models were only trained and tested using images from subjects with confirmed diseases (Table 3). These limitations might result in a risk of overfitting and an overly optimistic appraisal of the proposed models. In addition, the images used to develop the algorithms were very likely captured using the same device and imaging protocols, resulting in a lack of data heterogeneity (Table 3). This might cause a lack of generalizability and reliability of the proposed models and can result in inferior performance in clinical practice settings due to differences in variables, including devices, imaging protocols, and patient populations [46]. Thus, these models may still need to be verified using adequate heterogeneous data collected from different dental institutions prior to being transferred and implemented into clinical practice.
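
One common way to probe generalizability is to keep all images from one institution out of model development and use them only for testing. The sketch below shows such a split on a hypothetical list of scan records; the field names, identifiers, and institution labels are invented for illustration and do not correspond to any dataset in the included studies.

```python
def split_by_institution(records, held_out):
    """Split records so that one institution is used exclusively for testing."""
    train = [r for r in records if r["institution"] != held_out]
    test = [r for r in records if r["institution"] == held_out]
    if not train or not test:
        raise ValueError("both the development and the test set must be non-empty")
    return train, test


# Hypothetical scan records from three institutions.
records = [
    {"scan_id": "a1", "institution": "clinic_A"},
    {"scan_id": "a2", "institution": "clinic_A"},
    {"scan_id": "b1", "institution": "clinic_B"},
    {"scan_id": "c1", "institution": "clinic_C"},
    {"scan_id": "c2", "institution": "clinic_C"},
]

train, test = split_by_institution(records, held_out="clinic_C")
train_sites = {r["institution"] for r in train}
test_sites = {r["institution"] for r in test}
print(sorted(train_sites), sorted(test_sites))
```

Because no institution contributes to both sets, the test performance reflects images from a device, protocol, and patient population that the model has not seen during development.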

Table 3.

Conclusions and limitations of the included studies.

5. Conclusions

The AI models described in the included studies exhibited various potential applications for 3D imaging in dental medicine, such as automated diagnosis of cystic lesions, localization of anatomical landmarks, and classification/segmentation of teeth (see details in Table 3). The performance of most of the proposed machine learning algorithms was considered satisfactory for clinical use, but with room for improvement. Currently, none of the algorithms described are commercially available. It is expected that the developed AI systems will be available as open-source for others to verify their findings and this will eventually lead to true impact in different dental settings. By such an approach, they will also be more easily accessible and potentially user-friendly for dental practitioners.

To date, most of the proposed machine learning algorithms were designed to address specific clinical issues in various fields of dental medicine. In the future, it is expected that various relevant algorithms will be integrated into one intelligent workflow system specifically designed for dental clinic use [47]. After input of the patient’s demographic data, medical history, clinical findings, 2D/3D diagnostic images, and/or intraoral/facial scans, the system could automatically conduct an overall analysis of the patient. The gathered data might contribute to a better understanding of the health condition of the respective patient and to the development of personalized dental medicine, and subsequently to an individualized diagnosis, recommendations for comprehensive interdisciplinary treatment plans, and prediction of the treatment outcome and follow-up. This information will be provided to assist dental practitioners in making evidence-based decisions for each individual based on a real-time, up-to-date big database. Furthermore, the capability of deep learning to analyze the information in each pixel/voxel unit may help to detect early lesions or unhealthy conditions that cannot be readily seen by the human eye. The future goals of AI development in dental medicine can be expected to include not only improving patient care and radiologists’ work but also surpassing human experts in achieving more timely diagnoses. Long working hours and uncomfortable work environments may affect the performance of radiologists, whereas a more consistent performance of AI systems can be achieved regardless of working hours and conditions.

It is worth noting that although the development of AI in healthcare is vigorously supported by world-leading medical and technological institutions, the current evidence of AI applications for 3D imaging in dental medicine is very limited. The lack of adequate studies on this topic has led to the present methodological approach of reporting findings from the literature rather than conducting a pure systematic review. Thus, selection bias very likely could not be eliminated because of the study design, which is a relevant limitation of the present article. Nevertheless, the results presented might have a positive and stimulating impact on future studies and research in this field and hopefully will result in academic debate.

Author Contributions

Conceptualization, M.M.B.; Methodology, A.W.K.Y. and K.H.; Writing—Original Draft Preparation, K.H. and M.M.B.; Writing—Review and Editing, A.W.K.Y. and R.T.; Supervision, M.M.B.; Project Administration, M.M.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Stone, P.; Brooks, R.; Brynjolfsson, E.; Calo, R.; Etzioni, O.; Hager, G.; Hirschberg, J.; Kalyanakrishnan, S.; Kamar, E.; Kraus, S.; et al. Artificial Intelligence and Life in 2030. One Hundred Year Study on Artificial Intelligence: Report of the 2015–2016 Study Panel, Stanford University, Stanford, CA. Available online: https://ai100.stanford.edu/2016-report (accessed on 12 March 2020).

- Gandomi, A.; Haider, M. Beyond the hype: Big data concepts, methods, and analytics. Int. J. Inf. Manag. 2015, 35, 137–144. [Google Scholar] [CrossRef]

- Jiang, F.; Jiang, Y.; Zhi, H.; Dong, Y.; Li, H.; Ma, S.; Wang, Y.; Dong, Q.; Shen, H.; Wang, Y. Artificial intelligence in healthcare: Past, present and future. Stroke Vasc. Neurol. 2017, 2, 230–243. [Google Scholar] [CrossRef] [PubMed]

- Hamet, P.; Tremblay, J. Artificial intelligence in medicine. Metabolism 2017, 69, S36–S40. [Google Scholar] [CrossRef] [PubMed]

- Fazal, M.I.; Patel, M.E.; Tye, J.; Gupta, Y. The past, present and future role of artificial intelligence in imaging. Eur. J. Radiol. 2018, 105, 246–250. [Google Scholar] [CrossRef] [PubMed]

- Ferizi, U.; Besser, H.; Hysi, P.; Jacobs, J.; Rajapakse, C.S.; Chen, C.; Saha, P.K.; Honig, S.; Chang, G. Artificial intelligence applied to osteoporosis: A performance comparison of machine learning algorithms in predicting fragility fractures from MRI data. J. Magn. Reson. Imaging 2019, 49, 1029–1038. [Google Scholar] [CrossRef] [PubMed]

- Schuhbaeck, A.; Otaki, Y.; Achenbach, S.; Schneider, C.; Slomka, P.; Berman, D.S.; Dey, D. Coronary calcium scoring from contrast coronary CT angiography using a semiautomated standardized method. J. Cardiovasc. Comput. Tomogr. 2015, 9, 446–453. [Google Scholar] [CrossRef]

- Esteva, A.; Kuprel, B.; Novoa, R.A.; Ko, J.; Swetter, S.M.; Blau, H.M.; Thrun, S. Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017, 542, 115–118. [Google Scholar] [CrossRef]

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; van der Laak, J.; van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88. [Google Scholar] [CrossRef]

- Hosny, A.; Parmar, C.; Quackenbush, J.; Schwartz, L.H.; Aerts, H.J.W.L. Artificial intelligence in radiology. Nat. Rev. Cancer 2018, 18, 500–510.

- Hung, K.; Montalvao, C.; Tanaka, R.; Kawai, T.; Bornstein, M.M. The use and performance of artificial intelligence applications in dental and maxillofacial radiology: A systematic review. Dentomaxillofac. Radiol. 2020, 49, 20190107.

- Leite, A.F.; Vasconcelos, K.F.; Willems, H.; Jacobs, R. Radiomics and machine learning in oral healthcare. Proteom. Clin. Appl. 2020, 14, e1900040.

- Pauwels, R.; Araki, K.; Siewerdsen, J.H.; Thongvigitmanee, S.S. Technical aspects of dental CBCT: State of the art. Dentomaxillofac. Radiol. 2015, 44, 20140224.

- Baysal, A.; Sahan, A.O.; Ozturk, M.A.; Uysal, T. Reproducibility and reliability of three-dimensional soft tissue landmark identification using three-dimensional stereophotogrammetry. Angle Orthod. 2016, 86, 1004–1009.

- Hwang, J.J.; Jung, Y.H.; Cho, B.H.; Heo, M.S. An overview of deep learning in the field of dentistry. Imaging Sci. Dent. 2019, 49, 1–7.

- Okada, K.; Rysavy, S.; Flores, A.; Linguraru, M.G. Noninvasive differential diagnosis of dental periapical lesions in cone-beam CT scans. Med. Phys. 2015, 42, 1653–1665.

- Abdolali, F.; Zoroofi, R.A.; Otake, Y.; Sato, Y. Automated classification of maxillofacial cysts in cone beam CT images using contourlet transformation and Spherical Harmonics. Comput. Methods Programs Biomed. 2017, 139, 197–207.

- Yilmaz, E.; Kayikcioglu, T.; Kayipmaz, S. Computer-aided diagnosis of periapical cyst and keratocystic odontogenic tumor on cone beam computed tomography. Comput. Methods Programs Biomed. 2017, 146, 91–100.

- Lee, J.H.; Kim, D.H.; Jeong, S.N. Diagnosis of cystic lesions using panoramic and cone beam computed tomographic images based on deep learning neural network. Oral Dis. 2020, 26, 152–158.

- Ariji, Y.; Fukuda, M.; Kise, Y.; Nozawa, M.; Yanashita, Y.; Fujita, H.; Katsumata, A.; Ariji, E. Contrast-enhanced computed tomography image assessment of cervical lymph node metastasis in patients with oral cancer by using a deep learning system of artificial intelligence. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. 2019, 127, 458–463.

- Montufar, J.; Romero, M.; Scougall-Vilchis, R.J. Automatic 3-dimensional cephalometric landmarking based on active shape models in related projections. Am. J. Orthod. Dentofac. Orthop. 2018, 153, 449–458.

- Montufar, J.; Romero, M.; Scougall-Vilchis, R.J. Hybrid approach for automatic cephalometric landmark annotation on cone-beam computed tomography volumes. Am. J. Orthod. Dentofac. Orthop. 2018, 154, 140–150.

- Minnema, J.; van Eijnatten, M.; Hendriksen, A.A.; Liberton, N.; Pelt, D.M.; Batenburg, K.J.; Forouzanfar, T.; Wolff, J. Segmentation of dental cone-beam CT scans affected by metal artifacts using a mixed-scale dense convolutional neural network. Med. Phys. 2019, 46, 5027–5035.

- Ghazvinian Zanjani, F.; Anssari Moin, D.; Verheij, B.; Claessen, F.; Cherici, T.; Tan, T.; de With, P.H.N. Deep learning approach to semantic segmentation in 3D point cloud intra-oral scans of teeth. MIDL 2019, 102, 557–571.

- Lian, C.; Wang, L.; Wu, T.H.; Wang, F.; Yap, P.T.; Ko, C.C.; Shen, D. Deep multi-scale mesh feature learning for automated labeling of raw dental surfaces from 3D intraoral scanners. IEEE Trans. Med. Imaging 2020, in press.

- Knoops, P.G.M.; Papaioannou, A.; Borghi, A.; Breakey, R.W.F.; Wilson, A.T.; Jeelani, O.; Zafeiriou, S.; Steinbacher, D.; Padwa, B.L.; Dunaway, D.J.; et al. A machine learning framework for automated diagnosis and computer-assisted planning in plastic and reconstructive surgery. Sci. Rep. 2019, 9, 13597.

- Liu, W.; Li, M.; Yi, L. Identifying children with autism spectrum disorder based on their face processing abnormality: A machine learning framework. Autism Res. 2016, 9, 888–898.

- Orhan, K.; Bayrakdar, I.S.; Ezhov, M.; Kravtsov, A.; Ozyurek, T. Evaluation of artificial intelligence for detecting periapical pathosis on cone-beam computed tomography scans. Int. Endod. J. 2020, 53, 680–689.

- Abdolali, F.; Zoroofi, R.A.; Otake, Y.; Sato, Y. A novel image-based retrieval system for characterization of maxillofacial lesions in cone beam CT images. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 785–796.

- Johari, M.; Esmaeili, F.; Andalib, A.; Garjani, S.; Saberkari, H. Detection of vertical root fractures in intact and endodontically treated premolar teeth by designing a probabilistic neural network: An ex vivo study. Dentomaxillofac. Radiol. 2017, 46, 20160107.

- Kann, B.H.; Aneja, S.; Loganadane, G.V.; Kelly, J.R.; Smith, S.M.; Decker, R.H.; Yu, J.B.; Park, H.S.; Yarbrough, W.G.; Malhotra, A.; et al. Pretreatment identification of head and neck cancer nodal metastasis and extranodal extension using deep learning neural networks. Sci. Rep. 2018, 8, 14036.

- Kise, Y.; Ikeda, H.; Fujii, T.; Fukuda, M.; Ariji, Y.; Fujita, H.; Katsumata, A.; Ariji, E. Preliminary study on the application of deep learning system to diagnosis of Sjögren's syndrome on CT images. Dentomaxillofac. Radiol. 2019, 48, 20190019.

- Cheng, E.; Chen, J.; Yang, J.; Deng, H.; Wu, Y.; Megalooikonomou, V.; Gable, B.; Ling, H. Automatic Dent-landmark detection in 3-D CBCT dental volumes. Conf. Proc. IEEE Eng. Med. Biol. Soc. 2011, 2011, 6204–6207.

- Shahidi, S.; Bahrampour, E.; Soltanimehr, E.; Zamani, A.; Oshagh, M.; Moattari, M.; Mehdizadeh, A. The accuracy of a designed software for automated localization of craniofacial landmarks on CBCT images. BMC Med. Imaging 2014, 14, 32.

- Torosdagli, N.; Liberton, D.K.; Verma, P.; Sincan, M.; Lee, J.S.; Bagci, U. Deep geodesic learning for segmentation and anatomical landmarking. IEEE Trans. Med. Imaging 2019, 38, 919–931.

- Park, J.; Hwang, D.; Kim, K.Y.; Kang, S.K.; Kim, Y.K.; Lee, J.S. Computed tomography super-resolution using deep convolutional neural network. Phys. Med. Biol. 2018, 63, 145011.

- ter Haar Romeny, B.M. A deeper understanding of deep learning. In Artificial Intelligence in Medical Imaging: Opportunities, Applications and Risks, 1st ed.; Ranschaert, E.R., Morozov, S., Algra, P.R., Eds.; Springer: Berlin, Germany, 2019; pp. 25–38.

- Miki, Y.; Muramatsu, C.; Hayashi, T.; Zhou, X.; Hara, T.; Katsumata, A.; Fujita, H. Classification of teeth in cone-beam CT using deep convolutional neural network. Comput. Biol. Med. 2017, 80, 24–29.

- Abdolali, F.; Zoroofi, R.A.; Otake, Y.; Sato, Y. Automatic segmentation of maxillofacial cysts in cone beam CT images. Comput. Biol. Med. 2016, 72, 108–119.

- Scarfe, W.C.; Azevedo, B.; Toghyani, S.; Farman, A.G. Cone beam computed tomographic imaging in orthodontics. Aust. Dent. J. 2017, 62, 33–50.

- Bornstein, M.M.; Yeung, W.K.A.; Montalvao, C.; Colsoul, N.; Parker, Q.A.; Jacobs, R. Facts and Fallacies of Radiation Risk in Dental Radiology. Available online: http://facdent.hku.hk/docs/ke/2019_Radiology_KE_booklet_en.pdf (accessed on 12 March 2020).

- Yeung, A.W.K.; Jacobs, R.; Bornstein, M.M. Novel low-dose protocols using cone beam computed tomography in dental medicine: A review focusing on indications, limitations, and future possibilities. Clin. Oral Investig. 2019, 23, 2573–2581.

- Tuzoff, D.V.; Tuzova, L.N.; Bornstein, M.M.; Krasnov, A.S.; Kharchenko, M.A.; Nikolenko, S.I.; Sveshnikov, M.M.; Bednenko, G.B. Tooth detection and numbering in panoramic radiographs using convolutional neural networks. Dentomaxillofac. Radiol. 2019, 48, 20180051.

- Tomita, Y.; Uechi, J.; Konno, M.; Sasamoto, S.; Iijima, M.; Mizoguchi, I. Accuracy of digital models generated by conventional impression/plaster-model methods and intraoral scanning. Dent. Mater. J. 2018, 37, 628–633.

- Kim, T.; Cho, Y.; Kim, D.; Chang, M.; Kim, Y.J. Tooth segmentation of 3D scan data using generative adversarial networks. Appl. Sci. 2020, 10, 490.

- Morey, J.M.; Haney, N.M.; Kim, W. Applications of AI beyond image interpretation. In Artificial Intelligence in Medical Imaging: Opportunities, Applications and Risks, 1st ed.; Ranschaert, E.R., Morozov, S., Algra, P.R., Eds.; Springer: Berlin, Germany, 2019; pp. 129–144.

- Chen, Y.W.; Stanley, K.; Att, W. Artificial intelligence in dentistry: Current applications and future perspectives. Quintessence Int. 2020, 51, 248–257.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).