1. Introduction

The Internet of Things (IoT) is an interconnected scheme that promotes seamless information exchange between devices (e.g., smart home sensors, environmental sensors, automotive and road-side sensors, medical devices, industrial robots and surveillance devices) [1]. The recent emergence of the IoT has significantly increased its use in communities and services around the world: the number of linked IoT devices reached 27 billion in 2017 and is projected to hit about 125 billion in 2030 [2]. IoT devices use different types of services, technologies and protocols. As a result, maintaining future IoT infrastructures will become highly complex, which in turn leaves the system undesirably vulnerable [3,4].

Since IoT devices are used in smart city applications, cyber-attackers can gain unauthorized access to the details of citizens’ everyday activities without the knowledge of the user or administrator, or reconfigure devices to an unsecured setting (e.g., in the Mirai botnet attack [5,6], malware that turns networked Linux devices into remotely controlled bots). In 2019, Symantec recorded a 600% rise in attacks on the IoT platform [7], in which attackers tried to exploit the connected nature of those devices.

Smart city applications pose several security challenges. Firstly, zero-day attacks can occur by exploiting vulnerabilities in the different protocols used in smart city applications. Secondly, is it possible to intelligently identify cyber-attacks from network traffic before they disrupt smart city operations? Thirdly, the IoT devices used in smart cities are typically resource (e.g., memory) constrained, have limited onboard functionality for security operations and send captured data to cloud servers for processing. Existing intrusion detection systems (IDS) do not take IoT devices into account. Combining all these issues, is it possible to design an IDS that is tailored for IoT networks?

The data collected from the IoT system is stored in the cloud computing environment, which has progressively advanced processors and adequate memory resources. However, the volume of data transmitted from the IoT terminal layer to the cloud has increased rapidly with the recent growth in IoT devices, and this causes delay and congestion problems in the cloud. Fog computing is designed as a possible solution to these problems [8]. The fog layer devices can take on a large share of the computing load originally transferred to the cloud. This reduces energy consumption, network traffic and latency and removes the data storage and transmission problem. It also aims to push the computation process near the edge device, enabling a quick response for IoT-based smart city applications. The benefits of cyber-attack detection in the fog layer are twofold [9]. Firstly, the ISP or network administrator can take the necessary steps to prevent large-scale damage if attacks (e.g., infected devices) are identified early in the fog layer. Secondly, it will not interrupt the normal flow of urban life.

In the literature, some techniques (e.g., signature-based techniques) have been proposed to resolve the above-mentioned issues. In the signature-based technique, the current suspicious samples are checked against a collection of previously produced attack signatures [10]. If the signature extraction method is not fully able to capture the distinct features of attacks or attack families, it may miss an attack or produce a false alarm [11]. This technique is not suitable for identifying unknown attacks and suffers from high processing overhead. Machine learning techniques can detect attacks at runtime and take less processing time compared to other techniques.

In this paper, we explore a machine learning-based attack and anomaly detection technique for IoT-based smart city applications. This technique is able to identify infected IoT devices, which is a major challenge in the cloud computing environment [12,13]. The technique is based on deploying a training model in the distributed fog networks, where it can learn intelligently from data near the IoT layer devices and detect attacks and anomalies.

A single classifier is often insufficient to develop an effective IDS, motivating researchers to build ensemble models of classifiers. Ensemble methods take a multitude of models and combine them to generate one final model. Research has demonstrated that an ensemble model performs better than a single classifier [14]. However, many factors (e.g., feature selection and the choice of base classifier) need to be considered carefully to ensure enhanced performance by the ensemble method. The most suitable ensemble techniques are bagging [15], boosting [16] and stacking [17]. In this paper, we use individual classifiers as well as ensemble techniques to achieve better IDS performance in terms of evaluation metrics such as accuracy, precision, recall and F1-score.

The contributions of this paper are summarized as follows:

We explore a machine learning-based attack and anomaly detection technique that analyzes network traffic in distributed fog networks over IoT-based systems.

Existing works have generally used signature-based techniques to detect attacks and anomalies. These techniques suffer from high overheads and are vulnerable to unknown threats. In this paper, we explore the feasibility of ensemble-based learning as compared to single-model classifiers for identifying cyberattacks in IoT-based smart city applications. Further, we consider a multi-class classification setting, as compared to the binary class prediction considered in most relevant works. On top of these, we integrate feature selection and cross-validation, which, even for common machine learning approaches, have not received much attention in the existing literature for this domain.

Extensive evaluation incorporating the above integrations shows that the ensemble of machine learning-based classifiers works better in accurately identifying attacks and their types than single classifiers.

The paper is organized in the following sections.

Section 2 discusses the related works.

Section 3 discusses the IoT-based Smart city framework.

Section 4 presents the proposed anomaly detection model.

Section 5 presents the experimental results.

Section 6 gives concluding remarks.

3. IoT-Based Smart City Framework

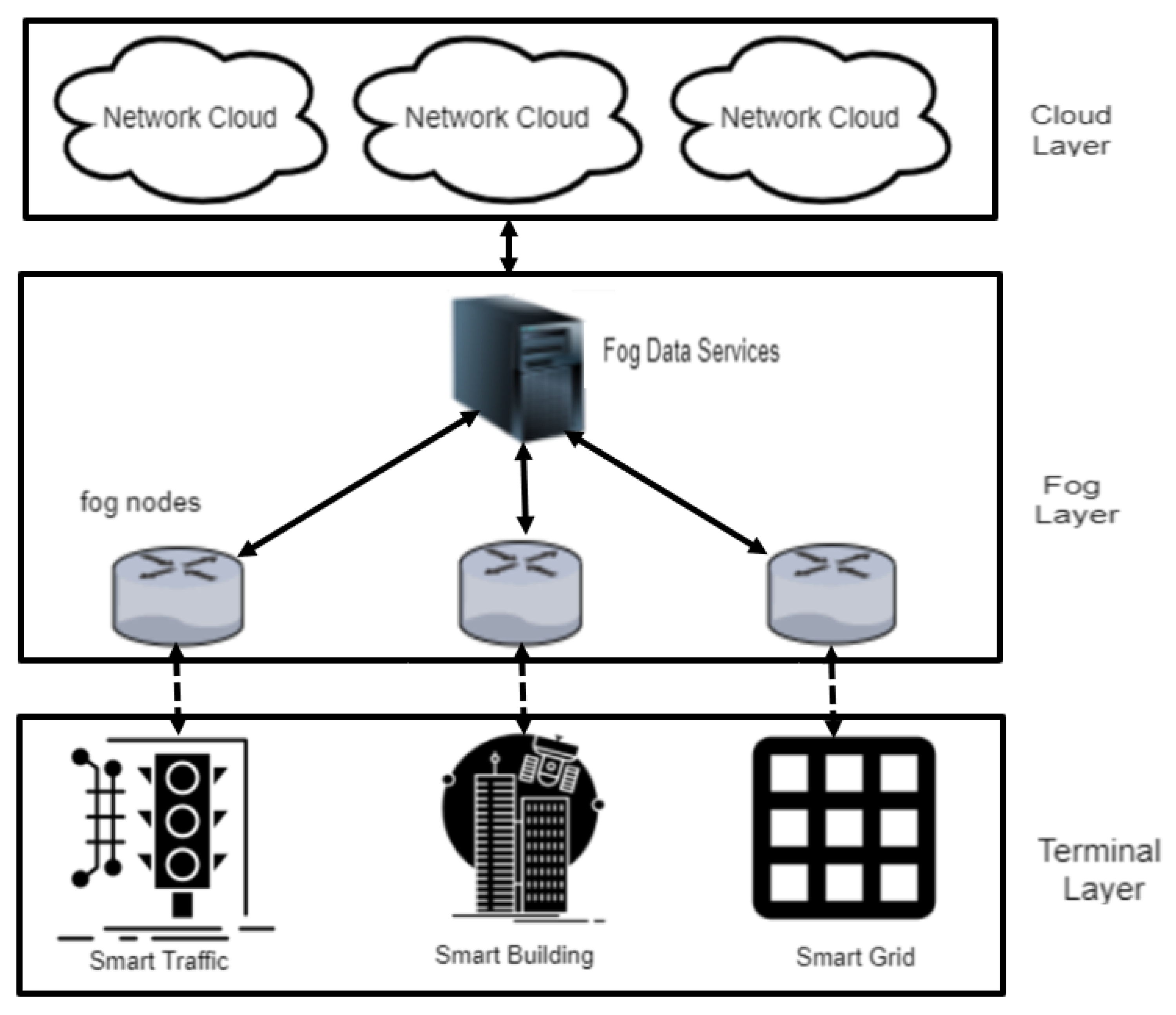

A smart city is an integrated framework in which IoT technology, smart systems and information and communication technology (ICT) are collectively used to enhance the quality and performance of different city services such as transportation, health systems, pollution control and energy distribution. A smart city framework, based on existing literature [26], is shown in Figure 1 and consists of the following three layers: terminal layer, fog layer and cloud layer.

The cloud layer contains storage resources (e.g., servers and virtual machines) to store and maintain a large amount of data. The fog layer acts as a bridge between the terminal layer devices and the cloud layer and is responsible for computation and management at the edges of the network. The fog layer is more effective at identifying the different cyber-attacks than the centralized cloud layer. The terminal layer consists of a set of IoT devices (sensors) that are installed within the city to collect data.
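The three-layer flow described above can be sketched in a few lines of Python. This is only an illustrative toy under stated assumptions, not the framework from the literature: the class names (`TrafficRecord`, `FogNode`, `CloudStore`), the `packets_per_second` feature and the threshold rule in `toy_detector` are all hypothetical stand-ins. A real deployment would place a trained classifier where the toy rule sits.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class TrafficRecord:
    """Hypothetical record: one traffic observation from a terminal-layer device."""
    device_id: str
    features: Dict[str, float]


@dataclass
class CloudStore:
    """Stand-in for the cloud layer: long-term storage only."""
    records: List[TrafficRecord] = field(default_factory=list)

    def store(self, record: TrafficRecord) -> None:
        self.records.append(record)


@dataclass
class FogNode:
    """Stand-in for a fog-layer node sitting between the devices and the cloud."""
    detector: Callable[[TrafficRecord], bool]  # True means "looks malicious"
    cloud: CloudStore
    flagged: List[str] = field(default_factory=list)

    def receive(self, record: TrafficRecord) -> bool:
        if self.detector(record):
            # Suspicious traffic is handled at the edge and not forwarded.
            self.flagged.append(record.device_id)
            return True
        self.cloud.store(record)
        return False


def toy_detector(record: TrafficRecord) -> bool:
    # Assumed rule for illustration only: flag unusually high packet rates.
    return record.features.get("packets_per_second", 0.0) > 1000.0


cloud = CloudStore()
fog = FogNode(detector=toy_detector, cloud=cloud)
fog.receive(TrafficRecord("sensor-1", {"packets_per_second": 12.0}))
fog.receive(TrafficRecord("camera-7", {"packets_per_second": 5400.0}))
print("flagged devices:", fog.flagged)
print("records stored in cloud:", len(cloud.records))
```

The design point this illustrates is that the detection decision is made before data reaches the cloud, so an infected device can be identified without waiting on a round trip to a central server.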

For several reasons, IoT networks and applications are vulnerable to attacks. Firstly, most IoT devices have limited resources (e.g., low processing power and small memory) and as a result suffer from limited processing capability. Secondly, IoT devices are interconnected through different protocols, and the increasing number of IoT devices further causes latency in cloud centers. Thirdly, IoT devices are sometimes unattended, which makes it possible for an intruder to physically access them. Fourthly, most of the data communication is wireless, exposing it to eavesdropping.

As a consequence, conventional IDS often fail to detect IoT attacks accurately [43]. Thus, an attacker can successfully compromise vulnerable IoT devices to connect to smart city routers and devices located at various places such as homes, shopping malls, restaurants, hotels and airports. An attacker who compromises these IoT devices may then obtain sensitive data such as credit card information, video streams and similar personal information.

One of the key requirements of a smart city framework and infrastructure is the ability to deliver services in a sustainable manner to meet the needs of the current and future generations of citizens [44,45]. Some ongoing smart city projects, such as those initiated in Hong Kong and Masdar City in Abu Dhabi [46], have already been criticized because of vulnerable urban development plans and consequent doubts about the sustainability of their services. The management of several facets of sustainability programs inside smart cities is facilitated by the IoT, and this exposes organizations to the risks of failure from network unavailability, security breaches and damage to IoT infrastructure from natural disasters [47]. Further, the sustainable operation of services such as intelligent transportation systems and smart buildings, and the sustainable usage of resources such as water and energy supply, garbage disposal, etc., are highly dependent on the IoT and related cyber-physical systems [48]. Machine learning techniques are used to better manage those smart city services and resources in an autonomous manner [45,49]. In addition, machine learning techniques can effectively detect intrusions and cyberattacks in industrial IoT [50], and can therefore enhance sustainability and ensure uninterrupted services in smart cities by thwarting attacks and intrusions on the respective IoT systems. However, more research is needed on machine learning implementation and model verification in terms of security and privacy [51].

In this paper, we hence explore the feasibility of both ensemble-based learning and single-model classifiers for identifying cyberattacks in IoT-based smart city applications.

5. Experimental Results

Experiments were implemented in the Python programming language with several libraries such as Pandas, NumPy, Matplotlib, sklearn and Keras on an HP (EliteBook) laptop running Windows 10 Education 64-bit, with an Intel(R) Core(TM) i5-8350U CPU @ 1.70 GHz 1.9 GHz and 16 GB RAM.

To test the performance of the base classifiers as well as the ensemble classifiers, 10-fold cross-validation (CV) was used, in which the provided dataset was randomly divided into 10 equal-size subsets. Out of these 10 subsets, nine were used to build the model and the remaining one was used as a test set. The same procedure was repeated ten times to ensure that each subset was used once as the test dataset. Finally, for each classifier, the mean accuracy over the ten folds was recorded.
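The cross-validation procedure described above can be sketched with scikit-learn as follows. This is a minimal illustration rather than the authors' code: the synthetic data from `make_classification` stands in for the real datasets, and the decision tree and the `random_state` values are assumptions chosen only to make the example reproducible.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import KFold, cross_val_score
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for an intrusion dataset (assumption: 3 classes, 20 features).
X, y = make_classification(
    n_samples=500, n_features=20, n_informative=8, n_classes=3, random_state=0
)

# Randomly split the data into 10 folds; each fold serves once as the test set
# while the other nine are used to fit the model.
cv = KFold(n_splits=10, shuffle=True, random_state=0)
clf = DecisionTreeClassifier(random_state=0)

fold_accuracies = cross_val_score(clf, X, y, cv=cv, scoring="accuracy")
mean_accuracy = fold_accuracies.mean()

print("per-fold accuracy:", fold_accuracies.round(3))
print("mean accuracy:", round(mean_accuracy, 3))
```

For imbalanced attack classes, `StratifiedKFold` is a common alternative because it preserves the class proportions in every fold. Whether the original experiments stratified the folds is not stated in the text.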

Figure 3 represents different evaluation metrics for different classifiers on the training and test datasets.

We first show the performance of the different classifiers in terms of accuracy, which is presented in Figure 3a. Here, the task was to classify an unknown sample into one of the ten categories for the UNSW-NB15 dataset and eight categories for the CICIDS2017 dataset, as shown in Table 3. For the UNSW-NB15 and CICIDS2017 datasets, the accuracies of the machine learning algorithms LR, SVM, DT, RF, ANN and KNN on the test dataset are 72.32% and 93.60%, 71.49% and 92%, 80.69% and 99.7%, 81.77% and 99.7%, 78.89% and 94.2% and 78.23% and 99.7%, respectively. The accuracies of the ensemble methods bagging (RF as the base-learner), boosting (DT as the base-learner) and stacking (RF and ANN as base-learners and DT as meta-learner) are 82.36% and 99.7%, 83.30% and 99.8% and 83.84% and 99.9%, respectively. Among the individual algorithms, SVM shows the lowest accuracy, while DT and RF show better results than the others. On the other hand, the stacking ensemble, constructed from base- and meta-classifiers, shows better performance than the others.
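The three ensemble configurations named above (bagging with RF as base-learner, boosting with DT as base-learner, and stacking with RF and ANN as base-learners and DT as meta-learner) can be sketched with scikit-learn as below. This is a hedged illustration, not the authors' implementation: the synthetic data, all hyperparameters, the use of AdaBoost as the boosting algorithm, and the use of `MLPClassifier` as a stand-in for the ANN are assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import (
    AdaBoostClassifier,
    BaggingClassifier,
    RandomForestClassifier,
    StackingClassifier,
)
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in data (assumption); the paper uses UNSW-NB15 and CICIDS2017.
X, y = make_classification(
    n_samples=400, n_features=20, n_informative=8, n_classes=3, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0
)

models = {
    # Bagging with a random forest as the base-learner.
    "bagging": BaggingClassifier(
        RandomForestClassifier(n_estimators=10, random_state=0),
        n_estimators=5,
        random_state=0,
    ),
    # Boosting (AdaBoost, an assumed choice) with a decision tree base-learner.
    "boosting": AdaBoostClassifier(
        DecisionTreeClassifier(max_depth=3, random_state=0),
        n_estimators=30,
        random_state=0,
    ),
    # Stacking: RF and a small neural network feed a decision tree meta-learner.
    "stacking": StackingClassifier(
        estimators=[
            ("rf", RandomForestClassifier(n_estimators=20, random_state=0)),
            ("ann", MLPClassifier(hidden_layer_sizes=(32,), max_iter=300, random_state=0)),
        ],
        final_estimator=DecisionTreeClassifier(max_depth=5, random_state=0),
        cv=3,
    ),
}

scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    scores[name] = model.score(X_test, y_test)  # accuracy on the held-out split
    print(f"{name}: accuracy = {scores[name]:.3f}")
```

On synthetic data the ranking of the three ensembles is not meaningful. The sketch only shows how base-learners and a meta-learner are wired together.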

We show the performance in terms of precision in Figure 3b. The precision values for LR, SVM, DT, RF, ANN and KNN on the test dataset are 72% and 92%, 70% and 94%, 81% and 99.8%, 82% and 99.8%, 78% and 94.5% and 79% and 99.7%, respectively. The precision values of the ensemble methods bagging, boosting and stacking are 82% and 99.7%, 83% and 99.8% and 83% and 99.9%, respectively. Similar to the accuracy metric, SVM shows the lowest precision for the UNSW-NB15 dataset. However, LR shows the lowest precision for CICIDS2017, and RF shows better results than the others. On the other hand, the stacking ensemble method performs better than the others.

The performance in terms of recall is shown in Figure 3c. The recall values for LR, SVM, DT, RF, ANN and KNN on the test dataset are 72% and 94%, 71% and 92%, 81% and 98%, 82% and 99.8%, 79% and 94.3% and 78% and 99.7%, respectively. The recall values of the ensemble methods bagging, boosting and stacking are 82% and 99.8%, 83% and 99.9% and 83% and 99.9%, respectively. Once again, ensemble techniques yield better performance than the base classifiers, and the stacking ensemble method outperforms the others. Finally, we show the performance in terms of F1-score in Figure 3d. The F1-scores for LR, SVM, DT, RF, ANN and KNN on the test dataset are 71% and 92%, 70% and 94%, 80% and 99.7%, 81% and 99.7%, 78% and 94% and 78% and 99.7%, respectively. The F1-scores of the ensemble methods bagging, boosting and stacking are 81% and 99.8%, 81% and 99.9% and 83% and 99.9%, respectively. Once again, ensemble techniques show better performance than the base classifiers, and the stacking ensemble method outperforms the other classifiers considered in this research.

The results show that the ensemble of learning models provides better performance than the single-model classifiers on both test datasets. This implies that, while existing works on these data have focused on single learning models, ensemble classifiers such as stacking represent a promising approach for this domain.

We also examined how the classifiers perform in a multi-class classification context. More precisely, we considered each type of attack as a separate class and then assessed the classifiers’ ability to distinguish the attack from a normal situation. The results are shown in Table 5 and Table 6 for the UNSW-NB15 and CICIDS2017 datasets, respectively. The results illustrate that, for various types of attack, DT and RF perform better than the other algorithms. Moreover, the stacking ensemble technique shows significant improvement over bagging and boosting in some cases. For example, on the UNSW-NB15 dataset, for the DoS attack, stacking yields an F1-score of 0.45 vs. 0.24 for bagging and boosting, while, for the Worm attack, these scores are 0.57, 0.37 and 0.33, respectively. For most of the other types of attack, stacking attains an F1-score above 0.75. On CICIDS2017, for the Bot attack, the stacking ensemble achieved 0.950 vs. 0.898 and 0.942 for bagging and boosting.
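Per-attack F1-scores of the kind reported in these tables can be computed by asking scikit-learn for one score per class instead of a single average. The sketch below uses a tiny hand-made label vector as an assumption. The class names echo the attack types mentioned in the text, but the values are illustrative and are not taken from the paper's results.

```python
from sklearn.metrics import f1_score

# Toy ground truth and predictions (illustrative only, not the paper's data).
y_true = ["Normal", "Normal", "DoS", "DoS", "Worm", "Normal", "DoS", "Worm"]
y_pred = ["Normal", "Normal", "DoS", "Normal", "Worm", "Normal", "DoS", "DoS"]
labels = ["Normal", "DoS", "Worm"]

# average=None returns one F1-score per class, in the order given by `labels`.
per_class_f1 = f1_score(y_true, y_pred, labels=labels, average=None)

# The weighted average weights each class's F1 by its number of true samples.
weighted_f1 = f1_score(y_true, y_pred, labels=labels, average="weighted")

for label, score in zip(labels, per_class_f1):
    print(f"{label}: F1 = {score:.3f}")
print(f"weighted F1 = {weighted_f1:.3f}")
```

Reporting per-class scores alongside the aggregate matters here because rare attack classes can score poorly even when the overall figure looks high.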

Figure 4 shows the Receiver Operating Characteristic (ROC) curves for the base and ensemble classifiers on the UNSW-NB15 dataset. We found that, among the base classifiers, ANN shows better performance. On the other hand, among the ensemble techniques, boosting and stacking demonstrate almost the same results.
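For a multi-class problem, one common way to obtain ROC curves is the one-vs-rest scheme, in which each class in turn is treated as the positive class. The text does not state which scheme was used for Figure 4, so the following is only a sketch under that assumption, with synthetic data and a random forest as placeholder choices.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import auc, roc_curve
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import label_binarize

# Synthetic three-class data (assumption) in place of the real dataset.
X, y = make_classification(
    n_samples=600, n_features=20, n_informative=8, n_classes=3, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0
)

clf = RandomForestClassifier(n_estimators=50, random_state=0).fit(X_train, y_train)
proba = clf.predict_proba(X_test)  # one probability column per class

# One-vs-rest: binarize the labels so each column marks membership of one class.
y_test_bin = label_binarize(y_test, classes=clf.classes_)

roc_auc = {}
for i, cls in enumerate(clf.classes_):
    fpr, tpr, _ = roc_curve(y_test_bin[:, i], proba[:, i])
    roc_auc[int(cls)] = auc(fpr, tpr)  # area under this class's ROC curve
    print(f"class {cls}: AUC = {roc_auc[int(cls)]:.3f}")
```

The `fpr` and `tpr` arrays from each iteration are what would be passed to a plotting library to draw the curve for that class.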

Table 7 and Table 8 show a comparison of the multi-class classification performance attained by ensemble approaches to a recent work [29] on the UNSW-NB15 and CICIDS2017 datasets, respectively. The accuracies attained in our work using LR, SVM, DT, RF and KNN are 72.32% and 93.6%, 71.49% and 92%, 80.69% and 99.7%, 81.77% and 99.7% and 78.23% and 99.6%, respectively, while those in [29] are, respectively, 53.8% and 87%, 58.1% and 79.9%, 73.3% and 94%, 75.5% and 94.4% and 62.2% and 90.0%, albeit with some differences in the way the datasets were used. In [29], the researchers experimented with the boosting ensemble technique and achieved accuracies of 60.8% and 64.1%, which are significantly lower than the accuracies attained in our work (83.3% and 99.9%) using the stacking ensemble.

The results of the individual classifiers as well as the ensemble methods for binary classification are shown in Table 9 and Table 10 for the UNSW-NB15 and CICIDS2017 datasets, respectively. We used the same metrics as for the multi-class classification. The highest accuracies (95.45% and 99.7%) and F1-scores (95% and 99.8%) achieved by an individual classifier were obtained with the RF classifier; these values rose to 96.83% and 99.9% (accuracy) and 97% and 99.9% (F1-score), respectively, when the stacking ensemble technique was used.

A possible reason for the proposed model’s significantly better performance compared to Vinayakumar et al. [29] is that they did not perform any feature selection: the existing work experimented with all features of both datasets. In contrast, our proposed model applies an information gain-based feature selection technique and uses only the 25 most important features, ranked by their information gain ratio.
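Selecting the top 25 features by an information-theoretic score can be sketched with scikit-learn as below. Note the swap: scikit-learn does not ship an information gain ratio scorer, so this example uses `mutual_info_classif` (an estimate of mutual information, closely related to information gain) as a stand-in. The synthetic 40-feature dataset is likewise an assumption, and only the value k = 25 comes from the text.

```python
from functools import partial

from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectKBest, mutual_info_classif

# Synthetic data with 40 candidate features (assumption).
X, y = make_classification(
    n_samples=500, n_features=40, n_informative=10, n_classes=3, random_state=0
)

# Fix the random_state of the scorer so the ranking is reproducible.
score_func = partial(mutual_info_classif, random_state=0)

# Keep the 25 features with the highest estimated mutual information with y.
selector = SelectKBest(score_func=score_func, k=25)
X_selected = selector.fit_transform(X, y)
selected_idx = selector.get_support(indices=True)

print("shape before:", X.shape, "after:", X_selected.shape)
print("selected feature indices:", selected_idx.tolist())
```

When combined with cross-validation, the selector is normally fitted inside each training fold (for example via a `Pipeline`), so that the test fold does not influence which features are kept.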

Notably, a question may be raised as to the complexity of using ensemble models as compared to a single classifier. With technological advances, however, processing units such as mobile devices are becoming increasingly faster and memory resources increasingly cheaper, which is potentially one reason why fog computing has seen the application of a wide range of algorithms, including ensemble techniques [61,62]. There is also active investigation into the efficient allocation of resources in fog computing [63]. Further, research has devised a fog system architecture that can exploit ensemble learning without substantially increasing the latency of the system [62]. Arguably, the stacking approach considered in this article can be rolled out using such an architecture and an efficient resource allocation mechanism. Thus, despite some increase in complexity, the finding that stacking can outperform single classifiers for cyberattack detection in IoT smart city applications has notable value, especially given the high cost associated with missing a cyberattack.

For example, the model building times over ten runs for each of the base classifiers (DT, RF and ANN) and the stacking ensemble technique on both datasets are shown in Table 11. The table shows that the model building times of the classifiers are 1.4, 2.17, 6.8 and 25.6 s for the UNSW-NB15 dataset and 5.3, 4.35, 7.4 and 27.09 s for the CICIDS2017 dataset, respectively; DT thus takes the least time to build the model for UNSW-NB15, while RF is the fastest for CICIDS2017. On the other hand, since the stacking ensemble model involves more complexity by combining several base classifiers, it takes longer to build the model for both datasets. The time taken to test the model on a single sample by the classifiers is, respectively, 0.48, 2.53, 1.91 and 5.70

s for UNSW-NB15 and 0.42, 1.57, 1.80 and 4.19

s for CICIDS2017, suggesting that DT and RF take the least amount of time compared to others in both datasets. Thus, the model takes very little time, in the range of

s, to test whether an activity is malicious or not.
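Model building time and per-sample test time of this kind can be measured with a simple wall-clock timer. The sketch below is an assumption-laden illustration: it times a decision tree on synthetic data, uses `time.perf_counter`, and averages over a hypothetical `n_repeats` predictions. It does not reproduce the measurement protocol or the figures in Table 11.

```python
import time

from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier

# Synthetic data with 25 features (assumption), standing in for a real dataset.
X, y = make_classification(
    n_samples=2000, n_features=25, n_informative=10, n_classes=3, random_state=0
)
clf = DecisionTreeClassifier(random_state=0)

# Model building time: wall-clock duration of a single fit.
start = time.perf_counter()
clf.fit(X, y)
build_seconds = time.perf_counter() - start

# Per-sample test time: average over repeated single-sample predictions
# to smooth out timer noise.
sample = X[:1]
n_repeats = 200
start = time.perf_counter()
for _ in range(n_repeats):
    clf.predict(sample)
per_sample_seconds = (time.perf_counter() - start) / n_repeats

print(f"build time: {build_seconds:.4f} s")
print(f"test time per sample: {per_sample_seconds * 1e6:.1f} microseconds")
```

Absolute numbers depend heavily on hardware and library versions, so such timings are mainly useful for comparing classifiers measured on the same machine.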