The Bibliometric Literature on Scopus and WoS: The Medicine and Environmental Sciences Categories as Case of Study

Abstract

1. Introduction

- Bibliometric performance studies on authorship and production: they focus on analyzing the profiles of authors according to elements such as their affiliation, country, and the production of articles, examining which are the most cited or relevant;

- Bibliometric studies on topics: they focus on the main topics addressed, as well as the relationships among them and their evolution within a specific field;

- Studies on research methodologies: they focus on the research methods and techniques used to develop the research papers published in the journals.

2. Materials and Methods

- Number of publications in WoS and Scopus;

- Number of citations in WoS and Scopus;

- Quartile in JCR and SJR;

- Journal Impact Factor (JCR), which takes into account citations, articles, reviews, and proceedings papers [36];

- 5-Year Journal Impact Factor JCR, available from 2007 onward [36];

- Impact SJR [37];

- CiteScore [35].
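
As a rough illustration of how the two impact factor entries in the list relate, the sketch below computes a citations-per-item ratio over a 2-year and a 5-year window. The function name and all counts are hypothetical; the windows follow the commonly documented JCR definitions, and the exact rules for which items count as citable are those set out in the JCR documentation [36].

```python
def impact_factor(citations_by_pub_year, items_by_pub_year, jcr_year, window=2):
    """Citations received in `jcr_year` by items published in the preceding
    `window` years, divided by the citable items published in those years."""
    years = range(jcr_year - window, jcr_year)
    citations = sum(citations_by_pub_year.get(y, 0) for y in years)
    items = sum(items_by_pub_year.get(y, 0) for y in years)
    if items == 0:
        raise ValueError("no citable items in the window")
    return citations / items


# Hypothetical journal: citations received in 2020, keyed by the
# publication year of the cited item (invented numbers).
citations_2020 = {2015: 40, 2016: 60, 2017: 80, 2018: 120, 2019: 90}
citable_items = {2015: 50, 2016: 50, 2017: 50, 2018: 60, 2019: 40}

jif_2yr = impact_factor(citations_2020, citable_items, 2020)            # (120 + 90) / (60 + 40)
jif_5yr = impact_factor(citations_2020, citable_items, 2020, window=5)  # 390 / 250
print(f"2-year: {jif_2yr:.2f}, 5-year: {jif_5yr:.2f}")
```

The only difference between the two indicators in this sketch is the `window` argument, which is why the 5-year value can sit above or below the 2-year value depending on how quickly a journal's papers accumulate citations.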

3. Results of Bibliometric Literature on Scopus and WoS

3.1. Trend in Scientific Production

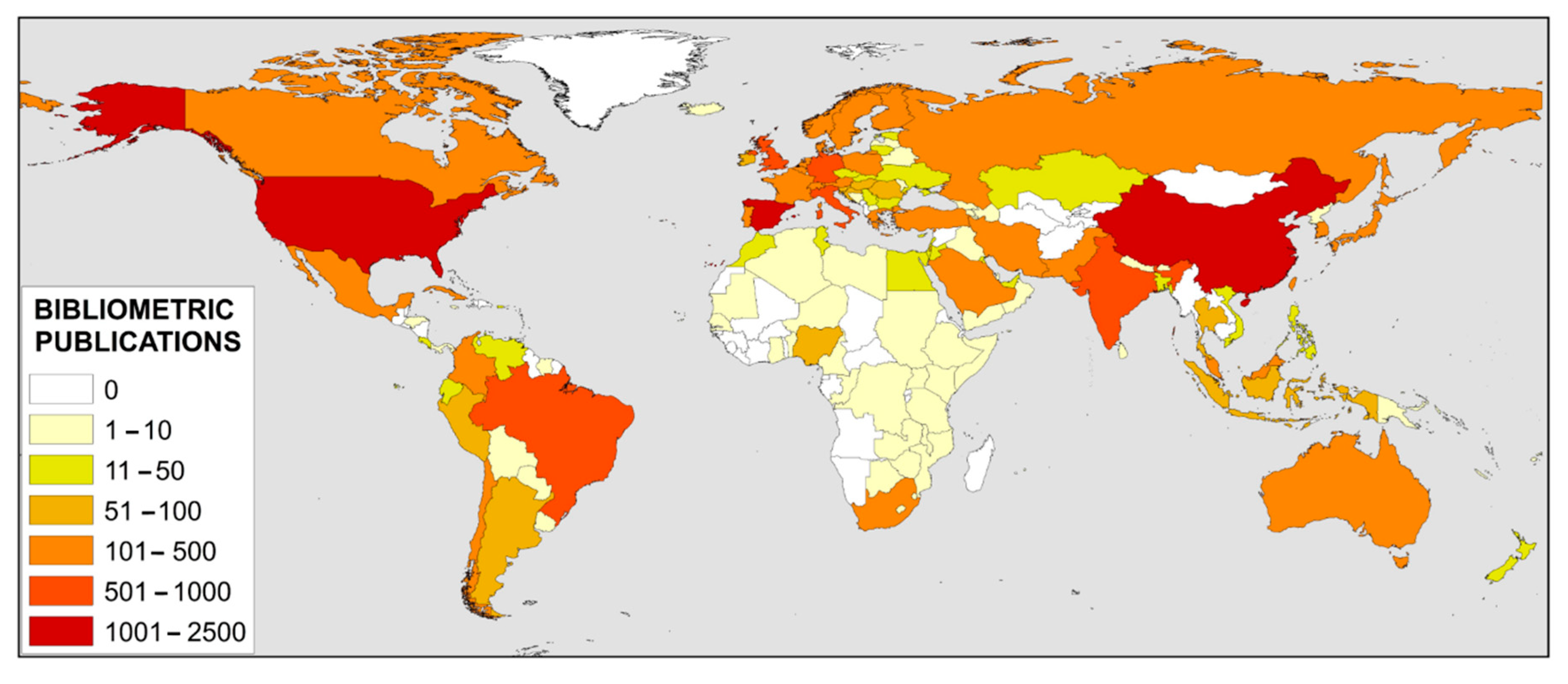

3.1.1. Countries

3.1.2. Institutions According to Scopus and WoS

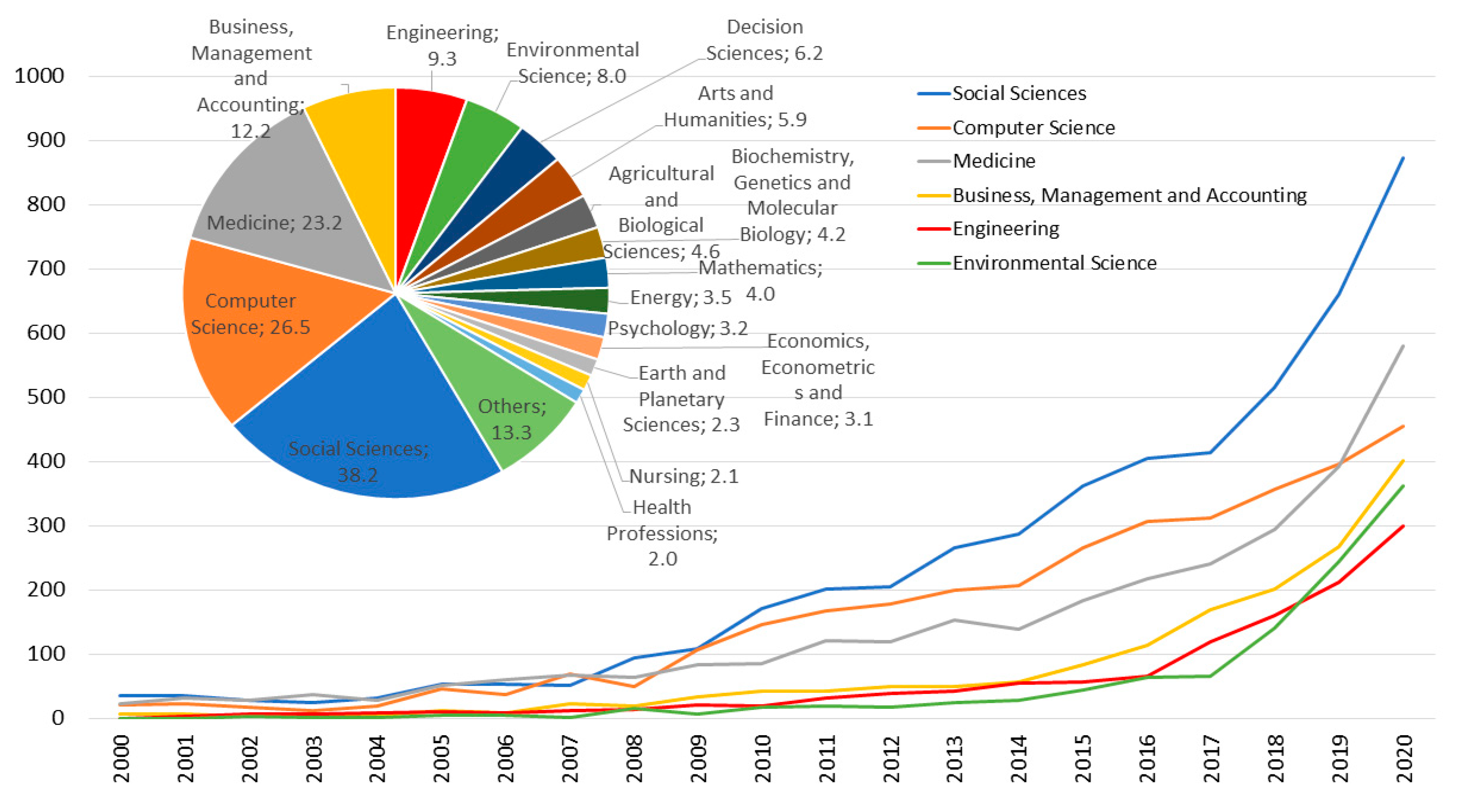

3.2. Scientific Areas of Indexing

3.2.1. Scopus

Subject Area

SciVal

3.2.2. WoS

Categories

Incites

3.3. Source (Journal)

3.4. CNCI vs. FWCI

4. The Medicine and Environmental Sciences Categories as Case of Study

4.1. The Medicine Category

4.1.1. Countries and Affiliations

4.1.2. Keywords

4.1.3. Journals

4.2. The Environmental Sciences Category

4.2.1. Countries and Affiliations

4.2.2. Keywords

4.2.3. Journals

5. Independent Cluster Analysis of Bibliometric Publications

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Cole, F.J.; Eales, N.B. The history of comparative anatomy: Part I—A statistical analysis of the literature. Sci. Prog. 1917, 11, 578–596.

- Lotka, A.J. The frequency distribution of scientific productivity. J. Wash. Acad. Sci. 1926, 16, 317–323.

- Price, D.J. Little Science, Big Science; Columbia Univ. Press: New York, NY, USA, 1963.

- Gross, P.L.; Gross, E.M. College libraries and chemical education. Science 1927, 66, 385–389.

- Bradford, S.C. Sources of information on specific subjects. Engineering 1934, 137, 85–86.

- Cason, H.; Lubotsky, M. The influence and dependence of psychological journals on each other. Psychol. Bull. 1936, 33, 95.

- Garfield, E. Citation indexes for science. Science 1955, 122, 108–111.

- Garfield, E. Is citation analysis a legitimate evaluation tool? Scientometrics 1979, 1, 359–375.

- Pinski, G.; Narin, F. Citation influence for journal aggregates of scientific publications: Theory, with application to the literature of physics. Inf. Process. Manag. 1976, 12, 297–312.

- Tomer, C. A statistical assessment of two measures of citation: The impact factor and the immediacy index. Inf. Process. Manag. 1986, 22, 251–258.

- Tijssen, R.J.; Visser, M.S.; Van Leeuwen, T.N. Searching for scientific excellence: Scientometric measurements and citation analyses of national research systems. In Proceedings of the 8th International Conference on Scientometrics and Informetrics Proceedings-ISSI-2001, Sydney, Australia, 16–20 July 2001; pp. 675–689.

- Lariviere, V.; Sugimoto, C.R. The journal impact factor: A brief history, critique, and discussion of adverse effects. In Springer Handbook of Science and Technology Indicators; Springer: Cham, Switzerland, 2019; pp. 3–24.

- González-Pereira, B.; Guerrero-Bote, V.P.; Moya-Anegón, F. A new approach to the metric of journals’ scientific prestige: The SJR indicator. J. Informetr. 2010, 4, 379–391.

- Zijlstra, H.; McCullough, R. CiteScore: A New Metric to Help You Track Journal Performance and Make Decisions; Elsevier: Amsterdam, The Netherlands, 2016; Available online: https://www.elsevier.com/editors-update/story/journal-metrics/citescore-a-new-metric-to-help-you-choose-the-right-journal (accessed on 18 February 2021).

- Bergstrom, C.T.; West, J. Comparing Impact Factor and Scopus CiteScore. Eigenfactor.org. 2016. Available online: http://eigenfactor.org/projects/posts/citescore.php (accessed on 18 February 2021).

- Davis, P. Changing Journal Impact Factor Rules Creates Unfair Playing Field For Some. Available online: https://scholarlykitchen.sspnet.org/2021/02/01/unfair-playing-field/ (accessed on 18 February 2021).

- Liu, W. A matter of time: Publication dates in Web of Science Core Collection. Scientometrics 2021, 126, 849–857.

- McVeigh, M.; Quaderi, N. Adding Early Access Content to Journal Citation Reports Choosing a Prospective Model. Available online: https://clarivate.com/webofsciencegroup/article/adding-early-access-content-to-journal-citation-reports-choosing-a-prospective-model/ (accessed on 18 February 2021).

- López-Robles, J.R.; Guallar, J.; Otegi-Olaso, J.R.; Gamboa-Rosales, N.K. El profesional de la información (EPI): Bibliometric and thematic analysis (2006–2017). El Profesional de la Información 2019, 28, e280417.

- Pritchard, A. Statistical bibliography or bibliometrics. J. Doc. 1969, 25, 348–349.

- Broadus, R.N. Toward a definition of “bibliometrics”. Scientometrics 1987, 12, 373–379.

- Moed, H.F. Bibliometric measurement of research performance and Price’s theory of differences among the sciences. Scientometrics 1989, 15, 473–483.

- White, H.D.; McCain, K.W. Bibliometrics. Annu. Rev. Inf. Sci. Technol. 1989, 24, 119–186.

- Spinak, E. Diccionario Enciclopédico de Bibliometría, Cienciometría e Informetría; UNESCO: Caracas, Venezuela, 1996.

- Garcia-Zorita, C.; Rousseau, R.; Marugan-Lazaro, S.; Sanz-Casado, E. Ranking dynamics and volatility. J. Informetr. 2018, 12, 567–578.

- Web of Science Group. 2021. Available online: https://clarivate.com/webofsciencegroup/ (accessed on 18 February 2021).

- Traag, V.A.; Waltman, L.; Van Eck, N.J. From Louvain to Leiden: Guaranteeing well-connected communities. Sci. Rep. 2019, 9, 1–12.

- Elsevier. 2021. Available online: https://www.elsevier.com/es-es (accessed on 18 February 2021).

- Hicks, D.; Wouters, P.; Waltman, L.; De Rijcke, S.; Rafols, I. Bibliometrics: The Leiden Manifesto for research metrics. Nat. News 2015, 520, 429.

- American Society for Cell Biology. San Francisco Declaration on Research Assessment. 2012. Available online: https://sfdora.org/read/ (accessed on 18 February 2021).

- Liu, W. The data source of this study is Web of Science Core Collection? Not enough. Scientometrics 2019, 121, 1815–1824.

- Montoya, F.G.; Alcayde, A.; Baños, R.; Manzano-Agugliaro, F. A fast method for identifying worldwide scientific collaborations using the Scopus database. Telemat. Inform. 2018, 35, 168–185.

- Klavans, R.; Boyack, K.W. Research portfolio analysis and topic prominence. J. Informetr. 2017, 11, 1158–1174.

- Dresbeck, R. SciVal. J. Med. Libr. Assoc. 2015, 103, 164.

- Scopus. How Are CiteScore Metrics Used in Scopus? 2021. Available online: https://service.elsevier.com/app/answers/detail/a_id/14880/supporthub/scopus/ (accessed on 18 February 2021).

- Clarivate. Journal Citation Reports Help. 2021. Available online: http://jcr.help.clarivate.com/Content/home.htm (accessed on 18 February 2021).

- Scimago Journal Country Rank. SJR Scimago Journal Country Rank. 2021. Available online: https://www.scimagojr.com/ (accessed on 18 February 2021).

- Scopus. What Is Field-Weighted Citation Impact (FWCI)? 2021. Available online: https://service.elsevier.com/app/answers/detail/a_id/14894/supporthub/scopus/kw/FWCI/ (accessed on 18 February 2021).

- Van Eck, N.J.; Waltman, L. Software survey: VOSviewer, a computer program for bibliometric mapping. Scientometrics 2010, 84, 523–538.

- Fahimnia, B.; Sarkis, J.; Davarzani, H. Green supply chain management: A review and bibliometric analysis. Int. J. Prod. Econ. 2015, 162, 101–114.

- Zhu, J.; Liu, W. Comparing like with like: China ranks first in SCI-indexed research articles since 2018. Scientometrics 2020, 124, 1691–1700.

- Zhang, P.; Yan, F.; Du, C. A comprehensive analysis of energy management strategies for hybrid electric vehicles based on bibliometrics. Renew. Sustain. Energy Rev. 2015, 48, 88–104.

- Ramos-Rodríguez, A.R.; Ruíz-Navarro, J. Changes in the intellectual structure of strategic management research: A bibliometric study of the Strategic Management Journal, 1980–2000. Strateg. Manag. J. 2004, 25, 981–1004.

- Heradio, R.; De La Torre, L.; Galan, D.; Cabrerizo, F.J.; Herrera-Viedma, E.; Dormido, S. Virtual and remote labs in education: A bibliometric analysis. Comput. Educ. 2016, 98, 14–38.

- Merigó, J.M.; Mas-Tur, A.; Roig-Tierno, N.; Ribeiro-Soriano, D. A bibliometric overview of the Journal of Business Research between 1973 and 2014. J. Bus. Res. 2015, 68, 2645–2653.

- Costas, R.; Bordons, M. The h-index: Advantages, limitations and its relation with other bibliometric indicators at the micro level. J. Informetr. 2007, 1, 193–203.

- Liu, X.; Zhang, L.; Hong, S. Global biodiversity research during 1900–2009: A bibliometric analysis. Biodivers. Conserv. 2011, 20, 807–826.

- CWTS. The Centre for Science and Technology Studies (CWTS). 2021. Available online: https://www.cwts.nl/about-cwts (accessed on 18 February 2021).

- Hirsch, J.E. An index to quantify an individual’s scientific research output. Proc. Natl. Acad. Sci. USA 2005, 102, 16569–16572.

- Bartneck, C.; Kokkelmans, S. Detecting h-index manipulation through self-citation analysis. Scientometrics 2011, 87, 85–98.

- Schreiber, M. A modification of the h-index: The hm-index accounts for multi-authored manuscripts. J. Informetr. 2008, 2, 211–216.

- Opthof, T. Inflation of impact factors by journal self-citation in cardiovascular science. Neth. Heart J. 2013, 21, 163–165.

- Jeong, Y.K.; Song, M.; Ding, Y. Content-based author co-citation analysis. J. Informetr. 2014, 8, 197–211.

- Liu, W.; Gu, M.; Hu, G.; Li, C.; Liao, H.; Tang, L.; Shapira, P. Profile of developments in biomass-based bioenergy research: A 20-year perspective. Scientometrics 2014, 99, 507–521.

- Salmerón-Manzano, E.; Garrido-Cardenas, J.A.; Manzano-Agugliaro, F. Worldwide research trends on medicinal plants. Int. J. Environ. Res. Public Health 2020, 17, 3376.

- Aznar-Sánchez, J.A.; Piquer-Rodríguez, M.; Velasco-Muñoz, J.F.; Manzano-Agugliaro, F. Worldwide research trends on sustainable land use in agriculture. Land Use Policy 2019, 87, 104069.

- Montoya, F.G.; Baños, R.; Meroño, J.E.; Manzano-Agugliaro, F. The research of water use in Spain. J. Clean. Prod. 2016, 112, 4719–4732.

- Garrido-Cardenas, J.A.; Cebrián-Carmona, J.; González-Cerón, L.; Manzano-Agugliaro, F.; Mesa-Valle, C. Analysis of global research on malaria and Plasmodium vivax. Int. J. Environ. Res. Public Health 2019, 16, 1928.

- Garrido-Cardenas, J.A.; Esteban-García, B.; Agüera, A.; Sánchez-Pérez, J.A.; Manzano-Agugliaro, F. Wastewater treatment by advanced oxidation process and their worldwide research trends. Int. J. Environ. Res. Public Health 2020, 17, 170.

- Aznar-Sánchez, J.A.; Velasco-Muñoz, J.F.; Belmonte-Ureña, L.J.; Manzano-Agugliaro, F. Innovation and technology for sustainable mining activity: A worldwide research assessment. J. Clean. Prod. 2019, 221, 38–54.

- Blanco-Mesa, F.; Merigo, J.M.; Gil-Lafuente, A.M. Fuzzy decision making: A bibliometric-based review. J. Intell. Fuzzy Syst. 2017, 32, 2033–2050.

- Montoya, F.G.; Baños, R.; Alcayde, A.; Montoya, M.G.; Manzano-Agugliaro, F. Power quality: Scientific collaboration networks and research trends. Energies 2018, 11, 2067.

- Garfield, E. The history and meaning of the journal impact factor. JAMA 2006, 295, 90–93.

- Ioannidis, J.P. Contradicted and initially stronger effects in highly cited clinical research. JAMA 2005, 294, 218–228.

- Abraham, C.; Michie, S. A taxonomy of behavior change techniques used in interventions. Health Psychol. 2008, 27, 379.

- Vilagut, G.; Ferrer, M.; Rajmil, L.; Rebollo, P.; Permanyer-Miralda, G.; Quintana, J.M.; Santed, R.; Valderas, J.M.; Ribera, A.; Domingo-Salvany, A.; et al. The Spanish version of the Short Form 36 Health Survey: A decade of experience and new developments. Gac. Sanit. 2005, 19, 135–150.

- Valderrama-Zurián, J.C.; González-Alcaide, G.; Valderrama-Zurián, F.J.; Aleixandre-Benavent, R.; Miguel-Dasit, A. Coauthorship networks and institutional collaboration in Revista Española de Cardiología publications. Rev. Española Cardiol. 2007, 60, 117–130.

- Benavent, R.A.; Valderrama-Zurian, J.C.; Gomez, M.C.; Melendez, R.S.; Molina, C.N. Impact factor of the Spanish medical journals. Med. Clin. 2004, 123, 697–701.

- Ramos, J.M.; González-Alcaide, G.; Bolaños-Pizarro, M. Bibliometric analysis of leishmaniasis research in Medline (1945–2010). Parasites Vectors 2013, 6, 1–14.

- Austin, P.C. A critical appraisal of propensity-score matching in the medical literature between 1996 and 2003. Stat. Med. 2008, 27, 2037–2049.

- De Jong, M.; Joss, S.; Schraven, D.; Zhan, C.; Weijnen, M. Sustainable–smart–resilient–low carbon–eco–knowledge cities; making sense of a multitude of concepts promoting sustainable urbanization. J. Clean. Prod. 2015, 109, 25–38.

- Ren, Y.; Yu, M.; Wu, C.; Wang, Q.; Gao, M.; Huang, Q.; Liu, Y. A comprehensive review on food waste anaerobic digestion: Research updates and tendencies. Bioresour. Technol. 2018, 247, 1069–1076.

- Ferretti, F.; Saltelli, A.; Tarantola, S. Trends in sensitivity analysis practice in the last decade. Sci. Total Environ. 2016, 568, 666–670.

- Albort-Morant, G.; Henseler, J.; Leal-Millán, A.; Cepeda-Carrión, G. Mapping the field: A bibliometric analysis of green innovation. Sustainability 2017, 9, 1011.

- Meerow, S.; Newell, J.P.; Stults, M. Defining urban resilience: A review. Landsc. Urban Plan. 2016, 147, 38–49.

- Janssen, M.A.; Schoon, M.L.; Ke, W.; Börner, K. Scholarly networks on resilience, vulnerability and adaptation within the human dimensions of global environmental change. Glob. Environ. Chang. 2006, 16, 240–252.

- Williams, R.; Wright, A.J.; Ashe, E.; Blight, L.K.; Bruintjes, R.; Canessa, R.; Wale, M.A. Impacts of anthropogenic noise on marine life: Publication patterns, new discoveries, and future directions in research and management. Ocean Coast. Manag. 2015, 115, 17–24.

- Zhang, L.; Wang, M.H.; Hu, J.; Ho, Y.S. A review of published wetland research, 1991–2008: Ecological engineering and ecosystem restoration. Ecol. Eng. 2010, 36, 973–980.

- Padilla, F.M.; Gallardo, M.; Manzano-Agugliaro, F. Global trends in nitrate leaching research in the 1960–2017 period. Sci. Total Environ. 2018, 643, 400–413.

- la Cruz-Lovera, D.; Perea-Moreno, A.J.; la Cruz-Fernández, D.; Alvarez-Bermejo, J.A.; Manzano-Agugliaro, F. Worldwide research on energy efficiency and sustainability in public buildings. Sustainability 2017, 9, 1294.

| Rank | Affiliation (Scopus) | NTOT | NIC (SciVal) | IC (%) | Affiliation (WoS) | NTOT | NIC (InCites) | IC (%) |
|---|---|---|---|---|---|---|---|---|
| 1 | Universidad de Granada | 259 | 55 | 21.2 | Consejo Superior de Investigaciones Científicas (CSIC) | 198 | 47 | 23.7 |
| 2 | University of Valencia | 211 | 74 | 35.1 | University of Granada | 198 | 49 | 24.7 |
| 3 | Consejo Superior de Investigaciones Científicas (CSIC) | 196 | 61 | 31.1 | Leiden University | 154 | 59 | 38.3 |
| 4 | Chinese Academy of Sciences | 188 | 50 | 26.6 | Chinese Academy of Sciences | 138 | 46 | 33.3 |
| 5 | Leiden University | 177 | 62 | 35.0 | University of Valencia | 133 | 39 | 29.3 |
| 6 | Universidade de São Paulo | 136 | 22 | 16.2 | Asia University Taiwan | 129 | 83 | 64.3 |
| 7 | Asia University Taiwan | 133 | 91 | 68.4 | Max Planck Society | 120 | 57 | 47.5 |
| 8 | Wuhan University | 118 | 39 | 33.1 | University of London | 115 | 67 | 58.3 |
| 9 | Consiglio Nazionale delle Ricerche (CNR) | 114 | 18 | 15.8 | Consiglio Nazionale delle Ricerche (CNR) | 112 | 20 | 17.9 |
| 10 | Peking University | 111 | 61 | 55.0 | University of Rome Tor Vergata | 109 | 18 | 16.5 |
| 11 | University of Rome Tor Vergata | 109 | 18 | 16.5 | Peking University | 101 | 53 | 52.5 |
| 12 | Administrative Headquarters of the Max Planck Society | 106 | 52 | 49.1 | Wuhan University | 101 | 34 | 33.7 |
| 13 | Universitat Politècnica de València | 104 | 35 | 33.7 | University System of Georgia | 90 | 67 | 74.4 |
| 14 | Universidad de Chile | 100 | 81 | 81.0 | KU Leuven | 80 | 59 | 73.8 |
| 15 | KU Leuven | 92 | 62 | 67.4 | Universitat Politecnica de Valencia | 80 | 30 | 37.5 |
| 16 | Sichuan University | 85 | 36 | 42.4 | Harvard University | 78 | 35 | 44.9 |
| 17 | Georgia Institute of Technology | 85 | 65 | 76.5 | Istituto di Analisi dei Sistemi ed Informatica Antonio Ruberti (IASI-CNR) | 78 | 8 | 10.3 |
| 18 | An-Najah National University | 85 | 26 | 30.6 | Georgia Institute of Technology | 73 | 58 | 79.5 |
| 19 | Universidade Federal de Santa Catarina | 82 | 19 | 23.2 | University of Barcelona | 73 | 34 | 46.6 |
| 20 | Universitat de Barcelona | 80 | 56 | 70.0 | Universidade de São Paulo | 72 | 9 | 12.5 |
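
The IC (%) column in the affiliation table appears to be NIC expressed as a percentage of NTOT; that reading is an assumption here, although the reported rows are consistent with it. A minimal sketch that recomputes the share for the first three Scopus rows (the function name is hypothetical):

```python
def collaboration_share(n_total, n_collab):
    """Share of an affiliation's documents counted in NIC, as a percentage.

    Assumes IC (%) = 100 * NIC / NTOT, rounded to one decimal place.
    """
    if n_total <= 0:
        raise ValueError("n_total must be positive")
    return round(100 * n_collab / n_total, 1)


# (affiliation, NTOT, NIC, reported IC %) taken from the Scopus side of the table.
rows = [
    ("Universidad de Granada", 259, 55, 21.2),
    ("University of Valencia", 211, 74, 35.1),
    ("Consejo Superior de Investigaciones Científicas (CSIC)", 196, 61, 31.1),
]

for name, n_tot, n_ic, reported in rows:
    computed = collaboration_share(n_tot, n_ic)
    status = "matches" if abs(computed - reported) < 0.05 else "differs"
    print(f"{name}: computed {computed}, reported {reported} ({status})")
```

A check of this kind is mainly useful for catching transcription errors when the same table is rebuilt from SciVal or InCites exports.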

| Topic Name | N | C | C/D |
|---|---|---|---|
| Hirsch Index, Self-Citation, Journal Impact Factor | 1005 | 16,417 | 16.34 |
| Intellectual Structure, Co-citation Analysis, Scientometrics | 980 | 17,639 | 18.00 |
| Co-Authorship, Scientific Collaboration, Scientometrics | 743 | 11,159 | 15.02 |
| Citation Counts, Bibliometric Analysis, Journal Impact Factor | 438 | 4897 | 11.18 |
| Scientometrics, Research Productivity, Bibliometric Analysis | 319 | 1580 | 4.95 |
| European Regional Development Fund, Bibliometric Indicators, ERDF | 283 | 2186 | 7.72 |
| Beauties, Citations, Sleeping Beauty | 220 | 2295 | 10.43 |
| Social Science and Humanities, Research Evaluation, Book Publishers | 198 | 8895 | 44.92 |
| Bibliometric Analysis, Citation Index, Document Type | 188 | 3472 | 18.47 |
| Readership, Citation Counts, Journal Impact Factor | 186 | 2863 | 15.39 |
| Scientific Journals, Doctoral Thesis, Spanish Universities | 146 | 1078 | 7.38 |
| Technology Roadmapping, Patent Analysis, Technological Competitiveness | 145 | 3306 | 22.80 |
| Female Scientist, Research Productivity, Women in Science | 120 | 1596 | 13.30 |
| Research Productivity, Bibliometric Analysis, Arab Countries | 114 | 1213 | 10.64 |
| Scientific Publications, Research Productivity, Bibliometric Analysis | 101 | 1090 | 10.79 |
| Tourism Research, Tourism and Hospitality, Hospitality Management | 85 | 1517 | 17.85 |
| Citations, Summarization, Scholarly Publication | 68 | 647 | 9.51 |
| Open Access Publishing, Scholarly Communication, Preprints | 67 | 586 | 8.75 |
| Economists, Co-Authorship, Economic Journals | 61 | 596 | 9.77 |
| Library Science, Tenure, Land Information System | 57 | 495 | 8.68 |

| Topic Cluster Name | N | C | C/D |
|---|---|---|---|
| Publications, Periodicals as Topic, Research | 6020 | 84,217 | 13.99 |
| Industry, Innovation, Entrepreneurship | 536 | 9593 | 17.90 |
| Library, Librarian, Information | 196 | 1560 | 7.96 |
| Research, Meta-Analysis as Topic, Guidelines as Topic | 163 | 1530 | 9.39 |
| Periodicals as Topic, Open Access, Library | 146 | 1560 | 10.68 |
| Tourism, Tourists, Destination | 133 | 1987 | 14.94 |
| Industry, Research, Marketing | 130 | 1429 | 10.99 |
| Supply Chains, Supply Chain Management, Industry | 129 | 1907 | 14.78 |
| Semantics, Models, Recommender Systems | 114 | 1171 | 10.27 |
| Corporate Social Responsibility, Corporate Governance, Firms | 110 | 1277 | 11.61 |
| Schools, Brazil, Education | 108 | 382 | 3.54 |
| Electricity, Energy, Economics | 101 | 1605 | 15.89 |
| Brazil, Health, Nursing | 95 | 363 | 3.82 |
| Libraries, Metadata, Ontology | 81 | 246 | 3.04 |
| Work, Personality, Psychology | 78 | 911 | 11.68 |
| Students, Medical Students, Education | 77 | 563 | 7.31 |
| Construction, Construction Industry, Project Management | 74 | 971 | 13.12 |
| Research, Data, Information Dissemination | 60 | 676 | 11.27 |
| Rotavirus, Norovirus, Coronavirus | 56 | 369 | 6.59 |
| Decision Making, Fuzzy Sets, Models | 51 | 1184 | 23.22 |

| Category | N | % | C/D |
|---|---|---|---|
| Information Science and Library Science | 2508 | 16.3 | 15.8 |
| Computer Science, Interdisciplinary Applications | 1552 | 10.1 | 17.7 |
| Computer Science, Information Systems | 666 | 4.3 | 14.3 |
| Environmental Sciences | 616 | 4.0 | 9.5 |
| Management | 521 | 3.4 | 18.2 |
| Business | 379 | 2.5 | 16.8 |
| Public, Environmental and Occupational Health | 373 | 2.4 | 7.7 |
| Green and Sustainable Science and Technology | 331 | 2.1 | 12.0 |
| Surgery | 299 | 1.9 | 8.5 |
| Environmental Studies | 289 | 1.9 | 8.7 |
| Education and Educational Research | 270 | 1.8 | 5.0 |
| Economics | 225 | 1.5 | 5.4 |
| Clinical Neurology | 204 | 1.3 | 9.2 |
| Computer Science, Theory and Methods | 195 | 1.3 | 2.6 |
| Computer Science, Artificial Intelligence | 194 | 1.3 | 9.9 |
| Engineering, Electrical and Electronic | 174 | 1.1 | 4.4 |
| Operations Research and Management Science | 171 | 1.1 | 17.1 |
| Health Care Sciences and Services | 165 | 1.1 | 11.0 |
| Social Sciences, Interdisciplinary | 162 | 1.1 | 3.5 |
| Engineering, Industrial | 145 | 0.9 | 18.1 |

| Macro Topic | Code | N | C | C/D |
|---|---|---|---|---|
| Social Sciences | 6 | 5614 | 80,783 | 14.39 |
| Clinical and Life Sciences | 1 | 1047 | 7771 | 7.42 |
| Electrical Engineering, Electronics and Computer Science | 4 | 387 | 4732 | 12.23 |
| Agriculture, Environment and Ecology | 3 | 278 | 2587 | 9.31 |
| Chemistry | 2 | 105 | 1068 | 10.17 |
| Earth Sciences | 8 | 62 | 522 | 8.42 |
| Engineering and Materials Science | 7 | 46 | 500 | 10.87 |
| Arts and Humanities | 10 | 44 | 199 | 4.52 |
| Physics | 5 | 29 | 83 | 2.86 |
| Mathematics | 9 | 14 | 68 | 4.86 |

| Meso Topic | Code | N | C | C/D |
|---|---|---|---|---|
| Bibliometrics, Scientometrics and Research Integrity | 6.238 | 4489 | 67,420 | 15.02 |
| Management | 6.3 | 397 | 6049 | 15.24 |
| Medical Ethics | 1.155 | 144 | 1477 | 10.26 |
| Sustainability Science | 6.115 | 114 | 1633 | 14.32 |
| Nursing | 1.14 | 101 | 808 | 8.00 |
| Knowledge Engineering and Representation | 4.48 | 90 | 484 | 5.38 |
| Education and Educational Research | 6.11 | 86 | 716 | 8.33 |
| Hospitality, Leisure, Sport and Tourism | 6.223 | 70 | 1053 | 15.04 |
| Forestry | 3.40 | 69 | 853 | 12.36 |
| Healthcare Policy | 1.156 | 58 | 569 | 9.81 |
| Economics | 6.10 | 57 | 595 | 10.44 |
| Climate Change | 6.153 | 56 | 511 | 9.13 |
| Artificial Intelligence and Machine Learning | 4.61 | 51 | 1012 | 19.84 |
| Human Geography | 6.86 | 48 | 559 | 11.65 |
| Design and Manufacturing | 4.224 | 47 | 715 | 15.21 |
| Social Psychology | 6.73 | 41 | 247 | 6.02 |
| Operations Research and Management Science | 6.294 | 40 | 691 | 17.28 |
| Supply Chain and Logistics | 4.84 | 37 | 581 | 15.70 |
| Marine Biology | 3.2 | 35 | 215 | 6.14 |
| Psychiatry | 1.21 | 34 | 332 | 9.76 |

| Micro Topic | Code | N | C | C/D |
|---|---|---|---|---|
| Bibliometrics | 6.238.166 | 4460 | 66,782 | 14.97 |
| Knowledge Management | 6.3.2 | 134 | 2199 | 16.41 |
| Systematic Reviews | 1.155.611 | 87 | 718 | 8.25 |
| Corporate Social Responsibility | 6.3.385 | 66 | 1113 | 16.86 |
| Tourism | 6.223.247 | 61 | 1014 | 16.62 |
| Foresight | 6.294.1807 | 39 | 689 | 17.67 |
| Entrepreneurship | 6.3.726 | 38 | 743 | 19.55 |
| Environmental Kuznets Curve | 6.115.234 | 31 | 471 | 15.19 |
| Academic Entrepreneurship | 6.3.1467 | 31 | 569 | 18.35 |
| Information Literacy | 4.48.228 | 30 | 153 | 5.10 |
| Customer Satisfaction | 6.3.65 | 29 | 369 | 12.72 |
| Project Scheduling | 4.224.599 | 28 | 495 | 17.68 |
| Fuzzy Sets | 4.61.56 | 28 | 857 | 30.61 |
| Agglomeration Economies | 6.86.280 | 27 | 356 | 13.19 |
| Internationalization | 6.3.1229 | 23 | 226 | 9.83 |
| Internet of Things | 4.13.807 | 22 | 481 | 21.86 |
| Sentiment Analysis | 4.48.672 | 21 | 149 | 7.10 |
| Unified Health System | 1.156.1509 | 20 | 106 | 5.30 |
| Corporate Governance | 6.10.63 | 20 | 379 | 18.95 |
| Life Cycle Assessment | 6.115.1181 | 20 | 258 | 12.90 |
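
The codes in the macro, meso, and micro topic tables appear to nest: a micro code such as 6.238.166 begins with its meso code (6.238), which in turn begins with its macro code (6). Assuming that prefix structure holds throughout, the sketch below resolves a code into its chain of topic names. The function names are hypothetical, and the lookup dictionaries contain only a few entries copied from the tables.

```python
def topic_levels(code):
    """Split a dotted topic code into its macro, meso, and micro prefixes."""
    parts = code.split(".")
    if not 1 <= len(parts) <= 3 or not all(p.isdigit() for p in parts):
        raise ValueError(f"unexpected topic code: {code!r}")
    return [".".join(parts[: i + 1]) for i in range(len(parts))]


# Small excerpts of the three tables; codes are kept as strings so that
# values such as "6.10" do not lose their trailing zero.
MACRO = {"6": "Social Sciences",
         "4": "Electrical Engineering, Electronics and Computer Science"}
MESO = {"6.238": "Bibliometrics, Scientometrics and Research Integrity",
        "4.61": "Artificial Intelligence and Machine Learning"}
MICRO = {"6.238.166": "Bibliometrics", "4.61.56": "Fuzzy Sets"}


def describe(code):
    """Return the topic names from macro down to the level of `code`."""
    names = [table.get(level, "(not listed)")
             for level, table in zip(topic_levels(code), (MACRO, MESO, MICRO))]
    return " > ".join(names)


print(describe("6.238.166"))
print(describe("4.61.56"))
```

Keeping the codes as strings rather than numbers matters for entries such as 3.40 or 6.10, which would otherwise collapse to 3.4 and 6.1.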

| Rank | Journal (WoS, JCR) | N1 | Cit1 | Q1 | IF2 | IF5 | Journal (Scopus, SJR) | N2 | Cit2 | Q2 | IF3 | CS |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Scientometrics | 1051 | 20,447 | Q1 | 2.87 | 3.07 | Scientometrics | 1036 | 26,087 | Q1 | 1.210 | 5.6 |
| 2 | Journal of Informetrics | 203 | 5691 | Q1 | 4.61 | 4.41 | Library Philosophy and Practice | 307 | 406 | Q2 | 0.220 | 0.3 |
| 3 | Sustainability | 180 | 852 | Q2 | 2.58 | 2.8 | Journal of Informetrics | 204 | 7542 | Q1 | 2.079 | 8.4 |
| 4 | Journal of the American Society for Information Science and Technology | 83 | 3178 | N/A | N/A | N/A | Sustainability | 185 | 1483 | Q2 | 0.581 | 3.2 |
| 5 | Journal of the Association for Information Science and Technology | 81 | 1609 | Q2 | 2.41 | 3.17 | Journal of the American Society for Information Science and Technology | 83 | 4018 | N/A | N/A | N/A |
| 6 | Revista Española de Documentación Científica | 74 | 252 | Q3 | 1.3 | 1.12 | Revista Española de Documentación Científica | 81 | 552 | Q2 | 0.497 | 1.7 |
| 7 | Journal of Cleaner Production | 74 | 1287 | Q1 | 7.25 | 7.49 | Malaysian Journal of Library and Information Science | 81 | 732 | Q2 | 0.414 | 1.3 |
| 8 | Current Science | 71 | 292 | Q4 | 0.73 | 0.88 | Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics) | 78 | 244 | Q2 | 0.427 | 1.9 |
| 9 | Research Evaluation | 64 | 914 | Q2 | 2.57 | 3.41 | Espacios | 78 | 57 | Q3 | 0.215 | 0.5 |
| 10 | Technological Forecasting and Social Change | 61 | 1934 | Q1 | 5.85 | 5.18 | Journal of Cleaner Production | 75 | 1867 | Q1 | 1.886 | 10.9 |
| 11 | Profesional de la Información | 60 | 351 | Q3 | 1.58 | 1.42 | Journal of the Association for Information Science and Technology | 74 | 2317 | Q1 | 1.270 | 7.9 |
| 12 | Environmental Science and Pollution Research | 59 | 352 | Q2 | 3.06 | 3.31 | Current Science | 69 | 427 | Q2 | 0.238 | 1.2 |
| 13 | International Journal of Environmental Research and Public Health | 52 | 167 | Q1 | 2.85 | 3.13 | Research Evaluation | 67 | 1165 | Q1 | 1.792 | 5.6 |
| 14 | PLOS ONE | 51 | 912 | Q2 | 2.74 | 3.23 | ACM International Conference Proceeding Series | 64 | 114 | N/A | 0.200 | 0.8 |
| 15 | World Neurosurgery | 50 | 306 | Q3 | 1.83 | 2.07 | Profesional de la Información | 62 | 615 | Q1 | 0.480 | 2.1 |
| 16 | Malaysian Journal of Library and Information Science | 49 | 215 | Q3 | 1.55 | 0.96 | Technological Forecasting and Social Change | 62 | 2662 | Q1 | 1.815 | 8.7 |
| 17 | Investigación Bibliotecológica | 49 | 42 | Q4 | 0.35 | 0.48 | CEUR Workshop Proceedings | 62 | 123 | N/A | 0.177 | 0.6 |
| 18 | Medicine | 49 | 245 | Q3 | 1.55 | 2 | DESIDOC Journal of Library and Information Technology | 57 | 280 | Q2 | 0.281 | 1.0 |
| 19 | Research Policy | 41 | 2541 | Q1 | 5.35 | 7.93 | World Neurosurgery | 55 | 463 | Q2 | 0.727 | 2.4 |
| 20 | Journal of Information Science | 35 | 645 | Q2 | 2.41 | 2.34 | Environmental Science and Pollution Research | 55 | 403 | Q2 | 0.788 | 4.9 |

| Rank | Country | N | C | C/D | Affiliation | N | C | C/D |
|---|---|---|---|---|---|---|---|---|
| 1 | China | 1174 | 7881 | 6.7 | KU Leuven | 271 | 4986 | 18.4 |
| 2 | United States | 1125 | 14,841 | 13.2 | Magyar Tudomanyos Akademia | 268 | 5660 | 21.1 |
| 3 | Spain | 693 | 7538 | 10.9 | Leiden University | 248 | 8665 | 34.9 |
| 4 | United Kingdom | 579 | 10,206 | 17.6 | Consejo Superior de Investigaciones Científicas | 210 | 2924 | 13.9 |
| 5 | Netherlands | 572 | 12,720 | 22.2 | Universiteit Antwerpen | 157 | 2642 | 16.8 |
| 6 | Germany | 558 | 7890 | 14.1 | Wuhan University | 152 | 1191 | 7.8 |
| 7 | Belgium | 469 | 7681 | 16.4 | Universidad de Granada | 132 | 1969 | 14.9 |
| 8 | India | 340 | 2787 | 8.2 | Chinese Academy of Sciences | 126 | 950 | 7.5 |
| 9 | Hungary | 315 | 5974 | 19.0 | Dalian University of Technology | 123 | 1375 | 11.2 |
| 10 | Italy | 314 | 3197 | 10.2 | Indiana University Bloomington | 122 | 1770 | 14.5 |

| Rank | InCites | SciVal | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| WoS Journal Name | N | C | C/D | CNCI | Scopus Journal Name | N | C | C/D | FWCI | |

| 1 | Scientometrics | 1051 | 20,447 | 19.5 | 1.37 | Scientometrics | 1036 | 26,087 | 25.2 | 2.51 |

| 2 | Journal of Informetrics | 203 | 5691 | 28.0 | 2.02 | Library Philosophy and Practice | 307 | 406 | 1.3 | 0.61 |

| 3 | Sustainability | 180 | 852 | 4.7 | 0.89 | Journal of Informetrics | 204 | 7542 | 37.0 | 3.23 |

| 4 | Journal of the American Society for Information Science and Technology | 83 | 3178 | 38.3 | 5.14 | Sustainability | 185 | 1483 | 8.0 | 1.54 |

| 5 | Journal of the Association for Information Science and Technology | 81 | 1609 | 19.9 | 7.27 | Journal of the American Society for Information Science and Technology | 83 | 4018 | 48.4 | 2.19 |

| 6 | Revista Española de Documentación Científica | 74 | 252 | 3.4 | 0.3 | Revista Española de Documentación Científica | 81 | 552 | 6.8 | 0.94 |

| 6 | Journal of Cleaner Production | 74 | 1287 | 17.4 | 1.27 | Malaysian Journal of Library and Information Science | 81 | 732 | 9.0 | 0.72 |

| 8 | Current Science | 71 | 292 | 4.1 | 0.42 | Lecture Notes in Computer Science | 78 | 244 | 3.1 | 0.91 |

| 9 | Research Evaluation | 64 | 914 | 14.3 | 3.4 | Espacios | 78 | 57 | 0.7 | 0.11 |

| 10 | Technological Forecasting and Social Change | 61 | 1934 | 31.7 | 2.39 | Journal of Cleaner Production | 75 | 1867 | 24.9 | 2.35 |

| 11 | Profesional de la Información | 60 | 351 | 5.9 | 1.67 | Journal of the Association for Information Science and Technology | 74 | 2317 | 31.3 | 2.83 |

| 12 | Environmental Science and Pollution Research | 59 | 352 | 6.0 | 0.99 | Current Science | 69 | 427 | 6.2 | 0.25 |

| 13 | International Journal of Environmental Research and Public Health | 52 | 167 | 3.2 | 1.44 | Research Evaluation | 67 | 1165 | 17.4 | 1.75 |

| 14 | PLOS ONE | 51 | 912 | 17.9 | 1.61 | ACM International Conference Proceeding Series | 64 | 114 | 1.8 | 0.39 |

| 15 | World Neurosurgery | 50 | 306 | 6.1 | 1.43 | Profesional de la Información | 62 | 615 | 9.9 | 2.90 |

| 16 | Malaysian Journal of Library and Information Science | 49 | 215 | 4.4 | 0.35 | Technological Forecasting and Social Change | 62 | 2662 | 42.9 | 3.95 |

| 16 | Investigación Bibliotecológica | 49 | 42 | 0.9 | 0.11 | CEUR Workshop Proceedings | 62 | 123 | 2.0 | 0.71 |

| 16 | Medicine | 49 | 245 | 5.0 | 0.78 | DESIDOC Journal of Library and Information Technology | 57 | 280 | 4.9 | 0.76 |

| 19 | Research Policy | 41 | 2541 | 62.0 | 2.73 | World Neurosurgery | 55 | 463 | 8.4 | 1.25 |

| 20 | Journal of Information Science | 35 | 645 | 18.4 | 1.13 | Environmental Science and Pollution Research | 55 | 403 | 7.3 | 1.38 |
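The C/D column in the table above appears to be the number of citations (C) divided by the number of documents (N), rounded to one decimal. The following minimal sketch checks that reading against four Scopus (SciVal) rows copied from the table. The function name `citations_per_document` and the one-decimal rounding are assumptions made for illustration; they are not taken from InCites or SciVal.

```python
# Rows copied from the Scopus (SciVal) columns of the table above:
# (journal, documents N, citations C, reported C/D)
rows = [
    ("Scientometrics", 1036, 26087, 25.2),
    ("Library Philosophy and Practice", 307, 406, 1.3),
    ("Journal of Informetrics", 204, 7542, 37.0),
    ("Sustainability", 185, 1483, 8.0),
]


def citations_per_document(citations, documents):
    """Assumed definition of C/D: citations divided by documents, one decimal."""
    return round(citations / documents, 1)


for journal, n, c, reported in rows:
    computed = citations_per_document(c, n)
    print(f"{journal}: computed {computed}, reported {reported}")
```

For these four rows the computed ratio matches the reported C/D value, which supports the assumed definition. The normalized indicators (CNCI and FWCI) cannot be recomputed this way, since they depend on field, year, and document-type baselines held by each database.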

| Rank | Country | N | Affiliation (Country) | N |

|---|---|---|---|---|

| 1 | United States | 1919 | University of Valencia (Spain) | 110 |

| 2 | China | 834 | University of Toronto (Canada) | 110 |

| 3 | United Kingdom | 688 | Harvard Medical School (USA) | 102 |

| 4 | Spain | 597 | Universidade de Sao Paulo - USP (Brazil) | 93 |

| 5 | Canada | 458 | McMaster University (Canada) | 86 |

| 6 | Brazil | 359 | Consejo Superior de Investigaciones Científicas (Spain) | 80 |

| 7 | Australia | 336 | Universidad Miguel Hernandez de Elche (Spain) | 73 |

| 8 | Germany | 303 | The University of Sydney (Australia) | 67 |

| 9 | France | 226 | An-Najah National University (Palestine) | 61 |

| 10 | Italy | 223 | The University of British Columbia (Canada) | 56 |

| Medicine Topic | N | Main Affiliation (Country) |

|---|---|---|

| Epidemiology | 194 | Universidad Tecnológica de Pereira (Colombia) |

| Pediatrics | 194 | University of Valencia (Spain) |

| Orthopedics | 186 | Centre Hospitalier Universitaire de Clermont-Ferrand (France); CNRS Centre National de la Recherche Scientifique (France); Second Military Medical University (China); McMaster University (Canada) |

| Cardiology | 166 | Universidade de Sao Paulo - USP (Brazil) |

| Neurosurgery | 164 | University of Tennessee Health Science Center (USA) |

| Radiology | 152 | Hallym University, College of Medicine (South Korea) |

| Ophthalmology | 134 | China Medical University Shenyang (China) |

| Oncology | 131 | University of Texas MD Anderson Cancer Center (USA); University of Michigan, Ann Arbor (USA) |

| Plastic Surgery | 121 | Harvard Medical School (USA); Massachusetts General Hospital (USA) |

| Psychiatry | 119 | King’s College London (UK); Universidad de Alcalá (Spain) |

| Rank | Journal | N | JCR Quartile | JCR Impact Factor | JCR 5-Year Impact Factor | SJR Quartile | SJR Impact | CiteScore |

|---|---|---|---|---|---|---|---|---|

| 1 | Journal of the Medical Library Association | 87 | Q2 | 2.042 | 2.299 | Q1 | 0.894 | 2.8 |

| 2 | International Journal of Environmental Research and Public Health | 83 | Q1 | 2.849 | 3.127 | Q2 | 0.739 | 3.0 |

| 3 | World Neurosurgery | 82 | Q3 | 1.829 | 2.074 | Q2 | 0.727 | 2.4 |

| 4 | Journal of Clinical Epidemiology | 55 | Q1 | 4.952 | 6.234 | Q1 | 2.702 | 9.0 |

| 5 | BMJ Open | 42 | Q2 | 2.496 | 2.992 | Q1 | 1.247 | 3.5 |

| 6 | Health Research Policy and Systems | 40 | Q2 | 2.365 | 2.762 | Q1 | 0.987 | 3.8 |

| 7 | Medicine (United States) | 40 | Q3 | 1.552 | 1.998 | Q2 | 0.639 | 2.7 |

| 8 | Plastic and Reconstructive Surgery | 37 | Q1 | 4.235 | 4.387 | Q1 | 1.916 | 5.3 |

| 9 | Revista Cubana de Información en Ciencias de la Salud | 36 | n/a | n/a | n/a | Q3 | 0.172 | 0.5 |

| 10 | Health Information and Libraries Journal | 35 | Q3 | 1.356 | 1.280 | Q2 | 0.521 | 2.6 |

| Rank | Country/Region | N | Affiliation (Country) | N |

|---|---|---|---|---|

| 1 | China | 485 | Chinese Academy of Sciences (China) | 94 |

| 2 | Spain | 191 | Universidad de Almeria (Spain) | 47 |

| 3 | United States | 177 | Asia University Taiwan (China) | 38 |

| 4 | Brazil | 122 | University of Chinese Academy of Sciences (China) | 30 |

| 5 | United Kingdom | 113 | Beijing Institute of Technology (China) | 29 |

| 6 | Australia | 81 | Peking University (China) | 27 |

| 7 | Italy | 75 | Ministry of Education China (China) | 25 |

| 8 | Germany | 56 | Research Center for Eco-Environmental Sciences Chinese Academy of Sciences (China) | 19 |

| 9 | Canada | 54 | University of Valencia (Spain) | 18 |

| 10 | Taiwan | 50 | Tianjin University (China); Beijing Normal University (China); Wuhan University (China) | 18 |

| Environmental Sciences Topic | N | Main Affiliation (Country) |

|---|---|---|

| Sustainability | 214 | Universidad de Almeria (Spain) |

| Sustainable Development | 207 | Universidad de Almeria (Spain) |

| Climate Change | 144 | Chinese Academy of Sciences (China) |

| Ecology | 66 | Chinese Academy of Sciences (China) |

| Environmental Impact | 58 | Universidad de Almeria (Spain) |

| Biodiversity | 57 | Chinese Academy of Sciences (China) |

| Environmental Protection | 45 | Chinese Academy of Sciences (China) |

| Environmental Management | 44 | Chinese Academy of Sciences (China) |

| Public Health | 43 | Goethe-Universität Frankfurt am Main (Germany) |

| Environmental Monitoring | 37 | Chinese Academy of Sciences (China) |

| Rank | Journal | N | JCR Quartile | JCR Impact Factor | JCR 5-Year Impact Factor | SJR Quartile | SJR Impact | CiteScore |

|---|---|---|---|---|---|---|---|---|

| 1 | Sustainability (Switzerland) | 239 | Q2 | 2.576 | 2.798 | Q2 | 0.581 | 3.2 |

| 2 | Journal of Cleaner Production | 108 | Q1 | 7.246 | 7.491 | Q1 | 1.886 | 10.9 |

| 3 | International Journal of Environmental Research and Public Health | 83 | Q1 | 2.849 | 3.127 | Q2 | 0.739 | 3.0 |

| 4 | Environmental Science and Pollution Research | 60 | Q2 | 3.056 | 3.306 | Q2 | 0.788 | 4.9 |

| 5 | Science of the Total Environment | 30 | Q1 | 6.551 | 6.419 | Q1 | 1.661 | 8.6 |

| 6 | Acta Ecologica Sinica | 30 | n/a | n/a | n/a | Q3 | 0.229 | 1.1 |

| 7 | Science and Public Policy | 26 | Q3 | 1.730 | 2.114 | Q1 | 0.771 | 3.3 |

| 8 | Water (Switzerland) | 22 | Q2 | 2.544 | 2.709 | Q1 | 0.657 | 3.0 |

| 9 | IOP Conference Series: Earth and Environmental Science | 19 | n/a | n/a | n/a | Q3 | 0.175 | 0.4 |

| 10 | Ecological Indicators | 15 | Q1 | 4.229 | 4.968 | Q1 | 1.331 | 7.6 |

| Cluster 5 | Cluster 4 | Cluster 1 | Cluster 3 | Cluster 6 | Cluster 2 | Cluster 0 |

|---|---|---|---|---|---|---|

| Science Mapping | Research Productivity | Medicine | Environmental Sciences | Psychology | Nursing | Engineering |

| 28.72% | 23.29% | 19.65% | 11.84% | 7.02% | 5.66% | 3.82% |

| Vosviewer | Citation Analysis | Citation Analysis | Research Trends | Bibliometric Indicators | Citation Analysis | Nanotechnology |

| Citation Analysis | H-index | Citations | Web Of Science | Impact Factor | Authorship Pattern | Scientometrics |

| Web Of Science | Citations | Publications | Scientometrics | Bibliometry | Scientometrics | Citation Analysis |

| Literature Review | Research Evaluation | Scientometrics | Citespace | Spain | Nursing | Text Mining |

| Scopus | Scientometrics | H-index | Sci-expanded | Research | Research Productivity | Information Retrieval |

| Scientometrics | Impact Factor | Impact Factor | Social Network Analysis | Scientific Journals | Bibliometric Study | Patent Analysis |

| Bibliometric Study | Altmetrics | Research | Scopus | Scientometrics | Scopus | Digital Libraries |

| Co-word Analysis | Web Of Science | Web Of Science | Citations | Journals | India | Citations |

| Sustainability | Scopus | Scopus | Citation Analysis | Bibliometric Study | Author Productivity | Nanoscience |

| Co-citation Analysis | Peer Review | Journal Impact Factor | Climate Change | Publications | Nursing Research | China |

| Network Analysis | Journal Impact Factor | Pubmed | Publications | Citations | Citations | Citation Network |

| Science Mapping | Italy | Bibliometric Study | Impact Factor | Web Of Science | Impact Factor | Technology Forecasting |

| Social Network Analysis | Research Assessment | Vosviewer | Sci | Psychology | Library And Information Science | Computational Linguistics |

| Citations | Publications | COVID-19 | Research | Databases | Lotka’s Law | Emerging Technologies |

| Content Analysis | Research | Research Productivity | Vosviewer | Periodicals | Research Output | Research Evaluation |

| Citespace | Google Scholar | Biomedical Research | Sustainability | Scopus | Degree Of Collaboration | Document Clustering |

| Co-citation | Universities | Latin America | H-index | Citation Analysis | Bibliometric Indicators | Bibliometric Study |

| Research Trends | Research Productivity | Citespace | Scientific Production | Journal Article | Scientific Production | Publications |

| Bibliometric Review | Evaluation | Bibliometric Indicators | Research Hotspots | Impact | Research | Network Analysis |

| Cluster | Name | WoS | Scopus | Main Country Keyword |

|---|---|---|---|---|

| Cluster 5 | Science Mapping | 192 | 133 | China |

| Cluster 4 | Research Productivity | 73 | 61 | Italy |

| Cluster 1 | Medical Research | 81 | 75 | China/India |

| Cluster 3 | Environment | 102 | 37 | China |

| Cluster 6 | Psychology | 22 | 17 | Spain |

| Cluster 2 | Nursing | 12 | 20 | India |

| Cluster 0 | Engineering | 2 | 1 | China |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cascajares, M.; Alcayde, A.; Salmerón-Manzano, E.; Manzano-Agugliaro, F. The Bibliometric Literature on Scopus and WoS: The Medicine and Environmental Sciences Categories as Case of Study. Int. J. Environ. Res. Public Health 2021, 18, 5851. https://doi.org/10.3390/ijerph18115851
