Examining Different Factors in Web-Based Patients’ Decision-Making Process: Systematic Review on Digital Platforms for Clinical Decision Support System

Abstract

1. Introduction

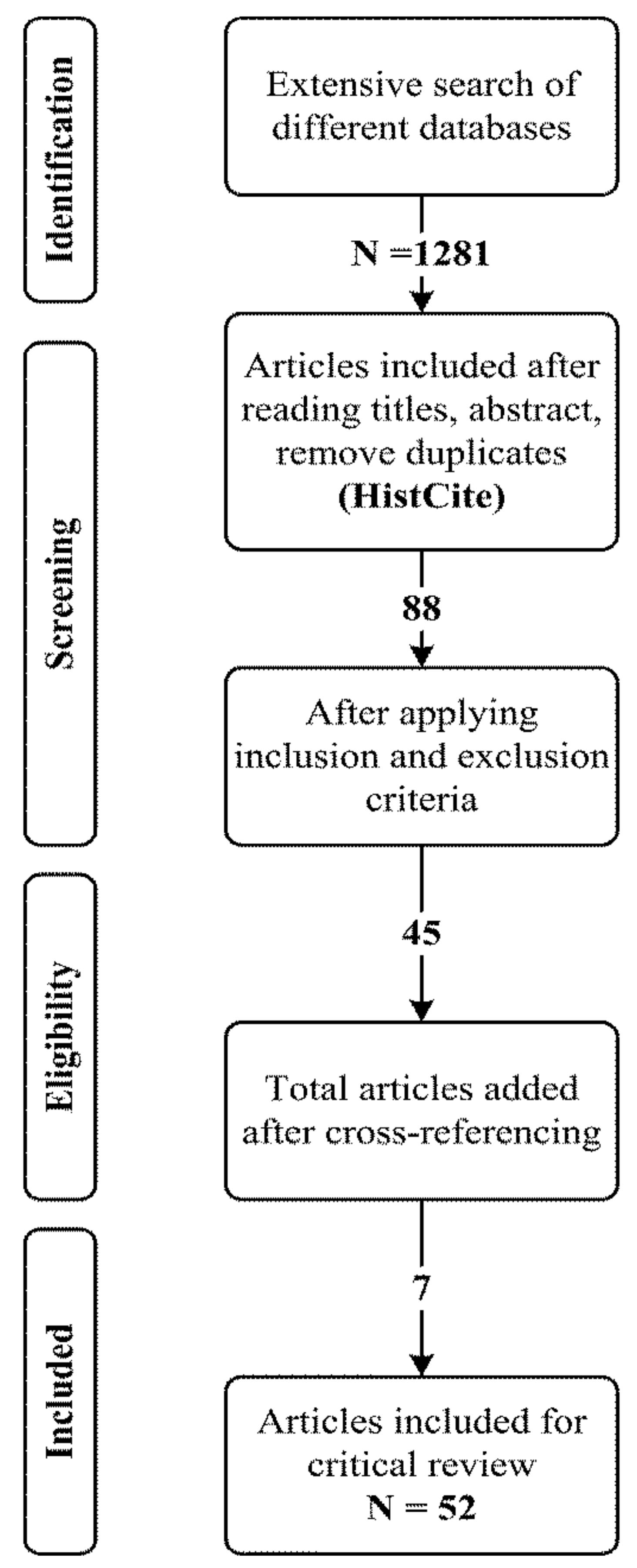

2. Methods

2.1. Planning the Systematic Review

2.2. Research Questions

2.3. Search Strategy and Criteria

2.4. Inclusion and Exclusion Criteria

- First, are the selected papers indexed in the Web of Science or Scopus database?

- Second, is the study aim/objective clear?

- Third, is the research context adequately addressed?

- Finally, are the research findings sufficient for our research purpose?
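The four screening questions above can be expressed as a simple filter over candidate papers. The sketch below is hypothetical: the class and function names are illustrative, and the rule that a paper is retained only when every question is answered "yes" is an assumption, since the section does not state how the answers were combined.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ScreeningAnswers:
    """Yes/no answers to the four screening questions for one candidate paper."""
    indexed_in_wos_or_scopus: bool  # Q1: indexed by Web of Science or Scopus?
    clear_aim: bool                 # Q2: is the study aim/objective clear?
    context_addressed: bool         # Q3: is the research context adequately addressed?
    sufficient_findings: bool       # Q4: are the findings sufficient for the review?


def passes_screening(answers: ScreeningAnswers) -> bool:
    """Assumed decision rule: retain a paper only if all four answers are 'yes'."""
    return all((
        answers.indexed_in_wos_or_scopus,
        answers.clear_aim,
        answers.context_addressed,
        answers.sufficient_findings,
    ))


def screen(candidates: dict[str, ScreeningAnswers]) -> list[str]:
    """Return the IDs of candidates that pass screening, preserving input order."""
    return [paper_id for paper_id, ans in candidates.items() if passes_screening(ans)]


# Usage with two hypothetical candidate papers.
candidates = {
    "paper-A": ScreeningAnswers(True, True, True, True),
    "paper-B": ScreeningAnswers(True, True, False, True),  # fails Q3
}
retained = screen(candidates)
print(retained)  # ['paper-A']
```

A weighted score per question would be an alternative design if partial compliance were allowed; the all-or-nothing rule is used here only because it is the simplest reading of a yes/no checklist.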

2.5. Quality Assessment

2.6. Data Extraction and Synthesis

3. Findings

3.1. Overview of Publications

3.2. Evaluation Criteria and Statistical Analysis of the Signaling Mechanism

3.3. RQ1. How Can the Interaction of Online and Offline Signal Transmission on PRWs Provide Benefits Regarding Patients’ Choice for a Health Consultation?

3.3.1. Physician’s Online Reputation

3.3.2. Physician’s Online Effort

3.3.3. Service Popularity

3.3.4. Linguistic Signaling

3.3.5. Doctor–Patient Concordance Signals

3.3.6. Physician’s Offline Reputation

3.3.7. Physician Trustworthiness Signals

3.4. RQ2. What Are the Reviewed Studies’ Dynamics and Analytical Approaches Involved in Patients’ Decision-Making Process?

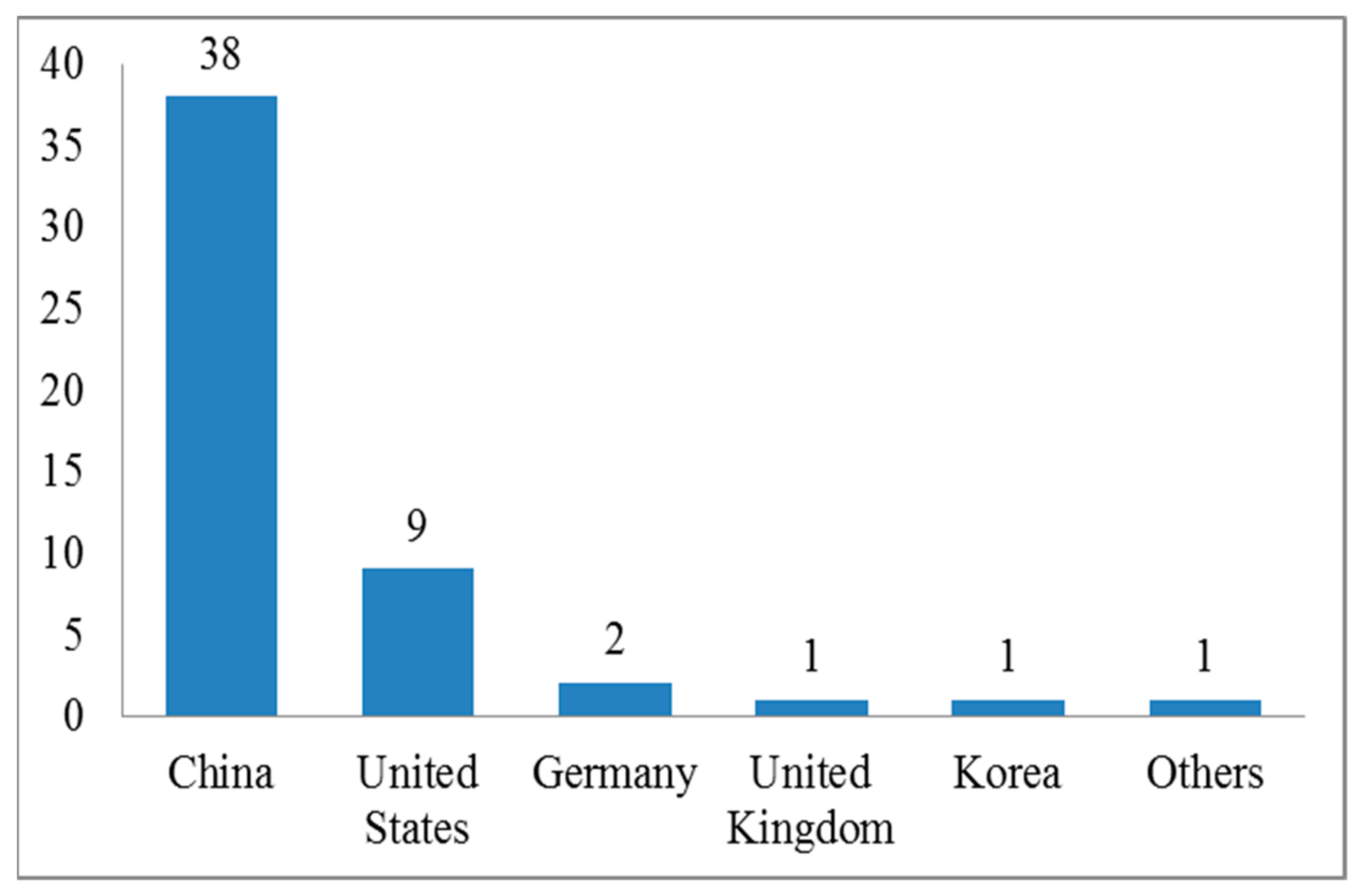

3.4.1. Time and Place of the Study

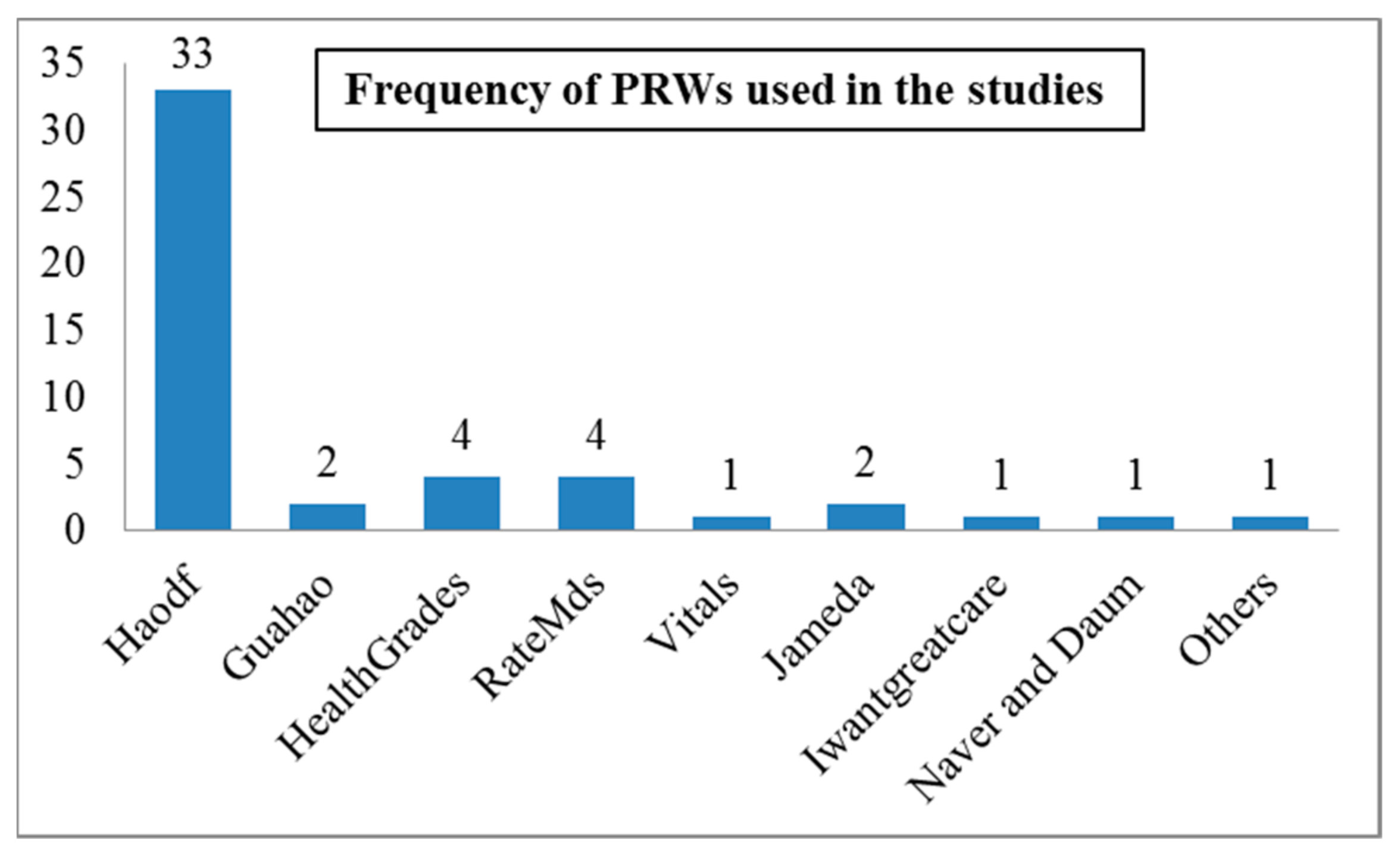

3.4.2. Physician Rating Websites

3.4.3. Signal Transmission across Different Contexts and Disease Specialties

3.4.4. Number of Reviews by PRWs

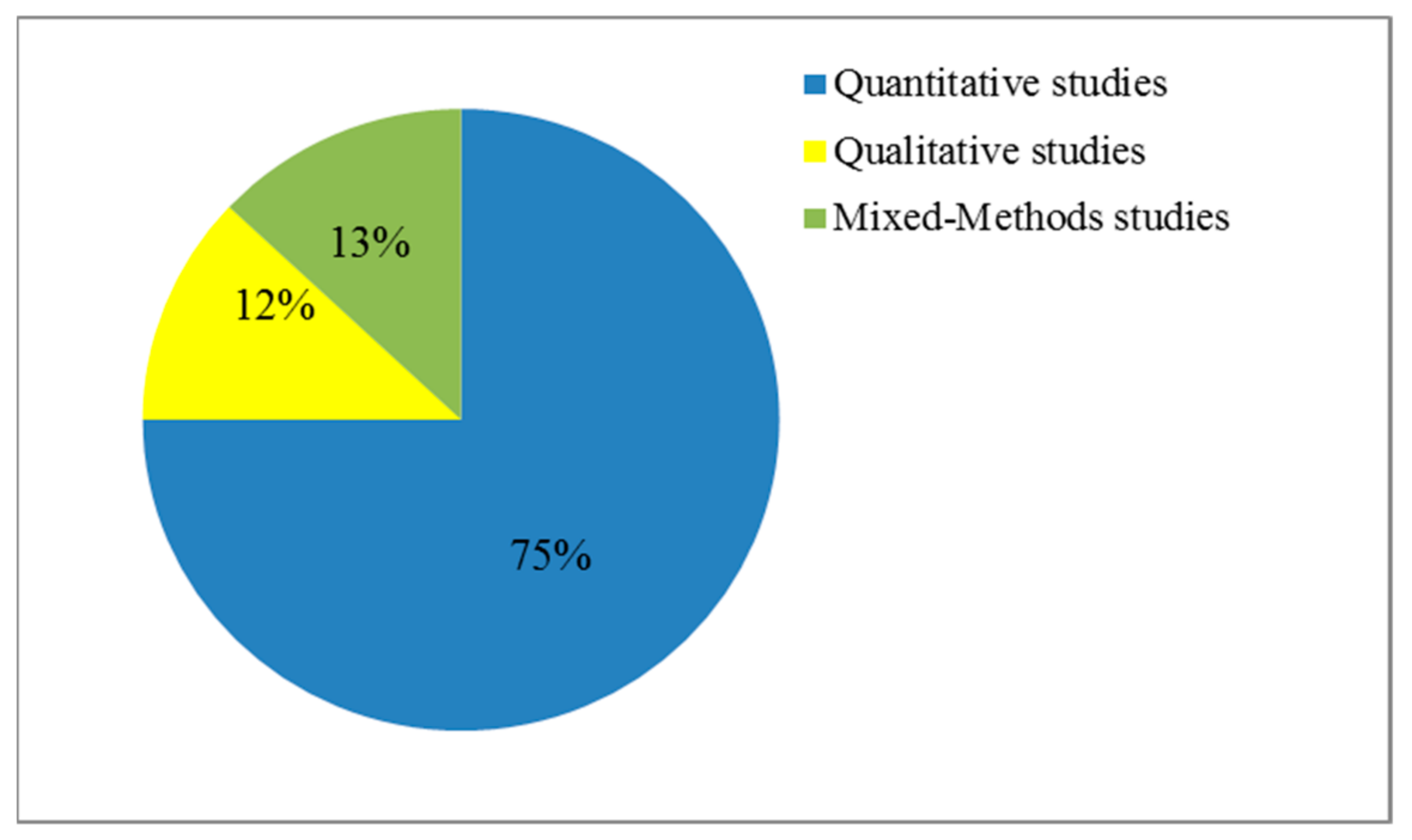

3.4.5. Study Design and Technological Roadmap Adopted

3.4.6. An Overview of the Findings of Online Physician Reviews

3.4.7. Relationship between Signal Transmission and Clinical Outcomes

4. Discussion

4.1. Implications of the Study

4.2. Limitations

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

| Reference | Data Collection | Variables | Research Method | Findings |
|---|---|---|---|---|
| Wu [52] | Web scraping | Individual literacy, Social support, Information quality, Service quality | SEM-PLS | Information quality is significantly associated with patient satisfaction. |
| Jin, Yan, Li, and Li [71] | Web scraping | Information quality | Text mining, Logistic regression | Higher information quality leads to information adoption. |
| Storino, Castillo-Angeles, Watkins, Vargas, Mancias, Bullock, Demirjian, Moser, and Kent [116] | Collection of 50 pancreatic cancer websites | Information usefulness | Assessment by expert panel | Online information on pancreatic cancer lacks effective information about alternative therapy. |
| Zhang, Guo, Lai, and Yi [82] | Web crawling | Interpersonal unfairness, Informational unfairness | Logistic regression | Informational unfairness contributes to the development of a quality relationship. |
| Li, Tang, Yen, and Liu [38] | Web crawling | Online reputation, Offline status, Online self-representation | Regression analysis | Online reputation, offline status, and online self-representation are positively related to a physician’s online order volume. |
| Yang, Guo, Wu, and Ju [47] | Web crawling | Patient-generated information, System-generated information | Regression analysis | Positive system-generated and patient-generated information positively affect patients’ decisions. |
| Shah, Yan, Shah, Shah, and Mamirkulova [5] | Web crawling | eWOM, Physician trustworthiness | OLS | eWOM and trustworthiness significantly influence physicians’ economic returns. |
| Cao, Liu, Zhu, Hu, and Chen [30] | Web crawling | Service quality, eWOM | OLS | Physicians’ service quality and eWOM affect patients’ selection decisions. |
| Li, Tang, Jiang, Yen, and Liu [49] | Web crawling | Online knowledge contribution, Online reputation | Regression analysis | Knowledge contribution and reputation are positively related to a physician’s online income. |
| Deng, Hong, Zhang, Evans, and Chen [32] | Web crawling | Online effort, Online reputation | OLS | Patients prefer to choose online physicians with greater effort and reputation. |
| Wu and Lu [45] | Web crawling | Online reputation, Offline reputation, Online services | OLS | Online popularity has a positive impact on hospitals’ online booking services. |
| Yang and Zhang [48] | Web crawling | Free feedback, Paid feedback | OLS, GLS | Paid feedback has a greater effect on patient choice than free feedback. |
| Liu, Guo, Wu, and Wu [28] | Web crawling | Offline reputation, Online reputation | HLM | Physicians’ reputation and hospitals’ reputation have a positive effect on the number of appointments. |
| Chen, Yan, and Zhang [108] | Web crawling | Physician’s login behavior | Regression analysis | Physician login behavior positively influences patient choice. |
| Han, Qu, and Zhang [104] | Questionnaire | Neighbor-recommended physician, Trust in OPRs, Review valence | Partial correlation analysis | Patients with high-risk diseases are more likely than patients with low-risk diseases to select a neighbor-recommended physician who has positive reviews. |
| Luo, Chen, Wu, and Li [109] | Web crawling | Physician’s colleague multi-channel access (SI), Ratings | OLS regression | SI and patients’ ratings significantly and positively affect multi-channel access in an OHC. |
| Wu and Lu [44] | Web crawling | Colleague reputation, Physician reputation | Fractional logistic regression | The reputation of the focal physician’s colleagues and the focal physician’s own reputation significantly affect patients’ odds of posting treatment experiences. |
| Guo, Guo, Fang, and Vogel [60] | Web crawling | Status capital, Decisional capital | OLS | The social and economic returns of doctors in OHCs are positively associated with their status and decisional capital. |
| Shan, Wang, Luan, and Tang [106] | Eye-tracking experiment, Surveys | Cognitive trust, Affective trust | PLS-SEM | Cognitive trust and affective trust both positively affected patients’ choice of physician. |
| Yang, Diao, and Kiang [110] | Web crawling | Ability, Reputation, Benevolence | Logarithmic linear regression | Physician trustworthiness (ability, reputation, and benevolence) has a positive impact on a physician’s sales. |
| Chen, Rai, and Guo [31] | Web crawling | Credibility, Benevolence | Regression analysis | Review volume significantly moderates the impact of credibility on popularity, while valence and variance significantly moderate the influence of benevolence on online popularity and price premium. |
| Guo, Guo, Zhang, and Vogel [61] | Web crawling | Weak ties, Strong ties | Smart-PLS | D–P tie strength significantly affects doctors’ returns in the online healthcare context. |
| Wu and Lu [65] | Web crawling | Pricing and quantity of online services | OLS | Service quantity positively impacts patient satisfaction. |
| Li, Zhang, Ma, and Liu [50] | Web crawling | Online reputation, Online self-representation | Regression analysis | A market served by many doctors with strong online reputations or high levels of self-representation will be less concentrated. |
| Zhang, Guo, and Wu [54] | Web crawling | Online contributions: Quantity of online contributions (popularity), Quality of online contributions (reputation) | Regression analysis | The relationship between the quantity of online free service contributions and doctors’ private benefits was found to be positive. |
| Wu and Deng [53] | Web crawling | Specification, Credibility, Coordination | OLS regression | Physicians’ specification, credibility, and coordination are positively related to order quantity. |
| Liu, Guo, Wu, and Vogel [51] | Web crawling | Online doctor efforts | OLS and hierarchical regression | The breadth and depth of online doctor efforts are associated with doctor reputation and popularity. |
| Yang, Guo, and Wu [46] | Web crawling | Response speed, Interaction frequency | Regression analysis | Physicians’ response speed and interaction frequency significantly affect patient satisfaction. |
| Lu and Wu [63] | Web crawling | Overall review rating, Number of reviews | OLS regression | An increase in ratings and in the number of reviews results in an increase in the number of outpatient visits. |
| Zhao, Li, and Wu [56] | Web crawling | External WOM, Peer influence, Internal WOM, Appointment quantity | Three-stage least squares | The number of doctors’ votes, followers, and reviews (external WOM), peer influence, internal WOM, and appointment quantity significantly influence patients’ doctor choice. |
| Liu, Zhou, and Wu [111] | Web crawling | Title, Satisfaction, Review volume, Service attitude, Technical level, Clarity of explanation, Ethics | Negative binomial regression | All variables except service process significantly influence the online appointment services received by the focal doctor. |
| Lu and Wu [40] | Web crawling | Technical quality, Functional quality | OLS | Patients make appointments with physicians who demonstrate technical and functional quality. |
| Lu and Zhang [73] | Questionnaire | Physician–patient communication, Information quality, Decision-making preference, Physician–patient concordance | Smart-PLS | Physician–patient communication in OHCs positively impacts patient compliance through the mediation of the perceived quality of internet health information, decision-making preference, and physician–patient concordance. |
| Emmert, Meier, Pisch, and Sander [115] | Questionnaire | – | Interviews | – |
| Laugesen, Hassanein, and Yuan [78] | Questionnaire | Perceived information asymmetry, Patient–physician concordance | Smart-PLS | Perceived information asymmetry and patient–physician concordance significantly influence patient compliance for health consultation. |
| Chen, Guo, Wu, and Ju [95] | Web crawling | Patient activeness, Informational support, Emotional support, Patient satisfaction | Text mining, Regression analysis | Patient activeness has a significant impact on informational and emotional support, which in turn significantly influence patient satisfaction. |
| Yang, Du, He, and Qiao [29] | Web crawling | Reputation, Monetary reward, D–P interaction | Regression analysis | Reputation, monetary reward, and D–P interaction significantly influence physician contribution to OHCs. |
| Chen, Baird, and Straub [81] | Web crawling | Affective signals, Informational signals, Informational support, Social support | Text mining, Regression analysis | Affective signals and informational signals significantly influence informational support and social support from OHCs. |
| Khurana, Qiu, and Kumar [14] | Web crawling | Doctor response to patient questions | Regression analysis | Doctor response significantly influences user perception of the medical services offered. |
| Greenwood, Agarwal, Agarwal, and Gopal [99] | Web crawling | Individual expertise, Organizational expertise, Adoption of new practices | Econometric analysis | Individual and organizational expertise significantly influence physician behavior. |
| Shah, Yan, Khan, and Shah [67] | Web crawling | Review-related signals, Service-related signals | Text mining, Regression analysis | Review-related signals and service-related signals significantly influence patients’ behavior. |
| Hong, Liang, Radcliff, Wigfall, and Street [15] | Systematic review | – | Content analysis | – |
| Hao and Zhang [33] | Web crawling | – | Topic modeling | – |
| Hao, Zhang, Wang, and Gao [34] | Web crawling | – | Topic modeling | – |
| Li, Liu, Li, Liu, and Liu [37] | Web crawling | – | Content analysis, Topic modeling | – |
| James, Villacis Calderon, and Cook [35] | Web crawling | – | Topic modeling | – |
| Jung, Hur, Jung, and Kim [36] | Web crawling | – | Topic modeling | – |
| Wallace, Paul, Sarkar, Trikalinos, and Dredze [4] | Web crawling | – | Topic modeling | – |
| Zhang, Deng, Hong, Evans, Ma, and Zhang [7] | Web crawling | – | Content analysis | – |
| Bidmon, Elshiewy, Terlutter, and Boztug [3] | Survey | – | Regression analysis | – |
| Pang and Liu [112] | Web crawling | – | Topic modeling, Qualitative analysis | – |
| Noteboom and Al-Ramahi [114] | Web crawling | – | Topic modeling | – |
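Columns of Appendix A such as Data Collection and Research Method lend themselves to simple frequency counts of the kind a synthesis of study designs reports. The sketch below parses the pipe-delimited table and tallies one column. The function names are hypothetical, and the optional `aliases` mapping is illustrative only: whether "Web scraping" and "Web crawling" denote the same procedure is an assumption the table does not state. The sample reuses four rows of the table, keeping only three of its columns.

```python
from __future__ import annotations

from collections import Counter


def _split_row(line: str) -> list[str]:
    """Split one pipe-delimited row into trimmed cell strings."""
    return [cell.strip() for cell in line.strip().strip("|").split("|")]


def parse_markdown_table(text: str) -> list[dict[str, str]]:
    """Parse a pipe-delimited table into one dict per data row.

    Assumes the first row is the header; the |---| separator row and any
    blank lines between rows are skipped.
    """
    lines = [ln for ln in text.splitlines() if ln.strip().startswith("|")]
    header = _split_row(lines[0])
    records = []
    for line in lines[1:]:
        cells = _split_row(line)
        if all(cell and set(cell) <= set("-: ") for cell in cells):
            continue  # separator row such as |---|---|
        records.append(dict(zip(header, cells)))
    return records


def tally(records: list[dict[str, str]], column: str,
          aliases: dict[str, str] | None = None) -> Counter:
    """Count the values of one column; `aliases` optionally merges labels."""
    aliases = aliases or {}
    return Counter(aliases.get(rec[column], rec[column]) for rec in records)


# Usage on four rows taken from Appendix A (three columns kept).
SAMPLE = """
| Reference | Data Collection | Research Method |
|---|---|---|
| Wu [52] | Web scraping | SEM-PLS |
| Zhang, Guo, Lai, and Yi [82] | Web crawling | Logistic regression |
| Han, Qu, and Zhang [104] | Questionnaire | Partial correlation analysis |
| Deng, Hong, Zhang, Evans, and Chen [32] | Web crawling | OLS |
"""
records = parse_markdown_table(SAMPLE)
by_source = tally(records, "Data Collection")
merged = tally(records, "Data Collection", aliases={"Web scraping": "Web crawling"})
print(by_source.most_common())  # [('Web crawling', 2), ('Web scraping', 1), ('Questionnaire', 1)]
print(merged.most_common())     # [('Web crawling', 3), ('Questionnaire', 1)]
```

Labels are kept unmerged by default so that categories reported separately in the table are not silently collapsed; merging is left as an explicit, documented choice.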

References

- Schulz, P.J.; Rothenfluh, F. Influence of health literacy on effects of patient rating websites: Survey study using a hypothetical situation and fictitious doctors. J. Med. Internet Res. 2020, 22, e14134.

- Hanauer, D.A.; Zheng, K.; Singer, D.C.; Gebremariam, A.; Davis, M.M. Public Awareness, Perception, and Use of Online Physician Rating Sites. JAMA 2014, 311, 734–735.

- Bidmon, S.; Elshiewy, O.; Terlutter, R.; Boztug, Y. What patients value in physicians: Analyzing drivers of patient satisfaction using physician-rating website data. J. Med. Internet Res. 2020, 22, e13830.

- Wallace, B.C.; Paul, M.J.; Sarkar, U.; Trikalinos, T.A.; Dredze, M. A large-scale quantitative analysis of latent factors and sentiment in online doctor reviews. J. Am. Med. Inform. Assoc. 2014, 21, 1098–1103.

- Shah, A.M.; Yan, X.; Shah, S.A.A.; Shah, S.J.; Mamirkulova, G. Exploring the impact of online information signals in leveraging the economic returns of physicians. J. Biomed. Inform. 2019, 98, 103272.

- Kordzadeh, N. An Empirical Examination of Factors Influencing the Intention to Use Physician Rating Websites. In Proceedings of the 52nd Hawaii International Conference on System Sciences, Association for Information Systems, Maui, HI, USA, 8–11 January 2019; pp. 4346–4354.

- Zhang, W.; Deng, Z.; Hong, Z.; Evans, R.; Ma, J.; Zhang, H. Unhappy patients are not alike: Content analysis of the negative comments from China’s Good Doctor website. J. Med. Internet Res. 2018, 20, e35.

- Emmert, M.; Halling, F.; Meier, F. Evaluations of dentists on a German physician rating website: An analysis of the ratings. J. Med. Internet Res. 2015, 17, e15.

- Shah, A.M.; Yan, X.; Tariq, S.; Khan, S. Listening to the patient voice: Using a sentic computing model to evaluate physicians’ healthcare service quality for strategic planning in hospitals. Qual. Quant. 2020, 55, 173–201.

- Rothenfluh, F.; Schulz, P.J. Physician rating websites: What aspects are important to identify a good doctor, and are patients capable of assessing them? A mixed-methods approach including physicians’ and health care consumers’ perspectives. J. Med. Internet Res. 2017, 19, e127.

- Lin, Y.; Hong, Y.A.; Henson, B.S.; Stevenson, R.D.; Hong, S.; Lyu, T.; Liang, C. Assessing patient experience and healthcare quality of dental care using patient online reviews in the United States: Mixed methods study. J. Med. Internet Res. 2020, 22, e18652.

- Rothenfluh, F.; Germeni, E.; Schulz, P.J. Consumer decision-making based on review websites: Are there differences between choosing a hotel and choosing a physician? J. Med. Internet Res. 2016, 18, e129.

- Terlutter, R.; Bidmon, S.; Röttl, J. Who uses physician-rating websites? Differences in sociodemographic variables, psychographic variables, and health status of users and nonusers of physician-rating websites. J. Med. Internet Res. 2014, 16, e97.

- Khurana, S.; Qiu, L.; Kumar, S. When a doctor knows, it shows: An empirical analysis of doctors’ responses in a Q&A forum of an online healthcare portal. Inf. Syst. Res. 2019, 30, 872–891.

- Hong, Y.A.; Liang, C.; Radcliff, T.A.; Wigfall, L.T.; Street, R.L. What do patients say about doctors online? A systematic review of studies on patient online reviews. J. Med. Internet Res. 2019, 21, e12521.

- Han, X.; Li, B.; Zhang, T.; Qu, J. Factors associated with the actual behavior and intention of rating physicians on physician rating websites: Cross-sectional study. J. Med. Internet Res. 2020, 22, e14417.

- Schlesinger, M.; Grob, R.; Shaller, D.; Martino, S.C.; Parker, A.M.; Finucane, M.L.; Cerully, J.L.; Rybowski, L. Taking patients’ narratives about clinicians from anecdote to science. N. Engl. J. Med. 2015, 373, 675–679.

- Lagu, T.; Metayer, K.; Moran, M.; Ortiz, L.; Priya, A.; Goff, S.L.; Lindenauer, P.K. Website characteristics and physician reviews on commercial physician-rating websites. JAMA 2017, 317, 766–768.

- Moher, D.; Shamseer, L.; Clarke, M.; Ghersi, D.; Liberati, A.; Petticrew, M.; Shekelle, P.; Stewart, L.A.; PRISMA-P Group. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Syst. Rev. 2015, 4, 1.

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G.; PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. PLoS Med. 2009, 6, e1000097.

- Inayat, I.; Salim, S.S.; Marczak, S.; Daneva, M.; Shamshirband, S. A systematic literature review on agile requirements engineering practices and challenges. Comput. Hum. Behav. 2015, 51, 915–929.

- Pacheco, C.; Garcia, I. A systematic literature review of stakeholder identification methods in requirements elicitation. J. Syst. Softw. 2012, 85, 2171–2181.

- Qazi, A.; Raj Ram, G.; Hardaker, G.; Standing, C. A systematic literature review on opinion types and sentiment analysis techniques: Tasks and challenges. Internet Res. 2017, 27, 608–630.

- Bokolo Anthony, J. Use of telemedicine and virtual care for remote treatment in response to COVID-19 pandemic. J. Med. Syst. 2020, 44, 132.

- Keele, S. Guidelines for Performing Systematic Literature Reviews in Software Engineering; Ver. 2.3 EBSE Technical Report; EBSE: Goyang-si, Korea, 2007.

- Akerlof, G.A. The market for “lemons”: Quality uncertainty and the market mechanism. Q. J. Econ. 1970, 84, 488–500.

- Kerschbamer, R.; Sutter, M. The economics of credence goods: A survey of recent lab and field experiments. CESifo Econ. Stud. 2017, 63, 1–23.

- Liu, X.; Guo, X.; Wu, H.; Wu, T. The impact of individual and organizational reputation on physicians’ appointments online. Int. J. Electron. Commer. 2016, 20, 551–577.

- Yang, H.; Du, H.S.; He, W.; Qiao, H. Understanding the motivators affecting doctors’ contributions in online healthcare communities: Professional status as a moderator. Behav. Inf. Technol. 2021, 40, 146–160.

- Cao, X.; Liu, Y.; Zhu, Z.; Hu, J.; Chen, X. Online selection of a physician by patients: Empirical study from elaboration likelihood perspective. Comput. Hum. Behav. 2017, 73, 403–412.

- Chen, L.; Rai, A.; Guo, X. Physicians’ Online Popularity and Price Premiums for Online Health Consultations: A Combined Signaling Theory and Online Feedback Mechanisms Explanation. In Proceedings of the Thirty Sixth International Conference on Information Systems (ICIS 15), Association for Information Systems, Fort Worth, TX, USA, 13–16 December 2015; pp. 2105–2115.

- Deng, Z.; Hong, Z.; Zhang, W.; Evans, R.; Chen, Y. The effect of online effort and reputation of physicians on patients’ choice: 3-Wave data analysis of China’s Good Doctor website. J. Med. Internet Res. 2019, 21, e10170.

- Hao, H.; Zhang, K. The voice of Chinese health consumers: A text mining approach to web-based physician reviews. J. Med. Internet Res. 2016, 18, e108.

- Hao, H.; Zhang, K.; Wang, W.; Gao, G. A tale of two countries: International comparison of online doctor reviews between China and the United States. Int. J. Med. Inform. 2017, 99, 37–44.

- James, T.L.; Villacis Calderon, E.D.; Cook, D.F. Exploring patient perceptions of healthcare service quality through analysis of unstructured feedback. Expert Syst. Appl. 2017, 71, 479–492.

- Jung, Y.; Hur, C.; Jung, D.; Kim, M. Identifying key hospital service quality factors in online health communities. J. Med. Internet Res. 2015, 17, e90.

- Li, J.; Liu, M.; Li, X.; Liu, X.; Liu, J. Developing embedded taxonomy and mining patients’ interests from web-based physician reviews: Mixed-methods approach. J. Med. Internet Res. 2018, 20, e254.

- Li, J.; Tang, J.; Yen, D.C.; Liu, X. Disease risk and its moderating effect on the e-consultation market offline and online signals. Inf. Technol. People 2019, 32, 1065–1084.

- Liu, N.; Finkelstein, S.R.; Kruk, M.E.; Rosenthal, D. When waiting to see a doctor is less irritating: Understanding patient preferences and choice behavior in appointment scheduling. Manag. Sci. 2018, 64, 1975–1996.

- Lu, N.; Wu, H. Exploring the impact of word-of-mouth about physicians’ service quality on patient choice based on online health communities. BMC Med. Inform. Decis. Mak. 2016, 16, 151.

- Lu, S.F.; Rui, H. Can we trust online physician ratings? Evidence from cardiac surgeons in Florida. Manag. Sci. 2018, 64, 2557–2573.

- Luca, M.; Zervas, G. Fake it till you make it: Reputation, competition, and Yelp review fraud. Manag. Sci. 2016, 62, 3412–3427.

- Segal, J.; Sacopulos, M.; Sheets, V.; Thurston, I.; Brooks, K.; Puccia, R. Online doctor reviews: Do they track surgeon volume, a proxy for quality of care? J. Med. Internet Res. 2012, 14, e50.

- Wu, H.; Lu, N. How your colleagues’ reputation impact your patients’ odds of posting experiences: Evidence from an online health community. Electron. Commer. Res. Appl. 2016, 16, 7–17.

- Wu, H.; Lu, N. Online written consultation, telephone consultation and offline appointment: An examination of the channel effect in online health communities. Int. J. Med. Inform. 2017, 107, 107–119.

- Yang, H.; Guo, X.; Wu, T. Exploring the influence of the online physician service delivery process on patient satisfaction. Decis. Support Syst. 2015, 78, 113–121.

- Yang, H.; Guo, X.; Wu, T.; Ju, X. Exploring the effects of patient-generated and system-generated information on patients’ online search, evaluation and decision. Electron. Commer. Res. Appl. 2015, 14, 192–203.

- Yang, H.; Zhang, X. Investigating the effect of paid and free feedback about physicians’ telemedicine services on patients’ and physicians’ behaviors: Panel data analysis. J. Med. Internet Res. 2019, 21, e12156.

- Li, J.; Tang, J.; Jiang, L.; Yen, D.C.; Liu, X. Economic success of physicians in the online consultation market: A signaling theory perspective. Int. J. Electron. Commer. 2019, 23, 244–271.

- Li, J.; Zhang, Y.; Ma, L.; Liu, X. The impact of the internet on health consultation market concentration: An econometric analysis of secondary data. J. Med. Internet Res. 2016, 18, e276.

- Liu, X.; Guo, X.; Wu, H.; Vogel, D. Doctor’s Effort Influence on Online Reputation and Popularity. In Proceedings of the 2nd International Conference on Smart Health, Beijing, China, 10–11 July 2014; Zheng, X., Zeng, D., Chen, H., Zhang, Y., Xing, C., Neill, D.B., Eds.; Smart Health. Springer: Berlin/Heidelberg, Germany, 2014; pp. 111–126.

- Wu, B. Patient continued use of online health care communities: Web mining of patient-doctor communication. J. Med. Internet Res. 2018, 20, e126.

- Wu, H.; Deng, Z. Knowledge collaboration among physicians in online health communities: A transactive memory perspective. Int. J. Inf. Manag. 2019, 49, 13–33.

- Zhang, M.; Guo, X.; Wu, T. Impact of free contributions on private benefits in online healthcare communities. Int. J. Electron. Commer. 2019, 23, 492–523.

- Zhang, X.; Liu, S.; Deng, Z.; Chen, X. Knowledge sharing motivations in online health communities: A comparative study of health professionals and normal users. Comput. Hum. Behav. 2017, 75, 797–810.

- Zhao, Y.; Li, S.; Wu, J. Exploring the Factors Influencing Patient Usage Behavior Based on Online Health Communities. In Proceedings of the 6th International Conference for Smart Health, Wuhan, China, 1–3 July 2018; Chen, H., Fang, Q., Zeng, D., Wu, J., Eds.; Smart Health. Springer: Berlin/Heidelberg, Germany, 2018; pp. 70–76.

- Zhang, X.; Guo, F.; Xu, T.; Li, Y. What motivates physicians to share free health information on online health platforms? Inform. Process. Manag. 2020, 57, 102166.

- Liang, Q.; Luo, F.J.; Wu, Y.Z. The impact of doctor’s efforts and reputation on the number of new patients in online health community. Chin. J. Health Policy 2017, 10, 63–71.

- Gao, G.; Greenwood, B.N.; Agarwal, R.; McCullough, J.S. Vocal minority and silent majority: How do online ratings reflect population perceptions of quality. MIS Q. 2015, 39, 565–590.

- Guo, S.; Guo, X.; Fang, Y.; Vogel, D. How doctors gain social and economic returns in online health-care communities: A professional capital perspective. J. Manag. Inf. Syst. 2017, 34, 487–519.

- Guo, S.; Guo, X.; Zhang, X.; Vogel, D. Doctor–patient relationship strength’s impact in an online healthcare community. Inf. Technol. Dev. 2018, 24, 279–300.

- Holwerda, N.; Sanderman, R.; Pool, G.; Hinnen, C.; Langendijk, J.A.; Bemelman, W.A.; Hagedoorn, M.; Sprangers, M.A.G. Do patients trust their physician? The role of attachment style in the patient-physician relationship within one year after a cancer diagnosis. Acta Oncol. 2013, 52, 110–117.

- Lu, W.; Wu, H. How online reviews and services affect physician outpatient visits: Content analysis of evidence from two online health care communities. JMIR Med. Inform. 2019, 7, e16185.

- Roettl, J.; Bidmon, S.; Terlutter, R. What predicts patients’ willingness to undergo online treatment and pay for online treatment? Results from a web-based survey to investigate the changing patient-physician relationship. J. Med. Internet Res. 2016, 18, e32.

- Wu, H.; Lu, N. Service provision, pricing, and patient satisfaction in online health communities. Int. J. Med. Inform. 2018, 110, 77–89.

- Tucker, C.; Zhang, J. How does popularity information affect choices? A field experiment. Manag. Sci. 2011, 57, 828–842.

- Shah, A.; Yan, X.; Khan, S.; Shah, J. Exploring the Impact of Review and Service-Related Signals on Online Physician Review Helpfulness: A Multi-Methods Approach. In Proceedings of the Twenty-Fourth Pacific Asia Conference on Information Systems, Association for Information Systems, Dubai, United Arab Emirates, 22–24 June 2020; pp. 1–14.

- Angst, C.M.; Agarwal, R. Adoption of electronic health records in the presence of privacy concerns: The elaboration likelihood model and individual persuasion. MIS Q. 2009, 33, 339–370.

- Batini, C.; Scannapieco, M. Data and Information Quality; Springer: Cham, Switzerland, 2016; p. 500.

- Beck, F.; Richard, J.-B.; Nguyen-Thanh, V.; Montagni, I.; Parizot, I.; Renahy, E. Use of the internet as a health information resource among French young adults: Results from a nationally representative survey. J. Med. Internet Res. 2014, 16, e128.

- Jin, J.; Yan, X.; Li, Y.; Li, Y. How users adopt healthcare information: An empirical study of an online Q&A community. Int. J. Med. Inform. 2016, 86, 91–103.

- Keselman, A.; Arnott Smith, C.; Murcko, A.C.; Kaufman, D.R. Evaluating the quality of health information in a changing digital ecosystem. J. Med. Internet Res. 2019, 21, e11129.

- Lu, X.; Zhang, R. Impact of physician-patient communication in online health communities on patient compliance: Cross-sectional questionnaire study. J. Med. Internet Res. 2019, 21, e12891.

- Memon, M.; Ginsberg, L.; Simunovic, N.; Ristevski, B.; Bhandari, M.; Kleinlugtenbelt, Y.V. Quality of web-based information for the 10 most common fractures. Interact. J. Med. Res. 2016, 5, e19.

- Sun, Y.; Zhang, Y.; Gwizdka, J.; Trace, C.B. Consumer evaluation of the quality of online health information: Systematic literature review of relevant criteria and indicators. J. Med. Internet Res. 2019, 21, e12522. [Google Scholar] [CrossRef] [PubMed]

- Yoon, T.J. Quality information disclosure and patient reallocation in the healthcare industry: Evidence from cardiac surgery report cards. Mark. Sci. 2019, 39, 636–662. [Google Scholar] [CrossRef]

- Wang, G.; Li, J.; Hopp, W.J.; Fazzalari, F.L.; Bolling, S.F. Using patient-specific quality information to unlock hidden healthcare capabilities. Manuf. Serv. Oper. Manag. 2019, 21, 582–601. [Google Scholar] [CrossRef] [Green Version]

- Laugesen, J.; Hassanein, K.; Yuan, Y. The impact of internet health information on patient compliance: A research model and an empirical study. J. Med. Internet Res. 2015, 17, e143. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Tong, Y.; Tan, C.-H.; Teo, H.-H. Direct and indirect information system use: A multimethod exploration of social power antecedents in healthcare. Inf. Syst. Res. 2017, 28, 690–710. [Google Scholar] [CrossRef]

- Diviani, N.; van den Putte, B.; Giani, S.; van Weert, J.C. Low health literacy and evaluation of online health information: A systematic review of the literature. J. Med. Internet Res. 2015, 17, e112. [Google Scholar] [CrossRef] [Green Version]

- Chen, L.; Baird, A.; Straub, D. A linguistic signaling model of social support exchange in online health communities. Decis. Support Syst. 2020, 130, 113233. [Google Scholar] [CrossRef]

- Zhang, X.; Guo, X.; Lai, K.-h.; Yi, W. How does online interactional unfairness matter for patient–doctor relationship quality in online health consultation? The contingencies of professional seniority and disease severity. Eur. J. Inf. Syst. 2019, 28, 336–354. [Google Scholar] [CrossRef]

- Cousin, G.; Schmid Mast, M.; Roter, D.L.; Hall, J.A. Concordance between physician communication style and patient attitudes predicts patient satisfaction. Patient Educ. Couns. 2012, 87, 193–197. [Google Scholar] [CrossRef]

- Banerjee, A.; Sanyal, D. Dynamics of doctor-patient relationship: A cross-sectional study on concordance, trust, and patient enablement. J. Fam. Commu. Med. 2012, 19, 12–19. [Google Scholar] [CrossRef] [Green Version]

- Audrain-Pontevia, A.-F.; Menvielle, L.; Ertz, M. Effects of three antecedents of patient compliance for users of peer-to-peer online health communities: Cross-sectional study. J. Med. Internet Res. 2019, 21, e14006. [Google Scholar] [CrossRef]

- Chandwani, K.D.; Zhao, F.; Morrow, G.R.; Deshields, T.L.; Minasian, L.M.; Manola, J.; Fisch, M.J. Lack of patient-clinician concordance in cancer patients: Its relation with patient variables. J. Pain Symptom Manag. 2017, 53, 988–998.

- Gross, K.; Schindler, C.; Grize, L.; Späth, A.; Schwind, B.; Zemp, E. Patient–physician concordance and discordance in gynecology: Do physicians identify patients’ reasons for visit and do patients understand physicians’ actions? Patient Educ. Couns. 2013, 92, 45–52.

- Kee, J.W.Y.; Khoo, H.S.; Lim, I.; Koh, M.Y.H. Communication skills in patient-doctor interactions: Learning from patient complaints. Health Prof. Educ. 2018, 4, 97–106.

- Spencer, K.L. Transforming patient compliance research in an era of biomedicalization. J. Health Soc. Behav. 2018, 59, 170–184.

- Tan, S.S.-L.; Goonawardene, N. Internet health information seeking and the patient-physician relationship: A systematic review. J. Med. Internet Res. 2017, 19, e9.

- Thornton, R.L.J.; Powe, N.R.; Roter, D.; Cooper, L.A. Patient–physician social concordance, medical visit communication and patients’ perceptions of health care quality. Patient Educ. Couns. 2011, 85, e201–e208.

- Zanini, C.; Sarzi-Puttini, P.; Atzeni, F.; Di Franco, M.; Rubinelli, S. Doctors’ insights into the patient perspective: A qualitative study in the field of chronic pain. Biomed. Res. Int. 2014, 2014, 514230.

- Schubart, J.R.; Toran, L.; Whitehead, M.; Levi, B.H.; Green, M.J. Informed decision making in advance care planning: Concordance of patient self-reported diagnosis with physician diagnosis. Support. Care Cancer 2013, 21, 637–641.

- Shin, D.W.; Kim, S.Y.; Cho, J.; Sanson-Fisher, R.W.; Guallar, E.; Chai, G.Y.; Kim, H.-S.; Park, B.R.; Park, E.-C.; Park, J.-H. Discordance in perceived needs between patients and physicians in oncology practice: A nationwide survey in Korea. J. Clin. Oncol. 2011, 29, 4424–4429.

- Chen, S.; Guo, X.; Wu, T.; Ju, X. Exploring the online doctor-patient interaction on patient satisfaction based on text mining and empirical analysis. Inf. Process. Manag. 2020, 57, 102253.

- Zhou, J.; Zuo, M.; Ye, C. Understanding the factors influencing health professionals’ online voluntary behaviors: Evidence from YiXinLi, a Chinese online health community for mental health. Int. J. Med. Inform. 2019, 130, 103939.

- Hampshire, K.; Hamill, H.; Mariwah, S.; Mwanga, J.; Amoako-Sakyi, D. The application of signalling theory to health-related trust problems: The example of herbal clinics in Ghana and Tanzania. Soc. Sci. Med. 2017, 188, 109–118.

- Li, J.; Wu, H.; Deng, Z.; Lu, N.; Evans, R.; Xia, C. How professional capital and team heterogeneity affect the demands of online team-based medical service. BMC Med. Inform. Decis. Mak. 2019, 19, 119.

- Greenwood, B.N.; Agarwal, R.; Agarwal, R.; Gopal, A. The role of individual and organizational expertise in the adoption of new practices. Organ. Sci. 2019, 30, 191–213.

- Özer, Ö.; Subramanian, U.; Wang, Y. Information sharing, advice provision, or delegation: What leads to higher trust and trustworthiness? Manag. Sci. 2018, 64, 474–493.

- Gao, G.G.; McCullough, J.S.; Agarwal, R.; Jha, A.K. A changing landscape of physician quality reporting: Analysis of patients’ online ratings of their physicians over a 5-year period. J. Med. Internet Res. 2012, 14, e38.

- Lankton, N.K.; McKnight, D.H.; Wright, R.T.; Thatcher, J.B. Research note—Using expectation disconfirmation theory and polynomial modeling to understand trust in technology. Inf. Syst. Res. 2016, 27, 197–213.

- Yi, M.Y.; Yoon, J.J.; Davis, J.M.; Lee, T. Untangling the antecedents of initial trust in Web-based health information: The roles of argument quality, source expertise, and user perceptions of information quality and risk. Decis. Support Syst. 2013, 55, 284–295.

- Han, X.; Qu, J.; Zhang, T. Exploring the impact of review valence, disease risk, and trust on patient choice based on online physician reviews. Telemat. Inform. 2019, 45, 101276.

- Xiao, N.; Sharman, R.; Rao, H.R.; Upadhyaya, S. Factors influencing online health information search: An empirical analysis of a national cancer-related survey. Decis. Support Syst. 2014, 57, 417–427.

- Shan, W.; Wang, Y.; Luan, J.; Tang, P. The influence of physician information on patients’ choice of physician in mHealth services using China’s Chunyu Doctor app: Eye-tracking and questionnaire study. JMIR Mhealth Uhealth 2019, 7, e15544.

- Reimann, S.; Strech, D. The representation of patient experience and satisfaction in physician rating sites. A criteria-based analysis of English- and German-language sites. BMC Health Serv. Res. 2010, 10, 332.

- Chen, Q.; Yan, X.; Zhang, T. The Impact of Physician’s Login Behavior on Patients’ Search and Decision in OHCs. In Proceedings of the Seventh International Conference for Smart Health, Shenzhen, China, 1–2 July 2019; Chen, H., Zeng, D., Yan, X., Xing, C., Eds.; Smart Health. Springer: Berlin/Heidelberg, Germany, 2019; pp. 155–169.

- Luo, P.; Chen, K.; Wu, C.; Li, Y. Exploring the social influence of multichannel access in an online health community. J. Assoc. Inf. Sci. Technol. 2018, 69, 98–109.

- Yang, M.; Diao, Y.; Kiang, M.Y. Physicians’ Sales of Expert Service Online: The Role of Physician Trust and Price. In Proceedings of the Twenty-Second Pacific Asia Conference on Information Systems (PACIS 18), Association for Information Systems, Yokohama, Japan, 26–30 June 2018; pp. 1–14.

- Liu, G.; Zhou, L.; Wu, J. What Affects Patients’ Online Decisions: An Empirical Study of Online Appointment Service Based on Text Mining. In Proceedings of the 6th International Conference for Smart Health, Wuhan, China, 1–3 July 2018; Chen, H., Fang, Q., Zeng, D., Wu, J., Eds.; Smart Health. Springer: Berlin/Heidelberg, Germany, 2018; pp. 204–210.

- Pang, P.C.-I.; Liu, L. Why Do Consumers Review Doctors Online? Topic Modeling Analysis of Positive and Negative Reviews on an Online Health Community in China. In Proceedings of the 53rd Hawaii International Conference on System Sciences, Association for Information Systems, Maui, HI, USA, 7–10 January 2020; pp. 705–714.

- Chen, Q.; Yan, X.; Zhang, T. Converting visitors of physicians’ personal websites to customers in online health communities: Longitudinal study. J. Med. Internet Res. 2020, 22, e20623.

- Noteboom, C.; Al-Ramahi, M. What Are the Gaps in Mobile Patient Portal? Mining Users Feedback Using Topic Modeling. In Proceedings of the 51st Hawaii International Conference on System Sciences, Association for Information Systems, Hilton Waikoloa Village, HI, USA, 3–6 January 2018; pp. 564–573.

- Emmert, M.; Meier, F.; Pisch, F.; Sander, U. Physician choice making and characteristics associated with using physician-rating websites: Cross-sectional study. J. Med. Internet Res. 2013, 15, e187.

- Storino, A.; Castillo-Angeles, M.; Watkins, A.A.; Vargas, C.; Mancias, J.D.; Bullock, A.; Demirjian, A.; Moser, A.J.; Kent, T.S. Assessing the accuracy and readability of online health information for patients with pancreatic cancer. JAMA Surg. 2016, 151, 831–837.

- Shah, A.M.; Yan, X.; Shah, S.A.A.; Mamirkulova, G. Mining patient opinion to evaluate the service quality in healthcare: A deep-learning approach. J. Ambient. Intell. Humaniz. Comput. 2020, 11, 2925–2942.

- Greaves, F.; Pape, U.J.; King, D.; Darzi, A.; Majeed, A.; Wachter, R.M.; Millett, C. Associations between internet-based patient ratings and conventional surveys of patient experience in the English NHS: An observational study. BMJ Qual. Saf. 2012, 21, 600–605.

- Nieto-García, M.; Muñoz-Gallego, P.A.; González-Benito, Ó. Tourists’ willingness to pay for an accommodation: The effect of eWOM and internal reference price. Int. J. Hosp. Manag. 2017, 62, 67–77.

- Narwal, P.; Nayak, J.K. How consumers form product quality perceptions in absence of fixed posted prices: Interaction of product cues with seller reputation and third-party reviews. J. Retail. Consum. Serv. 2020, 52, 101924.

- Wald, J.T.; Timimi, F.K.; Kotsenas, A.L. Managing physicians’ medical brand. Mayo Clin. Proc. 2017, 92, 685–686.

- Guo, L.; Jin, B.; Yao, C.; Yang, H.; Huang, D.; Wang, F. Which doctor to trust: A recommender system for identifying the right doctors. J. Med. Internet Res. 2016, 18, e186.

- Strech, D. Ethical principles for physician rating sites. J. Med. Internet Res. 2011, 13, e113.

- Liu, Q.; Zheng, Z.; Zheng, J.; Chen, Q.; Liu, G.; Chen, S.; Chu, B.; Zhu, H.; Akinwunmi, B.; Huang, J.; et al. Health communication through news media during the early stage of the COVID-19 outbreak in China: Digital topic modeling approach. J. Med. Internet Res. 2020, 22, e19118.

- Greaves, F.; Ramirez-Cano, D.; Millett, C.; Darzi, A.; Donaldson, L. Harnessing the cloud of patient experience: Using social media to detect poor quality healthcare. BMJ Qual. Saf. 2013, 22, 251–255.

- Shaffer, V.A.; Hulsey, L.; Zikmund-Fisher, B.J. The effects of process-focused versus experience-focused narratives in a breast cancer treatment decision task. Patient Educ. Couns. 2013, 93, 255–264.

- Drewniak, D.; Glässel, A.; Hodel, M.; Biller-Andorno, N. Risks and benefits of web-based patient narratives: Systematic review. J. Med. Internet Res. 2020, 22, e15772.

- Afzal, M.; Ali, S.I.; Ali, R.; Hussain, M.; Ali, T.; Khan, W.A.; Amin, M.B.; Kang, B.H.; Lee, S. Personalization of wellness recommendations using contextual interpretation. Expert Syst. Appl. 2018, 96, 506–521.

- Krishnamurthy, M.; Marcinek, P.; Malik, K.M.; Afzal, M. Representing social network patient data as evidence-based knowledge to support decision making in disease progression for comorbidities. IEEE Access 2018, 6, 12951–12965.

- Stacey, D.; Légaré, F.; Col, N.F.; Bennett, C.L.; Barry, M.J.; Eden, K.B.; Holmes-Rovner, M.; Llewellyn-Thomas, H.; Lyddiatt, A.; Thomson, R.; et al. Decision aids for people facing health treatment or screening decisions. Cochrane Database Syst. Rev. 2014, 28, CD001431.

| Search Parameter | Description |
|---|---|
| Electronic databases | PubMed, EMBASE, Google Scholar, Scopus, Web of Science (Clarivate Analytics), Science Direct, Emerald, Taylor & Francis, Springer, Sage, ACM, Wiley, IEEE |
| Searched items | Journal articles and conference proceedings |
| Keywords used | Health rating platforms, physician rating websites, review sites, online reviews, online physician reviews, online ratings, patient online reviews, healthcare quality, e-health, digital health |
| Search applied to | Full text, to locate publications within the scope of our search and to ensure that we did not overlook those that did not include our search keywords in their titles or abstracts |
| Language | English |
| Study period | January 2010–December 2020 |
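The search parameters above can be expressed as a small query-and-filter routine. This is a minimal sketch, not the authors' tooling: the review does not report how the keywords were combined, so joining the quoted phrases with OR is an assumption, as are the hypothetical `Record` fields and the `build_query` and `in_scope` helpers.

```python
from dataclasses import dataclass
from datetime import date

# Keywords as listed in the search-strategy table.
KEYWORDS = [
    "health rating platforms", "physician rating websites", "review sites",
    "online reviews", "online physician reviews", "online ratings",
    "patient online reviews", "healthcare quality", "e-health", "digital health",
]
START, END = date(2010, 1, 1), date(2020, 12, 31)  # study period
ITEM_TYPES = {"journal", "conference"}              # searched items


def build_query(keywords: list[str]) -> str:
    """Quote each phrase and join with OR (assumed combination rule)."""
    return " OR ".join(f'"{k}"' for k in keywords)


@dataclass
class Record:
    """Hypothetical bibliographic record returned by a database search."""
    title: str
    language: str
    published: date
    item_type: str


def in_scope(rec: Record) -> bool:
    """Keep English journal or conference items published in the study period."""
    return (rec.language == "English"
            and rec.item_type in ITEM_TYPES
            and START <= rec.published <= END)


query = build_query(KEYWORDS)
```

Because the search was applied to full text, a real database interface would match `query` against full-text fields rather than titles or abstracts only; how each vendor exposes that option differs and is not modeled here.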

| Database | Retrieved | Included |
|---|---|---|
| PubMed | 75 | 11 |
| Science Direct | 156 | 10 |
| Emerald | 189 | 2 |
| Taylor & Francis | 195 | 8 |
| Springer | 178 | 7 |
| Sage | 165 | 5 |
| ACM | 158 | 4 |
| Wiley | 149 | 3 |
| IEEE | 16 | 2 |
| Total | 1281 | 52 |
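The database counts can be checked, and each source's yield compared, with a few lines of Python. The figures are copied from the retrieval table; the `totals` and `inclusion_yield` helper names are illustrative only.

```python
# Retrieved and included counts per database, copied from the retrieval table.
RETRIEVAL = {
    "PubMed": (75, 11),
    "Science Direct": (156, 10),
    "Emerald": (189, 2),
    "Taylor & Francis": (195, 8),
    "Springer": (178, 7),
    "Sage": (165, 5),
    "ACM": (158, 4),
    "Wiley": (149, 3),
    "IEEE": (16, 2),
}


def totals(table: dict[str, tuple[int, int]]) -> tuple[int, int]:
    """Sum the retrieved and included columns."""
    retrieved = sum(ret for ret, _ in table.values())
    included = sum(inc for _, inc in table.values())
    return retrieved, included


def inclusion_yield(table: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Percentage of each database's retrieved records that were finally included."""
    return {db: round(100 * inc / ret, 2) for db, (ret, inc) in table.items()}


retrieved, included = totals(RETRIEVAL)
print(retrieved, included)                   # 1281 52
print(round(100 * included / retrieved, 2))  # 4.06 (overall yield, %)
```

The column sums match the reported totals, and the per-database yields range from about 14.7% for PubMed down to about 1.1% for Emerald.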

| Inclusion Criteria | Exclusion Criteria |
|---|---|
| Studies that focused on PRWs. | Studies that were not written in English. |
| Studies that reported different signaling mechanisms in healthcare. | Papers other than journal articles or conference proceedings. |
| Studies that analyzed patients’ choice or the patient decision-making process. | Duplicate or near-duplicate studies (only the most comprehensive and current version was retained). |
| Studies that analyzed patients’ opinions expressed as online physician reviews. | Studies without any practical, theoretical, or statistical evidence. |
| Studies with clear aims/objectives. | |
| Studies that addressed and described the research context properly. | |
| Studies whose findings were in line with our research purpose. | |
| Studies that were peer-reviewed and written in English. | |
| Studies that were qualitative, quantitative, or mixed-methods in nature. | |
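A screening pass over candidate records might apply the exclusion criteria as follows. This is a hypothetical sketch: the `Candidate` fields are invented for illustration, matching duplicates by normalized title is an assumption, and page count stands in (again by assumption) for "most comprehensive" when choosing which version to keep.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """Hypothetical screening record; field names are illustrative only."""
    title: str
    language: str
    item_type: str       # "journal", "conference", or anything else
    year: int
    pages: int           # assumed proxy for "most comprehensive"
    has_evidence: bool   # practical, theoretical, or statistical evidence


def norm_title(title: str) -> str:
    """Lower-case, drop punctuation, and collapse spaces so near-identical titles match."""
    stripped = re.sub(r"[^a-z0-9\s]", "", title.lower())
    return re.sub(r"\s+", " ", stripped).strip()


def screen(candidates: list[Candidate]) -> list[Candidate]:
    # Exclusion criteria: non-English, other item types, no supporting evidence.
    eligible = [c for c in candidates
                if c.language == "English"
                and c.item_type in {"journal", "conference"}
                and c.has_evidence]
    # Duplicates: keep the longest version, breaking ties by the most recent year.
    best: dict[str, Candidate] = {}
    for c in eligible:
        key = norm_title(c.title)
        if key not in best or (c.pages, c.year) > (best[key].pages, best[key].year):
            best[key] = c
    return list(best.values())
```

A conference paper later extended into a journal article would collapse to the journal version under this rule, which mirrors the stated intent of keeping the most comprehensive and current version.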

| Criteria | Response Score | Score Obtained |
|---|---|---|
| Is the study aim/objective clear? | Yes = 1; moderately = 0.5; no = 0 | 31 studies, 88% |
| Is the research context dealt with well? | Yes = 1; moderately = 0.5; no = 0 | 21 studies, 92% |
| Based on the research findings, what is the quality acceptance rate? | >80% = 1; under 20% = 0; between = 0.5 | |
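The scoring rubric can be sketched as a function of the three answers. Criteria 1 and 2 follow the yes/moderately/no scale and criterion 3 the acceptance-rate thresholds; expressing the total as a share of the 3-point maximum is an assumption, since the review does not state exactly how the rubric maps onto the percentage bands used for the quality scores. The helper names are hypothetical.

```python
# Points for criteria 1 and 2 (aim/objective clarity, research context).
ANSWER_POINTS = {"yes": 1.0, "moderately": 0.5, "no": 0.0}
MAX_POINTS = 3.0


def findings_points(acceptance_pct: float) -> float:
    """Criterion 3: above 80% scores 1, under 20% scores 0, anything between 0.5."""
    if acceptance_pct > 80:
        return 1.0
    if acceptance_pct < 20:
        return 0.0
    return 0.5


def quality_percentage(aim: str, context: str, acceptance_pct: float) -> float:
    """Total rubric points as a percentage of the maximum (assumed mapping)."""
    total = ANSWER_POINTS[aim] + ANSWER_POINTS[context] + findings_points(acceptance_pct)
    return 100 * total / MAX_POINTS
```

Because every criterion moves in half-point steps, this mapping can only produce percentages in multiples of 100/6 (about 16.7%).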

| Quality Score | Poor (<26%) | Fair (26–45%) | Good (46–65%) | Very Good (66–85%) | Excellent (>85%) | Total |
|---|---|---|---|---|---|---|
| Number of articles | 3 | 1 | 11 | 17 | 20 | 52 |
| Percentage of articles | 5.77 | 1.92 | 21.15 | 32.69 | 38.46 | 100 |
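The band boundaries and the article distribution can be checked in the same way. The sketch below takes Excellent to cover scores above 85%, treats the bands as half-open intervals so that non-integer scores such as 45.5% still fall into a band (an assumption, since only integer limits are given), and recomputes each band's share of the 52 articles. `quality_band` and `distribution` are hypothetical helper names.

```python
def quality_band(pct: float) -> str:
    """Map a quality percentage to a band (half-open intervals are assumed)."""
    if pct < 26:
        return "Poor"
    if pct < 46:
        return "Fair"
    if pct < 66:
        return "Good"
    if pct <= 85:
        return "Very Good"
    return "Excellent"  # assumed to mean above 85%


# Article counts per band, copied from the quality-score table.
COUNTS = {"Poor": 3, "Fair": 1, "Good": 11, "Very Good": 17, "Excellent": 20}


def distribution(counts: dict[str, int]) -> tuple[int, dict[str, float]]:
    """Return the total and each band's share in percent, rounded to 2 decimals."""
    n = sum(counts.values())
    return n, {band: round(100 * k / n, 2) for band, k in counts.items()}


n, shares = distribution(COUNTS)
print(n)  # 52
print(shares)
```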

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Shah, A.M.; Muhammad, W.; Lee, K.; Naqvi, R.A. Examining Different Factors in Web-Based Patients’ Decision-Making Process: Systematic Review on Digital Platforms for Clinical Decision Support System. Int. J. Environ. Res. Public Health 2021, 18, 11226. https://doi.org/10.3390/ijerph182111226

Shah AM, Muhammad W, Lee K, Naqvi RA. Examining Different Factors in Web-Based Patients’ Decision-Making Process: Systematic Review on Digital Platforms for Clinical Decision Support System. International Journal of Environmental Research and Public Health. 2021; 18(21):11226. https://doi.org/10.3390/ijerph182111226

Shah, Adnan Muhammad, Wazir Muhammad, Kangyoon Lee, and Rizwan Ali Naqvi. 2021. "Examining Different Factors in Web-Based Patients’ Decision-Making Process: Systematic Review on Digital Platforms for Clinical Decision Support System" International Journal of Environmental Research and Public Health 18, no. 21: 11226. https://doi.org/10.3390/ijerph182111226