A Hybrid Convolutional Neural Network Model for Diagnosis of COVID-19 Using Chest X-ray Images

Abstract

1. Introduction

2. Materials and Methods

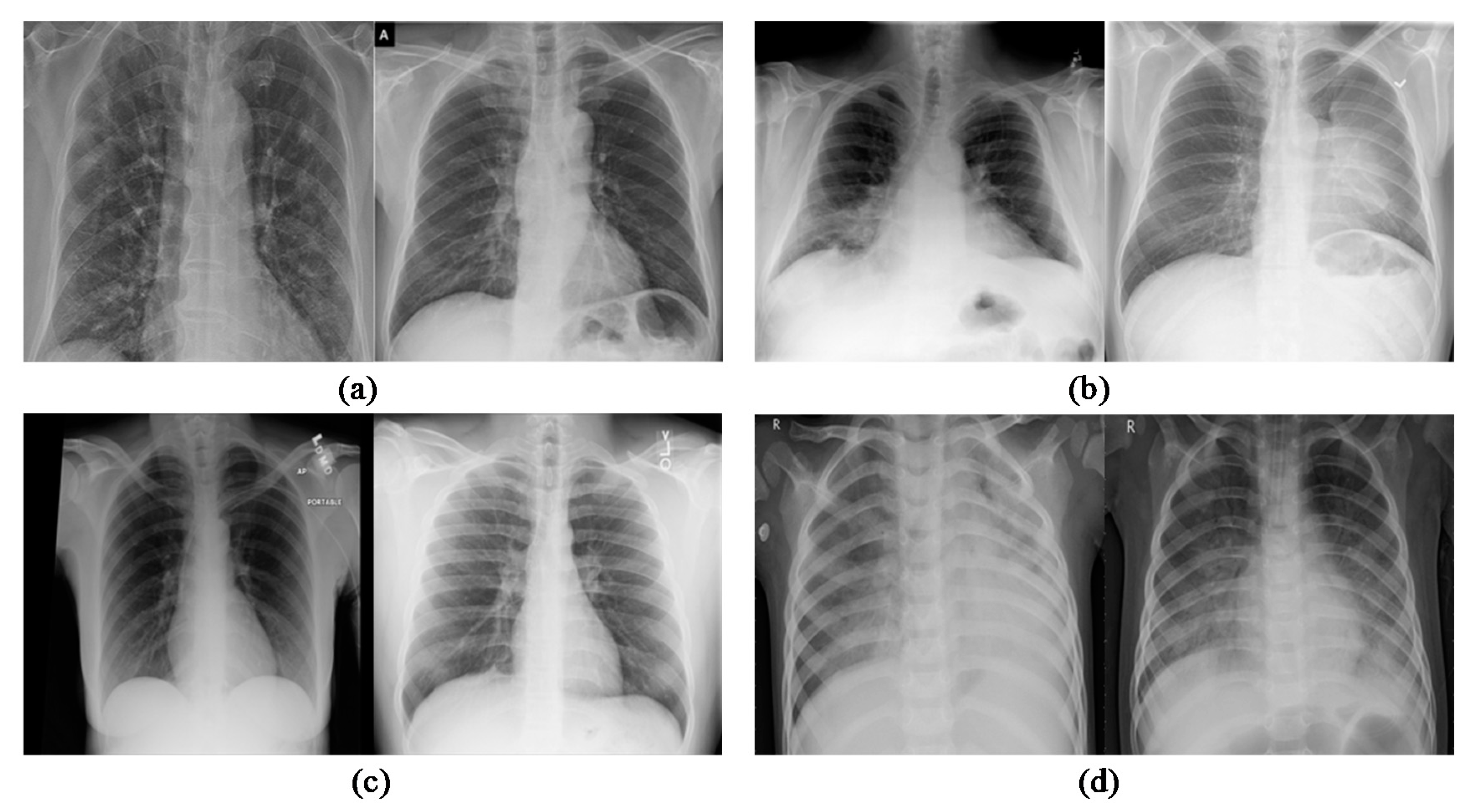

2.1. Dataset for Experiment

2.2. Methodological Contribution

2.3. Proposed C19D-Net Model

2.3.1. Steps

- Step 1: Pre-processing. All chest X-ray (XR) images are collected into one dataset and resized to a fixed 224 × 224 × 3 pixels for use in the proposed deep learning pipeline.

- Step 2: Training and Validation. The images are divided in an 80–20 ratio: 80% of the data is used for training and the remaining 20% for testing. Inception V4 produces features from its average pooling layer for each input image.

- Step 3: Classification. Features are extracted from the chest XR images with Inception V4, and a multiclass SVM (MSVM) classifier is then applied to them. The proposed C19D-Net model classifies chest XR images into four classes, “normal”, “viral pneumonia”, “COVID-19” and “bacterial pneumonia”, with high precision compared with the other models and methods discussed in the following sections.
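
Steps 1 and 2 can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the text says only that images are scaled to 224 × 224 × 3 and divided 80–20, so the nearest-neighbour interpolation, the per-class (stratified) split, the fixed seed, and the helper names `resize_nearest` and `stratified_split` are all hypothetical choices. The Inception V4 feature extraction and the MSVM classifier of Step 3 are not shown.

```python
import random
from collections import defaultdict

TARGET_SIZE = (224, 224)  # height and width from Step 1; the 3 colour channels are kept


def resize_nearest(image, out_h, out_w):
    """Nearest-neighbour resize of an image stored as rows of (R, G, B) tuples.

    Assumption: the paper does not name an interpolation method.
    """
    in_h, in_w = len(image), len(image[0])
    return [
        [image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def stratified_split(labels, train_fraction=0.8, seed=0):
    """Return (train_indices, test_indices), applying the ratio inside each class.

    Assumption: the paper states the 80-20 ratio but not whether it is stratified.
    """
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    rng = random.Random(seed)
    train, test = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        cut = round(len(indices) * train_fraction)
        train.extend(indices[:cut])
        test.extend(indices[cut:])
    return sorted(train), sorted(test)


# Per-class image counts as listed in the 4-class dataset table of this paper.
CLASS_COUNTS = {
    "COVID-19": 400,
    "bacterial pneumonia": 450,
    "viral pneumonia": 450,
    "normal": 600,
}
labels = [name for name, count in CLASS_COUNTS.items() for _ in range(count)]
train_idx, test_idx = stratified_split(labels)

# A 2 x 2 toy image stands in for a real chest X-ray.
tiny = [[(0, 0, 0), (255, 255, 255)], [(255, 0, 0), (0, 0, 255)]]
resized = resize_nearest(tiny, *TARGET_SIZE)

print(len(train_idx), len(test_idx))
print(len(resized), len(resized[0]), len(resized[0][0]))
```

With the 1900 images listed in the dataset table, an 80–20 split of this kind yields 1520 training and 380 test images.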

2.3.2. Architecture

2.3.3. Training of Proposed Model (C19D-Net)

2.4. Statistical Analysis

3. Results

3.1. Metrics Evaluation

3.1.1. 4-Class Evaluation Metrics Comparison

3.1.2. 3-Class and 2-Class Evaluation Metrics Comparison

3.2. Experimental Result

4. Discussion

5. Conclusions and Future Scope

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Deeks, J.J.; Dinnes, J.; Takwoingi, Y.; Davenport, C.; Spijker, R.; Phillips, S.T.; Adriano, A.; Beese, S.; Dretzke, J.; Ruffano, L.F.; et al. Antibody tests for identification of current and past infection with SARS-CoV-2. Cochrane Database Syst. Rev. 2020, 6, 1–306.

2. Dastider, A.G.; Sadik, F.; Fattah, S.A. An integrated autoencoder-based hybrid CNN-LSTM model for COVID-19 severity prediction from lung ultrasound. Comput. Biol. Med. 2021, 132, 104296.

3. Serte, S.; Demirel, H. Deep learning for diagnosis of COVID-19 using 3D CT scans. Comput. Biol. Med. 2021, 132, 104306.

4. Ozyurt, F.; Tuncer, T.; Subasi, A. An automated COVID-19 detection based on fused dynamic exemplar pyramid feature extraction and hybrid feature selection using deep learning. Comput. Biol. Med. 2021, 132, 104356.

5. Gu, J.; Yang, L.; Li, T.; Liu, Y.; Zhang, J.; Ning, K.; Su, D. Temporal relationship between serial RT-PCR results and serial chest CT imaging, and serial CT changes in coronavirus 2019 (COVID-19) pneumonia: A descriptive study of 155 cases in China. Eur. Radiol. 2021, 31, 1175–1184.

6. Elkorany, A.S.; Alsharkawy, Z.F. COVIDetection-Net: A tailored COVID-19 detection from chest radiography images using deep learning. Optik 2021, 231, 166405.

7. Li, J.; Zhao, G.; Tao, Y.; Zhai, P.; Chen, H.; He, H.; Cai, T. Multi-task contrastive learning for automatic CT and X-ray diagnosis of COVID-19. Pattern Recognit. 2021, 114, 107848.

8. Das, A.K.; Kalam, S.; Kumar, C.; Sinha, D. TLCoV—An automated Covid-19 screening model using Transfer Learning from chest X-ray images. Chaos Solitons Fractals 2021, 144, 110713.

9. Wu, X.; Chen, C.; Zhong, M.; Wang, J.; Shi, J. COVID-AL: The diagnosis of COVID-19 with deep active learning. Med. Image Anal. 2021, 68, 101913.

10. Yang, D.; Xu, Z.; Li, W.; Myronenko, A.; Roth, H.R.; Harmon, S.; Xu, S.; Turkbey, B.; Turkbey, E.; Wang, X.; et al. Federated semi-supervised learning for COVID region segmentation in chest CT using multi-national data from China, Italy, Japan. Med. Image Anal. 2021, 70, 101992.

11. Nour, M.; Cömert, Z.; Polat, K. A Novel Medical Diagnosis model for COVID-19 infection detection based on Deep Features and Bayesian Optimization. Appl. Soft Comput. 2020, 97, 106580.

12. Demir, F. DeepCoroNet: A deep LSTM approach for automated detection of COVID-19 cases from chest X-ray images. Appl. Soft Comput. 2021, 103, 107160.

13. Brunese, L.; Mercaldo, F.; Reginelli, A.; Santone, A. Explainable Deep Learning for Pulmonary Disease and Coronavirus COVID-19 Detection from X-rays. Comput. Methods Programs Biomed. 2020, 196, 105608.

14. Das, D.; Santosh, K.C.; Pal, U. Truncated inception net: COVID-19 outbreak screening using chest X-rays. Phys. Eng. Sci. Med. 2020, 43, 915–925.

15. Hussain, E.; Hasan, M.; Rahman, A.; Lee, I.; Tamanna, T.; Parvez, M.Z. CoroDet: A deep learning based classification for COVID-19 detection using chest X-ray images. Chaos Solitons Fractals 2020, 142, 110495.

16. Apostolopoulos, I.D.; Mpesiana, T.A. Covid-19: Automatic detection from X-ray images utilizing transfer learning with convolutional neural networks. Phys. Eng. Sci. Med. 2020, 43, 635–640.

17. Kumar, R.; Arora, R.; Bansal, V.; Sahayasheela, V.J.; Buckchash, H.; Imran, J.; Narayanan, N.; Pandian, G.N.; Raman, B. Accurate Prediction of COVID-19 using Chest X-Ray Images through Deep Feature Learning model with SMOTE and Machine Learning Classifiers. MedRxiv 2020.

18. Wu, X.; Hui, H.; Niu, M.; Li, L.; Wang, L.; He, B.; Yang, X.; Li, L.; Li, H.; Tian, J.; et al. Deep learning-based multi-view fusion model for screening 2019 novel coronavirus pneumonia: A multicentre study. Eur. J. Radiol. 2020, 128, 109041.

19. Quan, H.; Xu, X.; Zheng, T.; Li, Z.; Zhao, M.; Cui, X. DenseCapsNet: Detection of COVID-19 from X-ray images using a capsule neural network. Comput. Biol. Med. 2021, 133, 104399.

20. Cruz, J.F.H.S. An ensemble approach for multi-stage transfer learning models for COVID-19 detection from chest CT scans. Intell. Med. 2021, 5, 100027.

21. Xu, Y.; Lam, H.K.; Jia, G. MANet: A two-stage deep learning method for classification of COVID-19 from Chest X-ray images. Neuro Comput. 2021, 443, 96–105.

22. Verma, P.; Tripathi, V.; Pant, B. Comparison of different optimizers implemented on the deep learning architectures for COVID-19 classification. Mater. Today Proc. 2021, 46, 11098–11102.

23. Rahimzadeh, M.; Attar, A. A modified deep convolutional neural network for detecting COVID-19 and pneumonia from chest X-ray images based on the concatenation of Xception and ResNet50V2. Inform. Med. Unlocked 2020, 19, 100360.

24. Hemdan, E.E.D.; Shouman, M.A.; Karar, M.E. COVIDX-Net: A Framework of Deep Learning Classifiers to Diagnose COVID-19 in X-Ray Images. arXiv 2020, arXiv:2003.11055v1.

25. Abbas, A.; Abdelsamea, M.M.; Gaber, M.M. DeTrac: Transfer Learning of Class Decomposed Medical Images in Convolutional Neural Networks. IEEE Access 2020, 8, 74901–74913.

26. Rohila, V.S.; Gupta, N.; Kaul, A.; Sharma, D.K. Deep learning assisted COVID-19 detection using full CT-scans. Internet Things 2021, 14, 100377.

27. Latif, S.; Usman, M.; Manzoor, S.; Iqbal, W.; Qadir, J.; Tyson, G.; Castro, I.; Razi, A.; Boulos, M.N.K.; Weller, A.; et al. Leveraging Data Science to Combat COVID-19: A Comprehensive Review. IEEE Trans. Artif. Intell. 2020, 1, 85–103.

28. Khalifa, N.E.M.; Taha, M.H.N.; Hassanien, A.E.; Elghamrawy, S. Detection of Coronavirus (COVID-19) Associated Pneumonia based on Generative Adversarial Networks and a Fine-Tuned Deep Transfer Learning Model using Chest X-ray Dataset. arXiv 2020, arXiv:2004.01184.

29. Xu, X.; Jiang, X.; Ma, C.; Du, P.; Li, X.; Lv, S.; Yu, L.; Ni, Q.; Chen, Y.; Su, J.; et al. A Deep Learning System to Screen Novel Coronavirus Disease 2019 Pneumonia. Eng. J. 2020, 6, 1122–1129.

30. Panahi, A.H.; Rafiei, A.; Rezaee, A. FCOD: Fast COVID-19 Detector based on deep learning techniques. Inform. Med. Unlocked 2021, 22, 100506.

31. Ibrahim, A.U.; Ozsoz, M.; Serte, S.; Al-Turjman, F.; Yakoi, P.S. Pneumonia Classification Using Deep Learning from Chest X-ray Images during COVID-19. Cogn. Comput. 2021, 1–13.

32. Singh, D.; Kumar, V.; Kaur, M. Classification of COVID-19 patients from chest CT images using multi-objective differential evolution–based convolutional neural networks. Eur. J. Clin. Microbiol. Infect. Dis. 2020, 39, 1379–1389.

33. Fathollahi-Fard, A.M.; Hajiaghaei-Keshteli, M.; Tavakkoli-Moghaddam, R.; Smith, N.R. Bi-level programming for home health care supply chain considering outsourcing. J. Ind. Inf. Integr. 2021, 100246.

34. Fathollahi-Fard, A.M.; Woodward, L.; Akhrif, O. Sustainable distributed permutation flow-shop scheduling model based on a triple bottom line concept. J. Ind. Inf. Integr. 2021, 24, 100233.

35. Fathollahi-Fard, A.M.; Hajiaghaei-Keshteli, M.; Mirjalili, S. A set of efficient heuristics for a home healthcare problem. Neural Comput. Appl. 2019, 32, 6185–6205.

36. Bahadori-Chinibelagh, S.; Fathollahi-Fard, A.M.; Hajiaghaei-Keshteli, M. Two Constructive Algorithms to Address a Multi-Depot Home Healthcare Routing Problem. IETE J. Res. 2019, 1–7.

37. Shi, Y.; Zhou, Y.; Ye, W.; Zhao, Q.Q. A relative robust optimization for a vehicle routing problem with time-window and synchronized visits considering greenhouse gas emissions. J. Clean. Prod. 2020, 275, 124112.

38. Dauletova, M.; Hafsan, H.; Mahhengam, N.; Zekiy, A.O.; Ahmadi, M.; Siahmansouri, H. Mesenchymal stem cell alongside exosomes as a novel cell-based therapy for COVID-19: A review study. Clin. Immunol. 2021, 226, 108712.

39. Cohen, J.P.; Morrison, P.; Dao, L. COVID-19 Image Data Collection. 2020. Available online: https://github.com/ieee8023/covid-chestxray-dataset (accessed on 8 September 2021).

40. Kaggle, P.M. Chest X-ray Images (pneumonia) Dataset. 2020. Available online: https://www.kaggle.com/paultimothymooney/chest-xray-pneumonia (accessed on 8 September 2021).

41. Zhao, X.; Liu, L.; Qi, S.; Teng, Y.; Li, J.; Qian, W. Agile convolutional neural network for pulmonary nodule classification using CT images. Int. J. Comput. Assist Radiol. Surg. 2018, 13, 585–595.

42. Panwar, H.; Gupta, P.; Siddiqui, M.K.; Morales-Menendez, R.; Singh, V. Application of deep learning for fast detection of COVID-19 in X-Rays using nCOVnet. Chaos Solitons Fractals 2020, 138, 109944.

43. Wang, S.; Kang, B.; Ma, J.; Zeng, X.; Xiao, M.; Guo, J.; Cai, M.; Yang, J.; Li, Y.; Meng, X.; et al. A deep learning algorithm using CT images to screen for Corona virus disease (COVID-19). Eur. Radiol. 2021, 31, 6096–6104.

44. Zheng, C.; Deng, X.; Fu, Q.; Zhou, Q.; Feng, J.; Ma, H.; Liu, W.; Wang, X. Deep Learning-based Detection for COVID-19 from Chest CT using Weak Label. MedRxiv 2020.

45. Wang, L.; Wong, A. COVID-Net: A tailored deep convolutional neural network design for detection of COVID-19 cases from chest X-ray images. Sci. Rep. 2020, 10, 19549.

46. Li, X.; Zhu, D. COVID-Xpert: An AI Powered Population Screening of COVID-19 Cases Using Chest Radiography Images. arXiv 2020, arXiv:2004.03042v1.

47. Mahmud, T.; Rahman, A.; Fattah, S.A. CovXNet: A multi-dilation convolutional neural network for automatic COVID-19 and other pneumonia detection from chest X-ray images with transferable multi-receptive feature optimization. Comput. Biol. Med. 2020, 122, 103869.

| Ref No. | Dataset Name | No. of Images Used | Pre-Processing Techniques | Architecture | Performance |
|---|---|---|---|---|---|
| [24] | Chest X-Ray | 550 | “Rescaling” | 7 pre-trained CNNs: VGG19, ResNetV2, DenseNet201, InceptionV3, InceptionResNetV2, Xception, and MobileNetV2 | Accuracy = 90%; Precision = 83%; F1-Score = 91% |
| [25] | Chest X-Ray | 740 | “DA”, “Histogram”, “Feature Extraction” using “AlexNet”, “K-means”, “PCA” | 2 pre-trained CNNs: ImageNet and ResNet | Accuracy = 95.12%; Sensitivity = 97.91%; Specificity = 91.87% |
| [26] | CT-Scans | 1106 | Segmentation | ResNet-101, ResNet-50, DenseNet-169, DenseNet-201 | Accuracy = 94.9% |
| [27] | CT-Scans | 381 | NA | AlexNet, GoogleNet, DenseNet, Inception, ResNet, VGG, XceptionNet, and InceptionResNet | Accuracy = 95.33%; Sensitivity = 95.33%; F1-Score = 95.34% |
| [28] | Chest X-Ray | 983 | Data Augmentation | Convolutional Neural Network | Accuracy = 93.3%; Sensitivity = 91% |
| [29] | Computed tomography images (CT) | 618 | Data Augmentation | ResNet-18 | Accuracy = 86.7% |
| [30] | Chest X-Ray | 940 | Data Augmentation | Inception Architecture | Accuracy = 96%; F1-Score = 96%; AUC = 95% |
| [31] | CXR | 5856 | NA | AlexNet | Accuracy = 93%; Sensitivity = 89.18%; Specificity = 98.92% |
| [32] | Computed tomography images (CT) | 1000 | NA | Convolutional Neural Network | Accuracy = 90% |
| Proposed C19D-Net (discussed in detail in Section 4) | Chest X-Ray | 1900 | “Resizing” | InceptionV4 + Support Vector Machine | Accuracy = 96.24% |

| Class | Image Count |
|---|---|
| COVID-19 | 400 |
| Bacterial Pneumonia | 450 |
| Viral Pneumonia | 450 |
| Normal | 600 |

| Class | Image Count |
|---|---|
| COVID-19 | 400 |
| Pneumonia | 900 |
| Normal | 600 |

| Class | Image Count |
|---|---|
| Normal | 600 |
| COVID-19 | 400 |
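
The image counts in the three class tables above are consistent with deriving the 3-class and 2-class sets from the 4-class set: merging bacterial and viral pneumonia gives 450 + 450 = 900 pneumonia images, and keeping only the Normal and COVID-19 images gives the 2-class set. The text does not state this derivation explicitly, so the mappings below (and the helper name `regroup`) are an assumption inferred from the counts, shown as a small sketch.

```python
from collections import Counter

# Per-class counts from the 4-class dataset table.
FOUR_CLASS_COUNTS = {
    "COVID-19": 400,
    "Bacterial Pneumonia": 450,
    "Viral Pneumonia": 450,
    "Normal": 600,
}

# Assumed label mappings; None means the class is left out of that task.
TO_THREE_CLASS = {
    "COVID-19": "COVID-19",
    "Bacterial Pneumonia": "Pneumonia",
    "Viral Pneumonia": "Pneumonia",
    "Normal": "Normal",
}
TO_TWO_CLASS = {
    "COVID-19": "COVID-19",
    "Bacterial Pneumonia": None,
    "Viral Pneumonia": None,
    "Normal": "Normal",
}


def regroup(counts, mapping):
    """Sum per-class image counts under a coarser label mapping."""
    grouped = Counter()
    for label, count in counts.items():
        target = mapping[label]
        if target is not None:
            grouped[target] += count
    return dict(grouped)


three_class = regroup(FOUR_CLASS_COUNTS, TO_THREE_CLASS)
two_class = regroup(FOUR_CLASS_COUNTS, TO_TWO_CLASS)
print(three_class)
print(two_class)
```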

| Method | Classes | Precision | Recall | Specificity | F1-Score |
|---|---|---|---|---|---|
| C19D-Net | COVID-19 | 95.81 | 95.14 | 94.18 | 94.89 |
| C19D-Net | Bacterial Pneumonia | 95.89 | 94.28 | 94.88 | 94.72 |
| C19D-Net | Viral Pneumonia | 94.78 | 94.14 | 96.25 | 93.14 |
| C19D-Net | Normal | 95.71 | 95.84 | 95.88 | 95.12 |
| Inception V4 | COVID-19 | 94.85 | 94.36 | 95.02 | 95.18 |
| Inception V4 | Bacterial Pneumonia | 95.10 | 94.80 | 94.12 | 94.20 |
| Inception V4 | Viral Pneumonia | 95.82 | 95.25 | 94.28 | 95.88 |
| Inception V4 | Normal | 94.87 | 94.66 | 95.28 | 96.20 |

| Method | Classes | Precision | Recall | Specificity | F1-Score |
|---|---|---|---|---|---|
| C19D-Net | COVID-19 | 94.57 | 94.14 | 93.89 | 92.78 |
| C19D-Net | Pneumonia | 94.57 | 95.70 | 94.48 | 92.42 |
| C19D-Net | Normal | 95.25 | 95.44 | 92.47 | 91.25 |
| Inception V4 | COVID-19 | 95.25 | 90.14 | 93.47 | 94.00 |
| Inception V4 | Pneumonia | 94.48 | 91.25 | 93.42 | 95.00 |
| Inception V4 | Normal | 93.14 | 92.02 | 94.36 | 92.13 |

| Method | Classes | Precision | Recall | Specificity | F1-Score |
|---|---|---|---|---|---|
| C19D-Net | Non-COVID | 96.51 | 97.1 | 97.45 | 98 |
| C19D-Net | COVID-19 | 97.1 | 96.88 | 97.2 | 97.45 |
| Inception V4 | Non-COVID | 96.1 | 97.14 | 96.80 | 97.8 |
| Inception V4 | COVID-19 | 96.91 | 95.00 | 96.51 | 97.1 |

| Classification Task | Precision | Recall | Specificity | F1-Score | Overall Accuracy |
|---|---|---|---|---|---|
| 4-Classes | 95.1 | 94.25 | 95.2 | 94.0 | 96.24 |
| 3-Classes | 91.7 | 92.14 | 92.89 | 91.58 | 95.50 |
| 2-Classes | 97.58 | 97.88 | 98.2 | 98 | 98.1 |
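
The precision, recall, specificity, F1-score and overall accuracy columns in the tables above are usually computed from a confusion matrix. The sketch below assumes the standard one-vs-rest definitions, which this text does not spell out; the function names and the toy confusion matrix are made up for illustration and are not the paper's results.

```python
def per_class_metrics(confusion, class_index):
    """One-vs-rest precision, recall, specificity and F1 for one class.

    confusion[i][j] counts images of true class i predicted as class j.
    """
    n = len(confusion)
    tp = confusion[class_index][class_index]
    fn = sum(confusion[class_index]) - tp
    fp = sum(confusion[r][class_index] for r in range(n)) - tp
    tn = sum(map(sum, confusion)) - tp - fn - fp
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if precision + recall
        else 0.0
    )
    return {
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f1": f1,
    }


def overall_accuracy(confusion):
    """Fraction of all images that lie on the diagonal of the matrix."""
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    return correct / sum(map(sum, confusion))


# Made-up 3-class confusion matrix, for illustration only.
toy = [
    [8, 1, 1],
    [2, 7, 1],
    [0, 2, 8],
]
metrics = per_class_metrics(toy, 0)
accuracy = overall_accuracy(toy)
print(metrics, accuracy)
```

Because F1 is the harmonic mean of precision and recall, it always lies between those two values for a given class.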

| Study (Ref) | Model | No. of Images (per class) | 2-Class Accuracy | 3-Class Accuracy | 4-Class Accuracy |
|---|---|---|---|---|---|
| [32] | CNN + MODE | 100, 100 | 93.5 | -- | -- |
| [41] | LeNet and AlexNet | 25, 25 | 95.38 | -- | -- |
| [42] | nCOVNet | 215, 280 | 88.1 | -- | -- |
| [43] | Inception Transfer Learning | 195, 258 | 82.9 | -- | -- |
| [44] | DeCoVNet | 313, 229 | 90.8 | -- | -- |
| [16] | CNN | 224, 700, 504 | -- | 93.48 | -- |
| [45] | COVID-Net | 53, 5526, 8066 | -- | 92.42 | -- |
| [29] | ResNet + CNN | 219, 224, 175 | -- | 86.72 | -- |
| [46] | DenseNet121 | 179, 179, 179 | -- | 88.91 | -- |
| [6] | GoogleNet, AlexNet, DenseNet201 | 127, 127, 127 | 91.44 | 91.73 | -- |
| [15] | CoroDet | 500, 400, 400, 800 | 99.11 | 94.21 | 91.27 |
| [47] | CovXNet | 305, 305, 305, 305 | 97.40 | 89.60 | 90.21 |
| Proposed C19D-Net | InceptionV4 + SVM Classifier | 400, 450, 600, 450 | 98.1 | 95.50 | 96.24 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kaur, P.; Harnal, S.; Tiwari, R.; Alharithi, F.S.; Almulihi, A.H.; Noya, I.D.; Goyal, N. A Hybrid Convolutional Neural Network Model for Diagnosis of COVID-19 Using Chest X-ray Images. Int. J. Environ. Res. Public Health 2021, 18, 12191. https://doi.org/10.3390/ijerph182212191
