Morphing Task: The Emotion Recognition Process in Children with Attention Deficit Hyperactivity Disorder and Autism Spectrum Disorder

Abstract

1. Introduction

2. Methods

2.1. Participants

2.2. Morphing Task

2.3. Training Session

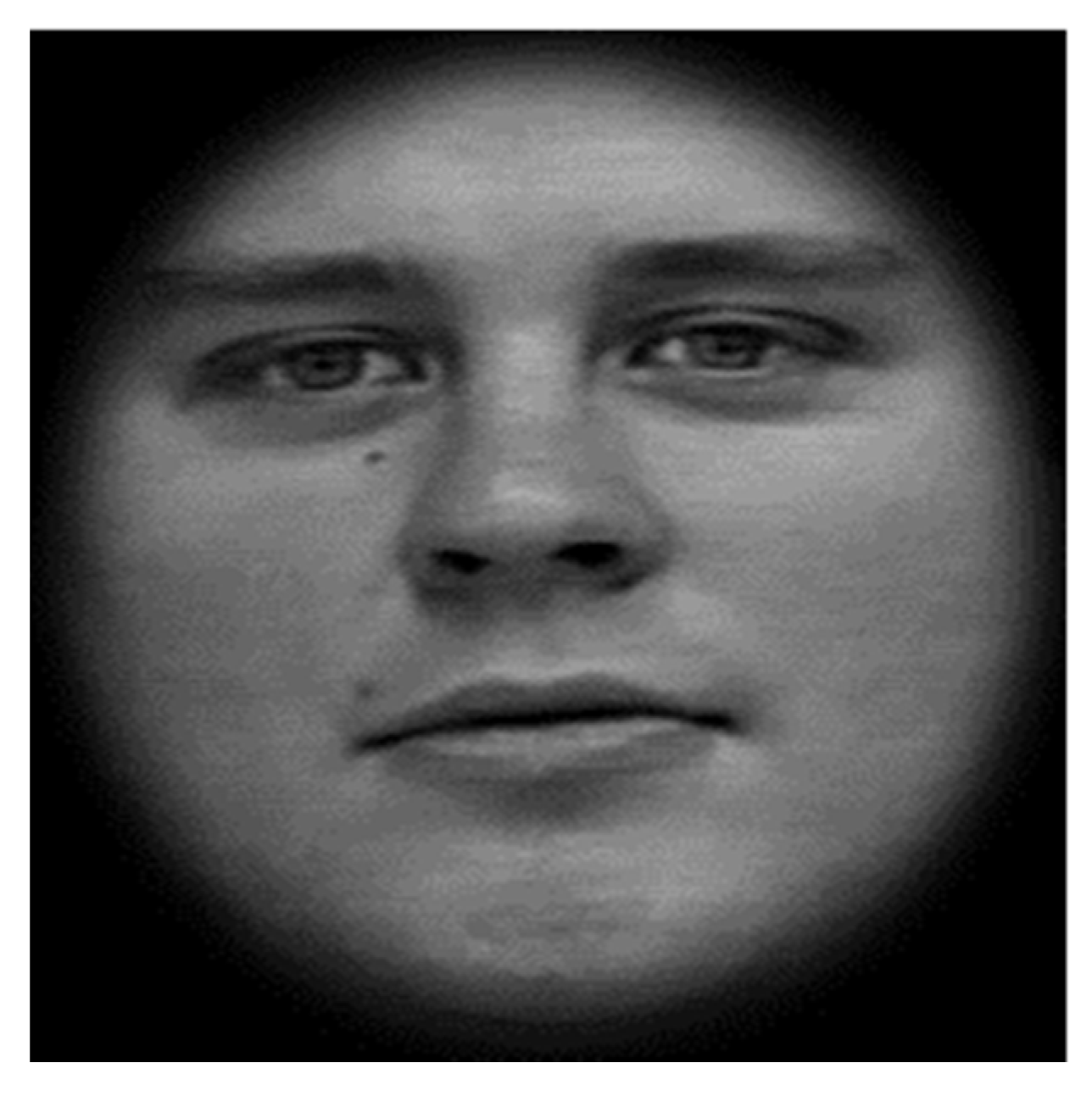

2.4. First Condition

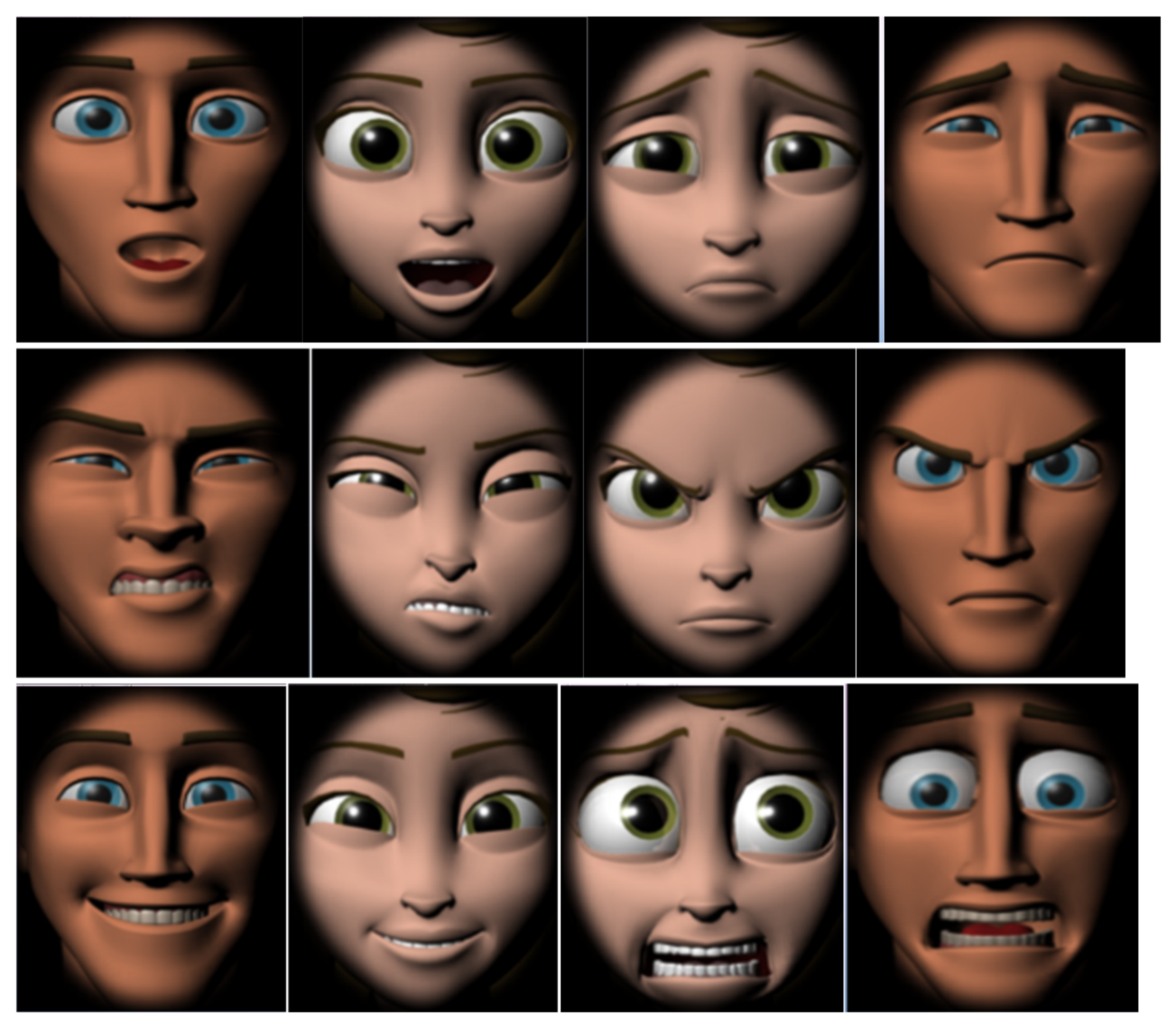

2.5. Second Condition

2.6. Procedure

- The Chi-square test (χ2) was performed to investigate potential relationships between the number of errors (as categorical variables) and the six emotions in the two conditions (human and cartoon faces);

- Multivariate analysis of variance (MANOVA) was used to investigate group differences with regard to response latency (speed in seconds).
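As a rough illustration of the first analysis listed above, the following minimal sketch computes a chi-square statistic of independence for a contingency table of error counts. The counts, the table layout (conditions as rows, emotions as columns), and the function name `chi_square_independence` are assumptions made for illustration only; they are not the study's data or the authors' analysis code. The sketch also assumes every row and column total is non-zero.

```python
from typing import List, Tuple


def chi_square_independence(table: List[List[int]]) -> Tuple[float, int]:
    """Return (chi-square statistic, degrees of freedom) for a contingency table.

    Assumes every row total and column total is non-zero.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)

    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of rows and columns.
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (observed - expected) ** 2 / expected

    dof = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, dof


# Hypothetical error counts (NOT study data): rows are the two conditions,
# columns are the six emotions.
emotions = ["happiness", "sadness", "anger", "fear", "disgust", "surprise"]
hypothetical_errors = [
    [4, 9, 7, 12, 8, 5],  # human faces
    [2, 6, 5, 9, 6, 3],   # cartoon faces
]

stat, dof = chi_square_independence(hypothetical_errors)
print(f"chi2 = {stat:.3f}, dof = {dof}")
```

The statistic would then be compared against the chi-square distribution with `dof` degrees of freedom to obtain a p-value; a statistics package would normally handle that step.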

3. Results

3.1. Misidentified Emotions

3.2. Response Latency

4. Discussion

- Longer latency in emotion recognition in both clinical groups;

- Higher emotion recognition error rate than in the control group;

- Tendency to confuse some emotions (e.g., fear/sadness, anger/disgust).

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | ASD | ADHD | TD |
|---|---|---|---|
| Mean age (range) | 9.33 (7–12) | 10.12 (7–12) | 10.12 (7–12) |
| Female (number (%)) | 8 (38) | 5 (25) | 3 (15) |

| Feeling of Human Face | ADHD Mean ± SD | ASD Mean ± SD | TD Mean ± SD | ASD vs. TD | ADHD vs. TD | χ2 |
|---|---|---|---|---|---|---|
| Happiness_Female Face | 4.57 ± 1.764 | 5.24 ± 1.44 | 3.24 ± 1.31 | p < 0.01 | p < 0.05 | 0.243 |
| Disgust_Male Face | 5.09 ± 1.05 | 5.23 ± 1.72 | 4.14 ± 1.55 | p < 0.05 | p < 0.05 | 0.104 |
| Surprise_Male Face | 4.73 ± 1.43 | 5.56 ± 0.86 | 4.75 ± 1.19 | p < 0.05 | Not significant | 0.103 |
| Anger_Female Face | 4.71 ± 1.43 | 5.83 ± 1.13 | 4.54 ± 1.30 | p < 0.01 | p < 0.05 | 0.173 |
| Fear_Female Face | 5.66 ± 1.56 | 6.62 ± 0.75 | 5.31 ± 1.43 | p < 0.01 | Not significant | 0.161 |
| Happiness_Male Face | 4.01 ± 1.06 | 5.09 ± 1.24 | 4.22 ± 0.82 | p < 0.01 | p < 0.05 | 0.170 |
| Anger_Male Face | 4.86 ± 1.66 | 5.95 ± 1.07 | 5.14 ± 1.11 | p < 0.05 | p < 0.05 | 0.115 |
| Surprise_Female Face | 3.66 ± 1.01 | 4.54 ± 1.41 | 3.61 ± 0.83 | p < 0.05 | Not significant | 0.136 |
| Disgust_Female Face | 4.39 ± 1.15 | 5.29 ± 1.44 | 4.06 ± 1.06 | p < 0.01 | p < 0.05 | 0.159 |

| Feeling of Cartoon Face | ADHD Mean ± SD | ASD Mean ± SD | TD Mean ± SD | ASD vs. TD | ADHD vs. TD | χ2 |
|---|---|---|---|---|---|---|
| Fear_Male Face | 3.56 ± 1.49 | 4.711 ± 1.823 | 3.13 ± 1.43 | p < 0.01 | p < 0.05 | 0.157 |
| Sadness_Female Face | 3.42 ± 1.09 | 4.14 ± 1.3 | 2.97 ± 0.65 | p < 0.01 | p < 0.05 | 0.184 |
| Anger_Female Face | 3.04 ± 0.93 | 4.46 ± 2.17 | 3.09 ± 1.07 | p < 0.01 | Not significant | 0.167 |
| Happiness_Male Face | 2.73 ± 0.8 | 3.71 ± 2 | 2.35 ± 0.49 | p < 0.01 | p < 0.05 | 0.176 |
| Disgust_Female Face | 3.57 ± 1.54 | 4.7 ± 2.12 | 3.07 ± 1.24 | p < 0.01 | p < 0.05 | 0.149 |
| Surprise_Female Face | 3.46 ± 1.05 | 4.07 ± 1.91 | 2.97 ± 0.65 | p < 0.01 | p < 0.05 | 0.111 |
| Sadness_Male Face | 3.54 ± 1.4 | 4.1 ± 1.91 | 2.8 ± 0.542 | p < 0.01 | p < 0.05 | 0.132 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Greco, C.; Romani, M.; Berardi, A.; De Vita, G.; Galeoto, G.; Giovannone, F.; Vigliante, M.; Sogos, C. Morphing Task: The Emotion Recognition Process in Children with Attention Deficit Hyperactivity Disorder and Autism Spectrum Disorder. Int. J. Environ. Res. Public Health 2021, 18, 13273. https://doi.org/10.3390/ijerph182413273
