Understanding Medical Students’ Perceptions of and Behavioral Intentions toward Learning Artificial Intelligence: A Survey Study

Abstract

1. Introduction

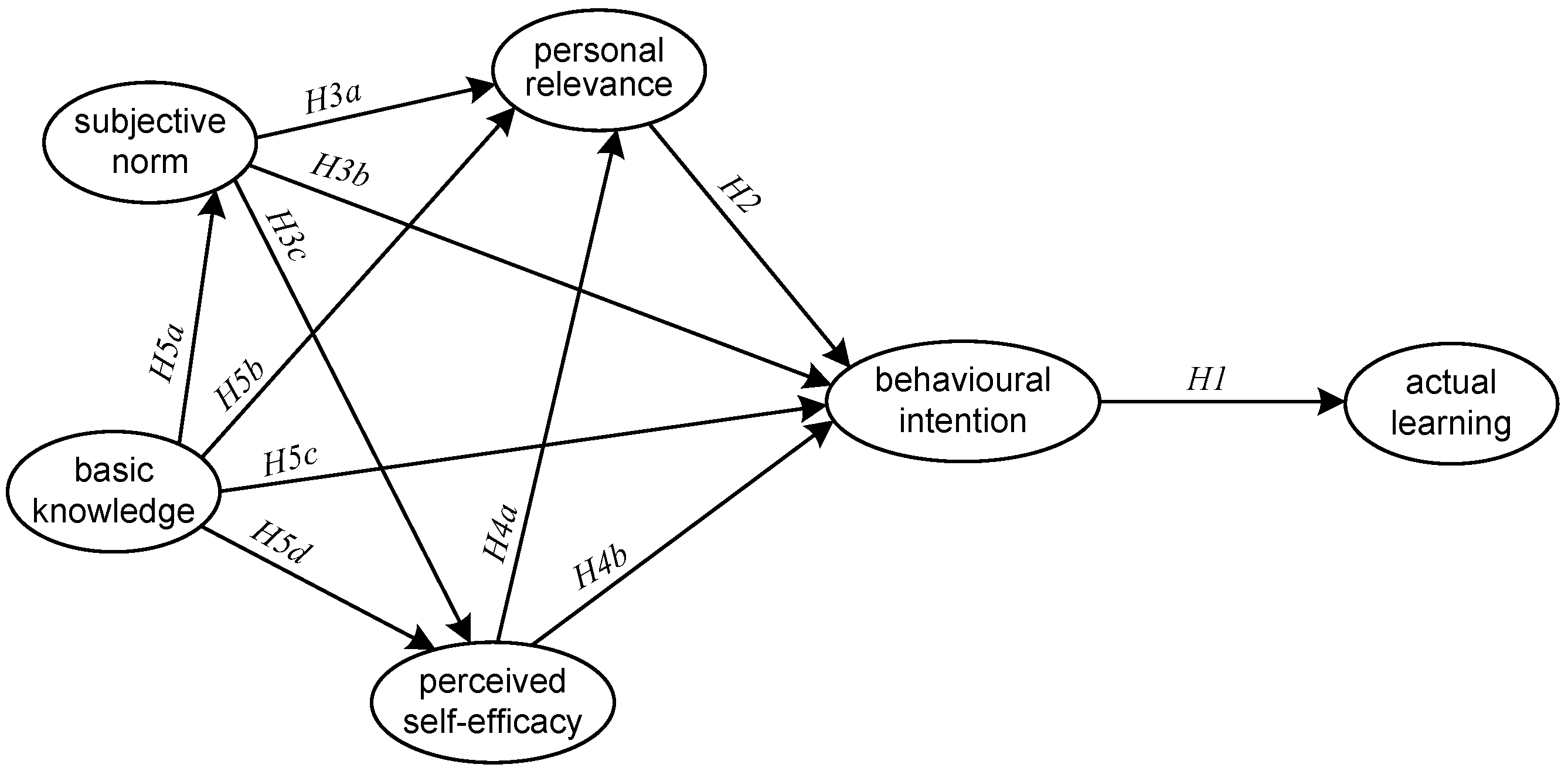

2. Theory and Hypotheses

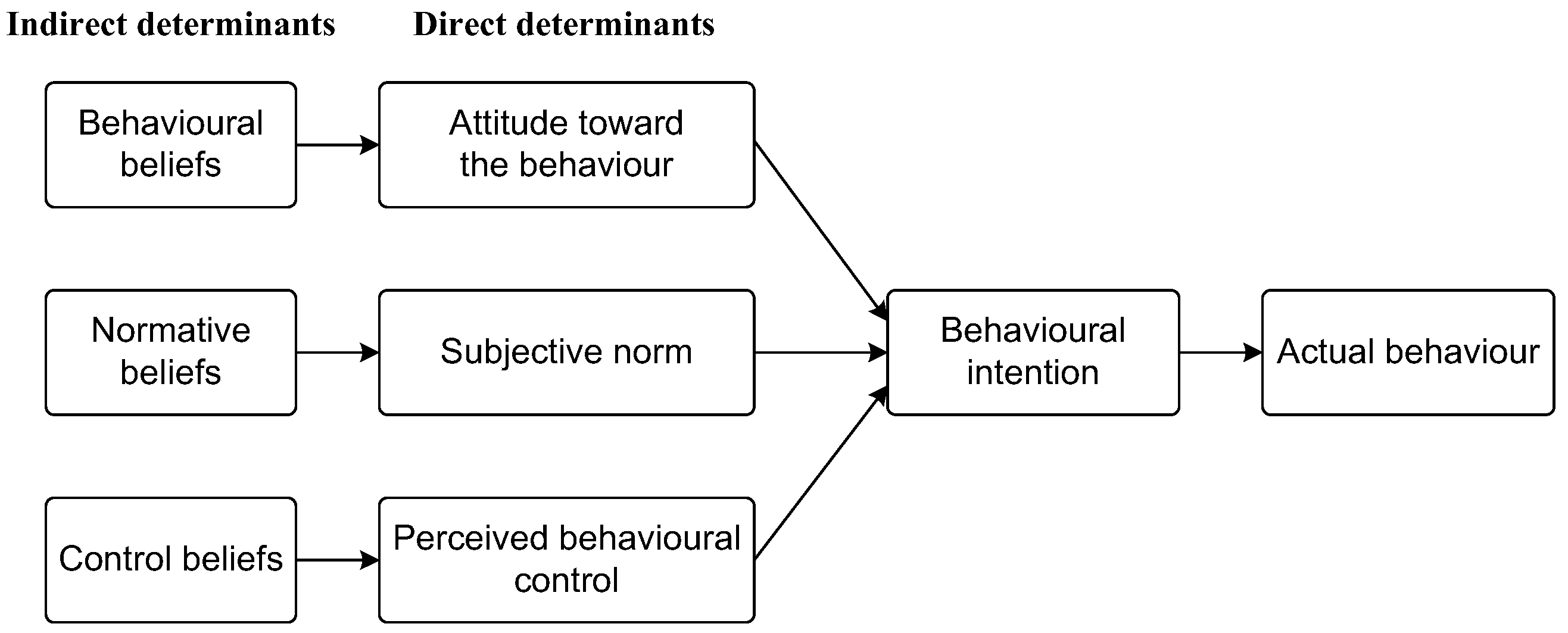

2.1. Theory of Planned Behavior

2.2. Attitude toward Learning Medical AI

2.3. Subjective Norm of Learning Medical AI

2.4. Perceived Behavioral Control over Learning Medical AI

2.5. Medical AI Literacy

3. Method

3.1. Participants

3.2. Measures and Instruments

3.3. Data Collection and Analysis

4. Results

4.1. Construct Validation

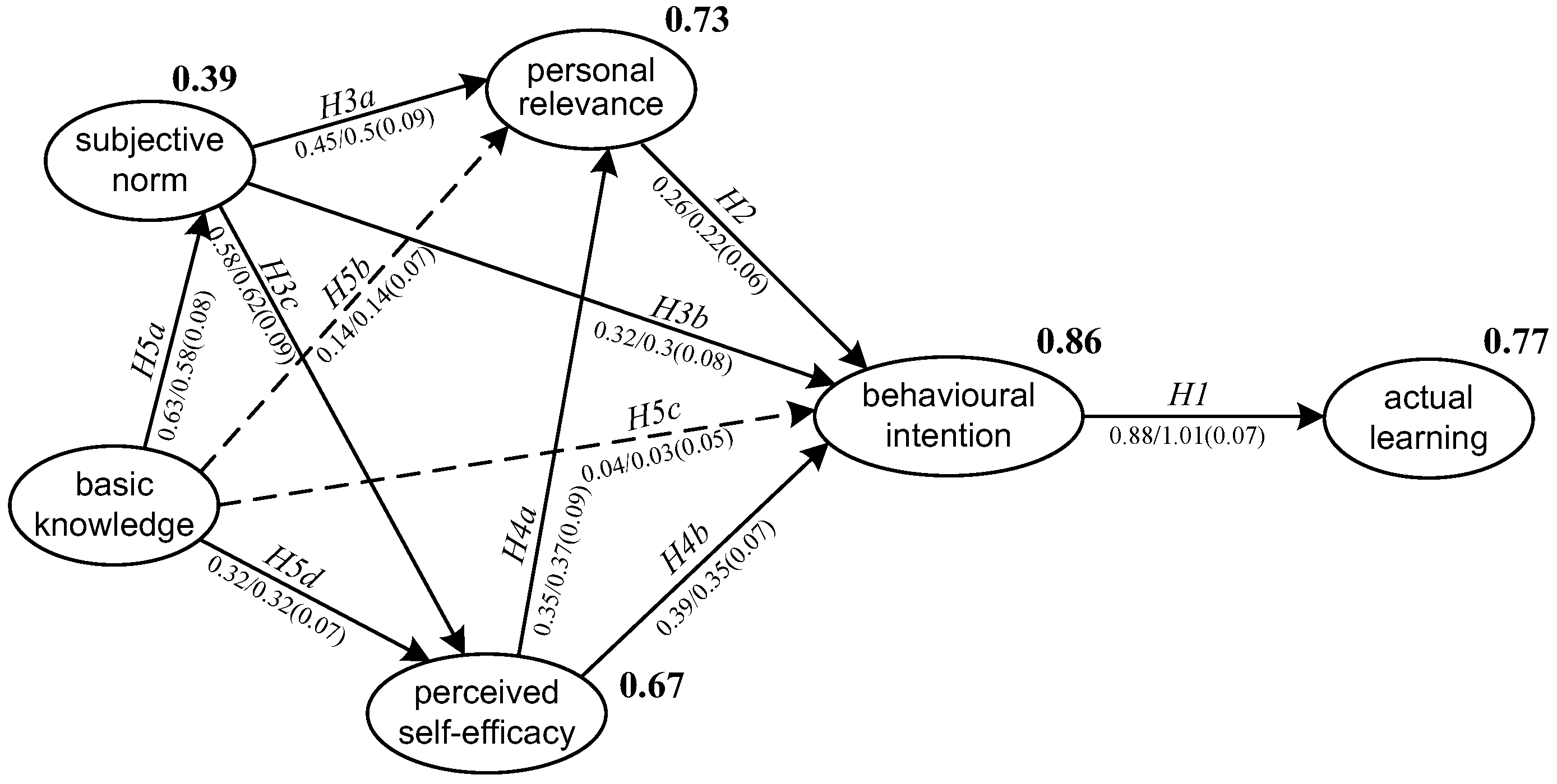

4.2. SEM Results

5. Discussion

6. Conclusions and Implications

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

- PR1. Using medical AI technology enables me to accomplish clinical tasks more quickly.

- PR2. Using medical AI technology improves my clinical performance.

- PR3. Using medical AI technology increases my clinical productivity.

- PR4. Using medical AI technology enhances my effectiveness.

- SN1. My school organizes enrichment lessons for us to learn more about medical AI technologies.

- SN2. My peers and/or parents encourage me to participate in innovative medical AI learning activities.

- SN3. My mentors/boss have emphasized the necessity to work creatively using medical AI technology.

- SN4. My classmates feel that it is necessary to learn how to work with medical AI technology.

- PSE1. I am certain I can understand the most difficult materials presented in the courses about medical AI.

- PSE2. I feel confident that I will do well in clinical practice involving medical AI.

- PSE3. I am confident I can learn the basic concepts taught in the courses about medical AI.

- BKn1. I understand how computers process medical imaging to produce visual recognition and analysis.

- BKn2. I understand how AI technology optimizes the health care solutions.

- BKn3. I understand why AI-assisted genomic diagnostics needs big data for machine learning.

- BKn4. I understand how the AI assistant in an online patient guidance system handles human-computer interaction.

- BI1. I will continue to learn about medical AI technology in the future.

- BI2. I will pay attention to emerging AI applications used in medical practice.

- BI3. I expect that I would be concerned about medical AI development in the future.

- BI4. I plan to spend time in learning medical AI technology in the future.

- AL1. I have intentionally searched and viewed educational videos about medical AI.

- AL2. I have interacted with medical AI applications to understand how they work.

- AL3. I have studied about medical AI through books and journals.

- AL4. I have attended lessons about medical AI in schools or outside schools.
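The construct means reported with the correlation matrix are consistent with scoring each construct as the mean of its items (for example, the PR item means 4.18, 4.20, 4.27 and 4.29 average to 4.235, matching the reported PR mean of 4.24). The sketch below assumes that scoring rule and computes Cronbach's α with the standard formula α = k/(k − 1) · (1 − Σσ²item / σ²total). The response matrix `PR_RESPONSES` and the helper names are hypothetical; the study's raw data, response scale and analysis software are not given here.

```python
from statistics import mean, pvariance

# HYPOTHETICAL responses (rows = respondents, columns = PR1..PR4).
# Invented for illustration only; these are NOT the study's data.
PR_RESPONSES = [
    [5, 5, 5, 4],
    [4, 4, 5, 5],
    [3, 3, 3, 3],
    [5, 5, 4, 5],
    [2, 3, 2, 3],
]


def construct_scores(rows):
    """Score each respondent as the mean of their item responses (assumed scoring rule)."""
    return [mean(row) for row in rows]


def cronbach_alpha(rows):
    """Cronbach's alpha: k/(k-1) * (1 - sum of item variances / variance of total scores)."""
    k = len(rows[0])
    items = list(zip(*rows))  # transpose: one tuple of responses per item
    item_var_sum = sum(pvariance(col) for col in items)
    total_var = pvariance([sum(row) for row in rows])
    # Population variances are used throughout; the n vs. n-1 divisor cancels in the ratio.
    return k / (k - 1) * (1 - item_var_sum / total_var)


if __name__ == "__main__":
    print(construct_scores(PR_RESPONSES))
    print(f"alpha = {cronbach_alpha(PR_RESPONSES):.3f}")
```

Using the same variance divisor for items and totals keeps the ratio identical whether population or sample variances are used.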


| Measure | Item | Mean | SD | Standardized Estimate | t-Value |
|---|---|---|---|---|---|
| PR | PR1 | 4.18 | 1.13 | 0.95 | -- |
| | PR2 | 4.20 | 1.13 | 0.98 | 38.31 ** |
| | PR3 | 4.27 | 1.12 | 0.98 | 36.21 ** |
| | PR4 | 4.29 | 1.11 | 0.90 | 24.89 ** |
| SN | SN1 | 3.61 | 1.39 | 0.66 | -- |
| | SN2 | 4.11 | 1.21 | 0.80 | 9.91 ** |
| | SN3 | 4.14 | 1.25 | 0.80 | 9.91 ** |
| | SN4 | 4.49 | 1.10 | 0.83 | 10.28 ** |
| PSE | PSE1 | 4.01 | 1.22 | 0.85 | -- |
| | PSE2 | 4.22 | 1.14 | 0.94 | 19.08 ** |
| | PSE3 | 4.37 | 1.09 | 0.92 | 18.47 ** |
| BKn | BKn1 | 3.02 | 1.39 | 0.74 | -- |
| | BKn2 | 3.37 | 1.32 | 0.88 | 12.66 ** |
| | BKn3 | 3.96 | 1.28 | 0.76 | 10.95 ** |
| | BKn4 | 3.72 | 1.32 | 0.80 | 11.55 ** |
| BI | BI1 | 4.48 | 1.05 | 0.88 | -- |
| | BI2 | 4.47 | 1.03 | 0.89 | 18.93 ** |
| | BI3 | 4.55 | 1.03 | 0.91 | 20.02 ** |
| | BI4 | 4.32 | 1.13 | 0.91 | 19.76 ** |
| AL | AL1 | 3.91 | 1.22 | 0.87 | -- |
| | AL2 | 3.79 | 1.26 | 0.80 | 15.00 ** |
| | AL3 | 4.11 | 1.11 | 0.93 | 20.06 ** |
| | AL4 | 4.03 | 1.16 | 0.91 | 19.11 ** |
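The CR and AVE values in the convergent-validity table can be recomputed from these standardized loadings with the usual formulas, CR = (Σλ)² / ((Σλ)² + Σ(1 − λ²)) and AVE = Σλ² / k. The sketch below is a minimal illustration that assumes a congeneric measurement model with uncorrelated errors; it is not the authors' code, and the function names are hypothetical. Because the loadings are rounded to two decimals, results can differ from the reported values in the last digit (BKn gives CR ≈ 0.87 and AVE ≈ 0.63 against the reported 0.88 and 0.64).

```python
# Standardized loadings copied from the item-level table above (rounded to two decimals).
LOADINGS = {
    "PR": [0.95, 0.98, 0.98, 0.90],
    "SN": [0.66, 0.80, 0.80, 0.83],
    "PSE": [0.85, 0.94, 0.92],
    "BKn": [0.74, 0.88, 0.76, 0.80],
    "BI": [0.88, 0.89, 0.91, 0.91],
    "AL": [0.87, 0.80, 0.93, 0.91],
}


def composite_reliability(loadings):
    """CR = (sum lam)^2 / ((sum lam)^2 + sum(1 - lam^2)); error variance of a standardized item is 1 - lam^2."""
    total = sum(loadings)
    error = sum(1 - lam * lam for lam in loadings)
    return total * total / (total * total + error)


def average_variance_extracted(loadings):
    """AVE = mean of the squared standardized loadings."""
    return sum(lam * lam for lam in loadings) / len(loadings)


if __name__ == "__main__":
    for name, lams in LOADINGS.items():
        cr = composite_reliability(lams)
        ave = average_variance_extracted(lams)
        print(f"{name}: CR = {cr:.2f}, AVE = {ave:.2f}")
```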

| | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| 1. PR | (0.95) | | | | | |
| 2. SN | 0.81 ** | (0.77) | | | | |
| 3. PSE | 0.79 ** | 0.78 ** | (0.90) | | | |
| 4. BKn | 0.66 ** | 0.63 ** | 0.68 ** | (0.80) | | |
| 5. BI | 0.84 ** | 0.85 ** | 0.85 ** | 0.64 ** | (0.90) | |
| 6. AL | 0.76 ** | 0.78 ** | 0.85 ** | 0.75 ** | 0.85 ** | (0.88) |
| Mean | 4.24 | 4.09 | 4.20 | 3.52 | 4.46 | 3.96 |
| SD | 1.08 | 1.03 | 1.07 | 1.13 | 0.98 | 1.08 |
| Skewness | −0.91 | −0.44 | −0.88 | −0.06 | −1.07 | −0.48 |
| Kurtosis | 1.14 | 0.33 | 1.18 | −0.28 | 2.39 | 0.02 |
| Cronbach α | 0.98 | 0.85 | 0.93 | 0.87 | 0.94 | 0.93 |
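The parenthesized diagonal entries equal the square roots of the AVE values in the CR/AVE/MSV/MaxR(H) table (for example, √0.91 ≈ 0.95 for PR and √0.60 ≈ 0.77 for SN), and the MSV column equals each construct's largest squared correlation with another construct (0.84² ≈ 0.71 for PR). These are the quantities usually compared under the Fornell–Larcker criterion. The sketch below only recomputes them from the rounded published values; the lookup helpers are hypothetical. For BI and AL it gives MSV ≈ 0.72 against the reported 0.73, presumably because the reported values were computed from unrounded correlations.

```python
from math import sqrt

CONSTRUCTS = ["PR", "SN", "PSE", "BKn", "BI", "AL"]

# AVE values as reported in the CR/AVE/MSV/MaxR(H) table.
AVE = {"PR": 0.91, "SN": 0.60, "PSE": 0.82, "BKn": 0.64, "BI": 0.81, "AL": 0.77}

# Lower triangle of the inter-construct correlation matrix (row i holds r(i, j) for j < i).
LOWER = [
    [],
    [0.81],
    [0.79, 0.78],
    [0.66, 0.63, 0.68],
    [0.84, 0.85, 0.85, 0.64],
    [0.76, 0.78, 0.85, 0.75, 0.85],
]


def correlation(a, b):
    """Look up r(a, b) in the lower triangle, regardless of argument order."""
    i, j = CONSTRUCTS.index(a), CONSTRUCTS.index(b)
    if i < j:
        i, j = j, i
    return LOWER[i][j]


def max_shared_variance(name):
    """MSV: the largest squared correlation between `name` and any other construct."""
    return max(correlation(name, other) ** 2 for other in CONSTRUCTS if other != name)


if __name__ == "__main__":
    for name in CONSTRUCTS:
        print(f"{name}: sqrt(AVE) = {sqrt(AVE[name]):.2f}, MSV = {max_shared_variance(name):.2f}")
```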

| Measure | CR | AVE | MSV | MaxR(H) |
|---|---|---|---|---|
| PR | 0.98 | 0.91 | 0.71 | 0.99 |
| SN | 0.86 | 0.60 | 0.72 | 0.87 |
| PSE | 0.93 | 0.82 | 0.72 | 0.94 |
| BKn | 0.88 | 0.64 | 0.56 | 0.89 |
| BI | 0.94 | 0.81 | 0.73 | 0.94 |
| AL | 0.93 | 0.77 | 0.73 | 0.94 |
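MaxR(H) is most likely Hancock and Mueller's maximal reliability coefficient H, which weights each indicator by its own reliability: H = 1 / (1 + 1 / Σ[λ² / (1 − λ²)]). That reading of the column is an assumption. The sketch below recomputes H from the rounded standardized loadings in the item-level table; it reproduces the reported values to within rounding for most constructs, while PR comes out at about 0.98 against the reported 0.99 because H is very sensitive to loadings close to 1.

```python
# Standardized loadings from the item-level table (rounded to two decimals).
LOADINGS = {
    "PR": [0.95, 0.98, 0.98, 0.90],
    "SN": [0.66, 0.80, 0.80, 0.83],
    "PSE": [0.85, 0.94, 0.92],
    "BKn": [0.74, 0.88, 0.76, 0.80],
    "BI": [0.88, 0.89, 0.91, 0.91],
    "AL": [0.87, 0.80, 0.93, 0.91],
}


def maximal_reliability(loadings):
    """Hancock-Mueller coefficient H = 1 / (1 + 1 / sum(lam^2 / (1 - lam^2)))."""
    signal_to_noise = sum(lam**2 / (1 - lam**2) for lam in loadings)
    return 1 / (1 + 1 / signal_to_noise)


if __name__ == "__main__":
    for name, lams in LOADINGS.items():
        print(f"{name}: H = {maximal_reliability(lams):.2f}")
```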

| Hypothesis | Path | Standardized β | Unstandardized B | SE | t-Value | Result |
|---|---|---|---|---|---|---|
| H1 | BI → AL | 0.88 | 1.01 | 0.07 | 14.33 ** | Supported |
| H2 | PR → BI | 0.26 | 0.22 | 0.06 | 3.60 ** | Supported |
| H3a | SN → PR | 0.45 | 0.50 | 0.09 | 5.40 ** | Supported |
| H3b | SN → BI | 0.32 | 0.30 | 0.08 | 4.06 ** | Supported |
| H3c | SN → PSE | 0.58 | 0.62 | 0.09 | 7.22 ** | Supported |
| H4a | PSE → PR | 0.35 | 0.37 | 0.09 | 4.29 ** | Supported |
| H4b | PSE → BI | 0.39 | 0.35 | 0.07 | 5.38 ** | Supported |
| H5a | BKn → SN | 0.63 | 0.58 | 0.08 | 7.61 ** | Supported |
| H5b | BKn → PR | 0.14 | 0.14 | 0.07 | 2.18 | Not supported |
| H5c | BKn → BI | 0.04 | 0.03 | 0.05 | 0.69 | Not supported |
| H5d | BKn → PSE | 0.32 | 0.32 | 0.07 | 4.35 ** | Supported |
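The reported t-values agree, up to rounding of the coefficients, with the critical ratio of the second coefficient column (which appears to hold the unstandardized estimate B) to its standard error; for H2, 0.22 / 0.06 ≈ 3.67 against the reported 3.60. The sketch below recomputes that ratio and converts each reported t-value to a two-tailed p-value. The sample size, the estimation software and the significance level marked by ** are not stated in this text, so the large-sample standard normal reference distribution is an assumption, and `two_tailed_p` is a hypothetical helper.

```python
from math import erfc, sqrt

# (hypothesis, path, B, SE, reported t) copied from the path table above.
PATHS = [
    ("H1", "BI -> AL", 1.01, 0.07, 14.33),
    ("H2", "PR -> BI", 0.22, 0.06, 3.60),
    ("H3a", "SN -> PR", 0.50, 0.09, 5.40),
    ("H3b", "SN -> BI", 0.30, 0.08, 4.06),
    ("H3c", "SN -> PSE", 0.62, 0.09, 7.22),
    ("H4a", "PSE -> PR", 0.37, 0.09, 4.29),
    ("H4b", "PSE -> BI", 0.35, 0.07, 5.38),
    ("H5a", "BKn -> SN", 0.58, 0.08, 7.61),
    ("H5b", "BKn -> PR", 0.14, 0.07, 2.18),
    ("H5c", "BKn -> BI", 0.03, 0.05, 0.69),
    ("H5d", "BKn -> PSE", 0.32, 0.07, 4.35),
]


def two_tailed_p(z):
    """P(|Z| >= |z|) for a standard normal Z, i.e. erfc(|z| / sqrt(2))."""
    return erfc(abs(z) / sqrt(2))


if __name__ == "__main__":
    for hyp, path, b, se, t in PATHS:
        # B / SE uses rounded published values, so it only approximates the reported t.
        print(f"{hyp} {path}: B/SE = {b / se:.2f}, reported t = {t:.2f}, p = {two_tailed_p(t):.4f}")
```

Under this approximation, the two paths marked "Not supported" give p ≈ 0.029 (H5b) and p ≈ 0.49 (H5c).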

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, X.; Jiang, M.Y.-c.; Jong, M.S.-y.; Zhang, X.; Chai, C.-s. Understanding Medical Students’ Perceptions of and Behavioral Intentions toward Learning Artificial Intelligence: A Survey Study. Int. J. Environ. Res. Public Health 2022, 19, 8733. https://doi.org/10.3390/ijerph19148733
