Facial Expressions and Self-Reported Emotions When Viewing Nature Images

Abstract

1. Introduction

1.1. Positive Effects of Viewing Surrogate Nature

1.2. Emotional Facial Expressions

1.3. Measurement of Emotional Facial Expressions

AFFDEX Software for Automatic Computer Facial Expression Analysis

1.4. The Current Study

2. Materials and Methods

2.1. Participants

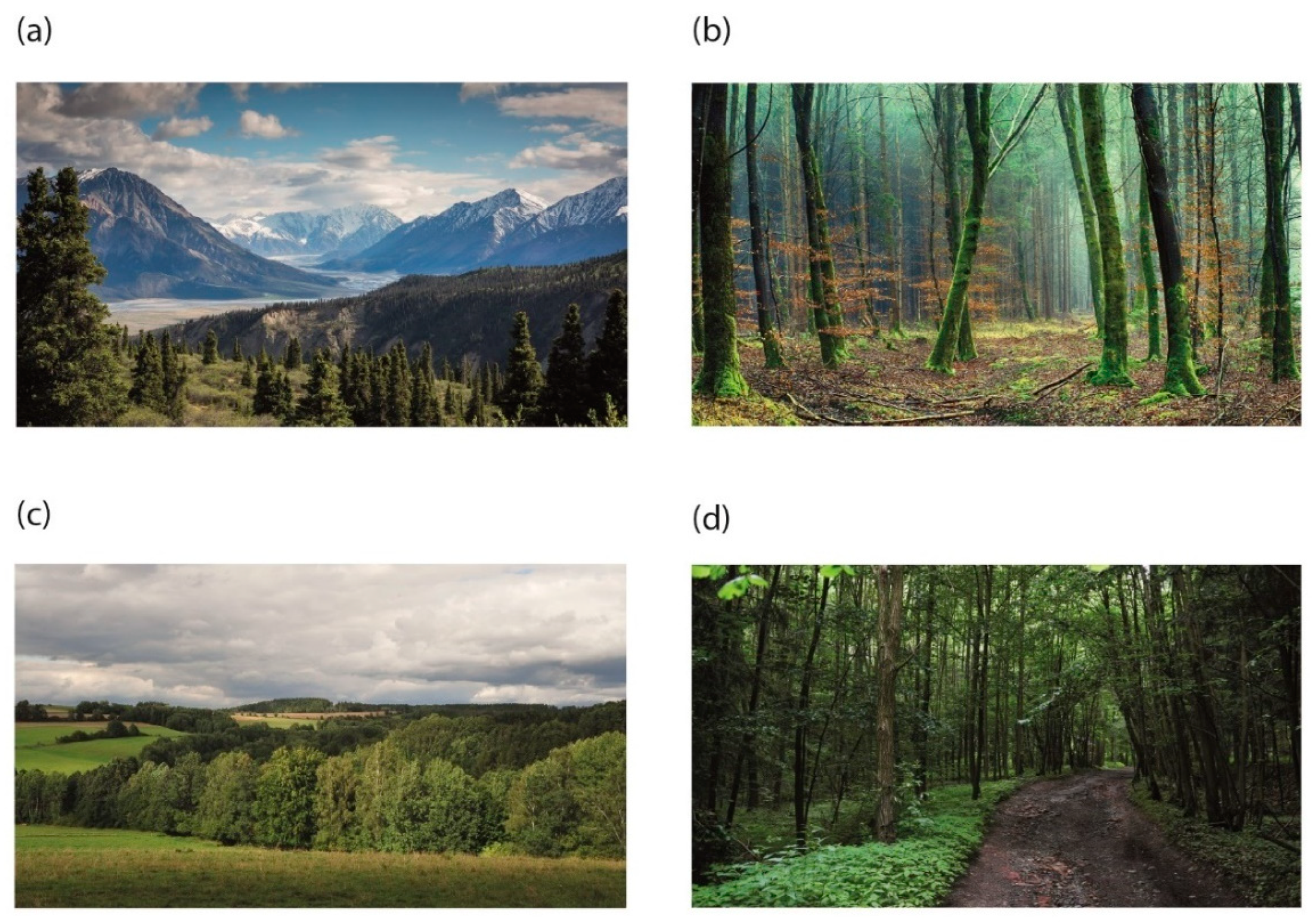

2.2. Stimulus Material

2.3. Apparatus

2.4. Procedure

2.5. Measures

3. Results

3.1. Analysis of Emotional Facial Expressions

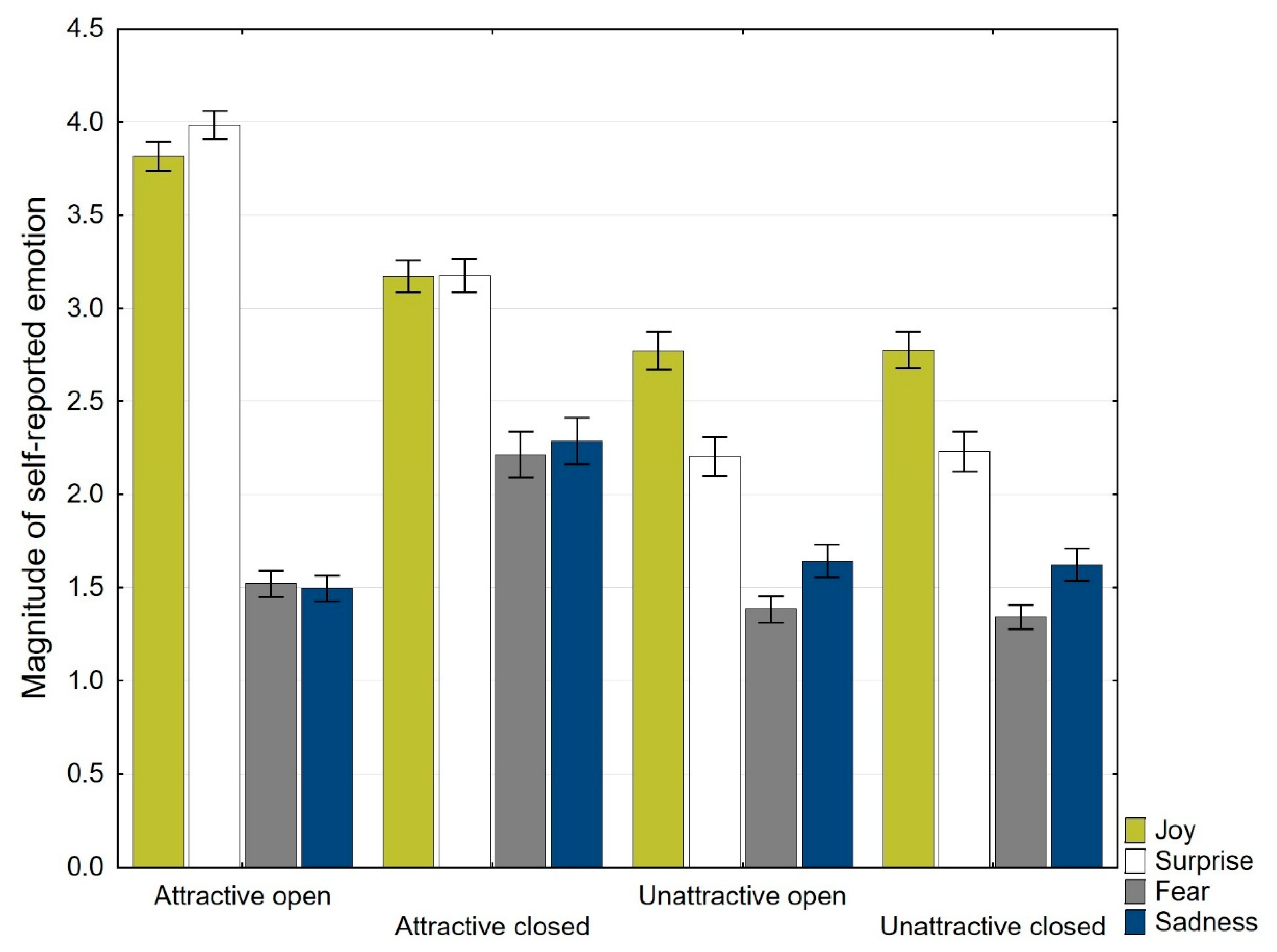

3.2. Analysis of Self-Reported Emotions

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| | Attractive Open (Mean) | Attractive Open (SD) | Attractive Closed (Mean) | Attractive Closed (SD) | Unattractive Open (Mean) | Unattractive Open (SD) | Unattractive Closed (Mean) | Unattractive Closed (SD) |
|---|---|---|---|---|---|---|---|---|
| Emotion | | | | | | | | |
| Anger | 0.066 | 0.230 | 0.096 | 0.288 | 0.117 | 0.668 | 0.129 | 0.455 |
| Contempt | 0.546 | 1.582 | 0.225 | 0.145 | 0.607 | 2.672 | 0.472 | 1.159 |
| Disgust | 0.519 | 0.438 | 0.538 | 0.500 | 0.464 | 0.280 | 0.744 | 1.992 |
| Fear | 0.009 | 0.016 | 0.075 | 0.323 | 0.033 | 0.179 | 0.106 | 0.557 |
| Joy | 1.703 | 4.701 | 1.319 | 5.080 | 1.732 | 6.040 | 1.126 | 3.463 |
| Sadness | 0.098 | 0.347 | 0.239 | 0.836 | 0.173 | 0.564 | 0.258 | 1.281 |
| Surprise | 0.365 | 0.375 | 0.395 | 0.613 | 0.549 | 1.529 | 0.410 | 0.573 |
| Involvement indicators | | | | | | | | |
| Engagement | 4.972 | 7.449 | 4.275 | 8.492 | 4.515 | 8.885 | 4.538 | 7.111 |
| Valence | 1.317 | 6.369 | 0.669 | 6.129 | 1.259 | 7.975 | 0.457 | 6.147 |

| | Attractive Open (Mean) | Attractive Open (SD) | Attractive Closed (Mean) | Attractive Closed (SD) | Unattractive Open (Mean) | Unattractive Open (SD) | Unattractive Closed (Mean) | Unattractive Closed (SD) |
|---|---|---|---|---|---|---|---|---|
| Emotion | | | | | | | | |
| Joy | 3.8 | 0.56 | 3.2 | 0.62 | 2.8 | 0.72 | 2.8 | 0.69 |
| Surprise | 4.0 | 0.55 | 3.2 | 0.63 | 2.2 | 0.75 | 2.2 | 0.75 |
| Fear | 1.5 | 0.49 | 2.2 | 0.87 | 1.4 | 0.51 | 1.3 | 0.46 |
| Sadness | 1.5 | 0.48 | 2.3 | 0.87 | 1.6 | 0.62 | 1.6 | 0.74 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Franěk, M.; Petružálek, J.; Šefara, D. Facial Expressions and Self-Reported Emotions When Viewing Nature Images. Int. J. Environ. Res. Public Health 2022, 19, 10588. https://doi.org/10.3390/ijerph191710588
