A Study on the Effectiveness of IT Application Education for Older Adults by Interaction Method of Humanoid Robots

Abstract

1. Introduction

2. Literature Review

2.1. Functional Aspect in Education

2.2. Emotional Aspects in Education

2.3. Research Questions

3. Materials and Methods

3.1. Apparatus

3.2. Interaction Types of the Robot

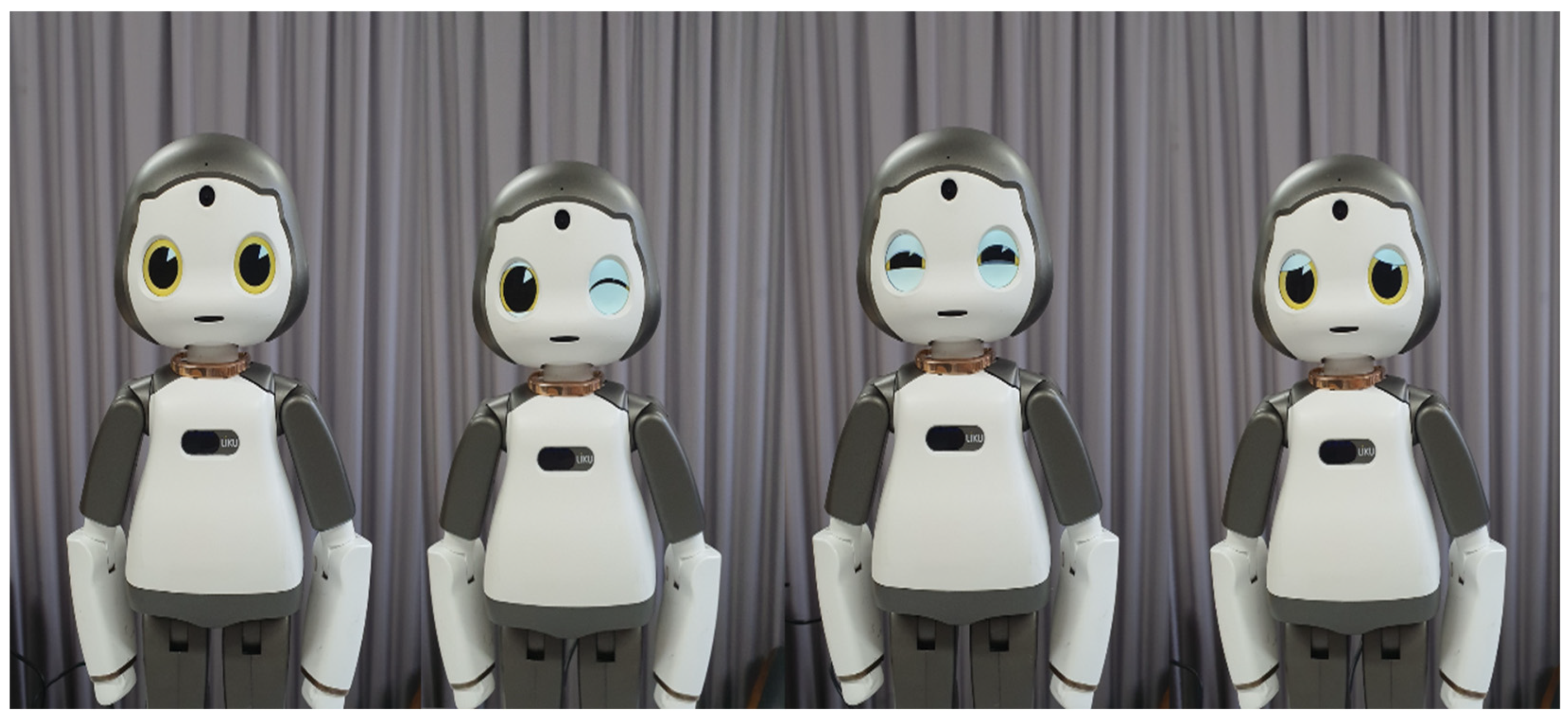

3.3. Gestures and Facial Expressions of the Humanoid Robot

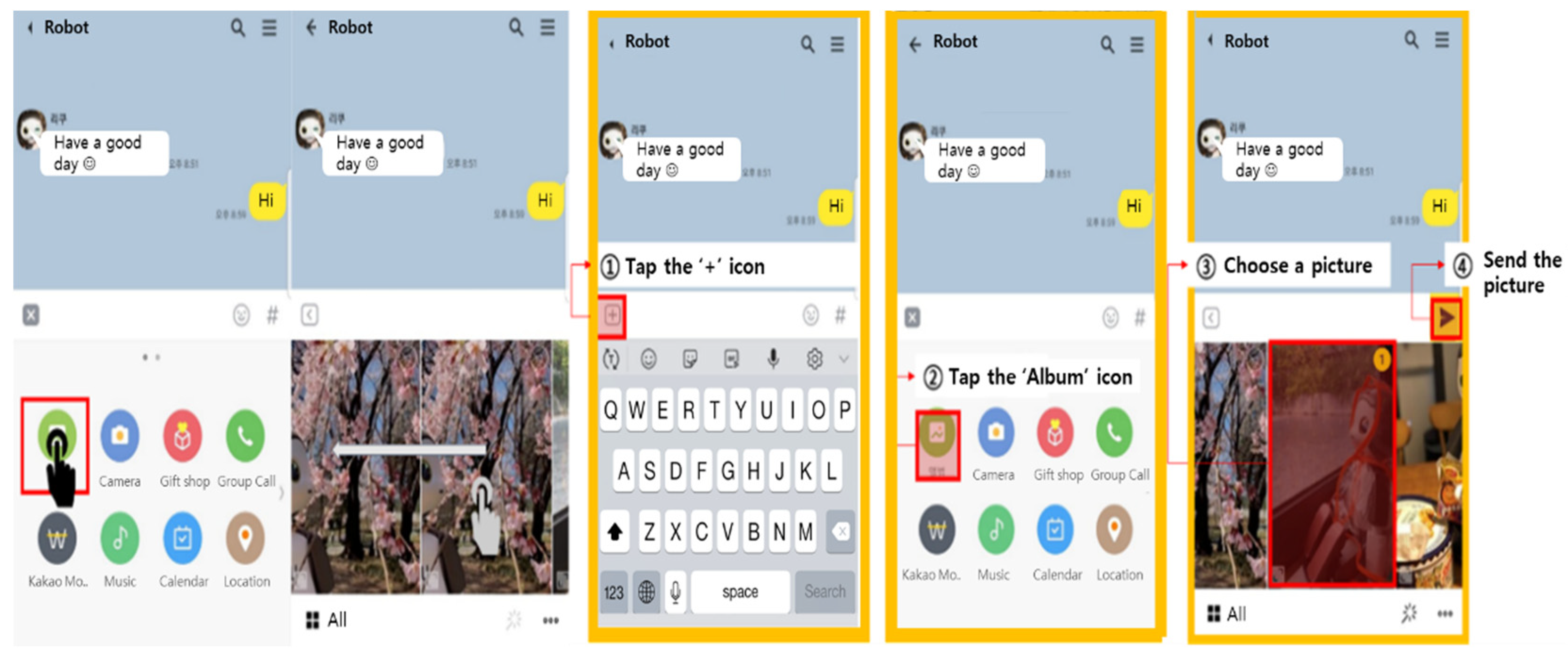

3.4. Target Application

3.5. Participants

3.6. Experimental Environment

3.7. Experimental Procedure

3.8. Measures

4. Results

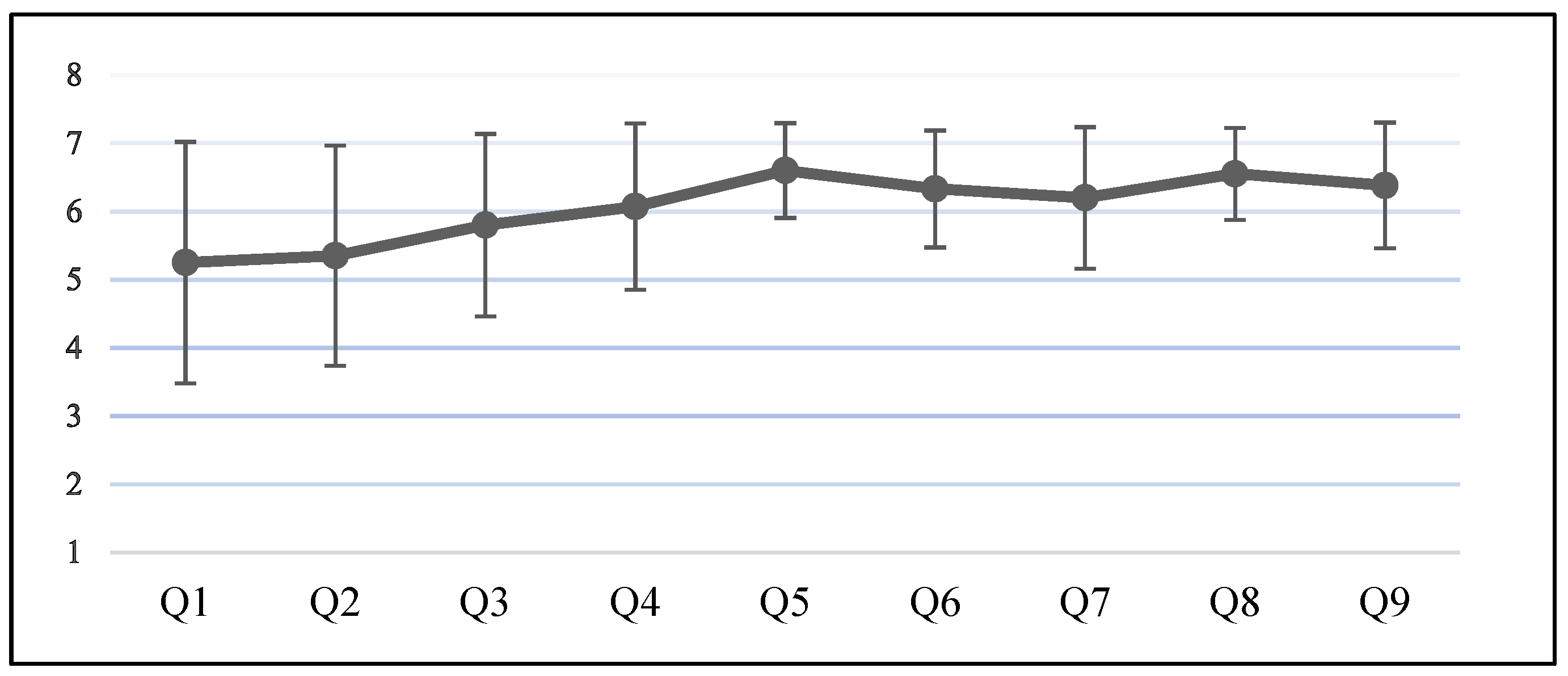

4.1. Descriptive Statistics

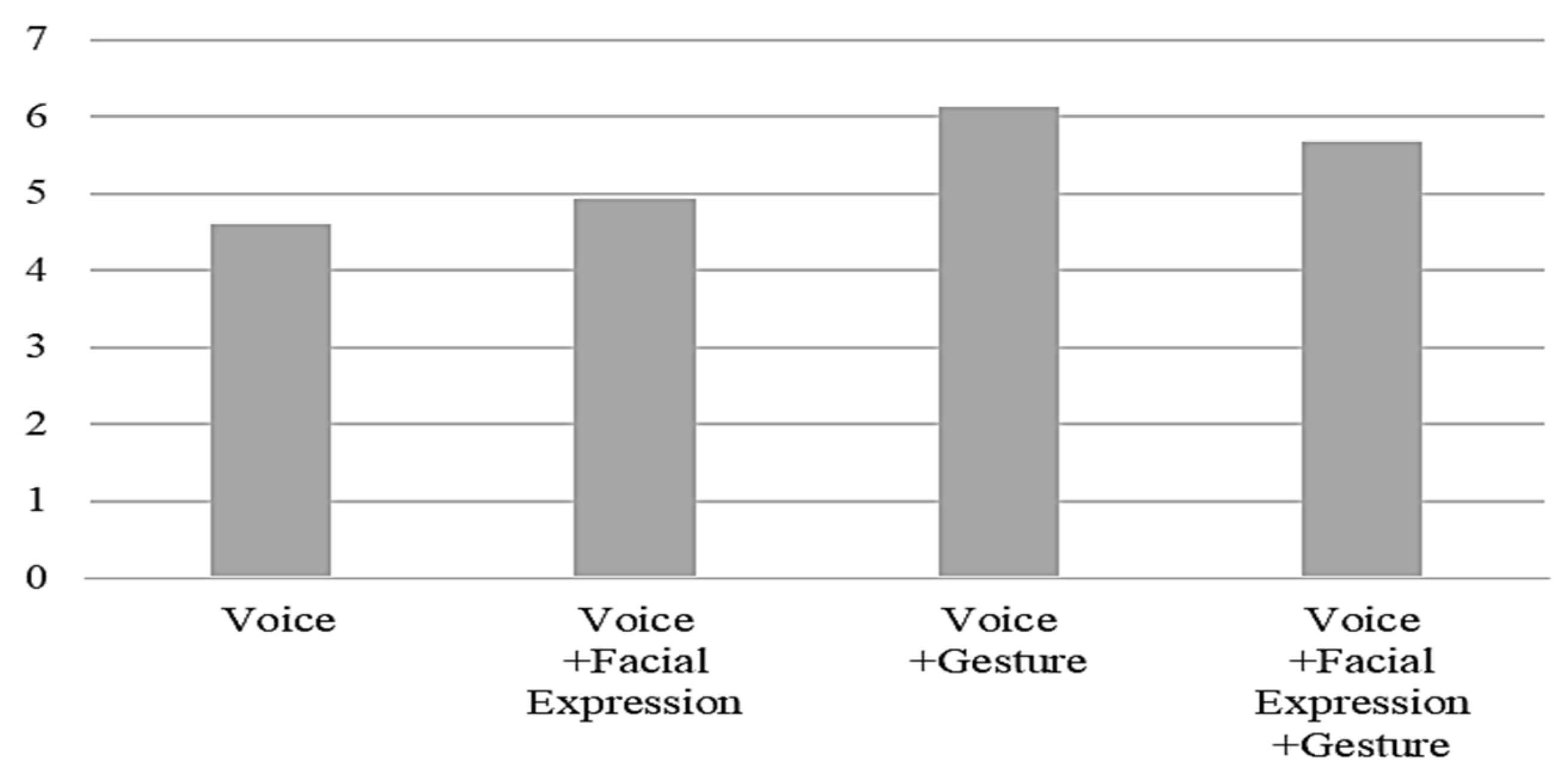

4.2. Subjective Evaluation according to Interaction Type

4.3. Task Performance according to the Interaction Type

4.3.1. Touch Errors according to the Interaction Type

4.3.2. Number of Retrainings according to the Interaction Type

4.3.3. Success Rate according to the Interaction Type

4.4. Results of a Survey on the Digital Divide

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Success | Failure |
|---|---|
| Well done. | Was it a little difficult? It’s okay. I will tell you again. |
| It is correct. Will you be able to do well alone next time? | Can’t you remember? It’s okay. I will tell you again. |

| Category | Details |
|---|---|
| General gestures | (1) Raise and lower both arms diagonally |
| | (2) Raise and lower left and right arms alternately |
| | (3) Raise and lower with one arm bent |
| | (4) Raise and lower one arm |
| General facial expression | (1) Smile |
| | (2) Wink |
| | (3) Blink |
| | (4) Concentrating |
| | (5) Sad |
| Feedback gestures | (1) Nod and clench a fist (in cases where tasks were successful) |
| | (2) Raise hands above head, shaking the body (in cases where tasks were successful) |
| | (3) Shake head from side to side, placing both hands on its chest (in cases where participants failed the task) |
| Feedback facial expression | (1) Smile (in cases where tasks were successful) |
| | (2) Sad (in cases where participants failed the task) |

| Task | Function (Sub-Function) |
|---|---|
| 1 | Creating a chat room (search for a specific person/check profile image/create a new chat room) |
| 2 | Sending and saving pictures |
| 3 | Forwarding messages or pictures |
| 4 | Additional features of chat rooms (turn off notifications and invite another person to an existing chat room) |
| 5 | Pinning a specific chat room to the top |
| 6 | Deleting sent messages |

| Category | Item | Mean | SD |
|---|---|---|---|
| Anthropomorphism | Q1. Did you feel that the robot had emotions? | 5.25 | 1.772 |
| | Q2. Did the robot feel like a human? | 5.35 | 1.614 |
| | Q3. Did you feel familiar with the robot? | 5.80 | 1.338 |
| Satisfaction | Q4. Was the tutoring interesting? | 6.07 | 1.219 |
| | Q5. Were you generally satisfied with the tutoring? | 6.60 | 0.694 |
| Perceived effectiveness | Q6. Could you understand the content the robot provided well? | 6.33 | 0.857 |
| | Q7. Could you focus on the tutoring? | 6.20 | 1.038 |
| | Q8. Do you think that you can learn through the robot? | 6.55 | 0.675 |
| | Q9. Do you think robots can educate? | 6.38 | 0.922 |

| Category | Item | Kruskal–Wallis H | p-Value |
|---|---|---|---|
| Anthropomorphism | Did you feel that the robot had emotions? | 8.921 | 0.030 * |
| | Did the robot feel like a human? | 11.38 | 0.010 * |
| | Did you feel familiar with the robot? | 8.368 | 0.039 * |
| Satisfaction | Was the tutoring interesting? | 7.620 | 0.055 |
| | Were you generally satisfied with the tutoring? | 6.511 | 0.089 |
| Perceived effectiveness | Could you understand the content the robot provided well? | 0.902 | 0.825 |
| | Could you focus on the tutoring? | 1.833 | 0.608 |
| | Do you think that you can learn through the robot? | 2.129 | 0.546 |
| | Do you think robots can educate? | 3.886 | 0.274 |

\* p < 0.05.
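
The p-values in the table above can be recovered from the H statistics, which may help readers check the significance markers. The sketch below is a minimal illustration, not the authors' analysis script. It assumes the usual chi-square approximation to the Kruskal–Wallis H distribution with df = 3 (four interaction types minus one); the function name `chi2_sf_df3` and the short item labels are hypothetical.

```python
import math


def chi2_sf_df3(x: float) -> float:
    """Upper-tail probability of a chi-square variable with 3 degrees of freedom.

    Closed form that holds only for df = 3 (assumed here: four groups, k - 1 = 3).
    """
    return math.erfc(math.sqrt(x / 2)) + math.sqrt(2 * x / math.pi) * math.exp(-x / 2)


# (short label, H, reported p) copied from the table; labels are abbreviations.
reported = [
    ("robot had emotions", 8.921, 0.030),
    ("robot felt like a human", 11.38, 0.010),
    ("familiar with the robot", 8.368, 0.039),
    ("tutoring interesting", 7.620, 0.055),
    ("generally satisfied", 6.511, 0.089),
    ("understood the content", 0.902, 0.825),
    ("could focus", 1.833, 0.608),
    ("can learn through the robot", 2.129, 0.546),
    ("robots can educate", 3.886, 0.274),
]

# Recompute each p-value and keep it next to the reported one.
results = [(label, h, chi2_sf_df3(h), p) for label, h, p in reported]

for label, h, p_calc, p_rep in results:
    flag = " *" if p_calc < 0.05 else ""
    print(f"{label:<28} H={h:<6} p={p_calc:.3f}{flag} (reported {p_rep:.3f})")
```

Under these assumptions, only the three anthropomorphism items fall below 0.05, which agrees with the asterisks in the table.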

| Measurement | Interaction Method | N | Mean Rank | df | Kruskal–Wallis H | p-Value |
|---|---|---|---|---|---|---|
| Touch errors | Voice | 14 | 28.00 | 3 | 0.328 | 0.955 |
| | Voice + Facial expression | 14 | 30.54 | | | |
| | Voice + Gesture | 14 | 27.64 | | | |
| | Voice + Facial expression + Gesture | 14 | 27.82 | | | |
| Retraining | Voice | 14 | 27.07 | 3 | 0.709 | 0.871 |
| | Voice + Facial expression | 14 | 30.86 | | | |
| | Voice + Gesture | 14 | 28.79 | | | |
| | Voice + Facial expression + Gesture | 14 | 27.29 | | | |
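
The mean ranks in the table above can be sanity-checked with the textbook Kruskal–Wallis formula, H = 12 / (N(N + 1)) · Σ nᵢ (R̄ᵢ − (N + 1)/2)². The sketch below is illustrative only: it computes H without any tie correction, and the function and variable names are hypothetical. Whether the reported statistics (0.328 and 0.709) include a tie correction is not stated in the source, so that is an assumption, not a finding.

```python
def kruskal_wallis_h_from_mean_ranks(sizes, mean_ranks):
    """Kruskal-Wallis H computed from group sizes and mean ranks, without tie correction."""
    n_total = sum(sizes)
    grand_mean_rank = (n_total + 1) / 2  # average rank over all observations
    spread = sum(n * (r - grand_mean_rank) ** 2 for n, r in zip(sizes, mean_ranks))
    return 12 / (n_total * (n_total + 1)) * spread


# Group sizes and mean ranks copied from the table (four interaction methods).
sizes = [14, 14, 14, 14]
touch_ranks = [28.00, 30.54, 27.64, 27.82]
retrain_ranks = [27.07, 30.86, 28.79, 27.29]

# The size-weighted average of the mean ranks should equal (N + 1) / 2 = 28.5.
touch_avg = sum(n * r for n, r in zip(sizes, touch_ranks)) / sum(sizes)
retrain_avg = sum(n * r for n, r in zip(sizes, retrain_ranks)) / sum(sizes)

h_touch = kruskal_wallis_h_from_mean_ranks(sizes, touch_ranks)
h_retrain = kruskal_wallis_h_from_mean_ranks(sizes, retrain_ranks)

print(f"average rank: touch={touch_avg:.2f}, retraining={retrain_avg:.2f}")
print(f"uncorrected H: touch={h_touch:.3f}, retraining={h_retrain:.3f}")
```

The uncorrected values come out at roughly 0.30 and 0.48, somewhat below the reported statistics. A tie correction can only increase H, so the gap would be consistent with many tied counts in the error and retraining data, but this remains a guess.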

| Interaction Type | Task Performance: Success | Task Performance: Failure | χ² | p-Value |
|---|---|---|---|---|
| Voice | 10 (71.4%) | 4 (28.6%) | 0.876 | 0.831 |
| Voice + Facial expression | 8 (57.1%) | 6 (42.9%) | | |
| Voice + Gesture | 9 (64.3%) | 5 (35.7%) | | |
| Voice + Facial expression + Gesture | 10 (71.4%) | 4 (28.6%) | | |
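
The two test values in the table above (0.876 and 0.831) match a Pearson chi-square test of independence on the 4 × 2 success/failure counts. The sketch below shows the arithmetic as a minimal, standard-library illustration; it is not the authors' code, and the function names `pearson_chi_square` and `chi2_sf_df3` are hypothetical. The closed-form p-value is valid only for df = 3, which is what a 4 × 2 table gives.

```python
import math


def pearson_chi_square(table):
    """Return (statistic, degrees of freedom) for a contingency table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of rows and columns.
            expected = row_totals[i] * col_totals[j] / total
            stat += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return stat, dof


def chi2_sf_df3(x: float) -> float:
    """Upper-tail chi-square probability; closed form for df = 3 only."""
    return math.erfc(math.sqrt(x / 2)) + math.sqrt(2 * x / math.pi) * math.exp(-x / 2)


# Rows: Voice, Voice + Facial expression, Voice + Gesture, all three combined.
# Columns: success, failure (counts copied from the table).
counts = [[10, 4], [8, 6], [9, 5], [10, 4]]

chi2, dof = pearson_chi_square(counts)
p_value = chi2_sf_df3(chi2)  # appropriate here because dof == 3

print(f"chi2={chi2:.3f}, df={dof}, p={p_value:.3f}")
```

Note that the expected failure count per group is 4.75, just under the common rule-of-thumb threshold of 5, so the chi-square approximation is somewhat rough at this sample size.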

| Item | N | Mean | SD |
|---|---|---|---|
| Do you think training by robot could be helpful in the case of other IT devices? | 14 | 6.3 | 2.11 |
| Was the training by robot burdensome or inconvenient? (1 = very burdensome, 7 = not very burdensome) | 14 | 5.7 | 2.37 |
| Do you think that training by robot can help to build social relationships? | 14 | 6.3 | 1.27 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jung, S.; Ahn, S.H.; Ha, J.; Bahn, S. A Study on the Effectiveness of IT Application Education for Older Adults by Interaction Method of Humanoid Robots. Int. J. Environ. Res. Public Health 2022, 19, 10988. https://doi.org/10.3390/ijerph191710988
