An Exploratory Study on the Acoustic Musical Properties to Decrease Self-Perceived Anxiety

Abstract

1. Introduction

- (i) To investigate the relaxing properties of four contrasting musical samples from different Western historical traditions. Two of these traditions, Baroque and Impressionism, have already been investigated in previous works [11,20]; the other two, Gregorian chant and Expressionism, are still under-researched and were chosen for their contrasting characteristics with respect to the former two. To assess low-intensity states of anxiety, which are likely more common in everyday situations, anxiety induced through Mood Induction Procedures (MIP) was preferred over anxiety arising in medical settings; note that MIP is expected to elicit only low-arousal emotions [48].

- (ii) To assess whether music capable of reducing listeners’ self-perceived (induced) anxiety differs acoustically from music without this capability. To this end, well-established audio feature sets tailored to emotion modelling in speech and music processing are considered [39,49,50]. Feature sets from both domains are used because speech and music are communication channels that share the same acoustic code for expressing emotions [41,51].

- (iii) To connect the extensive research on the treatment of anxiety in music psychology and music therapy with the steadily growing body of emotion research in Music Information Retrieval (MIR), in particular Music Emotion Recognition (MER). This connection should help identify the musical and acoustic properties suitable for reducing listeners’ anxiety.
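Objective (ii) compares music through feature sets built from frame-level low-level descriptors (LLDs). The sketch below is a minimal, standard-library-only illustration of what such descriptors are: it cuts a mono signal into frames and computes RMS energy, spectral centroid, and spectral flux for each frame. It is not the extraction pipeline behind ComParE or eGeMAPS (those sets are typically computed with a toolkit such as openSMILE [79]); the helper names, frame sizes, the naive DFT, and the flux definition (sum of squared differences between consecutive magnitude spectra) are assumptions chosen for readability.

```python
import cmath
import math

def frame_signal(x, frame_len, hop):
    """Split a mono signal into frames; a trailing partial frame is dropped."""
    return [x[i:i + frame_len] for i in range(0, len(x) - frame_len + 1, hop)]

def magnitude_spectrum(frame):
    """Naive DFT magnitudes for bins 0..N/2 (O(N^2), acceptable for a sketch)."""
    n = len(frame)
    return [abs(sum(s * cmath.exp(-2j * math.pi * k * t / n) for t, s in enumerate(frame)))
            for k in range(n // 2 + 1)]

def rms_energy(frame):
    """Root-mean-square amplitude of one frame."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))

def spectral_centroid(mag, sr, n_fft):
    """Magnitude-weighted mean frequency in Hz (bin k sits at k * sr / n_fft)."""
    total = sum(mag)
    if total == 0:
        return 0.0
    return sum(k * sr / n_fft * m for k, m in enumerate(mag)) / total

def spectral_flux(prev_mag, mag):
    """Assumed definition: sum of squared differences between consecutive spectra."""
    return sum((m - p) ** 2 for p, m in zip(prev_mag, mag))

def extract_llds(x, sr, frame_len=512, hop=256):
    """Hypothetical helper returning one dict of LLDs per frame."""
    llds, prev = [], None
    for frame in frame_signal(x, frame_len, hop):
        mag = magnitude_spectrum(frame)
        llds.append({
            "rms": rms_energy(frame),
            "centroid_hz": spectral_centroid(mag, sr, len(frame)),
            "flux": 0.0 if prev is None else spectral_flux(prev, mag),
        })
        prev = mag
    return llds

# Usage: a 1 kHz sine sampled at 8 kHz, cut into four 64-sample frames.
sr = 8000
x = [math.sin(2 * math.pi * 1000 * t / sr) for t in range(256)]
feats = extract_llds(x, sr, frame_len=64, hop=64)
```

For a steady sine, the centroid sits at the tone frequency, the RMS is close to 1/√2, and the flux between identical frames is close to zero; real feature sets then summarize such per-frame values with functionals (means, percentiles, and so on) over a whole excerpt.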

2. Materials and Methods

2.1. Musical Stimuli

2.2. Anxiety Induction and Measurement

2.3. User Study

2.4. Acoustic Features

3. Results

3.1. User Study

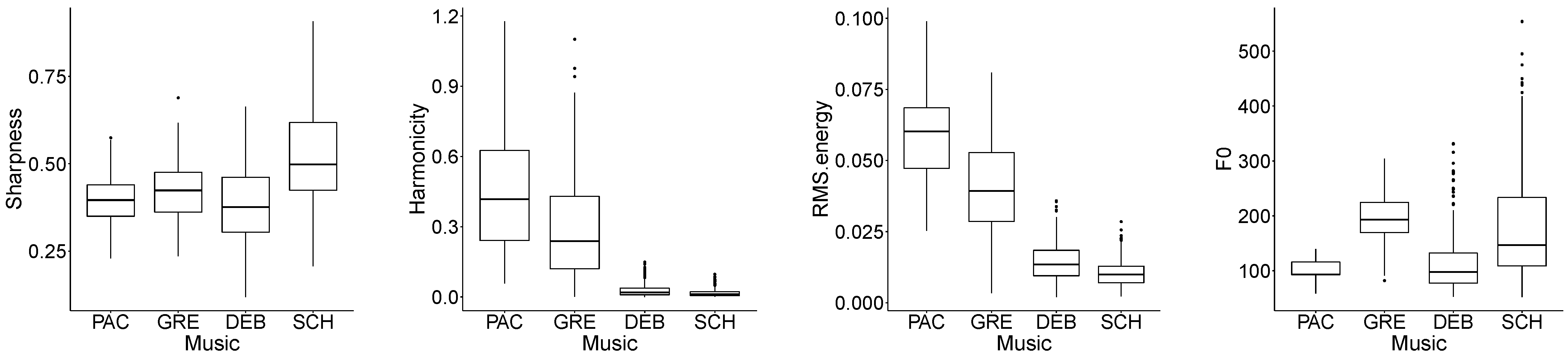

3.2. Acoustic Features

4. Discussion

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. American Psychological Association. Anxiety Definition. Available online: https://www.apa.org/topics/anxiety (accessed on 5 December 2021).

2. Jackson, P.; Everts, J. Anxiety as social practice. Environ. Plan. A 2010, 42, 2791–2806.

3. Deacon, B.J.; Abramowitz, J.S. Patients’ perceptions of pharmacological and cognitive-behavioral treatments for anxiety disorders. Behav. Ther. 2005, 36, 139–145.

4. De Witte, M.; Lindelauf, E.; Moonen, X.; Stams, G.J.; Hooren, S.V. Music therapy interventions for stress reduction in adults with mild intellectual disabilities: Perspectives from clinical practice. Front. Psychol. 2020, 11, 1–15.

5. De Witte, M.; Spruit, A.; van Hooren, S.; Moonen, X.; Stams, G.J. Effects of music interventions on stress-related outcomes: A systematic review and two meta-analyses. Health Psychol. Rev. 2020, 14, 294–324.

6. Allen, K.; Golden, L.; Izzo, J.; Ching, M.I.; Forrest, A.; Niles, C.R.; Niswander, P.R.; Barlow, J.C. Normalization of hypertensive responses during ambulatory surgical stress by perioperative music. Psychosom. Med. 2001, 63, 487–492.

7. Jiang, J.; Zhou, L.; Rickson, D.; Jiang, C. The effects of sedative and stimulative music on stress reduction depend on music preference. Arts Psychother. 2013, 40, 201–205.

8. Nilsson, U. The anxiety- and pain-reducing effects of music interventions. Assoc. Perioper. Regist. Nurses J. 2008, 87, 780–807.

9. Van Goethem, A.; Sloboda, J. The functions of music for affect regulation. Music. Sci. 2011, 15, 208–228.

10. Mok, E.; Wong, K.Y. Effects of music on patient anxiety. Assoc. Perioper. Regist. Nurses J. 2003, 77, 396–410.

11. Pelletier, C.L. The effect of music on decreasing arousal due to stress. J. Music Ther. 2004, 41, 192–214.

12. Linnemann, A.; Ditzen, B.; Strahler, J.; Doerr, J.M.; Nater, U.M. Music listening as a means of stress reduction in daily life. Psychoneuroendocrinology 2015, 60, 82–90.

13. Linnemann, A.; Strahler, J.; Nater, U.M. The stress-reducing effect of music listening varies depending on the social context. Psychoneuroendocrinology 2016, 72, 97–105.

14. Linnemann, A.; Wenzel, M.; Grammes, J.; Kubiak, T.; Nater, U.M. Music listening and stress in daily life—A matter of timing. Int. J. Behav. Med. 2018, 25, 223–230.

15. Ilkkaya, N.K.; Ustun, F.E.; Sener, E.B.; Kaya, C.; Ustun, Y.B.; Koksal, E.; Kocamanoglu, I.S.; Ozkan, F. The effects of music, white noise, and ambient noise on sedation and anxiety in patients under spinal anesthesia during surgery. J. PeriAnesthesia Nurs. 2014, 29, 418–426.

16. Baird, A.; Parada-Cabaleiro, E.; Fraser, C.; Hantke, S.; Schuller, B. The perceived emotion of isolated synthetic audio: The EmoSynth dataset and results. In Proceedings of the Audio Mostly on Sound in Immersion and Emotion; ACM: North Wales, UK, 2018; pp. 1–8.

17. Parada-Cabaleiro, E.; Baird, A.; Cummins, N.; Schuller, B. Stimulation of psychological listener experiences by semi-automatically composed electroacoustic environments. In Proceedings of the International Conference on Multimedia and Expo, Hong Kong, China, 10–14 July 2017; pp. 1051–1056.

18. Rohner, S.; Miller, R. Degrees of familiar and affective music and their effects on state anxiety. J. Music Ther. 1980, 17, 2–15.

19. Iwanaga, M.; Moroki, Y. Subjective and physiological responses to music stimuli controlled over activity and preference. J. Music Ther. 1999, 36, 26–38.

20. Lee, J.; Orsillo, S. Investigating cognitive flexibility as a potential mechanism of mindfulness in generalized anxiety disorder. J. Behav. Ther. Exp. Psychiatry 2014, 45, 208–216.

21. Rad, M.S.; Martingano, A.J.; Ginges, J. Toward a psychology of Homo sapiens: Making psychological science more representative of the human population. Proc. Natl. Acad. Sci. USA 2018, 115, 11401–11405.

22. Kennedy, M.; Kennedy, J. The Oxford Dictionary of Music; Oxford University Press: Oxford, UK, 2013.

23. Johnson, B.; Raymond, S.; Goss, J. Perioperative music or headsets to decrease anxiety. J. PeriAnesthesia Nurs. 2012, 27, 146–154.

24. Bailey, L. Strategies for decreasing patient anxiety in the perioperative setting. Assoc. Perioper. Regist. Nurses J. 2010, 92, 445–460.

25. Chuang, C.H.; Chen, P.C.; Lee, C.S.; Chen, C.H.; Tu, Y.K.; Wu, S.C. Music intervention for pain and anxiety management of the primiparous women during labour: A systematic review and meta-analysis. J. Adv. Nurs. 2019, 75, 723–733.

26. van Willenswaard, K.C.; Lynn, F.; McNeill, J.; McQueen, K.; Dennis, C.L.; Lobel, M.; Alderdice, F. Music interventions to reduce stress and anxiety in pregnancy: A systematic review and meta-analysis. BMC Psychiatry 2017, 17, 1–9.

27. Jiang, J.; Rickson, D.; Jiang, C. The mechanism of music for reducing psychological stress: Music preference as a mediator. Arts Psychother. 2016, 48, 62–68.

28. Lee, K.S.; Jeong, H.C.; Yim, J.E.; Jeon, M.Y. Effects of music therapy on the cardiovascular and autonomic nervous system in stress-induced university students: A randomized controlled trial. J. Altern. Complement. Med. 2016, 22, 59–65.

29. Thoma, M.V.; La Marca, R.; Brönnimann, R.; Finkel, L.; Ehlert, U.; Nater, U.M. The effect of music on the human stress response. PLoS ONE 2013, 8, e70156.

30. Knight, W.; Rickard, N. Relaxing music prevents stress-induced increases in subjective anxiety, systolic blood pressure, and heart rate in healthy males and females. J. Music Ther. 2001, 38, 254–272.

31. Juslin, P.N.; Västfjäll, D. Emotional responses to music: The need to consider underlying mechanisms. Behav. Brain Sci. 2008, 31, 559–575.

32. Susino, M.; Schubert, E. Cross-cultural anger communication in music: Towards a stereotype theory of emotion in music. Music. Sci. 2017, 21, 60–74.

33. Sharman, L.; Dingle, G.A. Extreme metal music and anger processing. Front. Hum. Neurosci. 2015, 9, 1–11.

34. Susino, M.; Schubert, E. Cultural stereotyping of emotional responses to music genre. Psychol. Music 2019, 47, 342–357.

35. Picard, R.W. Affective Computing; MIT Press: Cambridge, MA, USA, 2000.

36. Calvo, R.A.; D’Mello, S.; Gratch, J.M.; Kappas, A. The Oxford Handbook of Affective Computing; Oxford University Press: New York, NY, USA, 2015.

37. Yang, X.; Dong, Y.; Li, J. Review of data features-based music emotion recognition methods. Multimed. Syst. 2018, 24, 365–389.

38. Coutinho, E.; Cangelosi, A. Musical emotions: Predicting second-by-second subjective feelings of emotion from low-level psychoacoustic features and physiological measurements. Emotion 2011, 11, 921–937.

39. Coutinho, E.; Dibben, N. Psychoacoustic cues to emotion in speech prosody and music. Cogn. Emot. 2013, 27, 658–684.

40. Panda, R.; Malheiro, R.; Paiva, R.P. Novel audio features for music emotion recognition. IEEE Trans. Affect. Comput. 2018, 11, 614–626.

41. Weninger, F.; Eyben, F.; Schuller, B.W.; Mortillaro, M.; Scherer, K.R. On the acoustics of emotion in audio: What speech, music, and sound have in common. Front. Psychol. 2013, 4, 1–12.

42. Larsen, R.J.; Diener, E. Promises and problems with the circumplex model of emotion. In Review of Personality and Social Psychology; Sage Publications, Inc.: New York, NY, USA, 1992; pp. 25–59.

43. Russell, J.A. A circumplex model of affect. J. Personal. Soc. Psychol. 1980, 39, 1161–1178.

44. Eyben, F.; Salomão, G.L.; Sundberg, J.; Scherer, K.R.; Schuller, B.W. Emotion in the singing voice—A deeper look at acoustic features in the light of automatic classification. EURASIP J. Audio Speech Music Process. 2015, 1, 1–9.

45. Konečni, V.J. Does music induce emotion? A theoretical and methodological analysis. Psychol. Aesthet. Creat. Arts 2008, 2, 115–129.

46. Lundqvist, L.; Carlsson, F.; Hilmersson, P.; Juslin, P. Emotional responses to music: Experience, expression, and physiology. Psychol. Music 2009, 37, 61–90.

47. Vempala, N.N.; Russo, F.A. Modeling music emotion judgments using machine learning methods. Front. Psychol. 2018, 8, 1–12.

48. Parada-Cabaleiro, E.; Costantini, G.; Batliner, A.; Schmitt, M.; Schuller, B.W. DEMoS: An Italian emotional speech corpus. Elicitation methods, machine learning, and perception. Lang. Resour. Eval. 2020, 54, 341–383.

49. Schuller, B.; Steidl, S.; Batliner, A.; Vinciarelli, A.; Scherer, K.; Ringeval, F.; Chetouani, M.; Weninger, F.; Eyben, F.; Marchi, E.; et al. The Interspeech 2013 computational paralinguistics challenge: Social signals, conflict, emotion, autism. In Proceedings of the Annual Conference of the International Speech Communication Association (Interspeech), Lyon, France, 25–29 August 2013; pp. 148–152.

50. Eyben, F.; Scherer, K.R.; Schuller, B.W.; Sundberg, J.; André, E.; Busso, C.; Devillers, L.Y.; Epps, J.; Laukka, P.; Narayanan, S.S.; et al. The Geneva minimalistic acoustic parameter set (GeMAPS) for voice research and affective computing. IEEE Trans. Affect. Comput. 2015, 7, 190–202.

51. Nordström, H.; Laukka, P. The time course of emotion recognition in speech and music. J. Acoust. Soc. Am. 2019, 145, 3058–3074.

52. Panteleeva, Y.; Ceschi, G.; Glowinski, D.; Courvoisier, D.S.; Grandjean, D. Music for anxiety? Meta-analysis of anxiety reduction in non-clinical samples. Psychol. Music 2018, 46, 473–487.

53. Labbé, E.; Schmidt, N.; Babin, J.; Pharr, M. Coping with stress: The effectiveness of different types of music. Appl. Psychophysiol. Biofeedback 2007, 32, 163–168.

54. Han, L.; Li, J.P.; Sit, J.W.; Chung, L.; Jiao, Z.Y.; Ma, W.G. Effects of music intervention on physiological stress response and anxiety level of mechanically ventilated patients in China: A randomised controlled trial. J. Clin. Nurs. 2010, 19, 978–987.

55. Parada-Cabaleiro, E.; Batliner, A.; Schuller, B.W. The effect of music in anxiety reduction: A psychological and physiological assessment. Psychol. Music 2021, 49, 1637–1653.

56. Allen, K.; Blascovich, J. Effects of music on cardiovascular reactivity among surgeons. J. Am. Med. Assoc. 1994, 272, 882–884.

57. Chafin, S.; Roy, M.; Gerin, W.; Christenfeld, N. Music can facilitate blood pressure recovery from stress. Br. J. Health Psychol. 2004, 9, 393–403.

58. Voices of Music. Available online: https://www.voicesofmusic.org/ (accessed on 5 December 2021).

59. Apel, W. The Harvard Dictionary of Music; Harvard University Press: Cambridge, MA, USA, 2003.

60. Grout, D.J.; Palisca, C.V. A History of Western Music; Norton: New York, NY, USA, 2001.

61. Delmonte, M.M. Meditation and anxiety reduction: A literature review. Clin. Psychol. Rev. 1985, 5, 91–102.

62. Bartkowski, J.P.; Acevedo, G.A.; Van Loggerenberg, H. Prayer, meditation, and anxiety: Durkheim revisited. Religions 2017, 8, 1–14.

63. Bolwerk, C.A.L. Effects of relaxing music on state anxiety in myocardial infarction patients. Crit. Care Nurs. Q. 1990, 13, 63–72.

64. Teixeira-Silva, F.; Prado, G.B.; Ribeiro, L.C.G.; Leite, J.R. The anxiogenic video-recorded Stroop Color–Word Test: Psychological and physiological alterations and effects of diazepam. Physiol. Behav. 2004, 82, 215–230.

65. MacLeod, C.M. Half a century of research on the Stroop effect: An integrative review. Psychol. Bull. 1991, 109, 163–203.

66. Scarpina, F.; Tagini, S. The Stroop color and word test. Front. Psychol. 2017, 8, 1–8.

67. Stroop, J.R. Studies of interference in serial verbal reactions. J. Exp. Psychol. 1935, 18, 643–662.

68. Marteau, T.; Bekker, H. The development of a six-item short-form of the state scale of the Spielberger State-Trait Anxiety Inventory. Br. J. Clin. Psychol. 1992, 31, 301–306.

69. Daniel, E. Music used as anti-anxiety intervention for patients during outpatient procedures: A review of the literature. Complement. Ther. Clin. Pract. 2016, 22, 21–23.

70. Hammer, S. The effects of guided imagery through music on state and trait anxiety. J. Music Ther. 1996, 33, 47–70.

71. Bradt, J.; Dileo, C. Music for stress and anxiety reduction in coronary heart disease patients. Cochrane Database Syst. Rev. 2009, 2, CD006577.

72. Typeform. Available online: https://www.typeform.com/ (accessed on 5 December 2021).

73. Cullen, C.; Vaughan, B.; Kousidis, S.; McAuley, J. Emotional speech corpus construction, annotation and distribution. In Proceedings of the Workshop on Corpora for Research on Emotion and Affect, Marrakesh, Morocco, 26–27 May 2008; pp. 32–37.

74. Wasserstein, R.L.; Lazar, N.A. The ASA’s statement on p-values: Context, process, and purpose. Am. Stat. 2016, 70, 129–133.

75. Kotrlik, J.; Williams, H. The incorporation of effect size in information technology, learning, and performance research. Inf. Technol. Learn. Perform. J. 2003, 21, 1–7.

76. Coutinho, E.; Deng, J.; Schuller, B. Transfer learning emotion manifestation across music and speech. In Proceedings of the International Joint Conference on Neural Networks, Beijing, China, 6–11 July 2014; pp. 3592–3598.

77. Knox, D.; Beveridge, S.; Mitchell, L.A.; MacDonald, R.A. Acoustic analysis and mood classification of pain-relieving music. J. Acoust. Soc. Am. 2011, 130, 1673–1682.

78. Kirch, W. (Ed.) Pearson’s correlation coefficient. In Encyclopedia of Public Health; Springer: Dordrecht, The Netherlands, 2008; pp. 1090–1091.

79. Eyben, F.; Wöllmer, M.; Schuller, B. OpenSMILE: The Munich versatile and fast open-source audio feature extractor. In Proceedings of the International Conference on Multimedia, Florence, Italy, 25–29 October 2010; pp. 1459–1462.

80. Eyben, F. Real-Time Speech and Music Classification by Large Audio Feature Space Extraction; Springer: Cham, Switzerland, 2015.

81. Gallagher, M. The Music Tech Dictionary: A Glossary of Audio-Related Terms and Technologies; Nelson Education: Boston, MA, USA, 2009.

82. Brown, M.B.; Forsythe, A.B. 372: The ANOVA and multiple comparisons for data with heterogeneous variances. Biometrics 1974, 30, 719–724.

83. Bagheri, A.; Midi, H.; Imon, A.H.M.R. Two-step robust diagnostic method for identification of multiple high leverage points. J. Math. Stat. 2009, 5, 97–101.

84. Chatfield, C.; Collins, A. Introduction to Multivariate Analysis; Springer: New York, NY, USA, 2013.

85. Jacoby, N.; Margulis, E.H.; Clayton, M.; Hannon, E.; Honing, H.; Iversen, J.; Klein, T.R.; Mehr, S.A.; Pearson, L.; Peretz, I.; et al. Cross-cultural work in music cognition: Challenges, insights, and recommendations. Music Percept. 2020, 37, 185–195.

86. Schedl, M.; Gómez, E.; Trent, E.S.; Tkalčič, M.; Eghbal-Zadeh, H.; Martorell, A. On the interrelation between listener characteristics and the perception of emotions in classical orchestra music. IEEE Trans. Affect. Comput. 2018, 9, 507–525.

87. Becker, J. Exploring the habitus of listening. In Handbook of Music and Emotion: Theory, Research, and Applications; Juslin, P., Sloboda, J., Eds.; Oxford University Press: New York, NY, USA, 2010; pp. 127–157.

88. Gómez-Cañón, J.S.; Cano, E.; Eerola, T.; Herrera, P.; Hu, X.; Yang, Y.H.; Gómez, E. Music emotion recognition: Toward new, robust standards in personalized and context-sensitive applications. IEEE Signal Process. Mag. 2021, 38, 106–114.

89. Zentner, M.; Eerola, T. Self-report measures and models. In Handbook of Music and Emotion: Theory, Research, and Applications; Juslin, P., Sloboda, J., Eds.; Oxford University Press: Boston, MA, USA, 2010; pp. 367–400.

| Feature set | Description | LLDs |
|---|---|---|
| EmoMusic | Eight descriptors: roll off, sharpness, spectral centroid, energy, harmonicity, loudness, F0, spectral flux | 8 |
| ComParE | Four types of descriptors: spectral (41), Mel-Frequency Cepstral Coefficients, MFCCs (14), prosodic (5), sound quality (5) | 65 |
| eGeMAPS | Three types of descriptors: spectral (7), frequency (11), energy/amplitude (7) | 25 |
| NoAnx | Eleven descriptors: roll off, sharpness, spectral centroid, energy, harmonicity, loudness, F0, spectral flux, alpha ratio, Hammarberg index, MFCC2 | 11 |
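Two of the NoAnx descriptors, alpha ratio and Hammarberg index, are less familiar than spectral centroid or roll-off. The sketch below computes them, together with spectral roll-off, from a single power spectrum. It assumes the eGeMAPS band definitions (alpha ratio: summed energy in 50–1000 Hz over summed energy in 1–5 kHz; Hammarberg index: strongest peak in 0–2 kHz over strongest peak in 2–5 kHz), both expressed in dB, and a 90% roll-off point. The helper functions are hypothetical; openSMILE's own implementation may differ in details such as band-edge handling and spectral scaling.

```python
import math

def _band(freqs, lo, hi):
    """Indices of bins with lo <= f < hi; raises if the band is empty."""
    idx = [i for i, f in enumerate(freqs) if lo <= f < hi]
    if not idx:
        raise ValueError(f"no spectral bins in [{lo}, {hi}) Hz")
    return idx

def alpha_ratio_db(freqs, power):
    """Summed energy in 50-1000 Hz over summed energy in 1-5 kHz, in dB."""
    low = sum(power[i] for i in _band(freqs, 50.0, 1000.0))
    high = sum(power[i] for i in _band(freqs, 1000.0, 5000.0))
    return 10.0 * math.log10(low / high)

def hammarberg_index_db(freqs, power):
    """Strongest peak in 0-2 kHz over strongest peak in 2-5 kHz, in dB."""
    peak_low = max(power[i] for i in _band(freqs, 0.0, 2000.0))
    peak_high = max(power[i] for i in _band(freqs, 2000.0, 5000.0))
    return 10.0 * math.log10(peak_low / peak_high)

def spectral_rolloff_hz(freqs, power, fraction=0.9):
    """Lowest bin frequency at which the cumulative energy reaches `fraction` of the total."""
    threshold = fraction * sum(power)
    cumulative = 0.0
    for f, p in zip(freqs, power):
        cumulative += p
        if cumulative >= threshold:
            return f
    return freqs[-1]

# Usage: a toy power spectrum with 500 Hz bins and a strong component at 500 Hz.
freqs = [500.0 * i for i in range(12)]          # 0, 500, ..., 5500 Hz
power = [1.0] * 12
power[1] = 10.0
alpha = alpha_ratio_db(freqs, power)            # 10 * log10(10 / 8) dB
hammarberg = hammarberg_index_db(freqs, power)  # 10 * log10(10 / 1) = 10 dB
rolloff = spectral_rolloff_hz(freqs, power)     # 4500.0 Hz
```

Both ratios grow when energy is concentrated in the low band, which is why they are commonly read as indicators of a "softer", less bright spectral balance.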

| Condition | Diff | lwr | upr | p | d |
|---|---|---|---|---|---|
| Control | − | − | − | − | − |
| Pachelbel | 0.013 | | | | |
| Gregorian | | | | | |
| Debussy | | | | | |
| Schönberg | | | | | |
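The d column reports a standardized effect size for each condition relative to Control. Assuming that d is Cohen's d with a pooled standard deviation and that Diff is the condition mean minus the control mean (neither is stated in this section), the computation can be sketched as follows. The lwr/upr bounds and p-values, which appear to come from a Tukey-style post hoc test, need the studentized range distribution and are not reproduced here. The helper `compare_with_control` is hypothetical and the scores are invented.

```python
import math
from statistics import mean, variance

def cohens_d(treatment, control):
    """(mean(treatment) - mean(control)) / pooled SD, using n - 1 sample variances."""
    n1, n2 = len(treatment), len(control)
    pooled_var = ((n1 - 1) * variance(treatment) + (n2 - 1) * variance(control)) / (n1 + n2 - 2)
    return (mean(treatment) - mean(control)) / math.sqrt(pooled_var)

def compare_with_control(groups, control="Control"):
    """Hypothetical helper: {condition: (mean difference, d)} for every non-control group."""
    ref = groups[control]
    return {name: (mean(values) - mean(ref), cohens_d(values, ref))
            for name, values in groups.items() if name != control}

# Invented anxiety scores, for illustration only (not the study's data).
groups = {"Control": [1, 2, 3], "Condition A": [3, 4, 5], "Condition B": [0, 1, 2]}
results = compare_with_control(groups)
# results["Condition A"] == (2, 2.0); results["Condition B"] == (-1, -1.0)
```

With a negative sign convention like this one, a condition that lowers anxiety scores relative to Control yields a negative Diff and a negative d.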

| Feature | Welch ANOVA F | Welch df1 | Welch df2 | Games–Howell Diff (Gregorian) | g (Gregorian) | Diff (Debussy) | g (Debussy) | Diff (Schönberg) | g (Schönberg) |
|---|---|---|---|---|---|---|---|---|---|
| Timbre | | | | | | | | | |
| Roll off | | 3 | 1129 | | | | | | |
| Sharpness | | 3 | 1129 | | | | | | |
| Centroid | | 3 | 1129 | | | | | | |
| Harmonicity | | 3 | 1129 | | | | | | |
| MFCC | | 3 | 1129 | | | | | | |
| Dynamics | | | | | | | | | |
| RMS.energy | | 3 | 1129 | | | | | | |
| Loudness | | 3 | 1129 | | | | | | |
| Pitch | | | | | | | | | |
| F0 | | 3 | 1129 | | | | | | |
| Spec.Flux | | 3 | 1129 | | | | | | |
| Alpha.Ratio | | 3 | 1129 | | | | | | |
| Hammarberg | | 3 | 1129 | | | | | | |
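The table pairs a Welch ANOVA, which does not assume equal group variances, with Games–Howell post hoc comparisons. The sketch below shows the arithmetic behind the reported columns: Welch's F with df1 = k − 1 (3 for four pieces, matching the table) and its approximate df2, and, for one pair of pieces, the mean difference, the Games–Howell q statistic with its Welch–Satterthwaite degrees of freedom, and Hedges' g. It assumes that g denotes Hedges' g and that each post hoc column compares one piece against a common reference (presumably Pachelbel, the only piece without a column); p-values would additionally require the studentized range distribution. Function names and data are illustrative assumptions.

```python
import math
from statistics import mean, variance

def welch_anova(groups):
    """Welch's one-way ANOVA for unequal variances; returns (F, df1, df2).
    Every group needs at least two observations and a non-zero variance."""
    k = len(groups)
    n = [len(g) for g in groups]
    m = [mean(g) for g in groups]
    w = [ni / variance(g) for ni, g in zip(n, groups)]      # weights n_i / s_i^2
    total_w = sum(w)
    grand = sum(wi * mi for wi, mi in zip(w, m)) / total_w  # variance-weighted grand mean
    a = sum(wi * (mi - grand) ** 2 for wi, mi in zip(w, m)) / (k - 1)
    lam = sum((1 - wi / total_w) ** 2 / (ni - 1) for wi, ni in zip(w, n))
    b = 1 + 2 * (k - 2) / (k ** 2 - 1) * lam
    return a / b, k - 1, (k ** 2 - 1) / (3 * lam)

def games_howell_pair(x, y):
    """Mean difference, Games-Howell q and Welch-Satterthwaite df for one pair.
    Turning q into a p-value needs the studentized range distribution (not in the stdlib)."""
    vx, vy = variance(x) / len(x), variance(y) / len(y)
    diff = mean(x) - mean(y)
    q = abs(diff) / math.sqrt((vx + vy) / 2)
    df = (vx + vy) ** 2 / (vx ** 2 / (len(x) - 1) + vy ** 2 / (len(y) - 1))
    return diff, q, df

def hedges_g(x, y):
    """Pooled-SD standardized difference times the correction J = 1 - 3 / (4(n1 + n2) - 9)."""
    nx, ny = len(x), len(y)
    pooled_var = ((nx - 1) * variance(x) + (ny - 1) * variance(y)) / (nx + ny - 2)
    d = (mean(x) - mean(y)) / math.sqrt(pooled_var)
    return d * (1 - 3 / (4 * (nx + ny) - 9))

# Invented feature values for a reference piece and three others (illustration only).
ref, x1, x2, x3 = [1, 2, 3], [2, 3, 4], [3, 4, 5], [4, 5, 6]
F, df1, df2 = welch_anova([ref, x1, x2, x3])  # df1 == 3, as in the table
post_hoc = {name: (games_howell_pair(x, ref), hedges_g(x, ref))
            for name, x in [("x1", x1), ("x2", x2), ("x3", x3)]}
```

Because df2 is an approximation driven by the group sizes and variances, it is generally not an integer; a value reported as an integer is presumably rounded.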

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Parada-Cabaleiro, E.; Batliner, A.; Schedl, M. An Exploratory Study on the Acoustic Musical Properties to Decrease Self-Perceived Anxiety. Int. J. Environ. Res. Public Health 2022, 19, 994. https://doi.org/10.3390/ijerph19020994
