1. Introduction

During the COVID-19 pandemic, almost all countries imposed strict regulations, such as social distancing and movement restrictions [1,2], which had a negative effect on mental health, leading to symptoms such as depression and anxiety [3,4]. According to a large number of empirical studies, exposure to urban green space (UGS) can promote the physical, psychological, emotional, and mental health of urban residents [5,6,7] and help people experience more positive moods and cope with difficult situations [8,9]. Natural elements in urban environments, such as grass [10], trees [11], bodies of water [12], and sky [13], have the restorative potential to promote positive emotions and the power to improve urban settings. However, few studies have explored the emotional evaluation of UGS as people view it in a dynamic process; thus, we have little understanding of how the changing visual variables of UGS relate to aesthetic emotions and thereby affect the public’s mental state. It is therefore crucial to develop a framework for real-time emotive landscape assessment, in order to better understand how the public responds emotionally to UGS and to create planning and design strategies that optimize its benefits to quality of life.

Aesthetic emotions reflect subjective aesthetic judgement, which is a major predictor of public appreciation of the aesthetic appeal of UGS [14,15]. Given the importance of aesthetic emotions, measuring people’s dynamic emotional perception of and preferences for UGS is crucial. Prior studies applied self-report methods to measure emotional responses to stimuli; these methods capture only “high-order emotions” that arise from deeper perceptual processing of the stimuli and are subject to various forms of bias [16,17,18,19,20]. With the rapid advancement of facial expression recognition (FER) technology, however, some studies have applied it to map the interaction between humans and UGS [21,22,23]. In this study, the objective FER approach was employed to collect emotional perception data as a supplement to subjective aesthetic preference ratings. Facial expressions provide instantaneous and valid emotion data while participants view urban landscape stimuli. Researchers typically describe emotions with one of two main approaches: (1) basic emotions (e.g., happiness, sadness, anger, and fear) and (2) dimensional models [24,25]. The two dimensions used to distinguish emotions are valence and arousal: valence evaluates pleasantness (positive or negative), while arousal indicates the level of emotional activation [26,27]. After the facial video recordings are input, the FER model detects and reads the participants’ faces frame by frame, classifies them using deep learning techniques, and outputs large volumes of emotional perception data in these two dimensions [28,29], which makes it possible to capture real-time emotional perception of the stimuli.

In recent years, researchers have used street-level image-based methods to conduct research in a more human-centric way [30]. Google Street View (GSV) images [31,32] have been proposed as a valuable library of panoramic, street-level urban streetscapes seen from the perspective of pedestrians [33]. Classification is essential for obtaining quantitative data on physical properties when estimating visual variables from GSV images. Traditional information extraction methods, such as processing in Adobe Photoshop, increasingly fall short of the demands of big data mining [34], since they are inaccurate, easily affected by image quality, and can only delineate greenery as a single class [35]. In this study, a state-of-the-art deep learning framework was employed to extract objective physical properties [36] at multiple detailed levels with high accuracy [37,38]. Deep learning models can automatically learn hierarchical feature representations and have been widely used in image classification and pattern recognition [39,40]. The semantic segmentation model was trained on datasets containing large numbers of images, allowing it to automatically detect elements such as grass, buildings, and sky in a scene and thus to calculate the changing visual variables of UGS.

In this study, deep learning models were used in tandem to capture accurate and valid emotional perception data and to extract, in real time, the detailed percentage of each landscape element in the stimuli. We took video-simulated British Heritage landscapes as a case study and obtained changing visual variables and the corresponding emotional responses in a controlled setting. The following research topics were explored: (1) the feasibility of this novel quantitative research methodology for instant emotional assessment of UGS; (2) real-time emotional perceptions of changing visual variables in a scene; (3) prediction models of public perception with different sets of finer visual variables; and (4) the relationships and distinctions between FER technology, self-report surveys, and body sensor measurements.

2. Materials and Methods

2.1. Site Selection

For the primary stimulation, non-fragmentary landscapes were selected so that each landscape element was distributed in a concentrated and continuous manner, highlighting the influence of visual variables. With grand architecture, expansive grass, and lakes, British Heritage landscapes satisfy these requirements. The British landscape stimuli were chosen from the National Heritage List for England (NHLE), the official register of England’s protected buildings, monuments, parks and gardens, wrecks, battlefields, and World Heritage Sites.

To maintain emotional levels and avoid emotional decline while participants watched primary stimuli with similar landscapes throughout the experiment [25], scenes contrasting strongly with the main stimuli were interspersed as auxiliary stimulation. For the auxiliary stimulation, Japanese landscapes with considerable fragmentation and radically different landscaping styles were used. As with the British sites, the Japanese landscapes were selected from the list of Special Places of Scenic Beauty, Special Historic Sites, and Special Natural Monuments designated by the Minister of Education, Culture, Sports, Science and Technology (MEXT) of Japan under the Law for the Protection of Cultural Properties.

2.2. Stimulation Generation

The procedure begins with downloading GSV photos from Google Maps using the GSV Application Program Interface (API) key via Street View Download 360 Pro (version 3.1.3) software (Thomas Orlita, United Kingdom). The collection and analysis of network behaviour data, such as community-driven hashtags, which are ubiquitous and adaptable annotations of public data, has become a new tool to research public preferences in the era of big data [

41]. In line with the Instagram hashtag ranking, heritage was scanned by popularity, and the most representative panoramic views of each heritage were selected. Following a series of filters, primary and auxiliary stimulation observation points were selected in the heritage sites listed in

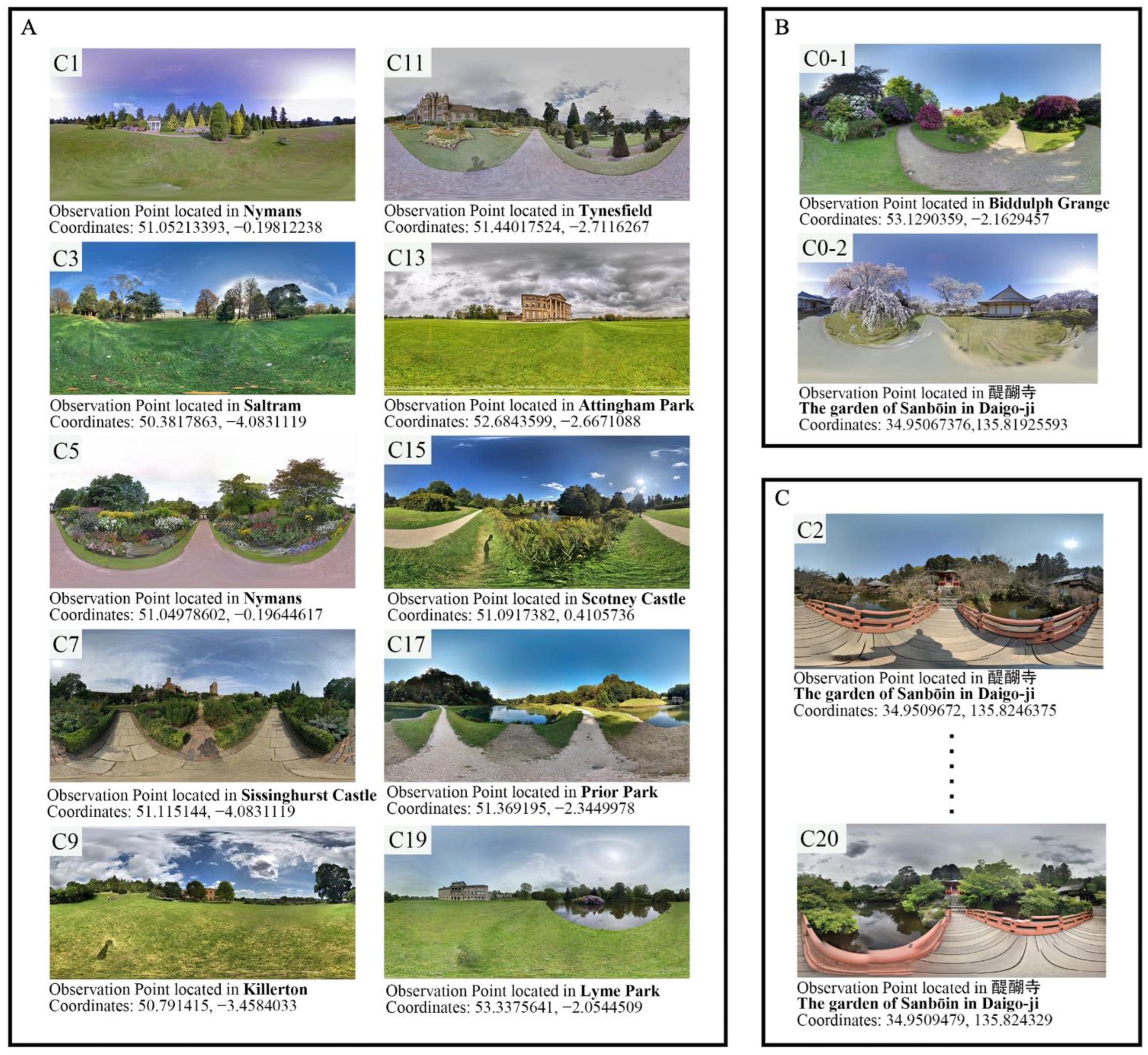

Table 1, and the panoramas are presented in

Figure 1. Only high-definition panoramas shot under clement weather conditions and from the typical observation angle with the best view were included, while some properties should be discarded due to a lack of images of a specific location, poor weather, or low resolution.

After the GSV photos were downloaded, a software program transformed the panoramas into panoramic video clips that imitate human-centric vision. The following parameters were used: size = 1920 × 1080, FOV (field of view) = 70°, pitch = 0, frames per second (FPS) = 30, length = 24 s per clip, and spin direction = clockwise (primary stimulation) or anticlockwise (auxiliary stimulation). The stimuli were generated in high definition at a reasonably high bitrate, and adjacent videos were spun in opposite directions to lessen the disorientation that can occur when a video clip rotates. In this way, panoramic video clips give participants a more immersive, comprehensive, and realistic experience of viewing the area from the intended viewpoint than static pictures do (Figure 2). In practice, panoramic video clips can be freely generated and mixed to suit different experimental designs.
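The clip generation step can be illustrated with a minimal sketch of rendering one frame from an equirectangular panorama. The sketch assumes a standard pinhole (rectilinear) projection with nearest-neighbour sampling, treats FOV = 70° as the horizontal field of view, and assumes one full 360° rotation per 24-s clip; the function names and these assumptions are illustrative and are not taken from the software used in the study.

```python
import numpy as np

def yaw_schedule(fps=30, seconds=24, clockwise=True, total_rotation_deg=360.0):
    """Yaw angle (degrees) of each frame, ASSUMING one uniform rotation per clip."""
    n_frames = fps * seconds
    step = total_rotation_deg / n_frames
    sign = 1.0 if clockwise else -1.0
    return (sign * step * np.arange(n_frames)) % 360.0

def equirect_to_perspective(pano, yaw_deg, pitch_deg=0.0, fov_deg=70.0,
                            out_w=1920, out_h=1080):
    """Render a rectilinear view from an equirectangular panorama.

    pano: array of shape (H, W) or (H, W, C) whose columns span 360 degrees.
    fov_deg is ASSUMED to be the horizontal field of view.
    Nearest-neighbour sampling keeps the sketch short.
    """
    H, W = pano.shape[:2]
    f = 0.5 * out_w / np.tan(np.radians(fov_deg) / 2)   # focal length in pixels
    x, y = np.meshgrid(np.arange(out_w) - (out_w - 1) / 2,
                       np.arange(out_h) - (out_h - 1) / 2)
    z = np.full_like(x, f)                              # camera looks along +z, y points down
    p, t = np.radians(pitch_deg), np.radians(yaw_deg)
    # Tilt by pitch around the x-axis (positive pitch looks up).
    y2 = y * np.cos(p) - z * np.sin(p)
    z2 = y * np.sin(p) + z * np.cos(p)
    # Pan by yaw around the vertical axis (positive yaw turns right, clockwise seen from above).
    x3 = x * np.cos(t) + z2 * np.sin(t)
    z3 = -x * np.sin(t) + z2 * np.cos(t)
    lon = np.arctan2(x3, z3)                            # 0 maps to the panorama's centre column
    lat = np.arctan2(-y2, np.hypot(x3, z3))             # positive above the horizon
    u = np.floor((lon / (2 * np.pi) + 0.5) * W).astype(int) % W
    v = np.clip(np.floor((0.5 - lat / np.pi) * H).astype(int), 0, H - 1)
    return pano[v, u]
```

An auxiliary clip would then be the frame sequence `[equirect_to_perspective(pano, yaw) for yaw in yaw_schedule(clockwise=False)]`, encoded at 30 FPS with any video library. Bilinear interpolation would give smoother frames than the nearest-neighbour lookup used here.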

2.3. Segmentation of Primary Landscape Elements

For region proposal and feature extraction, we applied the ImageNet detector with the PSPNet-101 backbone to each frame of the primary stimulation. ImageNet is a deep convolutional network architecture developed for pixel-level semantic segmentation and built on top of the PSA deep learning library [39]. ImageNet outperformed previous algorithms for scene segmentation into more detailed classes and was more computationally efficient. The ADE20K, PASCAL VOC 2012, and Cityscapes datasets were used to pretrain the detectors. We chose the model pretrained on ADE20K because it divides a scene image into 150 detailed classes, as opposed to the 21 and 19 classes of the other two datasets. For example, the Cityscapes model classifies all greenery into a single vegetation class, whereas the ADE20K model classifies trees, grass, and shrubs individually. In our framework, we adjusted the model to the experimental requirements and obtained seven detailed classes (Figure 3). We compiled the code and wrote a batch script in Python to process thousands of images.
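The adjustment of the 150-class ADE20K output to a smaller set of study classes can be sketched as a lookup-table remapping of each predicted label map. In this hypothetical sketch the study classes are six landscape classes plus an "other" class, and the source class IDs are placeholders that would need to be checked against the label list of the pretrained model actually used.

```python
import numpy as np

# HYPOTHETICAL study classes; index 0 collects every class not used in the analysis.
STUDY_CLASSES = ["other", "tree", "grass", "shrub", "water", "sky", "architecture"]

# HYPOTHETICAL mapping from source-model class IDs to study classes (placeholder IDs).
# Several fine-grained source classes may merge into one study class.
SOURCE_TO_STUDY = {
    4: "tree",
    9: "grass",
    17: "shrub",
    21: "water", 26: "water",
    2: "sky",
    1: "architecture", 25: "architecture",
}

def build_lut(n_source_classes=150):
    """Lookup table: source class ID -> study class index (0 = other)."""
    lut = np.zeros(n_source_classes, dtype=np.uint8)
    for src_id, name in SOURCE_TO_STUDY.items():
        lut[src_id] = STUDY_CLASSES.index(name)
    return lut

def remap(label_map, lut):
    """Vectorised relabelling of an (H, W) integer label map via fancy indexing."""
    return lut[label_map]
```

Because the remapping is a single array lookup, it adds negligible cost when applied to thousands of frames in a batch script.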

After the stimuli were classified, we used the ImageMagick program to count the number of pixels in each class, each of which is rendered in a unique colour. The pixel counts allow precise calculation of the visual variables of the various primary landscape elements. The framework can also be extended to quantify attributes of spatial quality, such as spatial openness and building enclosure. In this study, eight objectively measured variables of UGS were examined: the green view index (GVI), visible plant index (VPI), proportion of tree (P_Tree), proportion of grass (P_Grass), proportion of shrub (P_Shrub), proportion of waterscape (P_Water), proportion of sky (P_Sky), and proportion of architecture (P_Archi). The containment relationships among the eight variables are shown in Figure 4.
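The pixel-counting step can be sketched in Python with NumPy instead of ImageMagick. The colour palette below is hypothetical, and GVI is computed, as an assumption, as the combined share of tree, grass, and shrub pixels; the study’s exact definitions of GVI and VPI follow the containment relationships in Figure 4 and are not reproduced here.

```python
import numpy as np

# HYPOTHETICAL colour palette of the segmentation output (RGB -> variable name).
PALETTE = {
    (4, 200, 3): "P_Tree",
    (4, 250, 7): "P_Grass",
    (204, 255, 4): "P_Shrub",
    (61, 230, 250): "P_Water",
    (6, 230, 230): "P_Sky",
    (180, 120, 120): "P_Archi",
}

def visual_variables(mask_rgb):
    """Share of pixels per class colour in an (H, W, 3) colour-coded mask."""
    total = mask_rgb.shape[0] * mask_rgb.shape[1]
    props = {}
    for colour, name in PALETTE.items():
        # A pixel belongs to the class only if all three channels match exactly.
        hits = np.all(mask_rgb == np.array(colour, dtype=mask_rgb.dtype), axis=-1)
        props[name] = hits.sum() / total
    # ASSUMPTION: GVI taken as the share of all green vegetation pixels.
    props["GVI"] = props["P_Tree"] + props["P_Grass"] + props["P_Shrub"]
    return props
```

Applied to every frame of a clip, this yields one dictionary of proportions per frame, which is the form needed to match visual variables with the frame-level emotion data.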

2.4. Acquisition of 5 Million Sentiment Data Points

To capture participants’ immediate emotional perceptions, we retrained the deep learning model for FER using the AffectFace dataset and resources available on the ABAW website. Small samples are common in studies applying physiological techniques (e.g., [24,25,26]); we recruited 50 healthy participants and obtained valid datasets from 42 of them, yielding five million data points for analysis. The mean age of the participants was 23.4 years (SD 1.5, minimum 20 years, maximum 27 years). See Table 2 for sociodemographic information. To ensure a comparable degree of emotional arousal, all participants were required to be similarly unfamiliar with the landscapes in the primary stimulation. To avoid differing cognitive backgrounds, participants were selected from Chinese college students who had lived in China before the age of 15 and had no systematic background knowledge of planning or tourism. Participants were excluded if they had ever visited the United Kingdom or had any history of mental illness or eye disease.

2.5. Laboratory Setting

Potential difficulties linked to the laboratory setting that might affect the results were examined in advance on the basis of previous experience and studies [24]. The laboratory was clean and comfortable, with a consistent temperature. Since FER data collection is sensitive to changes in lighting, all lights were kept on throughout the experiment to maintain a stable and homogeneous environment for emotion tracking. We used a 32-inch 1800R curved-screen monitor to play the target videos and a Canon PowerShot G7 X Mark II Digital Camera (Canon, Tokyo, Japan) to record facial expressions, so as to capture the most realistic portrayal of emotion. To avoid eye contact with participants, the investigator sat directly behind the monitor and used a MacBook Pro (Apple Inc., Cupertino, CA, United States) to control video playback via an HDMI cable. All experiments were conducted in the same laboratory room with the same settings.

2.6. Procedure for Aesthetic Emotion Tracking

When participants arrived at the testing lab, they were asked to take a seat in front of the monitor, approximately 60 cm away, with the centre of the screen at eye level. The researcher chatted with the participants to put them at ease and then briefed them on the procedure and the points requiring their attention. After completing the background questionnaire, participants were instructed to settle down and feel their own pulse for 60 s.

After preparation, participants watched the pre-set stimuli in random order (see one of the random orders in Table 3). After a ten-second blank white screen, participants completed practice trials to become familiar with the process. Participants observed each landscape panoramic video and, at the end of each video when the blank white screen appeared, rated their overall aesthetic preference for the scene on a scale from zero to ten. The blank white intervals between videos were intended to ensure that the previous video had no effect on the emotions evoked by the subsequent one. After each rating was completed, the researcher started the next video clip.

Following the practice trials, participants began the main experiment, in which primary and auxiliary stimuli were interleaved. The practice trials and auxiliary stimuli were presented in a fixed order, whereas each participant viewed the primary stimuli in a different random order. Participants watched and rated all the panoramic video clips at their own pace (Figure 5).
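One way to generate such per-participant playlists is sketched below. The clip names, the rule of inserting one auxiliary clip after every two primary clips, and the use of the participant ID as a random seed are hypothetical choices for illustration; one actual sequence is shown in Table 3.

```python
import random

def trial_order(practice, primary, auxiliary, participant_id, every=2):
    """Build one participant's playlist of clip IDs.

    HYPOTHETICAL design for illustration: practice and auxiliary clips keep a fixed
    order, primary clips are shuffled with a participant-specific seed, and one
    auxiliary clip follows every `every` primary clips until auxiliary clips run out.
    """
    rng = random.Random(participant_id)   # same participant ID -> same order
    shuffled = list(primary)
    rng.shuffle(shuffled)
    order = list(practice)
    aux = iter(auxiliary)
    for i, clip in enumerate(shuffled, start=1):
        order.append(clip)
        if i % every == 0:
            nxt = next(aux, None)
            if nxt is not None:
                order.append(nxt)
    return order
```

Seeding with the participant ID makes each order reproducible, so the sequence shown to any participant can be regenerated when matching emotion data to clips.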

2.7. Analysis

For data cleaning, we uniformly sampled one frame per second and extracted region features for both the emotional perception data and the objectively assessed visual variables. Unfortunately, because the video clips were produced at a high bit rate, approximately six participants reported that the video occasionally paused while C3 was playing, producing spurious negative reactions. Consequently, the C3 data were excluded from further analysis.
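The sampling and matching step can be sketched as follows for one participant. The sketch assumes that the FER output and the per-frame visual variables both start at the first frame of each clip, so that the frame index serves as the shared time node, and that the first frame of each second is the one kept; the data layout and function names are hypothetical.

```python
FPS = 30
EXCLUDED_CLIPS = {"C3"}  # dropped after participants reported playback stutter

def per_second(series, fps=FPS):
    """Uniformly keep one frame per second (frames 0, fps, 2*fps, ...)."""
    return [series[k * fps] for k in range(len(series) // fps)]

def align(fer, visual, fps=FPS):
    """Join one participant's FER output with the visual variables on (clip, second).

    HYPOTHETICAL layout:
      fer:    {clip_id: {"valence": [...], "arousal": [...]}}  one value per video frame
      visual: {clip_id: [{variable: proportion, ...}, ...]}   one dict per video frame
    Both series are ASSUMED to start at the clip's first frame.
    """
    rows = []
    for clip, emo in fer.items():
        if clip in EXCLUDED_CLIPS or clip not in visual:
            continue
        samples = zip(per_second(emo["valence"], fps),
                      per_second(emo["arousal"], fps),
                      per_second(visual[clip], fps))
        for second, (val, aro, variables) in enumerate(samples):
            rows.append({"clip": clip, "second": second,
                         "valence": val, "arousal": aro, **variables})
    return rows
```

Each resulting row pairs the emotion values with the landscape proportions visible at the same moment, which is the input format needed for the correlation and regression analyses.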

To address the research questions of this paper, we investigated the valence and arousal dimensions of the emotional data, aesthetic preference, and the dominant visual variables of UGS. Descriptive statistics, summary t-tests, paired t-tests, correlation analysis, and regression analysis were all performed in SPSS, and all visualizations were produced in Python with the Matplotlib library. Pearson’s r correlations were calculated to investigate the relationships between the visual variables of dominant landscape elements and public emotional responses. Backward multiple linear regression analysis was then performed with the valence and arousal dimensions and the rating scores as dependent variables and the proportions of dominant landscape elements in a scene as independent variables. Because the explained variance was hypothesized to be higher for more detailed sets of visual variables than for all-inclusive variables, the linear regression was run separately for each set of visual variables. Paired t-tests were also calculated to study the emotional responses elicited by the amount of green in a scene. Finally, the measures of public perception were compared to evaluate how aesthetic preferences relate to the two main dimensions of emotion.
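The correlation and backward regression steps were run in SPSS; an equivalent procedure can be sketched in Python with NumPy and SciPy. The sketch fits ordinary least squares with an intercept and repeatedly removes the predictor with the largest p-value until all remaining p-values fall below a removal threshold of 0.10, chosen here to mirror SPSS’s default removal criterion. For a single coefficient, the t-test used here is equivalent to the partial F-test (F = t²). The function names are illustrative.

```python
import numpy as np
from scipy import stats

def pearson(x, y):
    """Pearson's r and its two-sided p-value."""
    r, p = stats.pearsonr(x, y)
    return float(r), float(p)

def ols(X, y):
    """OLS with an intercept. Returns (coefficients, two-sided p-values, R^2)."""
    n, k = X.shape
    A = np.column_stack([np.ones(n), X])
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ beta
    df = n - k - 1
    sigma2 = resid @ resid / df
    se = np.sqrt(np.diag(sigma2 * np.linalg.inv(A.T @ A)))
    t = beta / se
    p = 2 * stats.t.sf(np.abs(t), df)
    r2 = 1 - (resid @ resid) / np.sum((y - y.mean()) ** 2)
    return beta, p, r2

def backward_elimination(X, y, names, p_remove=0.10):
    """Drop the weakest predictor until every remaining p-value is below p_remove."""
    keep = list(range(X.shape[1]))
    while keep:
        beta, p, r2 = ols(X[:, keep], y)
        worst = int(np.argmax(p[1:]))            # p[0] belongs to the intercept
        if p[1 + worst] < p_remove:
            return [names[j] for j in keep], beta, r2
        keep.pop(worst)
    return [], None, 0.0
```

In the study’s setting, `X` would hold one column per visual variable (e.g., P_Grass, P_Water, P_Sky) and `y` the valence, arousal, or rating values, with one call per set of visual variables so that the explained variance of each set can be compared.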

4. Discussion

4.1. Emotion-Oriented Dynamic Landscape Assessment Framework

Robust evidence is critical for policy-makers and urban planners, as urban development is time-consuming and costly [33,42]. In this paper, we approached the issue from a big data perspective by proposing a quantitative research framework and demonstrating its feasibility for the continuous emotional assessment of UGS. By applying two deep learning models together, we extracted the physical features of the stimuli and the participants’ emotional reactions accurately and efficiently, producing five million data points. The framework can be applied to dynamic UGS assessment anywhere GSV images are available, and it can be adapted to other experimental designs and to other computed spatial quality properties.

For stimulus generation, we obtained GSV panoramas of candidate properties that receive considerable public attention, as indicated by hashtag rankings, which may help avoid cognitive biases caused by controversial landscapes. In our procedure, the scenic panoramas were first converted into panoramic video clips, which then served as the experimental stimuli. The first step in acquiring quantitative information on each variable was to classify the primary landscape elements in the panoramic video clips frame by frame. To segment the primary landscape elements into different classes, this study used ImageNet trained on the ADE20K dataset. Compared with traditional methods, ImageNet achieved higher scores for scene segmentation into more detailed classes, with improved computational efficiency and accuracy, which allowed linear regression on more detailed sets of visual variables rather than on all-inclusive variables such as GVI. Accordingly, the researchers did not need to gather the hundreds of questionnaires that a self-report study would ordinarily require.

Emotional perception data were measured moment to moment at every frame, allowing variations between respondents and short-lived emotional changes to be reliably assessed. Because the visual stimuli were fixed in advance, the researcher could use the time nodes to determine which frame an observer was viewing when a subtle change in expression occurred, and thus match the emotional data with the objective variables of UGS one by one. Everyone viewed the same panoramic video clips at the same speed in a laboratory setting. Compared with field observation, this strategy eliminates the slight bias caused by participants’ varied viewing angles [43].

4.2. Comparison of Real-Time FER Technology, Self-Report Survey, and Body Sensor Methods

Objective measurements of emotion, such as facial expression recognition (FER), skin conductance (SC), and facial electromyography (EMG), have been widely used in recent decades; they are consistent with self-report and post hoc interview results and distinguish better between the different dimensions of emotion [18,25,44]. All of these methods can accurately record dynamic and short-lived emotional changes [44], but only FER leaves participants unencumbered in an experimental laboratory setting, because it detects subtle and instant differences in expression from facial muscle movements recorded by a camera, whereas the other methods require attaching sensors, such as electrodes, that may interfere with participants’ natural reactions [25,45].

The self-report score reflects only the aesthetic preference for the entire clip [46], whereas emotional evaluation is subconscious, non-discrete, and occurs in real time [19,24,26]. Self-report surveys using questionnaires are straightforward to administer, but they have been criticized because the interval between when perceptions are elicited and when participants report them may cause recall inaccuracy, so the reports may not represent the emotions actually experienced [18,25]. FER was employed to capture initial emotional reactions while assessing emotional responses in a more relaxed state than other psychophysiological methods allow [18]. Using FER to capture moment-to-moment emotional responses that are not disclosed by self-report methods can avoid retrospective reflection and cognitive bias [47,48]. FER can thus be used alongside self-report methods to provide a better and more accurate understanding of emotional experiences by extracting reliable and valid emotion data from participants [18,24,25,45]. Because the emotional perception results track minor emotional reactions and detect changes promptly and correctly, emotional evaluation can be examined with just a few clips.

FER emotional perception refers to short-lived, unconscious emotional responses to stimuli, whereas self-reported aesthetic preference relates to the overall view of a scene. The valence dimension reflects pleasant sentiment, and it is worth noting that aesthetic preference was significantly related to each scene’s maximum valence. This suggests that if a clip contains several frames that give people more pleasure, its overall aesthetic preference score may be higher. Thus, the maximum valence of each scene can, to some extent, reveal participants’ perceptual judgements.
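The comparison between peak valence and ratings can be illustrated with a short sketch that computes Pearson’s r between each clip’s maximum valence and its aesthetic rating, alongside the mean valence for contrast. Treating each participant-by-clip pair as one observation is an assumption, since the level of aggregation is not stated here, and the function name is illustrative.

```python
import numpy as np

def valence_rating_correlations(records):
    """Pearson's r between clip ratings and (a) peak valence, (b) mean valence.

    records: list of (valence_series, rating) pairs; ASSUMED to hold one pair per
    participant x clip, where valence_series is the FER valence within that clip.
    """
    peaks = np.array([np.max(series) for series, _ in records], dtype=float)
    means = np.array([np.mean(series) for series, _ in records], dtype=float)
    ratings = np.array([rating for _, rating in records], dtype=float)
    return {
        "max": float(np.corrcoef(peaks, ratings)[0, 1]),
        "mean": float(np.corrcoef(means, ratings)[0, 1]),
    }
```

Comparing the two coefficients shows whether the peak of a clip, rather than its average, carries more of the information reflected in the overall rating.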

One notable outcome is that real-time FER helps detect minor variations that are difficult to detect through aesthetic preference ratings. The large valence disparity indicated that women were more sensitive than men to changes in the amount of green, implying that women were more likely than men to experience pleasure when watching scenes with higher greenness. However, there was essentially no difference in aesthetic preference between men and women. FER thus captured these differences more easily than self-report surveys did, emphasizing the importance of applying FER techniques as a supplement to self-report surveys to provide real-time assessment and an improved understanding of emotional perception.

4.3. Relationship between Visual Variables and Emotional Perception

As the visual variables of different landscape elements changed within a scene, participants showed marked changes in both aesthetic preference and FER emotions. The researchers therefore went on to examine the association between the fluctuation of UGS visual variables and emotional expressions.

When viewing the high-green clip of a nearly identical scene for the second time, participants showed higher values across the various perception measures. The amount of green is mostly determined by trees, which are the most vital landscape elements in urban contexts, and higher tree coverage plays a greater role in stress recovery [49,50]. This result replicates previous studies showing that viewing tree canopies can reduce stress and enhance mood while also providing physical, biological, and aesthetic benefits [11,51]. Participants were emotionally sensitive to the amount of green in a scene and felt more pleased when the green proportion was higher.
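The high-green versus low-green comparison is a paired design, since the same participants viewed both versions of a nearly identical scene. A minimal sketch of the paired t-test, computed from the per-participant differences, is shown below; it should agree with `scipy.stats.ttest_rel(high, low)`. The function name is illustrative.

```python
import math
from statistics import mean, stdev

from scipy import stats

def paired_t(high, low):
    """Paired t-test on per-participant scores for two versions of the same scene."""
    d = [h - l for h, l in zip(high, low)]        # within-participant differences
    n = len(d)
    t = mean(d) / (stdev(d) / math.sqrt(n))       # stdev uses the n - 1 denominator
    p = 2 * stats.t.sf(abs(t), df=n - 1)          # two-sided p-value
    return t, p
```

For example, `paired_t([3, 4, 5], [2, 2, 2])` gives t = 2√3 ≈ 3.46 with 2 degrees of freedom.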

Three different combinations of variables were set as independent variables in a backward multiple linear regression analysis to predict valence and arousal. By using deep learning algorithms to classify finer visual indicators, we obtained superior regression results for both valence and arousal. In Model 3, the proportion of grass was the best predictor of valence, and the same was true for arousal. This result replicates previous findings that the amount of grass in an image is positively related to the likelihood of restoration [10]. Scenes with a higher percentage of grass have greater restorative potential for stress reduction, mental healing, and positive emotional responses. Moreover, the proportion of waterscape was the second most important predictor of valence. Waterscape is widely acknowledged as one of the most essential landscape elements in the creation of therapeutic landscapes, and exposure to blue space promotes healing and wellness [12]. However, relatively little research has been conducted on the relationship between waterscape and human well-being. Participants were sensitive to the presence of waterscape in a scene, feeling calm and peaceful, as shown in Table A1, which reveals the restorative effect of blue space. The comfort and attractiveness of a landscape are related to the degree of sky visibility, which can explain many emotional shifts [13]. As expected, the proportion of sky was a significant predictor in all three models.

This study attempted to develop a new research framework for investigating the relationship between diverse landscape elements and aesthetic emotions, which could contribute to the assessment and understanding of UGS. The current selection of visual variables was founded on the assumption that visual properties and emotional perception are linked. Using this framework, future research can explore the perceivable properties related to landscape character.

4.4. Limitations and Future Research

There are some limitations to this study. First, the experimental design did not strictly control the sex ratio of the sample. The general results were not affected by the very small sex differences in aesthetic preference and arousal or by the larger but parallel differences in valence. Research into the relationship between participants’ emotional perception of UGS and their sociodemographic characteristics, such as gender, age, occupation, and cultural background, could be valuable. Second, GSV makes it possible to present the scenes at observation points in various seasonal colours [52], which could be an interesting perspective for further research. Third, precision was sacrificed in order to extract objective physical features in more detail. The segmentation accuracy of the ImageNet model trained on ADE20K was 81.7%, lower than that of the models trained on the PASCAL VOC 2012 (95.5%) and Cityscapes (96.4%) datasets. Higher-precision models are likely to emerge with the rapid development of artificial intelligence, and the framework can be updated with the most advanced deep learning models. Fourth, although each panoramic video clip lasted 24 s, participants found it challenging to process the stimuli at a deeper perceptual level when completing the self-report survey [17]. New research could also enhance the current framework: building 3D models is one way to control the variables of stimuli more precisely, and virtual reality could be an effective medium for simulating immersive experiences and eliciting emotional perception [53,54]. Finally, although the stimuli give participants a highly realistic observation experience, they cannot fully reproduce the perception of UGS in situ. In real environments, complex aspects such as spatial structure [31], vegetation layout [55], and species diversity [56] can influence aesthetic emotions. In the future, more in-depth studies with synchronous collection of real-time data in the built environment will be necessary.
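The accuracy figures quoted above can be related to a confusion matrix. The sketch below assumes that the reported segmentation accuracy is overall pixel accuracy (correctly labelled pixels divided by all pixels); the text does not name the metric, and mean intersection over union (mIoU), which is often reported alongside it, is included only for comparison. The function names are illustrative.

```python
import numpy as np

def confusion_matrix(truth, pred, n_classes):
    """Rows = ground-truth class, columns = predicted class, entries = pixel counts."""
    truth = np.asarray(truth).ravel()
    pred = np.asarray(pred).ravel()
    idx = truth * n_classes + pred
    return np.bincount(idx, minlength=n_classes ** 2).reshape(n_classes, n_classes)

def pixel_accuracy(cm):
    """ASSUMED metric: correctly labelled pixels / all labelled pixels."""
    return np.trace(cm) / cm.sum()

def mean_iou(cm):
    """Mean intersection over union across classes present in truth or prediction."""
    tp = np.diag(cm)
    union = cm.sum(axis=0) + cm.sum(axis=1) - tp
    present = union > 0
    return float(np.mean(tp[present] / union[present]))
```

For the four-pixel example `truth = [[0, 0], [1, 1]]` and `pred = [[0, 1], [1, 1]]`, pixel accuracy is 3/4 = 0.75, while mIoU is (1/2 + 2/3)/2 ≈ 0.58, which shows how the two metrics can diverge.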