5.1. Dataset

No public dataset of Chinese tourist-attraction reviews that combine text and emojis is currently available. For this paper, we collected more than 40,000 reviews from the Ctrip website, mainly reviews of the Badaling Great Wall, the Forbidden City, Disneyland, Beijing Zoo, the Tianjin Eye, and other attractions posted over the past year. The data were processed into four categories: positive and negative reviews without emojis, and positive and negative reviews with emojis. The pre-processing procedure is shown in Figure 7.

After processing, we found that although the reviews contained many emojis, some of them, such as plants and animals, did not express a specific sentiment. We therefore added emojis at reasonable positions in some positive and negative reviews, for three reasons. First, the collected data contained emojis specific to Android or Apple systems that could not be encoded, decoded, or displayed on Windows and Linux; for each non-displayable symbol, we added a displayable emoji expressing the same sentiment. Second, we observed that in real reviews most emojis appear at the end of a sentence and that people type more than one symbol when they are happy or angry, so we expanded the dataset accordingly. Third, neutral (medium) reviews were discarded from the collected data. With neutral reviews included, the ratio of plain-text reviews to reviews containing emojis was roughly 9:1; discarding them removed a large number of reviews with emojis, so we added emojis manually to compensate and keep the overall ratio at 9:1. The main emojis added are shown in Table 1.

We added the emojis in Table 1 to part of the dataset. Because people tend to use more than one emoji when they are extremely happy or angry, we added between one and five emojis at random. The dataset after this addition is illustrated in Table 2.
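The augmentation step described above can be sketched as follows. This is a minimal illustration, not the procedure actually used for CAEIE: the emoji pools, the function name `append_emojis`, and the choice to append at the very end of the review are assumptions, and the real symbols are those listed in Table 1.

```python
import random

# Hypothetical emoji pools; the symbols actually used are listed in Table 1.
POSITIVE_EMOJIS = ["😀", "👍", "😍"]
NEGATIVE_EMOJIS = ["😡", "👎", "😞"]

def append_emojis(review, label, rng):
    """Append one to five emojis of matching sentiment to the end of a review."""
    pool = POSITIVE_EMOJIS if label == "positive" else NEGATIVE_EMOJIS
    count = rng.randint(1, 5)  # randint is inclusive on both ends
    return review + "".join(rng.choice(pool) for _ in range(count))

rng = random.Random(0)  # seeded so the augmentation is reproducible
review = "The view was worth the climb."
augmented = append_emojis(review, "positive", rng)
```

Appending at the end mirrors the observation that most emojis in real reviews occur at the end of a sentence.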

Table 2 presents samples of the dataset in four subcategories: reviews with and without emojis, each split into positive and negative reviews. For ease of understanding, the Chinese text in the table has been translated into English. To give a more intuitive picture, we generated word clouds of the positive and negative reviews from their word frequencies; the result is shown in Figure 8.

In Figure 8, the keywords for positive reviews in (a) include not bad, go again, recommendable, and worth, whereas the keywords for negative reviews in (b) include line up, service, guide, hour, and poor experience.

The total number of samples in CAEIE and the number in each category are shown in Table 3.

As shown in Table 3, the dataset contains 36,197 negative and 5611 positive reviews without emojis, and 262 negative and 2601 positive reviews with emojis. The data were divided into training, test, and validation sets in an 8:1:1 ratio, containing 35,735, 4468, and 4468 items, respectively.
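The reported counts can be checked with a short calculation. The rounding rule below (test and validation sets each take one tenth of the total, rounded up, and the training set takes the remainder) is an assumption; it is one rule that happens to reproduce the reported sizes, and the paper does not state which rule was used.

```python
import math

# Category counts as reported in Table 3.
counts = {
    ("no_emoji", "negative"): 36197,
    ("no_emoji", "positive"): 5611,
    ("emoji", "negative"): 262,
    ("emoji", "positive"): 2601,
}
total = sum(counts.values())

# Assumed rule: each held-out set is ceil(total / 10); training gets the rest.
held_out = math.ceil(total / 10)
train = total - 2 * held_out

print(total, train, held_out, held_out)
```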

The distribution of review lengths in CAEIE is plotted in Figure 9.

To demonstrate the diversity of CAEIE, the information entropy of each of the four categories and of the overall dataset was calculated, and the results are shown in Figure 10. The information entropy is defined as

$$H(X) = -\sum_{x} P(x) \log P(x)$$

where $X$ denotes the random variable and $P(x)$ denotes the output probability function. The greater the uncertainty of a variable, the greater the entropy.
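As an illustration of the entropy measure, the following sketch computes the Shannon entropy of the empirical token distribution of a text. The base-2 logarithm and the use of tokens as the unit of counting are assumptions; the paper does not specify either, and `shannon_entropy` is a hypothetical helper name.

```python
import math
from collections import Counter

def shannon_entropy(tokens):
    """Entropy in bits of the empirical distribution over the given tokens."""
    counts = Counter(tokens)
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

uniform = shannon_entropy(["a", "b", "c", "d"])   # four equally likely tokens
constant = shannon_entropy(["a", "a", "a", "a"])  # no uncertainty at all
```

A text that repeats a single token has zero entropy, while a text spread evenly over many distinct tokens has high entropy, which is why the measure serves as an indicator of diversity.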

5.4. Experiments

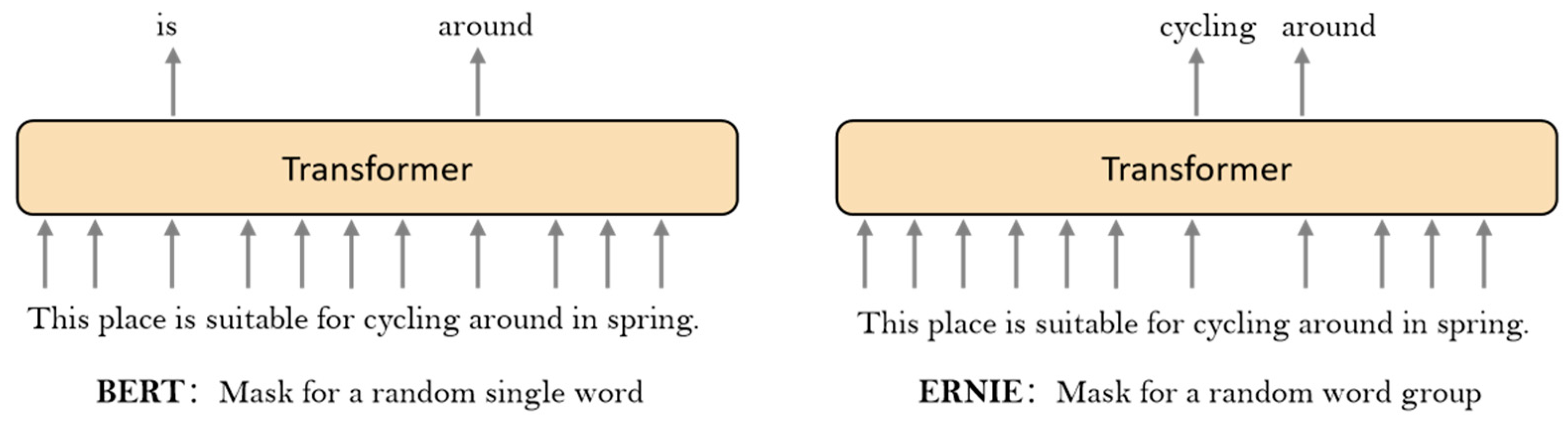

Several models were chosen for comparison: BERT, the more advanced robustly optimized BERT (RoBERTa) [33], the BERT-based ERNIE, and the graph-based TextGCN and TextGAT. BERT is pre-trained to learn the embedding representation of a given word in a given context [34]. RoBERTa builds on BERT by modifying its key hyperparameters, removing the next-sentence prediction pre-training objective, and training with a larger batch size and learning rate. RoBERTa takes an order of magnitude longer to train than BERT. A lite BERT for self-supervised learning of language representations (ALBERT) is also based on BERT [35]. The main differences are that the sentence prediction task is replaced by predicting coherence between sentences, the embedding is factorized, and parameters are shared across layers. Sentiment Knowledge Enhanced Pre-training for Sentiment Analysis (SKEP) is based on RoBERTa. Unlike RoBERTa, SKEP uses a dynamic mask mechanism and builds its pre-training objectives around sentiment knowledge, which allows the model to learn sentiment semantics.

ERNIE, which is based on the BERT model, obtained state-of-the-art results on Chinese natural language processing (NLP) tasks. ERNIE improves the masking mechanism: instead of the basic word-piece mask, it incorporates external knowledge into the mask during the pre-training stage. ERNIE-Gram builds on ERNIE and refines the masking mechanism further. The model learns the semantic relationships between n-grams simultaneously, combining fine-grained and coarse-grained learning.

TextGCN uses a GCN over a heterogeneous graph built from the dataset, treating words and documents as nodes that are trained jointly to obtain node representations. The current best results on five typical datasets (20 Newsgroups, R8, R52, Ohsumed, and Movie Review) were obtained by combining RoBERTa with GCN.
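The word-document graph can be pictured with a small sketch. It is a deliberate simplification: document-word edges are weighted here by raw term counts, whereas TextGCN weights them by TF-IDF and also adds word-word edges weighted by PMI. The function name `build_text_graph` and the toy documents are hypothetical.

```python
from collections import Counter

def build_text_graph(docs):
    """Return (nodes, edges) for a simplified word-document graph.

    Simplification: document-word edges carry raw term counts. TextGCN itself
    uses TF-IDF for these edges and PMI for additional word-word edges.
    """
    vocab = sorted({word for doc in docs for word in doc})
    doc_nodes = [f"doc_{i}" for i in range(len(docs))]
    nodes = doc_nodes + vocab  # documents and words share one node set
    edges = {}
    for doc_node, doc in zip(doc_nodes, docs):
        for word, count in Counter(doc).items():
            edges[(doc_node, word)] = count
    return nodes, edges

docs = [["worth", "visiting", "worth"], ["long", "queue"]]
nodes, edges = build_text_graph(docs)
```

Because every document is connected to its words and words are shared across documents, label information can propagate between documents through common vocabulary.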

As shown in Table 5, the E2G model achieved the best results on the CAEIE dataset among the compared models. E2G led ERNIE-Gram and TextGCN in accuracy by 1.37% and 1.35%, respectively. Although ERNIE-Gram achieves good results and fully considers the connections between n-grams when extracting features, it lacks a global view of the dataset. Conversely, although TextGCN fully considers the relationships across the whole dataset, it ignores word order and the connections between n-grams. Incorporating ERNIE-Gram into the graph model addresses exactly this problem. In addition, to verify the stability of E2G, ten-fold cross-validation was used, and the error of the resulting accuracy did not exceed 0.001.
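For readers unfamiliar with ten-fold validation, the sketch below shows how sample indices can be partitioned so that each sample is held out exactly once. It is a generic illustration with a hypothetical helper `k_fold_indices`; it does not shuffle or stratify, and the paper does not describe how its folds were formed.

```python
def k_fold_indices(n_samples, k=10):
    """Yield (train_indices, held_out_indices) for k contiguous folds."""
    # The first n_samples % k folds receive one extra sample.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        held_out = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, held_out
        start += size

folds = list(k_fold_indices(25, k=10))
```

The model is trained and evaluated once per fold, and the spread of the ten accuracy values indicates how stable the result is.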

To demonstrate the effectiveness of E2G, we performed ablation experiments that combined GAT with ERNIE and with ERNIE-Gram. GAT is a neural network for graph-structured data that addresses the shortcomings of GCN by using masked self-attention layers.

As shown in Table 6, combining ERNIE and ERNIE-Gram with GAT lagged behind combining them with GCN by 1.44% and 0.62%, respectively. Although both GCN and GAT aggregate features from neighboring vertices into the center vertex, GCN uses the Laplacian matrix, whereas GAT uses attention coefficients. In principle, GAT is more expressive, but the experimental results showed that fixed coefficients were more suitable for textual data, which was also confirmed by BertGCN.
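The difference between fixed and learned aggregation weights can be made concrete on a toy graph. In the sketch below, the GCN weights come from the symmetrically normalized adjacency matrix with self-loops, while the GAT weights are a softmax over per-edge scores. The graph and the score values are hypothetical; in a real GAT the scores are produced by a learned attention function.

```python
import math

# Toy undirected graph with self-loops, stored as an adjacency list.
neighbors = {0: [0, 1, 2], 1: [1, 0], 2: [2, 0]}

def gcn_coefficients(i):
    """Fixed weights 1 / sqrt(deg(i) * deg(j)) from the normalized adjacency."""
    return {j: 1.0 / math.sqrt(len(neighbors[i]) * len(neighbors[j]))
            for j in neighbors[i]}

def gat_coefficients(i, scores):
    """Data-dependent weights: softmax of edge scores over the neighbors of i."""
    exps = {j: math.exp(scores[(i, j)]) for j in neighbors[i]}
    total = sum(exps.values())
    return {j: e / total for j, e in exps.items()}

# Hypothetical attention scores standing in for a learned attention function.
scores = {(0, 0): 0.0, (0, 1): 1.0, (0, 2): -1.0}
fixed = gcn_coefficients(0)
learned = gat_coefficients(0, scores)
```

The GCN weights depend only on the graph structure, whereas the GAT weights change with the scores and therefore with the node features.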

To verify the effects of different activation functions on GCN, this paper tried different activation functions in the second layer of GCN. The functions used in the experiments were the rectified linear unit (ReLU), ReLU6, the exponential linear unit (ELU), the scaled exponential linear unit (SELU), the continuously differentiable exponential linear unit (CELU), the leaky rectified linear unit (Leaky ReLU), the randomized rectified linear unit (RReLU), and the Gaussian error linear unit (GELU).

The ReLU function is a piecewise linear function [36] that sets all negative values to 0 and leaves positive values unchanged. When the input is negative, the output is 0 and the neuron is not activated. Only some of the neurons are therefore active at any one time, which makes the network sparse and computationally efficient.

ReLU6 is the ordinary ReLU with the maximum output value restricted to 6 [37]. ReLU is linear in the region where x > 0, which may make the activated values too large and thus affect the stability of the model.
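The two functions just described can be written in a few lines. This is a scalar sketch for illustration only; deep learning frameworks apply the same rule elementwise to tensors.

```python
def relu(x):
    """Negative inputs become 0; positive inputs pass through unchanged."""
    return max(0.0, x)

def relu6(x):
    """ReLU with the output additionally capped at 6."""
    return min(max(0.0, x), 6.0)

samples = [-2.0, 3.0, 8.0]
relu_out = [relu(x) for x in samples]
relu6_out = [relu6(x) for x in samples]
```

The only difference appears for inputs above 6, where ReLU6 saturates while ReLU keeps growing.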

ELU addresses a problem of ReLU [38]. With ReLU, the gradient is 0 for x < 0, so an affected neuron and the neurons that follow it can keep a gradient of 0 permanently and stop responding to any data, with the result that the corresponding parameters are never updated. ELU instead takes negative values for x < 0, which pushes the mean activation toward zero and thus speeds up learning.

SELU automatically normalizes the sample distribution toward zero mean and unit variance [39]. Its slope on the positive half-axis is greater than 1, which allows the variance to increase when it is too small and prevents the gradient from vanishing. The activation function thus has a fixed point at which the output of each layer has a mean of 0 and a variance of 1.

CELU is similar to SELU [40]: it is exponential on the negative interval and linear on the positive interval. CELU benefits the convergence and generalization performance of neural networks.
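The three exponential variants differ only in how the negative branch is scaled, as the following scalar sketch shows. The SELU constants are the commonly published values, and the default `alpha=1.0` for ELU and CELU is an assumption; the paper does not report the parameter settings it used.

```python
import math

# Commonly published SELU constants (rounded to double precision).
SELU_ALPHA = 1.6732632423543772
SELU_SCALE = 1.0507009873554805

def elu(x, alpha=1.0):
    """Linear for x > 0, alpha * (exp(x) - 1) otherwise."""
    return x if x > 0 else alpha * math.expm1(x)

def selu(x):
    """ELU with fixed alpha, multiplied by a scale slightly greater than 1."""
    return SELU_SCALE * (x if x > 0 else SELU_ALPHA * math.expm1(x))

def celu(x, alpha=1.0):
    """max(0, x) + min(0, alpha * (exp(x / alpha) - 1))."""
    return max(0.0, x) + min(0.0, alpha * math.expm1(x / alpha))
```

With `alpha=1.0`, CELU coincides with ELU; for other values of `alpha`, CELU stays continuously differentiable at 0, whereas ELU does not.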

Leaky ReLU is a variant of the ReLU activation function [41]. Its output has a small slope for negative inputs. Because the derivative is always non-zero, fewer neurons become silent, and gradient-based learning can continue after the input enters the negative interval, which solves the corresponding problem of ReLU.

RReLU is a variant of Leaky ReLU [42]. In RReLU, the slope for negative values is random during training and fixed during testing.
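The two leaky variants can be sketched as below. The default slope of 0.01 and the sampling range from 1/8 to 1/3 are assumptions borrowed from common framework defaults, and fixing the test-time slope at the midpoint of that range is likewise an assumed convention; the paper does not state the values it used.

```python
import random

def leaky_relu(x, negative_slope=0.01):
    """Identity for x >= 0, a small fixed slope for x < 0."""
    return x if x >= 0 else negative_slope * x

def rrelu(x, lower=1 / 8, upper=1 / 3, training=False, rng=random):
    """Leaky ReLU whose negative slope is sampled uniformly during training."""
    if x >= 0:
        return x
    # Training: random slope in [lower, upper]. Testing: fixed midpoint slope.
    slope = rng.uniform(lower, upper) if training else (lower + upper) / 2
    return slope * x

train_value = rrelu(-1.0, training=True, rng=random.Random(0))
test_value = rrelu(-1.0, training=False)
```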

GELU achieves regularization by multiplying the input by 0 or 1 [43]; whether the input is multiplied by 0 or 1 is chosen randomly, with a probability that depends on the input's distribution.
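In its deterministic form, GELU takes the expected value of this random 0-or-1 gating: the input is multiplied by the probability that a standard normal variable falls below it. The sketch below uses the exact form based on the error function; frameworks often use a tanh approximation instead.

```python
import math

def gelu(x):
    """x multiplied by the standard normal CDF evaluated at x."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))

values = [gelu(x) for x in (-1.0, 0.0, 1.0)]
```

Large positive inputs are passed through almost unchanged, large negative inputs are driven toward 0, and inputs near 0 are scaled smoothly between the two.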

As shown in Table 7, ReLU6 achieved the best results on the CAEIE dataset when m = 0.2, layer = 2, and dropout = 0.1, leading by 0.126 and 0.027 in F1 and accuracy, respectively. ReLU6 is essentially the same as ReLU but limits the maximum output value to 6. Without this restriction, the output of ReLU ranges from 0 to positive infinity. If the activation values are very large and spread over a wide range, they cannot be represented accurately, resulting in a loss of precision. ReLU6 avoids this problem and obtained the highest accuracy.
