Identification of Typical Ecosystem Types by Integrating Active and Passive Time Series Data of the Guangdong–Hong Kong–Macao Greater Bay Area, China

Abstract

1. Introduction

2. Materials and Methods

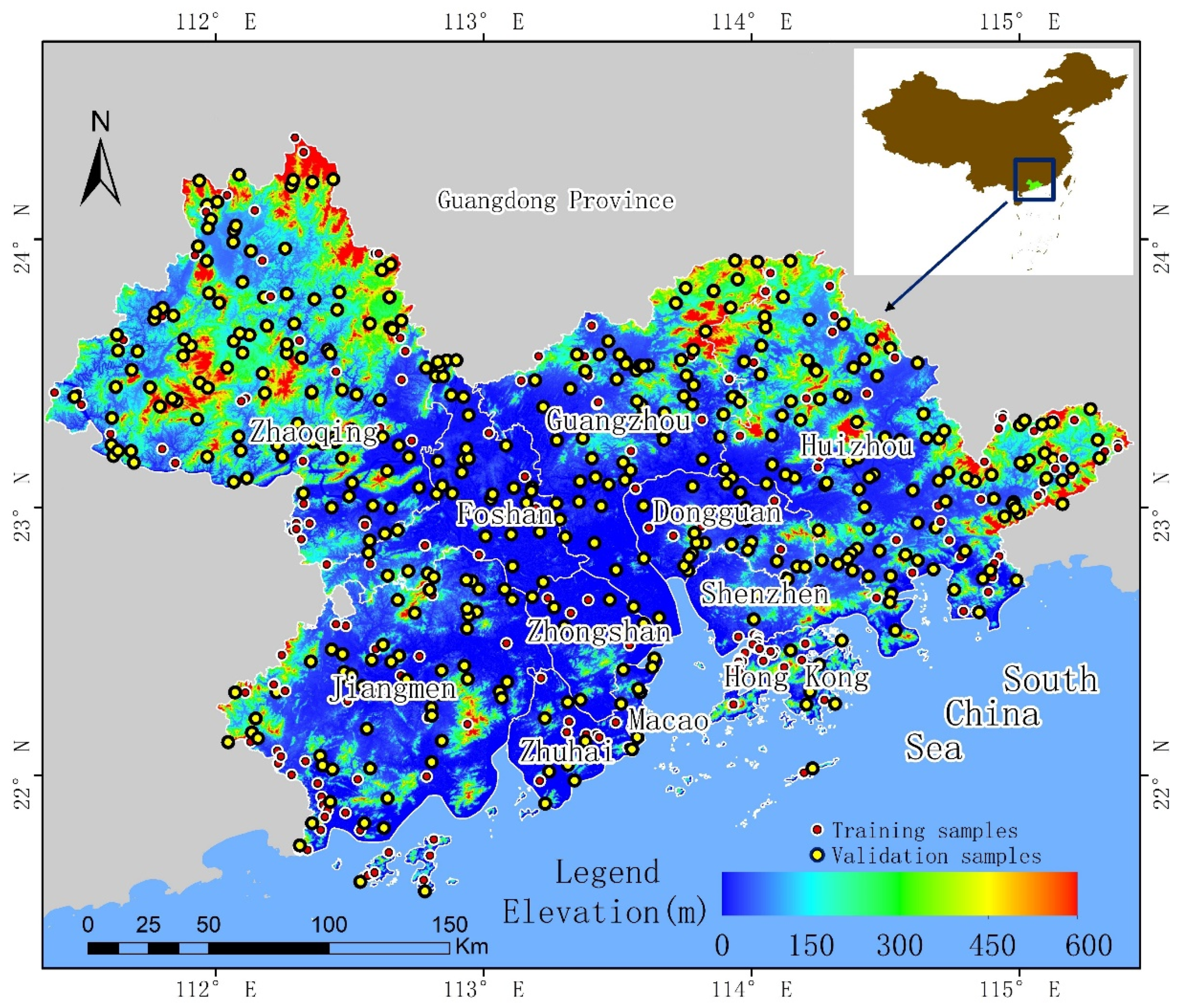

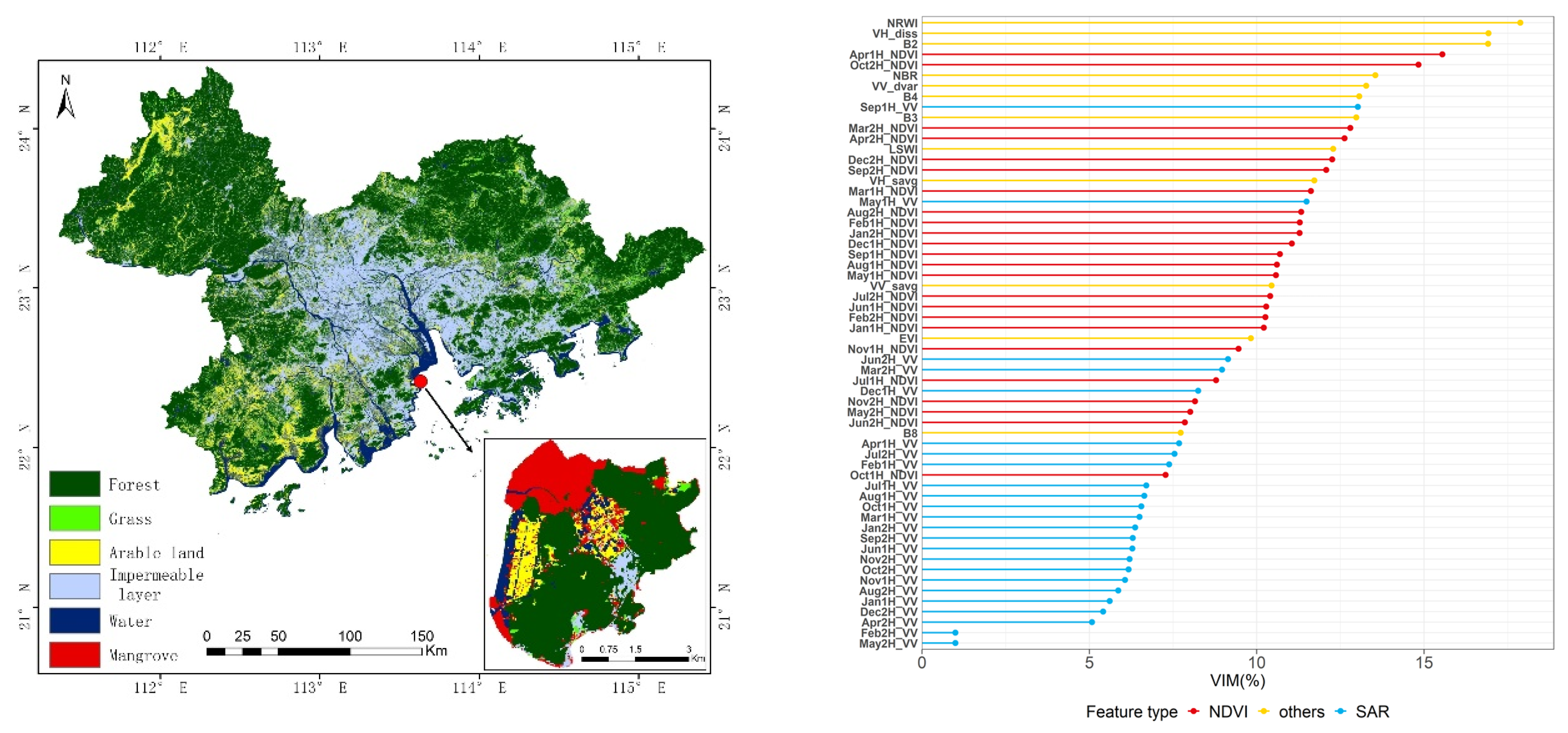

2.1. Study Area

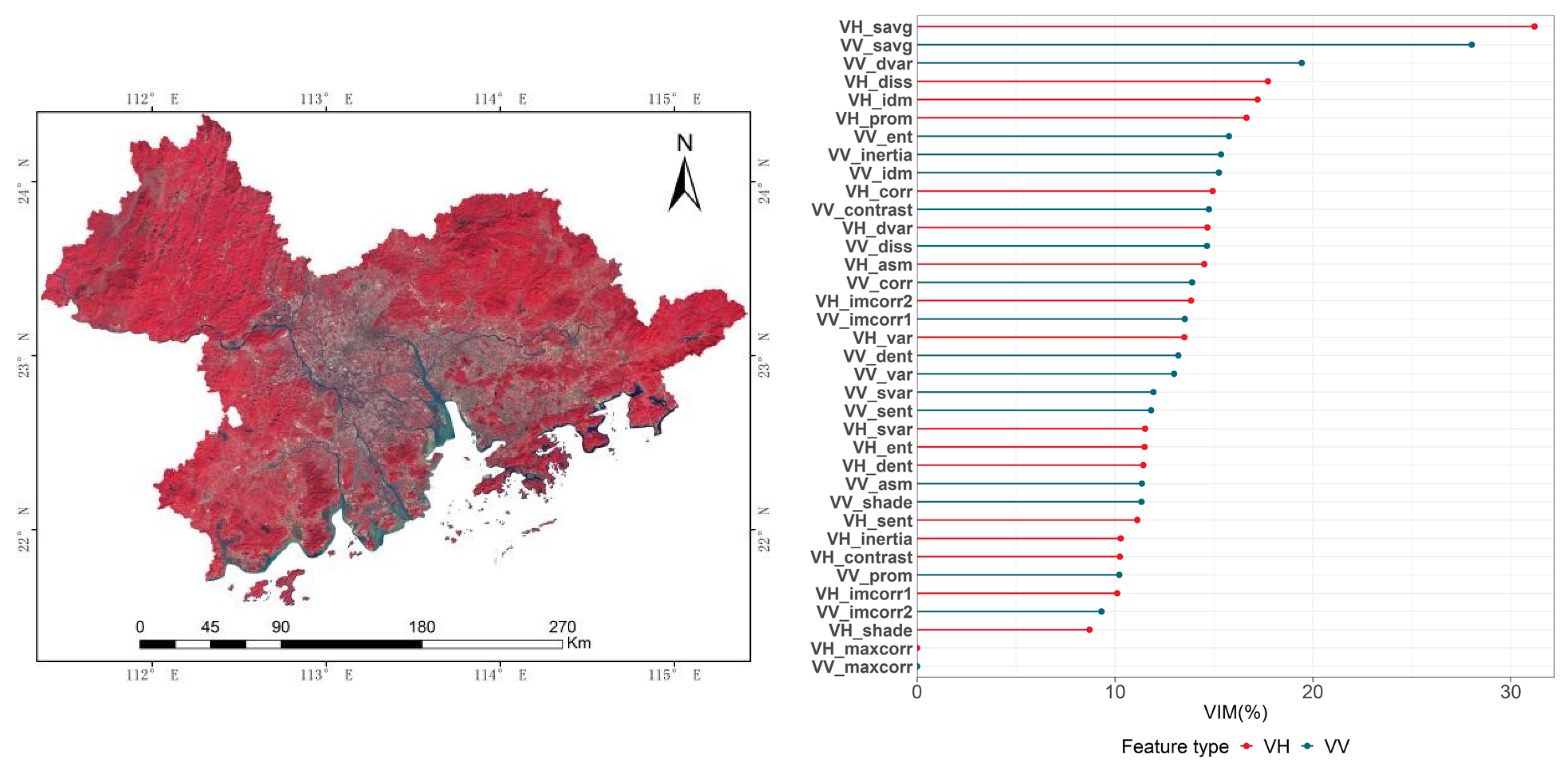

2.2. Data Acquisition and Preprocessing

2.3. Classification System and Features Set

2.4. Study Methods

2.4.1. SNIC Segmentation Algorithm

2.4.2. RF Classification Algorithm

2.4.3. Accuracy Evaluation

3. Results

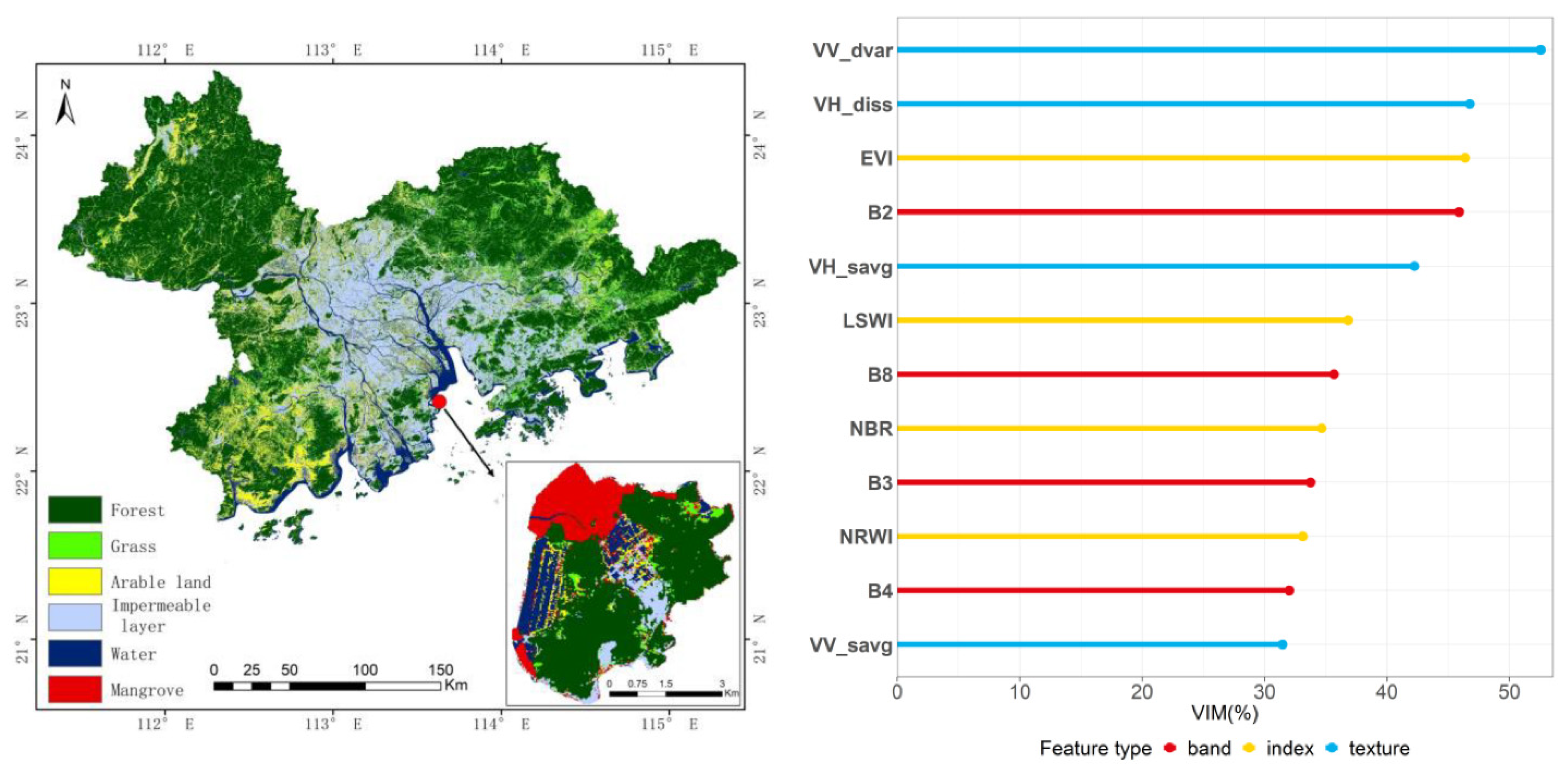

3.1. Single-Temporal Remote Sensing Image Classification

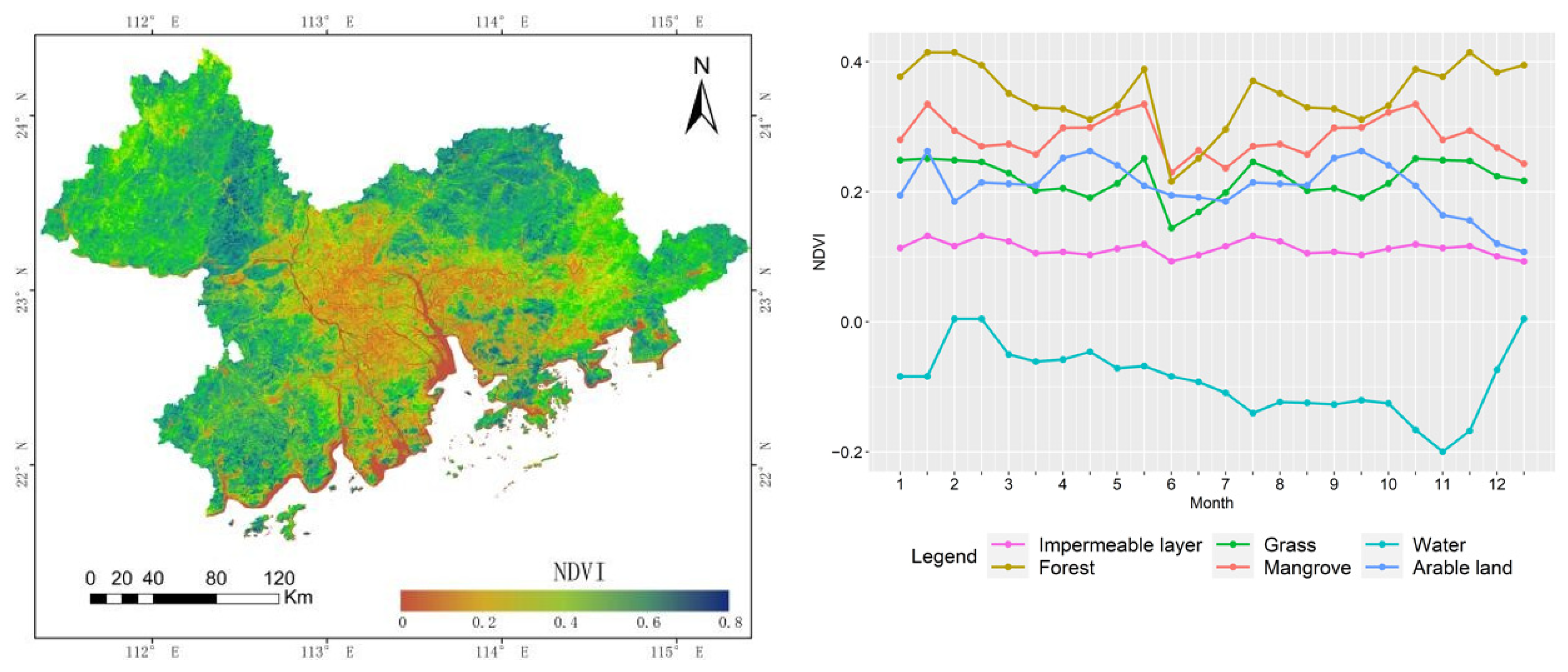

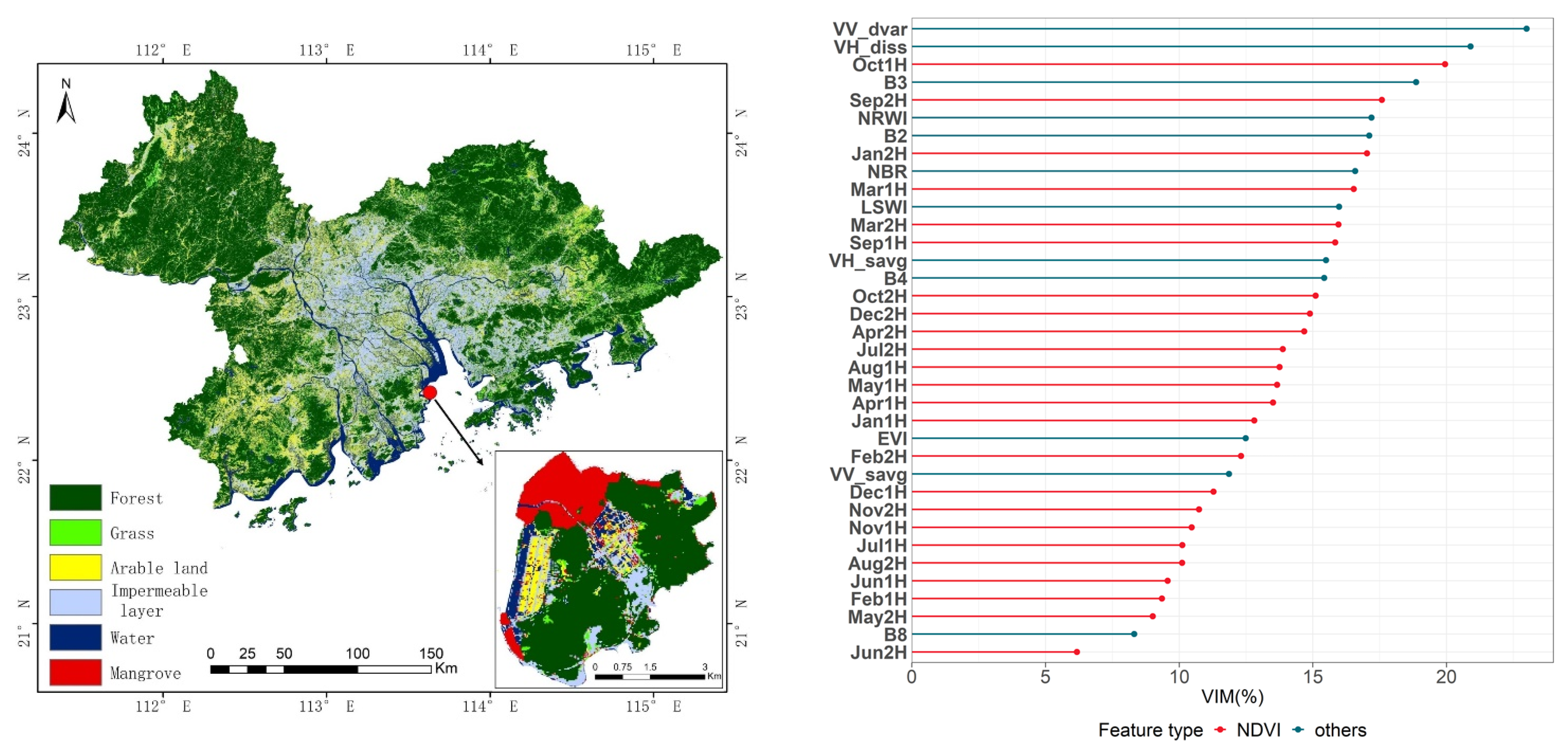

3.2. Integrated Time Series NDVI Data Classification

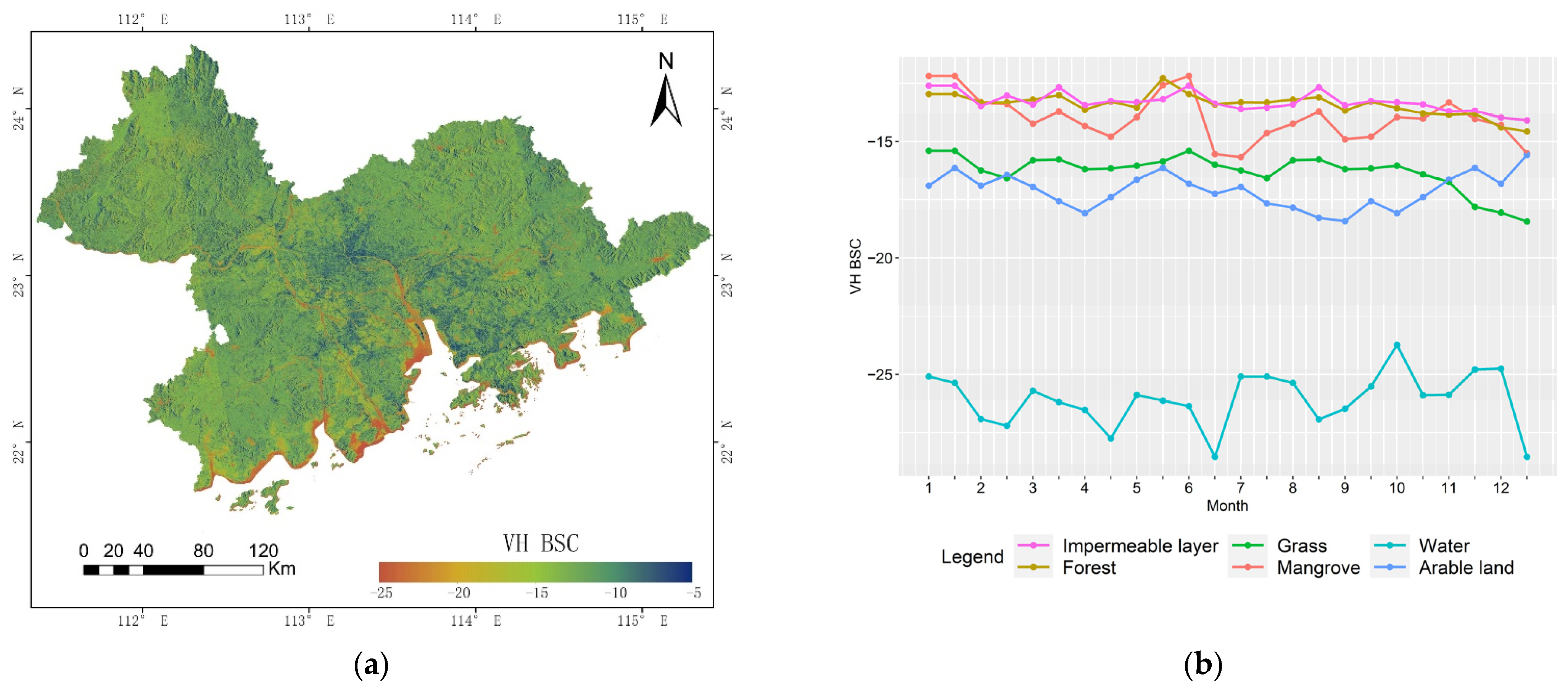

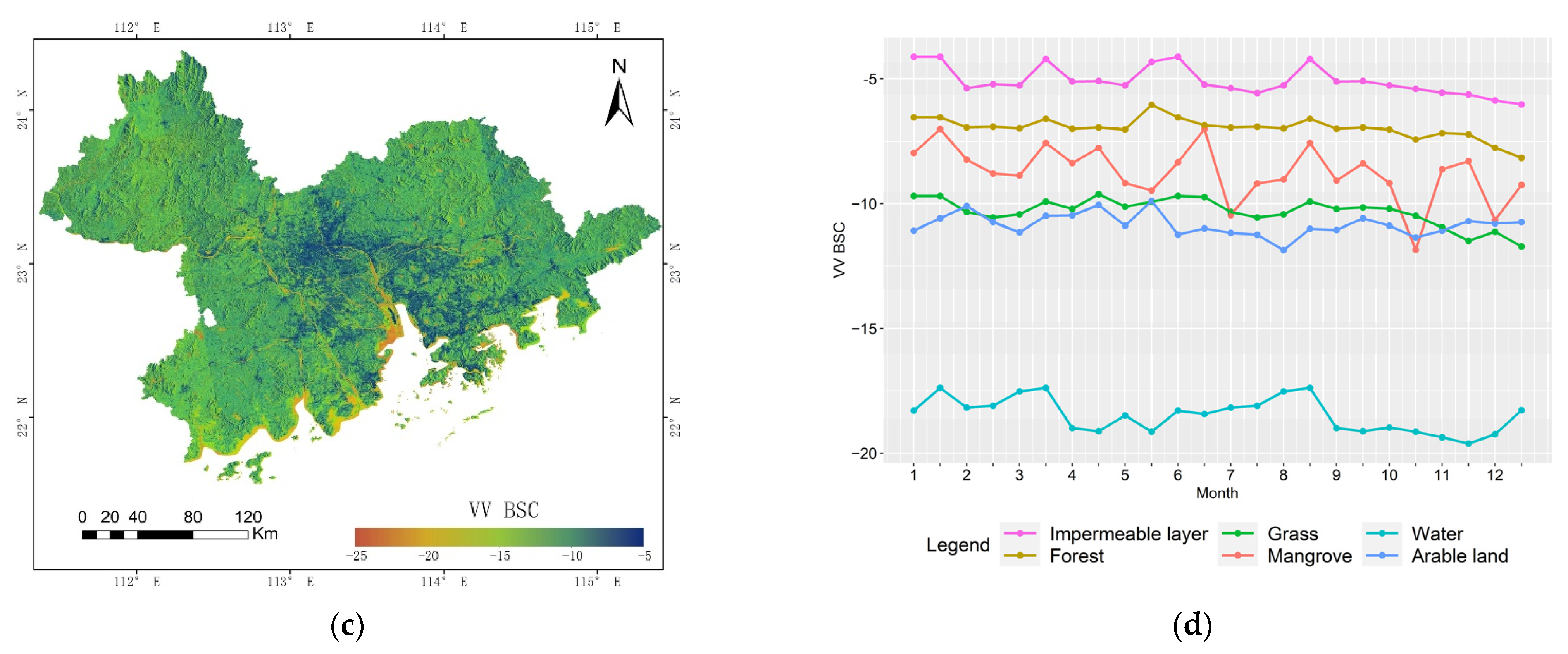

3.3. Integrated Time Series SAR Data Classification

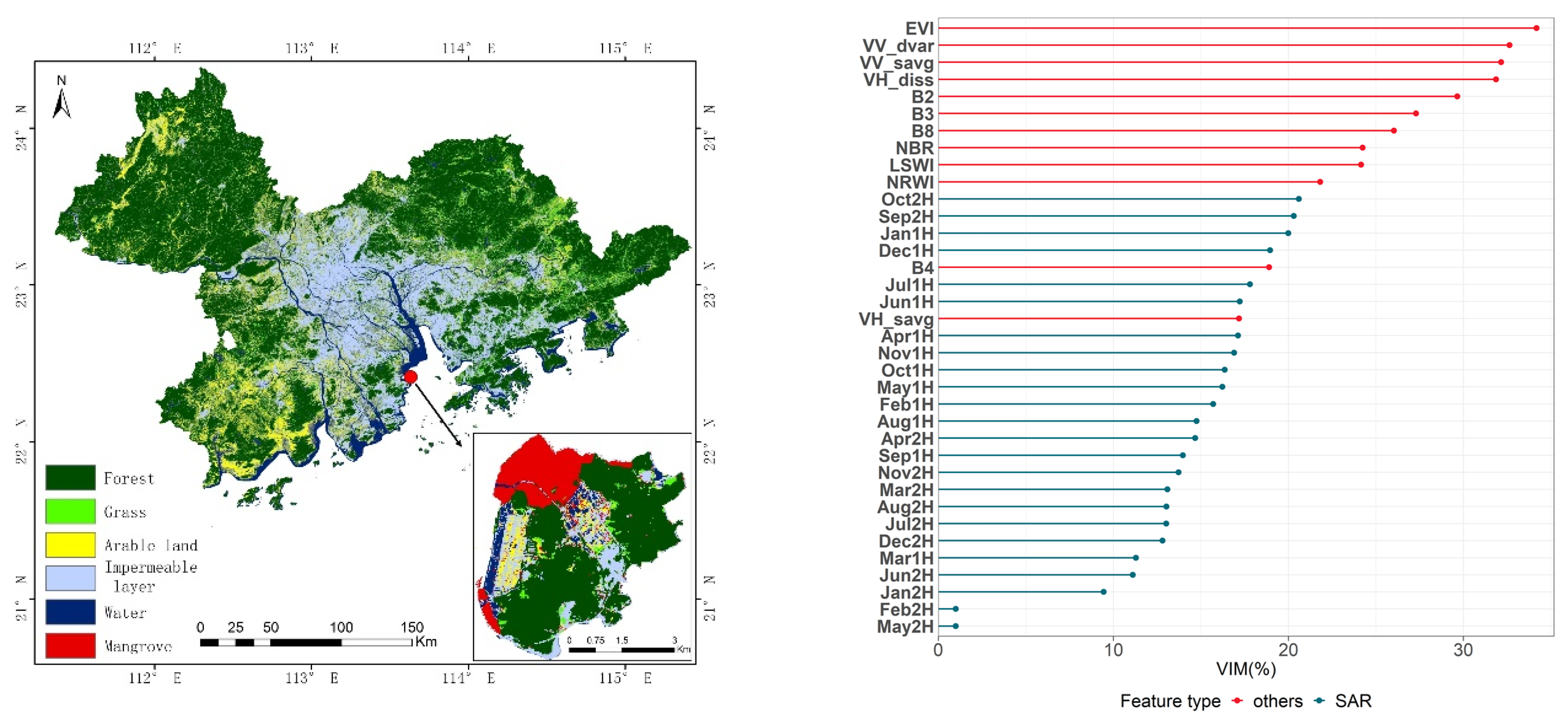

3.4. Integrated Active and Passive Time Series Data Classification

4. Discussion

4.1. Effect of Window Size on Extraction of Texture Feature Information

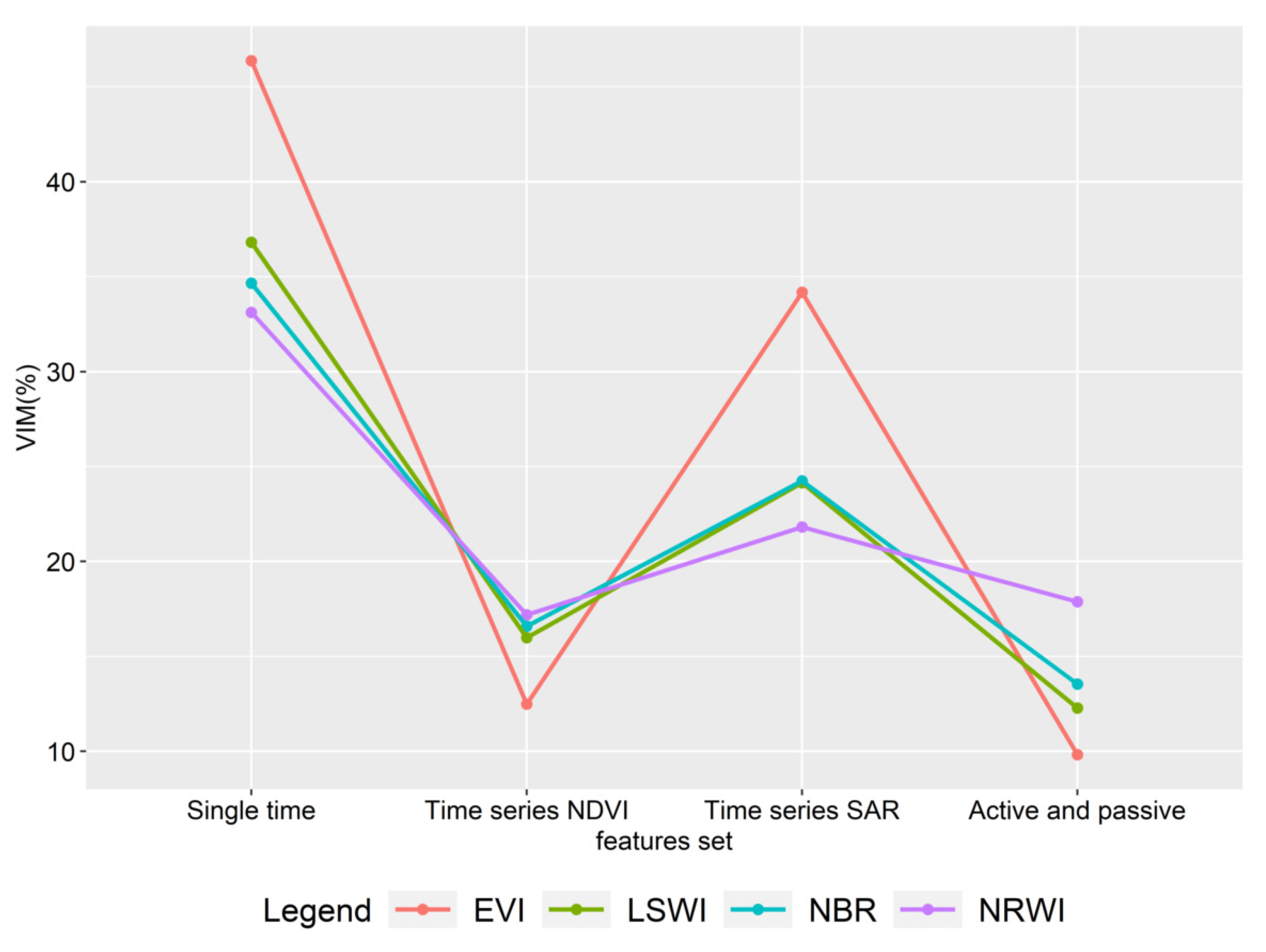

4.2. Importance Analysis of Different Vegetation Indexes

4.3. Comparative Analysis of Different Classification Methods

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| No. | Type | Definition |
|---|---|---|
| 1 | Forest | Forest land dominated by trees, with canopy closure ≥ 0.2 |
| 2 | Grass | Land that produces herbaceous plants |
| 3 | Arable land | Land where crops are the main surface type |
| 4 | Impermeable layer | Artificial surfaces such as buildings, roads, factories, etc. |
| 5 | Mangrove | Wetland woody plant communities composed of evergreen trees or shrubs dominated by mangroves |
| 6 | Water | Inland waters, beaches, ditches, swamps, hydraulic structures, etc. |

| Sensor | Feature Type | Feature Variable |
|---|---|---|
| Sentinel-1 | Polarization mode | VH |
| Sentinel-1 | Polarization mode | VV |
| Sentinel-1 | Texture features | Fourteen GLCM features proposed by Haralick, and four additional features from Conners |
| Sentinel-2 | Spectral features | Blue band (B2) |
| Sentinel-2 | Spectral features | Green band (B3) |
| Sentinel-2 | Spectral features | Red band (B4) |
| Sentinel-2 | Spectral features | Near-infrared band (NIR, B8) |
| Sentinel-2 | Index features | Normalized difference vegetation index (NDVI) |
| Sentinel-2 | Index features | Enhanced vegetation index (EVI) |
| Sentinel-2 | Index features | Normalized difference water index (NDWI) |
| Sentinel-2 | Index features | Red–green ratio index (RGRI) |
| Sentinel-2 | Index features | Normalized difference built-up index (NDBI) |
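
The index features listed above are simple band-ratio computations. As a minimal sketch, assuming the commonly used definitions (the table does not give the formulas, so the EVI coefficients and the green/NIR form of NDWI are assumptions, and the function names and reflectance values are purely illustrative), they could be computed from surface reflectance as follows. NDBI is left out because it needs a shortwave-infrared band, which is not among the spectral features listed.

```python
import math


def _safe_ratio(numerator, denominator):
    """Return numerator / denominator, or NaN when the denominator is zero."""
    return numerator / denominator if denominator != 0 else math.nan


def ndvi(nir, red):
    """Normalized difference vegetation index: (NIR - Red) / (NIR + Red)."""
    return _safe_ratio(nir - red, nir + red)


def evi(nir, red, blue):
    """Enhanced vegetation index with the widely used 2.5 / 6 / 7.5 / 1 coefficients (assumed)."""
    return _safe_ratio(2.5 * (nir - red), nir + 6.0 * red - 7.5 * blue + 1.0)


def ndwi(green, nir):
    """Normalized difference water index, green/NIR form (assumed): (Green - NIR) / (Green + NIR)."""
    return _safe_ratio(green - nir, green + nir)


def rgri(red, green):
    """Red-green ratio index: Red / Green."""
    return _safe_ratio(red, green)


# Hypothetical reflectance values for a single vegetated pixel (B2, B3, B4, B8).
pixel = {"blue": 0.05, "green": 0.08, "red": 0.10, "nir": 0.50}

indices = {
    "NDVI": ndvi(pixel["nir"], pixel["red"]),
    "EVI": evi(pixel["nir"], pixel["red"], pixel["blue"]),
    "NDWI": ndwi(pixel["green"], pixel["nir"]),
    "RGRI": rgri(pixel["red"], pixel["green"]),
}

for name, value in indices.items():
    print(f"{name}: {value:.4f}")
```

In a time series setting, the same functions would be applied per acquisition date, giving one index value per pixel (or per segmented object) and date.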

| Types | Water | Arable Land | Impermeable Layer | Mangrove | Forest | Grass | Sum | Producer Accuracy % |
|---|---|---|---|---|---|---|---|---|
| Water | 42 | 0 | 0 | 0 | 0 | 0 | 42 | 100.00 |
| Arable land | 7 | 39 | 11 | 0 | 11 | 12 | 80 | 48.75 |
| Impermeable layer | 0 | 4 | 67 | 0 | 0 | 0 | 71 | 94.37 |
| Mangrove | 0 | 4 | 0 | 7 | 4 | 4 | 19 | 36.84 |
| Forest | 0 | 0 | 0 | 0 | 140 | 10 | 150 | 93.33 |
| Grass | 0 | 13 | 0 | 0 | 14 | 31 | 58 | 53.45 |
| Sum | 49 | 60 | 78 | 7 | 169 | 57 | 420 | |
| User accuracy % | 85.71 | 65.00 | 85.90 | 100.00 | 82.84 | 54.39 | 77.62 | |
| Kappa coefficient | 0.7080 | | | | | | | |
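
The summary statistics in the matrix above can be re-derived from its cell counts. As a minimal sketch (pure Python, with illustrative helper names), the following recomputes the overall accuracy and Cohen's kappa from the six-class counts and reproduces the tabulated 77.62% and 0.7080. The per-class ratios follow the table's own labelling, where the row-wise ratio is reported as producer accuracy and the column-wise ratio as user accuracy.

```python
CLASSES = ["Water", "Arable land", "Impermeable layer", "Mangrove", "Forest", "Grass"]

# Cell counts copied from the confusion matrix above (rows and columns in table order).
MATRIX = [
    [42, 0, 0, 0, 0, 0],
    [7, 39, 11, 0, 11, 12],
    [0, 4, 67, 0, 0, 0],
    [0, 4, 0, 7, 4, 4],
    [0, 0, 0, 0, 140, 10],
    [0, 13, 0, 0, 14, 31],
]


def accuracy_report(matrix):
    """Return overall accuracy, Cohen's kappa, row-wise and column-wise class accuracy."""
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    row_sums = [sum(row) for row in matrix]
    col_sums = [sum(matrix[i][j] for i in range(n)) for j in range(n)]
    diagonal = [matrix[i][i] for i in range(n)]

    observed = sum(diagonal) / total  # overall accuracy
    # Agreement expected by chance, from the marginal totals.
    expected = sum(r * c for r, c in zip(row_sums, col_sums)) / total**2
    kappa = (observed - expected) / (1 - expected)

    row_acc = [d / r for d, r in zip(diagonal, row_sums)]
    col_acc = [d / c for d, c in zip(diagonal, col_sums)]
    return observed, kappa, row_acc, col_acc


overall, kappa, row_acc, col_acc = accuracy_report(MATRIX)
print(f"Overall accuracy: {overall:.2%}")
print(f"Kappa coefficient: {kappa:.4f}")
for name, r, c in zip(CLASSES, row_acc, col_acc):
    print(f"{name}: row-wise {r:.2%}, column-wise {c:.2%}")
```

The same function can be applied to the other confusion matrices by swapping in their cell counts.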

| Types | Water | Arable Land | Impermeable Layer | Mangrove | Forest | Grass | Sum | Producer Accuracy % |
|---|---|---|---|---|---|---|---|---|
| Water | 42 | 0 | 0 | 0 | 0 | 0 | 42 | 100.00 |
| Arable land | 0 | 48 | 18 | 0 | 10 | 4 | 80 | 60.00 |
| Impermeable layer | 0 | 4 | 67 | 0 | 0 | 0 | 71 | 94.37 |
| Mangrove | 0 | 4 | 0 | 7 | 4 | 4 | 19 | 36.84 |
| Forest | 0 | 0 | 0 | 0 | 139 | 11 | 150 | 92.67 |
| Grass | 0 | 0 | 11 | 0 | 10 | 37 | 58 | 63.79 |
| Sum | 42 | 56 | 96 | 7 | 163 | 56 | 420 | |
| User accuracy % | 100.00 | 85.71 | 69.79 | 100.00 | 85.28 | 66.07 | 80.95 | |
| Kappa coefficient | 0.7520 | | | | | | | |

| Types | Water | Arable Land | Impermeable Layer | Mangrove | Forest | Grass | Sum | Producer Accuracy % |
|---|---|---|---|---|---|---|---|---|
| Water | 42 | 0 | 0 | 0 | 0 | 0 | 42 | 100.00 |
| Arable land | 7 | 41 | 18 | 0 | 7 | 7 | 80 | 51.25 |
| Impermeable layer | 0 | 4 | 67 | 0 | 0 | 0 | 71 | 94.37 |
| Mangrove | 0 | 0 | 0 | 12 | 7 | 0 | 19 | 63.16 |
| Forest | 0 | 0 | 0 | 0 | 143 | 7 | 150 | 95.33 |
| Grass | 0 | 6 | 0 | 0 | 11 | 41 | 58 | 70.69 |
| Sum | 49 | 51 | 85 | 12 | 168 | 55 | 420 | |
| User accuracy % | 85.71 | 80.39 | 78.82 | 100.00 | 85.12 | 74.55 | 82.38 | |
| Kappa coefficient | 0.7708 | | | | | | | |

| Types | Water | Arable Land | Impermeable Layer | Mangrove | Forest | Grass | Sum | Producer Accuracy % |
|---|---|---|---|---|---|---|---|---|
| Water | 42 | 0 | 0 | 0 | 0 | 0 | 42 | 100.00 |
| Arable land | 7 | 46 | 10 | 0 | 10 | 7 | 80 | 57.50 |
| Impermeable layer | 0 | 5 | 66 | 0 | 0 | 0 | 71 | 92.96 |
| Mangrove | 0 | 0 | 0 | 12 | 7 | 0 | 19 | 63.16 |
| Forest | 0 | 0 | 0 | 0 | 143 | 7 | 150 | 95.33 |
| Grass | 0 | 7 | 0 | 0 | 6 | 45 | 58 | 77.59 |
| Sum | 49 | 58 | 76 | 12 | 166 | 59 | 420 | |
| User accuracy % | 85.71 | 79.31 | 86.84 | 100.00 | 86.14 | 76.27 | 84.29 | |
| Kappa coefficient | 0.7958 | | | | | | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, C.; Wang, Y.; Gao, Z.; Sun, B.; Xing, H.; Zang, Y. Identification of Typical Ecosystem Types by Integrating Active and Passive Time Series Data of the Guangdong–Hong Kong–Macao Greater Bay Area, China. Int. J. Environ. Res. Public Health 2022, 19, 15108. https://doi.org/10.3390/ijerph192215108
