Prediction of Cavity Length Using an Interpretable Ensemble Learning Approach

Abstract

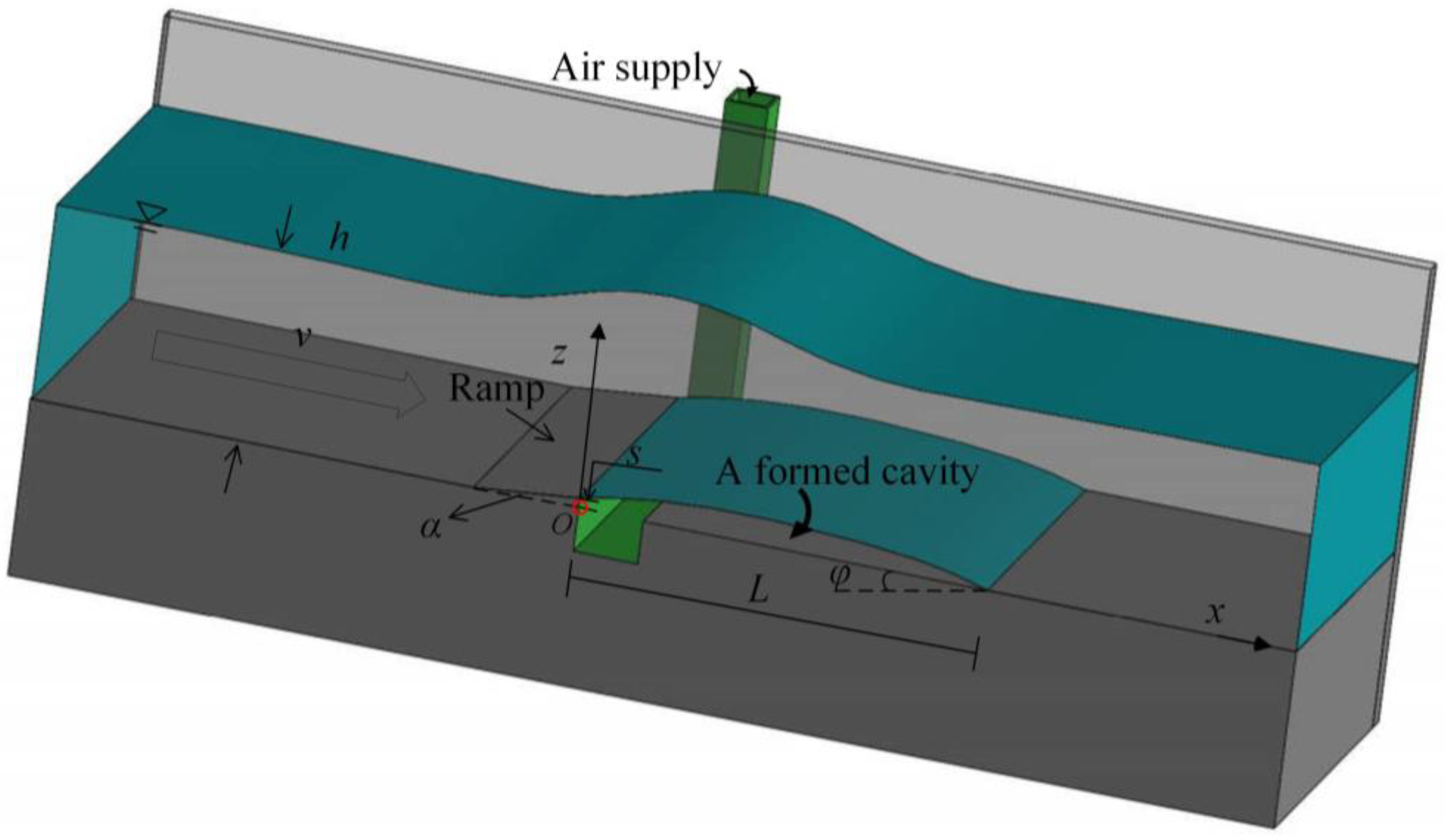

1. Introduction

2. Machine Learning Models

2.1. Support Vector Regression

2.2. Random Forest (RF)

2.3. Gradient Boosting Decision Tree (GBDT)

- (1) Initialize the iteration starting point h_0(x).

- (2) Compute the mth residual r_mi for each sample as the negative gradient of the loss with respect to the current prediction.

- (3) Build the mth tree from the dataset x and the residuals r_mi. Each sample receives a prediction y′, which is used to update the mth strong learner h_m(x_i).

- (4) After completing m iterations, the final strong learner H(x) is obtained.
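The four steps above can be sketched in a few lines of plain Python. This is a minimal illustration rather than the authors' implementation. It assumes a squared-error loss (so the negative gradient is simply the residual), a single input feature, one-split trees (stumps), and a shrinkage factor. The names `fit_stump`, `fit_gbdt`, `n_trees`, and `learning_rate` are hypothetical.

```python
from statistics import mean


def fit_stump(xs, residuals):
    """Fit a one-split regression tree to 1-D inputs by least squares."""
    best = None
    # Candidate thresholds: every distinct x except the largest,
    # so that both sides of the split are non-empty.
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_value, right_value = mean(left), mean(right)
        sse = (sum((r - left_value) ** 2 for r in left)
               + sum((r - right_value) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_value, right_value)
    _, threshold, left_value, right_value = best
    return lambda x: left_value if x <= threshold else right_value


def fit_gbdt(xs, ys, n_trees=50, learning_rate=0.3):
    """Gradient boosting with stumps under squared-error loss (assumed)."""
    h0 = mean(ys)                      # step (1): constant starting point h_0(x)
    trees = []
    preds = [h0] * len(xs)
    for _ in range(n_trees):
        # step (2): for squared error the negative gradient is y - h_{m-1}(x)
        residuals = [y - p for y, p in zip(ys, preds)]
        # step (3): fit the mth tree to the residuals and update the learner
        tree = fit_stump(xs, residuals)
        trees.append(tree)
        preds = [p + learning_rate * tree(x) for p, x in zip(preds, xs)]

    def strong_learner(x):             # step (4): final strong learner H(x)
        return h0 + learning_rate * sum(tree(x) for tree in trees)

    return strong_learner


# Toy usage on synthetic data (not the paper's dataset).
xs = [i / 10 for i in range(20)]
ys = [x ** 2 for x in xs]
model = fit_gbdt(xs, ys)
mse = mean((y - model(x)) ** 2 for x, y in zip(xs, ys))
baseline = mean((y - mean(ys)) ** 2 for y in ys)
```

Comparing `mse` with `baseline` shows how much the boosted ensemble improves on the constant starting point. A production model would use deeper trees and a library implementation.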

2.4. Extreme Gradient Boosting

2.5. Bayesian Optimization

2.6. Model Evaluation Indices

2.7. Sobol Sensitivity Analysis

2.8. Dataset and Dimensional Analysis

3. Results

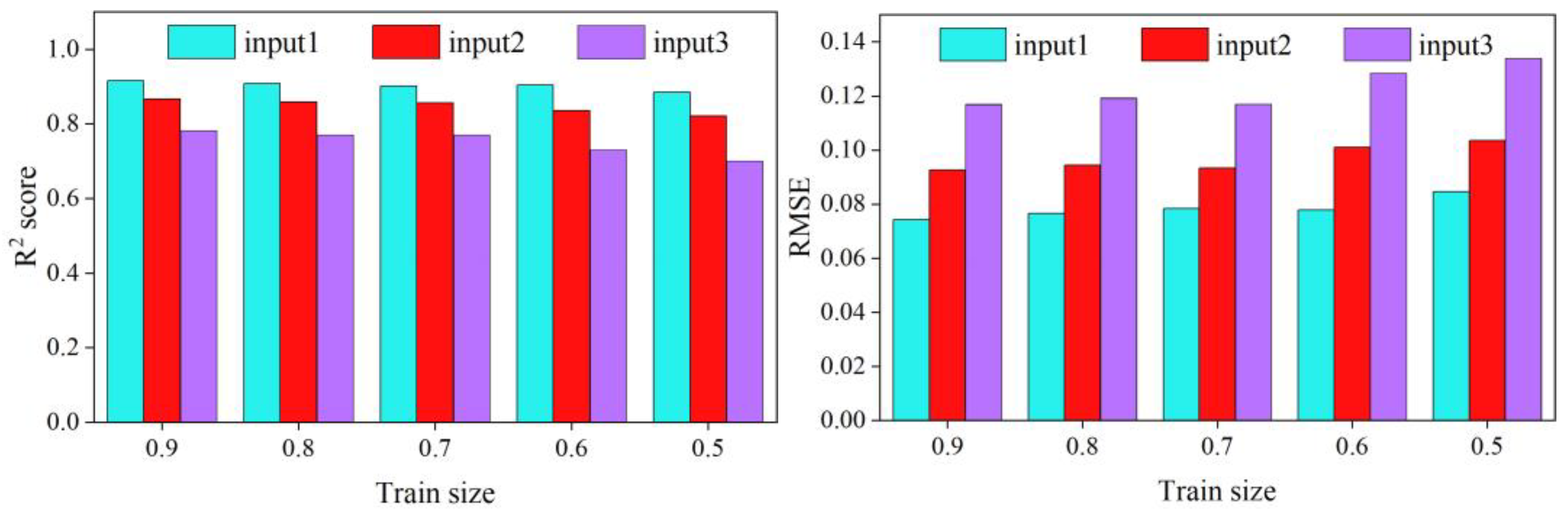

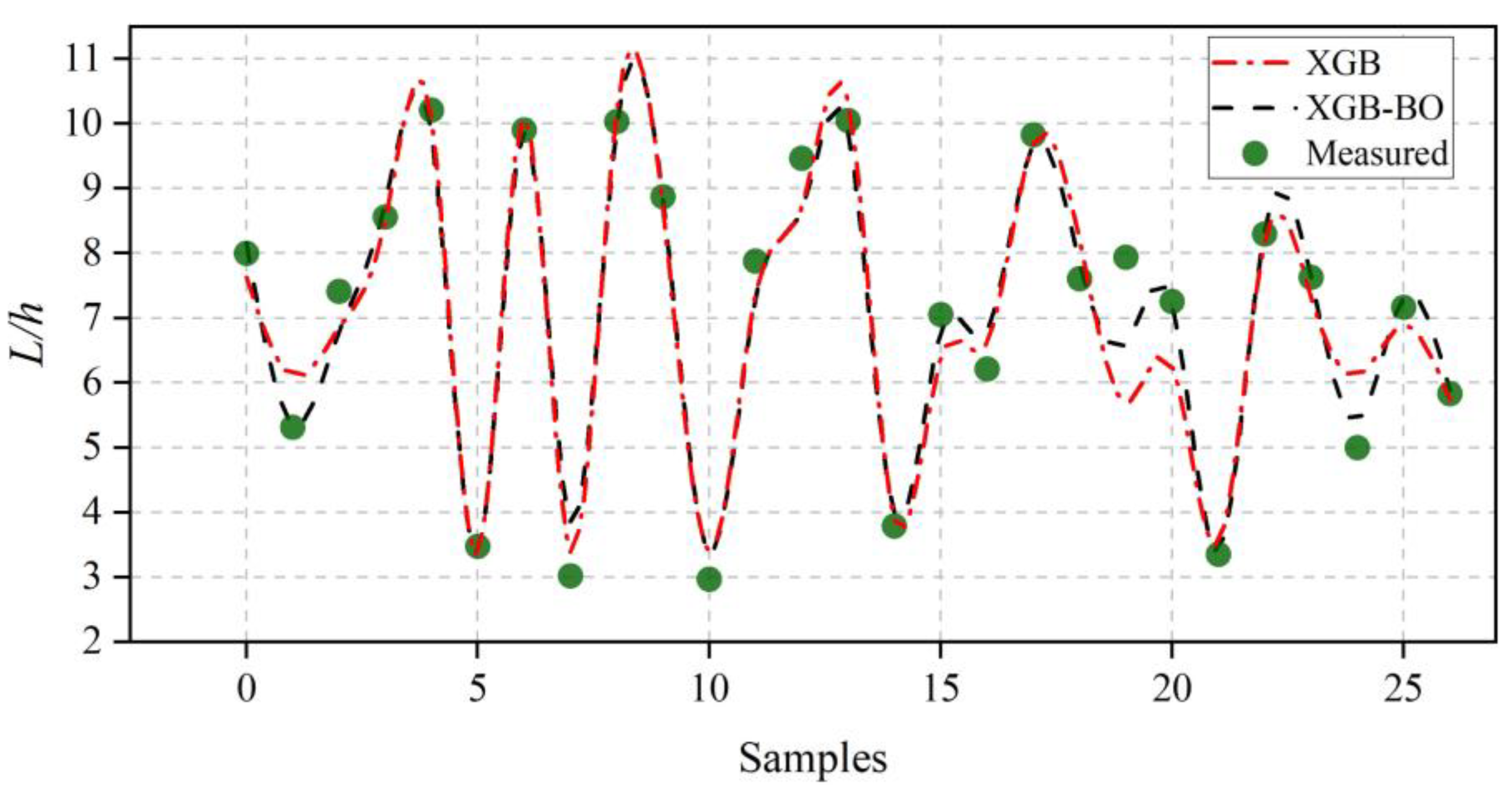

3.1. Cavity Length Prediction Using Different Ensemble Learning Models

3.1.1. Effect of BO Optimization

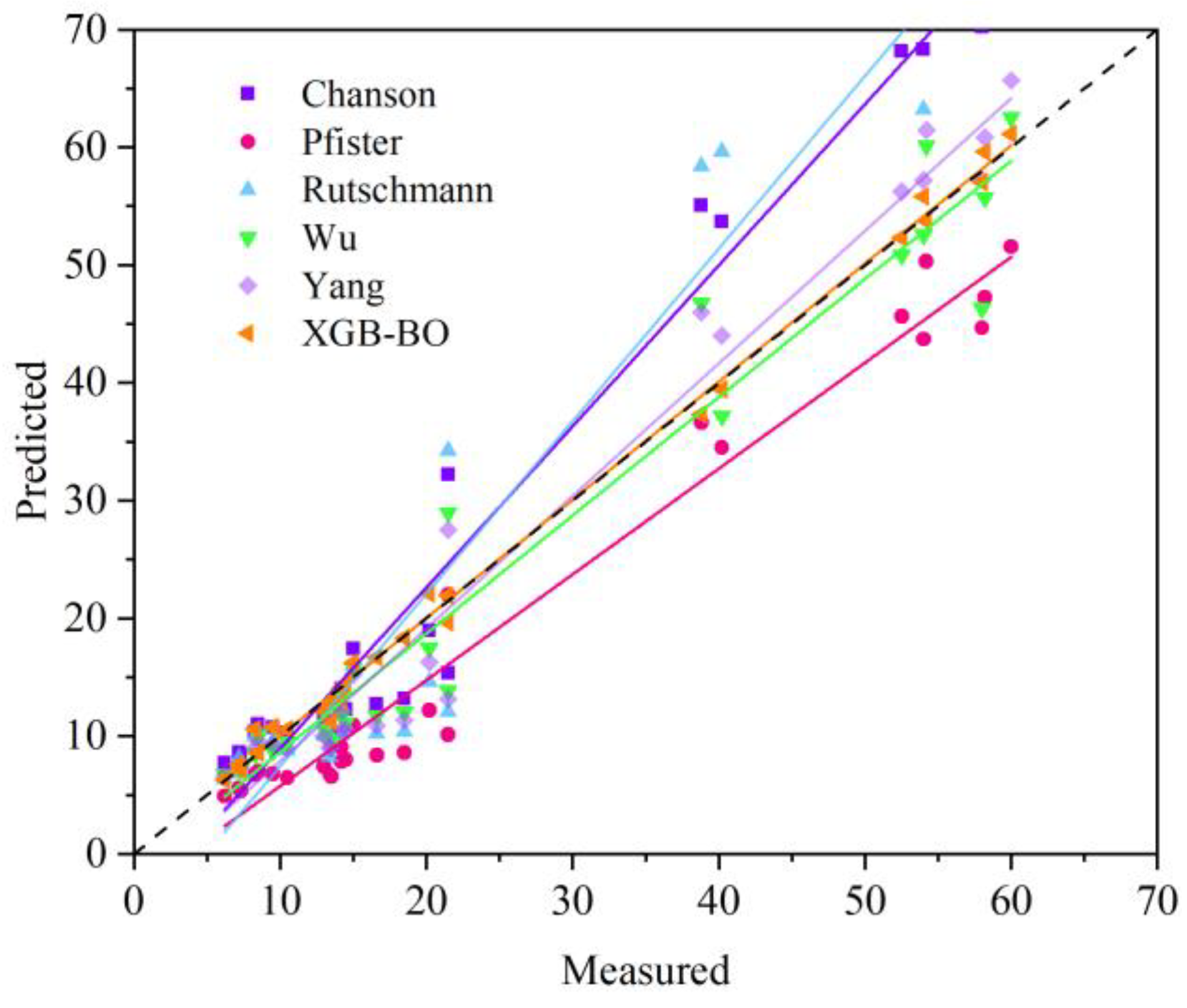

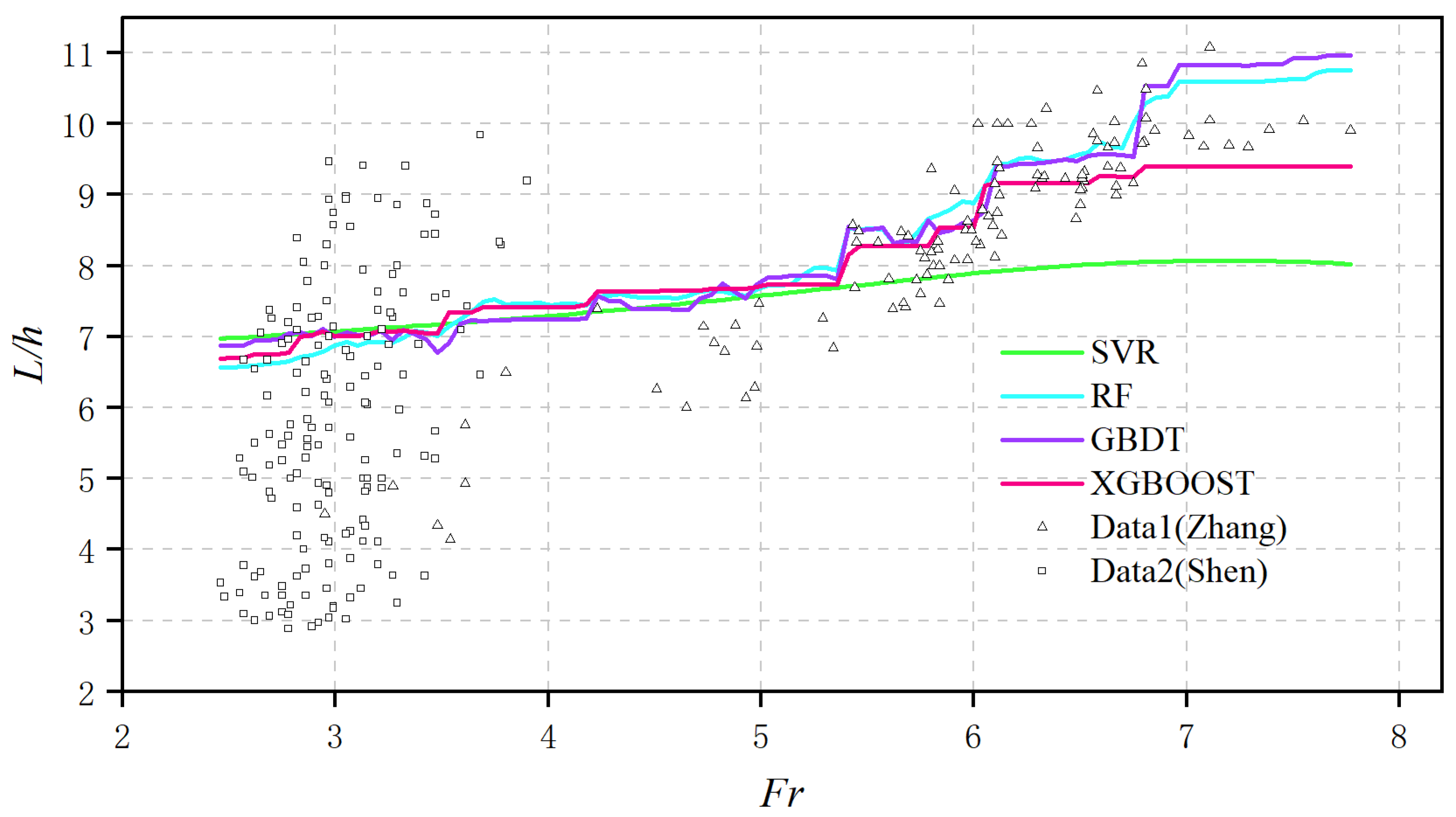

3.1.2. Comparison with Empirical Correlations

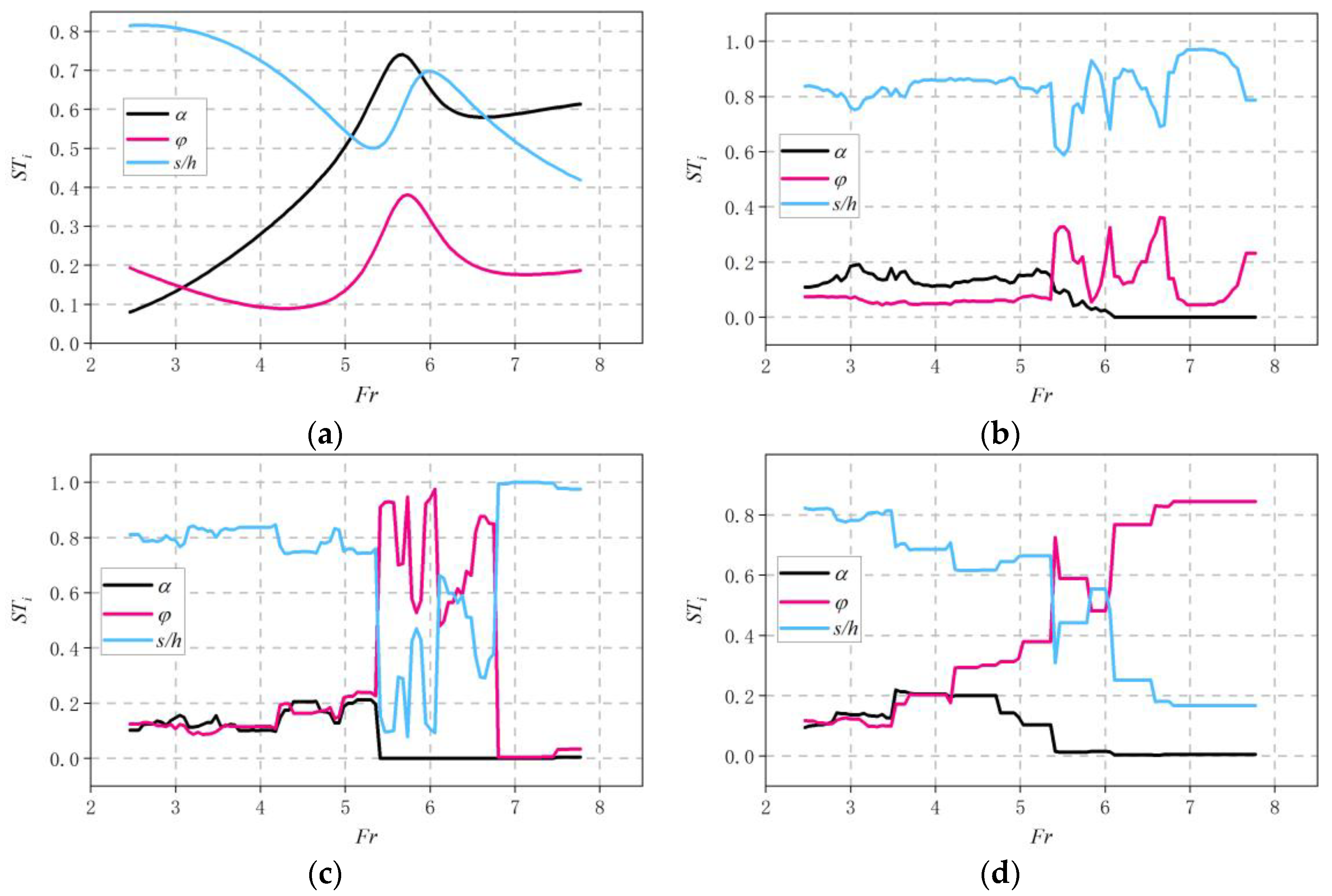

3.2. Model Interpretation Using the Sobol Technique

3.2.1. Global Interpretations

3.2.2. Physical Interpretation of the Sobol Analysis Results

3.2.3. Evaluation of Model Accuracy at Different Fr Values

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Pfister, M.; Hager, W.H. Chute aerators. II: Hydraulic design. J. Hydraul. Eng. 2010, 136, 360–367.

2. Wu, J.; Ruan, S. Emergence Angle of Flow Over an Aerator. J. Hydrodyn. 2007, 19, 601–606.

3. Wu, J.; Ruan, S. Cavity length below chute aerators. Sci. China Ser. E Technol. Sci. 2008, 51, 170–178.

4. Rutschmann, P.; Hager, W.H. Air entrainment by spillway aerators. J. Hydraul. Eng. 1990, 116, 765–782.

5. Chanson, M.H. Predicting the filling of ventilated cavities behind spillway aerators. J. Hydraul. Res. 2010, 33, 361–372.

6. Pfister, M.; Hager, W.H. Chute aerators. I: Air transport characteristics. J. Hydraul. Eng. 2010, 136, 352–359.

7. Ahmed, A.N.; Yafouz, A.; Birima, A.H.; Kisi, O.; Huang, Y.F.; Sherif, M.; Sefelnasr, A.; El-Shafie, A. Water level prediction using various machine learning algorithms: A case study of Durian Tunggal river, Malaysia. Eng. Appl. Comput. Fluid Mech. 2022, 16, 422–440.

8. Pal, M.; Singh, N.K.; Tiwari, N.K. Support vector regression based modeling of pier scour using field data. Eng. Appl. Artif. Intell. 2011, 24, 911–916.

9. Zaji, A.H.; Bonakdari, H. Optimum Support Vector Regression for Discharge Coefficient of Modified Side Weirs Prediction. INAE Lett. 2017, 2, 25–33.

10. Bhattarai, A.; Dhakal, S.; Gautam, Y.; Bhattarai, R. Prediction of Nitrate and Phosphorus Concentrations Using Machine Learning Algorithms in Watersheds with Different Landuse. Water 2021, 13, 3096.

11. AlDahoul, N.; Ahmed, A.N.; Allawi, M.F.; Sherif, M.; Sefelnasr, A.; Chau, K.W.; El-Shafie, A. A comparison of machine learning models for suspended sediment load classification. Eng. Appl. Comput. Fluid Mech. 2022, 16, 1211–1232.

12. Çimen, M. Estimation of daily suspended sediments using support vector machines. Hydrol. Sci. J. 2008, 53, 656–666.

13. Hu, Z.; Karami, H.; Rezaei, A.; DadrasAjirlou, Y.; Piran, M.J.; Band, S.S.; Chau, K.W.; Mosavi, A. Using soft computing and machine learning algorithms to predict the discharge coefficient of curved labyrinth overflows. Eng. Appl. Comput. Fluid Mech. 2021, 15, 1002–1015.

14. Dursun, O.F.; Kaya, N.; Firat, M. Estimating discharge coefficient of semi-elliptical side weir using ANFIS. J. Hydrol. 2012, 426, 55–62.

15. Roushangar, K.; Akhgar, S.; Salmasi, F. Estimating discharge coefficient of stepped spillways under nappe and skimming flow regime using data driven approaches. Flow Meas. Instrum. 2018, 59, 79–87.

16. Liang, W.; Luo, S.; Zhao, G.; Wu, H. Predicting Hard Rock Pillar Stability Using GBDT, XGBoost, and LightGBM Algorithms. Mathematics 2020, 8, 765.

17. Zhang, W.; Yu, J.; Zhao, A.; Zhou, X. Predictive model of cooling load for ice storage air-conditioning system by using GBDT. Energy Rep. 2021, 7, 1588–1597.

18. Qiu, Y.; Zhou, J.; Khandelwal, M.; Yang, H.; Yang, P.; Li, C. Performance evaluation of hybrid WOA-XGBoost, GWO-XGBoost and BO-XGBoost models to predict blast-induced ground vibration. Eng. Comput. 2021, 38, 4145–4162.

19. Afan, H.A.; Ibrahem Ahmed Osman, A.; Essam, Y.; Ahmed, A.N.; Huang, Y.F.; Kisi, O.; Sherif, M.; Sefelnasr, A.; Chau, K.W.; El-Shafie, A. Modeling the fluctuations of groundwater level by employing ensemble deep learning techniques. Eng. Appl. Comput. Fluid Mech. 2021, 15, 1420–1439.

20. Chen, Y.-J.; Chen, Z.-S. A prediction model of wall shear stress for ultra-high-pressure water-jet nozzle based on hybrid BP neural network. Eng. Appl. Comput. Fluid Mech. 2022, 16, 1902–1920.

21. Wu, J.; Ma, D.; Wang, W. Leakage Identification in Water Distribution Networks Based on XGBoost Algorithm. J. Water Resour. Plan. Manag. 2022, 148, 04021107.

22. Altmann, A.; Toloşi, L.; Sander, O.; Lengauer, T. Permutation importance: A corrected feature importance measure. Bioinformatics 2010, 26, 1340–1347.

23. Mi, J.-X.; Li, A.-D.; Zhou, L.-F. Review Study of Interpretation Methods for Future Interpretable Machine Learning. IEEE Access 2020, 8, 191969–191985.

24. Wang, S.; Peng, H.; Liang, S. Prediction of estuarine water quality using interpretable machine learning approach. J. Hydrol. 2022, 605, 127320.

25. Hall, J.W.; Boyce, S.A.; Wang, Y.; Dawson, R.J.; Tarantola, S.; Saltelli, A. Sensitivity Analysis for Hydraulic Models. J. Hydraul. Eng. 2009, 135, 959–969.

26. Feng, D.-C.; Wang, W.J.; Mangalathu, S.; Taciroglu, E. Interpretable XGBoost-SHAP machine-learning model for shear strength prediction of squat RC walls. J. Struct. Eng. 2021, 147, 04021173.

27. Sobol, I.M. Global sensitivity indices for nonlinear mathematical models and their Monte Carlo estimates. Math. Comput. Simul. 2001, 55, 271–280.

28. Vapnik, V. The Nature of Statistical Learning Theory; Springer Science & Business Media: Berlin/Heidelberg, Germany, 1999.

29. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

30. Svetnik, V.; Liaw, A.; Tong, C.; Culberson, J.C.; Sheridan, R.P.; Feuston, B.P. Random forest: A classification and regression tool for compound classification and QSAR modeling. J. Chem. Inf. Comput. Sci. 2003, 43, 1947–1958.

31. Friedman, J.H. Greedy function approximation: A gradient boosting machine. Ann. Stat. 2001, 29, 1189–1232.

32. Chen, T.; Guestrin, C. XGBoost. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

33. Jones, D.R. A taxonomy of global optimization methods based on response surfaces. J. Glob. Optim. 2001, 21, 345–383.

34. Kushner, H.J. A new method of locating the maximum point of an arbitrary multipeak curve in the presence of noise. J. Basic Eng. Mar. 1964, 86, 97–106.

35. Mockus, J.; Tiesis, V.; Zilinskas, A. The application of Bayesian methods for seeking the extremum. Towards Glob. Optim. 1978, 2, 2.

36. Srinivas, N.; Krause, A.; Kakade, S.M.; Seeger, M. Gaussian process optimization in the bandit setting: No regret and experimental design. arXiv 2009, arXiv:0912.3995.

37. Wang, G.C.; Zhang, Q.; Band, S.S.; Dehghani, M.; Chau, K.W.; Tho, Q.T.; Zhu, S.; Samadianfard, S.; Mosavi, A. Monthly and seasonal hydrological drought forecasting using multiple extreme learning machine models. Eng. Appl. Comput. Fluid Mech. 2022, 16, 1364–1381.

38. Singh, V.K.; Panda, K.C.; Sagar, A.; Al-Ansari, N.; Duan, H.F.; Paramaguru, P.K.; Vishwakarma, D.K.; Kumar, A.; Kumar, D.; Kashyap, P.S.; et al. Novel Genetic Algorithm (GA) based hybrid machine learning-pedotransfer Function (ML-PTF) for prediction of spatial pattern of saturated hydraulic conductivity. Eng. Appl. Comput. Fluid Mech. 2022, 16, 1082–1099.

39. Campolongo, F.; Saltelli, A.; Cariboni, J. From screening to quantitative sensitivity analysis. A Unified Approach. Comput. Phys. Commun. 2011, 182, 978–988.

40. Saltelli, A. Making best use of model evaluations to compute sensitivity indices. Comput. Phys. Commun. 2002, 145, 280–297.

41. Saltelli, A.; Annoni, P.; Azzini, I.; Campolongo, F.; Ratto, M.; Tarantola, S. Variance based sensitivity analysis of model output. Design and estimator for the total sensitivity index. Comput. Phys. Commun. 2010, 181, 259–270.

42. Yang, Y.; Yang, Y.; Shuai, Q. The hydraulic and aeration characteristics of low Froude number flow over a step aerator. J. Hydraul. Eng. 2000, 31, 27–31.

| Dataset | α | h (cm) | v (m/s) | Fr | s (cm) | φ | L (cm) | Samples |
|---|---|---|---|---|---|---|---|---|
| Data1 (Zhang) | 0.087, 0.105, 0.122 | 2.5~8.5 | 1.5~6.0 | 2.95~7.77 | 1.5, 2.5, 3.0, 4.0 | 0.1 | 13.5~75.0 | 108 |
| Data2 (Shen) | 0.07, 0.087, 0.096 | 1.25~3.40 | 1.0~1.8 | 2.46~3.90 | 1.0, 2.0, 3.0 | 0.2, 0.143, 0.1 | 4.9~26.5 | 162 |
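The dataset table lists dimensional quantities (h, v, s) next to the dimensionless ones (α, Fr, φ) that the models are later analyzed against. A minimal sketch of that conversion follows. It assumes the conventional open-channel definition Fr = v / sqrt(g·h) and the ratio s/h, since the paper's own definitions are not reproduced in this section. The function names and the constant `G` are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2 (assumed value)


def froude_number(v_m_s, h_m):
    """Conventional Froude number for flow velocity v and flow depth h."""
    return v_m_s / math.sqrt(G * h_m)


def dimensionless_inputs(alpha, v_m_s, h_cm, s_cm, phi):
    """Convert one sample to dimensionless features.

    alpha and phi are passed through unchanged because the table
    already reports them without units.
    """
    h_m = h_cm / 100.0  # the table reports h in centimetres
    return {
        "alpha": alpha,
        "Fr": froude_number(v_m_s, h_m),
        "phi": phi,
        "s/h": s_cm / h_cm,  # both in centimetres, so the ratio is unitless
    }


# Example using values taken from the lower end of the Data1 ranges.
features = dimensionless_inputs(alpha=0.087, v_m_s=1.5, h_cm=2.5, s_cm=1.5, phi=0.1)
```

With these inputs the sketch gives Fr close to 3.03, which sits near the lower bound of the Fr range reported for Data1.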

| Model | Input Configuration: Name | Input Configuration: Features | Training Set Ratio (%) |
|---|---|---|---|
| SVR | input1 | | 90, 80, 70, 60, 50 |
| | input2 | | |
| | input3 | | |

| Model | Hyperparameter 1 | Hyperparameter 2 | Hyperparameter 3 | Time (s) |
|---|---|---|---|---|
| SVR-BO | C = 47.84 | γ = 8.08 | ε = 0.068 | 21.8 |
| RF-BO | n_estimators = 28 | max_depth = 50 | - | 305.1 |
| GBDT-BO | n_estimators = 55 | max_depth = 9 | learning_rate = 0.43 | 29.7 |
| XGBoost-BO | n_estimators = 47 | max_depth = 3 | learning_rate = 0.29 | 145.7 |
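The tuned values in the table above map naturally onto estimator constructor arguments. The sketch below is one possible mapping, assuming scikit-learn and the `xgboost` Python package. The source does not state which libraries were used, and the mapping of γ and ε to `gamma` and `epsilon` is an assumption. The names `TUNED_HYPERPARAMETERS` and `build_estimators` are hypothetical.

```python
# Values copied from the hyperparameter table; the keys are keyword
# names of the assumed scikit-learn / xgboost estimators.
TUNED_HYPERPARAMETERS = {
    "SVR-BO": {"C": 47.84, "gamma": 8.08, "epsilon": 0.068},
    "RF-BO": {"n_estimators": 28, "max_depth": 50},
    "GBDT-BO": {"n_estimators": 55, "max_depth": 9, "learning_rate": 0.43},
    "XGBoost-BO": {"n_estimators": 47, "max_depth": 3, "learning_rate": 0.29},
}


def build_estimators():
    """Instantiate one regressor per row of the table.

    Imports are local so that the dictionary above can be used
    even when the optional libraries are not installed.
    """
    from sklearn.ensemble import GradientBoostingRegressor, RandomForestRegressor
    from sklearn.svm import SVR
    from xgboost import XGBRegressor

    return {
        "SVR-BO": SVR(**TUNED_HYPERPARAMETERS["SVR-BO"]),
        "RF-BO": RandomForestRegressor(**TUNED_HYPERPARAMETERS["RF-BO"]),
        "GBDT-BO": GradientBoostingRegressor(**TUNED_HYPERPARAMETERS["GBDT-BO"]),
        "XGBoost-BO": XGBRegressor(**TUNED_HYPERPARAMETERS["XGBoost-BO"]),
    }
```

Keeping the values in a plain dictionary separates the reported numbers from the library choice, so the same configuration can be reused with a different implementation.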

| Model | Train R² | Train RMSE | Train MAE | Test R² | Test RMSE | Test MAE |
|---|---|---|---|---|---|---|
| SVR | 0.931 | 0.067 | 0.06 | 0.921 | 0.081 | 0.060 |
| SVR-BO | 0.929 | 0.068 | 0.057 | 0.936 | 0.069 | 0.058 |
| RF | 0.989 | 0.0268 | 0.020 | 0.924 | 0.076 | 0.057 |
| RF-BO | 0.989 | 0.0264 | 0.020 | 0.947 | 0.063 | 0.050 |
| GBDT | 0.976 | 0.04 | 0.029 | 0.949 | 0.062 | 0.047 |
| GBDT-BO | 1.000 | 0 | 0 | 0.957 | 0.056 | 0.046 |
| XGBoost | 0.999 | 0.00077 | 0.0016 | 0.921 | 0.077 | 0.055 |
| XGBoost-BO | 0.964 | 0.015 | 0.038 | 0.964 | 0.051 | 0.036 |
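The three indices reported in the table above (R², RMSE, MAE) have standard definitions, sketched here in plain Python. The paper's own formulas are not reproduced in this section, so the definitions below are the conventional ones and the function name `regression_metrics` is hypothetical.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return the coefficient of determination, RMSE, and MAE."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mean_true = sum(y_true) / n
    ss_res = sum(e * e for e in errors)                    # residual sum of squares
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)     # total sum of squares
    return {
        "R2": 1.0 - ss_res / ss_tot,
        "RMSE": math.sqrt(ss_res / n),
        "MAE": sum(abs(e) for e in errors) / n,
    }


# Small synthetic example (not data from the paper).
metrics = regression_metrics([1.0, 2.0, 3.0, 4.0], [1.5, 2.0, 2.5, 4.0])
```

Because RMSE is never smaller than MAE on the same data, the pair offers a quick consistency check when reading a metrics table.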

| Reference | Equation | Parameters | R² |
|---|---|---|---|
| Rutschmann, 1990 [4] | | , emergence angle | 0.617 |
| Chanson, 2010 [5] | | | 0.758 |
| Yang, 2000 [42] | | | 0.945 |
| Wu, 2008 [3] | | | 0.948 |
| Pfister, 2010 [6] | | | 0.868 |

| Model | α (Total Index) | α (First Index) | Fr (Total Index) | Fr (First Index) | φ (Total Index) | φ (First Index) | s/h (Total Index) | s/h (First Index) |
|---|---|---|---|---|---|---|---|---|
| SVR-BO | 0.235 | 0.0057 | 0.711 | 0.091 | 0.126 | 0.038 | 0.646 | 0.20 |
| RF-BO | 0.044 | 0.020 | 0.813 | 0.658 | 0.020 | 0.008 | 0.263 | 0.152 |
| GBDT-BO | 0.049 | 0.021 | 0.789 | 0.607 | 0.057 | 0.016 | 0.292 | 0.145 |
| XGBoost-BO | 0.064 | 0.023 | 0.703 | 0.484 | 0.123 | 0.098 | 0.332 | 0.171 |

| Model | α, Fr | α, φ | α, s/h | Fr, φ | Fr, s/h | φ, s/h |
|---|---|---|---|---|---|---|
| SVR-BO | 0.143 | 0.057 | 0.068 | 0.041 | 0.39 | 0.014 |
| RF-BO | 0.017 | 0.0 | 0.036 | 0.013 | 0.100 | 0.006 |
| GBDT-BO | 0.010 | 0.0 | 0.0 | 0.020 | 0.120 | 0.010 |
| XGBoost-BO | 0.020 | 0.0 | 0.014 | 0.030 | 0.140 | 0.0 |
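First-order and total Sobol indices such as those tabulated above are typically estimated by Monte Carlo sampling. The sketch below uses the estimators described by Saltelli et al. (first-order) and Jansen (total) with plain pseudo-random sampling on the unit hypercube. It is an illustration under those assumptions, not the sampling scheme used in the paper, and the function name `sobol_indices` is hypothetical. In practice `model` would wrap a trained regressor's prediction, with inputs rescaled from [0, 1] to each feature's range.

```python
import random


def sobol_indices(model, n_inputs, n_samples=20000, seed=0):
    """Estimate first-order and total Sobol indices for inputs in [0, 1]."""
    rng = random.Random(seed)
    # Two independent sample matrices A and B.
    a_rows = [[rng.random() for _ in range(n_inputs)] for _ in range(n_samples)]
    b_rows = [[rng.random() for _ in range(n_inputs)] for _ in range(n_samples)]
    f_a = [model(row) for row in a_rows]
    f_b = [model(row) for row in b_rows]

    all_f = f_a + f_b
    f_mean = sum(all_f) / len(all_f)
    variance = sum((f - f_mean) ** 2 for f in all_f) / len(all_f)

    first, total = [], []
    for i in range(n_inputs):
        # Matrix A with its ith column replaced by the ith column of B.
        f_ab = [model(a[:i] + [b[i]] + a[i + 1:]) for a, b in zip(a_rows, b_rows)]
        # First-order effect (output centred to reduce estimator variance).
        v_i = sum((fb - f_mean) * (fab - fa)
                  for fb, fab, fa in zip(f_b, f_ab, f_a)) / n_samples
        # Total effect (Jansen estimator).
        vt_i = sum((fa - fab) ** 2 for fa, fab in zip(f_a, f_ab)) / (2 * n_samples)
        first.append(v_i / variance)
        total.append(vt_i / variance)
    return first, total


# Additive test function: analytic indices are 0.2 and 0.8 for both measures.
first_order, total_order = sobol_indices(lambda x: x[0] + 2.0 * x[1], n_inputs=2)
```

For an additive function the first-order and total indices coincide. A gap between them, as in the table above, indicates interaction effects between inputs.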


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Guo, G.; Li, S.; Liu, Y.; Cao, Z.; Deng, Y. Prediction of Cavity Length Using an Interpretable Ensemble Learning Approach. Int. J. Environ. Res. Public Health 2023, 20, 702. https://doi.org/10.3390/ijerph20010702
