The Two-Stage Ensemble Learning Model Based on Aggregated Facial Features in Screening for Fetal Genetic Diseases

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Acquisition

2.2. Data Preprocessing

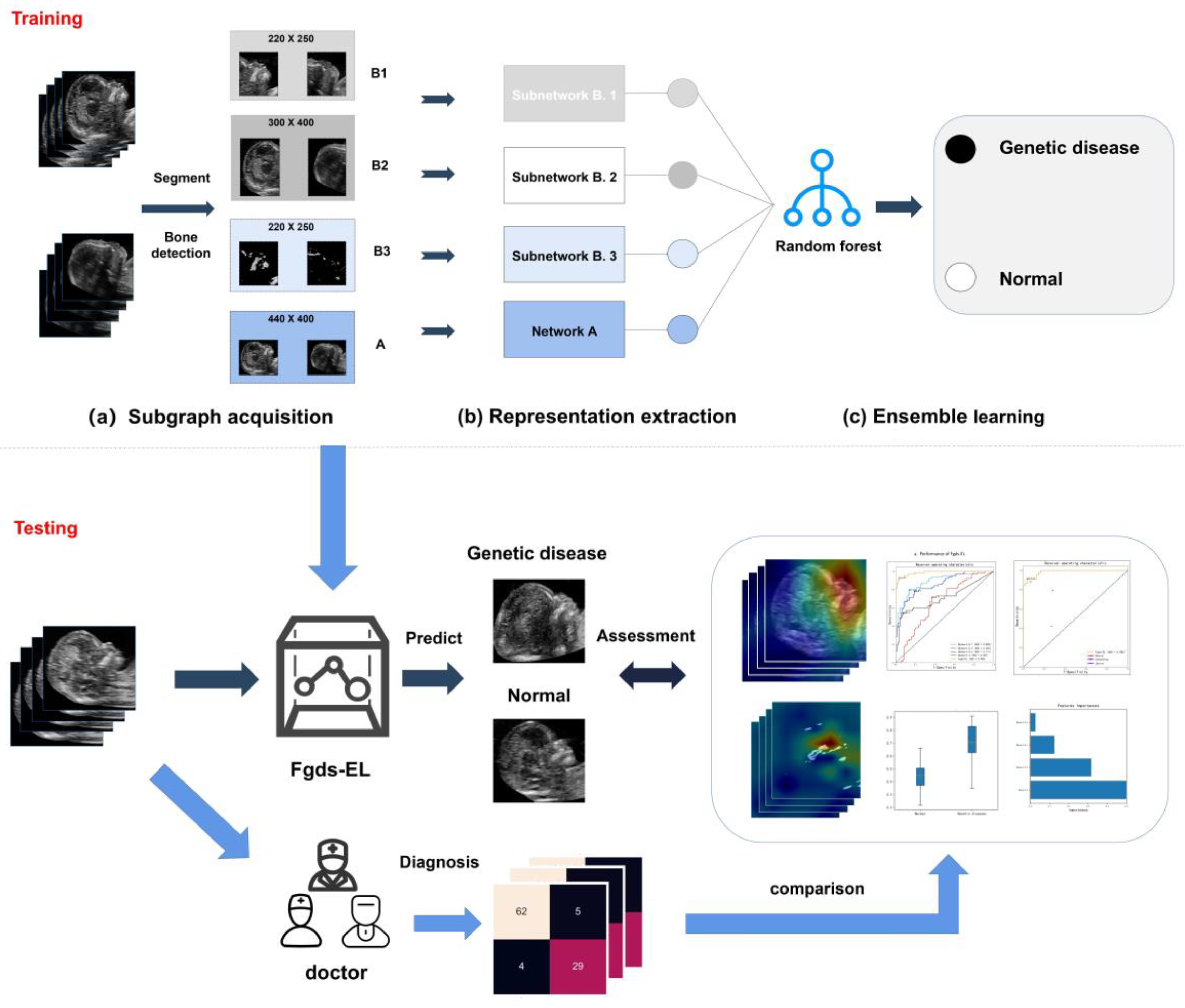

2.3. Ensemble Learning Architecture

2.3.1. Subgraph Acquisition

2.3.2. Fetal Facial Representation Extraction

2.3.3. Ensemble Learning

2.4. Performance Evaluation

2.5. Performance Comparison between Fgds-EL and Prenatal Diagnostic Doctors

2.6. Heat Map Generation

3. Results

3.1. Dataset Characteristics

3.2. Performance of the Fgds-EL and Each Subnetwork

3.3. Performance of the Fgds-EL and Other Deep Learning Algorithms

3.4. Importance Score from Fgds-EL

3.5. Fgds-EL Detects Facial Abnormalities by Subnetworks’ Heatmaps

3.6. Fgds-EL’s Performance Is on Par with the Senior Sonographers

4. Discussion

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | Development (Training) Set | Test Set |
|---|---|---|
| No. of pregnancies with genetic anomaly | 111 | |
| No. of normal pregnancies | 556 | |
| Ultrasound equipment | GE Voluson E10 | |
| Institution | Guangzhou Women and Children’s Medical Center | |
| Total no. of qualified ultrasound images | 776 | 156 |
| Genetic disease images | 189 | 63 |
| Normal pregnancy images | 587 | 93 |

| Model | AUROC (95% CI) | Sensitivity (95% CI) | Specificity (95% CI) | F1 |
|---|---|---|---|---|
| Fgds-EL | 0.986 (0.984–0.987) | 0.92 (0.82–0.97) | 0.97 (0.90–0.99) | 0.935 |
| Network A | 0.857 (0.849–0.861) | 0.76 (0.64–0.86) | 0.81 (0.71–0.88) | 0.744 |
| Network B.1 | 0.889 (0.884–0.896) | 0.78 (0.65–0.87) | 0.86 (0.77–0.92) | 0.784 |
| Network B.2 | 0.660 (0.654–0.671) | 0.70 (0.57–0.80) | 0.63 (0.52–0.72) | 0.620 |
| Network B.3 | 0.711 (0.703–0.721) | 0.52 (0.40–0.65) | 0.93 (0.85–0.97) | 0.641 |
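The sketch below is a consistency check on the Fgds-EL row. It recomputes sensitivity, specificity, F1 and 95% confidence intervals from a confusion matrix, and it depends on two assumptions that the article does not state:

- **Counts.** It uses 58 of the 63 genetic-disease test images flagged and 90 of the 93 normal test images cleared. These are hypothetical values, back-calculated from the rounded rates and the test-set sizes in the dataset table.
- **Interval method.** It assumes the Wilson score interval with continuity correction (Newcombe's method 4).

Under those assumptions the sketch reproduces sensitivity 0.92 (0.82–0.97), specificity 0.97 (0.90–0.99) and F1 0.935. The AUROC intervals cannot be checked this way, because they depend on the per-image scores.

```python
from __future__ import annotations

import math


def f1_from_counts(tp: int, fp: int, fn: int) -> float:
    """F1 score: harmonic mean of precision and recall (sensitivity)."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def wilson_cc_interval(k: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval with continuity correction for the proportion k/n.

    Follows Newcombe (1998), method 4; z = 1.96 gives a two-sided 95% interval.
    Using this method for the article's CIs is an assumption.
    """
    p = k / n
    q = 1 - p
    denom = 2 * (n + z * z)
    if k == 0:
        lower = 0.0
    else:
        lower = (2 * n * p + z * z - 1
                 - z * math.sqrt(z * z - 2 - 1 / n + 4 * p * (n * q + 1))) / denom
    if k == n:
        upper = 1.0
    else:
        upper = (2 * n * p + z * z + 1
                 + z * math.sqrt(z * z + 2 - 1 / n + 4 * p * (n * q - 1))) / denom
    return max(0.0, lower), min(1.0, upper)


if __name__ == "__main__":
    # Test-set sizes come from the dataset table; tp and tn are HYPOTHETICAL,
    # back-calculated from the reported rates rather than reported by the authors.
    n_pos, n_neg = 63, 93
    tp, tn = 58, 90
    fn, fp = n_pos - tp, n_neg - tn

    sens, spec = tp / n_pos, tn / n_neg
    s_lo, s_hi = wilson_cc_interval(tp, n_pos)
    c_lo, c_hi = wilson_cc_interval(tn, n_neg)
    print(f"Sensitivity {sens:.2f} ({s_lo:.2f}-{s_hi:.2f})")
    print(f"Specificity {spec:.2f} ({c_lo:.2f}-{c_hi:.2f})")
    print(f"F1 {f1_from_counts(tp, fp, fn):.3f}")
```

F1 can be checked here without a precision column. With these counts, F1 = 2·58/(2·58 + 3 + 5) = 116/124 ≈ 0.935, which equals the harmonic-mean form computed by `f1_from_counts`.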

| Model | AUROC (95% CI) | Sensitivity (95% CI) | Specificity (95% CI) | F1 | Loading Time (s) | Prediction Time (s) |
|---|---|---|---|---|---|---|
| Fgds-EL | 0.986 (0.984–0.987) | 0.92 (0.82–0.97) | 0.97 (0.90–0.99) | 0.935 | 2.638 | 0.942 |
| ResNet-50 | 0.870 (0.862–0.875) | 0.76 (0.64–0.86) | 0.85 (0.76–0.91) | 0.768 | 1.535 | 0.503 |
| DenseNet-169 | 0.920 (0.915–0.926) | 0.89 (0.78–0.95) | 0.88 (0.80–0.94) | 0.862 | 3.167 | 1.446 |
| DenseNet-201 | 0.909 (0.907–0.915) | 0.73 (0.60–0.83) | 0.91 (0.83–0.96) | 0.786 | 3.843 | 1.744 |
| VGG-16 | 0.818 (0.812–0.828) | 0.70 (0.57–0.80) | 0.95 (0.87–0.98) | 0.786 | 1.377 | 0.102 |
| ResNeSt-50 | 0.959 (0.956–0.963) | 0.889 (0.778–0.950) | 0.883 (0.796–0.937) | 0.862 | 24.457 | 2.232 |
| EfficientFormer | 0.890 (0.883–0.894) | 0.714 (0.585–0.818) | 0.926 (0.848–0.967) | 0.783 | 5.050 | 0.956 |
| Swin Transformer | 0.915 (0.912–0.921) | 0.857 (0.741–0.929) | 0.830 (0.735–0.897) | 0.812 | 0.931 | 0.211 |
| RepLKNet | 0.916 (0.912–0.922) | 0.857 (0.741–0.929) | 0.840 (0.747–0.905) | 0.818 | 34.128 | 1.534 |

| Metric | Fgds-EL | Junior | Attending | Senior |
|---|---|---|---|---|
| Accuracy | 0.93 | 0.63 | 0.74 | 0.91 |
| Sensitivity (95% CI) | 0.91 (0.78–0.97) | 0.58 (0.42–0.72) | 0.84 (0.70–0.93) | 0.91 (0.78–0.97) |
| Specificity (95% CI) | 0.95 (0.84–0.98) | 0.67 (0.53–0.79) | 0.65 (0.51–0.77) | 0.91 (0.79–0.97) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tang, J.; Han, J.; Xie, B.; Xue, J.; Zhou, H.; Jiang, Y.; Hu, L.; Chen, C.; Zhang, K.; Zhu, F.; et al. The Two-Stage Ensemble Learning Model Based on Aggregated Facial Features in Screening for Fetal Genetic Diseases. Int. J. Environ. Res. Public Health 2023, 20, 2377. https://doi.org/10.3390/ijerph20032377

Tang J, Han J, Xie B, Xue J, Zhou H, Jiang Y, Hu L, Chen C, Zhang K, Zhu F, et al. The Two-Stage Ensemble Learning Model Based on Aggregated Facial Features in Screening for Fetal Genetic Diseases. International Journal of Environmental Research and Public Health. 2023; 20(3):2377. https://doi.org/10.3390/ijerph20032377

Chicago/Turabian Style: Tang, Jiajie, Jin Han, Bingbing Xie, Jiaxin Xue, Hang Zhou, Yuxuan Jiang, Lianting Hu, Caiyuan Chen, Kanghui Zhang, Fanfan Zhu, et al. 2023. "The Two-Stage Ensemble Learning Model Based on Aggregated Facial Features in Screening for Fetal Genetic Diseases" International Journal of Environmental Research and Public Health 20, no. 3: 2377. https://doi.org/10.3390/ijerph20032377

APA Style: Tang, J., Han, J., Xie, B., Xue, J., Zhou, H., Jiang, Y., Hu, L., Chen, C., Zhang, K., Zhu, F., & Lu, L. (2023). The Two-Stage Ensemble Learning Model Based on Aggregated Facial Features in Screening for Fetal Genetic Diseases. International Journal of Environmental Research and Public Health, 20(3), 2377. https://doi.org/10.3390/ijerph20032377