When Artificial Intelligence Voices Human Concerns: The Paradoxical Effects of AI Voice on Climate Risk Perception and Pro-Environmental Behavioral Intention

Abstract

1. Introduction

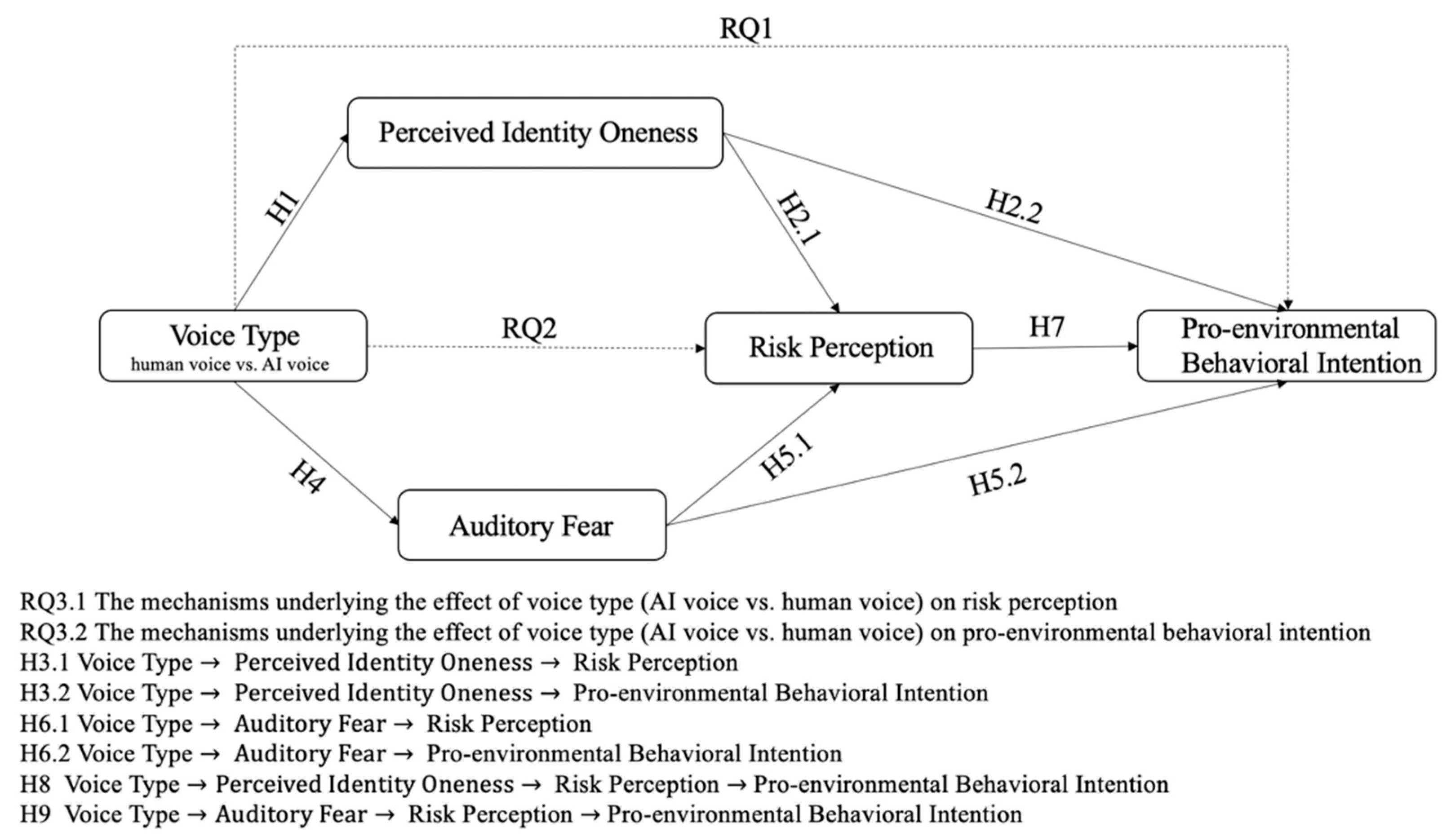

2. Theoretical Background and Hypotheses Development

2.1. Persuasive Effects of AI Voice and Human Voice

2.2. Social Heuristics: The Mediating Role of Perceived Identity Oneness

2.3. Affect Heuristics: The Mediating Role of Auditory Fear

2.4. Parallel Mediation Effect of Perceived Identity Oneness and Auditory Fear

3. Materials and Methods

3.1. Participants

3.2. Stimuli

3.3. Procedure

3.4. Measures

3.4.1. Manipulation Check

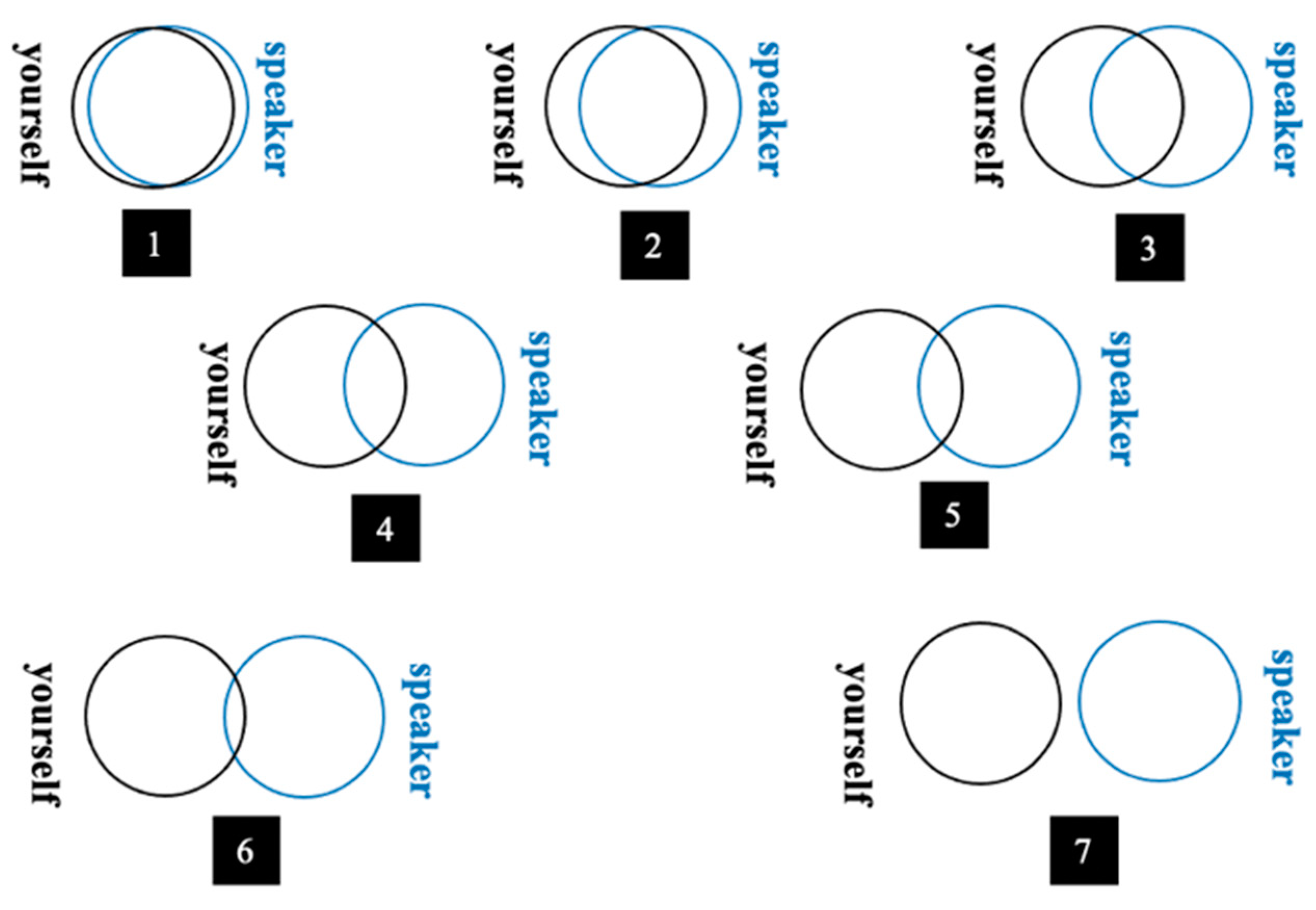

3.4.2. Perceived Identity Oneness

3.4.3. Auditory Fear

3.4.4. Risk Perception

3.4.5. Pro-Environmental Behavioral Intention

3.5. Statistical Analyses

4. Results

4.1. Preliminary Analyses

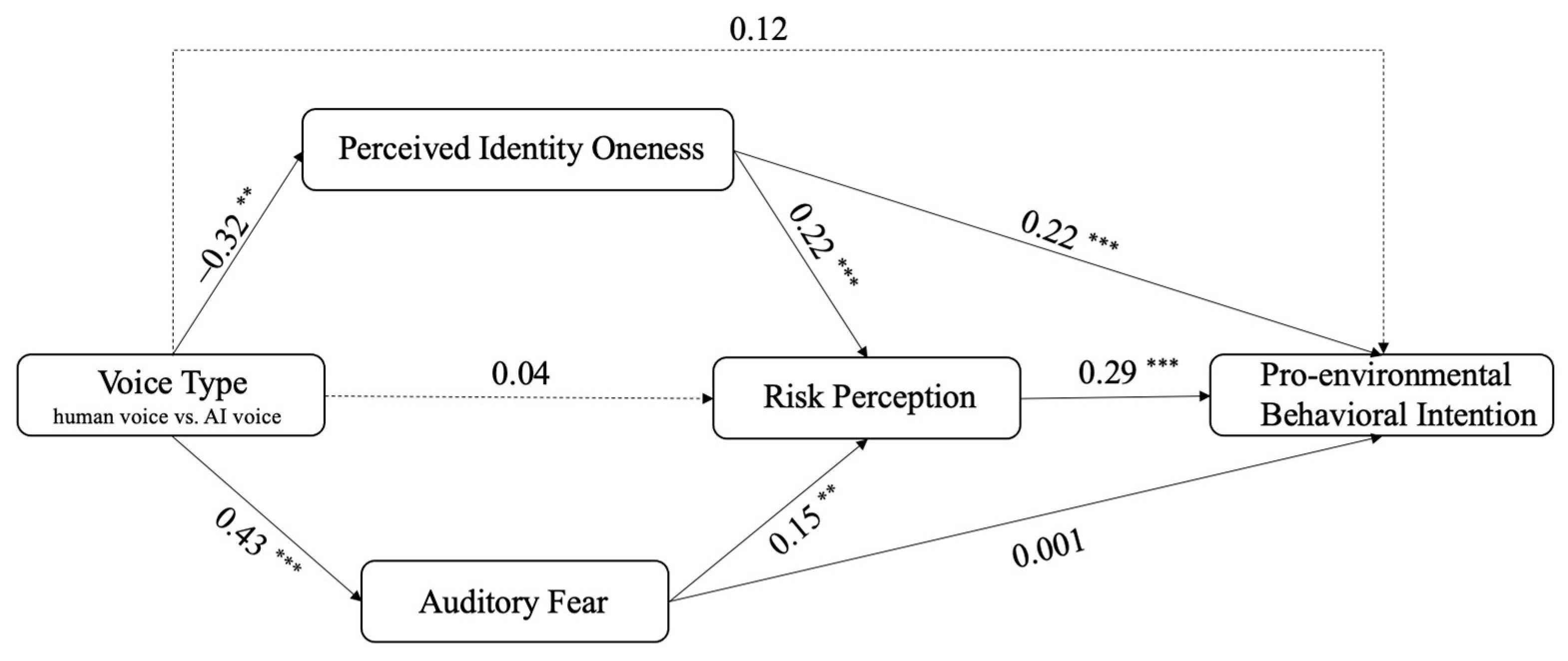

4.2. Main Effects

4.3. Parallel Mediation Effects

4.4. Serial Mediation Analyses

5. Discussion

5.1. Theoretical Implications

5.2. Practical Implications

5.3. Limitations and Future Research

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations


| Measure | Item | Frequency | Percentage (%) |
|---|---|---|---|
| Gender | Male | 186 | 46.9 |
| | Female | 211 | 53.1 |
| Age | 15–18 | 4 | 1.0 |
| | 19–24 | 194 | 48.9 |
| | 25–34 | 165 | 42.5 |
| | 35–44 | 24 | 6.1 |
| | 45–65 | 10 | 2.5 |
| Education level | Middle school or below | 4 | 1.0 |
| | High school | 8 | 2.0 |
| | Bachelor or vocational school | 355 | 89.4 |
| | Master or PhD | 30 | 7.6 |
| Monthly income | Less than 1000 RMB | 26 | 6.5 |
| | 1000–3000 RMB | 116 | 29.2 |
| | 3001–6000 RMB | 99 | 24.9 |
| | 6001–10,000 RMB | 115 | 29.0 |
| | More than 10,000 RMB | 41 | 10.3 |

| News Content: “Climate Change Is Closely Related to You and Me! Foreign Media Anticipated the Impacts of Global Warming.” |
|---|
| Global warming describes the worldwide rise in temperature resulting from the greenhouse effect. Currently, global warming is accelerating and is faster than ever before. According to the World Meteorological Organization (WMO), the Earth is nearly 1 °C warmer than it was in the early industrial age. At this rate, the global temperature will be 3 to 5 °C warmer than it was in the pre-industrial age by 2100. Slight as this increase might seem, the Intergovernmental Panel on Climate Change (IPCC) noted that humans would face catastrophic consequences if no effective countermeasures were taken. For instance, sea levels would rise; some islands and coastal lowlands would be inundated; the temperature and acidity of the seas would increase; agriculture and animal husbandry would face great challenges. Indeed, climate change is relevant to everyone who lives on the planet. Each individual would be affected by global warming if no effective actions were taken. Thus, everyone should contribute to the mitigation of climate change. |

| Predictors | Model 1 | Model 2 | Model 3 | Model 4 | Model 5 |
|---|---|---|---|---|---|
| Outcome | PIO | Auditory Fear | Risk Perception | PEBI | PEBI |
| | β (SE) | β (SE) | β (SE) | β (SE) | β (SE) |
| Voice Type | −0.32 (0.10) ** | 0.43 (0.10) *** | 0.04 (0.10) | 0.12 (0.10) | 0.11 (0.10) |
| PIO | | | 0.22 (0.05) *** | 0.22 (0.05) *** | 0.16 (0.05) ** |
| Auditory Fear | | | 0.15 (0.05) ** | 0.001 (0.05) | −0.04 (0.05) |
| Risk Perception | | | | | 0.29 (0.05) *** |
| R² | 0.03 | 0.05 | 0.08 | 0.05 | 0.12 |
| F | 10.64 ** | 19.09 *** | 11.34 *** | 6.58 *** | 13.78 *** |
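The opposing signs in the regression table above can be made concrete with a small product-of-coefficients calculation. The sketch below is only illustrative arithmetic on the reported point estimates. It assumes that Models 1 and 2 regress each mediator on Voice Type and that Model 3 regresses Risk Perception on Voice Type and both mediators. It is not the authors' estimation procedure and says nothing about confidence intervals or significance. The names `A_PATHS`, `B_PATHS`, `indirect_effects`, and `net_indirect` are hypothetical.

```python
# Point estimates copied from the regression table above.
# Assumption: Models 1-2 give the Voice Type -> mediator paths (a),
# and Model 3 gives the mediator -> Risk Perception paths (b).
A_PATHS = {"PIO": -0.32, "Auditory Fear": 0.43}
B_PATHS = {"PIO": 0.22, "Auditory Fear": 0.15}


def indirect_effects(a_paths, b_paths):
    """Return the a*b indirect effect of Voice Type through each mediator."""
    return {name: a_paths[name] * b_paths[name] for name in a_paths}


def net_indirect(effects):
    """Sum the indirect effects across the parallel mediators."""
    return sum(effects.values())


if __name__ == "__main__":
    effects = indirect_effects(A_PATHS, B_PATHS)
    for name, value in effects.items():
        print(f"Voice Type -> {name} -> Risk Perception: {value:+.4f}")
    print(f"Net indirect effect: {net_indirect(effects):+.4f}")
```

Under these assumptions the path through PIO is about −0.07 and the path through Auditory Fear is about +0.06, so the two routes nearly cancel. That pattern is consistent with the small, non-significant Voice Type coefficient for Risk Perception in Model 3.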


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ni, B.; Wu, F.; Huang, Q. When Artificial Intelligence Voices Human Concerns: The Paradoxical Effects of AI Voice on Climate Risk Perception and Pro-Environmental Behavioral Intention. Int. J. Environ. Res. Public Health 2023, 20, 3772. https://doi.org/10.3390/ijerph20043772
