AI-Enhanced Healthcare: Integrating ChatGPT-4 in ePROs for Improved Oncology Care and Decision-Making: A Pilot Evaluation

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Collection and Preparation

2.2. ChatGPT-4 Prompt

2.3. Evaluation Workflow

3. Results

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


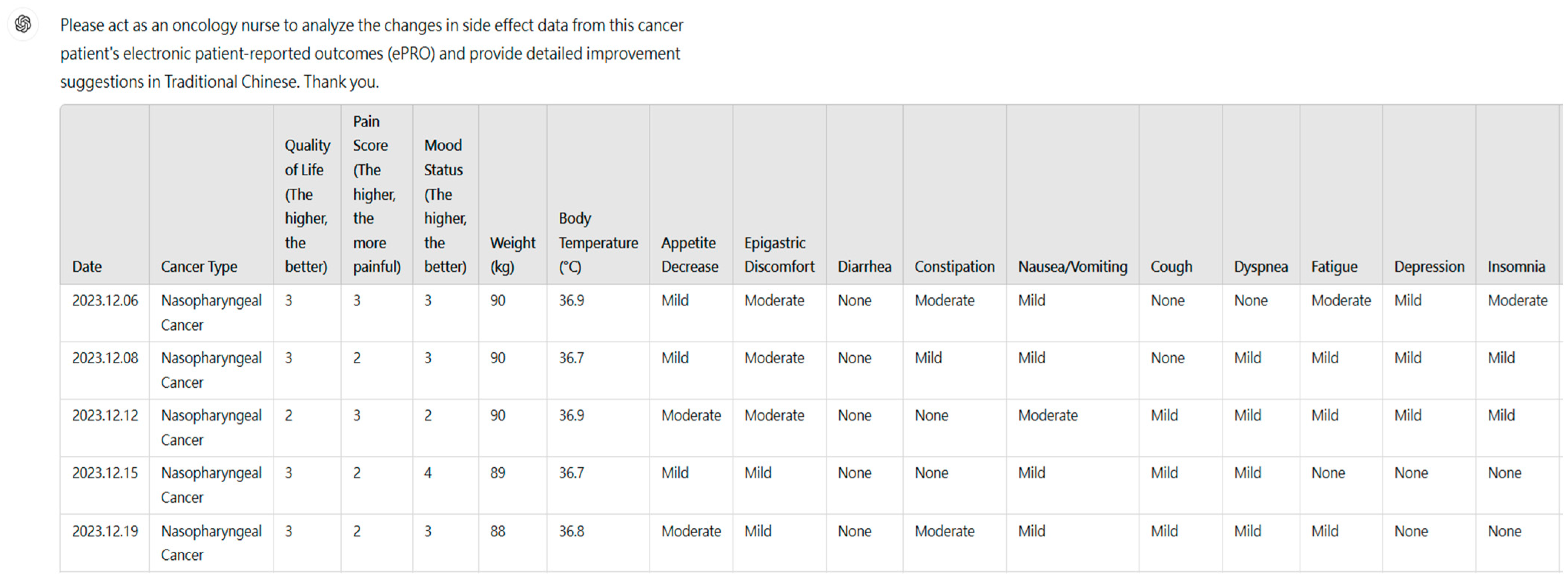

| Patient | ChatGPT-4 Responses of Acting as Nurse | Example of the Expertise Evaluations |
|---|---|---|
| Nasopharyngeal cancer patient’s ePRO data | Analysis of ePRO Side Effect Data; Improvement Suggestions | One oncologist: Completeness 8; Accuracy 8; Minimal risk to the patient 9; Empathetic response 6; Emotional support 6; Improvement in patient communication 8; Reduction in medical care stress 8; Increase in health literacy 7. One dietitian: Completeness 8; Accuracy 8; Minimal risk to the patient 9; Empathetic response 7; Emotional support 6; Improvement in patient communication 7; Reduction in medical care stress 8; Increase in health literacy 8. One nurse: Completeness 9; Accuracy 9; Minimal risk to the patient 9; Empathetic response 7; Emotional support 6; Improvement in patient communication 8; Reduction in medical care stress 8; Increase in health literacy 8. |
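As a worked check on the example evaluation above, the sketch below averages each evaluator's eight ratings and finds the criterion with the lowest mean across the three evaluators. The scores are copied from the table; the short criterion labels and the helper names `evaluator_means` and `criterion_means` are illustrative only and do not describe the study's own analysis.

```python
"""Summarize the three expert ratings of the example nasopharyngeal-cancer
response: each evaluator's mean over the eight criteria, and the criterion
with the lowest mean across evaluators. Helper names are hypothetical."""

from statistics import mean

CRITERIA = (
    "Completeness",
    "Accuracy",
    "Minimal risk",
    "Empathetic response",
    "Emotional support",
    "Improvement in patient communication",
    "Reduction in medical care stress",
    "Increase in health literacy",
)

# Ratings (0-10) in the same order as CRITERIA, copied from the table.
ratings = {
    "Oncologist": (8, 8, 9, 6, 6, 8, 8, 7),
    "Dietitian": (8, 8, 9, 7, 6, 7, 8, 8),
    "Nurse": (9, 9, 9, 7, 6, 8, 8, 8),
}


def evaluator_means(scores):
    """Mean rating per evaluator across all criteria."""
    return {who: mean(vals) for who, vals in scores.items()}


def criterion_means(scores):
    """Mean rating per criterion across all evaluators."""
    columns = zip(*scores.values())  # one tuple of evaluator scores per criterion
    return {name: mean(col) for name, col in zip(CRITERIA, columns)}


if __name__ == "__main__":
    print(evaluator_means(ratings))
    by_criterion = criterion_means(ratings)
    weakest = min(by_criterion, key=by_criterion.get)
    print(f"Lowest-rated criterion: {weakest} ({by_criterion[weakest]:.2f})")
```

With these ratings the evaluator means are 7.5 (oncologist), 7.625 (dietitian) and 8 (nurse), and emotional support is the lowest-rated criterion, scored 6 by every evaluator.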

| Characteristics | Numbers |
|---|---|
| Cancer type | |
| Breast cancer | 8 |
| Head and neck cancer | 7 |
| Lung cancer | 3 |
| Prostate cancer | 2 |
| Pancreatic cancer | 2 |
| Lymphoma | 2 |
| Renal cell carcinoma | 2 |
| Uterine sarcoma | 2 |
| Endometrial cancer | 2 |
| Stage | |
| I | 5 |
| II | 7 |
| III | 8 |
| IV | 10 |
| Treatment modality | |
| Chemotherapy | 12 |
| Concurrent chemoradiotherapy | 8 |
| Radiotherapy | 5 |
| Targeted therapy | 5 |
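The counts in each block of the characteristics table sum to 30. Assuming each patient appears exactly once per block (the table itself does not state the cohort size), the short sketch below converts the counts to percentages of that total. The dictionary layout and the `summarize` helper are hypothetical conveniences, not part of the study.

```python
"""Tally the patient-characteristics table and express each count as a
percentage of its category total. Treating each category total as the
cohort size is an assumption; `summarize` is a hypothetical helper."""

characteristics = {
    "Cancer type": {
        "Breast cancer": 8,
        "Head and neck cancer": 7,
        "Lung cancer": 3,
        "Prostate cancer": 2,
        "Pancreatic cancer": 2,
        "Lymphoma": 2,
        "Renal cell carcinoma": 2,
        "Uterine sarcoma": 2,
        "Endometrial cancer": 2,
    },
    "Stage": {"I": 5, "II": 7, "III": 8, "IV": 10},
    "Treatment modality": {
        "Chemotherapy": 12,
        "Concurrent chemoradiotherapy": 8,
        "Radiotherapy": 5,
        "Targeted therapy": 5,
    },
}


def summarize(table):
    """Return {category: (total, {item: percent})}, percents to one decimal."""
    summary = {}
    for category, counts in table.items():
        total = sum(counts.values())
        percents = {item: round(100 * n / total, 1) for item, n in counts.items()}
        summary[category] = (total, percents)
    return summary


if __name__ == "__main__":
    for category, (total, percents) in summarize(characteristics).items():
        print(f"{category} (n = {total})")
        for item, pct in percents.items():
            print(f"  {item}: {pct}%")
```

For example, breast cancer accounts for 26.7% of the cohort, stage IV for 33.3%, and chemotherapy for 40.0%.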

| Criterion, mean (range) | Act as Oncologist | Act as Dietitian | Act as Nurse | p-Value |
|---|---|---|---|---|
| Completeness | 7.3 (4–10) | 7.2 (6–10) | 7.3 (5–9) | p = 0.881 |
| Accuracy | 7.6 (3–9) | 7.7 (4–9) | 7.7 (0–9) | p = 0.149 |
| Minimal risk | 9.4 (7–10) | 9.2 (7–10) | 9.5 (7–10) | p = 0.507 |
| Empathetic response | 5.0 (0–9) | 6.0 (4–9) | 5.4 (1–10) | p = 0.187 |
| Emotional support | 4.8 (0–9) | 5.9 (2–9) | 5.3 (0–10) | p = 0.101 |
| Improvement in patient communication efficiency | 6.7 (1–9) | 7.3 (2–9) | 7.0 (1–9) | p = 0.242 |
| Reduction in medical care stress | 7.2 (1–10) | 7.6 (2–10) | 7.8 (1–10) | p = 0.197 |
| Increase in health literacy | 6.9 (2–10) | 7.6 (2–10) | 7.3 (3–10) | p = 0.08 |
| Average score | 6.85 | 7.31 | 7.16 | |
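The "Average score" row appears to be the unweighted mean of the eight per-criterion means for each simulated role. The sketch below recomputes it under that assumption, using the rounded means shown in the table as inputs; the `average_scores` helper is hypothetical and is not the authors' analysis code.

```python
"""Recompute the 'Average score' row as the unweighted mean of the eight
per-criterion mean scores for each simulated role. Assumes a simple mean
across criteria; inputs are the rounded means from the table."""

from statistics import mean

CRITERIA = [
    "Completeness",
    "Accuracy",
    "Minimal risk",
    "Empathetic response",
    "Emotional support",
    "Improvement in patient communication efficiency",
    "Reduction in medical care stress",
    "Increase in health literacy",
]

# Per-criterion mean scores (0-10 scale), in the same order as CRITERIA.
role_means = {
    "Oncologist": [7.3, 7.6, 9.4, 5.0, 4.8, 6.7, 7.2, 6.9],
    "Dietitian": [7.2, 7.7, 9.2, 6.0, 5.9, 7.3, 7.6, 7.6],
    "Nurse": [7.3, 7.7, 9.5, 5.4, 5.3, 7.0, 7.8, 7.3],
}


def average_scores(means_by_role):
    """Return the unweighted mean across criteria for each role."""
    for role, values in means_by_role.items():
        if len(values) != len(CRITERIA):
            raise ValueError(f"{role}: expected {len(CRITERIA)} scores, got {len(values)}")
    return {role: mean(values) for role, values in means_by_role.items()}


if __name__ == "__main__":
    for role, avg in average_scores(role_means).items():
        print(f"{role}: {avg:.4f}")
```

Recomputed from the rounded means, the dietitian (7.3125) and nurse (7.1625) averages match the reported 7.31 and 7.16, while the oncologist average comes to about 6.86 rather than 6.85, a small gap consistent with rounding of the underlying per-criterion means.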

| ChatGPT-4 Role Simulation | ChatGPT-4 Evaluations and Responses |
|---|---|
| Oncologist | Evaluation (radiation dermatitis): Emerges on 12/19 as Grade 1 and persists but does not progress to a higher grade. Grade 1 dermatitis usually involves mild erythema or dryness. Response (skin hygiene): Cleanse with lukewarm water and mild soap, gently pat dry. Apply physician-recommended topical agents (e.g., moisturizing lotions, low-potency steroids) if needed. Skin protection: Avoid tight clothing or harsh chemicals. Monitor daily for changes such as increased redness or peeling. |
| Dietitian | Evaluation (weight changes): Decrease from 90 kg to 87 kg suggests progressive weight loss, potentially due to moderate appetite decrease and persistent GI issues (e.g., constipation). Ongoing malnutrition risk warrants nutritional intervention. Response (nutritional support): Calorie-dense diet: Incorporate high-calorie, high-protein options (e.g., lean meats, dairy, beans) to address weight loss and support healing. Small frequent meals: Help the patient manage appetite decrease by dividing meals into smaller portions throughout the day. This strategy can also ease epigastric discomfort. Nutritional supplements: If food intake remains insufficient, consider oral supplements or consult a dietitian for a tailored meal plan. |
| Nurse | Evaluation (fatigue): Fatigue is consistently reported as “Mild” to “Moderate”, with no days marked as “None”. Persistent fatigue, coupled with moderate levels of insomnia (e.g., 6 December 2023), may further impact QoL and mood. Response (fatigue): Encourage light physical activity (e.g., short walks) and prioritize energy-conserving strategies to mitigate fatigue. Refer the patient for counseling or psychosocial support to address mood dips and depression. Sleep hygiene education (e.g., regular sleep schedule, limiting screen time before bed) may help alleviate insomnia. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liao, C.; Chu, C.; Lien, M.; Wu, Y.; Wang, T. AI-Enhanced Healthcare: Integrating ChatGPT-4 in ePROs for Improved Oncology Care and Decision-Making: A Pilot Evaluation. Curr. Oncol. 2025, 32, 7. https://doi.org/10.3390/curroncol32010007

Liao C, Chu C, Lien M, Wu Y, Wang T. AI-Enhanced Healthcare: Integrating ChatGPT-4 in ePROs for Improved Oncology Care and Decision-Making: A Pilot Evaluation. Current Oncology. 2025; 32(1):7. https://doi.org/10.3390/curroncol32010007

Chicago/Turabian Style: Liao, Chihying, Chinnan Chu, Mingyu Lien, Yaochung Wu, and Tihao Wang. 2025. "AI-Enhanced Healthcare: Integrating ChatGPT-4 in ePROs for Improved Oncology Care and Decision-Making: A Pilot Evaluation" Current Oncology 32, no. 1: 7. https://doi.org/10.3390/curroncol32010007

APA Style: Liao, C., Chu, C., Lien, M., Wu, Y., & Wang, T. (2025). AI-Enhanced Healthcare: Integrating ChatGPT-4 in ePROs for Improved Oncology Care and Decision-Making: A Pilot Evaluation. Current Oncology, 32(1), 7. https://doi.org/10.3390/curroncol32010007