Virtual Intelligence: A Systematic Review of the Development of Neural Networks in Brain Simulation Units

Abstract

1. Introduction

- A. The causes, predispositions or internal properties of a subject’s mental states can be treated as intrinsic properties. Material descriptions aim to develop a pure neuroscience, set apart from the problems of mind raised by Penrose [1].

- B. Activities can be translated by creating programming languages that seek to interpret blocks of brain behaviour scientifically [10].

- C.

2. Methods and Results

- Programming languages capable of simulating brain behaviour.

- Brain plasticity and learning.

- Processing and execution of cognitive tasks in real time.

2.1. Research Question and Objectives

- A. Identify the primary research into developing neural networks for brain stimulation of conscious activities.

- B. Determine the key concepts within the research question for the article search.

- C. Determine the exclusion or inclusion factors for the article search.

2.2. Inclusion and Exclusion Criteria

- IEEE Xplore (https://ieeexplore-ieee-org.wdg.biblio.udg.mx:8443/Xplore/home.jsp) (accessed on 12 August 2022);

- Association for Computing Machinery (ACM) Digital Library (https://dl-acm-org.wdg.biblio.udg.mx:8443) (accessed on 23 August 2022);

- EBSCOhost, Library, Information Science and Technology Abstracts (https://web-p-ebscohost-com.wdg.biblio.udg.mx:8443/ehost/search/basic?vid=0&sid=165ca839-ee96-4612-bc68-1d443e1073b7%40redis) (accessed on 29 August 2022).

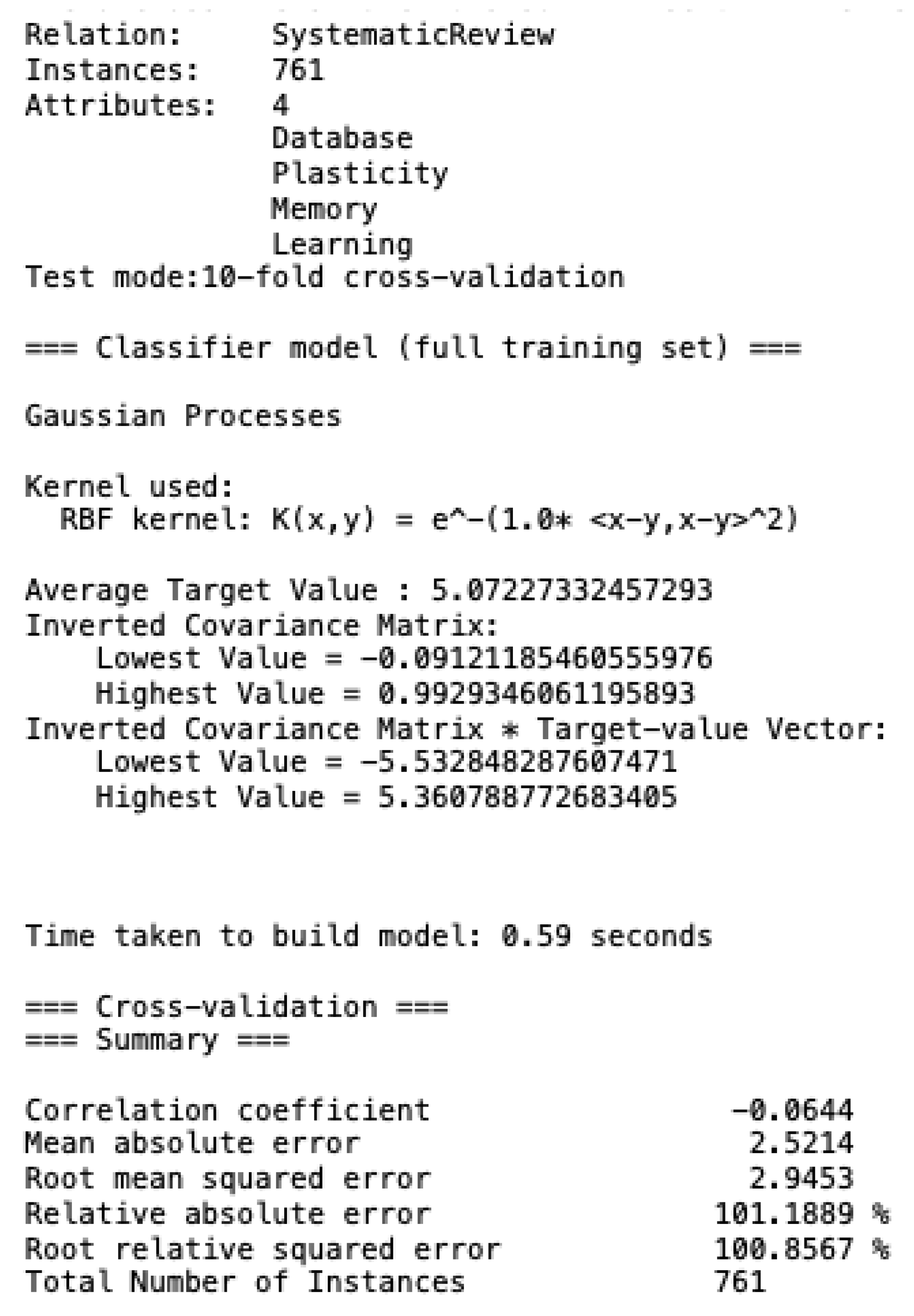

2.3. Data Extraction

2.4. Statistical Analysis

- New generation data collection for the study of brain activities.

- Reproduction of the brain structure and its primary functions through brain simulation software that studies molecular and subcellular aspects and neuronal functioning at the micro- and macroscopic levels.

- Development of cognitive computing through neuromorphic processors and silicon chips.

- The design of brain models for executing brain tasks, such as behaviour, decision making and learning.

3. Discussion

- A. Memory.

- B. Learning.

- C. Decision making or free will.

- Recognition, classification, memory and data deduction from visual inputs.

- Acoustic or speech channels that are processed through electrical action potentials.

- Hybrid architectures that have neuromorphic processors with better capacities and speeds.

- Algorithms and new forms of synaptic exchanges based on chemical processes.

- The Human Brain Project (HBP) is a collaborative European research project with a ten-year program, launched in 2013 under the European Commission’s Future and Emerging Technologies programme (https://www.humanbrainproject.eu) (accessed on 5 August 2022).

- The BRAIN Initiative was developed in the United States in 2014. It is supported by several US government and private organizations and is directed and financed by the NIH (https://braininitiative.nih.gov) (accessed on 12 September 2022).

- The Center for Research in Cognition and Neurosciences (CRCN) is a neuroscience research laboratory founded in 2012 as a research center of the Faculty of Psychological Science and Education of the Université Libre de Bruxelles (https://crcn.ulb.ac.be) (accessed on 7 September 2022).

- A. Large-scale recording and modulation in the nervous system.

- B. Next-generation brain imaging.

- C. Integrated approaches to brain circuit analysis.

- D. Neuromorphic computing that reproduces biological neural networks as analog or digital copies of neurological circuits.

- E. Brain modeling and simulation.
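To make the idea of brain modeling and simulation concrete, the following is a minimal sketch of a leaky integrate-and-fire neuron, a standard textbook model of how electrical action potentials arise. It is not taken from any of the reviewed papers; the function name `simulate_lif` and every parameter value are illustrative assumptions.

```python
def simulate_lif(current, dt=0.1, t_max=100.0, tau=10.0,
                 v_rest=-65.0, v_reset=-65.0, v_thresh=-50.0,
                 resistance=10.0):
    """Euler-integrate a leaky integrate-and-fire neuron.

    Units are ms, mV, MOhm and nA. All values are illustrative
    assumptions, not parameters reported in the review.
    Returns the list of spike times in ms.
    """
    v = v_rest
    spikes = []
    steps = int(t_max / dt)
    for i in range(steps):
        # Membrane equation: dv/dt = (-(v - v_rest) + R * I) / tau
        dv = (-(v - v_rest) + resistance * current) / tau
        v += dv * dt
        if v >= v_thresh:
            # Threshold crossing counts as a spike; reset the membrane.
            spikes.append((i + 1) * dt)
            v = v_reset
    return spikes


weak = simulate_lif(1.0)    # input too small to reach threshold
strong = simulate_lif(3.0)  # larger input produces repeated spikes
print(len(weak), len(strong))
```

With these assumed values, a constant input of 1 nA settles below threshold and never spikes, while larger inputs fire at a rate that grows with the input. Simulators and neuromorphic chips of the kind surveyed here build on far richer neuron and synapse models than this one.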

4. Conclusions

- The need to build multidisciplinary projects for the study of brain functions.

- The application of multiple technologies for the simulation and modeling of brain activities.

- The creation of a global platform of free access for researchers.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Penrose, R. Las Sombras de la Mente; Crítica: Barcelona, Spain, 1992.

- Baars, B.J. A Cognitive Theory of Consciousness; Cambridge University Press: Cambridge, UK, 1988.

- Ryle, G. El Concepto de lo Mental; Paidos: Barcelona, Spain, 2005.

- Kungl, A.F.; Schmitt, S.; Klähn, J.; Müller, P.; Baumbach, A.; Dold, D.; Kugele, A.; Müller, E.; Koke, C.; Kleider, M.; et al. Accelerated Physical Emulation of Bayesian Inference in Spiking Neural Networks. Front. Neurosci. 2019, 13, 1201.

- Davidson, D. Essays on Actions and Events; Oxford University Press: Oxford, UK, 2002.

- Searle, J. El Misterio de la Conciencia; Paidos: Barcelona, Spain, 2000; pp. 17–30.

- Tononi, G. Consciousness and Complexity. Science 1998, 282, 1846–1851.

- Eccles, J. La Evolución del cerebro; Labor: Barcelona, Spain, 1992.

- Giacopelli, G.; Migliore, M.; Tegolo, D. Graph-theoretical derivation of brain structural connectivity. Appl. Math. Comput. 2020, 377, 125150.

- Block, N. On a confusion about a function of consciousness. Behav. Brain Sci. 1995, 18, 227–247.

- Braun, R. El futuro de la filosofía de la mente. In Persona, no. 10; Universidad de Lima: Lima, Peru, 2007; pp. 109–123.

- Dennett, D. La Conciencia Explicada. Una Teoría Interdisciplinar; Paidos: Barcelona, Spain, 1995.

- Ahmed, M.U.; Li, L.; Cao, J.; Mandic, D.P. Multivariate multiscale entropy for brain consciousness analysis. In Proceedings of the 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Boston, MA, USA, 30 August–3 September 2011; pp. 810–813.

- Blanke, O.; Slater, M.; Serino, A. Behavioral, Neural, and Computational Principles of Bodily Self-Consciousness. Neuron 2015, 88, 145–166.

- Blankertz, B.; Losch, F.; Krauledat, M.; Dornhege, G.; Curio, G.; Muller, K.R. The Berlin Brain-Computer Interface: Accurate performance from first-session in BCI-naive subjects. IEEE Trans. Biomed. Eng. 2008, 55, 2452–2462.

- Wokke, M.E.; Achoui, D.; Cleeremans, A. Action information contributes to metacognitive decision-making. Sci. Rep. 2020, 10, 3632.

- Coucke, N.; Heinrich, M.K.; Cleeremans, A.; Dorigo, M. HuGoS: A virtual environment for studying collective human behaviour from a swarm intelligence perspective. Swarm Intell. 2021, 15, 339–376.

- di Volo, M.; Romagnoni, A.; Capone, C.; Destexhe, A. Biologically Realistic Mean-Field Models of Conductance-Based Networks of Spiking Neurons with Adaptation. Neural Comput. 2019, 31, 653–680.

- Hagen, E.; Næss, S.; Ness, T.V.; Einevoll, G.T. Multimodal Modeling of Neural Network Activity: Computing LFP, ECoG, EEG, and MEG Signals With LFPy 2.0. Front. Neuroinform. 2018, 12, 92.

- Skaar, J.E.W.; Stasik, A.J.; Hagen, E.; Ness, T.V.; Einevoll, G.T. Estimation of neural network model parameters from local field potentials (LFPs). PLoS Comput. Biol. 2020, 16, e1007725.

- Deger, M.; Seeholzer, A.; Gerstner, W. Multicontact Co-operativity in Spike-Timing–Dependent Structural Plasticity Stabilizes Networks. Cereb. Cortex 2018, 28, 1396–1415.

- Illing, B.; Gerstner, W.; Brea, J. Biologically plausible deep learning—But how far can we go with shallow networks? Neural Netw. 2019, 118, 90–101.

- Zumalabe-Makirriain, J. El estudio neurológico de la conciencia: Una valoración crítica. An. Psicol. 2016, 32, 266–278.

- Amsalem, O.; Eyal, G.; Rogozinski, N.; Gevaert, M.; Kumbhar, P.; Schürmann, F.; Segev, I. An efficient analytical reduction of detailed nonlinear neuron models. Nat. Commun. 2020, 11, 288.

- Kaiser, J.; Hoff, M.; Konle, A.; Tieck, J.C.V.; Kappel, D.; Reichard, D.; Subramoney, A.; Legenstein, R.; Roennau, A.; Maass, W.; et al. Embodied Synaptic Plasticity with Online Reinforcement Learning. Front. Neurorobot. 2019, 13, 81.

- Hoya, T. Notions of Intuition and Attention Modeled by a Hierarchically Arranged Generalized Regression Neural Network. IEEE Trans. Syst. Man Cybern. Part B (Cybern.) 2004, 34, 200–209.

- Pokorny, C.; Ison, M.J.; Rao, A.; Legenstein, R.; Papadimitriou, C.; Maass, W. STDP Forms Associations between Memory Traces in Networks of Spiking Neurons. Cereb. Cortex 2020, 30, 952–968.

- Taylor, J.; Hartley, M. Through Reasoning to Cognitive Machines. IEEE Comput. Intell. Mag. 2007, 2, 12–24.

- Popovych, O.V.; Manos, T.; Hoffstaedter, F.; Eickhoff, S.B. What Can Computational Models Contribute to Neuroimaging Data Analytics? Front. Syst. Neurosci. 2019, 12, 68.

- Trensch, G.; Gutzen, R.; Blundell, I.; Denker, M.; Morrison, A. Rigorous Neural Network Simulations: A Model Substantiation Methodology for Increasing the Correctness of Simulation Results in the Absence of Experimental Validation Data. Front. Neuroinform. 2018, 12, 81.

- Berlemont, K.; Martin, J.R.; Sackur, J.; Nadal, J.P. Nonlinear neural network dynamics accounts for human confidence in a sequence of perceptual decisions. Sci. Rep. 2020, 10, 7940.

- Stimberg, M.; Goodman, D.F.M.; Nowotny, T. Brian2GeNN: Accelerating spiking neural network simulations with graphics hardware. Sci. Rep. 2020, 10, 410.

- Pozo, J. Teorías Cognitivas del Aprendizaje; Morata: Madrid, Spain, 1997.

- Senk, J.; Korvasová, K.; Schuecker, J.; Hagen, E.; Tetzlaff, T.; Diesmann, M.; Helias, M. Conditions for wave trains in spiking neural networks. Phys. Rev. Res. 2020, 2, 023174.

- Bicanski, A.; Burgess, N. A neural-level model of spatial memory and imagery. eLife 2018, 7, e33752.

- Schmitt, S.; Klähn, J.; Bellec, G.; Grübl, A.; Güttler, M.; Hartel, A.; Hartmann, S.; Husmann, D.; Husmann, K.; Jeltsch, S.; et al. Neuromorphic hardware in the loop: Training a deep spiking network on the BrainScaleS wafer-scale system. In Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 14–19 May 2017; pp. 2227–2234.

- de Kamps, M.; Lepperød, M.; Lai, Y.M. Computational geometry for modeling neural populations: From visualization to simulation. PLoS Comput. Biol. 2019, 15, e1006729.

- Einevoll, G.T.; Destexhe, A.; Diesmann, M.; Grün, S.; Jirsa, V.; de Kamps, M.; Migliore, M.; Ness, T.V.; Plesser, H.E.; Schürmann, F. The Scientific Case for Brain Simulations. Neuron 2019, 102, 735–744.

- Gutzen, R.; von Papen, M.; Trensch, G.; Quaglio, P.; Grün, S.; Denker, M. Reproducible Neural Network Simulations: Statistical Methods for Model Validation on the Level of Network Activity Data. Front. Neuroinform. 2018, 12, 90.

- Jordan, J.; Helias, M.; Diesmann, M.; Kunkel, S. Efficient Communication in Distributed Simulations of Spiking Neuronal Networks With Gap Junctions. Front. Neuroinform. 2020, 14, 12.

- Dong, Q.; Ge, F.; Ning, Q.; Zhao, Y.; Lv, J.; Huang, H.; Yuan, J.; Jiang, X.; Shen, D.; Liu, T. Modeling Hierarchical Brain Networks via Volumetric Sparse Deep Belief Network. IEEE Trans. Biomed. Eng. 2020, 67, 1739–1748.

- Gao, S.; Zhou, M.; Wang, Y.; Cheng, J.; Yachi, H.; Wang, J. Dendritic Neuron Model With Effective Learning Algorithms for Classification, Approximation, and Prediction. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 601–614.

- Heinrich, S.; Wermter, S. Interactive natural language acquisition in a multi-modal recurrent neural architecture. Connect. Sci. 2018, 30, 99–133.

- Hwu, T.; Krichmar, J.L. A neural model of schemas and memory encoding. Biol. Cybern. 2020, 114, 169–186.

- Kong, D.; Hu, S.; Wang, J.; Liu, Z.; Chen, T.; Yu, Q.; Liu, Y. Study of Recall Time of Associative Memory in a Memristive Hopfield Neural Network. IEEE Access 2019, 7, 58876–58882.

- Mheich, A.; Hassan, M.; Khalil, M.; Gripon, V.; Dufor, O.; Wendling, F. SimiNet: A Novel Method for Quantifying Brain Network Similarity. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 2238–2249.

- Oess, T.; Löhr, M.P.R.; Schmid, D.; Ernst, M.O.; Neumann, H. From Near-Optimal Bayesian Integration to Neuromorphic Hardware: A Neural Network Model of Multisensory Integration. Front. Neurorobot. 2020, 14, 29.

- Pyle, S.D.; Zand, R.; Sheikhfaal, S.; Demara, R.F. Subthreshold Spintronic Stochastic Spiking Neural Networks with Probabilistic Hebbian Plasticity and Homeostasis. IEEE J. Explor. Solid-State Comput. Devices Circuits 2019, 5, 43–51.

- Even-Chen, N.; Stavisky, S.; Pandarinath, C.; Nuyujukian, P.; Blabe, C.; Hochberg, L.; Shenoy, K. Feasibility of Automatic Error Detect-and-Undo System in Human Intracortical Brain–Computer Interfaces. IEEE Trans. Biomed. Eng. 2018, 65, 1771–1784.

- Jacobsen, S.; Meiron, O.; Salomon, D.Y.; Kraizler, N.; Factor, H.; Jaul, E.; Tsur, E.E. Integrated Development Environment for EEG-Driven Cognitive-Neuropsychological Research. IEEE J. Transl. Eng. Health Med. 2020, 8, 1–8.

- Andalibi, V.; Hokkanen, H.; Vanni, S. Controlling Complexity of Cerebral Cortex Simulations—I: CxSystem, a Flexible Cortical Simulation Framework. Neural Comput. 2019, 31, 1048–1065.

- Moulin-Frier, C.; Fischer, T.; Petit, M.; Pointeau, G.; Puigbo, J.Y.; Pattacini, U.; Low, S.C.; Camilleri, D.; Nguyen, P.; Hoffmann, M.; et al. DAC-h3: A Proactive Robot Cognitive Architecture to Acquire and Express Knowledge About the World and the Self. IEEE Trans. Cogn. Dev. Syst. 2018, 10, 1005–1022.

- Nadji-Tehrani, M.; Eslami, A. A Brain-Inspired Framework for Evolutionary Artificial General Intelligence. IEEE Trans. Neural Netw. Learn. Syst. 2020, 31, 5257–5271.

- Jones, A.; Jha, R. A Compact Gated-Synapse Model for Neuromorphic Circuits. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2021, 40, 1887–1895.

- Abibullaev, B.; Zollanvari, A. Learning Discriminative Spatiospectral Features of ERPs for Accurate Brain–Computer Interfaces. IEEE J. Biomed. Health Inform. 2019, 23, 2009–2020.

- Bandara, D.; Arata, J.; Kiguchi, K. A noninvasive brain–computer interface approach for predicting motion intention of activities of daily living tasks for an upper-limb wearable robot. Int. J. Adv. Robot. Syst. 2018, 15, 172988141876731.

- Bermudez-Contreras, E.; Clark, B.J.; Wilber, A. The Neuroscience of Spatial Navigation and the Relationship to Artificial Intelligence. Front. Comput. Neurosci. 2020, 14, 63.

- Lee, Y.E.; Kwak, N.S.; Lee, S.W. A Real-Time Movement Artifact Removal Method for Ambulatory Brain-Computer Interfaces. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 2660–2670.

- Davies, M.; Wild, A.; Orchard, G.; Sandamirskaya, Y.; Guerra, G.A.F.; Joshi, P.; Plank, P.; Risbud, S.R. Advancing Neuromorphic Computing with Loihi: A Survey of Results and Outlook. Proc. IEEE 2021, 109, 911–934.

- Hole, K.J.; Ahmad, S. Biologically Driven Artificial Intelligence. Computer 2019, 52, 72–75.

- Zeng, Y.; Zhao, Y.; Zhang, T.; Zhao, D.; Zhao, F.; Lu, E. A Brain-Inspired Model of Theory of Mind. Front. Neurorobot. 2020, 14, 60.

| Database | Publications |
|---|---|
| ACM | 1511 |
| EBSCO | 306 |
| IEEE | 1511 |

| Database | Publications |
|---|---|
| ACM | 369 |
| EBSCO | 115 |
| IEEE | 278 |
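The two database tables above can be compared with a short calculation. The sketch below uses the counts exactly as printed and assumes, since the tables carry no captions here, that the first table is the initial search and the second is the result after the inclusion and exclusion criteria were applied. The names `initial`, `screened` and `retention` are illustrative only.

```python
# Counts copied from the two database tables as printed.
# Assumption: the first table is the initial search and the second is
# the result after applying the inclusion and exclusion criteria.
initial = {"ACM": 1511, "EBSCO": 306, "IEEE": 1511}
screened = {"ACM": 369, "EBSCO": 115, "IEEE": 278}


def retention(before, after):
    """Return the fraction of records kept for each database."""
    return {db: after[db] / before[db] for db in before}


total_initial = sum(initial.values())    # 3328
total_screened = sum(screened.values())  # 762
rates = retention(initial, screened)

for db in initial:
    print(f"{db}: {screened[db]}/{initial[db]} kept ({rates[db]:.1%})")
print(f"Total: {total_screened}/{total_initial} kept "
      f"({total_screened / total_initial:.1%})")
```

Under that assumption, roughly 23% of the records survive screening overall, with EBSCO retaining the largest share of its initial results.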

| Journal | No. of Papers | Title | Types of Computational Architecture |
|---|---|---|---|
| Biological Cybernetics | 1 | A neural model of schemas and memory encoding | Design of neural networks and learning algorithms for classification, predictions, memory, and learning |
| Computer | 1 | Biologically driven artificial intelligence | A critical review of the development of AI in brain stimulation through theoretical models |
| Connection Science | 1 | Interactive natural language acquisition in a multi-modal recurrent neural architecture | Design of neural networks and learning algorithms for classification, predictions, memory, and learning |
| Frontiers in Computational Neuroscience | 1 | The neuroscience of spatial navigation and the relationship to artificial intelligence | Machine learning to analyze brain datasets (work of brain functions, measure of brain activities) |
| Frontiers in Neurorobotics | 2 | From near-optimal Bayesian integration to neuromorphic hardware: a neural network model of multisensory integration; A brain-inspired model of theory of mind | Design of neural networks and learning algorithms for classification, predictions, memory, and learning; A critical review of the development of AI in brain stimulation through theoretical models |
| IEEE Access | 1 | Study of recall time of associative memory in a memristive Hopfield neural network | Design of neural networks and learning algorithms for classification, predictions, memory, and learning |
| International Journal of Advanced Robotic Systems | 1 | A noninvasive brain–computer interface approach for predicting motion intention of activities of daily living tasks for an upper-limb wearable robot | Brain–computer interfaces |
| IEEE Journal of Biomedical and Health Informatics | 1 | Learning discriminative spatiospectral features of ERPs for accurate brain–computer interfaces | Brain–computer interfaces |
| IEEE Journal on Exploratory Solid-State Computational Devices and Circuits | 1 | Subthreshold spintronic stochastic spiking neural networks with probabilistic Hebbian plasticity and homeostasis | Design of neural networks and learning algorithms for classification, predictions, memory, and learning |
| IEEE Journal of Translational Engineering in Health and Medicine | 1 | Integrated development environment for EEG-driven cognitive-neuropsychological research | Brain software simulation |
| IEEE Transactions on Biomedical Engineering | 2 | Modeling hierarchical brain networks via volumetric sparse deep belief network (VS-DBN); Feasibility of automatic error detect-and-undo system in human intracortical brain–computer interfaces | Design of neural networks and learning algorithms for classification, predictions, memory, and learning; Brain software simulation |
| IEEE Transactions on Cognitive and Developmental Systems | 1 | DAC-h3: A proactive robot cognitive architecture to acquire and express knowledge about the world and the self | Hybrid architectures |
| IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems | 1 | A compact gated-synapse model for neuromorphic circuits | Hybrid architectures |
| IEEE Transactions on Neural Networks and Learning Systems | 2 | Dendritic neuron model with effective learning algorithms for classification, approximation, and prediction; A brain-inspired framework for evolutionary artificial general intelligence | Design of neural networks and learning algorithms for classification, predictions, memory, and learning; Hybrid architectures |
| IEEE Transactions on Neural Systems and Rehabilitation Engineering | 1 | A real-time movement artifact removal method for ambulatory brain–computer interfaces | Machine learning to analyze brain datasets (work of brain functions, measure of brain activities) |
| IEEE Transactions on Pattern Analysis and Machine Intelligence | 1 | SimiNet: A novel method for quantifying brain network similarity | Design of neural networks and learning algorithms for classification, predictions, memory, and learning |
| Neural Computation | 1 | Controlling complexity of cerebral cortex simulations—I: CxSystem, a flexible cortical simulation framework | Brain software simulation |
| Proceedings of the IEEE | 1 | Advancing neuromorphic computing with Loihi: A survey of results and outlook | Development of neuromorphic processors (information processing, neural connectivity in real time [brain synapse], and learning) |

| Input Data | Related Papers |
|---|---|
| Input data from visual datasets or real-time experience | 12 |
| Input data from language datasets or real-time experience | 2 |
| Other (mixed input data and neural connections) | 5 |

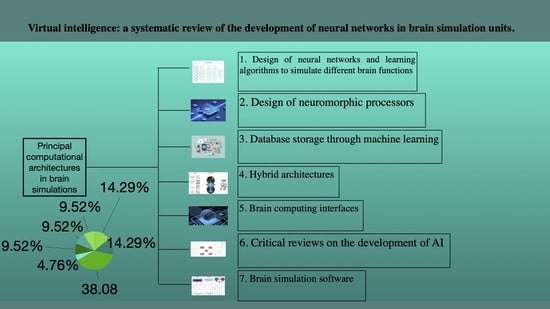

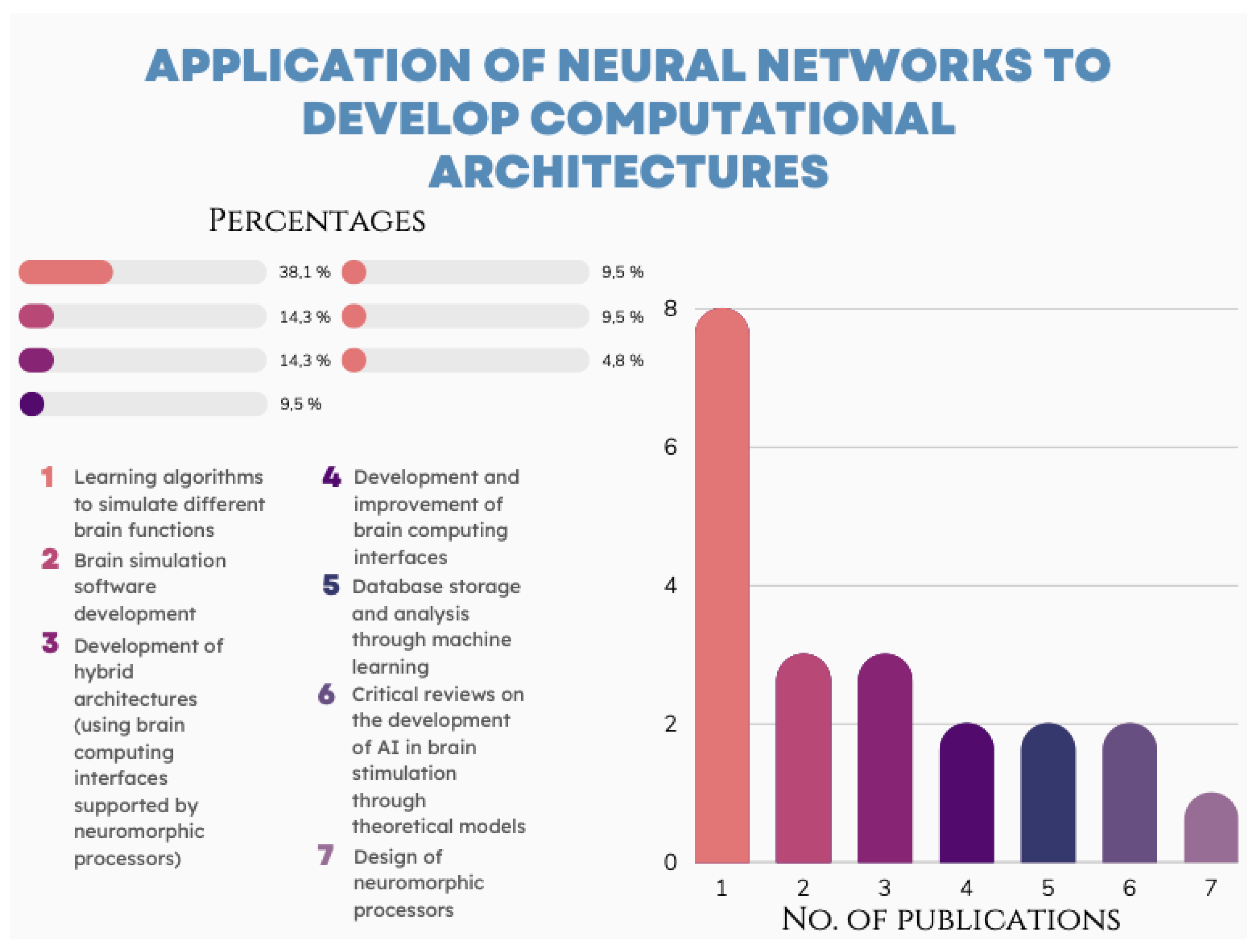

| Type of Computational Architecture | Papers |
|---|---|
| Design of neural networks and learning algorithms for classification, predictions, memory, and learning | 8 |
| Brain software simulation | 3 |
| Hybrid architectures | 3 |
| Machine learning to analyze brain datasets (work of brain functions, measure of brain activities) | 2 |
| Brain–computer interfaces | 2 |
| Development of neuromorphic processors (information processing, neural connectivity in real time [brain synapse], and learning) | 1 |
| A critical review of the development of AI in brain stimulation through theoretical models | 2 |
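The distribution in the table above is easier to read as shares of the whole. The following sketch recomputes the total and the percentage per category from the printed counts; the shortened category labels and the names `counts` and `shares` are illustrative, not the authors’ own.

```python
# Paper counts per architecture type, copied from the table above.
# The labels are shortened here for readability (an editorial assumption).
counts = {
    "Design of neural networks and learning algorithms": 8,
    "Brain software simulation": 3,
    "Hybrid architectures": 3,
    "Machine learning to analyze brain datasets": 2,
    "Brain-computer interfaces": 2,
    "Development of neuromorphic processors": 1,
    "Critical review of AI through theoretical models": 2,
}

total = sum(counts.values())  # 21
shares = {name: n / total for name, n in counts.items()}

# Print the categories from largest to smallest share.
for name, share in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{share:6.1%}  {name}")
```

The total of 21 matches the number of papers listed per journal, and the design of neural networks and learning algorithms accounts for 8 of the 21 papers, about 38%.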

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zavala Hernández, J.G.; Barbosa-Santillán, L.I. Virtual Intelligence: A Systematic Review of the Development of Neural Networks in Brain Simulation Units. Brain Sci. 2022, 12, 1552. https://doi.org/10.3390/brainsci12111552
