TL-Net: A Novel Network for Transmission Line Scenes Classification

Abstract

1. Introduction

2. Related Works

2.1. Machine Learning

2.2. Deep Learning

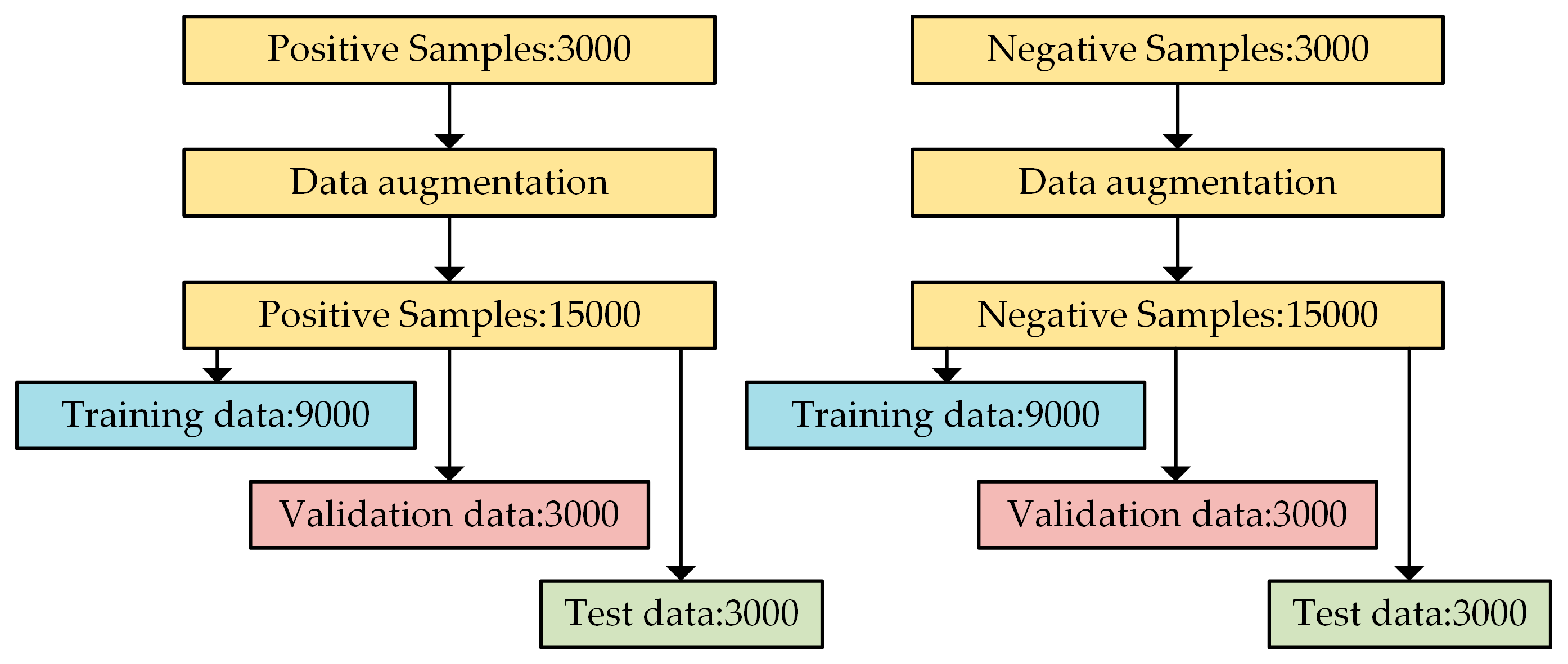

3. Data Collection and Augmentation

3.1. Data Collection

3.2. Data Augmentation

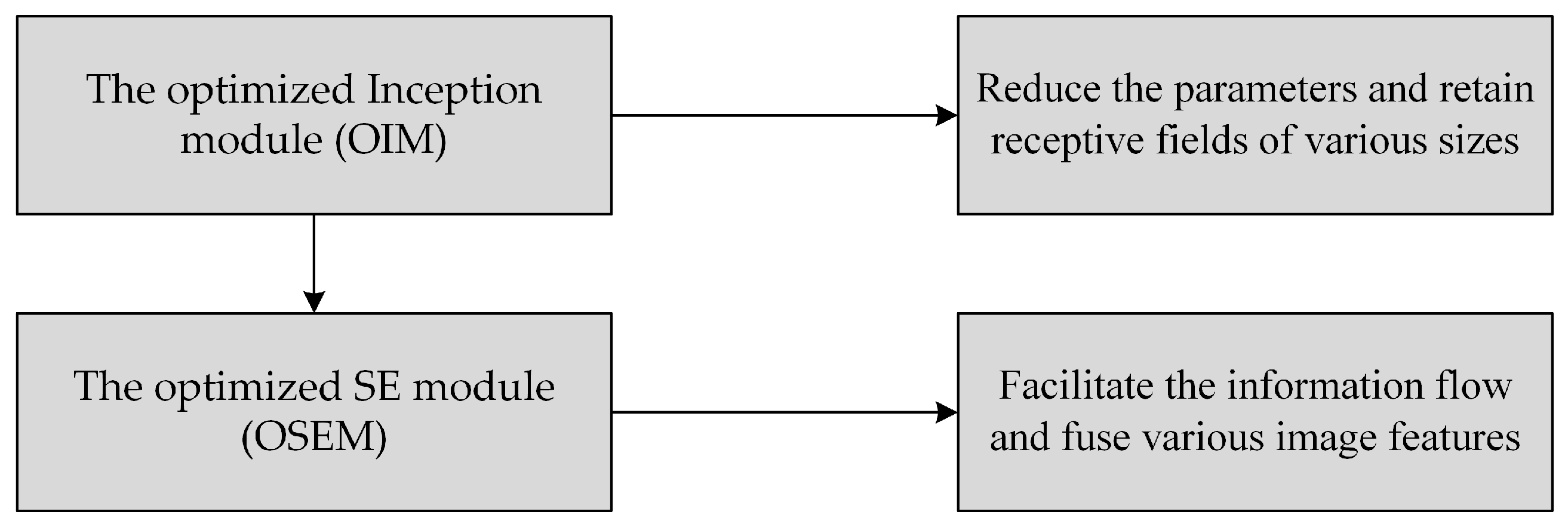

4. Method

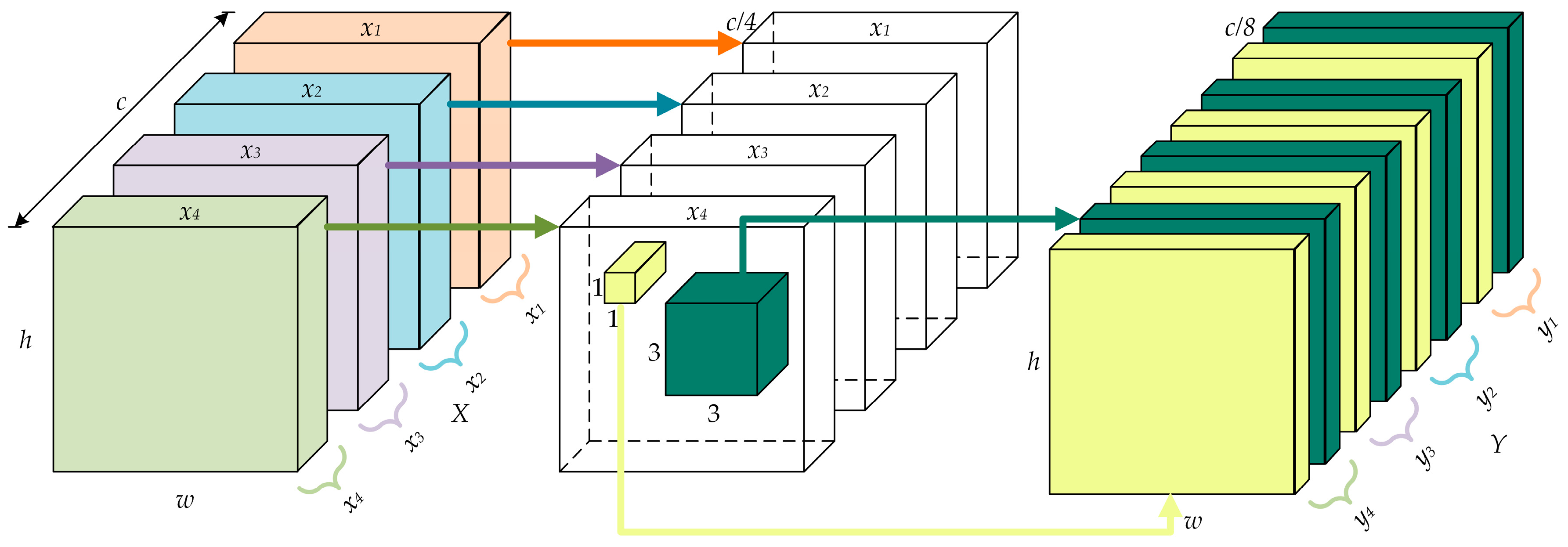

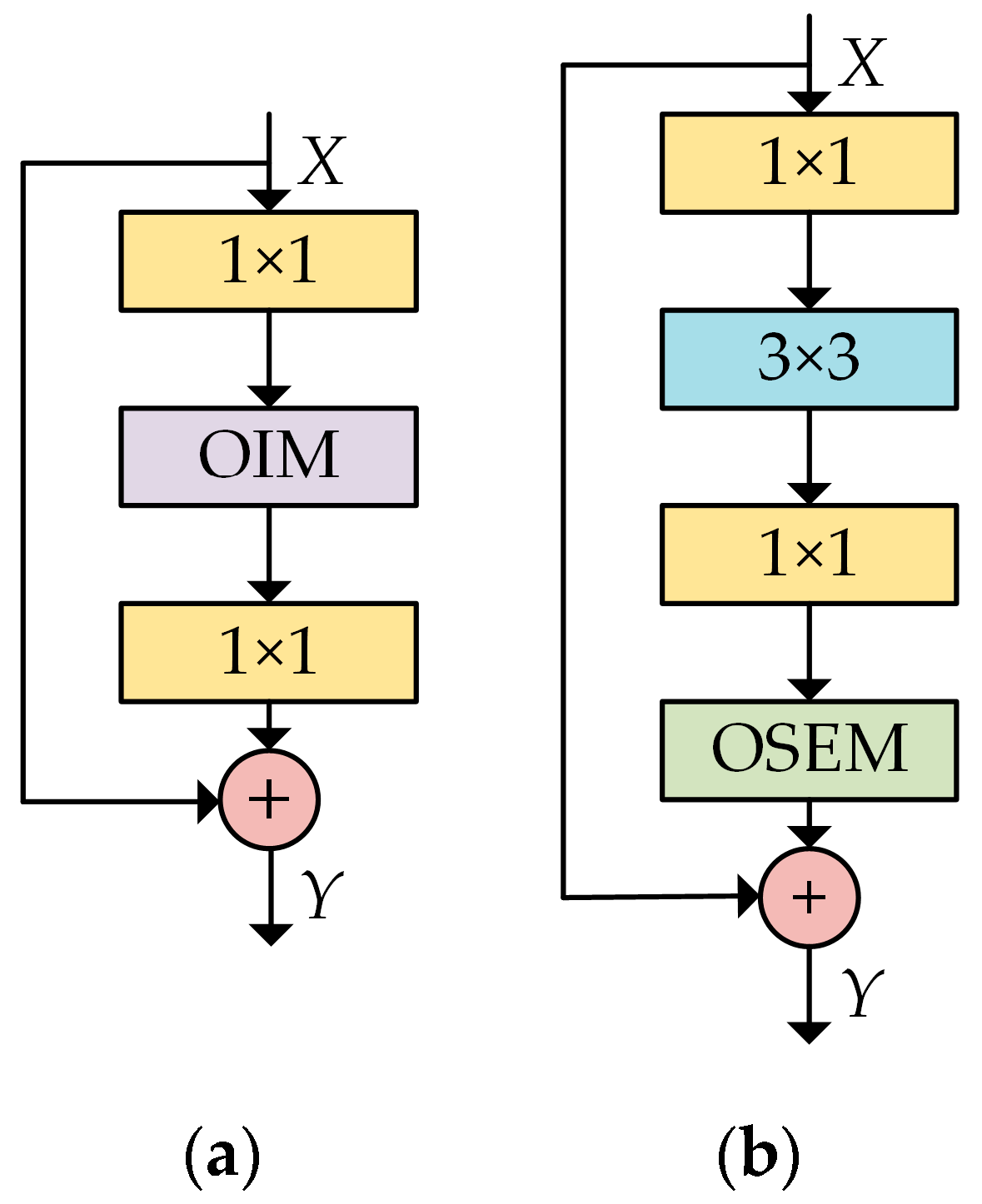

4.1. Optimized Inception Module

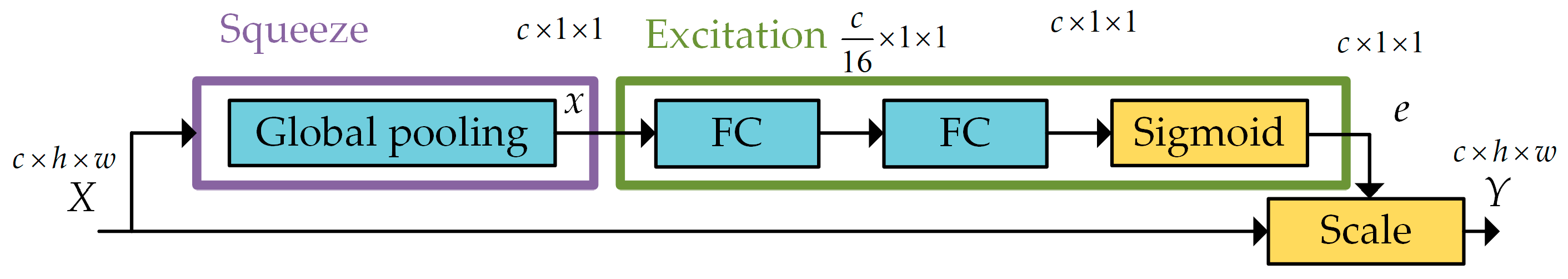

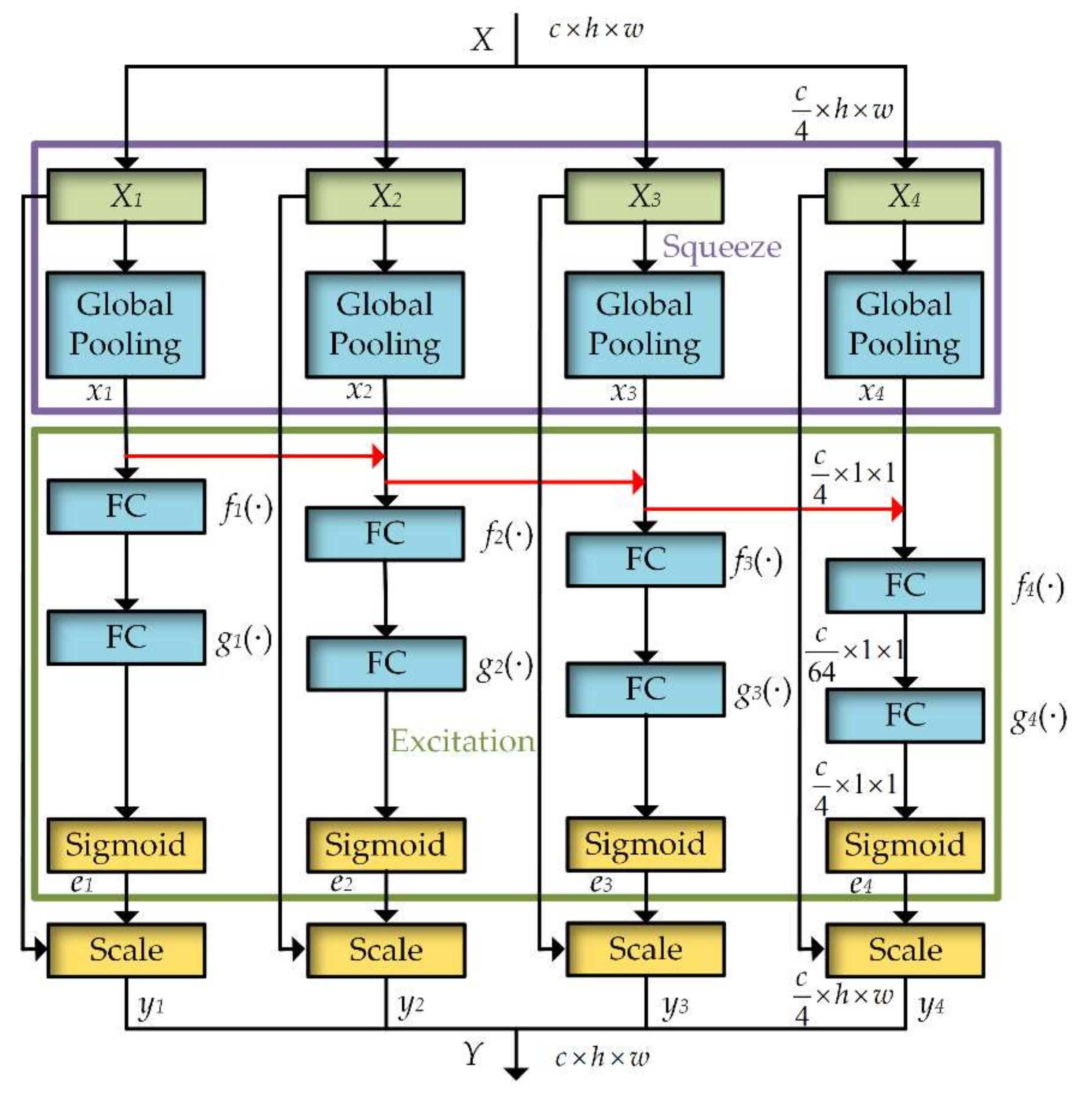

4.2. Optimized SE Module

4.3. Network Implementation Details

5. Experimental Results and Analysis

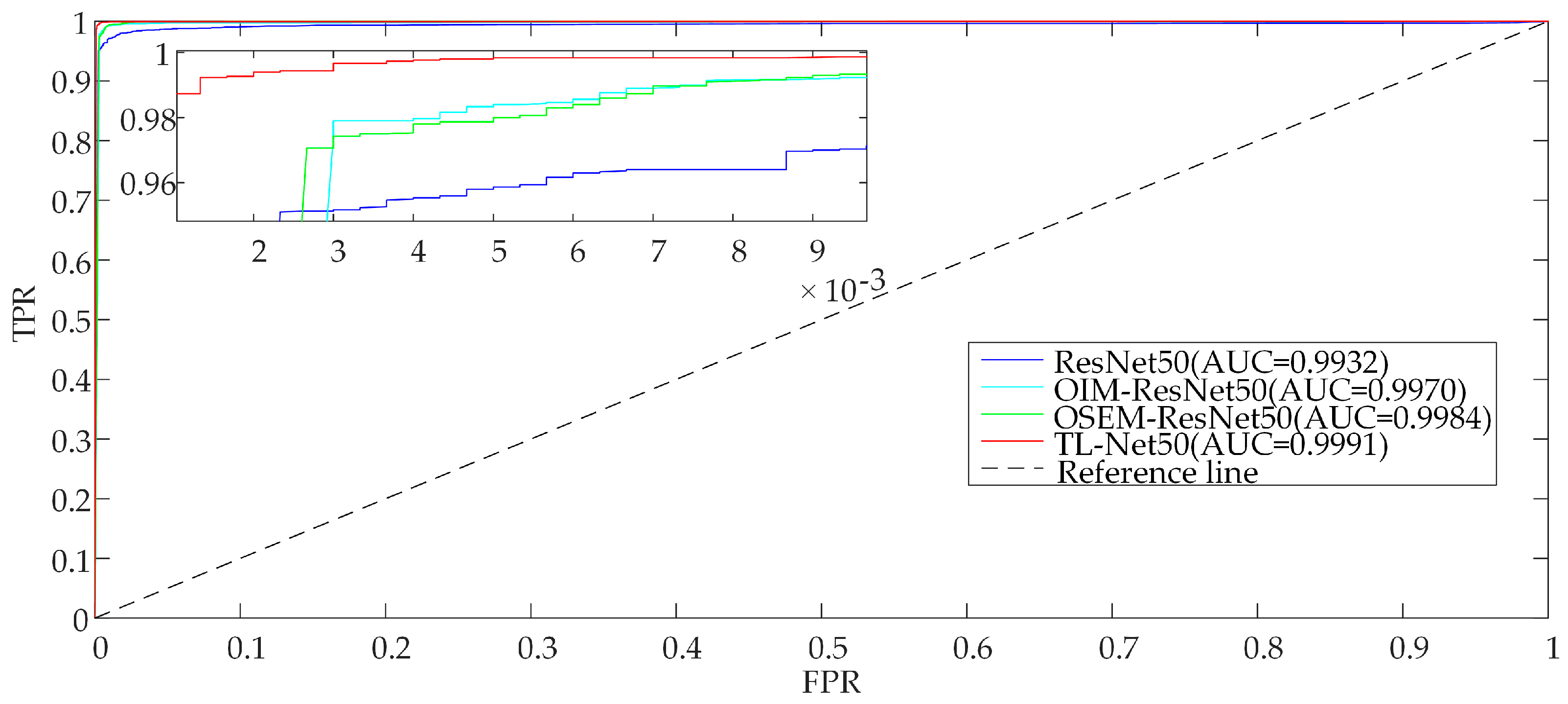

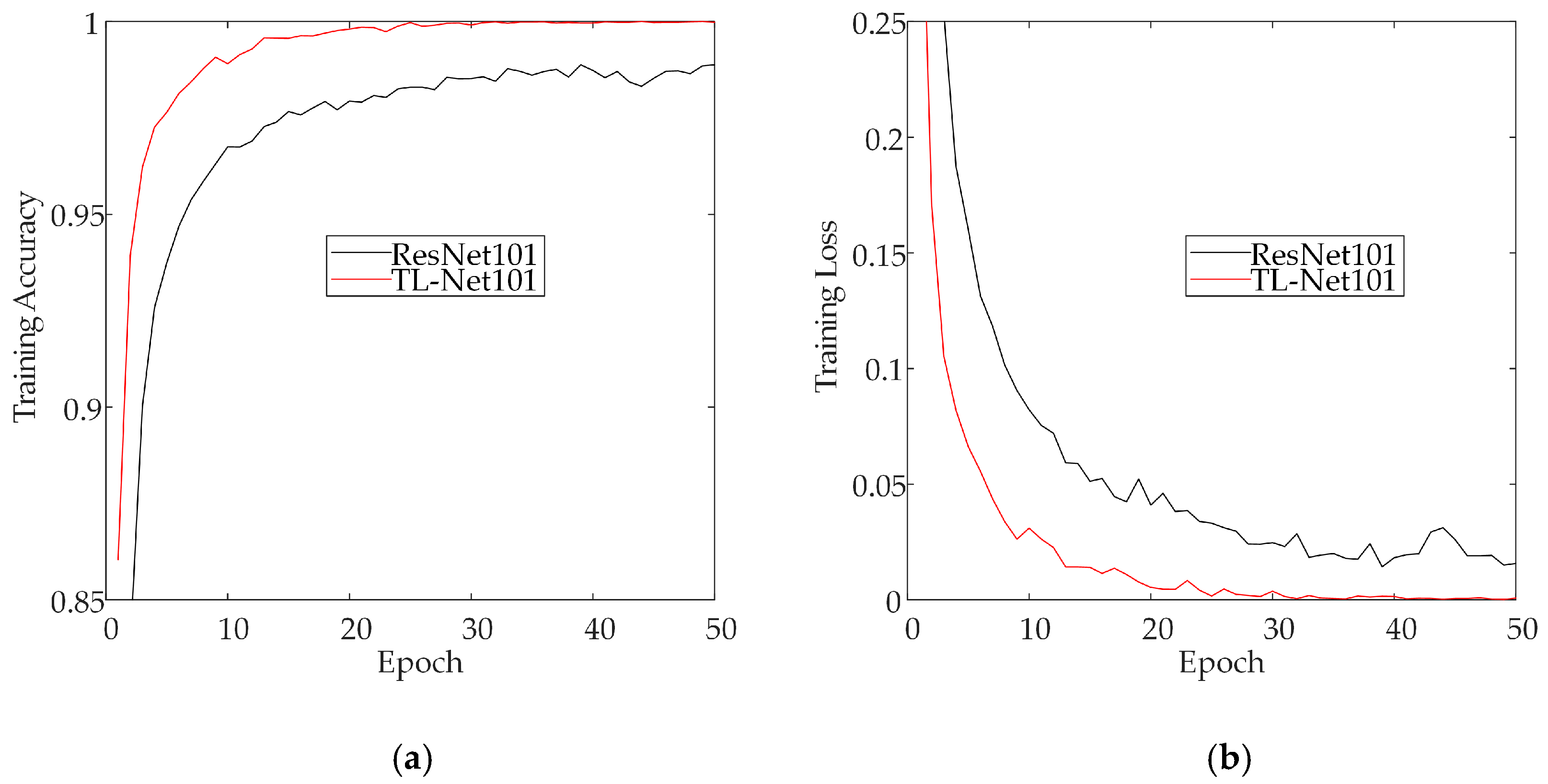

5.1. Ablation Studies

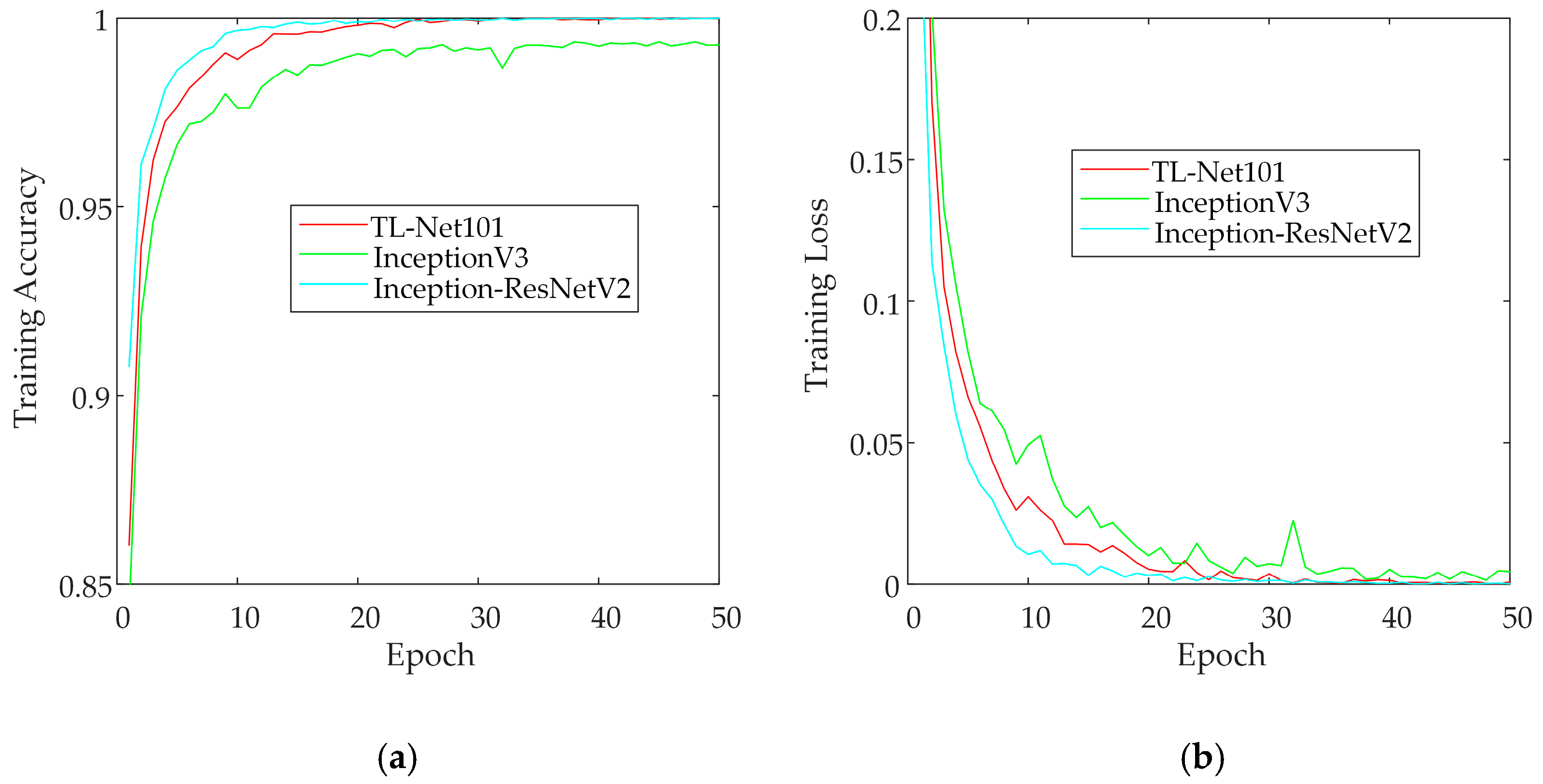

5.2. Comparison with Typical Deep Learning Methods

6. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest


| Layer Name | TL-Net50 | TL-Net101 |
|---|---|---|
| conv1 | 7×7 convolution, 64, stride 2 | |
| conv2_x | 3×3 max pooling, stride 2 | |
| conv3_x | | |
| conv4_x | | |
| conv5_x | | |
| output | Average pooling, 1-d fc, sigmoid | |
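
The output row of the table above lists average pooling, a 1-d fully connected layer, and a sigmoid, which suggests a head that emits a single probability. The following is a minimal pure-Python sketch of such a head, not the authors' implementation. The function names, the toy feature-map size, and the weight values are all hypothetical and chosen only for illustration.

```python
import math
import random


def global_average_pool(feature_map):
    """Average each channel of a C x H x W nested list down to one number."""
    pooled = []
    for channel in feature_map:
        values = [v for row in channel for v in row]
        pooled.append(sum(values) / len(values))
    return pooled


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def output_head(feature_map, weights, bias):
    """Average pooling -> fully connected layer with one output -> sigmoid."""
    pooled = global_average_pool(feature_map)
    logit = sum(w * p for w, p in zip(weights, pooled)) + bias
    return sigmoid(logit)


# Hypothetical toy input: 4 channels of 2 x 2 activations (not the paper's sizes).
random.seed(0)
toy_features = [
    [[random.random() for _ in range(2)] for _ in range(2)] for _ in range(4)
]
toy_weights = [0.5, -0.25, 0.1, 0.3]  # illustrative values, not trained weights
toy_bias = 0.0

probability = output_head(toy_features, toy_weights, toy_bias)
print(f"predicted probability: {probability:.4f}")
```

Because the sigmoid maps the single logit into the interval (0, 1), the result can be thresholded (for example at 0.5, an assumed value) to obtain a class label.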

| Model | Test accuracy | AUC |
|---|---|---|
| ResNet50 | 98.03% | 0.9932 |
| OIM-ResNet50 | 98.87% | 0.9970 |
| OSEM-ResNet50 | 99.12% | 0.9984 |
| TL-Net50 | 99.53% | 0.9991 |
| ResNet101 | 98.23% | 0.9964 |
| OIM-ResNet101 | 99.30% | 0.9983 |
| OSEM-ResNet101 | 99.42% | 0.9994 |
| TL-Net101 | 99.68% | 0.9995 |
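
Since all accuracies in the table above are close to 100%, the differences are easier to read as reductions in test error. The sketch below recomputes them from the table values only. The helper names are hypothetical, and the relative error reduction is an illustrative metric that the table itself does not report.

```python
# Test accuracies (%) copied from the table above.
test_accuracy = {
    "ResNet50": 98.03,
    "OIM-ResNet50": 98.87,
    "OSEM-ResNet50": 99.12,
    "TL-Net50": 99.53,
    "ResNet101": 98.23,
    "OIM-ResNet101": 99.30,
    "OSEM-ResNet101": 99.42,
    "TL-Net101": 99.68,
}


def error_rate(model):
    """Test error in percentage points."""
    return 100.0 - test_accuracy[model]


def relative_error_reduction(baseline, variant):
    """Fraction of the baseline's test error removed by the variant."""
    base_err = error_rate(baseline)
    return (base_err - error_rate(variant)) / base_err


# Compare each variant against the plain ResNet of the same depth.
groups = {
    "ResNet50": ["OIM-ResNet50", "OSEM-ResNet50", "TL-Net50"],
    "ResNet101": ["OIM-ResNet101", "OSEM-ResNet101", "TL-Net101"],
}
for baseline, variants in groups.items():
    for variant in variants:
        reduction = relative_error_reduction(baseline, variant)
        print(f"{variant} vs {baseline}: {reduction:.1%} lower test error")
```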

| Model | Test accuracy | AUC |
|---|---|---|
| InceptionV3 | 98.87% | 0.9974 |
| ResNet50 | 98.03% | 0.9932 |
| ResNet101 | 98.23% | 0.9964 |
| Inception-ResNetV2 | 99.58% | 0.9990 |
| TL-Net50 | 99.53% | 0.9991 |
| TL-Net101 | 99.68% | 0.9995 |

| Model | Memory Consumption | ART |
|---|---|---|
| InceptionV3 | 92 M | 0.024 s |
| ResNet50 | 90 M | 0.017 s |
| ResNet101 | 163 M | 0.031 s |
| Inception-ResNetV2 | 214 M | 0.050 s |
| TL-Net50 | 57 M | 0.023 s |
| TL-Net101 | 100 M | 0.048 s |
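
The trade-off in the table above can be made explicit by expressing each TL-Net variant relative to the ResNet of the same depth. The sketch below uses only the table values. The helper names are hypothetical, and the memory unit is kept as the "M" printed in the table without assuming what it abbreviates.

```python
# (memory in "M" as printed in the table, ART in seconds), copied from the table above.
cost = {
    "InceptionV3": (92, 0.024),
    "ResNet50": (90, 0.017),
    "ResNet101": (163, 0.031),
    "Inception-ResNetV2": (214, 0.050),
    "TL-Net50": (57, 0.023),
    "TL-Net101": (100, 0.048),
}


def memory_ratio(model, baseline):
    """Memory consumption of `model` as a fraction of `baseline`."""
    return cost[model][0] / cost[baseline][0]


def art_ratio(model, baseline):
    """ART of `model` as a multiple of `baseline`."""
    return cost[model][1] / cost[baseline][1]


for model, baseline in [("TL-Net50", "ResNet50"), ("TL-Net101", "ResNet101")]:
    print(
        f"{model} vs {baseline}: "
        f"{memory_ratio(model, baseline):.2f}x memory, "
        f"{art_ratio(model, baseline):.2f}x ART"
    )
```

Ratios below 1 mean the TL-Net variant is cheaper than its baseline on that axis, and ratios above 1 mean it is more expensive.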

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Li, H.; Yang, Z.; Han, J.; Lai, S.; Zhang, Q.; Zhang, C.; Fang, Q.; Hu, G. TL-Net: A Novel Network for Transmission Line Scenes Classification. Energies 2020, 13, 3910. https://doi.org/10.3390/en13153910