AE-LSTM Based Deep Learning Model for Degradation Rate Influenced Energy Estimation of a PV System

Abstract

1. Introduction

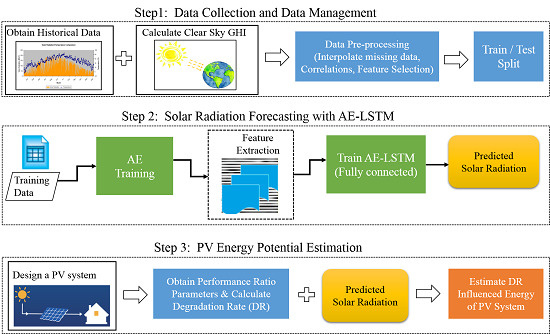

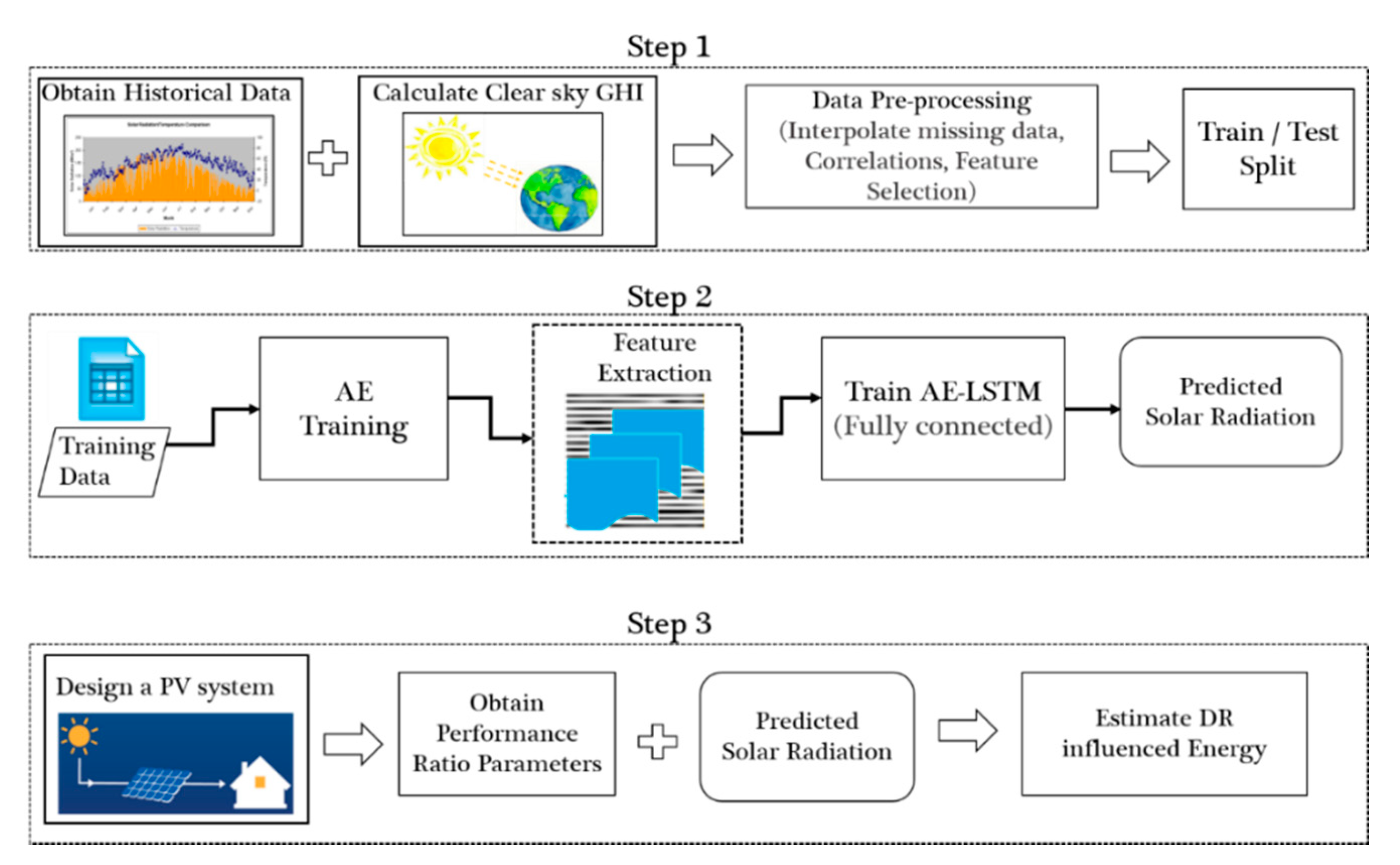

2. Methodology

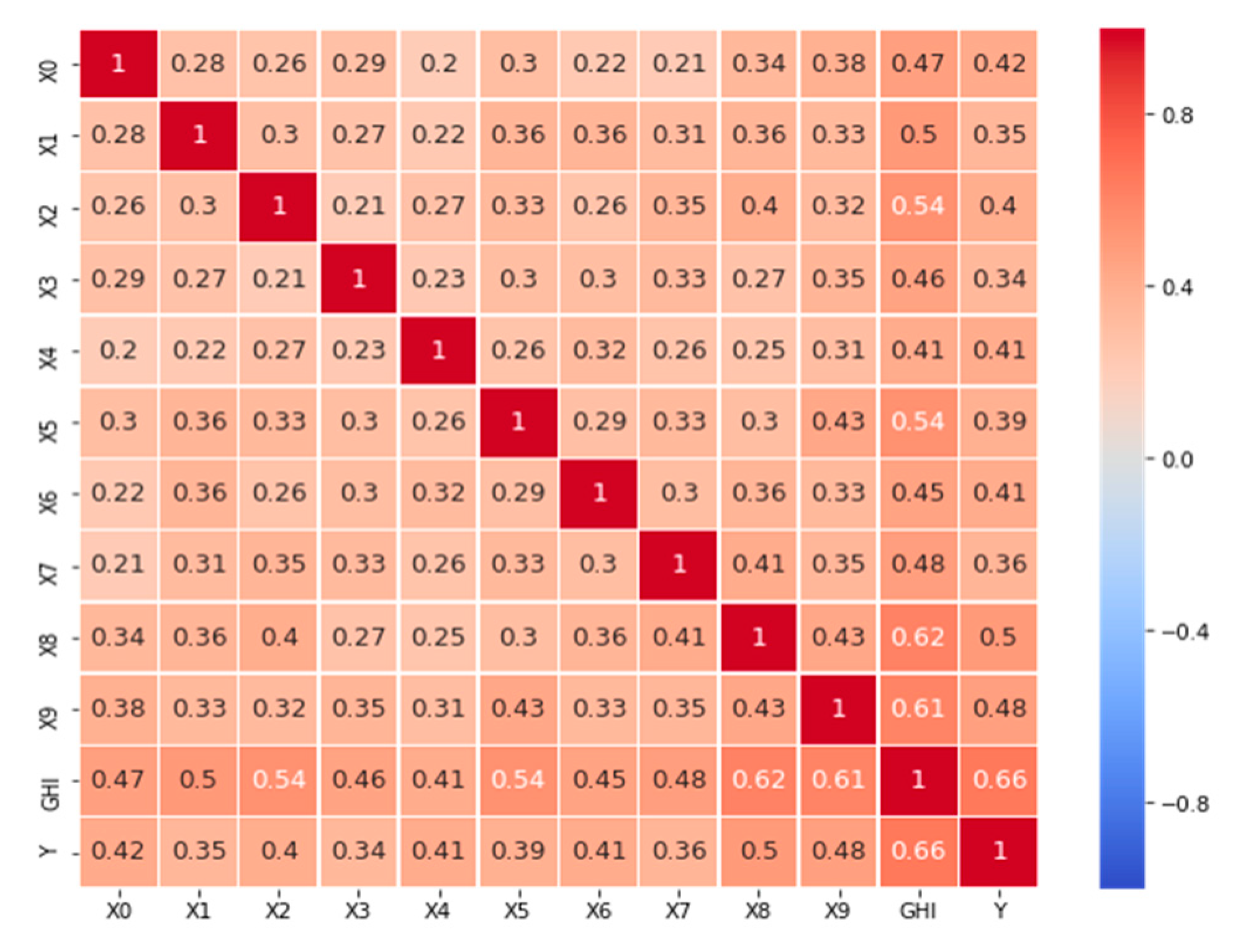

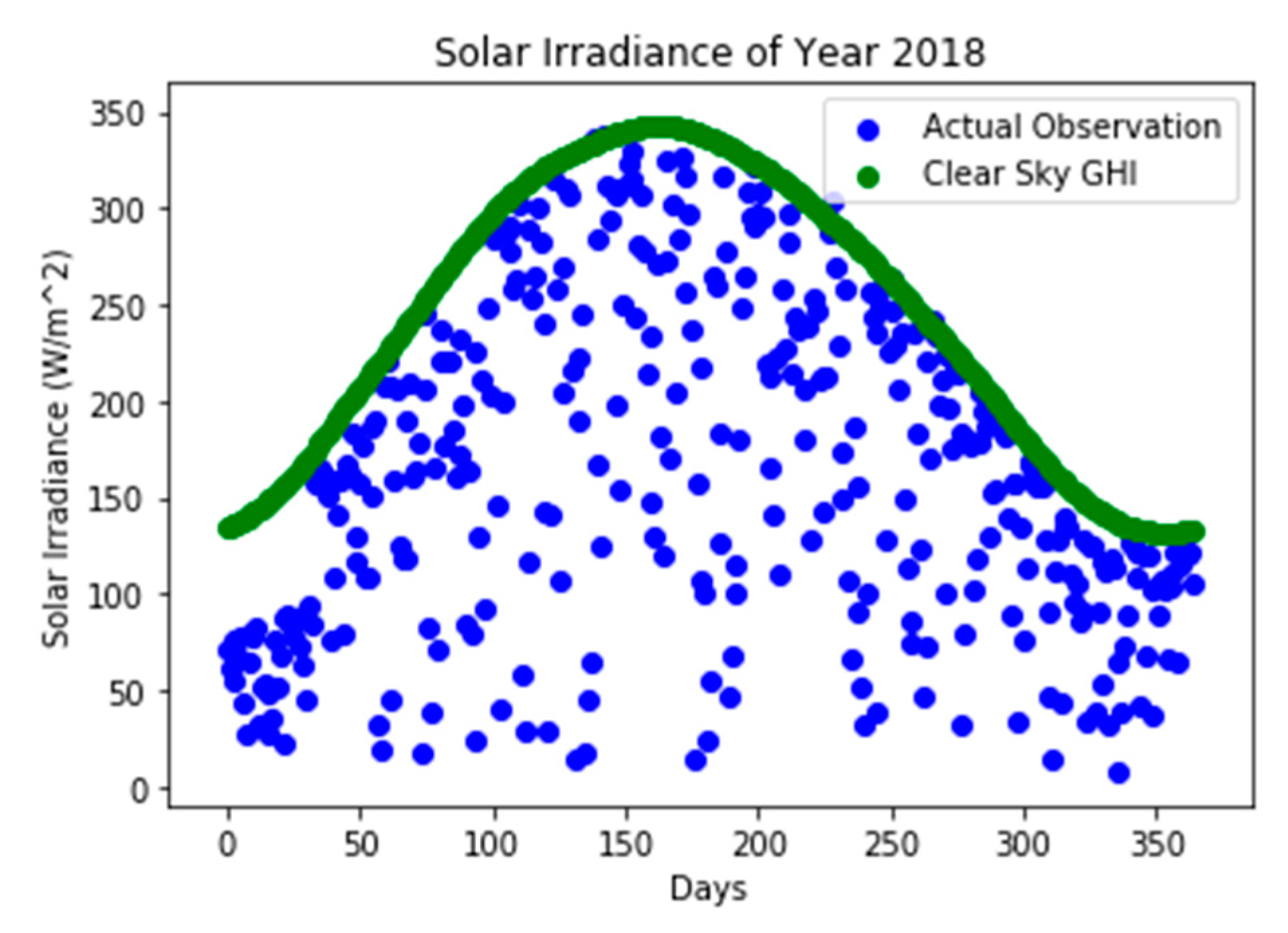

2.1. Input Feature Selection

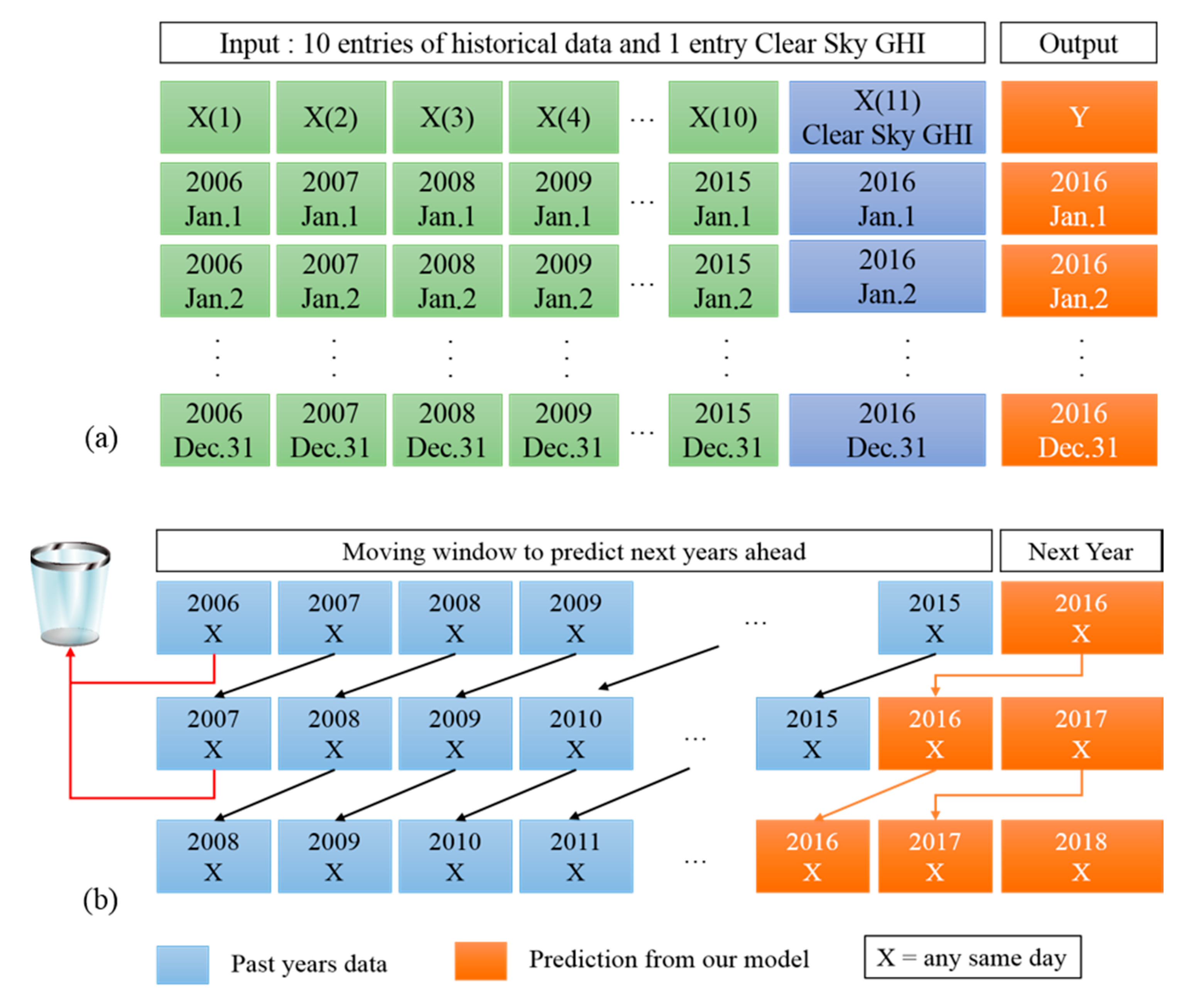

2.2. Data Management Technique

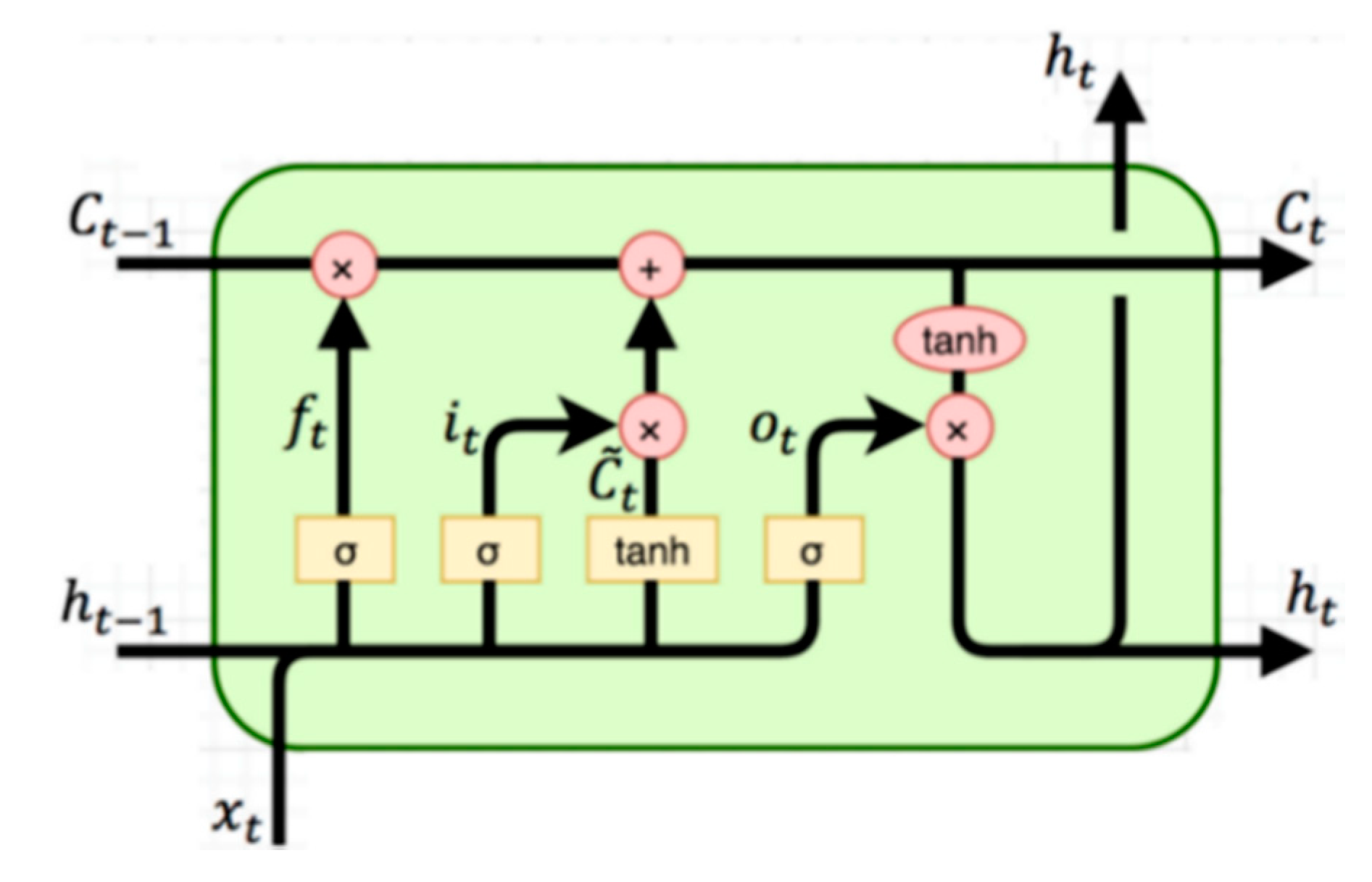

3. Deep Learning Model

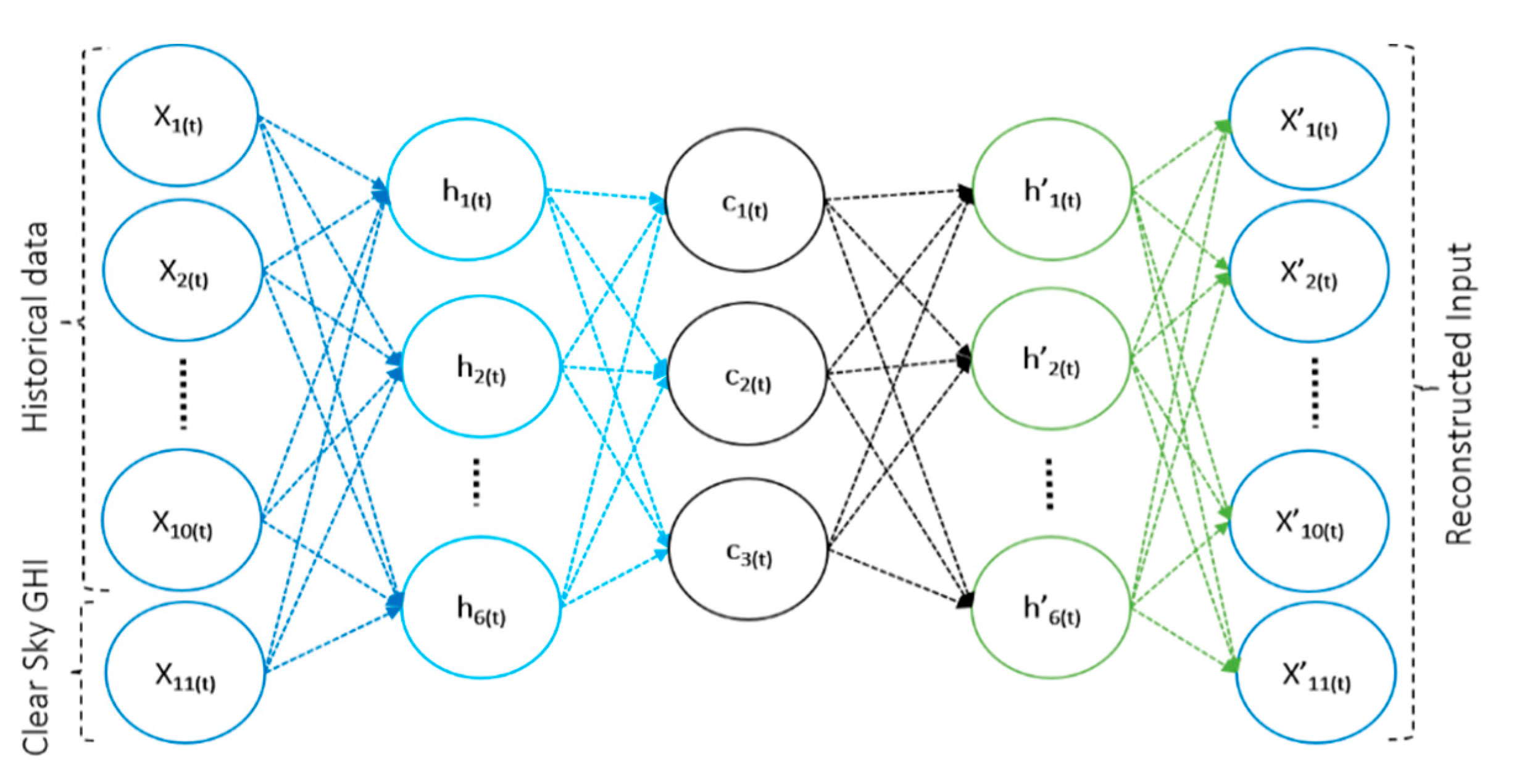

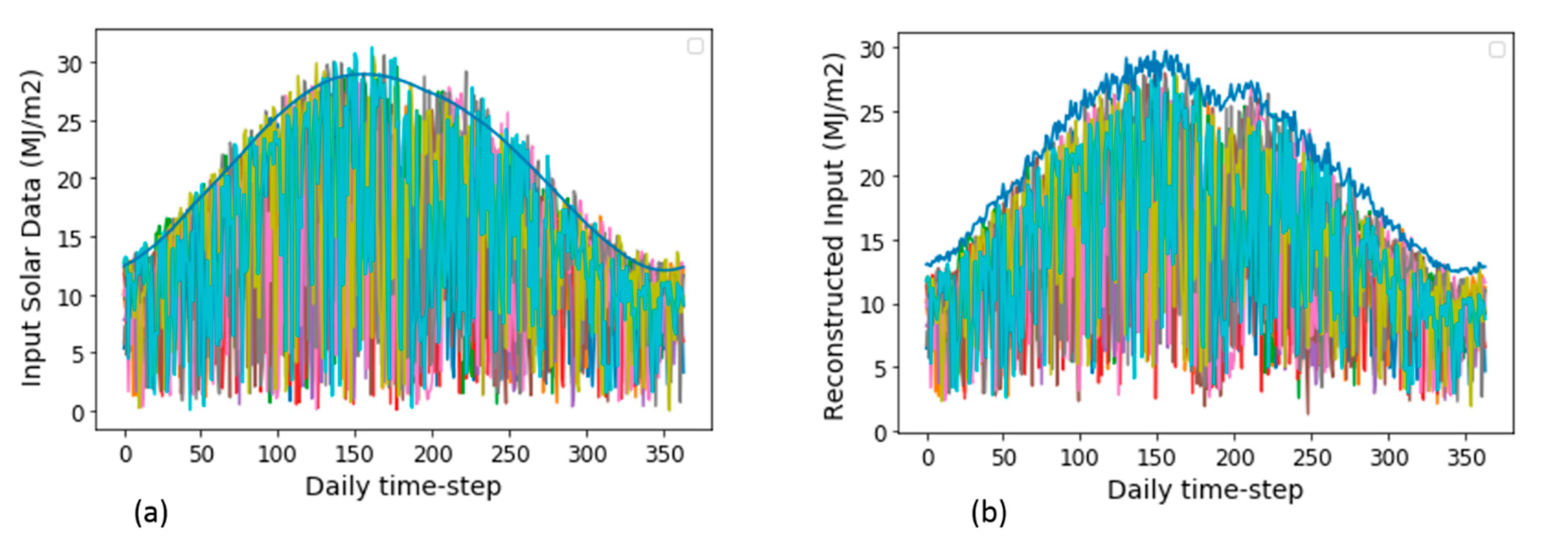

3.1. Auto-Encoder (AE)

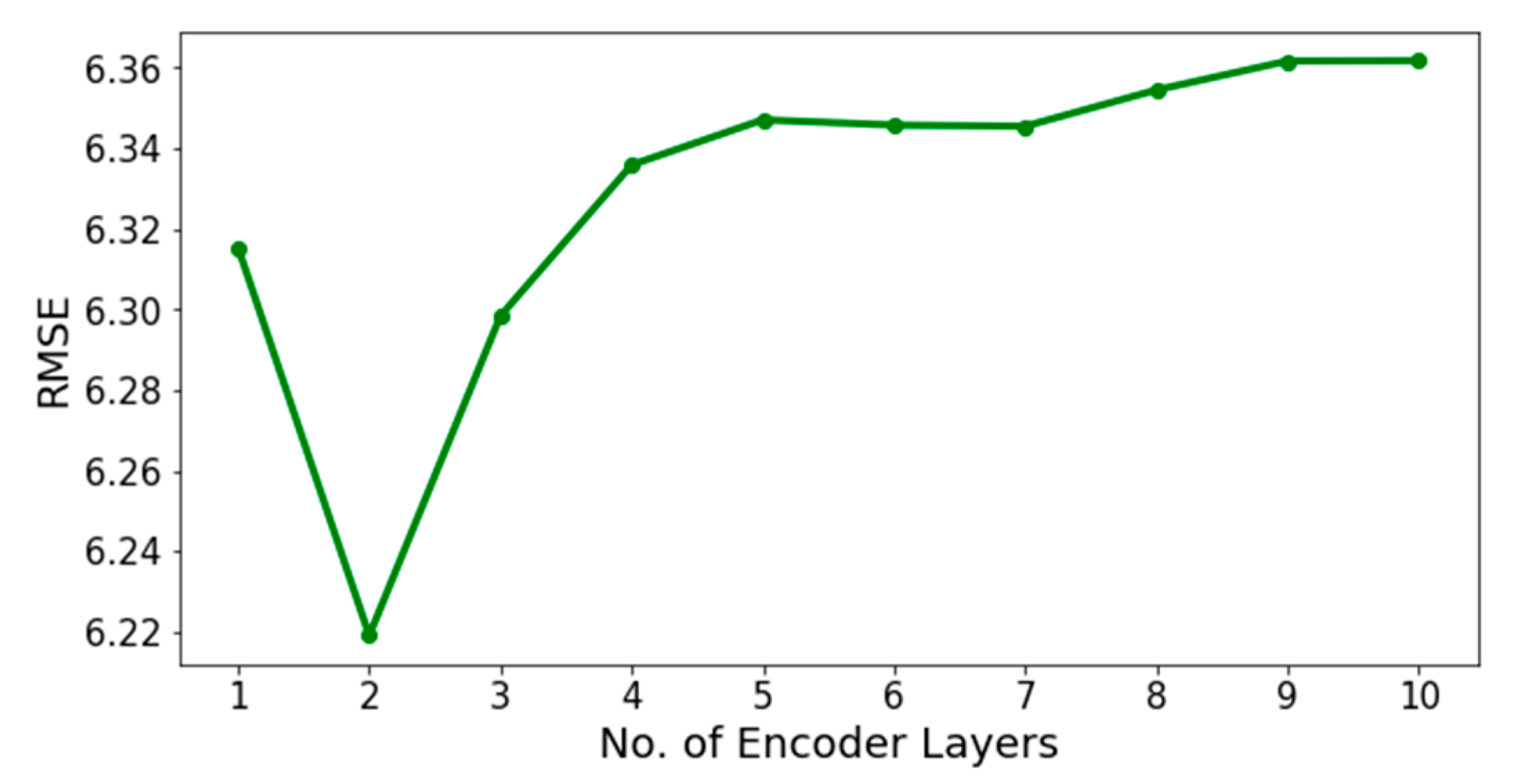

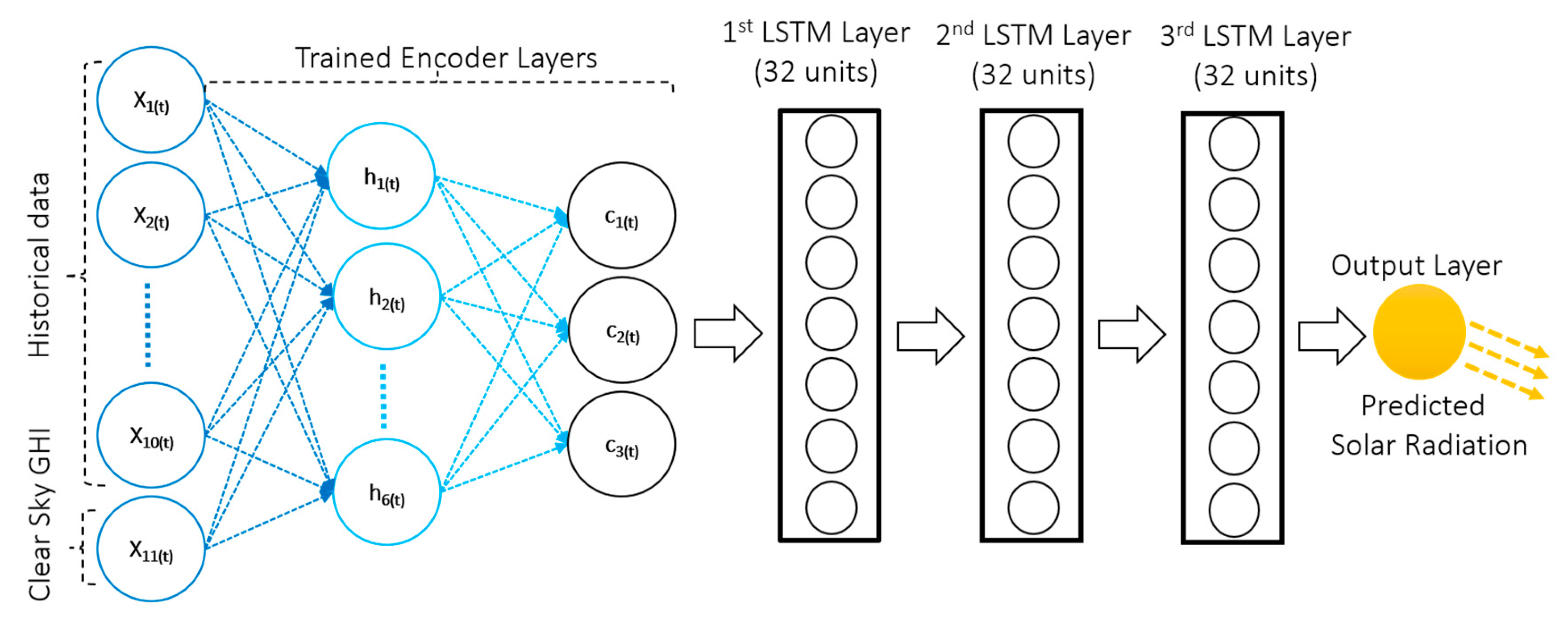

3.2. Proposed AE-LSTM Model

4. Experiments and Results

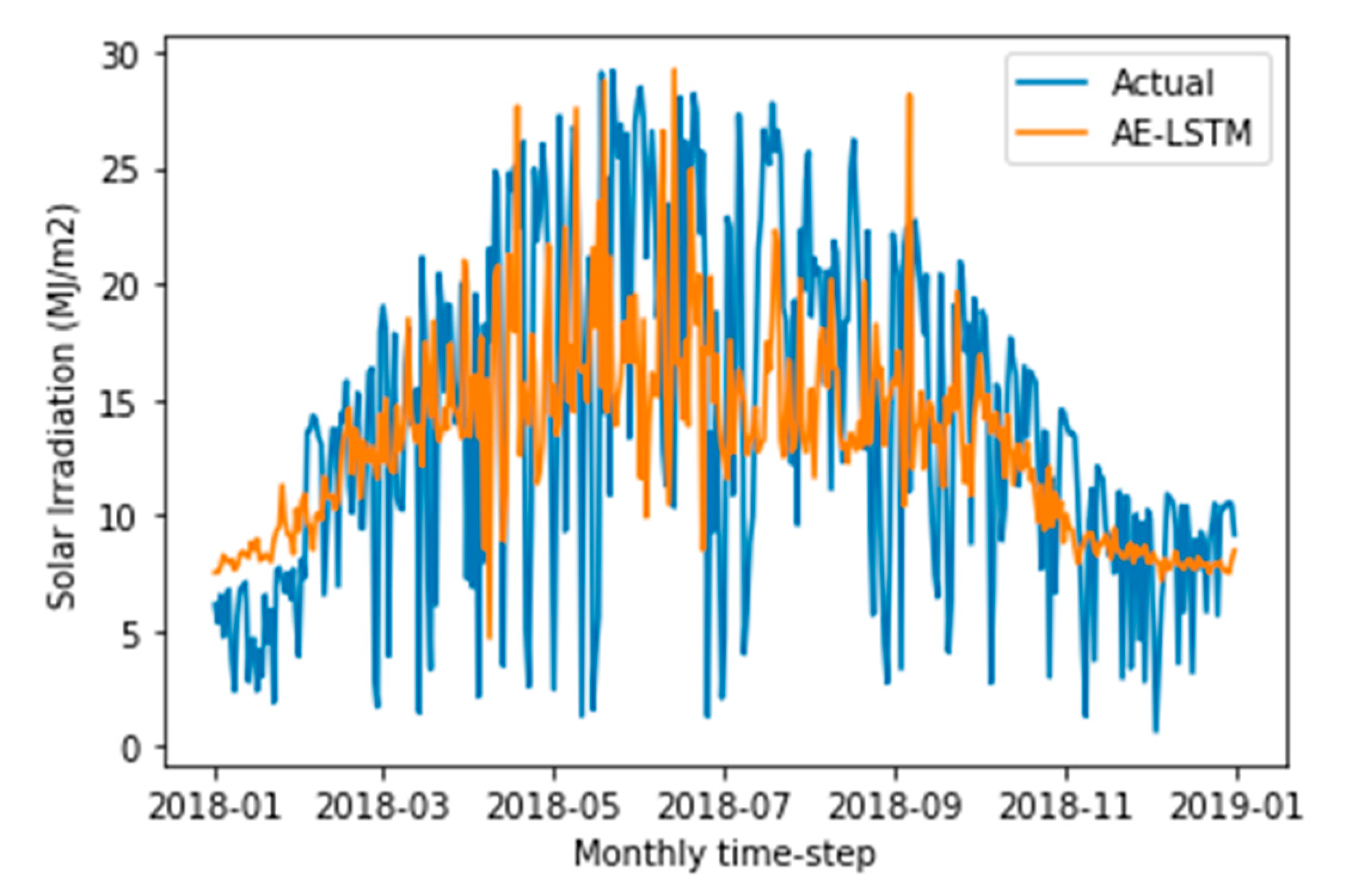

4.1. Solar Radiation Forecasting

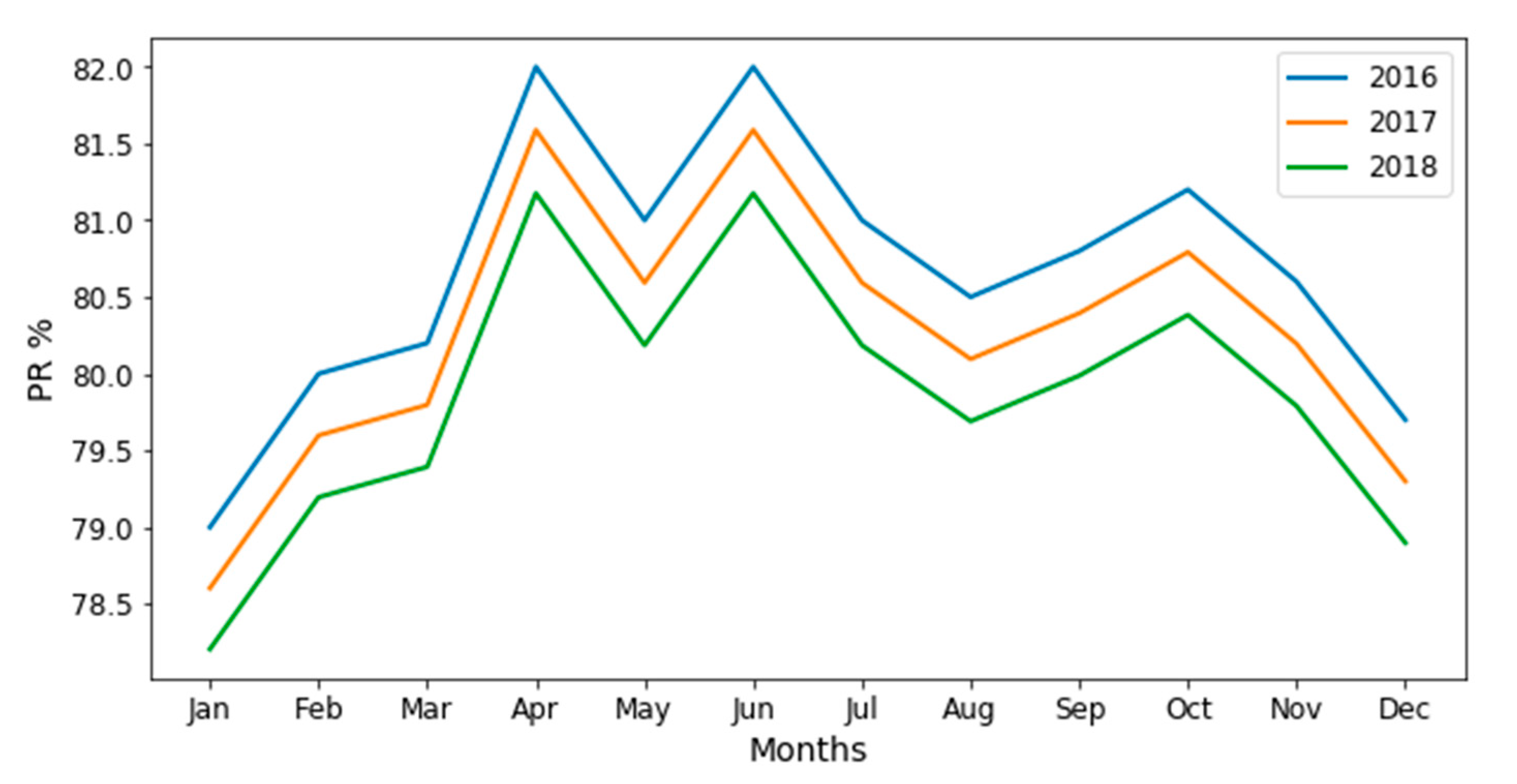

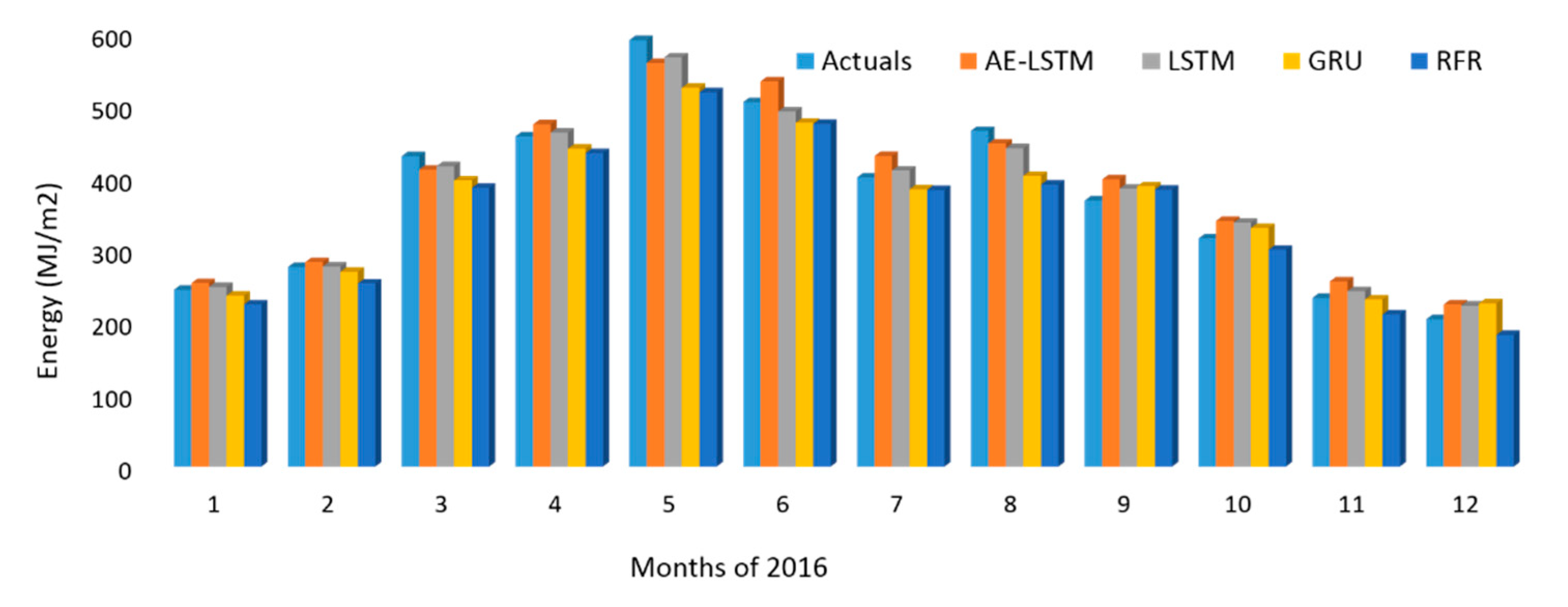

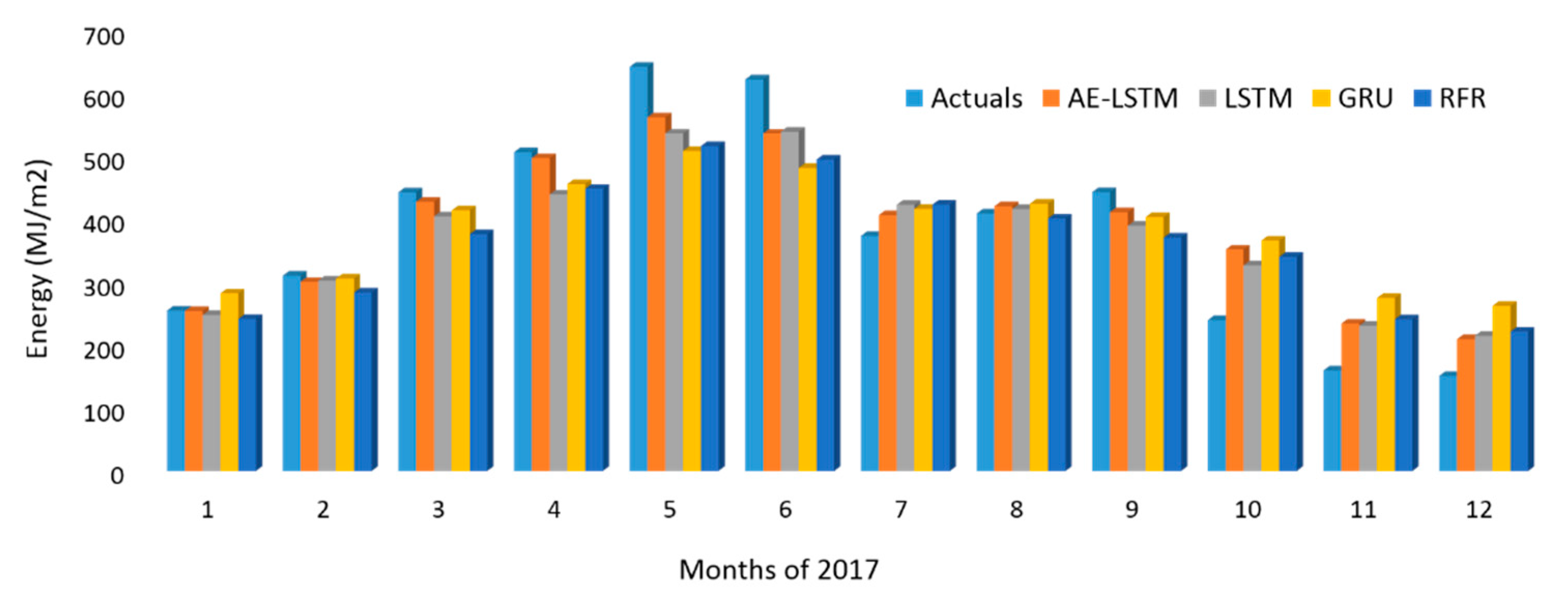

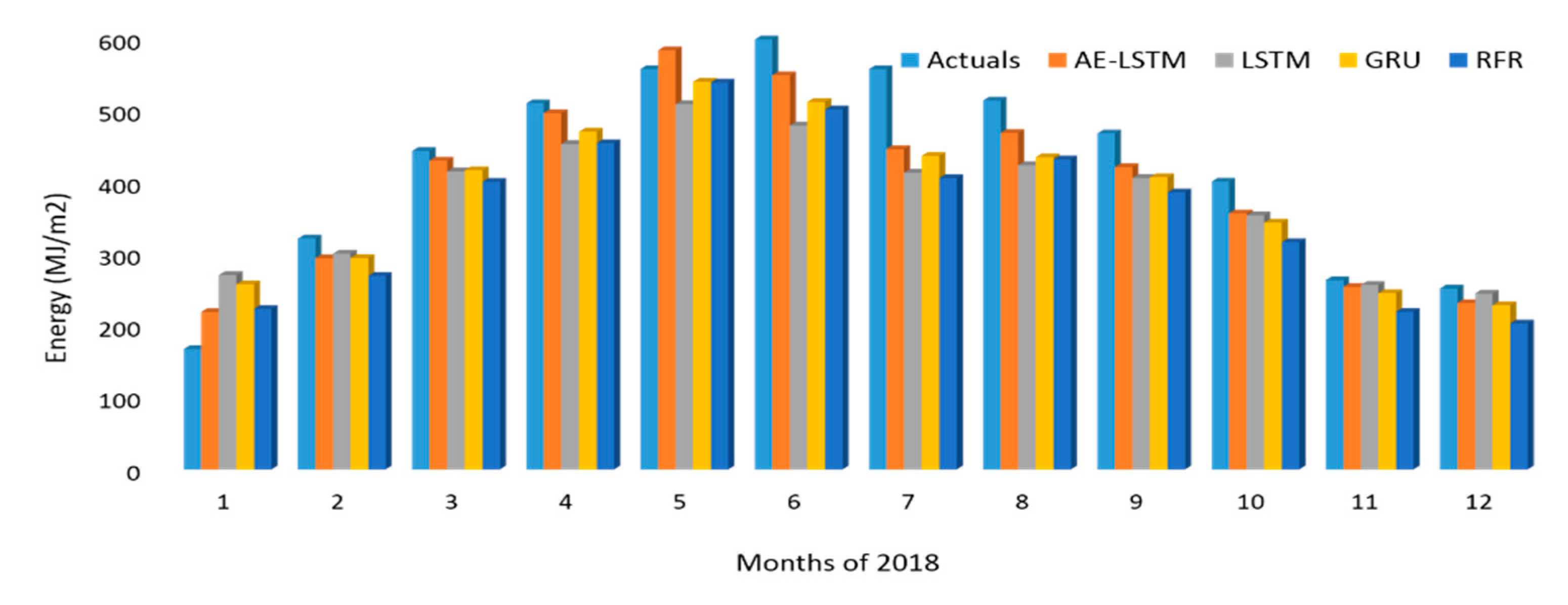

4.2. Degradation Rate Influenced Energy Estimation of PV System

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Parameter | Value |
|---|---|
| Adam optimizer (learning rate) | 0.001 |
| Batch size | 16 |
| Epochs | 150 |
| Activity regularizer (L1) | 0.0001 |
| Dropout rate | 0.2 |
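The hyperparameter table lists an L1 activity regularizer. In a sparse autoencoder, this term penalizes the magnitude of the encoder's hidden activations rather than its weights. The sketch below shows how such a penalty could enter the training objective. It makes two assumptions that are not stated here:

- The reconstruction term is mean squared error.
- Both terms are averaged over the batch. Frameworks differ in how they scale activity penalties.

It does not reproduce the optimizer, batch size, epochs or dropout. The names `mse` and `sparse_ae_loss` are hypothetical.

```python
from typing import Sequence

# L1 activity-regularizer weight, taken from the hyperparameter table.
L1_WEIGHT = 0.0001


def mse(x: Sequence[float], x_hat: Sequence[float]) -> float:
    """Mean squared reconstruction error for one sample."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


def sparse_ae_loss(
    batch_x: Sequence[Sequence[float]],
    batch_x_hat: Sequence[Sequence[float]],
    batch_codes: Sequence[Sequence[float]],
    l1_weight: float = L1_WEIGHT,
) -> float:
    """Reconstruction MSE plus an L1 penalty on the encoder activations.

    Both terms are averaged over the batch; this scaling is an assumption,
    not necessarily how any particular framework normalises the penalty.
    """
    n = len(batch_x)
    recon = sum(mse(x, xh) for x, xh in zip(batch_x, batch_x_hat)) / n
    # The penalty acts on the hidden code h, not on the weights, which
    # pushes most code units towards zero (a sparse representation).
    sparsity = sum(sum(abs(h) for h in code) for code in batch_codes) / n
    return recon + l1_weight * sparsity
```

With a perfect reconstruction, the loss reduces to the weighted sum of absolute code activations. This is why the regularizer favours codes with few non-zero units.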

| Metric | Year | RFR | LSTM | GRU | AE-LSTM |
|---|---|---|---|---|---|
| RMSE | 2016 | 5.1201 | 4.7768 | 4.8233 | 4.7577 |
| RMSE | 2017 | 5.6705 | 5.3696 | 5.3315 | 5.3060 |
| RMSE | 2018 | 6.5817 | 6.3750 | 6.3313 | 6.2191 |
| MAE | 2016 | 3.9595 | 3.7845 | 3.8760 | 3.7358 |
| MAE | 2017 | 4.5513 | 4.2836 | 4.3134 | 4.2809 |
| MAE | 2018 | 5.0418 | 4.9738 | 5.0329 | 4.9521 |
| R² | 2016 | 0.2767 | 0.3723 | 0.3550 | 0.3749 |
| R² | 2017 | 0.3472 | 0.4349 | 0.4283 | 0.4381 |
| R² | 2018 | 0.2585 | 0.2779 | 0.2672 | 0.3034 |
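The three metrics in the RMSE/MAE/R² table follow standard definitions. The minimal sketch below shows how they are computed from paired observed and predicted values. The time resolution and units of the evaluated series are not stated in this excerpt, so the functions are deliberately unit-agnostic. For RMSE and MAE lower is better; for R², values closer to 1 are better.

```python
import math
from typing import Sequence


def rmse(y: Sequence[float], y_hat: Sequence[float]) -> float:
    """Root mean squared error: penalises large errors more heavily."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(y, y_hat)) / len(y))


def mae(y: Sequence[float], y_hat: Sequence[float]) -> float:
    """Mean absolute error: average magnitude of the errors."""
    return sum(abs(a - b) for a, b in zip(y, y_hat)) / len(y)


def r2_score(y: Sequence[float], y_hat: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_y = sum(y) / len(y)
    ss_res = sum((a - b) ** 2 for a, b in zip(y, y_hat))
    ss_tot = sum((a - mean_y) ** 2 for a in y)
    return 1.0 - ss_res / ss_tot
```

For example, observations `[1, 2, 3, 4]` against predictions `[2, 2, 3, 3]` give an MAE of 0.5, an RMSE of about 0.707 and an R² of 0.6.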

| Year | Actual (MJ/m²) | RFR (MJ/m²) | GRU (MJ/m²) | LSTM (MJ/m²) | AE-LSTM (MJ/m²) |
|---|---|---|---|---|---|
| 2018 | 5078.36 | 4779.46 | 4791.15 | 4869.95 | 4881.474 |
| 2017 | 4577.29 | 4326.26 | 4430.23 | 4443.65 | 4527.382 |
| 2016 | 4520.88 | 4377.96 | 4450.80 | 4422.67 | 4589.863 |
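The annual totals are easier to compare as signed percentage deviations from the actual value. The short sketch below does this using only the numbers in the table. The names `ANNUAL_TOTALS`, `percent_error` and `error_table` are hypothetical helpers introduced for illustration.

```python
from typing import Dict

# Annual totals (MJ/m²) copied from the table above.
ANNUAL_TOTALS: Dict[int, Dict[str, float]] = {
    2018: {"Actual": 5078.36, "RFR": 4779.46, "GRU": 4791.15, "LSTM": 4869.95, "AE-LSTM": 4881.474},
    2017: {"Actual": 4577.29, "RFR": 4326.26, "GRU": 4430.23, "LSTM": 4443.65, "AE-LSTM": 4527.382},
    2016: {"Actual": 4520.88, "RFR": 4377.96, "GRU": 4450.80, "LSTM": 4422.67, "AE-LSTM": 4589.863},
}


def percent_error(predicted: float, actual: float) -> float:
    """Signed deviation in percent; negative means under-estimation."""
    return 100.0 * (predicted - actual) / actual


def error_table(totals: Dict[int, Dict[str, float]]) -> Dict[int, Dict[str, float]]:
    """Percentage deviation of every model from the actual total, per year."""
    return {
        year: {
            model: percent_error(value, row["Actual"])
            for model, value in row.items()
            if model != "Actual"
        }
        for year, row in totals.items()
    }


if __name__ == "__main__":
    for year, errors in sorted(error_table(ANNUAL_TOTALS).items()):
        print(year, {m: round(e, 2) for m, e in errors.items()})
```

On these values, the AE-LSTM annual total deviates from the actual total by roughly +1.53% (2016), -1.09% (2017) and -3.88% (2018). This is the smallest absolute deviation of the four models in each year.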

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Aslam, M.; Lee, J.-M.; Altaha, M.R.; Lee, S.-J.; Hong, S. AE-LSTM Based Deep Learning Model for Degradation Rate Influenced Energy Estimation of a PV System. Energies 2020, 13, 4373. https://doi.org/10.3390/en13174373