Optimization of a Stirling Engine by Variable-Step Simplified Conjugate-Gradient Method and Neural Network Training Algorithm

Abstract

1. Introduction

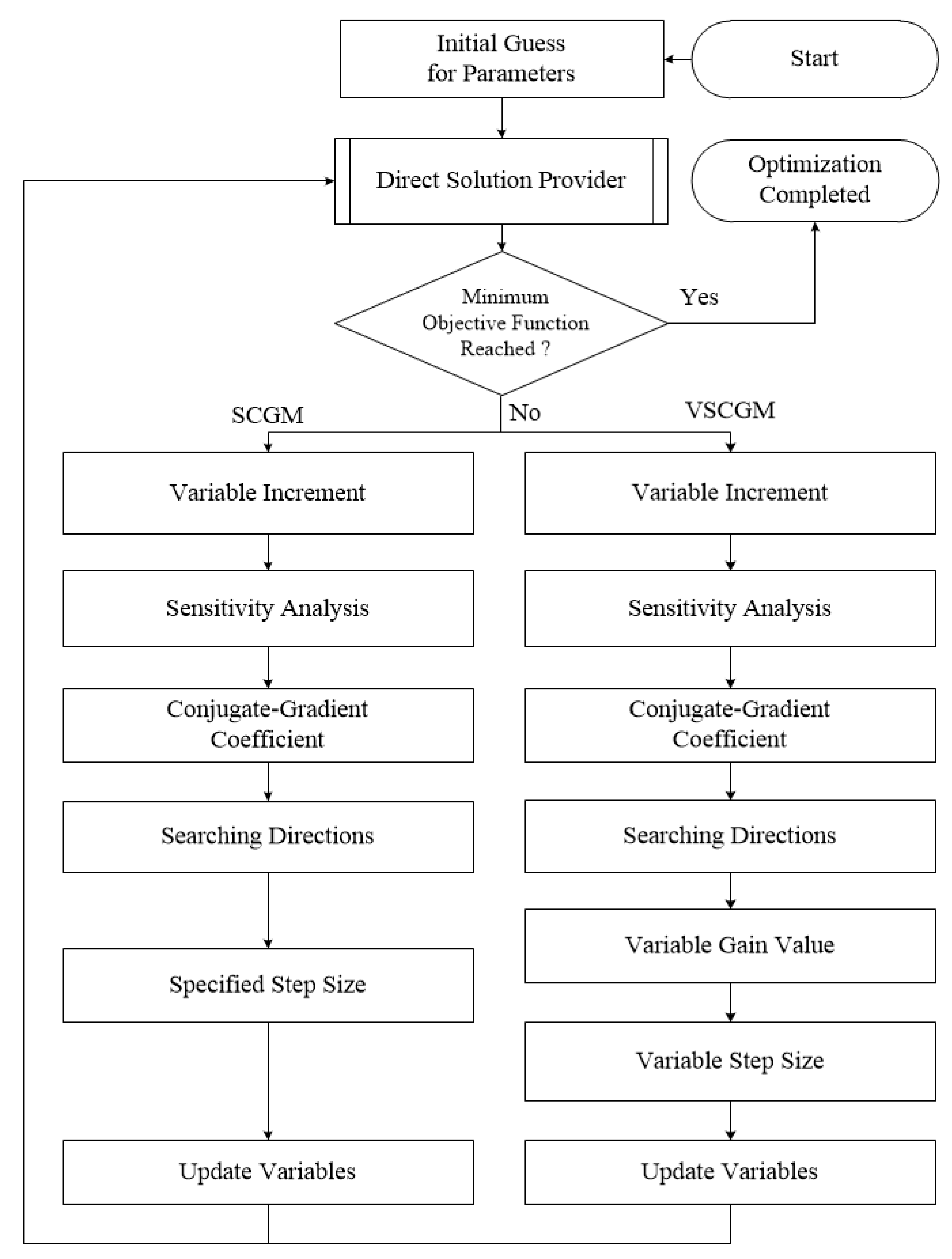

2. Optimization Methods

2.1. CGM Method

2.2. SCGM Method

2.3. VSCGM Method

3. Neural Network Algorithm

3.1. CFD Module Generating Dataset of Training

3.2. Neural Network

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Item | Setting |
|---|---|
| Turbulence model | Realizable k-ε model |
| Porous medium model | Darcy–Forchheimer law |
| Pressure-velocity coupling | PISO |
| Spatial discretization | Second-order upwind scheme |
| Equation of state | Soave–Redlich–Kwong real-gas model |
| Number of elements | 1,141,047 |

| Parameter | Value |
|---|---|
| Phase angle θph (deg.) | 90 |
| Piston diameter Dp (m) | 0.17 |
| Porosity ϕ | 0.9 |
| Rotation speed ω (rpm) | 100 |
| Displacer stroke sd (m) | 0.04 |
| Piston stroke sp (m) | 0.08 |
| Charged pressure Pch (bar) | 5 |
| Equilibrium position of piston xp (m) | 0.196 |
| Heating temperature TH (K) | 423 |
| Cooling temperature TL (K) | 300 |
| Working fluid | Helium |
| Indicated power (W) | 161.616 |
| Thermal efficiency (%) | 16.532 |
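
A few reference quantities follow directly from the baseline values in the table above. The sketch below computes the Carnot limit between TH and TL, the indicated work per cycle, and the implied heat input rate. It assumes that thermal efficiency is defined as indicated power divided by heat input rate, which this section does not state explicitly; all variable names are illustrative.

```python
# Baseline values copied from the table above.
T_H = 423.0            # heating temperature, K
T_L = 300.0            # cooling temperature, K
speed_rpm = 100.0      # rotation speed, rpm
power_W = 161.616      # indicated power, W
eff_percent = 16.532   # thermal efficiency, %

# Carnot limit for an engine working between T_H and T_L.
carnot_eff = 1.0 - T_L / T_H

# One cycle per revolution: work per cycle = power / (revolutions per second).
work_per_cycle_J = power_W * 60.0 / speed_rpm

# Assumption: efficiency = indicated power / heat input rate.
heat_input_W = power_W / (eff_percent / 100.0)

print(f"Carnot limit:     {100.0 * carnot_eff:.2f} %")
print(f"Work per cycle:   {work_per_cycle_J:.2f} J")
print(f"Heat input rate:  {heat_input_W:.1f} W")
```

Under these assumptions the baseline efficiency of 16.532% sits well below the Carnot limit of roughly 29%, which is the expected ordering for a real low-temperature-differential engine.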

| Case | ω [rpm] | Pch [bar] | θph [deg] | Dp [m] | xp [m] | sd [m] | TH [K] | ϕ | W [W] | ε [%] |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 100 | 1 | 90 | 0.17 | 0.196 | 0.04 | 423 | 0.9 | 33.495 | 10.417 |
| 2 | 100 | 2 | 90 | 0.17 | 0.196 | 0.04 | 423 | 0.9 | 67.966 | 12.820 |
| 3 | 100 | 3 | 90 | 0.17 | 0.196 | 0.04 | 423 | 0.9 | 102.501 | 15.191 |
| 4 | 100 | 5 | 90 | 0.17 | 0.196 | 0.04 | 423 | 0.9 | 161.616 | 16.532 |
| 5 | 100 | 7 | 90 | 0.17 | 0.196 | 0.04 | 423 | 0.9 | 217.457 | 17.277 |
| 6 | 100 | 9 | 90 | 0.17 | 0.196 | 0.04 | 423 | 0.9 | 271.267 | 17.794 |
| 7 | 100 | 5 | 70 | 0.17 | 0.196 | 0.04 | 423 | 0.9 | 142.664 | 15.556 |
| 8 | 100 | 5 | 80 | 0.17 | 0.196 | 0.04 | 423 | 0.9 | 154.294 | 16.211 |
| 9 | 100 | 5 | 95 | 0.17 | 0.196 | 0.04 | 423 | 0.9 | 162.973 | 16.638 |
| 10 | 100 | 5 | 97.5 | 0.17 | 0.196 | 0.04 | 423 | 0.9 | 163.776 | 16.686 |
| 11 | 100 | 5 | 100 | 0.17 | 0.196 | 0.04 | 423 | 0.9 | 164.183 | 16.722 |
| 12 | 100 | 5 | 102.5 | 0.17 | 0.196 | 0.04 | 423 | 0.9 | 165.674 | 16.985 |
| 13 | 100 | 5 | 105 | 0.17 | 0.196 | 0.04 | 423 | 0.9 | 168.071 | 17.375 |
| 14 | 100 | 5 | 107.5 | 0.17 | 0.196 | 0.04 | 423 | 0.9 | 164.156 | 17.109 |
| 15 | 100 | 5 | 110 | 0.17 | 0.196 | 0.04 | 423 | 0.9 | 160.242 | 16.838 |
| 16 | 100 | 5 | 90 | 0.17 | 0.196 | 0.04 | 470 | 0.9 | 228.422 | 19.921 |
| 17 | 100 | 5 | 90 | 0.17 | 0.196 | 0.04 | 500 | 0.9 | 264.706 | 21.262 |
| 18 | 100 | 5 | 90 | 0.17 | 0.196 | 0.04 | 550 | 0.9 | 313.565 | 22.930 |
| 19 | 100 | 5 | 90 | 0.17 | 0.196 | 0.04 | 600 | 0.9 | 346.658 | 23.281 |
| 20 | 100 | 5 | 90 | 0.17 | 0.196 | 0.04 | 700 | 0.9 | 432.119 | 23.655 |
| 21 | 100 | 5 | 90 | 0.17 | 0.196 | 0.04 | 423 | 0.7 | 158.124 | 12.567 |
| 22 | 100 | 5 | 90 | 0.17 | 0.196 | 0.04 | 423 | 0.8 | 160.069 | 13.629 |
| 23 | 100 | 5 | 90 | 0.17 | 0.196 | 0.04 | 423 | 0.9 | 161.615 | 16.532 |
| 24 | 100 | 5 | 90 | 0.17 | 0.196 | 0.04 | 423 | 0.915 | 164.067 | 17.488 |
| 25 | 100 | 5 | 90 | 0.17 | 0.196 | 0.04 | 423 | 0.93 | 166.815 | 18.518 |
| 26 | 100 | 5 | 90 | 0.17 | 0.196 | 0.04 | 423 | 0.95 | 164.633 | 18.900 |
| 27 | 100 | 5 | 90 | 0.17 | 0.196 | 0.04 | 423 | 0.97 | 162.767 | 20.276 |
| 28 | 100 | 5 | 90 | 0.17 | 0.196 | 0.04 | 423 | 0.99 | 158.087 | 22.235 |
| 29 | 30 | 5 | 90 | 0.17 | 0.196 | 0.04 | 423 | 0.9 | 22.368 | 11.913 |
| 30 | 60 | 5 | 90 | 0.17 | 0.196 | 0.04 | 423 | 0.9 | 87.817 | 17.659 |
| 31 | 80 | 5 | 90 | 0.17 | 0.196 | 0.04 | 423 | 0.9 | 132.797 | 17.840 |
| 32 | 90 | 5 | 90 | 0.17 | 0.196 | 0.04 | 423 | 0.9 | 147.652 | 17.103 |
| 33 | 120 | 5 | 90 | 0.17 | 0.196 | 0.04 | 423 | 0.9 | 181.683 | 15.138 |
| 34 | 150 | 5 | 90 | 0.17 | 0.196 | 0.04 | 423 | 0.9 | 205.998 | 13.885 |
| 35 | 200 | 5 | 90 | 0.17 | 0.196 | 0.04 | 423 | 0.9 | 243.438 | 12.613 |
| 36 | 250 | 5 | 90 | 0.17 | 0.196 | 0.04 | 423 | 0.9 | 277.842 | 11.761 |
| 37 | 300 | 5 | 90 | 0.17 | 0.196 | 0.04 | 423 | 0.9 | 303.382 | 10.941 |
| 38 | 350 | 5 | 90 | 0.17 | 0.196 | 0.04 | 423 | 0.9 | 324.460 | 10.237 |
| 39 | 400 | 5 | 90 | 0.17 | 0.196 | 0.04 | 423 | 0.9 | 336.335 | 9.448 |
| 40 | 450 | 5 | 90 | 0.17 | 0.196 | 0.04 | 423 | 0.9 | 347.323 | 8.795 |
| 41 | 500 | 5 | 90 | 0.17 | 0.196 | 0.04 | 423 | 0.9 | 352.782 | 8.146 |
| 42 | 550 | 5 | 90 | 0.17 | 0.196 | 0.04 | 423 | 0.9 | 357.819 | 7.630 |
| 43 | 600 | 5 | 90 | 0.17 | 0.196 | 0.04 | 423 | 0.9 | 359.252 | 7.087 |
| 44 | 700 | 5 | 90 | 0.17 | 0.196 | 0.04 | 423 | 0.9 | 347.057 | 5.987 |
| 45 | 100 | 5 | 90 | 0.17 | 0.196 | 0.025 | 423 | 0.9 | 108.004 | 16.073 |
| 46 | 100 | 5 | 90 | 0.17 | 0.196 | 0.03 | 423 | 0.9 | 128.198 | 16.551 |
| 47 | 100 | 5 | 90 | 0.17 | 0.196 | 0.035 | 423 | 0.9 | 147.035 | 17.107 |
| 48 | 100 | 5 | 90 | 0.17 | 0.201 | 0.04 | 423 | 0.9 | 159.561 | 16.638 |
| 49 | 100 | 5 | 90 | 0.17 | 0.205 | 0.04 | 423 | 0.9 | 158.210 | 16.559 |
| 50 | 100 | 5 | 90 | 0.17 | 0.211 | 0.04 | 423 | 0.9 | 156.170 | 16.377 |
| 51 | 100 | 5 | 90 | 0.17 | 0.216 | 0.04 | 423 | 0.9 | 154.047 | 16.192 |
| 52 | 100 | 5 | 90 | 0.15 | 0.196 | 0.04 | 423 | 0.9 | 131.950 | 15.565 |
| 53 | 100 | 5 | 90 | 0.16 | 0.196 | 0.04 | 423 | 0.9 | 146.496 | 16.008 |
| 54 | 100 | 5 | 90 | 0.18 | 0.196 | 0.04 | 423 | 0.9 | 177.939 | 16.812 |
| 55 | 100 | 5 | 90 | 0.19 | 0.196 | 0.04 | 423 | 0.9 | 192.662 | 16.685 |
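
The case table above varies one parameter at a time around the baseline, so each sweep can be read on its own. As a minimal sketch, the snippet below takes the phase-angle sweep (cases 7, 8, 4, and 9 to 15, with all other parameters at baseline) and picks out the angle that gives the highest indicated power and the highest thermal efficiency. The list layout and variable names are illustrative and not taken from the paper.

```python
# (phase angle [deg], indicated power [W], thermal efficiency [%])
# Values copied from cases 7, 8, 4, 9-15 of the table above.
phase_sweep = [
    (70.0, 142.664, 15.556),
    (80.0, 154.294, 16.211),
    (90.0, 161.616, 16.532),
    (95.0, 162.973, 16.638),
    (97.5, 163.776, 16.686),
    (100.0, 164.183, 16.722),
    (102.5, 165.674, 16.985),
    (105.0, 168.071, 17.375),
    (107.5, 164.156, 17.109),
    (110.0, 160.242, 16.838),
]

# Row with the largest power, and row with the largest efficiency.
best_power = max(phase_sweep, key=lambda row: row[1])
best_eff = max(phase_sweep, key=lambda row: row[2])

print(f"Highest power:      {best_power[1]} W at {best_power[0]} deg")
print(f"Highest efficiency: {best_eff[2]} % at {best_eff[0]} deg")
```

The same pattern applies to the other single-parameter sweeps in the table (charged pressure, heating temperature, porosity, rotation speed, displacer stroke, piston position, and piston diameter).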

| Data set | Samples | MSE | R |
|---|---|---|---|
| Training (70%) | 2218 | 157.68 | 0.9935 |
| Validation (15%) | 475 | 29.38 | 0.9988 |
| Testing (15%) | 475 | 54.95 | 0.9977 |
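
The sample counts 2218, 475, and 475 in the table above are consistent with a 70/15/15 split of their total. The sketch below reproduces that arithmetic; the helper `split_counts` is hypothetical and is not the partitioning routine used by the authors, which this section does not describe.

```python
def split_counts(total, fractions):
    """Round each share, then assign any rounding remainder to the first subset."""
    counts = [round(total * f) for f in fractions]
    counts[0] += total - sum(counts)
    return counts


# Total number of samples, taken as the sum of the counts in the table.
total_samples = 2218 + 475 + 475

train, val, test = split_counts(total_samples, (0.70, 0.15, 0.15))
print(total_samples, train, val, test)
```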

| Parameter | Initial Design | Lower and Upper Bounds | Optimal Design |
|---|---|---|---|
| Rotation speed ω (rpm) | 100 | 30–700 | 218.399 |
| Phase angle θph (deg.) | 90 | 70–110 | 94.885 |
| Piston diameter Dp (m) | 0.17 | 0.15–0.19 | 0.19 |
| Displacer stroke sd (m) | 0.04 | 0.025–0.04 | 0.04 |
| Porosity ϕ | 0.9 | 0.7–0.99 | 0.99 |
| Indicated power output (W) | 161.616 | - | 327.980 |
| Thermal efficiency (%) | 16.532 | - | 17.399 |
| Minimum objective function | - | - | 0.002078 |
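
Two things can be read off the design table above with simple arithmetic: which optimal values coincide with a bound, and how much the outputs change relative to the initial design. The sketch below does both. The dictionary keys are illustrative names, and the tolerance-based bound check is an assumption of this example rather than part of the optimization method.

```python
import math

# name: (initial, lower bound, upper bound, optimal), copied from the table above
design = {
    "omega_rpm": (100.0, 30.0, 700.0, 218.399),
    "theta_ph_deg": (90.0, 70.0, 110.0, 94.885),
    "D_p_m": (0.17, 0.15, 0.19, 0.19),
    "s_d_m": (0.04, 0.025, 0.04, 0.04),
    "porosity": (0.9, 0.7, 0.99, 0.99),
}

# Design variables whose optimal value coincides with a lower or upper bound.
at_bound = [
    name
    for name, (_, lower, upper, optimal) in design.items()
    if math.isclose(optimal, lower) or math.isclose(optimal, upper)
]

# Relative gain in indicated power and absolute gain in thermal efficiency.
power_gain = (327.980 - 161.616) / 161.616
eff_gain_points = 17.399 - 16.532

print("Variables at a bound:", at_bound)
print(f"Power gain: {100.0 * power_gain:.1f} %")
print(f"Efficiency gain: {eff_gain_points:.3f} percentage points")
```

In this reading, piston diameter, displacer stroke, and porosity all end at their upper bounds, while rotation speed and phase angle settle at interior values.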

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Cheng, C.-H.; Lin, Y.-T. Optimization of a Stirling Engine by Variable-Step Simplified Conjugate-Gradient Method and Neural Network Training Algorithm. Energies 2020, 13, 5164. https://doi.org/10.3390/en13195164
