Research on the Estimate of Gas Hydrate Saturation Based on LSTM Recurrent Neural Network

Abstract

1. Introduction

2. Long Short-Term Memory (LSTM) Recurrent Neural Network

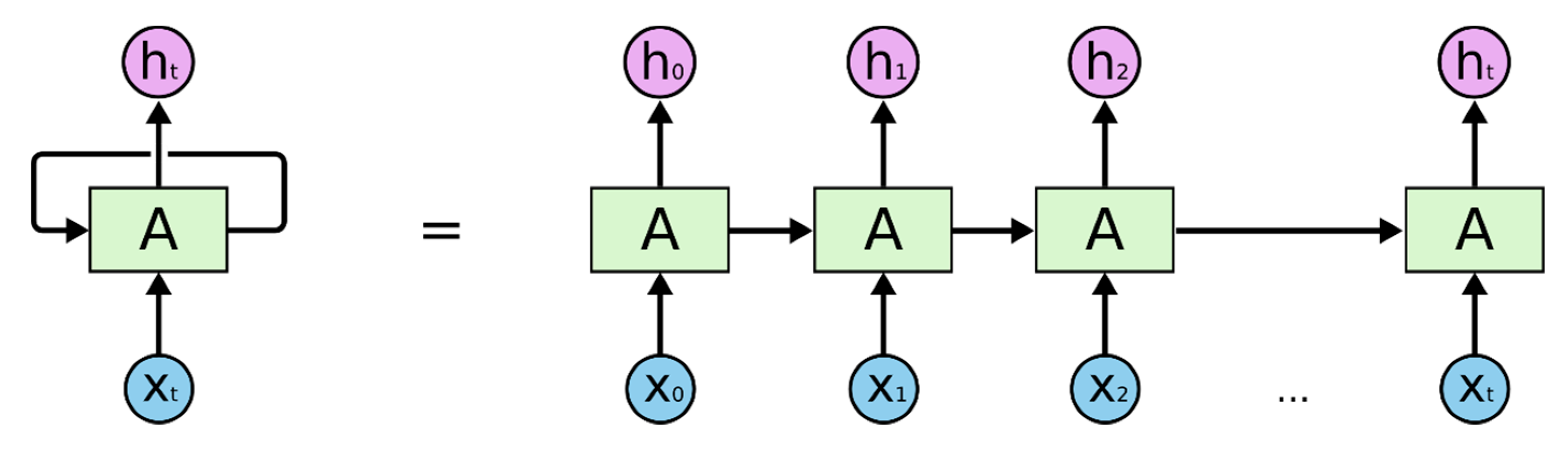

2.1. Recurrent Neural Network (RNN)

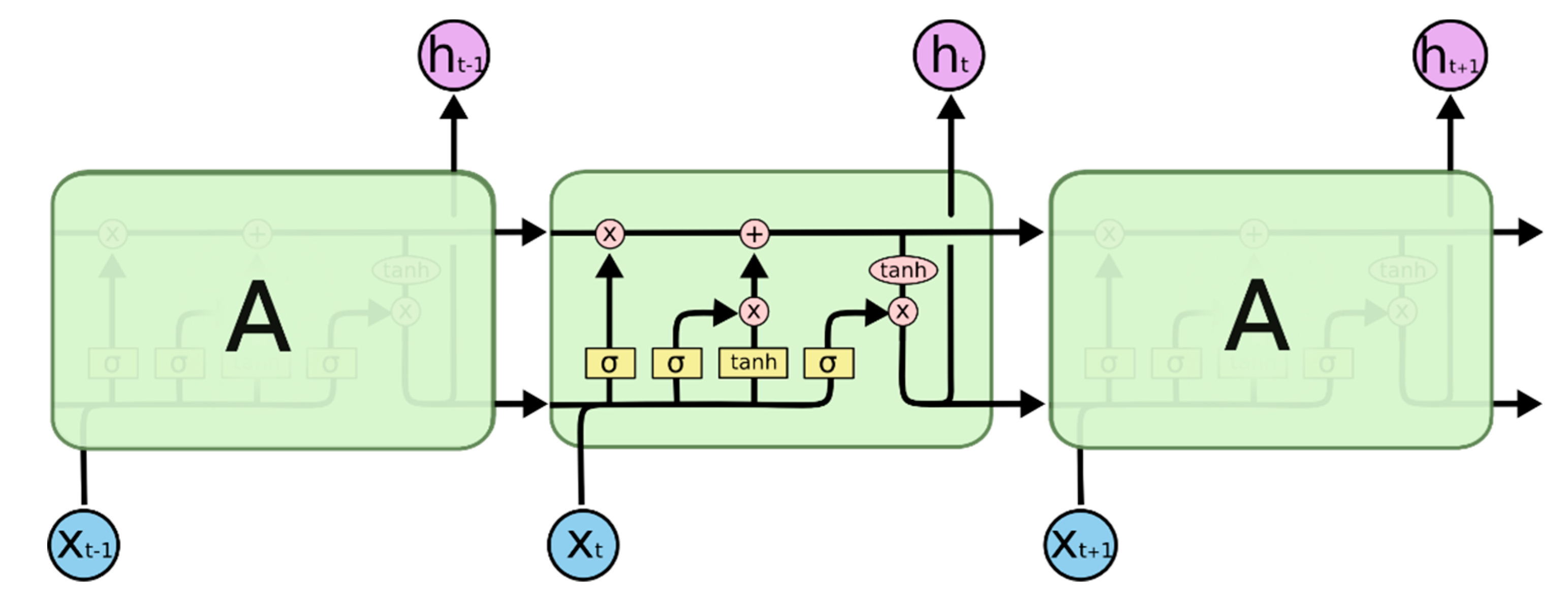

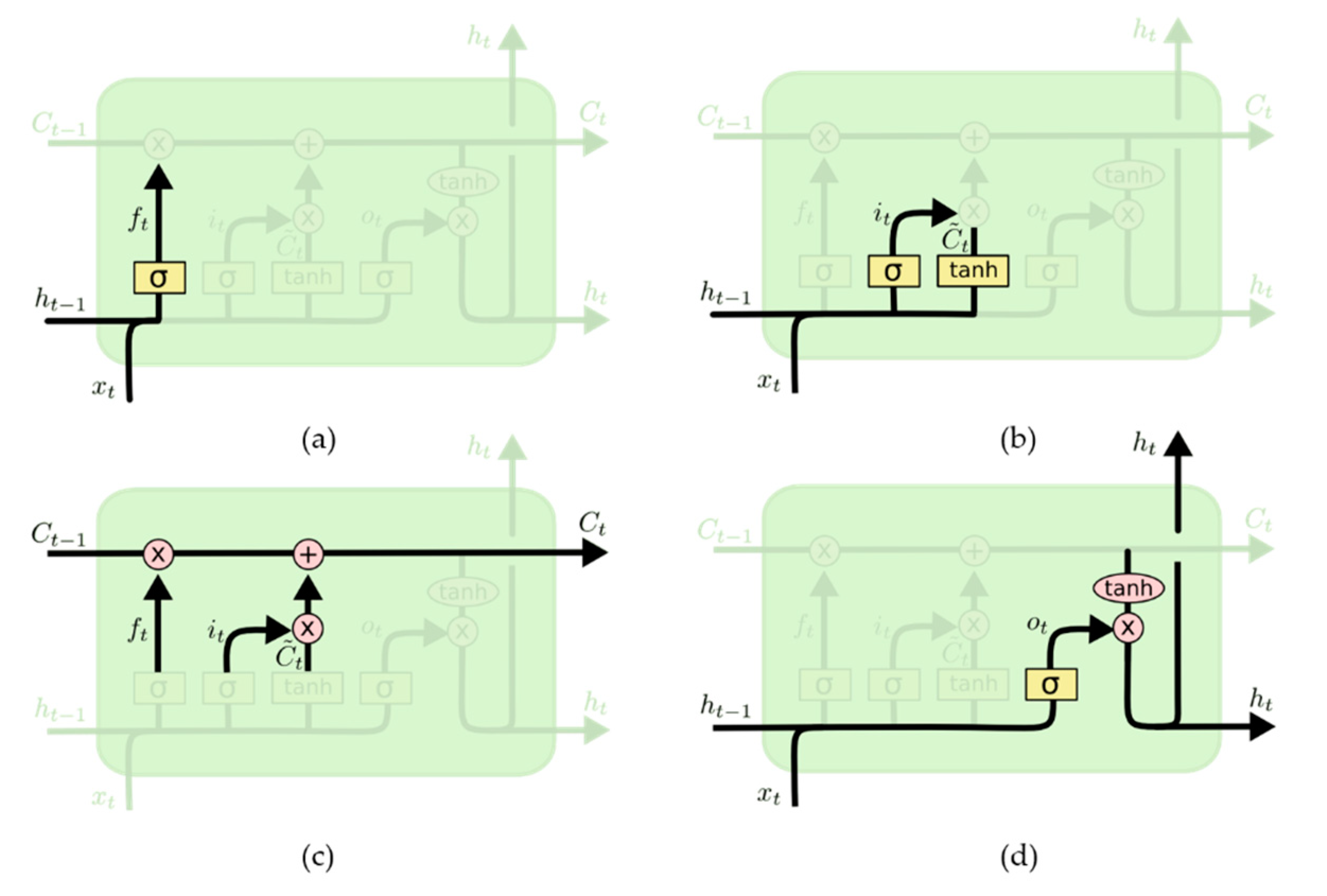

2.2. LSTM Recurrent Neural Network

3. Gas Hydrate Saturation Estimate

3.1. Geological Background

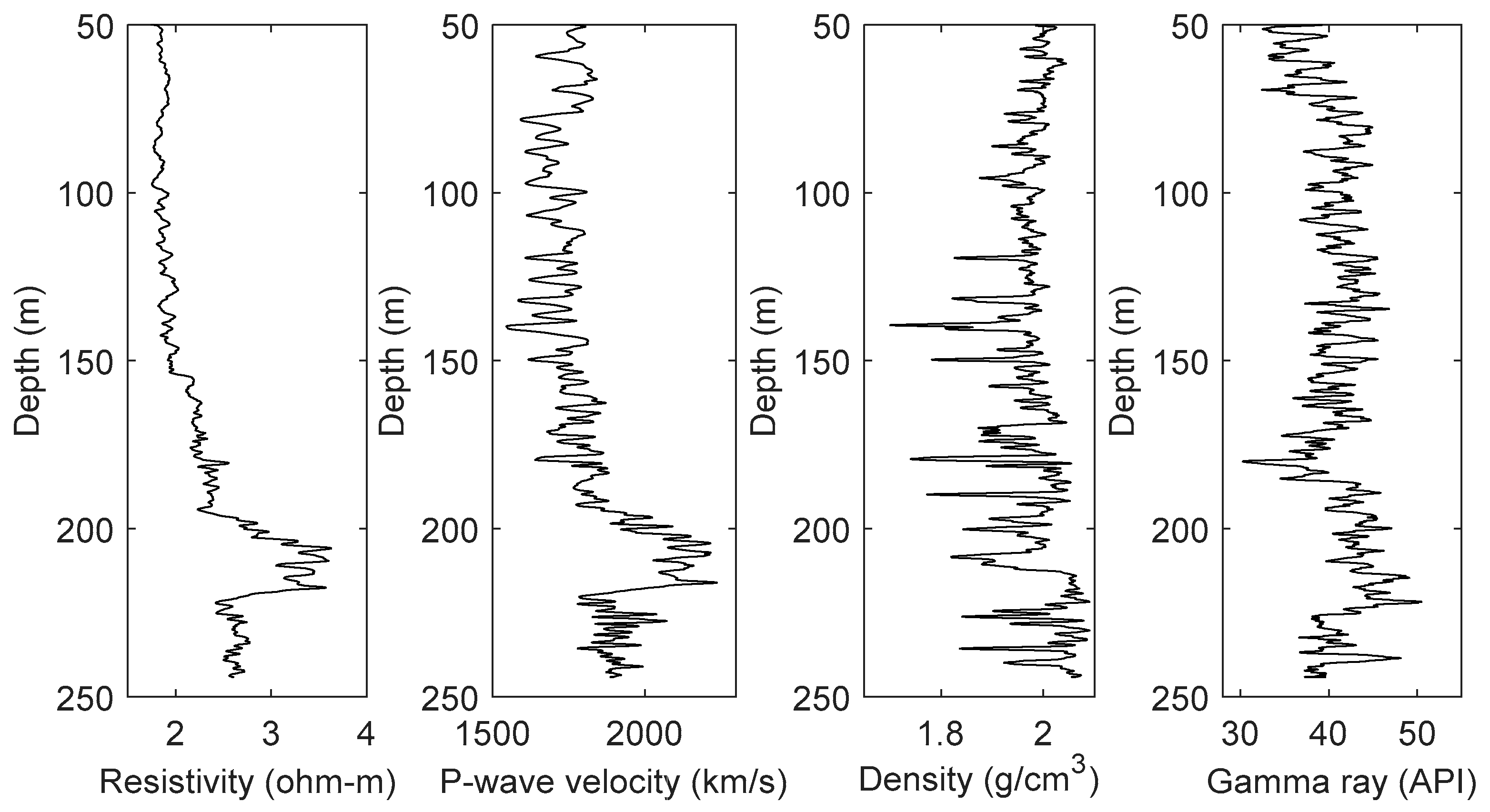

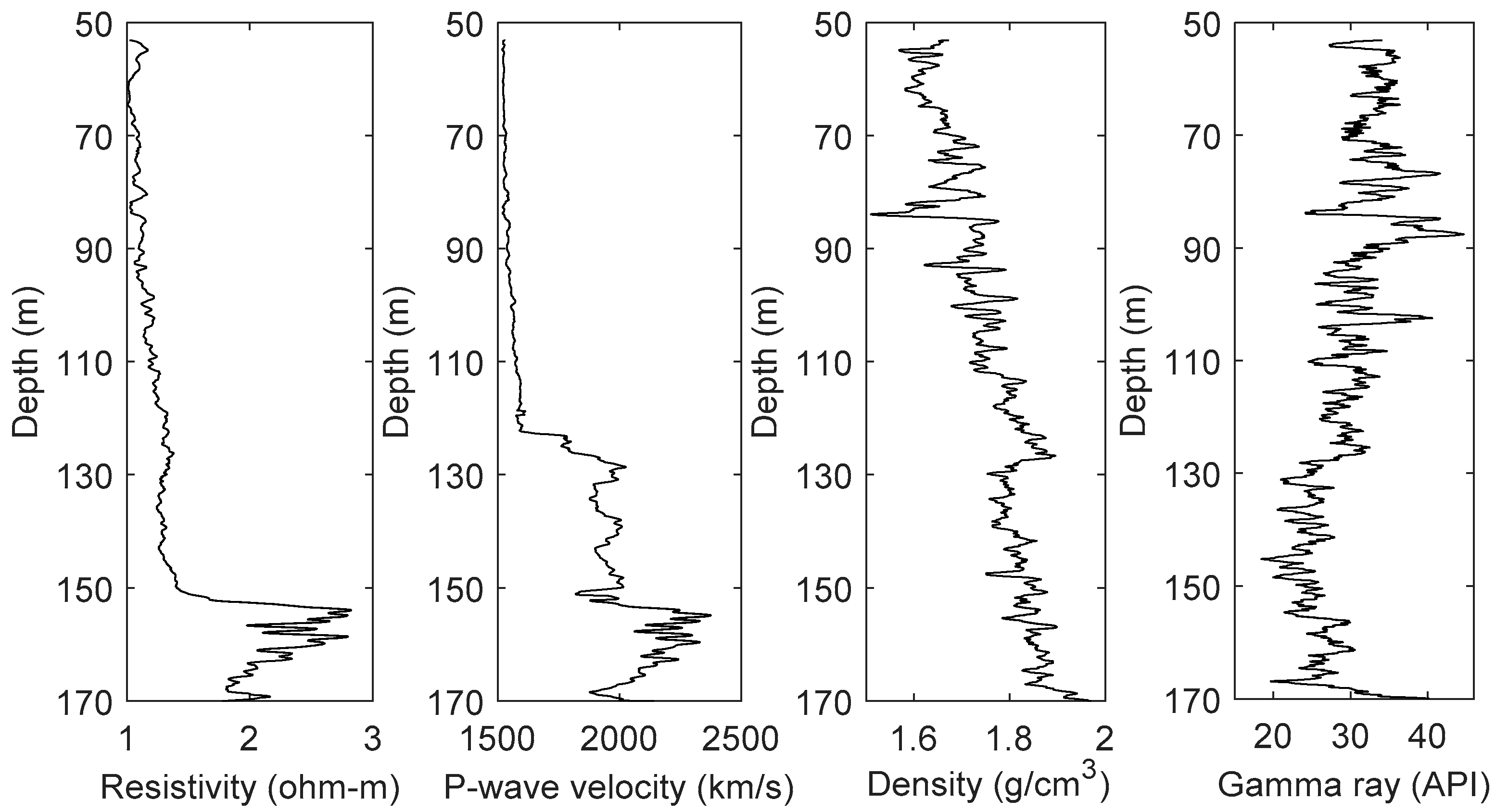

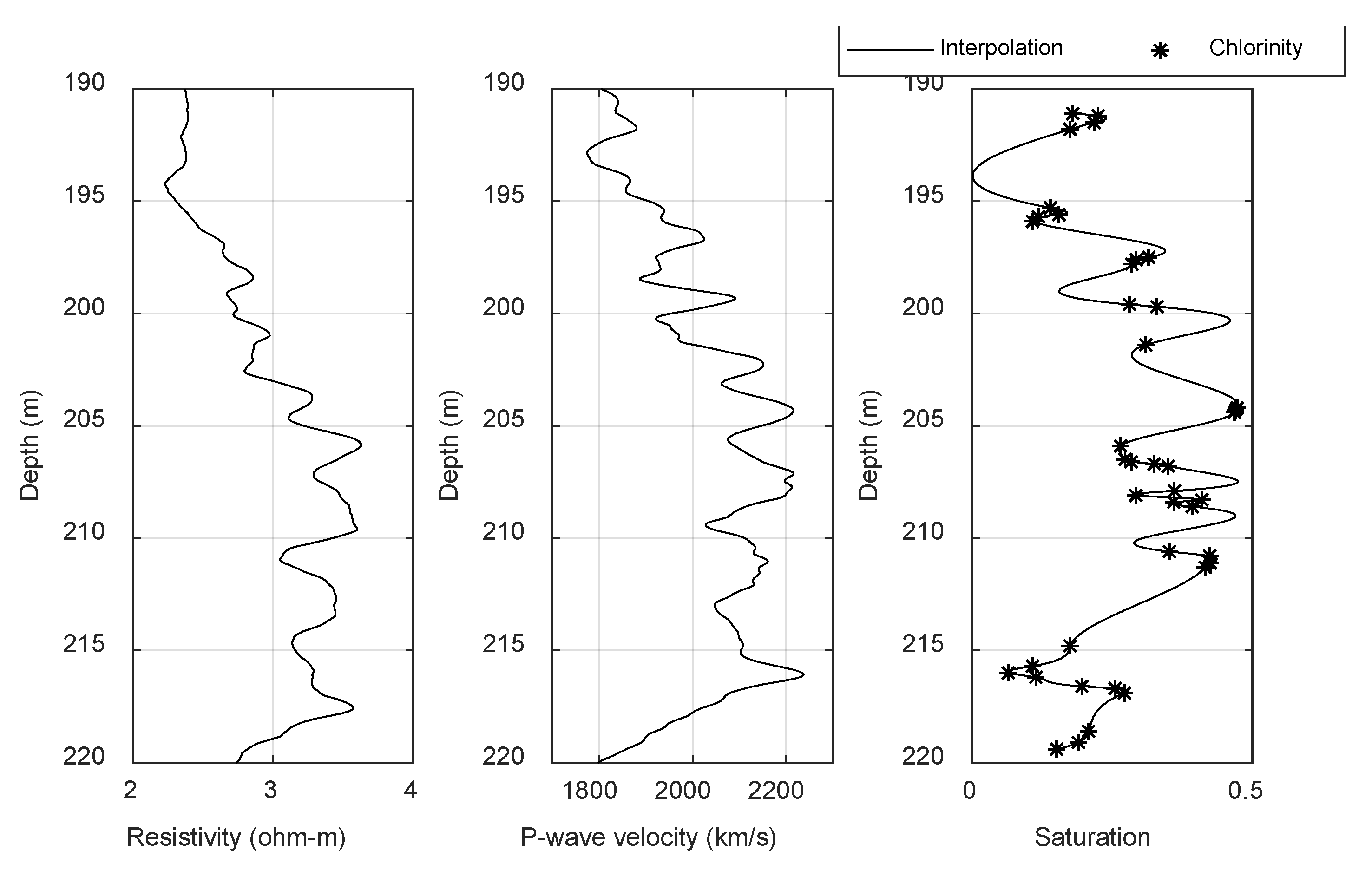

3.2. Well Logs

3.3. Data Preparation

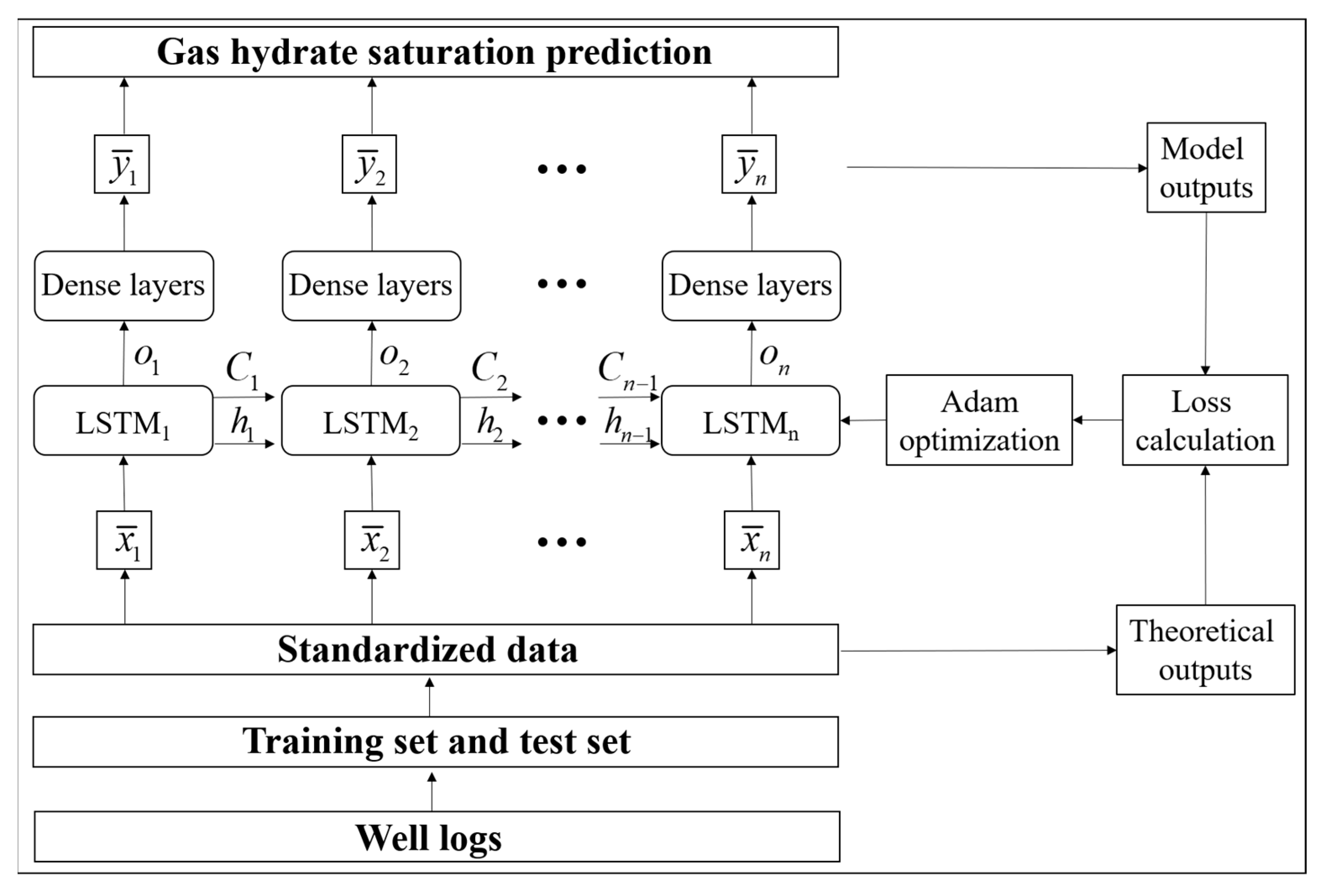

3.4. The Prediction Framework of the LSTM Recurrent Neural Network

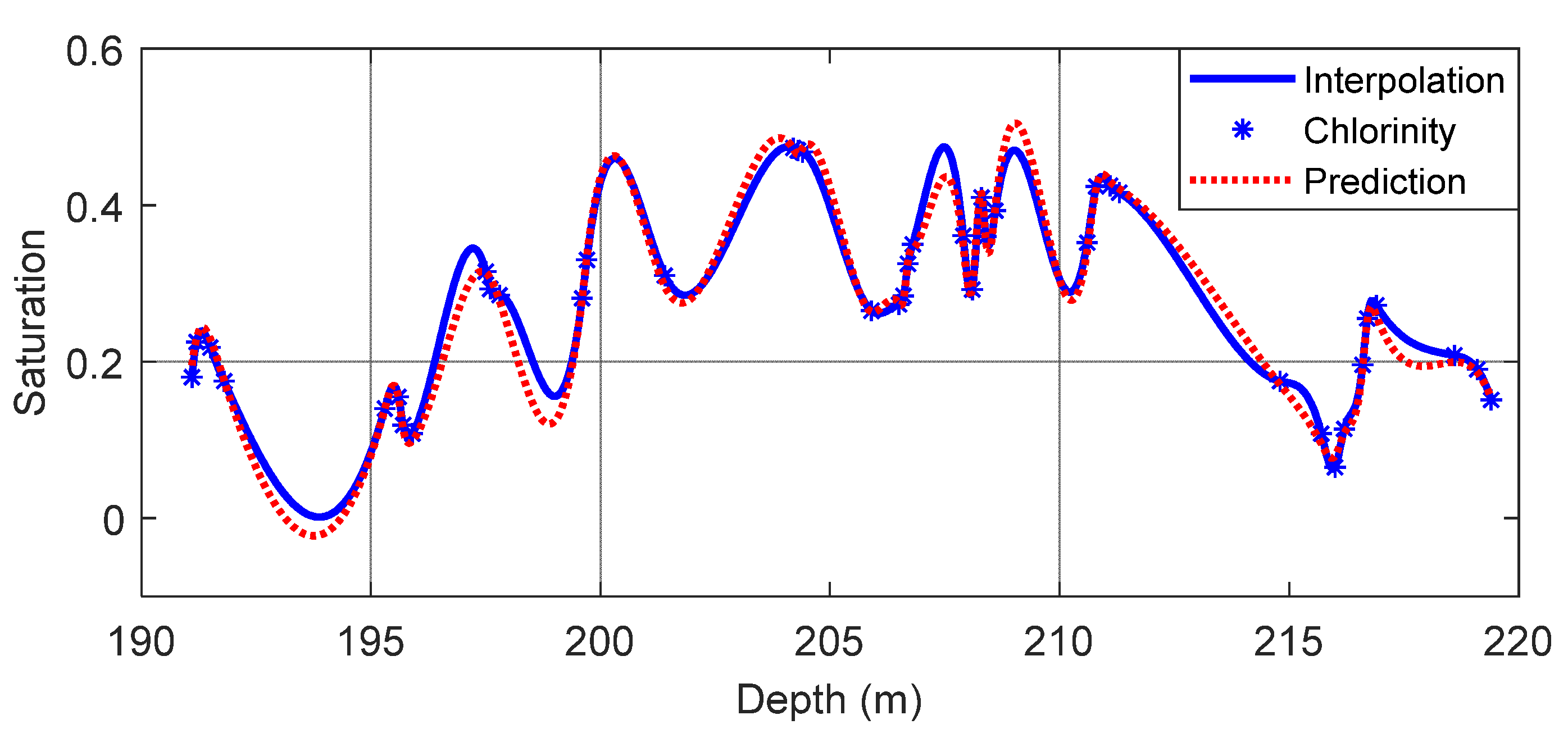

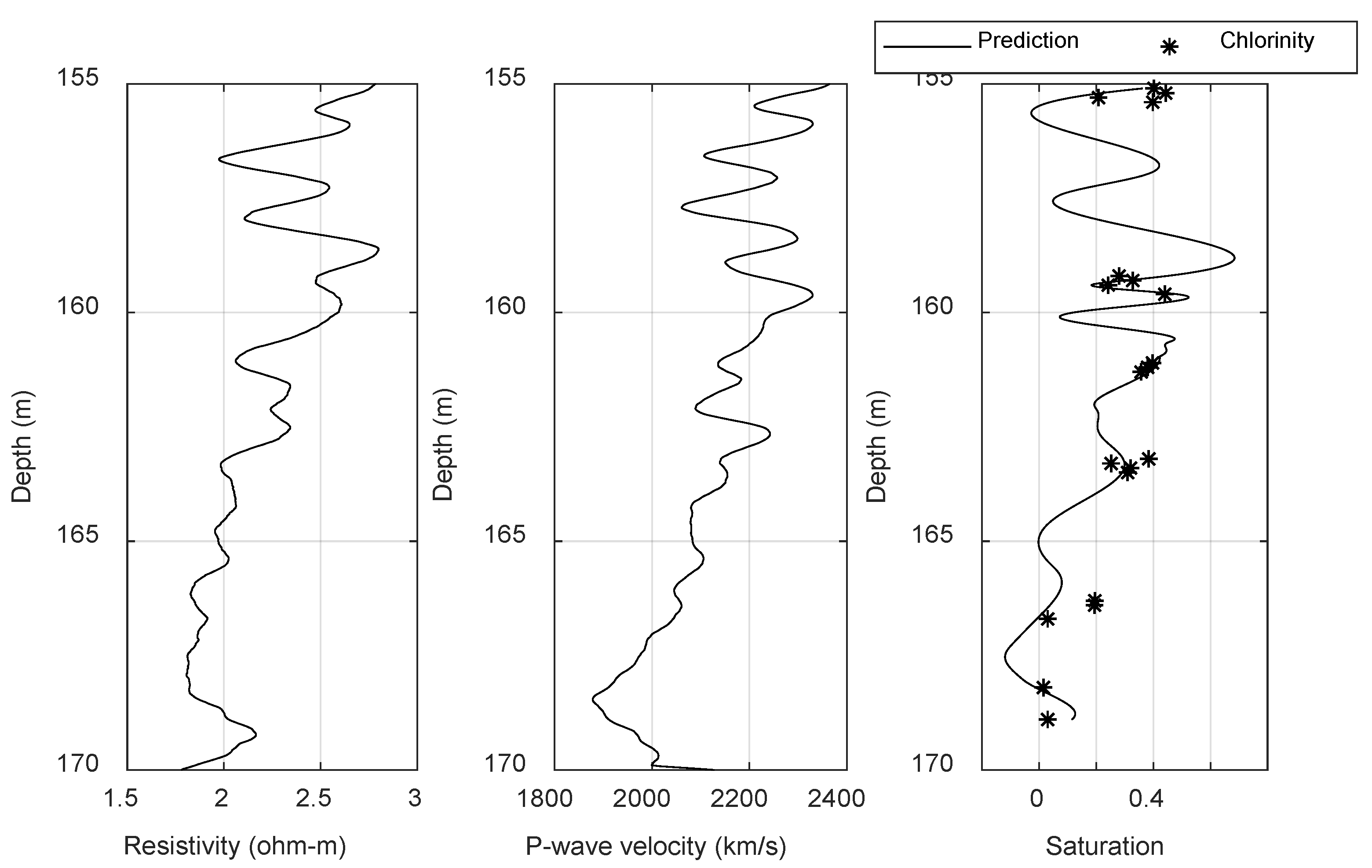

3.5. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest



© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Li, Chuanhui; Liu, Xuewei. Research on the Estimate of Gas Hydrate Saturation Based on LSTM Recurrent Neural Network. Energies 2020, 13(24), 6536. https://doi.org/10.3390/en13246536