Nature-Inspired Algorithm Implemented for Stable Radial Basis Function Neural Controller of Electric Drive with Induction Motor

Abstract

1. Introduction

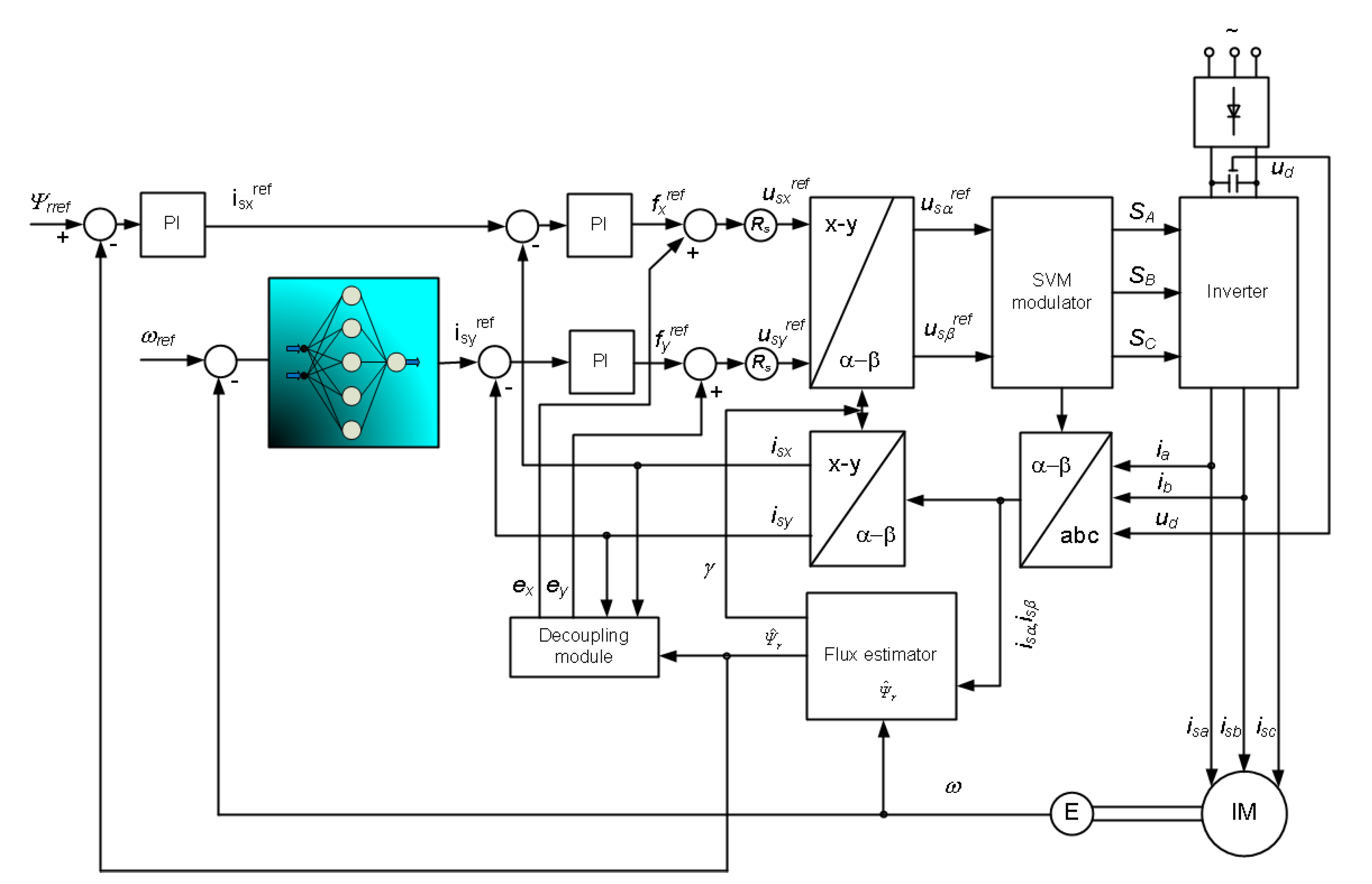

2. Field-Oriented Control of the Induction Motor (IM)—Short Description

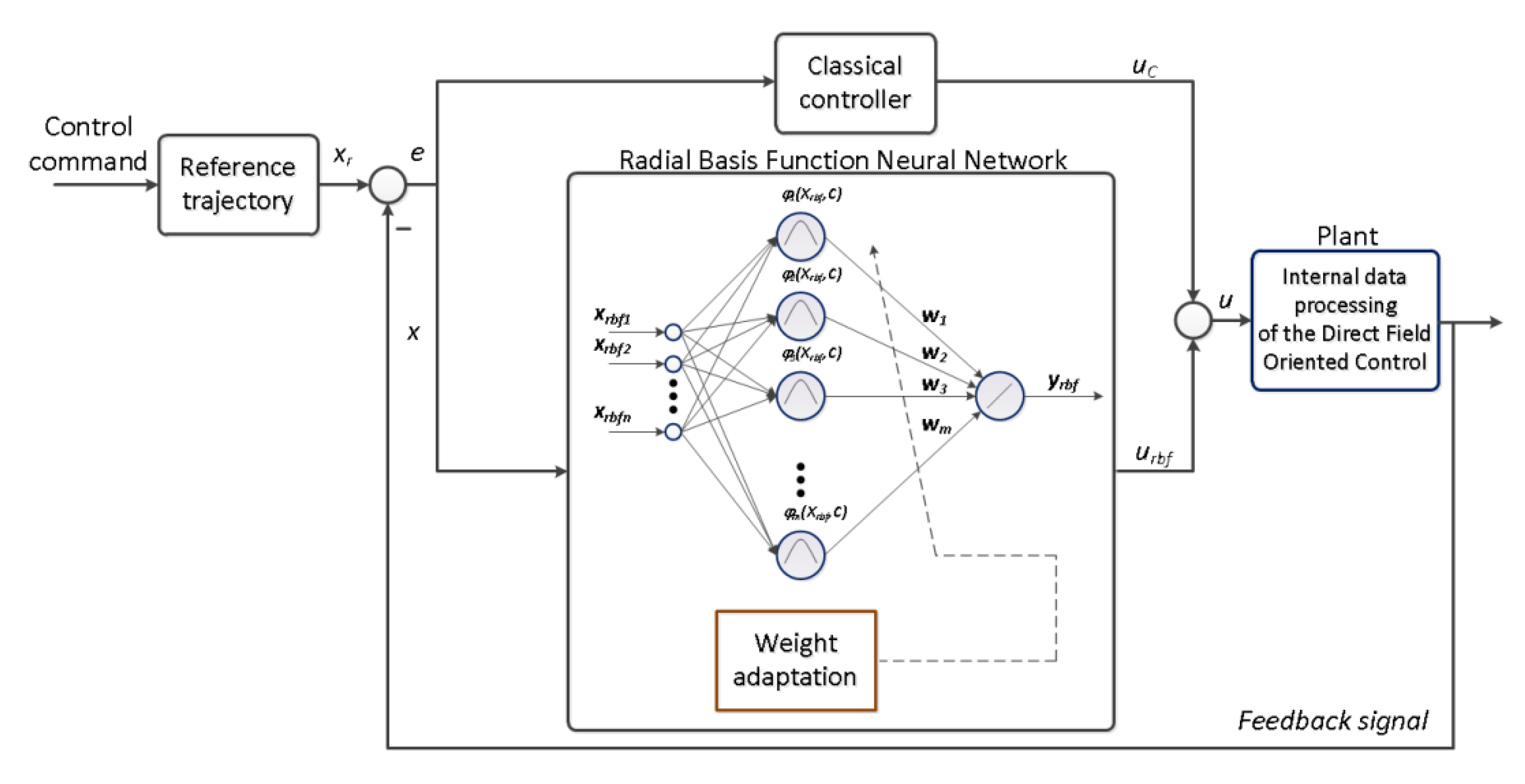

3. Design Process for the Parallel Neural Controller

4. The Grey Wolf Optimizer

4.1. Details of Data Processing in the GWO

| Algorithm 1. Grey Wolf Optimizer |
|---|
| INITIALIZATION STAGE: |
| environment preparation: |
| preparation of the workspace |
| simulation conditions for the model (frequency, solver, etc.) |
| definition of the reference signals |
| parameters of the plant |
| initial state of the GWO: |
| overall conditions of the calculation (number of iterations (imax), size of the population, number of optimized variables, bounds of the solutions) |
| random initialization of the controller gains |
| initial calculation of aGWO, AGWO, CGWO |
| GWO calculations to find the best solutions |
| MAIN CALCULATIONS of the GREY WOLF OPTIMIZER: |
| for i = 1 to imax do |
| update of the features (positions) of each element of the population |
| calculation of the cost function for each element of the population |
| finding the new best solutions |
| update of aGWO |
| end for |
| SAVING RESULTS: |
| presentation of the results |
| data for the summary file |
| report generation |
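The main loop of Algorithm 1 can be sketched in Python as follows. This is a minimal sketch of the standard GWO position update, assuming a generic cost function `cost(x)` and box bounds on the optimized variables; in the article the cost is computed from a simulation of the drive, which is not reproduced here. The function name `gwo` and its parameters are hypothetical; the defaults (20 wolves, 30 iterations) follow the GWO settings in the parameter table, and the toy cost at the end is only a stand-in.

```python
import numpy as np


def gwo(cost, lower, upper, n_wolves=20, n_iter=30, seed=None):
    """Minimize `cost` over the box [lower, upper] with a basic Grey Wolf Optimizer."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    dim = lower.size

    # Initialization: random population, no prior knowledge of the optimum
    wolves = rng.uniform(lower, upper, size=(n_wolves, dim))
    fitness = np.array([cost(w) for w in wolves])
    order = np.argsort(fitness)[:3]
    leaders, leader_fit = wolves[order], fitness[order]  # alpha, beta, delta

    for i in range(n_iter):
        a = 2.0 * (1.0 - i / n_iter)  # aGWO decreases linearly from 2 towards 0
        moves = np.zeros_like(wolves)
        for leader in leaders:
            A = 2.0 * a * rng.random((n_wolves, dim)) - a  # AGWO
            C = 2.0 * rng.random((n_wolves, dim))          # CGWO
            D = np.abs(C * leader - wolves)                # distance to this leader
            moves += leader - A * D
        # New positions: average of the three leader-guided moves, kept within bounds
        wolves = np.clip(moves / 3.0, lower, upper)
        fitness = np.array([cost(w) for w in wolves])

        # Keep the three best solutions found so far as the leaders
        pool = np.vstack([leaders, wolves])
        pool_fit = np.concatenate([leader_fit, fitness])
        order = np.argsort(pool_fit)[:3]
        leaders, leader_fit = pool[order], pool_fit[order]

    return leaders[0], leader_fit[0]


# Toy check: shifted sphere function standing in for the drive simulation
target = np.array([3.0, 1.5])
best, best_cost = gwo(lambda x: float(np.sum((x - target) ** 2)), [0.0, 0.0], [10.0, 10.0], seed=1)
```

Keeping the best three solutions across iterations (rather than only within the current population) makes the returned cost non-increasing over the run.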

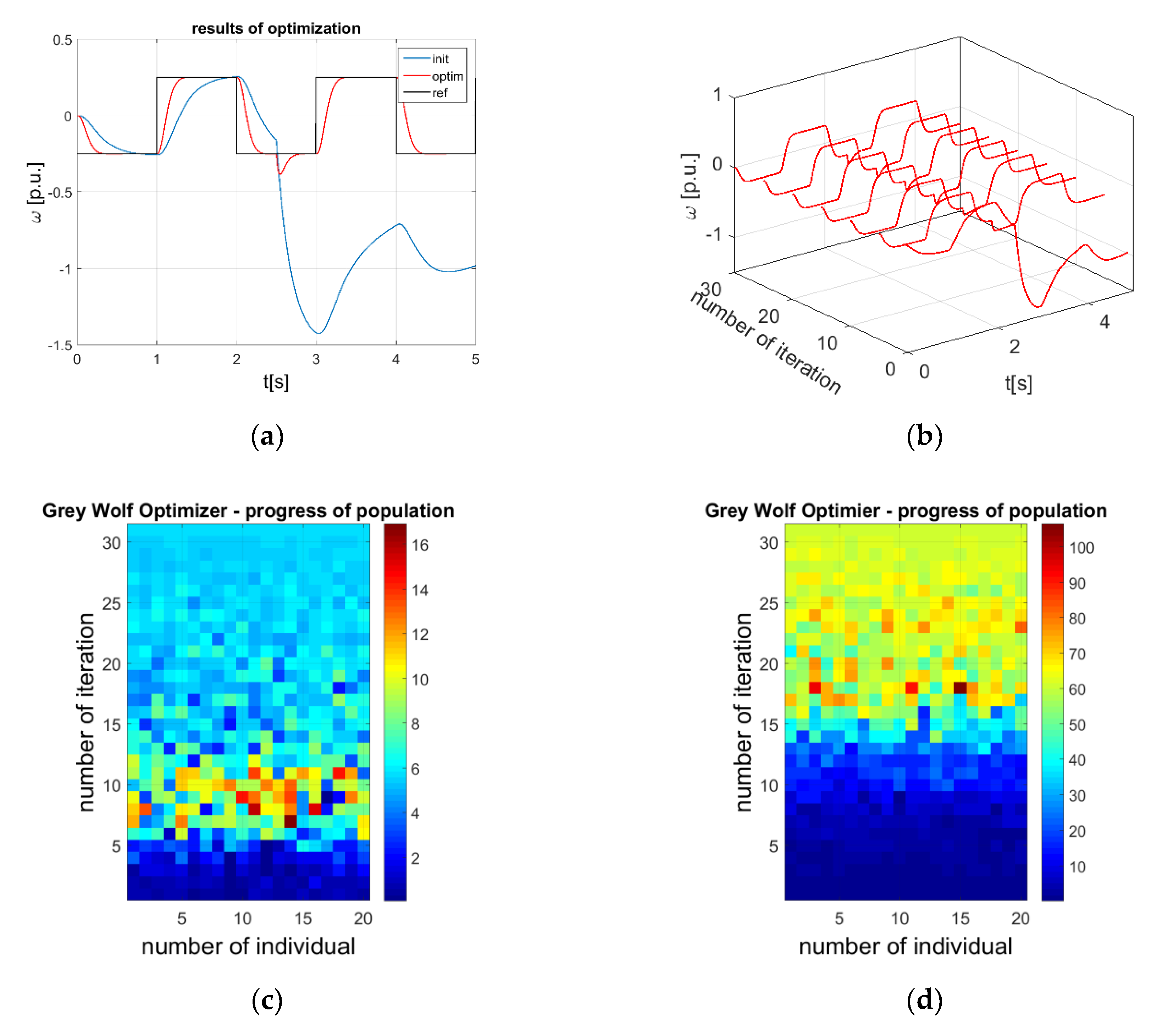

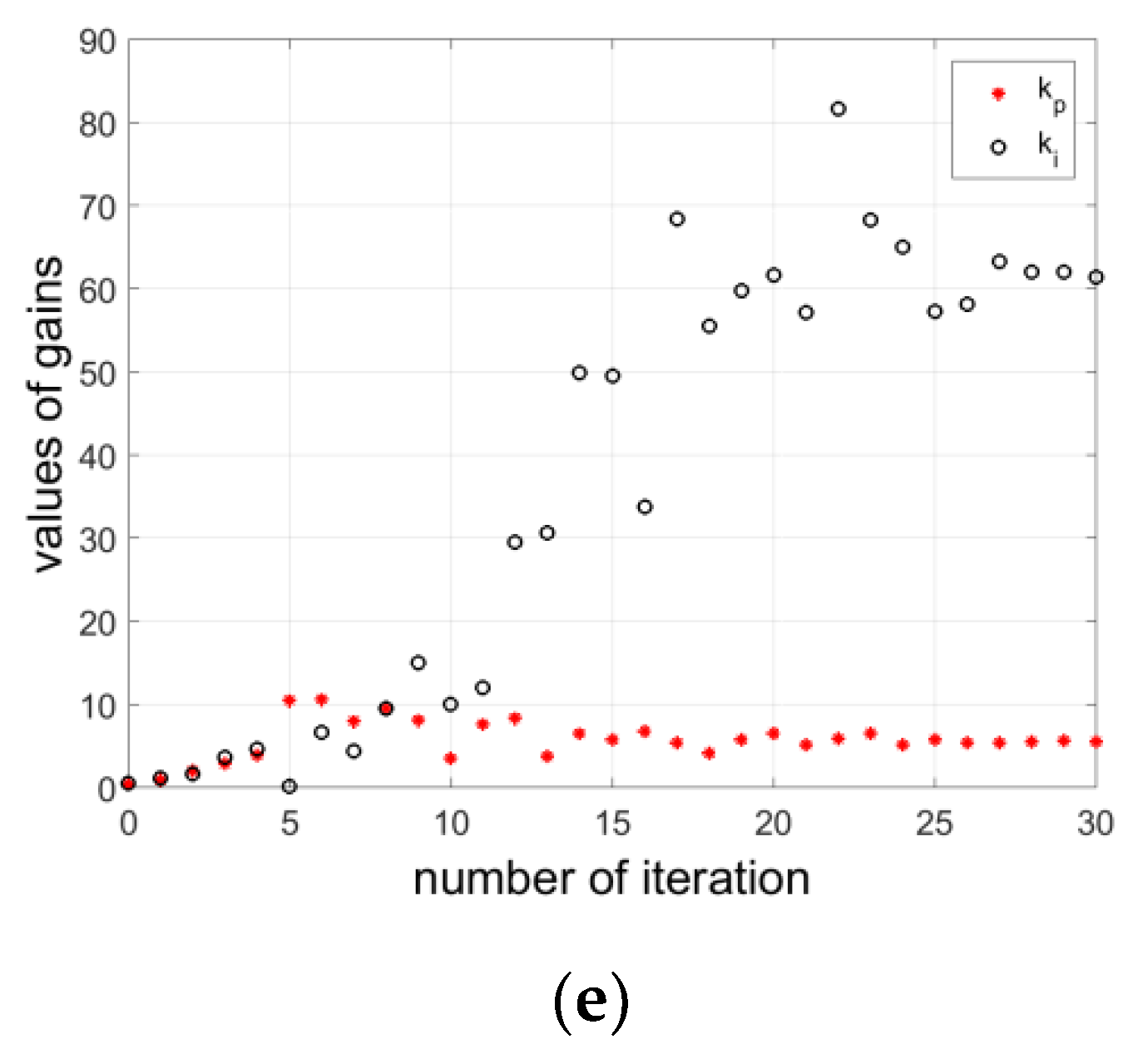

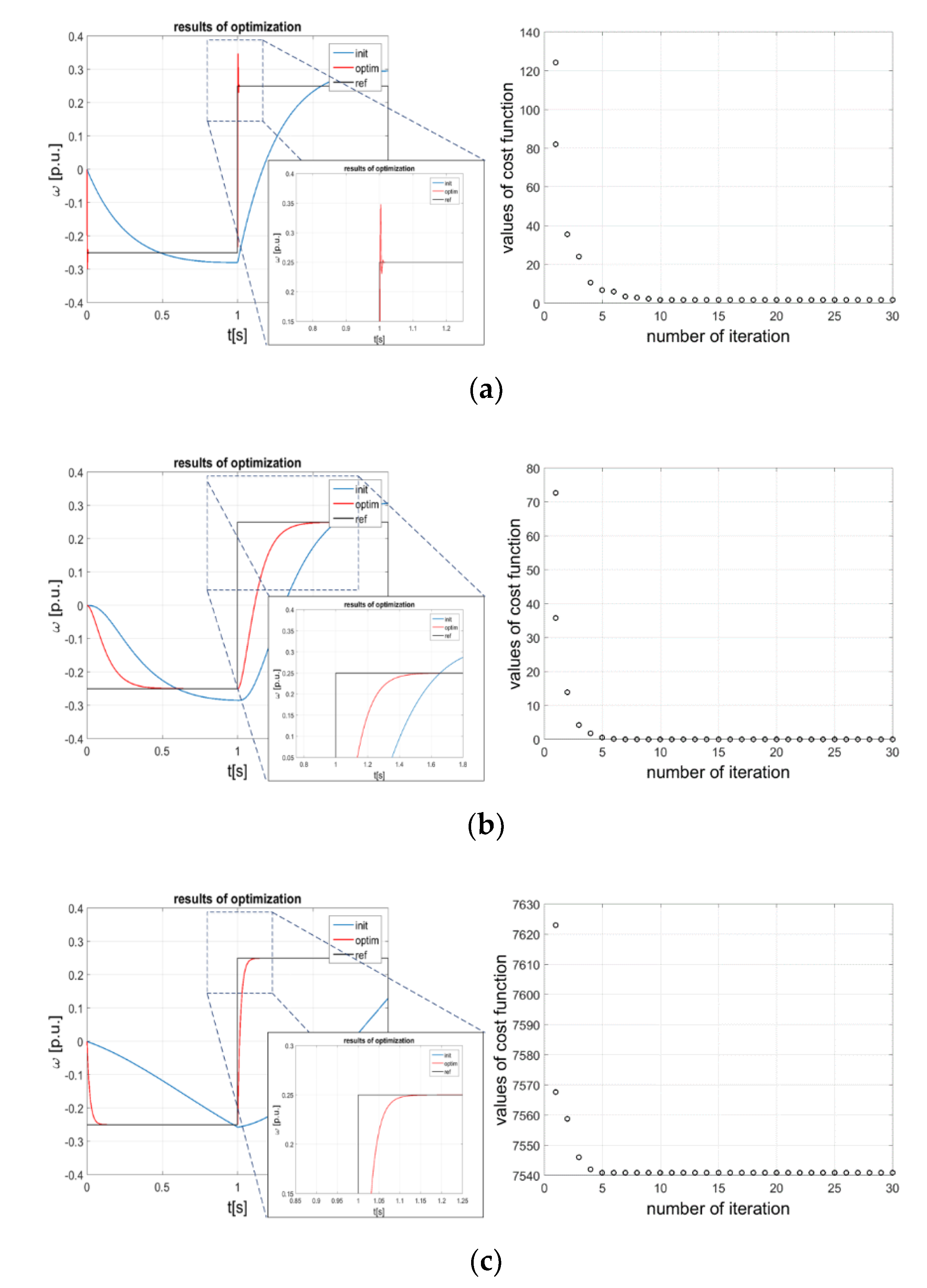

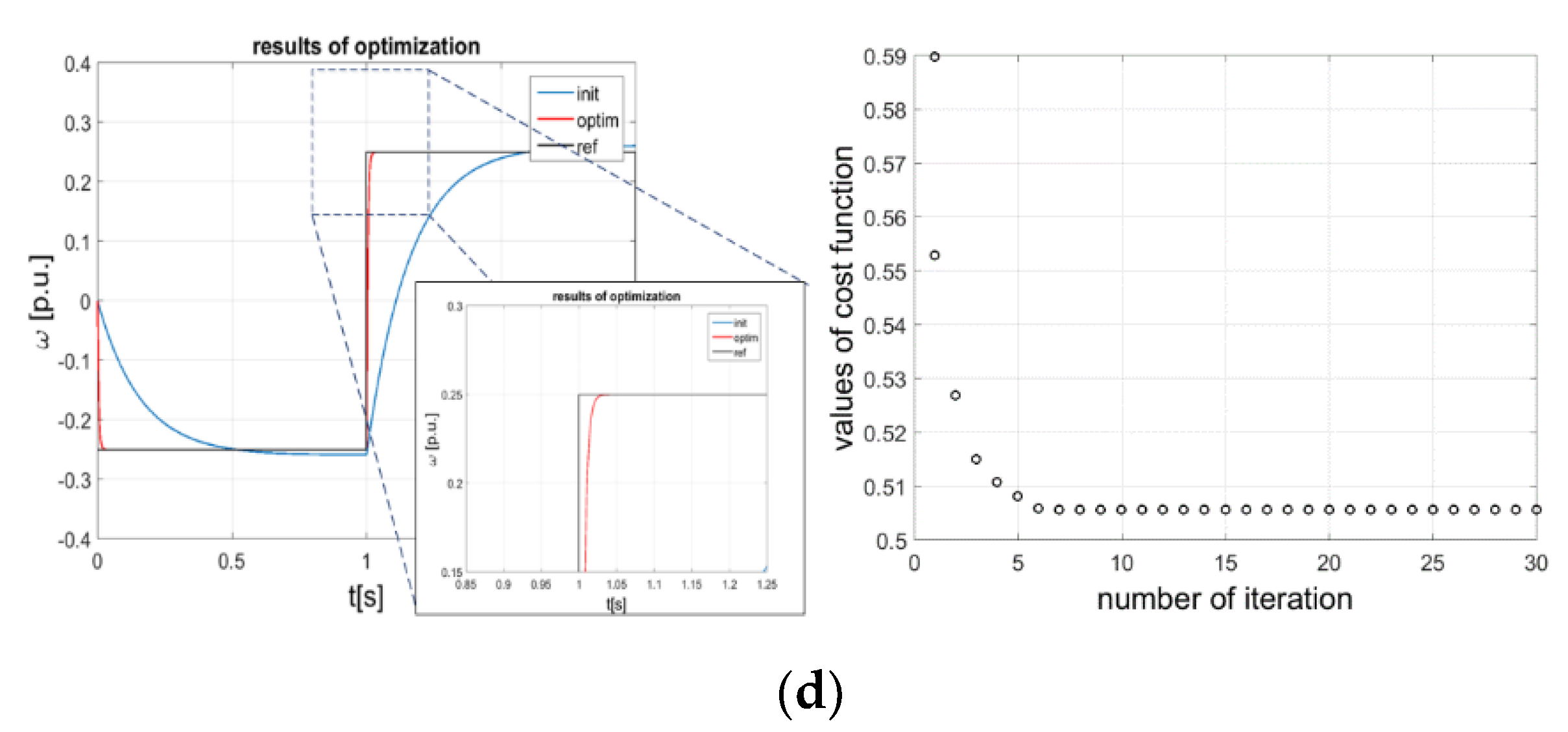

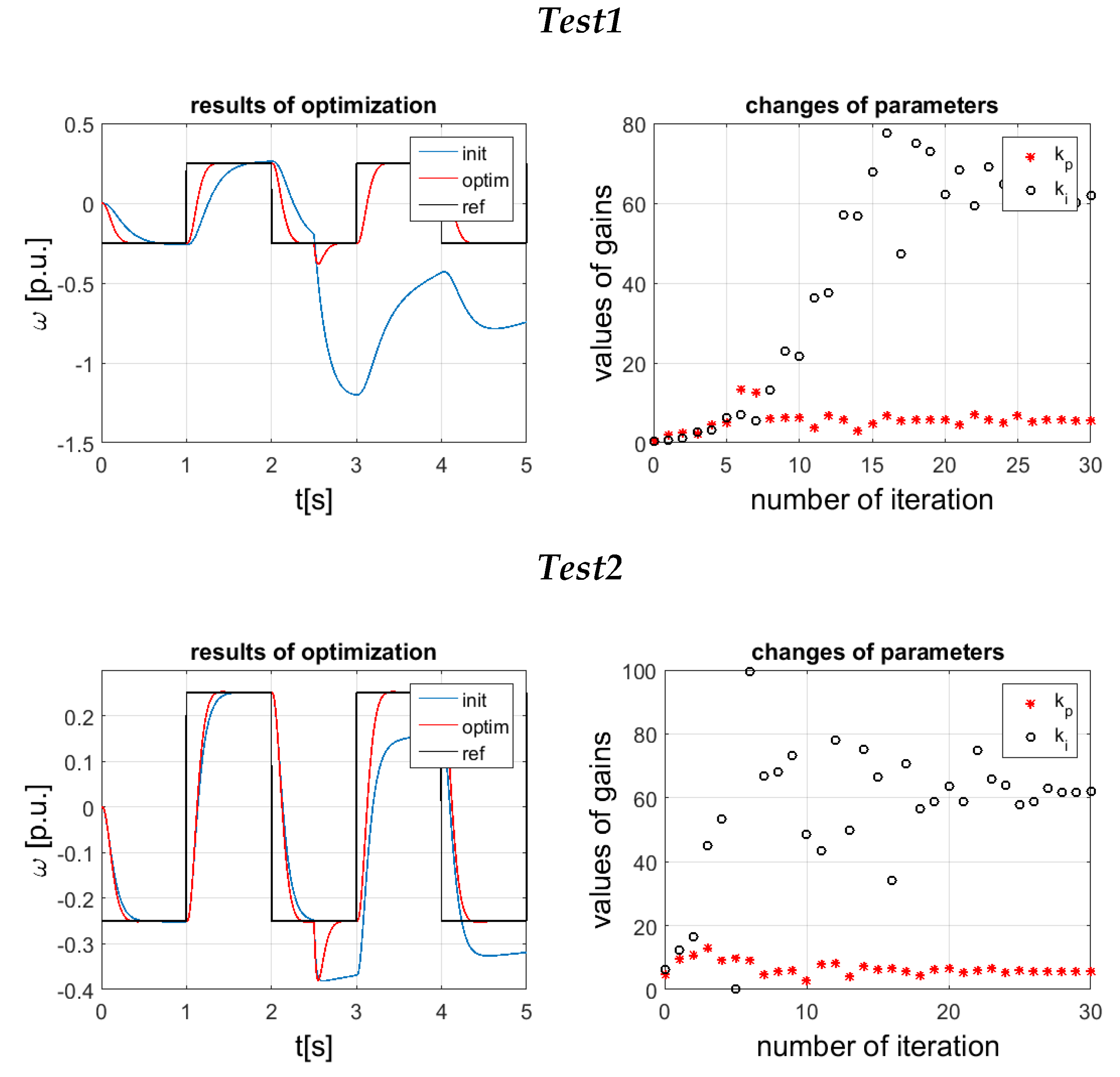

4.2. Analysis of Optimization Process

4.3. Common Problems of Metaheuristic Methods Applied to Classical Controller Tuning

- Modification of the command signals (application of input filters or slope-limited trajectories);

- Insertion of additional noise into the feedback paths;

- An alternative definition of the cost function.
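The first remedy, modifying the command signal, can be illustrated with two common ways of shaping a step speed reference: a slope-limited ramp and a first-order input filter. This is a minimal sketch; the function names, the slope, the time constant and the sampling time are hypothetical and not taken from the article.

```python
import numpy as np


def ramp_reference(target, max_slope, dt, n_steps):
    """Slope-limited (ramp) version of a step command from 0 to `target`."""
    ref = np.zeros(n_steps)
    max_delta = max_slope * dt  # largest allowed change per sample
    for k in range(1, n_steps):
        ref[k] = ref[k - 1] + np.clip(target - ref[k - 1], -max_delta, max_delta)
    return ref


def first_order_filter(u, time_constant, dt):
    """First-order low-pass input filter, discretized with backward Euler."""
    u = np.asarray(u, dtype=float)
    y = np.zeros_like(u)
    gain = dt / (time_constant + dt)
    prev = 0.0  # the filter starts from rest
    for k, u_k in enumerate(u):
        prev = prev + gain * (u_k - prev)
        y[k] = prev
    return y


# Example: 100 rad/s step command sampled at 1 kHz (hypothetical values)
dt = 1e-3
ramp = ramp_reference(100.0, max_slope=500.0, dt=dt, n_steps=1000)
filtered = first_order_filter(np.full(1000, 100.0), time_constant=0.02, dt=dt)
```

Both shapings limit how fast the reference changes, so the tuned gains are not driven by the response to an ideal step alone.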

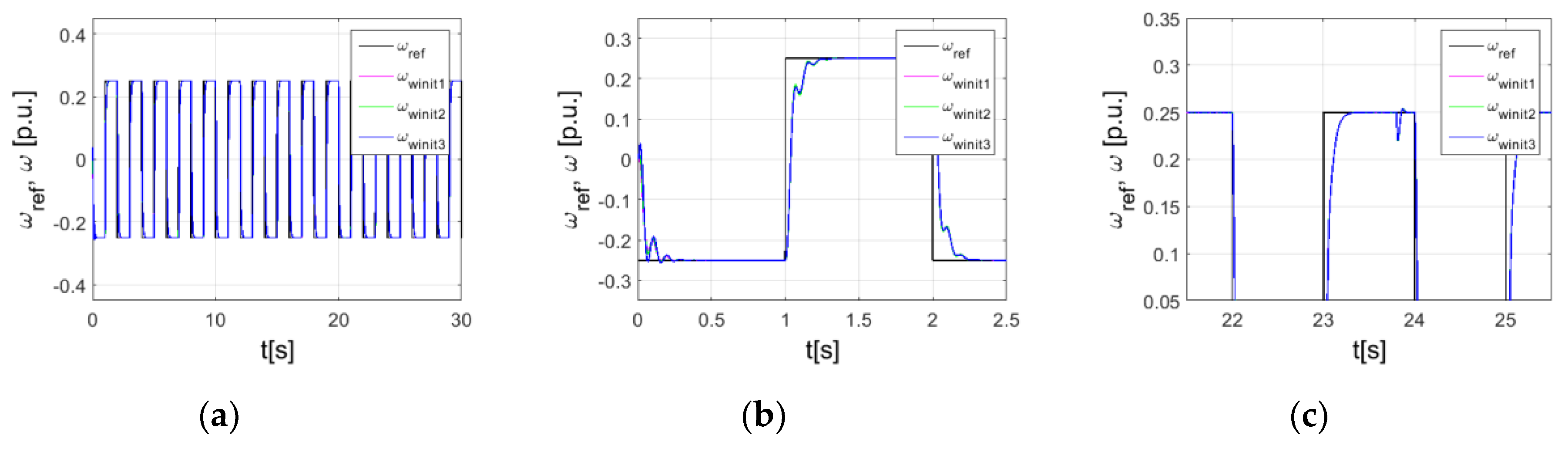

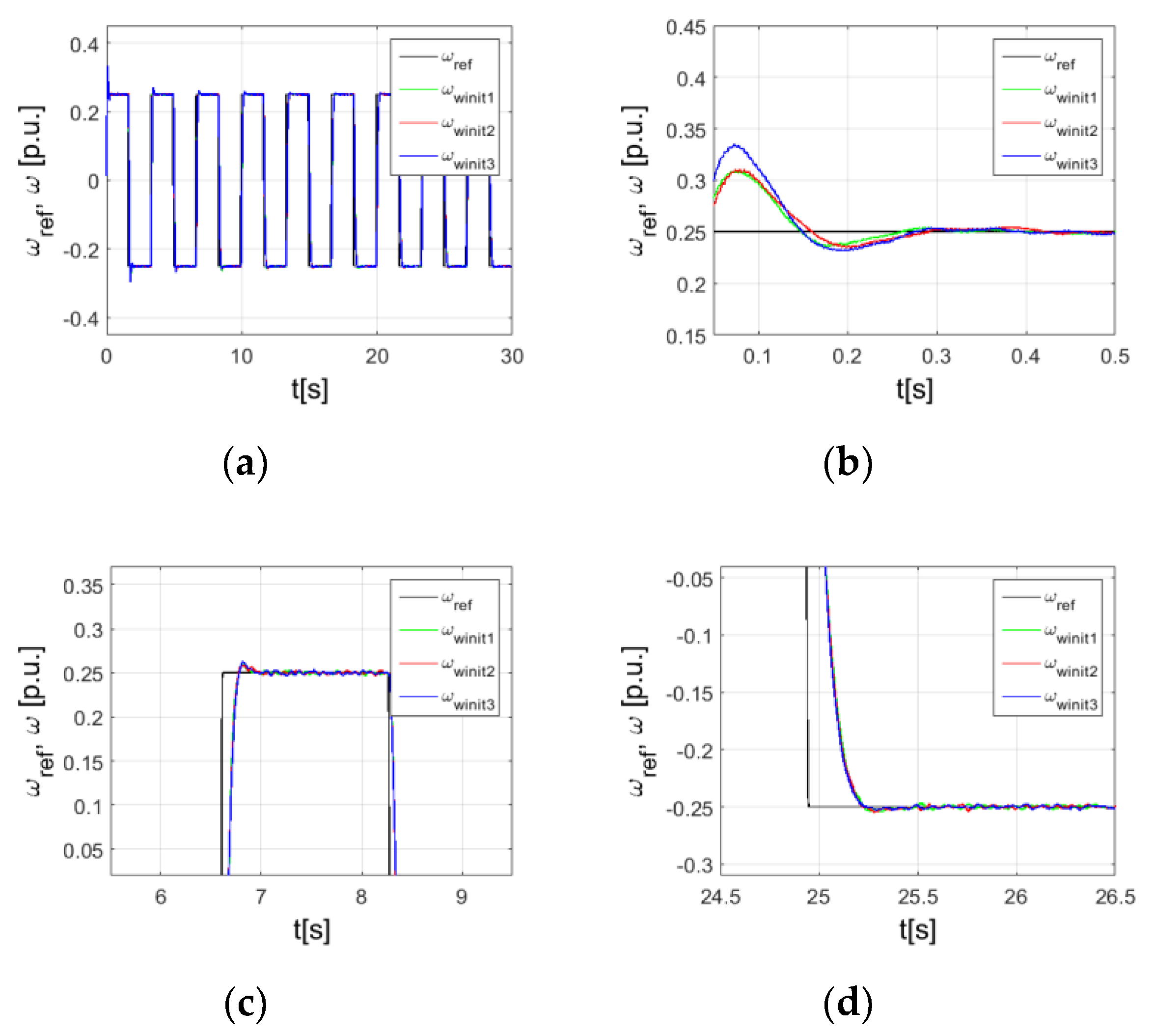

4.4. Starting Point of the Grey Wolf Optimizer

- The initial values of the speed controller gains were drawn from uniformly distributed pseudorandom numbers, so no initial information about the problem was used.

- The parameters have to be positive, as required by the structure of the (PI) controller.

- The random numbers should be fractional values, so that the starting point of the calculations is distant from the optimal solution. This appears to be the most difficult case to optimize.
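Under these three conditions, the starting population could be generated as sketched below: each wolf is a (kp, ki) pair drawn from a uniform distribution, giving positive, fractional values. The interval [0, 1) and the function name `initial_pi_gains` are assumptions for illustration; the first test in the table of optimization results starts from fractional gains of this kind.

```python
import numpy as np


def initial_pi_gains(n_wolves, seed=None):
    """Random starting population of PI gains: one row (kp, ki) per wolf."""
    rng = np.random.default_rng(seed)
    # Uniform pseudorandom values in [0, 1): no prior knowledge, fractional values
    gains = rng.uniform(0.0, 1.0, size=(n_wolves, 2))
    # Both PI gains must be strictly positive, so replace an (unlikely) exact zero
    return np.maximum(gains, np.finfo(float).tiny)


population = initial_pi_gains(20, seed=0)  # 20 wolves, as in the GWO settings table
```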

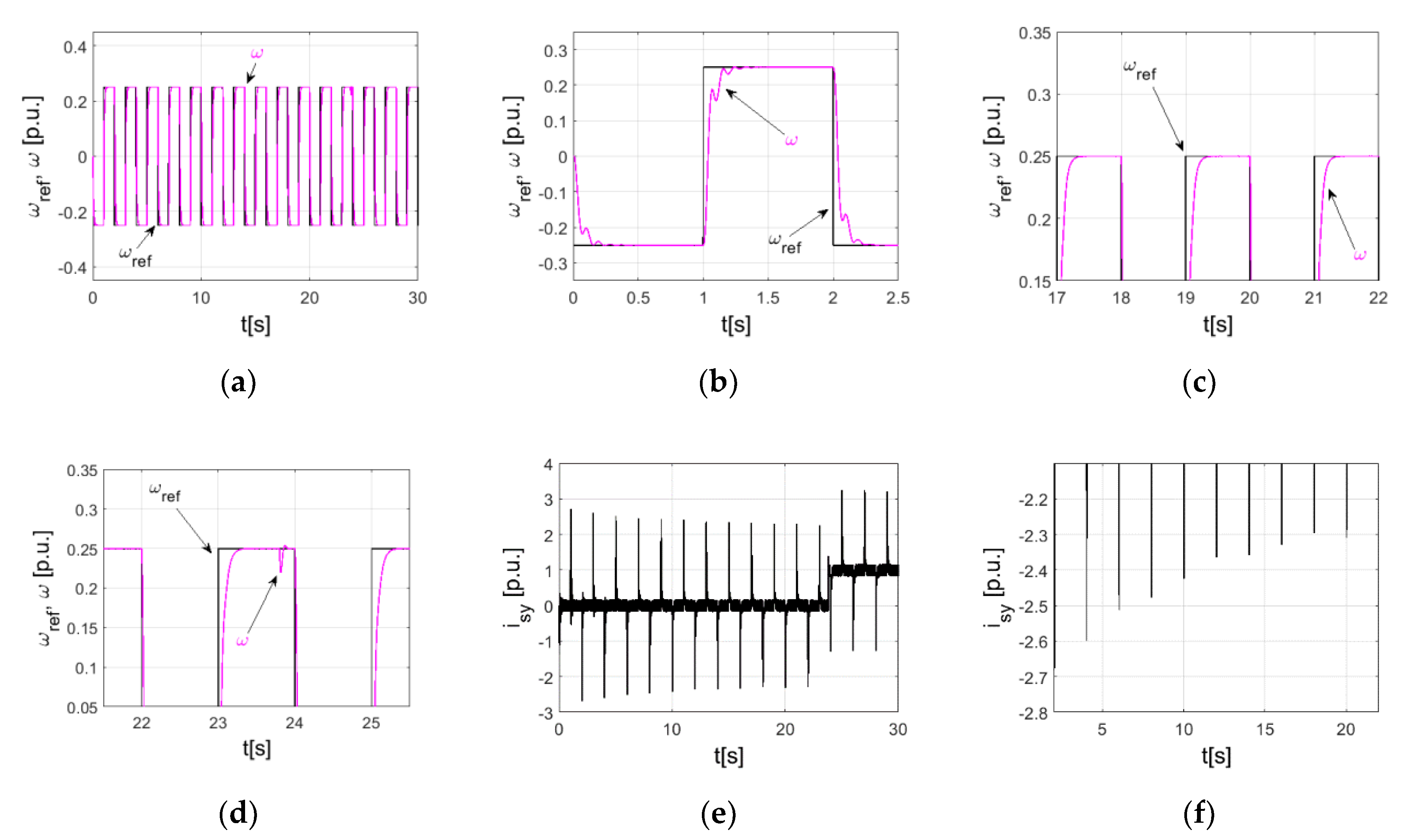

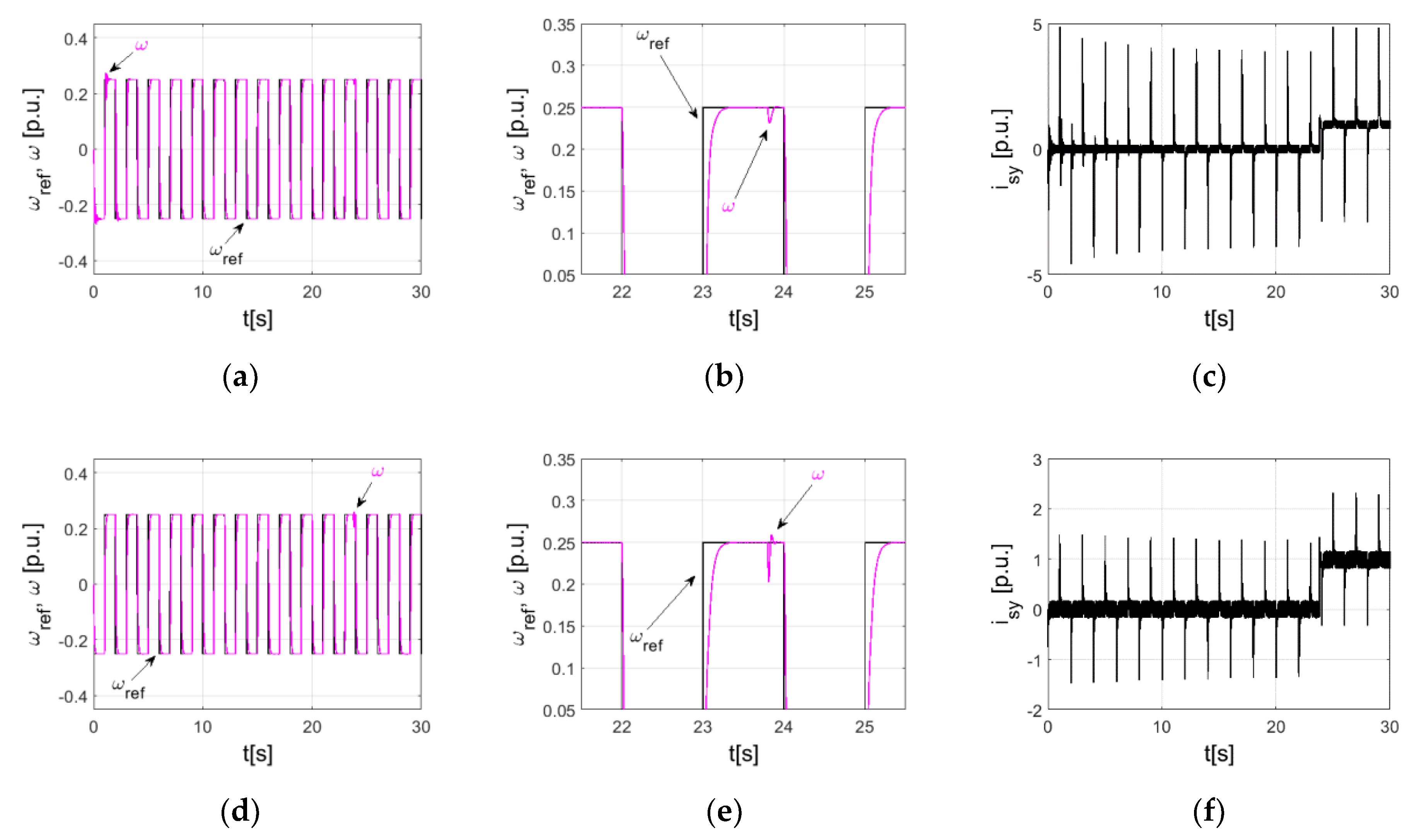

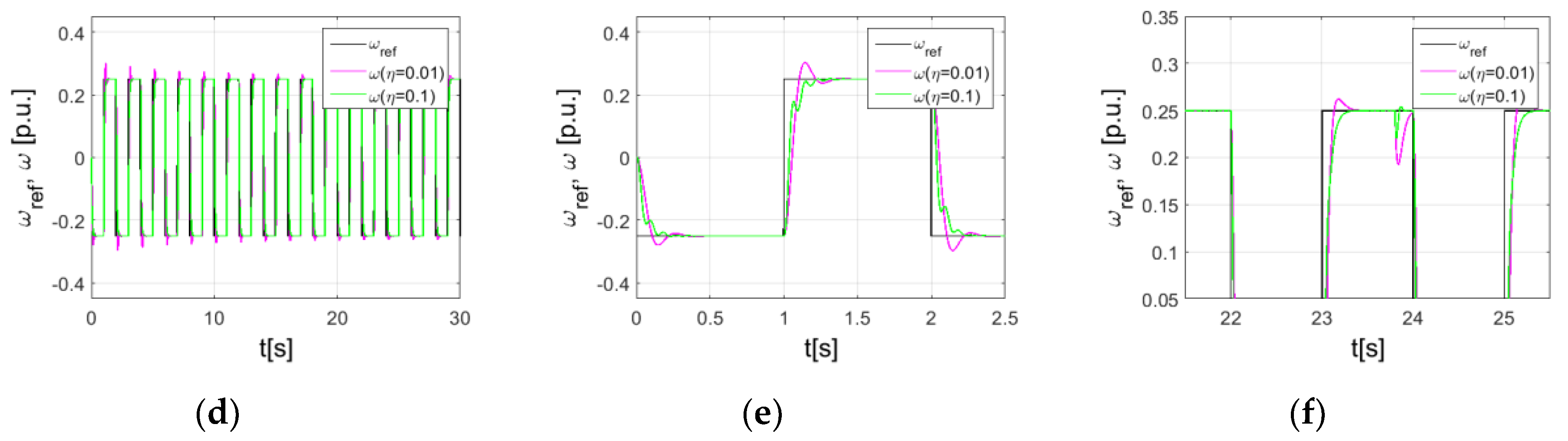

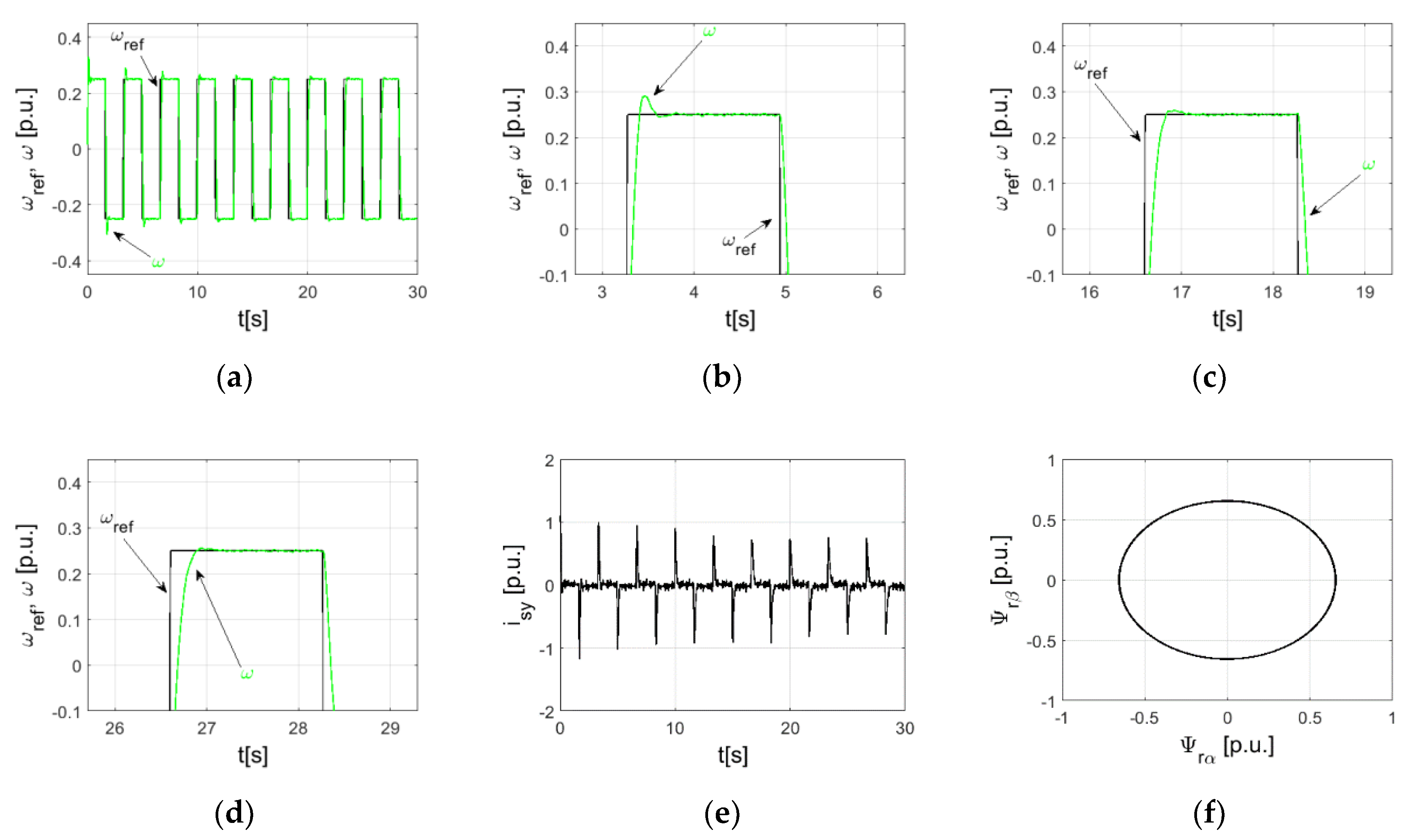

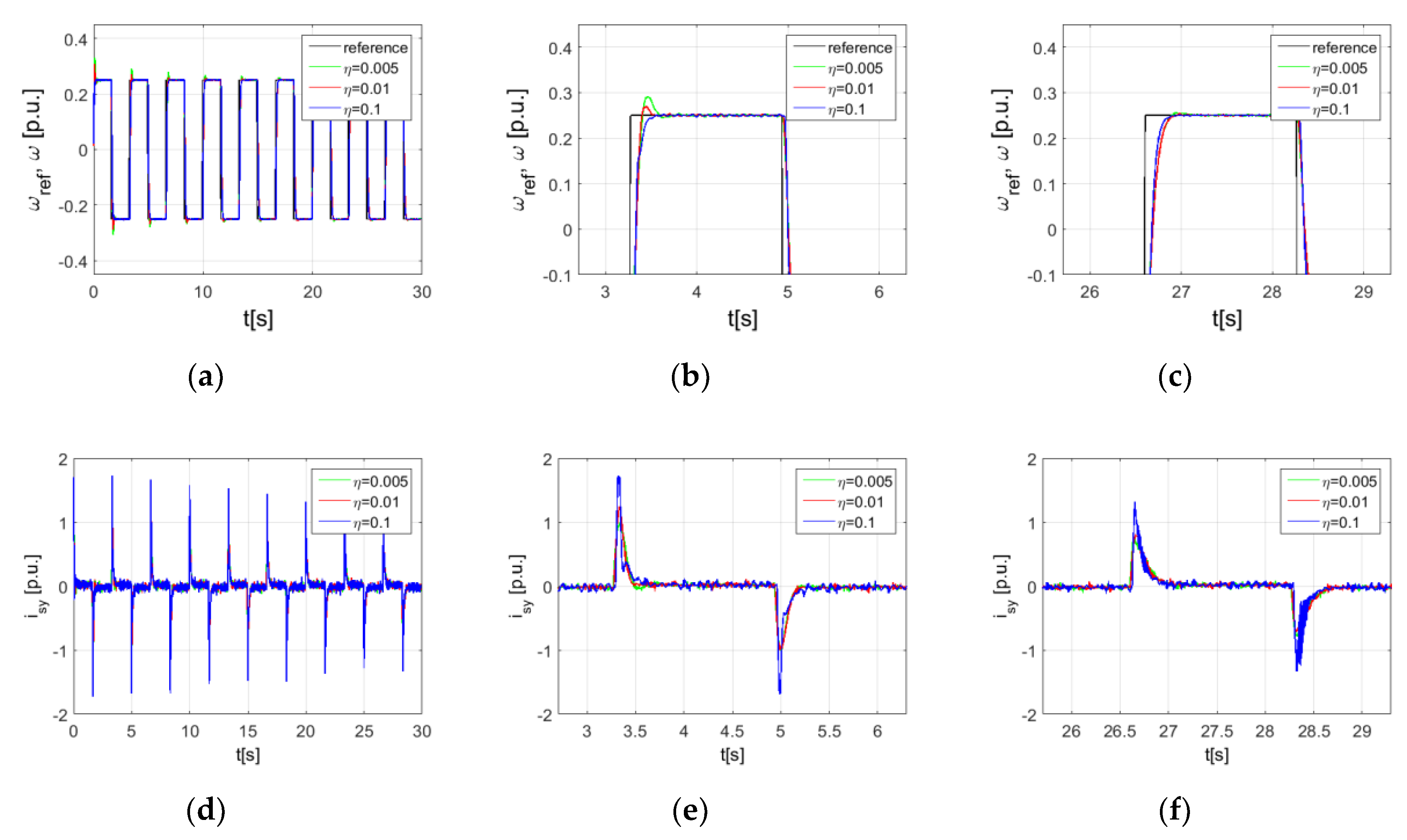

5. Simulations

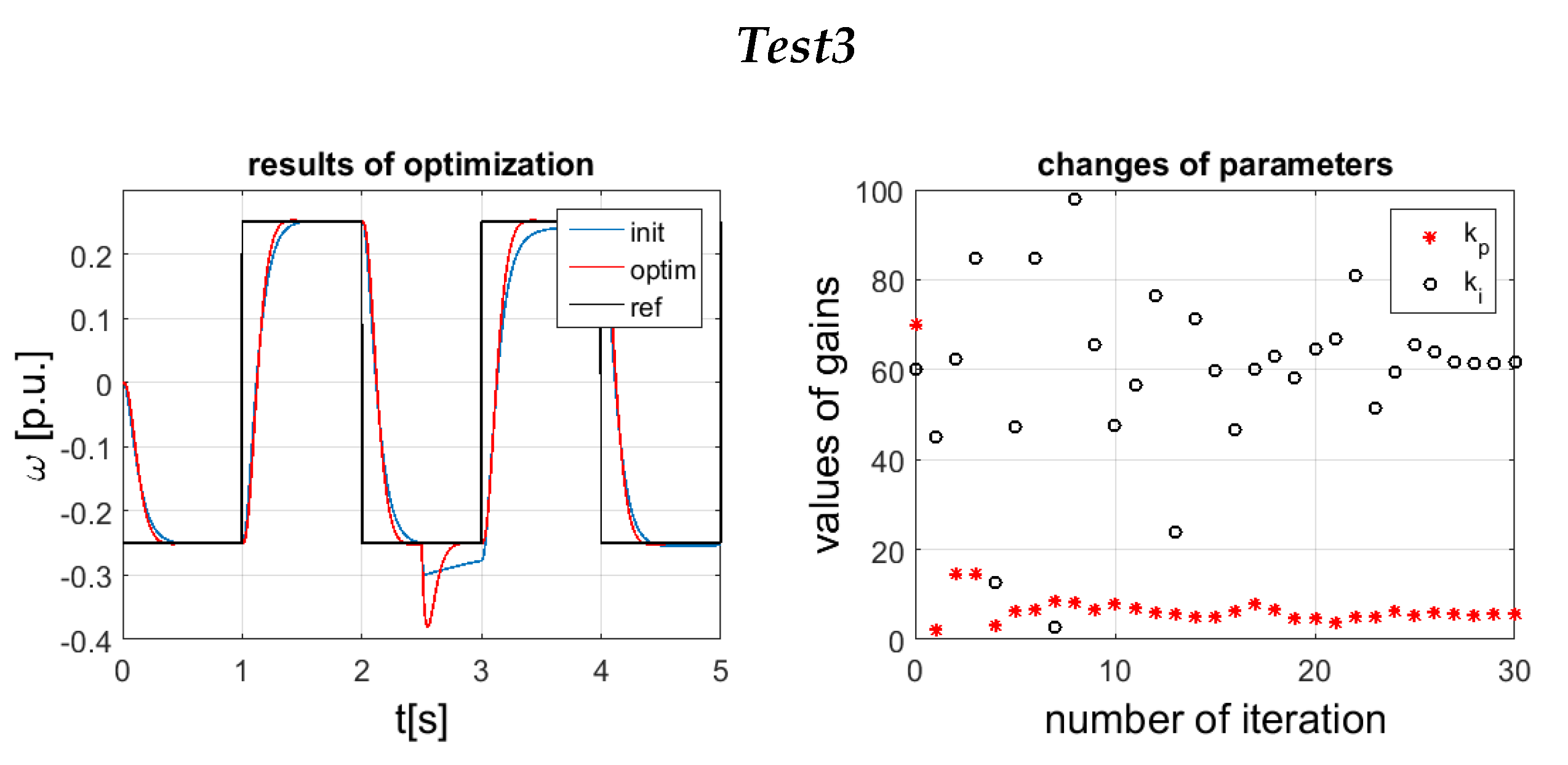

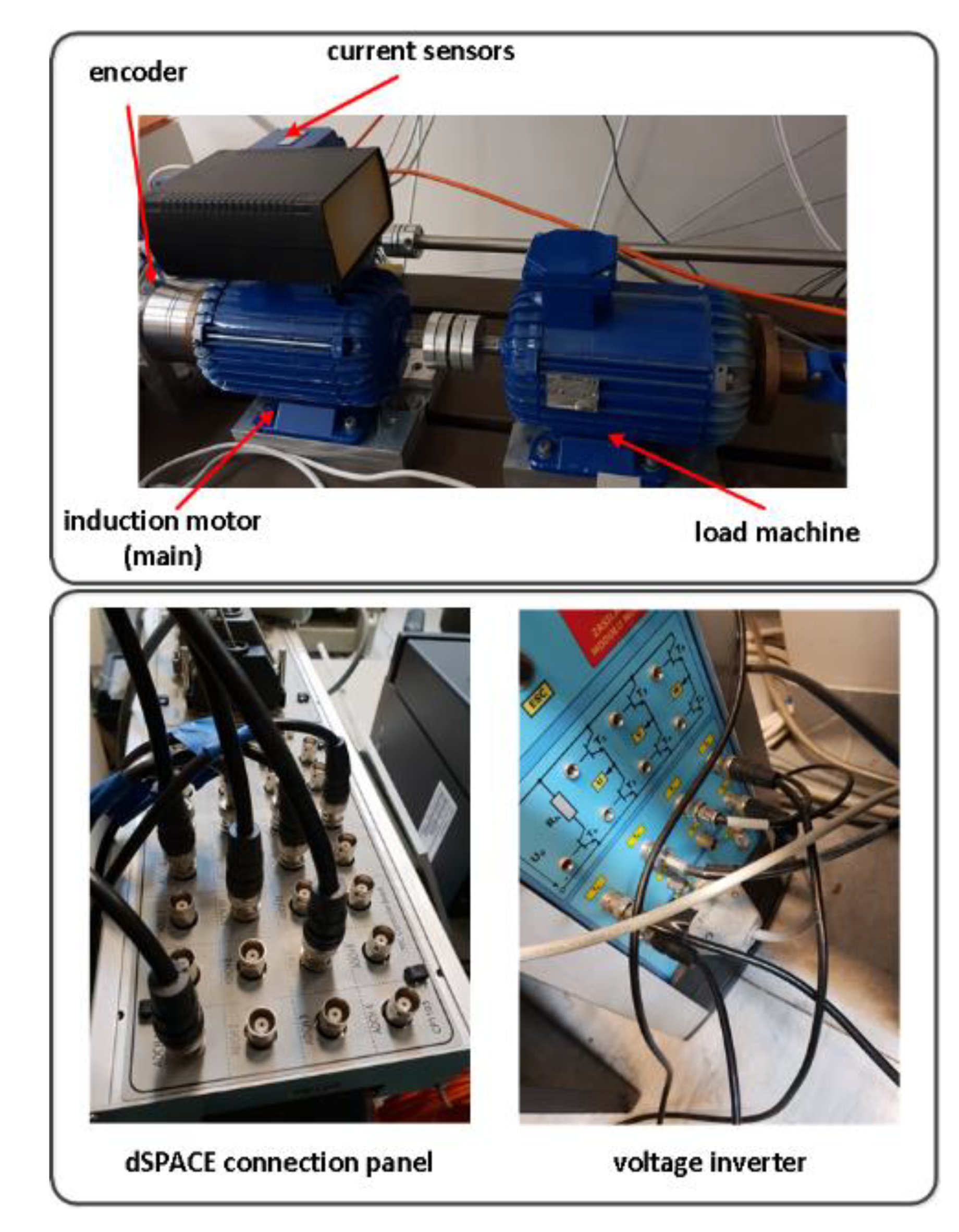

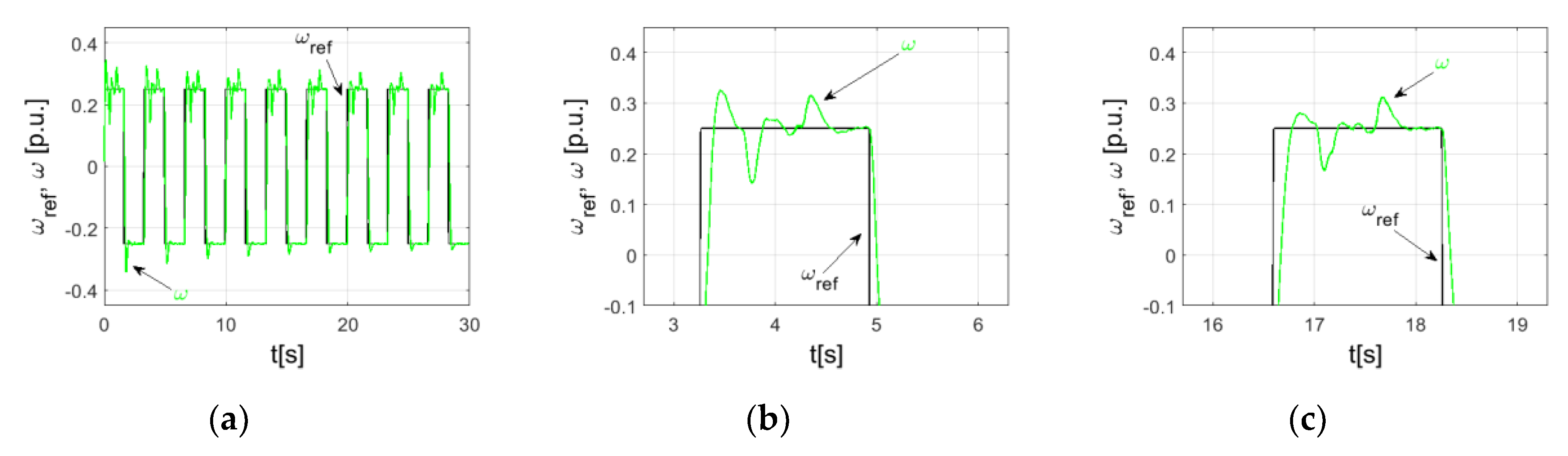

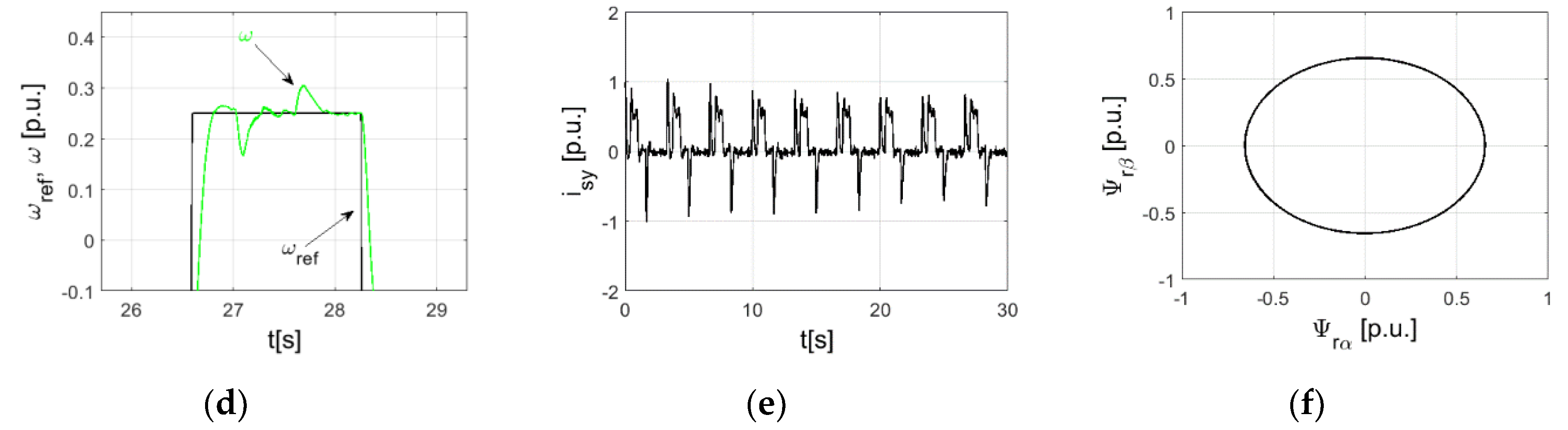

6. Experiment

- The simulations were performed for a drive model based on imprecise identification.

- Nonlinear elements were not taken into account in the simulations.

- Current limits were not considered in the calculations.

- The initial weights were randomized.
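The current limit neglected in the simulations is typically applied to the output of the speed controller, which in a field-oriented structure provides the torque-producing current reference. A minimal discrete PI sketch with such a limit and simple clamping anti-windup is shown below; the class name, the sampling time, the limit `i_max` and the anti-windup scheme are assumptions, not details from the article, and the gains are reused from the parameter table only for illustration.

```python
class PISpeedController:
    """Discrete PI speed controller with a limited current (torque) reference."""

    def __init__(self, kp, ki, dt, i_max):
        self.kp, self.ki, self.dt, self.i_max = kp, ki, dt, i_max
        self.integral = 0.0  # integral term of the controller

    def step(self, speed_ref, speed_meas):
        error = speed_ref - speed_meas
        candidate = self.integral + self.ki * error * self.dt
        output = self.kp * error + candidate
        if abs(output) <= self.i_max:
            self.integral = candidate  # linear region: accept the new integral
            return output
        # Saturated: keep the old integral (clamping anti-windup) and limit the output
        limited = self.kp * error + self.integral
        return max(-self.i_max, min(self.i_max, limited))


# Gains from the parameter table; dt and i_max are hypothetical
controller = PISpeedController(kp=5.5892, ki=61.4973, dt=1e-4, i_max=5.0)
```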

7. Conclusions

- The performance of the classical speed controller applied in the Direct Field-Oriented Control structure can be improved by using a neural compensator.

- Nature-inspired algorithms can be used for the auto-tuning of controllers implemented for complex plants, including nonlinear ones.

- Starting from a random state of the population, optimal solutions were found after successive modifications, without complicated mathematical calculations.

- The stable adaptation law, based on Lyapunov theory, was successfully tested in a real application.

- The constant parameters in the equation that defines the modification of the RBFNN weights are important for the operation of the speed controller (they affect the dynamics of the control structure).

- The cooperation of the classical controller with the neural network allows the drive to operate correctly under changes in the mechanical time constant of the electric motor.

- The simulations and the experiment (after implementing the algorithm in a digital signal processor) showed high-quality control: the measured speed stays very close to the reference, without overshoot or oscillations.

Author Contributions

Funding

Conflicts of Interest


| Test | kp_init (before optimization) | ki_init (before optimization) | kp (after optimization) | ki (after optimization) |
|---|---|---|---|---|
| Test 1 | 0.4726 | 0.4328 | 5.5872 | 61.9276 |
| Test 2 | 4.6631 | 6.1357 | 5.5943 | 61.9402 |
| Test 3 | 69.9780 | 60.0084 | 5.5715 | 61.7623 |
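How close the final gains of the three tests are can be quantified with a short calculation on the values in the table above. The measure used here, the relative spread (max - min)/mean, is an illustrative choice: although the initial gains differ by more than two orders of magnitude, the final gains differ by less than 0.5%.

```python
# Final PI gains (kp, ki) after optimization, copied from the table above
final_gains = {
    "Test 1": (5.5872, 61.9276),
    "Test 2": (5.5943, 61.9402),
    "Test 3": (5.5715, 61.7623),
}


def relative_spread(values):
    """(max - min) / mean of a sequence of numbers."""
    values = list(values)
    return (max(values) - min(values)) / (sum(values) / len(values))


kp_spread = relative_spread(kp for kp, _ in final_gains.values())
ki_spread = relative_spread(ki for _, ki in final_gains.values())
print(f"kp spread: {kp_spread:.2%}, ki spread: {ki_spread:.2%}")
# prints: kp spread: 0.41%, ki spread: 0.29%
```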

| Element | Parameter | Value |
|---|---|---|
| Induction motor | Nominal power | 1.1 kW |
| | Voltage | 230 V |
| | Current | 2.9 A |
| | cos ϕ | 0.76 |
| | Torque | 7.6 Nm |
| | Stator flux | 0.9809 Wb |
| | Efficiency | 76% |
| | Rotor speed | 1380 rpm |
| | Frequency | 50 Hz |
| | Moment of inertia | 0.002655 kg·m² |
| | Stator resistance | 5.9 Ω |
| | Rotor resistance | 4.559 Ω |
| | Magnetizing inductance | 392.5 mH |
| | Stator leakage inductance | 24.8 mH |
| | Rotor leakage inductance | 24.8 mH |
| GWO algorithm | Size of population | 20 |
| | Number of iterations | 30 |
| | Total time of calculations | 159.5262 s |
| PI controller | kp | 5.5892 |
| | ki | 61.4973 |
| RBFNN compensator | Number of hidden nodes | 5 |
| | Range of the training coefficient | η ∈ [0.005, 0.1] |
| | Initial weights | Random numbers |
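The RBFNN compensator settings in the table (five hidden nodes, training coefficient η between 0.005 and 0.1, random initial weights) can be put into context with a minimal sketch of a Gaussian RBF network with online weight adaptation. The Gaussian basis functions, the scalar input (speed error), the centers, the width, the initial-weight range and the update rule Δw = η·e·φ(x) are all assumptions for illustration; the Lyapunov-based adaptation law used in the article is not reproduced here.

```python
import numpy as np


class RBFCompensator:
    """Gaussian RBF network with an online weight update (illustrative only)."""

    def __init__(self, centers, width, eta, seed=None):
        if not 0.005 <= eta <= 0.1:
            raise ValueError("eta outside the range used in the parameter table")
        self.centers = np.asarray(centers, dtype=float)  # one center per hidden node
        self.width = float(width)
        self.eta = float(eta)
        rng = np.random.default_rng(seed)
        # Random initial weights (small values; the range is an assumption)
        self.weights = rng.uniform(-0.1, 0.1, size=self.centers.size)

    def hidden(self, x):
        """Gaussian activations of the hidden layer for a scalar input x."""
        return np.exp(-((x - self.centers) ** 2) / (2.0 * self.width ** 2))

    def step(self, x, error):
        """Return the compensating signal, then adapt the weights."""
        phi = self.hidden(x)
        output = float(self.weights @ phi)
        self.weights += self.eta * error * phi  # assumed update: dw = eta * e * phi
        return output


# Five hidden nodes spread over a normalized speed-error range (hypothetical)
net = RBFCompensator(centers=np.linspace(-1.0, 1.0, 5), width=0.5, eta=0.05, seed=0)
```

In a parallel structure, the output of `step` would be added to the PI controller output; the value of η then sets how quickly the compensator reacts, which is consistent with the conclusion that the constants of the weight-update equation affect the dynamics of the drive.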

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Kaminski, M. Nature-Inspired Algorithm Implemented for Stable Radial Basis Function Neural Controller of Electric Drive with Induction Motor. Energies 2020, 13, 6541. https://doi.org/10.3390/en13246541
