1. Introduction

In the United States (U.S.), building energy use was responsible for 40% of total energy consumption and 75% of total electricity consumption in 2016 [1]. As a cost-effective means of reducing energy use, building energy codes, which govern building construction to meet minimum energy requirements, have been implemented and regularly strengthened for new and existing buildings in many countries [2].

Building energy codes have many advantages, such as lower utility bills for consumers, improved energy resilience, health and comfort, environmental sustainability, and a lower need for energy subsidies [2,3]. Studies have shown that building energy codes have led to a 6%–22% reduction in average annual energy consumption per dwelling in the residential building sector of the European Union [2,4], have the potential to curtail energy usage and CO₂ emissions by 13%–22% by 2100 in China [5], and could reduce building electricity use in Gujarat, India by 20% in 2050 [6]. In the U.S., building energy codes helped save approximately 4.2 quads of energy and more than $44 billion for customers between 1992 and 2012 [7]. Athalye et al. [8] evaluated the national impact of energy codes from 2010 to 2040 by accounting for the varying rates at which states adopt the model building codes and a modest pace at which energy codes are updated over the years. They estimated that building energy codes could bring $126 billion in savings to consumers’ utility bills in that timeframe, which equates to a reduction in carbon pollution of 841 million metric tons: equivalent to the greenhouse gases emitted by 177 million passenger vehicles driven for one year, or the carbon dioxide emissions from 245 coal power plants for one year or 89 million homes. Nowadays, it is widely accepted that building energy codes do help save energy [3,9,10,11].

Code compliance is a vital link between actual energy savings and the energy efficiency prescribed in the energy code books [12,13]. The energy savings from stringent energy codes cannot be delivered unless new buildings are constructed in full compliance with the code. Non-compliance has been noted as an issue internationally, e.g., in the United Kingdom [14], the U.S. [12], China [13,15], and many developing countries [16]. It also seems that countries such as China with a mandatory energy efficiency code show quite high compliance [15], and the effectiveness of the building energy efficiency standards (BEES) of China was confirmed through field study and analysis with a data-driven approach [17]. In the U.S., state legislatures, local governments, utility companies, and energy efficiency organizations are interested in knowing the status quo of code compliance and the energy-savings potential in their jurisdictions [12]. Obsolete building codes hurt the international competitiveness of the U.S. construction industry [3]. Knowing the status quo allows a state to identify common causes of non-compliance and assess the energy and economic impacts of updating to a more stringent code [18]. Identifying key technology trends and quantifying the value of increased compliance are often required by state regulatory agencies (e.g., utility commissions) as a prerequisite to assigning value and attribution for programs contributing to state energy efficiency goals [18]. Utilities also rely on energy code compliance for resource planning and the payback of investments [19], and they are credited with energy savings from their code compliance assistance efforts [13]. Improving code compliance could have a tremendous economic impact. Research estimates that the national savings from bringing just one year’s worth of new residential and commercial construction in the U.S. into full compliance with the building energy code are 2.8–8.5 trillion Btu annually, or $63–$189 million in energy cost savings annually, which equates to lifetime savings of up to $37 billion for just five years of construction [12]. A study conducted by the Institute for Market Transformation (IMT) indicated that every dollar spent on code compliance and enforcement efforts leads to a six-fold return in energy savings [20].

Historically, most building energy code compliance studies have not directly estimated energy savings, because compliance rate methods that rely on some form of prescriptive checklist have been the most commonly used approach [12,21]. A compliance rate captures the fraction of buildings that meet all the prescribed code requirements. Most compliance evaluations stop at providing merely a compliance rate; however, knowing only the compliance rate makes it difficult to estimate the potential energy savings impact of improved compliance [21,22]. Additional analysis is needed to convert the raw or aggregate compliance scores resulting from a checklist or pass–fail approach into energy metrics [12]. Prescriptive checklists have been developed with possible weighting of code items to account for their varying energy impacts [23,24]. In addition, code compliance evaluation requires multiple site visits to complete a survey, because which measurements can be recorded depends on the stage of the construction process. These surveys are expensive, and it is difficult to collect enough data points to perform engineering calculations or computer simulations that would provide statistically representative code compliance rates at the state level.
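To make the limitation of the compliance rate concrete, the following minimal sketch computes it from pass–fail checklist results. The checklist items and the four homes are hypothetical and serve only as an illustration; they are not data from the field study.

```python
from typing import Dict, List


def compliance_rate(homes: List[Dict[str, bool]]) -> float:
    """Fraction of homes that pass every checklist item."""
    if not homes:
        raise ValueError("at least one home is required")
    fully_compliant = sum(1 for items in homes if all(items.values()))
    return fully_compliant / len(homes)


# Hypothetical pass-fail results for four homes (illustration only).
homes = [
    {"lighting": True, "wall_insulation": True, "duct_leakage": True},
    {"lighting": False, "wall_insulation": True, "duct_leakage": True},
    {"lighting": True, "wall_insulation": True, "duct_leakage": False},
    {"lighting": True, "wall_insulation": True, "duct_leakage": True},
]

rate = compliance_rate(homes)
print(f"compliance rate: {rate:.0%}")
```

The two non-compliant homes count equally toward the rate even though they fail different items, which may have very different energy impacts. This is why a compliance rate alone cannot be translated directly into energy savings.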

As more builders follow the performance pathway to meet building energy code requirements, building energy performance metrics and building energy simulation models serve a greater role in conducting code compliance evaluation [25,26]. Building simulation has been widely used to support building energy-efficiency studies for a variety of research and practical purposes. Compared to in situ building experiments, building energy simulation provides a numerical experiment in a relatively fast, low-cost, and controllable environment to investigate the impact of design choices and technologies on a building’s overall energy performance. There are many sophisticated building energy modeling tools that apply physics-based principles to simulate detailed building energy patterns. EnergyPlus [27] is one that has been used widely for building energy code development in the U.S. and was thus chosen for the evaluation of code compliance in this study.

There are several challenges in using building simulation to evaluate the energy impacts of code compliance. One of the primary challenges is having enough data inputs to inform the building energy model. When building energy simulation is used to compare individual building design options and technologies or to evaluate retrofit measures, model inputs can be derived from building design blueprints, building permits, or actual observations of individual buildings under retrofit. Prototypical building models are generally used to support energy code development or evaluate energy efficiency measures for a population of buildings [28,29,30]. The general energy characteristics of the prototypical buildings are known, as are the operations and control schemes [31,32]. However, these assumptions are not necessarily valid for developing a building simulation tool based on code compliance measurements taken from actual buildings.

The evaluation of code compliance requires in-field data collection to compare results against minimum code requirements. A single-family home typically takes several months to complete, and the entire building construction process is complex. These factors make it very difficult to know whether a home complies with the energy code in its entirety, as not all energy-efficiency measures are in place or visible at any given point during the home construction process. For example, when homes are visited during earlier stages of construction, key features affecting energy performance (e.g., walls with insulation) may not be in place yet. Conversely, when homes are visited during later stages, these items may no longer be observable because they are already covered. Therefore, to gather all the data required in the sampling plan, field teams needed to visit homes in various stages of the construction process [24]. Multiple site visits during different construction phases not only increase the survey cost but also introduce biases into the data collection, because the builder is aware of the upcoming visits. The builder’s practice may be altered by knowing there are follow-up compliance assessments in the future. To account for these potential biases, field visits are conducted on a small sample of buildings where code items are recorded from a variety of different homes. As such, no home provides a complete representation of its compliance.

This shortage of complete data for individual homes introduces an analytical challenge, because building energy simulation tools require a complete set of inputs to generate reliable results [24]. Since comprehensive field surveys for the energy simulation of individual buildings become impractical for a code compliance evaluation at the scale of an entire state, this study leverages a novel modeling framework that combines limited field data collection with large-scale simulation. The framework covers all aspects of conducting a residential energy code field study in single-family homes, including sampling homes under construction for field visits, data collection during a site survey, and the subsequent building energy simulation and analysis. The research questions we address are the status quo of code compliance in new single-family homes in a state in terms of building energy consumption, the energy impact of non-compliance with energy codes, and whether targeted education and training could reduce non-compliance and its impact.

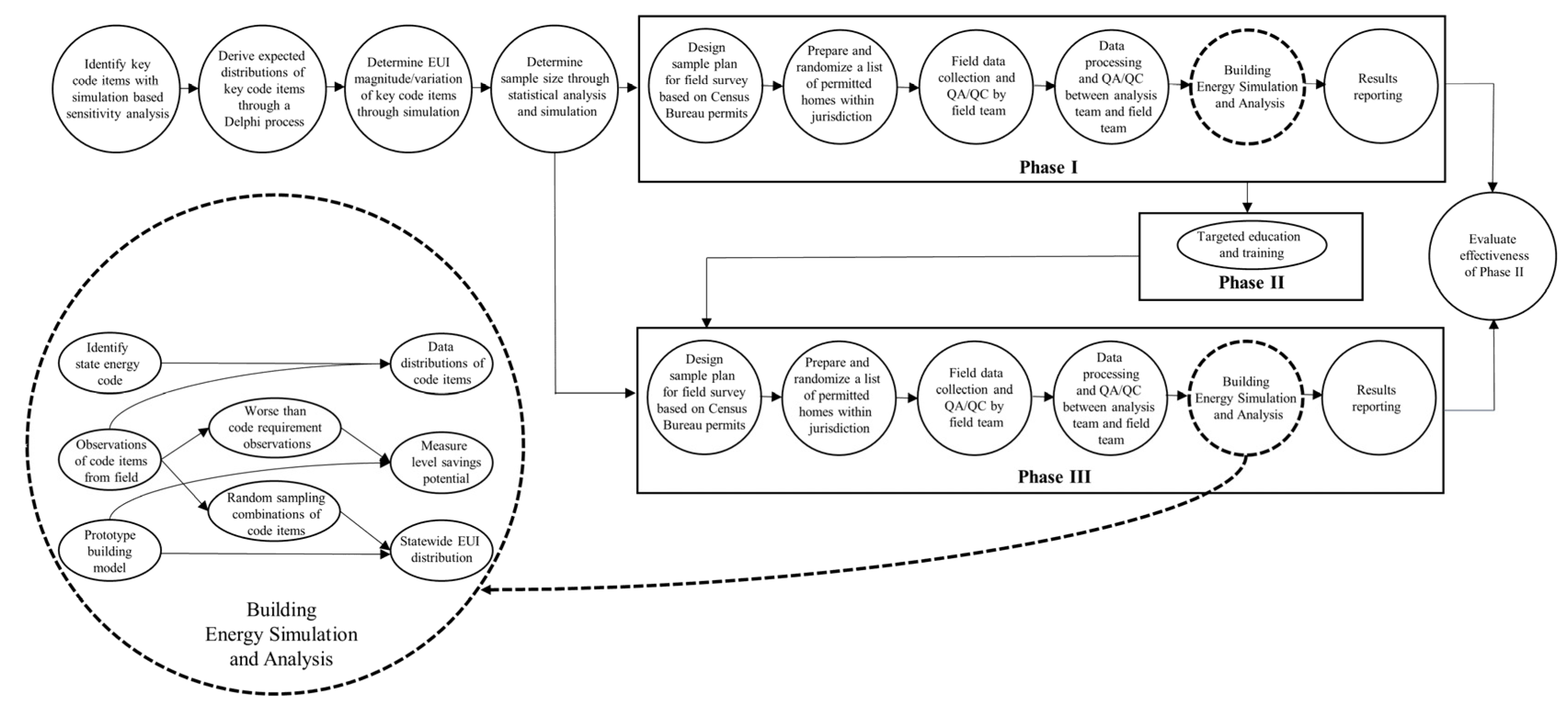

The entire study includes three phases. Phase I establishes a baseline to evaluate the status quo of energy use in typical new residential construction in the state and to identify specific code items that are not complied with and can therefore be targeted to achieve better energy savings. Once specific code measures are identified, specific education, training, and outreach activities can be developed for Phase II. These multi-year activities are offered to builders to improve compliance rates and installation practices. Phase III is the final stage of the field studies, where follow-up field data are collected following the same survey methodology as in Phase I. This paper focuses on the complete data analysis of Phase III and the comparison of analysis results between Phase III and Phase I, providing results on the impact of the education and training activities on code compliance and energy-savings potential.

The remainder of this paper is organized into five sections. Section 2 provides an overview of the three phases of this study and the states participating in the initial pilot. Section 3 introduces the aspects of the framework that have otherwise been described and applied in Phase I [33] and part of Phase III [34], with a focus on the comparison between phases. Section 4 presents the results for seven states of the U.S. Section 5 describes the larger impact and opportunities that the code studies present. Section 6 concludes the paper and summarizes the key contributions.

2. Background

Building energy codes save energy, and savings can be theoretically quantified through code-book to code-book comparison with the aid of computer simulation. However, construction is neither a simulation model nor a physical laboratory; savings that assume perfect code compliance do not reflect reality. The commonly used checklist compliance rate approach has weaknesses because it is assumed to be a proxy for energy, but that connection was never empirically established [35]. Little research has been done to evaluate compliance in a consistent and reproducible manner, due to the complex nature of this matter [36]. To address the lack of information available on energy code impacts, the U.S. Department of Energy initiated an Energy Code Field Study to help document baseline practices, target areas for improvement, and further quantify the related savings potential [24]. This information is intended to assist states in measuring energy code compliance and to identify areas of focus for future education and training initiatives [37].

A multi-year residential energy code field study was initiated by the United States Department of Energy (U.S. DOE) in 2015. The goal of the study was to determine whether an investment in education, training, and outreach programs targeted at improving code compliance can produce a significant, measurable change in single-family residential building energy use [35]. The study consists of (1) establishing a framework to evaluate the current status of code compliance and quantify code-related energy savings opportunities in single-family residential construction, and (2) testing whether compliance could be improved through energy code education, training, and outreach activities. Eight U.S. states, including Alabama (AL), Arkansas (AR), Georgia (GA), Kentucky (KY), Maryland (MD), North Carolina (NC), Pennsylvania (PA), and Texas (TX) participated in the pilot study by responding to the U.S. DOE Funding Opportunity Announcement (FOA), “Strategies to Increase Residential Energy Code Compliance Rates and Measure Results” [37,38].

The study includes three phases. A framework for evaluating residential building code compliance was developed during Phase I. The framework includes plans for site surveys, protocols for data collection, and a methodology for data analysis including EnergyPlus simulation. The analysis methodology replaces the historic compliance rate approach with the use of building energy simulation [24]. Prototype building models are used for the analysis. Limited field data are collected, and bootstrap sampling [39,40] is applied to generate inputs for many building models on which EnergyPlus simulation is conducted. The bootstrap is a widely used, computationally intensive statistical tool that repeatedly samples with replacement from the empirical distribution to improve the statistical assessment of the population. It is increasingly used in the energy efficiency area [41,42,43]. In the context of assessing code compliance in a state, the population is all the new homes constructed in one year. While it is not possible to survey all homes under construction, ideally one wants to draw large, non-repeated samples from the population. However, one is generally limited to one sample with limited instances because of limited resources. Like other statistical tools, the bootstrap is based on the plug-in principle, which is to substitute something unknown with an estimate [39,40]. For example, one uses the sample mean as an estimate of the population mean. With the bootstrap, one goes one step further: instead of plugging in an estimate for a single parameter, one plugs in an estimate for the whole population by treating this single sample as a mini population, from which repeated samples are drawn with replacement. The developed methodology has previously been applied to field data collected during Phase I in the eight pilot states funded by the FOA [33]. The analysis identified gaps in code compliance, and those to-be-improved code items became targets for training, education, and outreach activities. The energy-savings potential of the to-be-improved code items was also estimated [33].
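The resampling idea described above can be illustrated with a minimal sketch. It assumes, for illustration only, that each key item is resampled independently from its pooled field observations to assemble a complete input set for one pseudo-home; the item names, the observation values, and the function `bootstrap_pseudo_homes` are hypothetical and do not reproduce the study's actual implementation or its EnergyPlus workflow.

```python
import random
from typing import Dict, List


def bootstrap_pseudo_homes(
    observations: Dict[str, List[float]],
    n_homes: int,
    seed: int = 0,
) -> List[Dict[str, float]]:
    """Assemble pseudo-homes by sampling each key item with replacement.

    Assumption: key items are drawn independently of one another, so a
    pseudo-home may combine observations recorded in different homes.
    """
    rng = random.Random(seed)
    homes = []
    for _ in range(n_homes):
        # rng.choice draws one value; repeated calls sample with replacement.
        home = {item: rng.choice(values) for item, values in observations.items()}
        homes.append(home)
    return homes


# Hypothetical pooled field observations (illustration only).
field_observations = {
    "ach50": [3.1, 4.8, 5.2, 6.7, 7.4],
    "duct_leakage_cfm25_per_100sqft": [2.5, 3.9, 4.0, 6.1],
    "high_efficacy_lighting_pct": [40.0, 75.0, 90.0, 100.0],
}

pseudo_homes = bootstrap_pseudo_homes(field_observations, n_homes=1500, seed=1)
print(len(pseudo_homes), pseudo_homes[0])
```

Each pseudo-home then has a complete set of inputs, even though no single surveyed home provided all of them; in the study, such input sets feed the building energy simulations.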

Following Phase I, seven of the eight pilot states (Arkansas dropped out after Phase I) spent two years implementing a variety of intervention strategies, which were focused on the to-be-improved code items identified in Phase I. The education, training, and outreach activities included in-person trainings, circuit rider assistance with code officials or builders, distribution of code books, compliance guides, and energy stickers for panel certificates, online videos, and workshops with presentations. These Phase II activities varied by state based on local stakeholder preferences and other state-specific constraints.

The Phase III field data collection and analysis are based on the same framework developed and applied in Phase I, aiming to assess the effectiveness of the education, training, and outreach activities of Phase II. Partial results for four pilot states at Phase III were previously reported [34]. All pilot states (except for Arkansas, which dropped out of the study) have completed the Phase III data collection and analysis. Additionally, a dozen other states have used the methodology to start single-phase studies evaluating the current status of code compliance and quantifying code-related energy savings opportunities in those states, with the U.S. DOE providing the technical analyses through the Pacific Northwest National Laboratory (PNNL). This paper focuses on the results of the pilot states that have completed the full three-phase study.

4. Results

This section presents the results of the seven of the eight pilot states that have completed all three phases: Alabama, Georgia, Kentucky, Maryland, North Carolina, Pennsylvania, and Texas. The average state-wide energy consumption results are first presented in Section 4.1. The results also include the distributions of the modeled energy-use intensity (EUI) based on the recorded observations from Phase I and Phase III, as well as the modeled code-compliant EUI for each state. Section 4.2 presents the measure-level savings potential of Phase III and Phase I. The measure-level savings potential roughly estimates how much saving can be achieved if worse-than-code observations are brought up to the code requirement level through improved code compliance. A reduction in the measure-level savings potential from Phase I to Phase III indicates an improvement in code compliance in Phase III. Section 4.3 presents the distributions of the key items collected in Phase III and Phase I.

4.1. State-Wide Average Energy Consumption

Figure 2 shows the EUI distributions of the 1500 pseudo-homes of the seven states by climate zone at Phase I and Phase III, respectively. The solid vertical line denotes the EUI of code-compliant homes, and the dashed vertical line represents the average EUI of the observed homes. Alabama, Georgia, Kentucky, Maryland, and Texas show a leftward shift of the dashed vertical line from Phase I to Phase III, indicating a lower average EUI of the observed homes at Phase III than at Phase I, which is potentially an outcome of improved code compliance. North Carolina and Pennsylvania show a rightward shift of the dashed vertical line from Phase I to Phase III, indicating a higher average EUI of the observed homes at Phase III than at Phase I, which is potentially an outcome of decreased code compliance and is worthy of further investigation.

Table 4 compares the baseline (code compliant) EUI and average observed EUI for the seven states at both Phase I and Phase III.

The initial U.S. DOE field study methodology was designed to detect an EUI difference of 14.20 MJ/m²·yr between Phases I and III. Any change in excess of that threshold would indicate that a statistically significant change between phases was found.

The average observed EUI decrease for five of the seven states ranges from 3.9% in Alabama to 9.8% in Maryland. The absolute reduction in Georgia, Kentucky, Maryland, and Texas exceeds the threshold of 14.20 MJ/m²·yr, indicating that there is a significant reduction of energy consumption from Phase I to Phase III in these four states on average. The observed average EUI in Alabama decreases from Phase I to Phase III but the difference is below the 14.20 MJ/m²·yr threshold, so the result is inconclusive. In contrast, North Carolina and Pennsylvania saw an increase in the state average EUI from Phase I to Phase III but achieved EUIs that remained below the code compliance EUI.
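The threshold rule used in this comparison can be sketched as follows. Only the 14.20 MJ/m²·yr threshold comes from the study design; the function `classify_change` and the example EUI values are hypothetical and are not the values reported in Table 4.

```python
from typing import Tuple

# Detectable difference from the study design, in MJ/m2.yr.
THRESHOLD_MJ_PER_M2_YR = 14.20


def classify_change(
    eui_phase1: float,
    eui_phase3: float,
    threshold: float = THRESHOLD_MJ_PER_M2_YR,
) -> Tuple[str, bool]:
    """Return the direction of the EUI change and whether it exceeds the threshold."""
    delta = eui_phase3 - eui_phase1
    if delta < 0:
        direction = "decrease"
    elif delta > 0:
        direction = "increase"
    else:
        direction = "no change"
    significant = abs(delta) > threshold
    return direction, significant


# Hypothetical state-average EUIs in MJ/m2.yr (illustration only).
print(classify_change(400.0, 380.0))  # decrease larger than the threshold
print(classify_change(400.0, 390.0))  # decrease smaller than the threshold
```

A change that stays within the threshold is reported as inconclusive rather than as evidence of improvement or deterioration.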

4.2. Measure-Level Saving Analysis

The measure-level savings potential of each of the key items, accumulated across all seven states that participated in the entire study, is presented in Table 5. The measure-level savings potential is an indicator of how well homes performed compared to code-compliant homes. If all homes meet code, there is no savings potential. Therefore, a reduction in savings potential indicates an improvement in code compliance. It can be seen from Table 5 that the joint savings potential of all of the key items across the states shows a reduction in both energy and cost savings potential, indicating improved compliance.
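The logic of the measure-level savings potential can be sketched under simplifying assumptions. In the study the energy impact comes from building energy simulation; here a hypothetical linear function stands in for it, and the code requirement and the observed values are illustrative only.

```python
from typing import Callable, List


def raise_to_code(values: List[float], requirement: float, higher_is_better: bool) -> List[float]:
    """Replace worse-than-code observations with the code requirement."""
    if higher_is_better:
        return [max(v, requirement) for v in values]
    return [min(v, requirement) for v in values]


def savings_potential(
    values: List[float],
    requirement: float,
    higher_is_better: bool,
    energy_model: Callable[[float], float],
) -> float:
    """Energy saved if every worse-than-code observation just met code."""
    observed = sum(energy_model(v) for v in values)
    adjusted = sum(energy_model(v) for v in raise_to_code(values, requirement, higher_is_better))
    return observed - adjusted


# Hypothetical stand-in for a simulation: energy rises linearly with leakage.
def toy_energy_model(ach50: float) -> float:
    return 10.0 * ach50


# Hypothetical envelope tightness observations; lower values are better.
potential = savings_potential([3.0, 5.0, 7.0, 9.0], 5.0, False, toy_energy_model)
all_compliant = savings_potential([3.0, 4.0], 5.0, False, toy_energy_model)
print(potential, all_compliant)
```

Better-than-code observations are left unchanged, so they do not offset the worse-than-code ones, and a sample in which every home meets code yields zero savings potential.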

In the Supplementary Materials, Table S1 presents the measure-level savings potential of each key item for each individual state based on both Phase I and Phase III calculations.

In the seven states, most key items exhibit improvement. For Alabama, Georgia, and Maryland, all to-be-improved key items identified at Phase I improved, with reductions of 12% to 98% in energy-savings potential and 13% to 94% in cost-savings potential. Overall, this leads to a 28% to 78% reduction in energy-savings potential and a 29% to 80% reduction in cost-savings potential in these three states.

Four out of six key items in Kentucky and four out of five key items in Texas show a reduction in both energy and cost-savings potential. However, two key items in Kentucky and one key item in Texas show an increase in both energy and cost-savings potential, hinting that the code compliance of those few key items deteriorated from Phase I to Phase III. Despite this, there is still a 25% to 46% reduction in both the energy and cost-savings potential in these two states.

North Carolina and Pennsylvania show an opposite trend. Although half of the to-be-improved key items were improved, the other half got worse, leading to an overall increase of energy and cost-savings potential and suggesting that overall, code compliance became worse in these two states. The measure-level results are consistent with the state-wide results shown in Table 4.

4.3. Distribution of Key Items

Figures S1–S6 present the histograms of several key items collected in both Phase III and Phase I for the seven states. In each of Figures S1–S6, there are seven plots, one for each state. Each plot consists of two panels. The top panel shows the data distribution of Phase I, and the bottom panel shows the data distribution of Phase III. A text box located in either the top left or top right corner of each panel displays the number, the mean, and the median of the observations collected during Phase I or Phase III. The dashed vertical line(s) shows the code requirement(s). The state name is shown in the plot title. While observations across the entire distribution contribute to the state average EUI, as shown in Table 4 and Figure 2, only those observations to the left of the code requirement denoted by the dashed vertical lines contribute to the savings potential calculations, as shown in Table 5 and Table S1.
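The statistics reported in each panel's text box, together with the count of observations that fall to the left of the code requirement line, can be computed as in the sketch below. The function `panel_summary`, the requirement value, and the lighting percentages are hypothetical and are not taken from Figures S1–S6.

```python
import statistics
from typing import Dict, List


def panel_summary(values: List[float], requirement: float) -> Dict[str, float]:
    """Number, mean, and median of the observations, plus the count left of the code line."""
    left_of_line = [v for v in values if v < requirement]
    return {
        "n": len(values),
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "n_left_of_code_line": len(left_of_line),
    }


# Hypothetical high-efficacy lighting percentages (illustration only).
phase1 = panel_summary([20, 50, 75, 100], requirement=75)
phase3 = panel_summary([60, 75, 90, 100], requirement=75)
print(phase1)
print(phase3)
```

The mean and median describe the whole distribution, whereas the count to the left of the line relates only to the observations that enter the savings potential calculation, so the two can move in different directions between phases.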

4.3.1. High-Efficacy Lighting

Figure S1 shows the distribution of high-efficacy lighting. Based on the histograms and on the mean and median in the text boxes of the plots, Phase III shows clearly higher values than Phase I for all seven states, indicating an unambiguous improvement in terms of code compliance.

In the portion of the histogram to the left of the dashed vertical line, which is the distribution of the worse-than-code observations, both the magnitude of the shortfall and the frequency of occurrence are reduced from Phase I to Phase III. This is consistent with the reduction in energy and cost-savings potential for all seven states, as shown in Table S1. As discussed in the description of the measure-level savings potential analysis, the measure-level savings potential focuses on bringing the worse-than-code observations up to the code requirement, so it is associated with the observation distribution on the left side of the dashed vertical line in the plots. For this key item, the histogram, especially the part on the left side of the dashed vertical line, supports the reduction of savings potential from Phase I to Phase III.

4.3.2. Exterior Wall Insulation

Figure S2 shows the distribution of U factors of exterior wall insulation.

Both the mean and median in the text boxes show lower values in Phase III than Phase I for Kentucky, Maryland, North Carolina, Pennsylvania, and Texas, but not unambiguously for Alabama and Georgia.

The higher median in Phase III than Phase I, as shown in the text box of the plot for Alabama, seems contradictory to the reduction of savings potential, as shown in Table S1. However, it should be emphasized that the measure-level saving is a compound result of all observations on the left side of the code requirement dashed vertical line. Therefore, it is not easy to map the changes in the savings potential directly onto a visual reading of the distributions.

Although there is no straightforward mapping between the savings potential and the histogram, the distribution of observations to the left side of the dashed vertical line in Maryland provides a clear example in which the reduction of savings potential of this key item must be driven by the decrease in the number of worse-than-code observations and the rightward shift of the worse-than-code observations toward the dashed vertical line from Phase I to Phase III.

As already pointed out above, the value distributions shown in Figures S1–S6 carry different information from that carried by the savings potential in Table S1. Only the observations on the left side of the dashed vertical line contribute to the calculated savings potential.

4.3.3. Envelope Tightness (ACH50)

Figure S3 shows the distribution of envelope tightness. As indicated by the numbers shown in the text boxes of the plots for Alabama, Georgia, Kentucky, Maryland, and Texas, both mean and median are lower in Phase III than in Phase I in these five states. From the portion of the histogram to the left of the dashed vertical line for Georgia, Kentucky, Maryland, and Texas, the reduction in the energy and cost-savings potential is evident.

For North Carolina and Pennsylvania, both the mean and median of the observations of Phase III are larger than those from Phase I. Furthermore, the portion of the histogram to the left of the dashed vertical line makes clear the cause of the increase in energy and cost-savings potential from Phase I to Phase III in these two states, as shown in Table S1.

4.3.4. Ceiling Insulation

Figure S4 shows the distribution of U factors of ceiling insulation. While the portion of the histograms to the left of the dashed vertical line for Alabama, Georgia, and Maryland clearly suggests the reduction of the energy and cost-savings potential from Phase I to Phase III, as shown in Table S1, it is not easy to make such a mapping between the histogram and the reduction or increase of the savings potential shown in Table S1 in other states.

Worsening ceiling insulation is one of the major contributors to the increase in savings potential for Pennsylvania, as shown in Table S1. Further investigation of the R-value distribution of ceiling insulation found that the R-value of the insulation material meets or exceeds the code requirement in Phase III, and the distributions in Phase III and Phase I are similar. However, the insulation installation quality of the two phases is quite different. While Phase I has fractions of 53%, 45%, and 23% split for type I, II, and III installation, respectively, the fractions of installation quality are 12%, 75%, and 7%, respectively, for Phase III. The less than perfect installation quality (type II and III) caused inferior thermal performance and made the overall ceiling performance much worse than in Phase I.

4.3.5. Duct Leakage

Figure S5 shows the distributions of duct leakage. The mean and median in the text boxes of the plots show a decreasing trend for Georgia, Maryland, and Texas, and the portion of the histograms to the left of the dashed vertical line also shows a clear improvement from Phase I to Phase III. These are consistent with the reduced savings potential presented in Table S1.

Although the mean and median for Alabama show opposite trends between Phase III and Phase I, the improvement of the worse-than-code observations is clearly seen in the portion of the histogram to the left of the dashed vertical line, which is consistent with the savings potential reduction, as shown in Table S1.

The means and medians for Kentucky, North Carolina, and Pennsylvania increased from Phase I to Phase III. The portion of the histogram to the left of the dashed vertical line suggests an obvious deterioration from Phase I to Phase III for Kentucky and North Carolina, which is consistent with the increased savings potential shown in Table S1. It seems that the outliers on the high-value tail of the histogram of Phase III for Kentucky and North Carolina are contributing to the increase of savings potential, as shown in Table S1. The higher mean and median of Phase III for Pennsylvania are partially due to the lack of high frequency of low duct leakage observations in Phase I. While this might increase the state average EUI at Phase III, it will not necessarily increase the savings potential at Phase III, because the measure-level savings potential focuses on the improvement of the worse-than-code observations and, in this case, the savings potential did show improvement in Phase III for Pennsylvania.

4.3.6. Window SHGC

Figure S6 shows the distribution of window SHGC. Window SHGC is one of the key items that met code in the Phase I baseline study in most of the states, as shown by the fact that most of the observations are located on the right side of the code requirement denoted by the dashed vertical line. Alabama was the exception, with window SHGC identified as a to-be-improved key item in Phase I. Visual inspection of the portion of the distribution to the left of the dashed vertical line in the two phases explains the savings potential reduction in Phase III, as shown in Table S1.

5. Discussion

A consistent framework based on an energy metric has been established that can quantify gaps in code compliance and the effectiveness of compliance-improving intervention strategies. This approach has recently been used by eight states. We evaluated the state-wide average EUIs of new residential construction and the measure-level savings potential of individual key items both before and after intervention activities such as education and training. We compared both the state-wide average EUI and the measure-level savings potential of the seven states that have completed all three phases. The state-wide EUI results show significant EUI reductions in four states (Georgia, Kentucky, Maryland, and Texas), an inconclusive EUI reduction in Alabama, an EUI increase in Pennsylvania, and an inconclusive EUI increase in North Carolina. The measure-level savings potential analysis shows that all to-be-improved key items identified in Phase I in Alabama, Georgia, and Maryland have been improved. Although the savings potential of two key items increased in Kentucky and that of one key item increased in Texas, the overall savings potential in Kentucky and Texas decreased after Phase II. While the overall savings potential in North Carolina and Pennsylvania increased, three key items in both North Carolina and Pennsylvania show a savings potential reduction after Phase II.

Table S1 indicates that code compliance improved after Phase II's education, training, and outreach activities. There was an overall improvement in five of the seven states. In three of the seven states, every to-be-improved key item improved, whereas in the other four states some key items improved and others worsened. Across all seven states, as shown in

Table 5, all key items show overall improvement. Further study is needed for the key items whose performance deteriorated in individual states after the targeted education, training, and outreach activities.

The distributions of the key items collected in the two phases were also inspected. High-efficacy lighting shows unambiguous improvement after Phase II in all states. Frame wall insulation shows improvement in Kentucky, Maryland, North Carolina, Pennsylvania, and Texas, but it appears to deteriorate slightly in Alabama and Georgia in terms of means and medians. However, when the focus is on bringing worse-than-code observations up to or beyond code, even Alabama and Georgia show improvement, as suggested by the reduction in savings potential from Phase I to Phase III shown in

Table S1. Envelope tightness shows improvement in five of the seven states, i.e., Alabama, Georgia, Kentucky, Maryland, and Texas, based on the descriptive statistics and visual inspection. Ceiling insulation improved in Alabama, Georgia, Maryland, and North Carolina and deteriorated in Pennsylvania, as supported by the descriptive statistics and visual inspection. Kentucky also shows an improvement, as supported by the higher frequency of meet-code observations and the reduced savings potential observed in Phase III. The descriptive statistics suggest an improvement in Texas in Phase III, but the change in the worse-than-code portion shows the opposite trend, based on the histograms in

Figure S4 and the measure-level savings potential in

Table S1. For duct leakage, the descriptive statistics and visual inspection of the histograms indicate that it improved in Georgia, Maryland, and Texas and deteriorated in North Carolina and Pennsylvania. In Alabama and Kentucky, the means and medians show opposite trends from Phase I to Phase III. In Alabama, the heavy tail in the Phase I histogram suggests an improvement from Phase I to Phase III. In Kentucky, outliers in the high-value tail of the Phase III histogram lead to a deterioration in the descriptive statistics.
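As a minimal illustration of how a few high-value outliers can make the mean and median move in opposite directions, consider the following sketch. The values are invented for illustration (arbitrary units, lower is better) and are not taken from the field data.

```python
from statistics import mean, median

# Invented observations, not the study's data.
phase_1 = [3.0, 4.0, 5.0, 6.0, 7.0]
phase_3 = [2.0, 3.0, 4.0, 5.0, 16.0]  # most values are lower, but one is a high-value outlier

# Positive change = worse (higher leakage), negative change = better.
mean_change = mean(phase_3) - mean(phase_1)
median_change = median(phase_3) - median(phase_1)

print(f"mean change:   {mean_change:+.1f}")
print(f"median change: {median_change:+.1f}")
```

Here the mean rises because of the single outlier while the median falls, which is why the histograms are inspected alongside the descriptive statistics.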

Code compliance of window SHGC is generally good for all states in the two phases, judging by the very few occurrences of observations to the left of the dashed vertical line in the histograms in

Figure S6. Window SHGC was identified as a to-be-improved key item in Alabama during Phase I. The descriptive statistics and visual inspection of the histograms indicate an improvement in Phase III over Phase I for Alabama, Maryland, and Texas. For the other states, construction practice with respect to window SHGC appears to have stayed the same between the two phases.

This study aims to evaluate code compliance, in terms of energy metrics, for a large population of buildings at the scale of U.S. states, and the methodology was designed for this purpose. The single site-visit principle enforced during field data collection and the use of limited field data to infer a large number of building energy models constitute the novelty of this study, but they also lead to limitations. For example, it is impossible to know whether a home complies with the building energy code in its entirety, because a single visit cannot gather enough information to determine whether all code requirements have been met. For the same reason, the prescriptive path was assumed in this study, because it is not possible to determine whether common tradeoffs were present. In addition, the building energy models were constructed using prototypes instead of the surveyed homes. Thus, the impacts of certain field-observable items such as size, height, orientation, window area, floor-to-ceiling height, equipment sizing, and equipment efficiency were not included in the analysis [

44]. The experience and lessons learned during this study may be useful for similar future studies.