Energy Conservation Measures for a Research Data Center in an Academic Campus

Abstract

1. Introduction

2. Materials and Methods

3. Results and Discussion

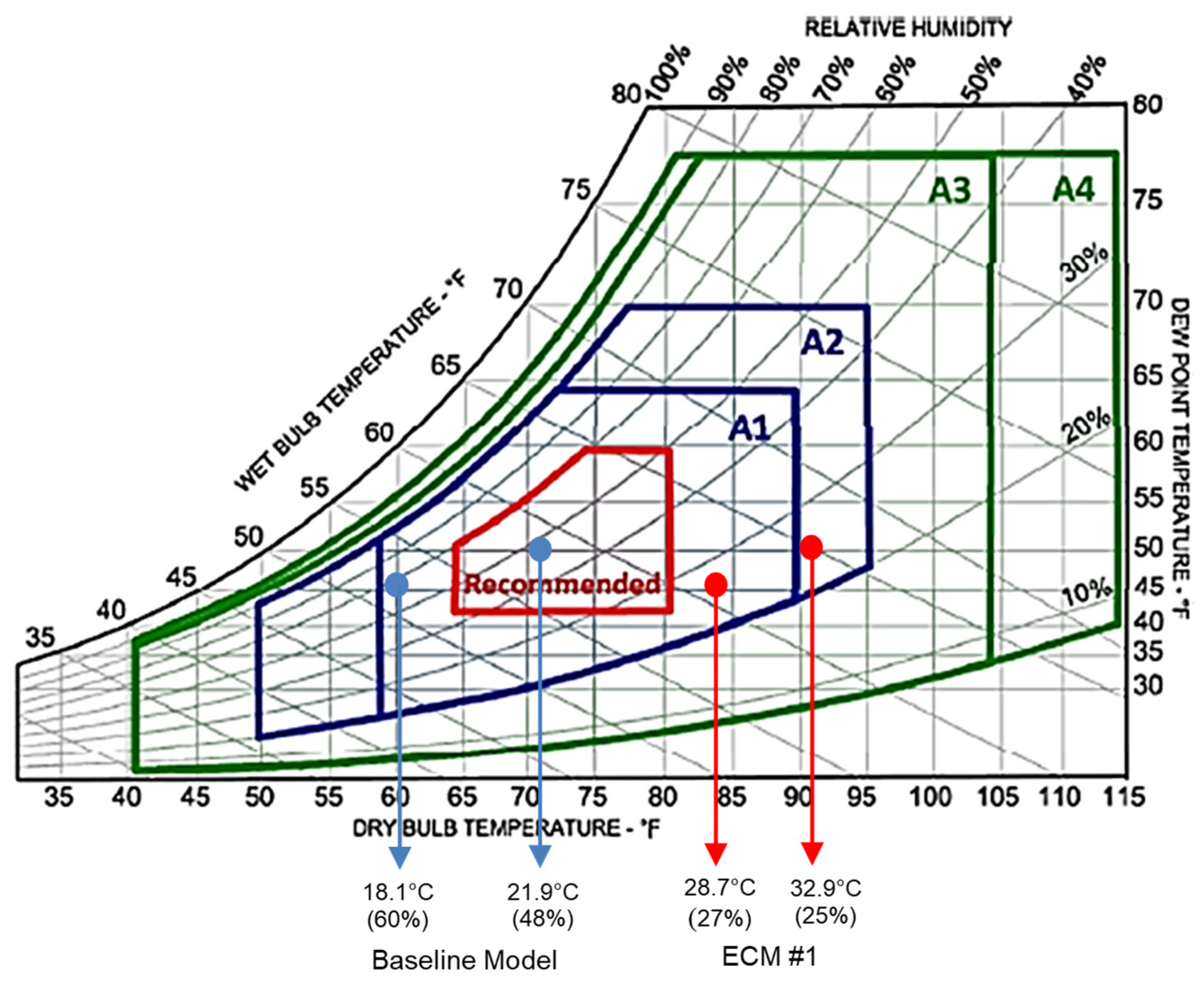

3.1. ECM #1—Increase the Return Air Temperature

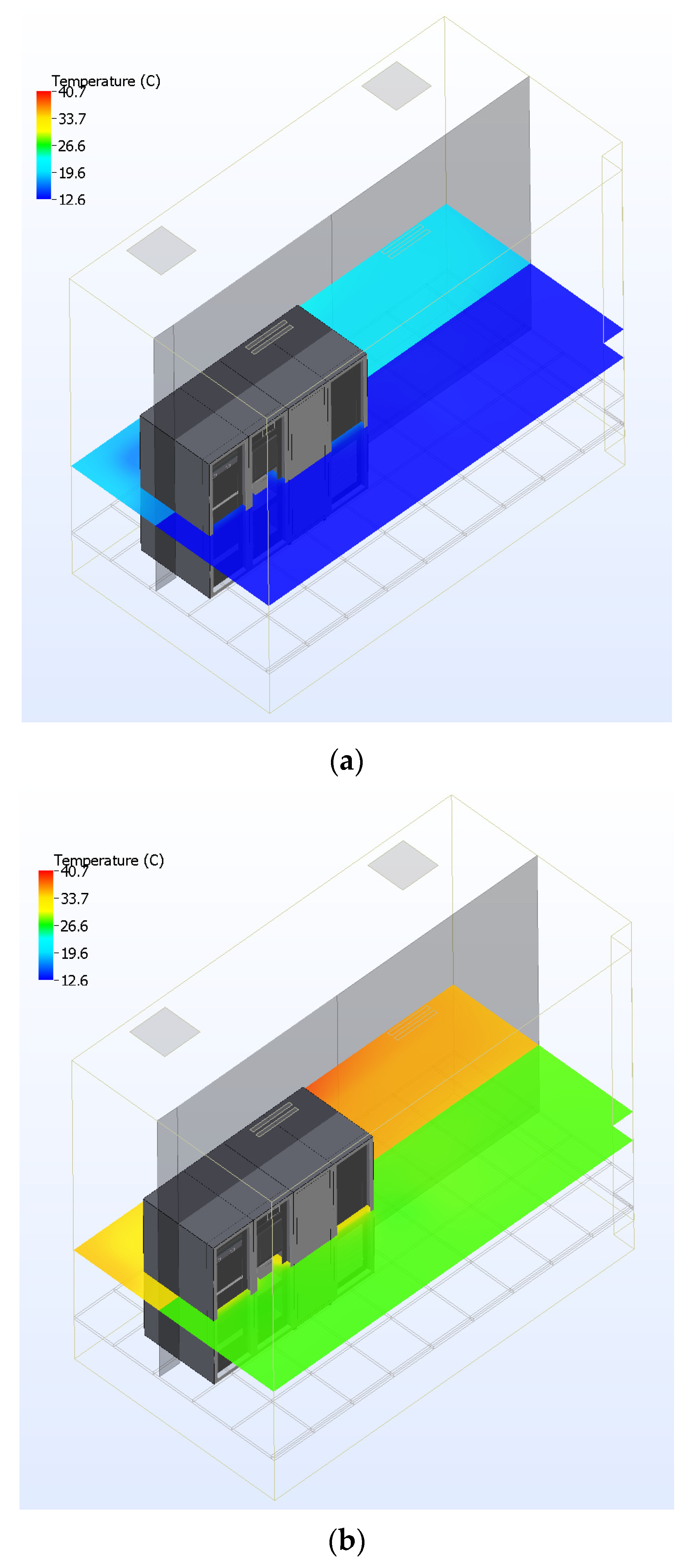

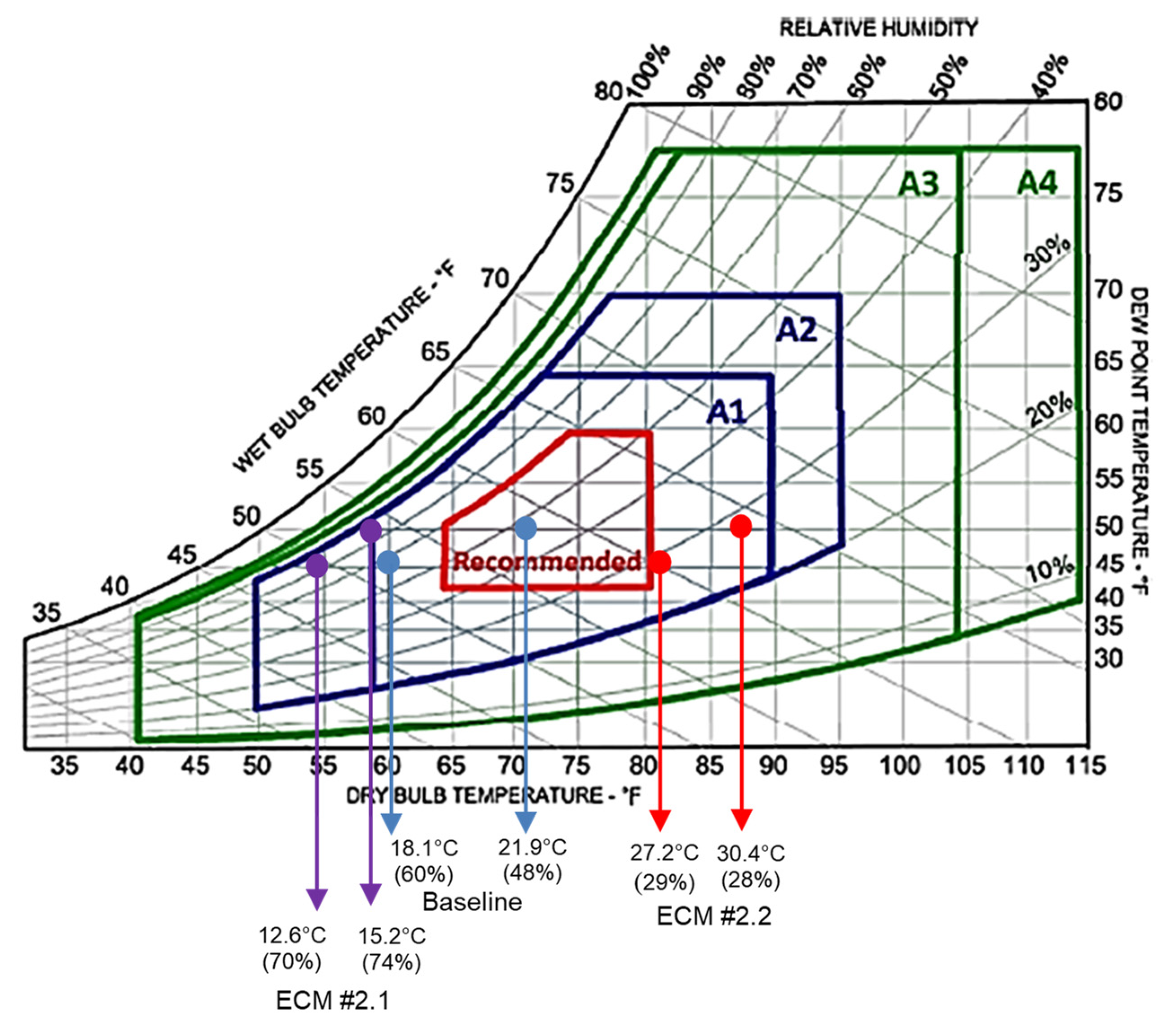
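If the supply air temperature is held fixed, raising the return air temperature widens the temperature difference across which the cooling units remove heat. As an illustration only, and not the authors' calculation, the steady-state sensible heat balance Q = ṁ·cp·(T_return - T_supply), written with the quantities listed in the Nomenclature, shows how a wider difference lowers the air mass flow needed to carry the same heat load. The function name, the value of cp, and all loads and temperatures below are assumptions, not the study's measurements.

```python
# Steady-state sensible heat balance: Q = m_dot * cp * (T_return - T_supply).
# Hypothetical illustration values only; not data from the study.

CP_AIR = 1006.0  # assumed specific heat of air near room temperature [J/(kg·K)]


def required_mass_flow(q_watts: float, t_return_c: float, t_supply_c: float,
                       cp: float = CP_AIR) -> float:
    """Return the air mass flow rate [kg/s] needed to carry q_watts of heat."""
    delta_t = t_return_c - t_supply_c
    if delta_t <= 0:
        raise ValueError("return air must be warmer than supply air")
    return q_watts / (cp * delta_t)


if __name__ == "__main__":
    q_it = 50_000.0  # hypothetical IT heat load [W]
    t_supply = 18.0  # hypothetical supply air temperature, held fixed [°C]
    for t_return in (24.0, 27.0, 30.0):
        m_dot = required_mass_flow(q_it, t_return, t_supply)
        print(f"T_return = {t_return:.0f} °C -> m_dot = {m_dot:.2f} kg/s")
```

For these assumed values, moving the return temperature from 24 °C to 30 °C doubles the temperature difference (6 K to 12 K) and therefore halves the required airflow, which is one route to fan energy savings. Real savings also depend on fan laws and chiller behavior, which this sketch ignores.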

3.2. ECM #2.1—Cold and Hot Aisle Containment and ECM #2.2 Set Point Temperature Increase

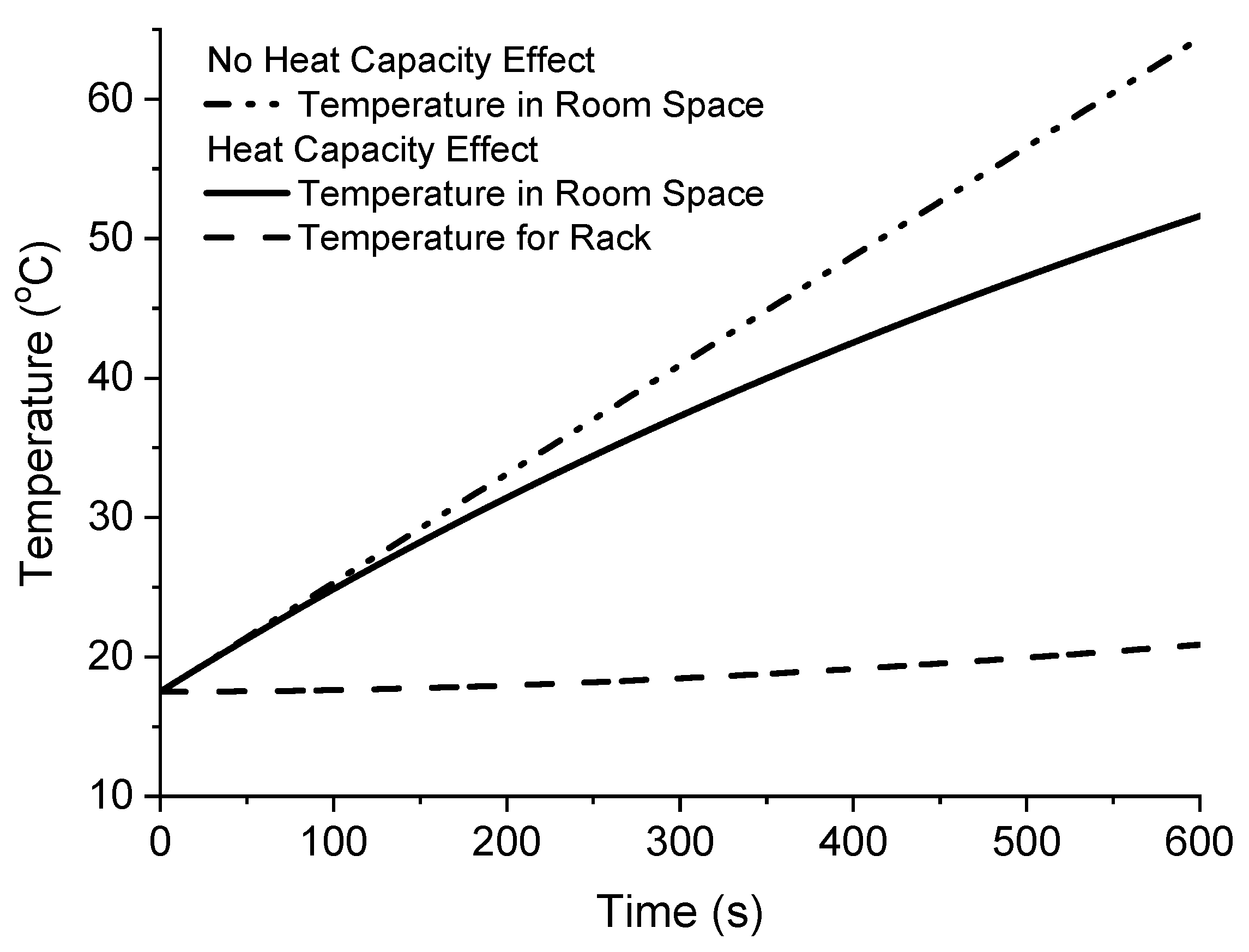

3.3. Transient Analysis

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Nomenclature

| Symbol | Description |
|---|---|
| | Mass flow rate [kg/s] |
| | Temperature [°C] |
| t | Time [s] |
| cp | Specific heat [J/(kg·K)] |
| Q | Generated heat [W] |
| | Heat capacity of room air [J/K] |
| | Heat capacity of rack mass [J/K] |
| | Thermal conductance between room air and rack thermal mass [W/K] |
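The Nomenclature pairs the heat capacities of room air and rack mass with a thermal conductance between them, which suggests a two-node lumped-capacitance model for the transient analysis. The sketch below assumes the balances C_air·dT_air/dt = Q + ṁ·cp·(T_supply - T_air) - UA·(T_air - T_rack) and C_rack·dT_rack/dt = UA·(T_air - T_rack), and integrates them with forward Euler. These equations, the symbol names (C_air, C_rack, UA, T_supply), the class and function names, and every numeric value are assumptions for illustration, not the authors' formulation or data.

```python
from dataclasses import dataclass


@dataclass
class TwoNodeRoom:
    """Hypothetical two-node lumped model: room air node + rack thermal mass node."""
    c_air: float        # heat capacity of room air [J/K]
    c_rack: float       # heat capacity of rack thermal mass [J/K]
    ua: float           # thermal conductance, room air <-> rack mass [W/K]
    cp: float = 1006.0  # assumed specific heat of air [J/(kg·K)]

    def derivatives(self, t_air, t_rack, q, m_dot, t_supply):
        """Return (dT_air/dt, dT_rack/dt) in K/s under the assumed balances."""
        cooling = self.cp * m_dot * (t_supply - t_air)  # supply-air term, negative when cooling
        exchange = self.ua * (t_air - t_rack)            # heat flowing from air into rack mass
        return (q + cooling - exchange) / self.c_air, exchange / self.c_rack


def simulate(room, t_air0, t_rack0, q, m_dot, t_supply, dt, duration):
    """Integrate with explicit (forward) Euler; return [(time_s, T_air, T_rack), ...]."""
    steps = int(round(duration / dt))
    t_air, t_rack = t_air0, t_rack0
    history = [(0.0, t_air, t_rack)]
    for k in range(1, steps + 1):
        d_air, d_rack = room.derivatives(t_air, t_rack, q, m_dot, t_supply)
        t_air += d_air * dt
        t_rack += d_rack * dt
        history.append((k * dt, t_air, t_rack))
    return history


if __name__ == "__main__":
    # Hypothetical parameters: roughly 300 m^3 of air, a heavy rack mass, 50 kW of IT heat.
    room = TwoNodeRoom(c_air=3.6e5, c_rack=5.0e6, ua=2000.0)
    # Assumed cooling interruption: m_dot = 0, so only the two thermal masses absorb the heat.
    run = simulate(room, 24.0, 24.0, q=50_000.0, m_dot=0.0,
                   t_supply=18.0, dt=1.0, duration=600.0)
    for t, t_air, t_rack in run[::120]:
        print(f"t = {t:5.0f} s  T_air = {t_air:6.2f} °C  T_rack = {t_rack:6.2f} °C")
```

With ṁ set to zero, forward Euler keeps C_air·ΔT_air + C_rack·ΔT_rack equal to Q·t at every step (up to rounding), which is a convenient sanity check. The explicit scheme is only stable while dt stays well below C_air/(ṁ·cp + UA); for stiffer parameter sets an implicit method or `scipy.integrate.solve_ivp` would be a safer choice.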


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alsharif, K.I.; Glaspell, A.; Choo, K. Energy Conservation Measures for a Research Data Center in an Academic Campus. Energies 2021, 14, 2820. https://doi.org/10.3390/en14102820


Chicago/Turabian Style: Alsharif, Khaled Iyad, Aspen Glaspell, and Kyosung Choo. 2021. "Energy Conservation Measures for a Research Data Center in an Academic Campus." Energies 14, no. 10: 2820. https://doi.org/10.3390/en14102820