A Forward-Collision Warning System for Electric Vehicles: Experimental Validation in Virtual and Real Environment

Abstract

1. Introduction

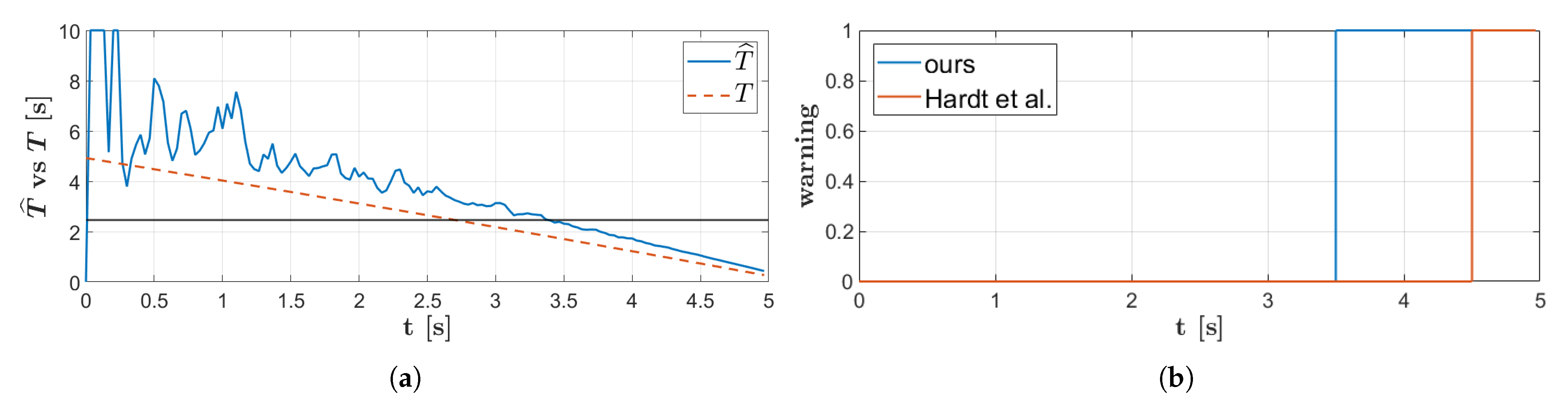

2. Forward Collision Warning

2.1. Object Detection

2.2. Multi-Target-Tracking

2.3. Collision Risk Evaluation

3. Testing and Deployment

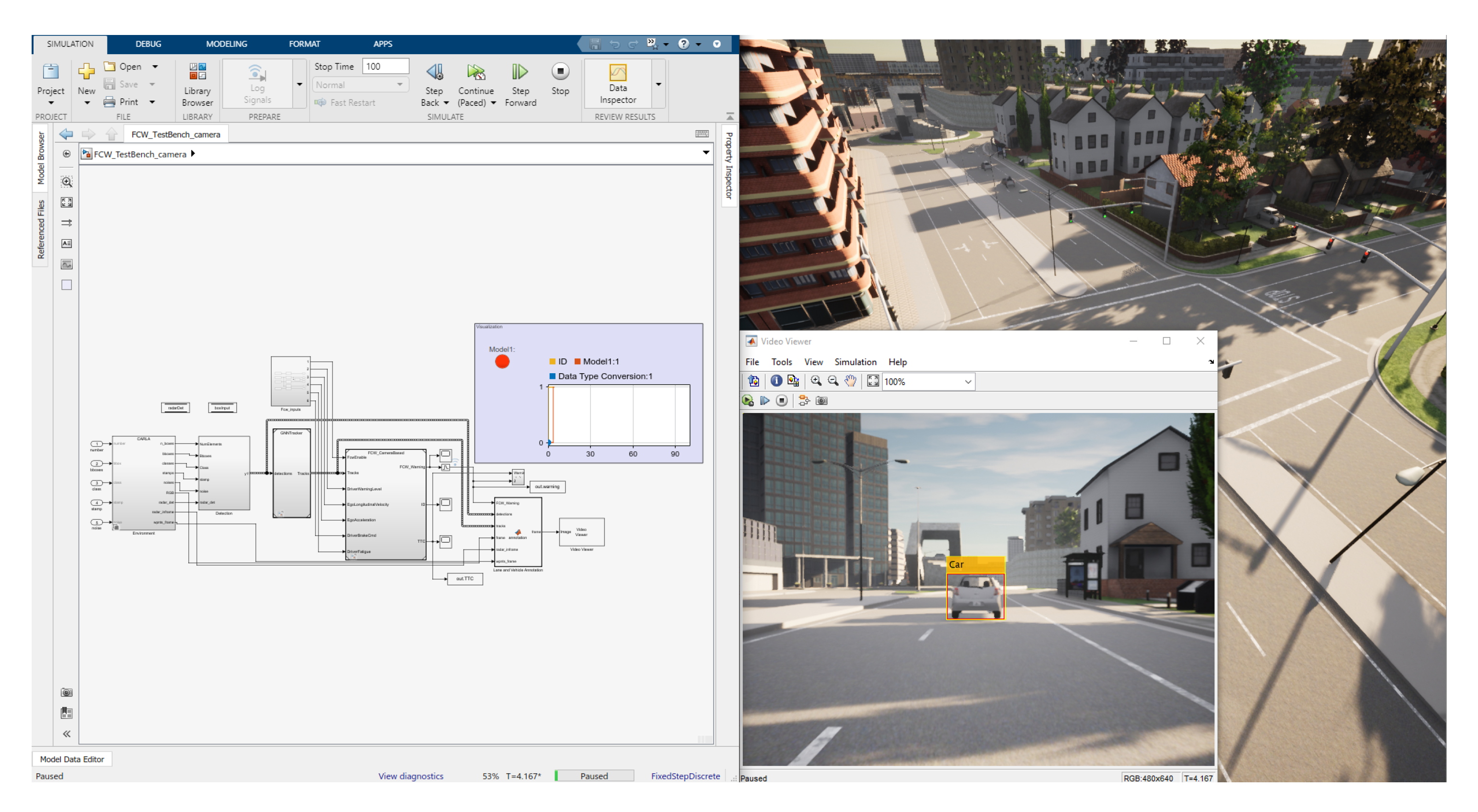

Model-in-the-Loop Testing

- MATLAB/Simulink was used to develop the algorithm and, subsequently, to auto-generate C code through the Embedded Coder toolbox.

- The open-source urban driving simulator CARLA (CAR Learning to Act) [31] was used to design traffic scenarios and generate synthetic sensor measurements.

- Car-to-Car Rear Stationary (CCRS): A collision in which a vehicle travels towards a stationary leading vehicle;

- Car-to-Car Rear Moving (CCRM): A collision in which a vehicle travels towards a slower vehicle moving at constant speed;

- Car-to-Car Rear Braking (CCRB): A collision in which a vehicle travels towards a braking vehicle.
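The three scenarios above differ only in how the leading vehicle moves, so the time until the gap closes can be sketched with simple kinematics. The following is a minimal illustration, not the authors' implementation: the `Scenario` class, the `time_to_collision` function, and all numeric values are hypothetical, and the ego vehicle is assumed to hold a constant speed while the leading vehicle brakes at a constant deceleration until it stops.

```python
import math
from dataclasses import dataclass


@dataclass
class Scenario:
    """One car-to-car rear test case (hypothetical, illustrative values only)."""
    name: str
    gap_m: float            # initial distance between the two vehicles
    ego_speed_mps: float    # following-vehicle speed, assumed constant
    lead_speed_mps: float   # initial speed of the leading vehicle
    lead_decel_mps2: float  # leading-vehicle deceleration (0 = no braking)


def time_to_collision(s: Scenario) -> float:
    """Return the time until the gap closes, or math.inf if it never does."""
    closing = s.ego_speed_mps - s.lead_speed_mps
    if s.lead_decel_mps2 == 0.0:
        # CCRS / CCRM: the relative speed is constant.
        return s.gap_m / closing if closing > 0 else math.inf

    # CCRB: while the leading vehicle is still moving,
    # gap(t) = gap - closing * t - 0.5 * a * t^2.
    a = s.lead_decel_mps2
    t_stop = s.lead_speed_mps / a
    t_hit = (-closing + math.sqrt(closing ** 2 + 2.0 * a * s.gap_m)) / a
    if t_hit <= t_stop:
        return t_hit

    # The leading vehicle stops first; the ego then approaches a fixed target.
    if s.ego_speed_mps <= 0:
        return math.inf
    lead_travel = s.lead_speed_mps ** 2 / (2.0 * a)
    return (s.gap_m + lead_travel) / s.ego_speed_mps


if __name__ == "__main__":
    # Illustrative values, not the speeds prescribed by any test protocol.
    for sc in (
        Scenario("CCRS", 50.0, 10.0, 0.0, 0.0),
        Scenario("CCRM", 30.0, 20.0, 10.0, 0.0),
        Scenario("CCRB", 12.0, 10.0, 10.0, 2.0),
    ):
        print(f"{sc.name}: TTC = {time_to_collision(sc):.2f} s")
```

Treating CCRS and CCRM as the zero-deceleration case keeps a single code path for both, since a stationary leading vehicle is simply one with zero speed.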

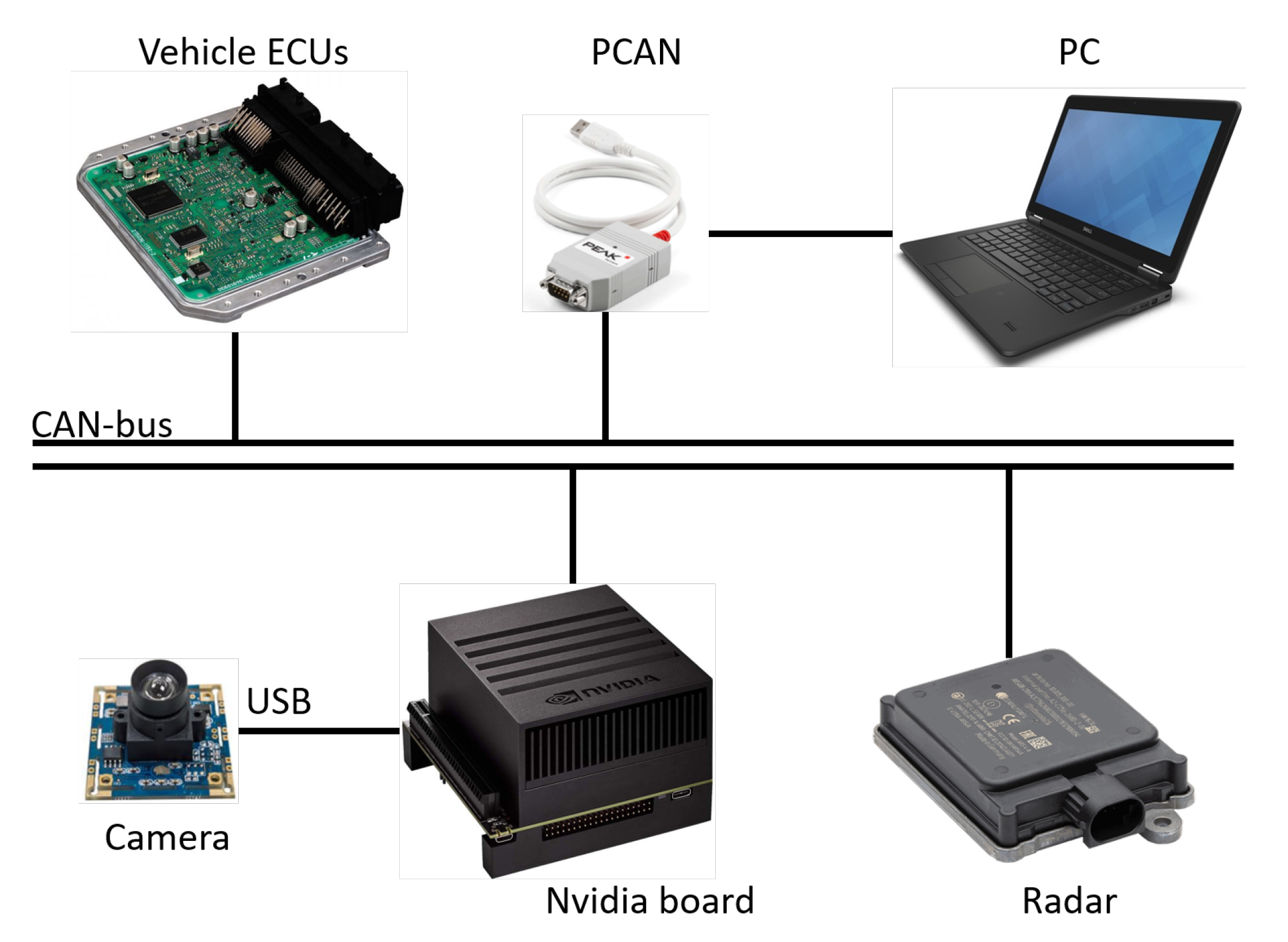

4. Experimental Validation

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| FCW | Forward Collision Warning |
| TTC | Time To Collision |
| CNN | Convolutional Neural Network |
| YOLO | You Only Look Once |
| SSD | Single Shot Detector |
| FPN | Feature Pyramid Network |
| mAP | mean Average Precision |
| MTT | Multi Target Tracker |
| GNN | Global Nearest Neighbour |
| CUDA | Compute Unified Device Architecture |
| CPU | Central Processing Unit |
| GPU | Graphics Processing Unit |
| RAM | Random Access Memory |
| MIL | Model-In-the-Loop |
| CARLA | CAR Learning to Act |
| CCRS | Car-to-Car Rear Stationary |
| CCRM | Car-to-Car Rear Moving |
| CCRB | Car-to-Car Rear Braking |
| RGB | Red Green Blue |
| RADAR | RAdio Detection And Ranging |
| LIDAR | Laser Detection and Ranging |
| EuroNCAP | European New Car Assessment Programme |

References

- WHO. Global Status Report on Road Safety. 2018. Available online: https://www.who.int/publications-detail/global-status-report-on-road-safety-2018 (accessed on 1 July 2021).

- USDOT. Early Estimate of Motor Vehicle Traffic Fatalities for the First 9 Months of 2019. Available online: https://crashstats.nhtsa.dot.gov/Api/Public/ViewPublication/812874#:~:text=A%20statistical%20projection%20of%20traffic,as%20shown%20in%20Table%201 (accessed on 1 July 2021).

- Petrillo, A.; Pescapé, A.; Santini, S. A Secure Adaptive Control for Cooperative Driving of Autonomous Connected Vehicles in the Presence of Heterogeneous Communication Delays and Cyberattacks. IEEE Trans. Cybern. 2021, 51, 1134–1149.

- Di Vaio, M.; Fiengo, G.; Petrillo, A.; Salvi, A.; Santini, S.; Tufo, M. Cooperative shock waves mitigation in mixed traffic flow environment. IEEE Trans. Intell. Transp. Syst. 2019, 20, 4339–4353.

- Santini, S.; Albarella, N.; Arricale, V.M.; Brancati, R.; Sakhnevych, A. On-Board Road Friction Estimation Technique for Autonomous Driving Vehicle-Following Maneuvers. Appl. Sci. 2021, 11, 2197.

- Castiglione, L.M.; Falcone, P.; Petrillo, A.; Romano, S.P.; Santini, S. Cooperative Intersection Crossing Over 5G. IEEE/ACM Trans. Netw. 2021, 29, 303–317.

- Di Vaio, M.; Falcone, P.; Hult, R.; Petrillo, A.; Salvi, A.; Santini, S. Design and experimental validation of a distributed interaction protocol for connected autonomous vehicles at a road intersection. IEEE Trans. Veh. Technol. 2019, 68, 9451–9465.

- Raphael, E.L.; Kiefer, R.; Reisman, P.; Hayon, G. Development of a Camera-Based Forward Collision Alert System. SAE Int. J. Passeng. Cars Electron. Electr. Syst. 2011, 4, 467–478.

- Liu, J.F.; Su, Y.F.; Ko, M.K.; Yu, P.N. Development of a Vision-Based Driver Assistance System with Lane Departure Warning and Forward Collision Warning Functions. In Proceedings of the 2008 Digital Image Computing: Techniques and Applications, Canberra, ACT, Australia, 1–3 December 2008; pp. 480–485.

- Kuo, Y.C.; Pai, N.S.; Li, Y.F. Vision-based vehicle detection for a driver assistance system. Comput. Math. Appl. 2011, 61, 2096–2100.

- Cui, J.; Liu, F.; Li, Z.; Jia, Z. Vehicle localisation using a single camera. In Proceedings of the 2010 IEEE Intelligent Vehicles Symposium, La Jolla, CA, USA, 21–24 June 2010; pp. 871–876.

- Salari, E.; Ouyang, D. Camera-based Forward Collision and lane departure warning systems using SVM. In Proceedings of the 2013 IEEE 56th International Midwest Symposium on Circuits and Systems (MWSCAS), Columbus, OH, USA, 4–7 August 2013; pp. 1278–1281.

- Kim, H.; Lee, Y.; Woo, T.; Kim, H. Integration of vehicle and lane detection for forward collision warning system. In Proceedings of the 2016 IEEE 6th International Conference on Consumer Electronics—Berlin (ICCE-Berlin), Berlin, Germany, 5–7 September 2016; pp. 5–8.

- Liu, M.; Jin, C.B.; Park, D.; Kim, H. Integrated detection and tracking for ADAS using deep neural network. In Proceedings of the 2019 IEEE Conference on Multimedia Information Processing and Retrieval (MIPR), San Jose, CA, USA, 28–30 March 2019; pp. 71–76.

- Mulyanto, A.; Borman, R.I.; Prasetyawan, P.; Jatmiko, W.; Mursanto, P. Real-Time Human Detection and Tracking Using Two Sequential Frames for Advanced Driver Assistance System. In Proceedings of the 2019 3rd International Conference on Informatics and Computational Sciences (ICICoS), Semarang, Indonesia, 29–30 October 2019; pp. 1–5.

- Lim, Q.; He, Y.; Tan, U.X. Real-Time Forward Collision Warning System Using Nested Kalman Filter for Monocular Camera. In Proceedings of the 2018 IEEE International Conference on Robotics and Biomimetics (ROBIO), Kuala Lumpur, Malaysia, 12–15 December 2018; pp. 868–873.

- Nava, D.; Panzani, G.; Savaresi, S.M. A Collision Warning Oriented Brake Lights Detection and Classification Algorithm Based on a Mono Camera Sensor. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019; pp. 319–324.

- Hardt, W.; Rellan, C.; Nine, J.; Saleh, S.; Surana, S.P. Collision Warning Based on Multi-Object Detection and Distance Estimation. 2021; p. 68. Available online: https://www.researchgate.net/profile/Shadi-Saleh-3/publication/348155370_Collision_Warning_Based_on_Multi-Object_Detection_and_Distance_Estimation/links/5ff0e41ca6fdccdcb8264e2c/Collision-Warning-Based-on-Multi-Object-Detection-and-Distance-Estimation.pdf (accessed on 1 July 2021).

- Srinivasa, N.; Chen, Y.; Daniell, C. A fusion system for real-time forward collision warning in automobiles. In Proceedings of the 2003 IEEE International Conference on Intelligent Transportation Systems, Shanghai, China, 12–15 October 2003; Volume 1, pp. 457–462.

- Tang, C.; Feng, Y.; Yang, X.; Zheng, C.; Zhou, Y. The Object Detection Based on Deep Learning. In Proceedings of the 2017 4th International Conference on Information Science and Control Engineering (ICISCE), Changsha, China, 21–23 July 2017; pp. 723–728.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2016; pp. 21–37.

- Sanchez, S.A.; Romero, H.J.; Morales, A.D. A review: Comparison of performance metrics of pretrained models for object detection using the TensorFlow framework. IOP Conf. Ser. Mater. Sci. Eng. 2020, 844, 012024.

- Bar-Shalom, Y.; Li, X.R. Multitarget-Multisensor Tracking: Principles and Techniques; YBS publishing: Storrs, CT, USA, 1995; Volume 19.

- Konstantinova, P.; Udvarev, A.; Semerdjiev, T. A study of a target tracking algorithm using global nearest neighbor approach. In Proceedings of the International Conference on Computer Systems and Technologies (CompSysTech’03), Sofia, Bulgaria, 19–20 June 2003; pp. 290–295.

- Munkres, J. Algorithms for the assignment and transportation problems. J. Soc. Ind. Appl. Math. 1957, 5, 32–38.

- Del Carmen, D.J.R.; Cajote, R.D. Assessment of Vision-Based Vehicle Tracking for Traffic Monitoring Applications. In Proceedings of the 2018 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Honolulu, HI, USA, 12–15 November 2018; pp. 2014–2021.

- Kalman, R.E. A New Approach to Linear Filtering and Prediction Problems. J. Basic Eng. 1960, 82, 35–45.

- Stein, G.P.; Mano, O.; Shashua, A. Vision-based ACC with a single camera: Bounds on range and range rate accuracy. In Proceedings of the IEEE IV2003 Intelligent Vehicles Symposium. Proceedings (Cat. No.03TH8683), Columbus, OH, USA, 9–11 June 2003; pp. 120–125.

- Dosovitskiy, A.; Ros, G.; Codevilla, F.; Lopez, A.; Koltun, V. CARLA: An open urban driving simulator. In Proceedings of the Conference on Robot Learning, Mountain View, CA, USA, 18 October 2017; pp. 1–16.

- EuroNCAP. Safety Assist Protocol. 2020. Available online: https://cdn.euroncap.com/media/56143/euro-ncap-aeb-c2c-test-protocol-v302.pdf (accessed on 1 July 2021).

- Darknet YoloV3 Github Repository. Available online: https://github.com/AlexeyAB/darknet (accessed on 1 July 2021).

- OpenCV Project Reference. Available online: https://opencv.org/ (accessed on 1 July 2021).

- Knoll, P.M. HDR Vision for Driver Assistance; Springer: Berlin/Heidelberg, Germany, 2007; pp. 123–136.

- NHTSA. Vehicle Safety Communications—Applications (VSC-A) Final Report: Appendix Volume 1 System Design and Objective Test. 2011. Available online: https://www.nhtsa.gov/sites/nhtsa.gov/files/811492b.pdf (accessed on 1 July 2021).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Albarella, N.; Masuccio, F.; Novella, L.; Tufo, M.; Fiengo, G. A Forward-Collision Warning System for Electric Vehicles: Experimental Validation in Virtual and Real Environment. Energies 2021, 14, 4872. https://doi.org/10.3390/en14164872
