Internal Wind Turbine Blade Inspections Using UAVs: Analysis and Design Issues

Abstract

1. Introduction

2. Inspections of Wind Turbine Blades

3. Unmanned Wind Turbine Blade Inspections

4. UAVs for Internal Wind Turbine Blade Inspections

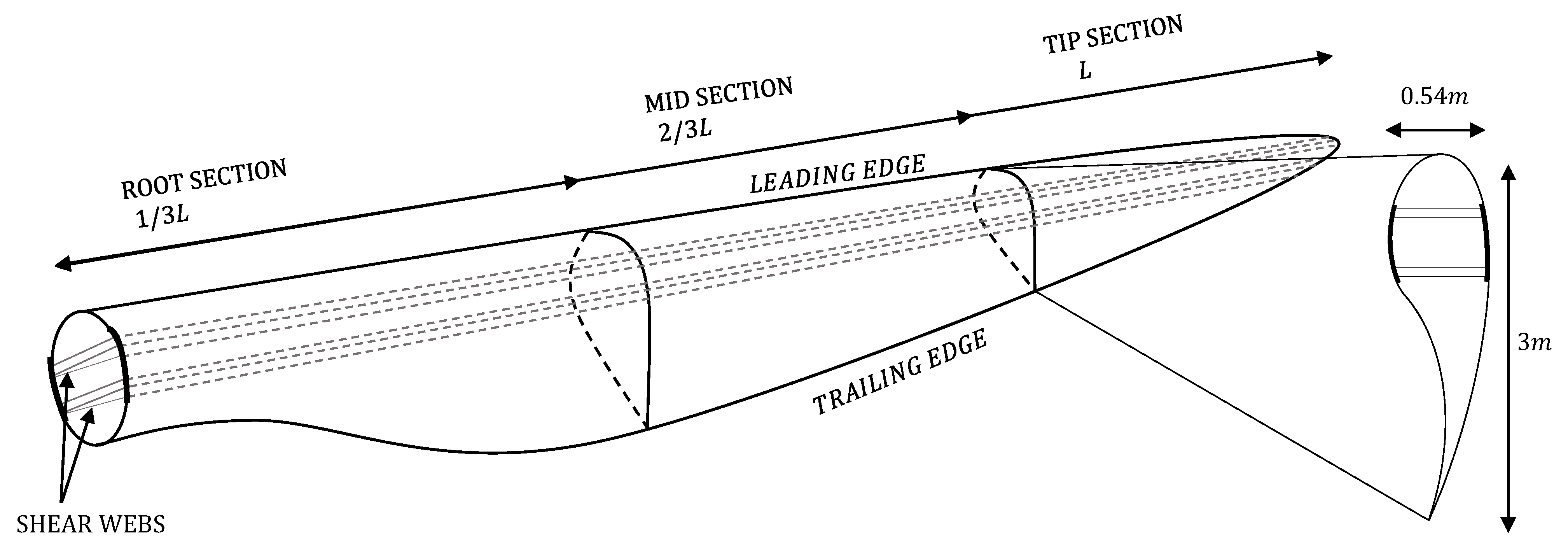

4.1. Physical Design

4.1.1. Required Flight Time

4.1.2. Achievable Flight Time

4.1.3. Size

4.2. Navigation and Control

4.2.1. Aerodynamic Disturbances and Mitigation

4.2.2. Manual Navigation

4.2.3. Autonomous Navigation

Obstacle Avoidance and Localization

Path Planning

4.3. Damage Detection

5. Discussion and Future of Interior WTB Inspections

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| WT | Wind turbine |
| WTB | Wind turbine blade |
| GNSS | Global navigation satellite system |
| IPS | Indoor positioning system |
| AI | Artificial intelligence |
| UV | Unmanned vehicle |
| UAV | Unmanned aerial vehicle |
| ToF | Time-of-flight |
| NDT | Non-destructive testing |
| SLAM | Simultaneous localization and mapping |
| V-SLAM | Visual simultaneous localization and mapping |
| LiDAR | Light detection and ranging |
| MPC | Model predictive control |

References

- Price, T.J. James Blyth—Britain’s first modern wind power pioneer. Wind Eng. 2005, 29, 191–200. [Google Scholar] [CrossRef]

- Righter, R.W. Wind Energy in America—A History; University of Oklahoma Press: Norman, OK, USA, 1996. [Google Scholar]

- Wind Energy International. Global Wind Installations. Available online: https://library.wwindea.org/global-statistics/ (accessed on 31 July 2020).

- U.S. Department of Energy. Projected Growth of the Wind Industry From Now Until 2050. 2011. Available online: https://www.energy.gov/maps/map-projected-growth-wind-industry-now-until-2050 (accessed on 7 July 2020).

- Wind Energy International. World Wind Capacity at 650,8 GW, Corona Crisis will Slow Down Markets in 2020, Renewables to be Core of Economic Stimulus Programmes. Available online: https://wwindea.org/blog/2020/04/16/world-wind-capacity-at-650-gw/ (accessed on 31 July 2020).

- Office of Energy Efficiency and Renewable Energy. Wind Vision Study Scenario Viewer. Available online: https://openei.org/apps/wv_viewer/ (accessed on 31 July 2020).

- Sheng, S.; O’Connor, R. Chapter 15—Reliability of Wind Turbines. In Wind Energy Engineering; Academic Press: Cambridge, MA, USA, 2017; pp. 299–327. [Google Scholar]

- Sareen, A.; Sapre, C.A.; Selig, M.S. Effects of leading edge erosion on wind turbine blade performance. Wind Energy 2014, 17, 1531–1542. [Google Scholar] [CrossRef]

- Haselbach, P.; Bitsche, R.; Branner, K. The effect of delaminations on local buckling in wind turbine blades. Renew. Energy 2016, 85, 295–305. [Google Scholar] [CrossRef]

- Castorrini, A.; Corsini, A.; Rispoli, F.; Venturini, P.; Takizawa, K.; Tezduyar, T.E. Computational analysis of wind-turbine blade rain erosion. Advances in Fluid-Structure Interaction. Comput. Fluids 2016, 141, 175–183. [Google Scholar] [CrossRef]

- Lin, Y.; Tu, L.; Liu, H.; Li, W. Fault analysis of wind turbines in China. Renew. Sustain. Energy Rev. 2016, 55, 482–490. [Google Scholar] [CrossRef]

- Carroll, J.; McDonald, A.; McMillan, D. Failure rate, repair time and unscheduled O&M cost analysis of offshore wind turbines. Wind Energy 2016, 19, 1107–1119. [Google Scholar]

- GE Renewable Energy. GROWTH AND POTENTIAL The Onshore Wind Power Industry. Available online: https://www.ge.com/renewableenergy/wind-energy/onshore-wind (accessed on 7 July 2020).

- Megavind. Strategy for Extending the Useful Lifetime of a Wind Turbine. Available online: https://megavind.winddenmark.dk/sites/megavind.windpower.org/files/media/document/Strategy%20for%20Extending%20the%20Useful%20Lifetime%20of%20a%20Wind%20Turbine.pdf (accessed on 7 July 2020).

- Andrawus, J.A.; Watson, J.; Kishk, M. Wind Turbine Maintenance Optimisation: Principles of Quantitative Maintenance Optimisation. Wind Eng. 2007, 31, 101–110. [Google Scholar] [CrossRef]

- Mishnaevsky, L.; Branner, K.; Petersen, H.; Beauson, J.; McGugan, M.; Sørensen, B. Materials for Wind Turbine Blades: An Overview. Materials 2017, 10, 1285. [Google Scholar] [CrossRef] [Green Version]

- Sandia National Laboratories. Cost Study for Large Wind Turbine Blades: WindPACT Blade System Design Studies; Technical Report; Sandia National Laboratories: Warren, RI, USA, 2003. [Google Scholar]

- Bortolotti, P.; Berry, D.; Murray, R.; Gaertner, E.; Jenne, D.; Damiani, R.; Barter, G.; Dykes, K. A Detailed Wind Turbine Blade Cost Model; Technical Report; National Renewable Energy Laboratory: Golden, CO, USA, 2019. [Google Scholar]

- Juengert, A. Damage Detection in Wind Turbine Blades Using Two Different Acoustic Techniques. NDT Database J. 2008. Available online: https://www.ndt.net/article/v13n12/juengert.pdf (accessed on 7 July 2020).

- Caithness Windfarm Information Forum. Summary of Wind Turbine Accident Data to 31 March 2020. 2020. Available online: http://www.caithnesswindfarms.co.uk (accessed on 7 July 2020).

- Deign, J. Fully Automated Drones Could Double Wind Turbine Inspection Rates. 2016. Available online: https://analysis.newenergyupdate.com/wind-energy-update/fully-automated-drones-could-double-wind-turbine-inspection-rates (accessed on 29 July 2020).

- Skyspecs. Autonomous Inspection. Available online: https://skyspecs.com/skyspecs-solutions/autonomous-inspection/ (accessed on 7 July 2020).

- Dolan, S.L.; Heath, G.A. Life Cycle Greenhouse Gas Emissions of Utility-Scale Wind Power. J. Ind. Ecol. 2012, 16, S136–S154. [Google Scholar] [CrossRef]

- Rhodes, J. Nuclear and Wind Power Estimated to have Lowest Levelized CO2 Emissions. 2017. Available online: https://energy.utexas.edu/news/nuclear-and-wind-power-estimated-have-lowest-levelized-co2-emissions (accessed on 3 August 2020).

- U.S. Energy Information Administration. How Much Carbon Dioxide is Produced Per Kilowatthour of U.S. Electricity Generation? 2020. Available online: https://www.eia.gov/tools/faqs/faq.php?id=74&t=11 (accessed on 3 August 2020).

- Whitaker, M.; Heath, G.A.; O’Donoughue, P.; Vorum, M. Life Cycle Greenhouse Gas Emissions of Coal-Fired Electricity Generation. J. Ind. Ecol. 2012, 16, S53–S72. [Google Scholar] [CrossRef]

- McLean, A. Horns Rev 3 Offshore Wind Farm Technical Report no.22—AIR EMISSIONS; Technical Report; Energinet.dk: Fredericia, Denmark, 2014. [Google Scholar]

- Inagaki, Y.; Ikeda, H.; Takeuchi, P.K.; Yato, Y.; Sawai, T. An effective measure for evaluating sewer condition: UAV screening in comparison with CCTVS and manhole cameras. Water Pract. Technol. 2020, 15, 482–488. [Google Scholar] [CrossRef]

- Gao, J.; Yan, Y.; Wang, C. Research on the Application of UAV Remote Sensing in Geologic Hazards Investigation for Oil and Gas Pipelines; ASCE: Reston, VA, USA, 2011. [Google Scholar]

- Birk, A.; Wiggerich, B.; Bülow, H.; Pfingsthorn, M.; Schwertfeger, S. Safety, Security, and Rescue Missions with an Unmanned Aerial Vehicle (UAV). J. Intell. Robot. Syst. 2011, 64, 57–76. [Google Scholar] [CrossRef]

- Alotaibi, E.T.; Alqefari, S.S.; Koubaa, A. LSAR: Multi-UAV Collaboration for Search and Rescue Missions. IEEE Access 2019, 7, 55817–55832. [Google Scholar] [CrossRef]

- Gonzalez, L.; Lee, D.; Walker, R.; Periaux, J. Optimal Mission Path Planning (MPP) For An Air Sampling Unmanned Aerial System. In Proceedings of the 2009 Australasian Conference on Robotics & Automation, Sydney, Australia, 2–4 December 2009. [Google Scholar]

- Navia, J.; Mondragon, I.; Patino, D.; Colorado, J. Multispectral mapping in agriculture: Terrain mosaic using an autonomous quadcopter UAV. In Proceedings of the 2016 International Conference on Unmanned Aircraft Systems (ICUAS), Arlington, VA, USA, 7–10 June 2016; pp. 1351–1358. [Google Scholar]

- Honkavaara, E.; Saari, H.; Kaivosoja, J.; Pölönen, I.; Hakala, T.; Litkey, P.; Mäkynen, J.; Pesonen, L. Processing and assessment of spectrometric, stereoscopic imagery collected using a lightweight UAV spectral camera for precision agriculture. Remote Sens. 2013, 5, 5006–5039. [Google Scholar] [CrossRef] [Green Version]

- Greenwood, W.W.; Lynch, J.P.; Zekkos, D. Applications of UAVs in Civil Infrastructure. J. Infrastruct. Syst. 2019, 25, 04019002. [Google Scholar] [CrossRef]

- TSRWind. Improving the Wind—High Technology for Wind Turbine Maintenance. Available online: http://tsrwind.com/en (accessed on 23 September 2020).

- Cornis. Onshore & Offshore Panoblade Inspection—External Blade Inspection Made Easy! Available online: https://home.cornis.fr/panoblade/ (accessed on 23 September 2020).

- Arthwind. Available online: http://arthwind.com.br/ (accessed on 23 September 2020).

- Aetos Drones. Available online: http://www.aetosdrones.be/ (accessed on 23 September 2020).

- ABJDrones. Drone Wind Turbine and Blade Inspection—Or Offshore and Onshore Wind Farms. Available online: https://abjdrones.com/drone-wind-turbine-inspection/ (accessed on 23 September 2020).

- Terra-Drone Europe. Available online: https://terra-drone.eu/en/ (accessed on 23 September 2020).

- Sulzer Schmid. Available online: https://www.sulzerschmid.ch/ (accessed on 23 September 2020).

- Blade Edge. Available online: https://bladeedge.net (accessed on 23 September 2020).

- Alerion. Available online: https://www.aleriontec.com (accessed on 23 September 2020).

- Clobotics. Available online: https://www.clobotics.com/ (accessed on 23 September 2020).

- Aero-Enterprise. Available online: https://www.aero-enterprise.com/ (accessed on 23 September 2020).

- Aerial Tronics. Available online: https://www.aerialtronics.com (accessed on 23 September 2020).

- Radii Robotics. Available online: https://www.radiirobotics.com/ (accessed on 23 September 2020).

- Flytbase. Available online: https://flytbase.com/ (accessed on 23 September 2020).

- Prodrone. Available online: https://www.pro-drone.eu/ (accessed on 23 September 2020).

- CyberHawk. Available online: https://thecyberhawk.com/ (accessed on 23 September 2020).

- Force Technology. Available online: https://forcetechnology.com/ (accessed on 23 September 2020).

- Sattar, T.; Leon Rodriguez, H.; Bridge, B. Climbing ring robot for inspection of offshore wind turbines. Ind. Robot. 2009, 36, 326–330. [Google Scholar] [CrossRef]

- Galleguillos, C.; Zorrilla, A.; Jimenez, A.; Diaz, L.; Montiano, A.L.; Barroso, M.; Viguria, A.; Lasagni, F. Thermographic non-destructive inspection of wind turbine blades using unmanned aerial systems. Plast. Rubber Compos. 2015, 44, 98–103. [Google Scholar] [CrossRef]

- Zhang, D.; Watson, R.; Dobie, G.; MacLeod, C.; Pierce, G. Autonomous Ultrasonic Inspection Using Unmanned Aerial Vehicle. In Proceedings of the 2018 IEEE International Ultrasonics Symposium (IUS), Kobe, Japan, 22–25 October 2018; pp. 1–4. [Google Scholar]

- Seton, J.; Frosas, V.; Gao, J. Report on Demonstrations in a Working Environment. 2019. Available online: https://ec.europa.eu/research/participants/documents/downloadPublic?documentIds=080166e5c6ba24d6&appId=PPGMS (accessed on 25 July 2020).

- Bladena and KIRT x THOMSEN. The Blade Handbook. 2019. Available online: https://www.bladena.com/uploads/8/7/3/7/87379536/cortir_handbook_2019.pdf (accessed on 31 July 2020).

- Corten, G.; Veldkamp, H. Insects can halve wind-turbine power. Nature 2001, 412, 41–42. [Google Scholar] [CrossRef]

- Bladena and Vattenfall and EON and Statkraft and KIRT x THOMSEN. INSTRUCTION—Blade Inspections. 2018. Available online: https://www.bladena.com/uploads/8/7/3/7/87379536/blade_inspections_report.pdf (accessed on 31 July 2020).

- Force Technology. Successful Corrosion Protection of Offshore Wind Farms. Available online: https://forcetechnology.com/en/articles/successful-corrosion-protection-of-offshore-wind-farms (accessed on 6 November 2020).

- Juengert, A.; Grosse, C.U. Inspection techniques for wind turbine blades using ultrasound and sound waves. In Proceedings of the Non-Destructive Testing in Civil Engineering, Nantes, France, 30 June–3 July 2009. [Google Scholar]

- Force Technology. Services—NDT on Wind Turbines. Available online: https://forcetechnology.com/en/services/ndt-on-wind-turbines (accessed on 31 July 2020).

- Windnostics. Windnostics—Services. Available online: https://www.windnostics.com/services (accessed on 31 July 2020).

- Amenabar, I.; Mendikute, A.; López-Arraiza, A.; Lizaranzu, M.; Aurrekoetxea, J. Comparison and analysis of non-destructive testing techniques suitable for delamination inspection in wind turbine blades. Compos. Part B Eng. 2011, 42, 1298–1305. [Google Scholar] [CrossRef]

- iPEK International. Rovion—Internal Inspection of Wind Rotor Blades. 2014. Available online: https://www.ipek.at/fileadmin/FILES/downloads/brochures-datasheets/brochures/iPEK-Industrial-Application-Wind_EN_web.pdf (accessed on 10 August 2020).

- Sørensen, B.F.; Lading, L.; Sendrup, P.; McGugan, M.; Debel, C.P.; Kristensen, O.J.; Larsen, G.C.; Hansen, A.M.; Rheinländer, J.; Rusborg, J.; et al. Fundamentals for Remote Structural Health Monitoring of Wind Turbine Blades—A Preproject; DTU Library: Roskilde, Denmark, 2002. [Google Scholar]

- Jonkman, J.; Butterfield, S.; Musial, W.; Scott, G. Definition of a 5-MW Reference Wind Turbine for Offshore System Development; Technical Report; National Renewable Energy Laboratory: Golden, CO, USA, 2009. [Google Scholar]

- Froese, M. LM Wind Unveils 107-m Turbine Blade, Currently the World’s Largest. 2019. Available online: https://www.windpowerengineering.com/lm-wind-unveils-107-m-turbine-blade-currently-the-worlds-largest/ (accessed on 19 August 2020).

- GE Renewable Energy. GE’s Haliade 150-6MW High Yield Offshore Wind Turbine. 2015. Available online: https://www.ge.com/renewableenergy/sites/default/files/related_documents/wind-offshore-haliade-wind-turbine.pdf (accessed on 31 July 2020).

- Fauteux, L.; Jolin, N. Drone Solutions for Wind Turbine Inspections; Technical Report; Nergica: Gaspe, QC, Canada, 2018. [Google Scholar]

- Flyability. Confined Spaces Inspection. Available online: https://www.flyability.com/articles-and-media/confined-spaces-inspection (accessed on 7 July 2020).

- Davis, M.S.; Madani, M.R. Investigation into the Effects of Static Electricity on Wind Turbine Systems. In Proceedings of the 2018 6th International Renewable and Sustainable Energy Conference (IRSEC), Rabat, Morocco, 5–8 December 2018; pp. 1–7. [Google Scholar]

- Stojković, A. Occupational Safety In Hazardous Confined Space. Inženjerstvo Zaštite 2013, 137. [Google Scholar] [CrossRef]

- Lim, S.; Park, C.; Hwang, J.; Kim, D.; Kim, T. The inchworm type blade inspection robot system. In Proceedings of the 2012 9th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI), Daejeon, Korea, 26–28 November 2012; pp. 604–607. [Google Scholar]

- Netland, Ø.; Jenssen, G.; Schade, H.M.; Skavhaug, A. An Experiment on the Effectiveness of Remote, Robotic Inspection Compared to Manned. In Proceedings of the 2013 IEEE International Conference on Systems, Man, and Cybernetics, Manchester, UK, 13–16 October 2013; pp. 2310–2315. [Google Scholar]

- Schäfer, B.E.; Picchi, D.; Engelhardt, T.; Abel, D. Multicopter unmanned aerial vehicle for automated inspection of wind turbines. In Proceedings of the 2016 24th Mediterranean Conference on Control and Automation (MED), Athens, Greece, 21–24 June 2016; pp. 244–249. [Google Scholar]

- Stokkeland, M.; Klausen, K.; Johansen, T.A. Autonomous visual navigation of Unmanned Aerial Vehicle for wind turbine inspection. In Proceedings of the 2015 International Conference on Unmanned Aircraft Systems (ICUAS), Denver, CO, USA, 9–12 June 2015; pp. 998–1007. [Google Scholar]

- Jung, S.; Shin, J.; Myeong, W.; Myung, H. Mechanism and system design of MAV(Micro Aerial Vehicle)-type wall-climbing robot for inspection of wind blades and non-flat surfaces. In Proceedings of the 2015 15th International Conference on Control, Automation and Systems (ICCAS), Busan, Korea, 13–16 October 2015; pp. 1757–1761. [Google Scholar]

- Shihavuddin, A.; Chen, X.; Fedorov, V.; Nymark Christensen, A.; Andre Brogaard Riis, N.; Branner, K.; Bjorholm Dahl, A.; Reinhold Paulsen, R. Wind Turbine Surface Damage Detection by Deep Learning Aided Drone Inspection Analysis. Energies 2019, 12, 676. [Google Scholar] [CrossRef] [Green Version]

- Martinez, C.; Asare Yeboah, F.; Herford, S.; Brzezinski, M.; Puttagunta, V. Predicting Wind Turbine Blade Erosion using Machine Learning. SMU Data Sci. Rev. 2019, 2, 17. [Google Scholar]

- Fritz, P.J.; Harding, K.G.; Song, G.; Yang, Y.; Tao, L.; Wan, X. System and Method for Performing an Internal Inspection on A Wind Turbine Rotor Blade. U.S. Patent Application 13/980,345, 14 November 2013. [Google Scholar]

- Murphy, J.T.; Mishra, D.; Silliman, G.R.; Kumar, V.P.; Mandayam, S.T.; Sharma, P. Method and System for Wind Turbine Inspection. U.S. Patent Application 13/021,056, 31 May 2012. [Google Scholar]

- Pedersen, H. Internal Inspection of a Wind Turbine. European Patent EP3287367A1, 28 February 2018. [Google Scholar]

- Rizos, C. Locata: A Positioning System for Indoor and Outdoor Applications Where GNSS Does Not Work. In Proceedings of the 18th Association of Public Authority Surveyors Conference (APAS2013), Canberra, Australia, 12–14 March 2013; pp. 73–83. [Google Scholar]

- Lachapelle, G. GNSS Indoor Location Technologies. J. Glob. Position. Syst. 2004, 3, 2–11. [Google Scholar] [CrossRef] [Green Version]

- Cornis. Onshore & Offshore Intrablade Inspection—Internal Blade Inspection Made Easy! Available online: https://home.cornis.fr/intrablade/ (accessed on 31 July 2020).

- TSRWind. CERBERUS—Internal Blade Inspection. Available online: https://tsrwind.com/cerberus/ (accessed on 27 December 2020).

- ROBINS Project. Crawler Platform for NDT Measurements. Available online: https://www.robins-project.eu/geir-crawler-platform/ (accessed on 7 July 2020).

- Nichols, G. A Drone Designed to Fly in Dark, Confined Spaces. Available online: https://www.zdnet.com/article/a-drone-designed-to-fly-in-dark-confined-spaces/ (accessed on 7 July 2020).

- WInspector. Oscillation Measurement on Wind Turbine Blade. 2016. Available online: http://www.winspector.eu/news-and-events/oscillation-measurement-on-wind-turbine-blade/ (accessed on 25 July 2020).

- David. Hexacopter vs. Quadcopter: The Pros and Cons. Available online: https://skilledflyer.com/hexacopter-vs-quadcopter/ (accessed on 29 September 2020).

- Ebeid, E.; Skriver, M.; Terkildsen, K.H.; Jensen, K.; Schultz, U.P. A survey of Open-Source UAV flight controllers and flight simulators. Microprocess. Microsyst. 2018, 61, 11–20. [Google Scholar] [CrossRef]

- BetaFPV. Beta65S BNF Micro Whoop Quadcopter. Available online: https://betafpv.com/products/beta65s-bnf-micro-whoop-quadcopter (accessed on 29 July 2020).

- Emax USA. Tinyhawk Indoor FPV Racing Drone BNF. Available online: https://emax-usa.com/products/tinyhawk2 (accessed on 29 July 2020).

- DJI. DJI Mavic 2. Available online: https://www.dji.com/dk/mavic-2 (accessed on 29 July 2020).

- Flyability. Elios 2—Indoor Drone for Confined Space Inspections. Available online: https://www.flyability.com/elios-2 (accessed on 21 July 2020).

- MIT Electric Vehicle Team. A Guide to Understanding Battery Specifications. 2018. Available online: http://web.mit.edu/evt/summary_battery_specifications.pdf (accessed on 10 September 2020).

- Gibiansky, A. Quadcopter Dynamics and Simulation. 2012. Available online: https://andrew.gibiansky.com/blog/physics/quadcopter-dynamics/ (accessed on 19 August 2020).

- Liang, O. Why Mini Quad Motors Getting too Hot? Available online: https://oscarliang.com/mini-quad-motors-overheat/ (accessed on 30 September 2020).

- Siemens Gamesa. SWT-6.0-154 Offshore Wind Turbine. Available online: https://www.siemensgamesa.com/en-int/products-and-services/offshore/wind-turbine-swt-6-0-154 (accessed on 31 July 2020).

- Wiser, R.; Hand, M.; Seel, J.; Paulos, B. The Future of Wind Energy, Part 3: Reducing Wind Energy Costs through Increased Turbine Size: Is the Sky the Limit? 2016. Available online: https://emp.lbl.gov/news/future-wind-energy-part-3-reducing-wind (accessed on 27 August 2020).

- Sanchez-Cuevas, P.J.; Heredia, G.; Ollero, A. Experimental Approach to the Aerodynamic Effects Produced in Multirotors Flying Close to Obstacles. In Proceedings of the ROBOT 2017: Third Iberian Robotics Conference, Seville, Spain, 22–24 November 2017; pp. 742–752. [Google Scholar]

- Conyers, S.A. Empirical Evaluation of Ground, Ceiling, and Wall Effect for Small-Scale Rotorcraft. Master’s Thesis, University of Denver, Denver, CO, USA, 2019. [Google Scholar]

- Cheeseman, I.; Bennett, W. The effect of the ground on a helicopter rotor. R & M 1957, 3021. [Google Scholar]

- Powers, C.; Mellinger, D.; Kushleyev, A.; Kothmann, B.; Kumar, V. Influence of aerodynamics and proximity effects in quadrotor flight. Exp. Robot. 2013, 289–302. [Google Scholar] [CrossRef]

- Sharf, I.; Nahon, M.; Harmat, A.; Khan, W.; Michini, M.; Speal, N.; Trentini, M.; Tsadok, T.; Wang, T. Ground effect experiments and model validation with Draganflyer X8 rotorcraft. In Proceedings of the 2014 International Conference on Unmanned Aircraft Systems (ICUAS), Orlando, FL, USA, 27–30 May 2014; pp. 1158–1166. [Google Scholar]

- Matus-Vargas, A.; Rodríguez-Gómez, G.; Martínez-Carranza, J. Aerodynamic Disturbance Rejection Acting on a Quadcopter Near Ground. In Proceedings of the 2019 6th International Conference on Control, Decision and Information Technologies (CoDIT), Paris, France, 23–26 April 2019; pp. 1516–1521. [Google Scholar]

- Hentzen, D.; Stastny, T.; Siegwart, R.; Brockers, R. Disturbance estimation and rejection for high-precision multirotor position control. arXiv 2019, arXiv:1908.03166. [Google Scholar]

- McKinnon, C.D.; Schoellig, A.P. Estimating and reacting to forces and torques resulting from common aerodynamic disturbances acting on quadrotors. Robot. Auton. Syst. 2020, 123, 103314. [Google Scholar] [CrossRef]

- FrSky. FrSky 900MHz Long Range RC System—R9&R9M. 2017. Available online: https://www.frsky-rc.com/frsky-900mhz-long-range-rc-system-r9r9m/ (accessed on 29 July 2020).

- Raspberry Pi Foundation. FAQs—Raspberry Pi Documentation. Available online: https://www.raspberrypi.org/documentation/faqs/ (accessed on 27 December 2020).

- Intel Realsense Technology. Intel RealSense Product Family D400 Series. Available online: https://www.intelrealsense.com/wp-content/uploads/2020/06/Intel-RealSense-D400-Series-Datasheet-June-2020.pdf (accessed on 27 December 2020).

- Intel Realsense Technology. Intel RealSense LiDAR Camera L515. Available online: file:///F:/Users/user/Downloads/Intel_RealSense_LiDAR_L515_Datasheet_Rev002.pdf. (accessed on 27 December 2020).

- Huang, B.; Zhao, J.; Liu, J. A Survey of Simultaneous Localization and Mapping. arXiv 2019, arXiv:1909.05214. [Google Scholar]

- Einsiedler, J.; Radusch, I.; Wolter, K. Vehicle indoor positioning: A survey. In Proceedings of the 2017 14th Workshop on Positioning, Navigation and Communications (WPNC), Bremen, Germany, 25–26 October 2017; pp. 1–6. [Google Scholar]

- Thrun, S.; Burgard, W.; Fox, D.; Arkin, R. Probabilistic Robotics; Intelligent Robotics and Autonomous Agents Series; MIT Press: Cambridge, MA, USA, 2005. [Google Scholar]

- Droeschel, D.; Behnke, S. Efficient Continuous-Time SLAM for 3D Lidar-Based Online Mapping. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 5000–5007. [Google Scholar]

- Abouzahir, M.; Elouardi, A.; Latif, R.; Bouaziz, S.; Tajer, A. Embedding SLAM algorithms: Has it come of age? Robot. Auton. Syst. 2018, 100, 14–26. [Google Scholar] [CrossRef]

- Naik, N.; Kadambi, A.; Rhemann, C.; Izadi, S.; Raskar, R.; Bing Kang, S. A Light Transport Model for Mitigating Multipath Interference in Time-of-Flight Sensors. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015. [Google Scholar]

- Luo, S. Laser SLAM vs. VSLAM. Available online: https://www.linkedin.com/pulse/laser-slam-vs-vslam-selina-luo/ (accessed on 29 November 2020).

- Skoda, J.; Bartak, R. Camera-Based Localization and Stabilization of a Flying Drone. In Proceedings of the Twenty-Eighth International Florida Artificial Intelligence Research Society Conference, Hollywood, FL, USA, 18–20 May 2015. [Google Scholar]

- García, S.; López, M.E.; Barea, R.; Bergasa, L.M.; Gómez, A.; Molinos, E.J. Indoor SLAM for Micro Aerial Vehicles Control Using Monocular Camera and Sensor Fusion. In Proceedings of the 2016 International Conference on Autonomous Robot Systems and Competitions (ICARSC), Bragança, Portugal, 4–6 May 2016; pp. 205–210. [Google Scholar]

- Von Stumberg, L.; Usenko, V.; Engel, J.; Stückler, J.; Cremers, D. From monocular SLAM to autonomous drone exploration. In Proceedings of the 2017 European Conference on Mobile Robots (ECMR), Paris, France, 6–8 September 2017; pp. 1–8. [Google Scholar]

- Tiemann, J.; Ramsey, A.; Wietfeld, C. Enhanced UAV Indoor Navigation through SLAM-Augmented UWB Localization. In Proceedings of the 2018 IEEE International Conference on Communications Workshops (ICC Workshops), Kansas City, MO, USA, 20–24 May 2018; pp. 1–6. [Google Scholar]

- Taketomi, T.; Uchiyama, H.; Ikeda, S. Visual SLAM algorithms: A survey from 2010 to 2016. IPSJ Trans. Comput. Vis. Appl. 2017, 9, 16. [Google Scholar] [CrossRef]

- Fuentes-Pacheco, J.; Ruiz-Ascencio, J.; Rendón-Mancha, J.M. Visual simultaneous localization and mapping: A survey. Artif. Intell. Rev. 2015, 43, 55–81. [Google Scholar] [CrossRef]

- Zhao, S.; Fang, Z. Direct depth SLAM: Sparse geometric feature enhanced direct depth SLAM system for low-texture environments. Sensors 2018, 18, 3339. [Google Scholar] [CrossRef] [Green Version]

- Ouellette, R.; Hirasawa, K. A comparison of SLAM implementations for indoor mobile robots. In Proceedings of the 2007 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Diego, CA, USA, 29 October–2 November 2007; pp. 1479–1484. [Google Scholar]

- Debeunne, C.; Vivet, D. A Review of Visual-LiDAR Fusion based Simultaneous Localization and Mapping. Sensors 2020, 20, 2068. [Google Scholar] [CrossRef] [Green Version]

- Hoy, M.; Matveev, A.S.; Savkin, A.V. Algorithms for collision-free navigation of mobile robots in complex cluttered environments: A survey. Robotica 2015, 33, 463–497. [Google Scholar] [CrossRef] [Green Version]

- Wang, F.; Wang, K.; Lai, S.; Phang, S.K.; Chen, B.M.; Lee, T.H. An efficient UAV navigation solution for confined but partially known indoor environments. In Proceedings of the 11th IEEE International Conference on Control Automation (ICCA), Taichung, Taiwan, 18–20 June 2014; pp. 1351–1356. [Google Scholar]

- Phang, S.K.; Lai, S.; Wang, F.; Lan, M.; Chen, B.M. UAV calligraphy. In Proceedings of the 11th IEEE International Conference on Control Automation (ICCA), Taichung, Taiwan, 18–20 June 2014; pp. 422–428. [Google Scholar]

- Ciftler, B.S.; Tuncer, A.; Guvenc, I. Indoor UAV navigation to a Rayleigh fading source using Q-learning. arXiv 2017, arXiv:1705.10375. [Google Scholar]

- Lumelsky, V.; Stepanov, A. Dynamic path planning for a mobile automaton with limited information on the environment. IEEE Trans. Autom. Control 1986, 31, 1058–1063. [Google Scholar] [CrossRef]

- Maravall, D.; de Lope, J.; Fuentes, J.P. Navigation and Self-Semantic Location of Drones in Indoor Environments by Combining the Visual Bug Algorithm and Entropy-Based Vision. Front. Neurorobot. 2017, 11, 46. [Google Scholar] [CrossRef] [Green Version]

- Kamel, M.; Burri, M.; Siegwart, R. Linear vs nonlinear MPC for trajectory tracking applied to rotary wing micro aerial vehicles. IFAC Pap. 2017, 50, 3463–3469. [Google Scholar] [CrossRef]

- Brooks, A.; Kaupp, T.; Makarenko, A. Randomised MPC-based motion-planning for mobile robot obstacle avoidance. In Proceedings of the 2009 IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009; pp. 3962–3967. [Google Scholar]

- Sun, Z.; Dai, L.; Liu, K.; Xia, Y.; Johansson, K.H. Robust MPC for tracking constrained unicycle robots with additive disturbances. Automatica 2018, 90, 172–184. [Google Scholar] [CrossRef]

- Roach, D.; Rice, T.; Neidigk, S.; Duvall, R. Non-Destructive Inspection of Blades. 2015. Available online: https://www.osti.gov/servlets/purl/1244426 (accessed on 14 July 2020).

- Yang, R.; He, Y.; Zhang, H. Progress and trends in nondestructive testing and evaluation for wind turbine composite blade. Renew. Sustain. Energy Rev. 2016, 60, 1225–1250. [Google Scholar] [CrossRef]

- Raišutis, R.; Jasiūnienė, E.; Žukauskas, E. Ultrasonic NDT of wind turbine blades using guided waves. Ultragarsas Ultrasound 2008, 63, 7–11. [Google Scholar]

- Corrigan, F. Multispectral Imaging Camera Drones in Farming Yield Big Benefits. 2020. Available online: https://www.dronezon.com/learn-about-drones-quadcopters/multispectral-sensor-drones-in-farming-yield-big-benefits/ (accessed on 21 July 2020).

- Vespadrones. Tetracam ADC Lite. Available online: http://vespadrones.com/product/tetracam-adc-lite/ (accessed on 15 September 2020).

- MAIA. MAIA WV—the Multispectral Camera. Available online: https://www.spectralcam.com/maia-tech/ (accessed on 15 September 2020).

- Kumar, S.S.; Wang, M.; Abraham, D.M.; Jahanshahi, M.R.; Iseley, T.; Cheng, J.C.P. Deep Learning—Based Automated Detection of Sewer Defects in CCTV Videos. J. Comput. Civ. Eng. 2020, 34, 04019047. [Google Scholar] [CrossRef]

- Li, D.; Cong, A.; Guo, S. Sewer damage detection from imbalanced CCTV inspection data using deep convolutional neural networks with hierarchical classification. Autom. Constr. 2019, 101, 199–208. [Google Scholar] [CrossRef]

- Cheng, J.C.; Wang, M. Automated detection of sewer pipe defects in closed-circuit television images using deep learning techniques. Autom. Constr. 2018, 95, 155–171. [Google Scholar]

- Kumar, S.S.; Abraham, D.M.; Jahanshahi, M.R.; Iseley, T.; Starr, J. Automated defect classification in sewer closed circuit television inspections using deep convolutional neural networks. Autom. Constr. 2018, 91, 273–283. [Google Scholar] [CrossRef]

- Hassan, S.I.; Dang, L.M.; Mehmood, I.; Im, S.; Choi, C.; Kang, J.; Park, Y.S.; Moon, H. Underground sewer pipe condition assessment based on convolutional neural networks. Autom. Constr. 2019, 106, 102849. [Google Scholar]

- Meijer, D.; Scholten, L.; Clemens, F.; Knobbe, A. A defect classification methodology for sewer image sets with convolutional neural networks. Autom. Constr. 2019, 104, 281–298. [Google Scholar]

- Myrans, J.; Everson, R.; Kapelan, Z. Automated detection of fault types in CCTV sewer surveys. J. Hydroinform. 2018, 21, 153–163. [Google Scholar] [CrossRef]

- Moselhi, O.; Shehab-Eldeen, T. Automated detection of surface defects in water and sewer pipes. Autom. Constr. 1999, 8, 581–588. [Google Scholar] [CrossRef]

- Moselhi, O.; Shehab-Eldeen, T. Classification of Defects in Sewer Pipes Using Neural Networks. J. Infrastruct. Syst. 2000, 6, 97–104. [Google Scholar] [CrossRef]

- Chae, M.J.; Abraham, D.M. Neuro-Fuzzy Approaches for Sanitary Sewer Pipeline Condition Assessment. J. Comput. Civ. Eng. 2001, 15, 4–14. [Google Scholar] [CrossRef]

- Yang, M.D.; Su, T.C. Automated diagnosis of sewer pipe defects based on machine learning approaches. Expert Syst. Appl. 2008, 35, 1327–1337. [Google Scholar] [CrossRef]

- Intel. Intel RealSense Technology. Available online: https://www.intel.com/content/www/us/en/architecture-and-technology/realsense-overview.html (accessed on 9 November 2020).

- OpenMV. Machine Vision with Python. Available online: https://openmv.io/ (accessed on 9 November 2020).

- Chien, W.Y. Stereo-Camera Occupancy Grid Mapping. Master’s Thesis, Pennsylvania State University, State College, PA, USA, 2020. [Google Scholar]

- Aluckal, C.; Mohan, B.K.; Turkar, Y.; Agarwadkar, Y.; Dighe, Y.; Surve, S.; Deshpande, S.; Daga, B. Dynamic real-time indoor environment mapping for Unmanned Autonomous Vehicle navigation. In Proceedings of the 2019 International Conference on Advances in Computing, Communication and Control (ICAC3), Mumbai, India, 20–21 December 2019; pp. 1–6. [Google Scholar]

- AprilRobotics. AprilTag 3. Available online: https://github.com/AprilRobotics/apriltag (accessed on 9 November 2020).

- IDTechEx. Charging Infrastructure for Electric Vehicles 2020–2030. Available online: https://www.idtechex.com/en/research-report/charging-infrastructure-for-electric-vehicles-2020-2030/729 (accessed on 9 November 2020).

- Howell, D.; Boyd, S.; Cunningham, B.; Gillard, S.; Slezak, L. Enabling Fast Charging: A Technology Gap Assessment; Technical Report; U.S. Department of Energy: Idaho Falls, ID, USA, 2017.

| NDT Method | Advantages | Disadvantages | Used in UVs |
|---|---|---|---|
| RGB cameras | Easy to use, cheap | Surface damage only; requires proper illumination | UAVs [22,40,45] |
| Optical thermography | Sub-surface damage detection | - | UAVs [54] |
| Ultrasound | Sub-surface damage detection | Heavy equipment that requires contact; sensitive to noise and aim accuracy | UAVs [55] |
| Shearography | - | Limited sub-surface detection | Robotic platform [56] |
| Radiography | Sub-surface damage detection | Dangerous to use | Robotic platforms [53] |
| Multi-spectral cameras | Various spectral ranges | Expensive | UAVs [33,142] |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kulsinskas, A.; Durdevic, P.; Ortiz-Arroyo, D. Internal Wind Turbine Blade Inspections Using UAVs: Analysis and Design Issues. Energies 2021, 14, 294. https://doi.org/10.3390/en14020294
