Communication Requirements for a Hybrid VSC Based HVDC/AC Transmission Networks State Estimation

Abstract

1. Introduction

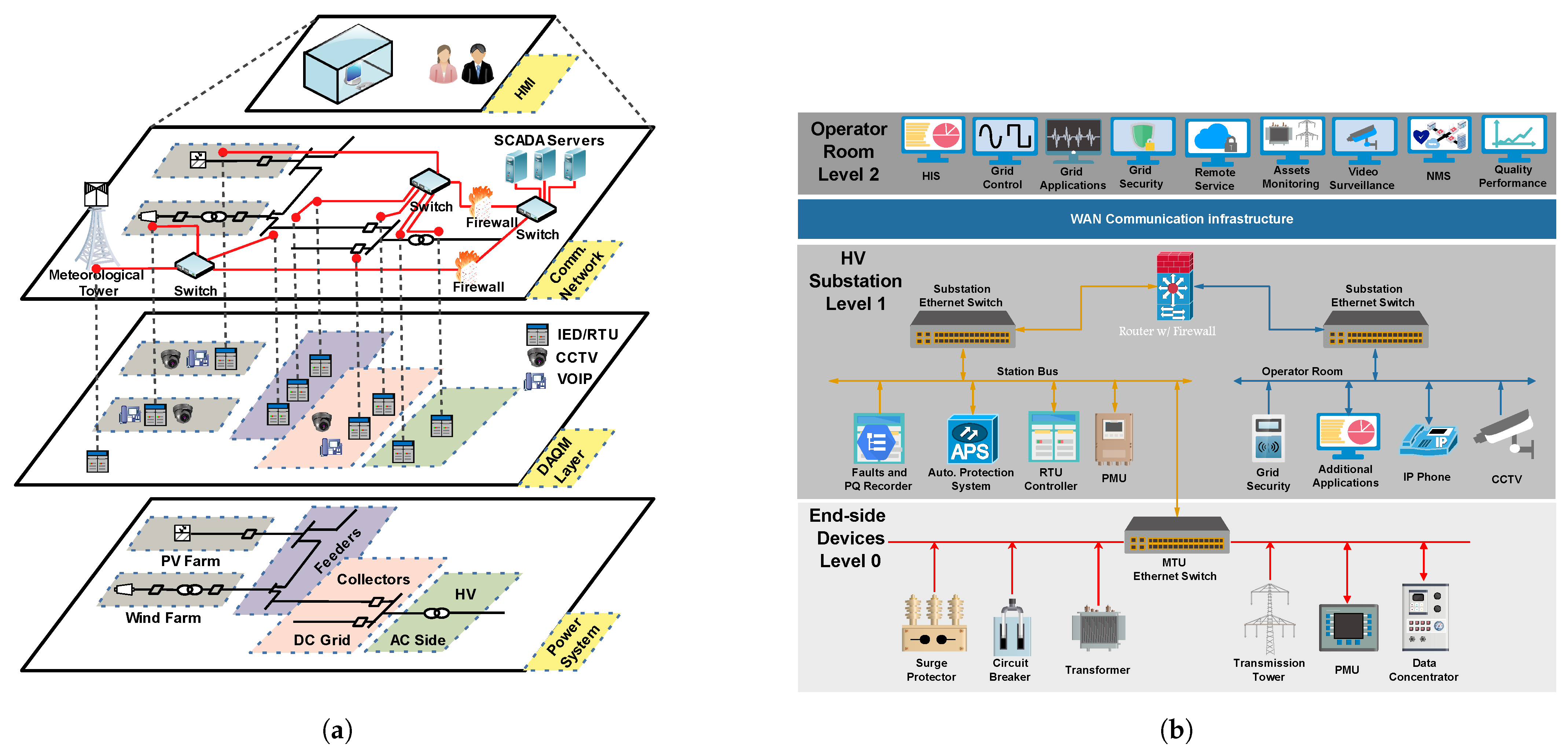

- Power System Layer: the lowest layer. It covers all the electrical units: generators, transformers, converters, feeders, and collector buses.

- Data Acquisition and Monitoring Layer: contains the sensors and actuators, represented by the RTUs, together with circuit breaker controllers and several power protection devices. This layer reports measurements to the communication layer in different forms, data rates, and frequencies.

- Communication Network Layer: The third layer and the backbone of the SCADA system. It connects the three main levels of the system, as described below and shown in Figure 1b:

- Controller area network: is the smallest communication network inside the RTU. It is responsible for connecting the microcontrollers with their slaves (sensors and actuators).

- Power area network: is a narrow area network, positioned above the controller area network and provides a stable connection between the main Master Terminal Unit (MTU) and the connected RTUs. Multiple communication protocols are used depending on the characteristics of the controller type.

- Station area network: is a station WAN, and it provides the communication link between the power area network and the SCADA command center.

- Human Machine Interface (HMI) Layer: consists of several software packages and graphical user interface applications that support the system operators. Usually, the interface has different menus, options, and screens for each SCADA layer.

2. SCADA Components and Structure

2.1. Remote and Master Terminal Units

2.1.1. Remote Terminal Units

- Controllers or PLCs: embedded systems with multiple digital and analog Input/Output (I/O) ports, memory, a communication interface, Analog-to-Digital (A/D) and Digital-to-Analog (D/A) converters, instrumentation amplifiers, and signal filters;

- Sensors: come with a driver to convert and process the signal, for example, a Current Transformer (CT) or Voltage Transformer (VT) with a 4–20 mA transducer;

- Actuators: come with isolated drivers and are used to trigger circuit breakers or relays;

- HMI: (Not mandatory) a small-scale interface, screen or display.
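The 4–20 mA transducers listed above encode each measurement as a loop current, which the RTU controller converts back to engineering units after A/D conversion. A minimal Python sketch of that step, assuming a linear transducer; the function name and the 0–400 A range are hypothetical:

```python
def scale_4_20ma(current_ma: float, range_lo: float, range_hi: float) -> float:
    """Map a 4-20 mA loop current linearly onto an engineering range.

    4 mA maps to range_lo and 20 mA to range_hi. Currents outside the
    4-20 mA window are treated as a sensor or wiring fault.
    """
    if not 4.0 <= current_ma <= 20.0:
        raise ValueError(f"loop current {current_ma} mA outside 4-20 mA")
    fraction = (current_ma - 4.0) / 16.0  # position within the 16 mA span
    return range_lo + fraction * (range_hi - range_lo)


# Hypothetical CT transducer: 4-20 mA represents 0-400 A primary current.
print(scale_4_20ma(12.0, 0.0, 400.0))  # 200.0
```

The 4 mA "live zero" is what allows the range check to flag a broken loop (0 mA) instead of silently reporting the bottom of the measurement range.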

2.1.2. Master Terminal Units

2.2. Communication Infrastructure

- Distributed (2nd Generation): the SCADA architecture was a low-traffic, semi-real-time LAN. It connects master stations and remote terminals with the command room. As a result, reliability improved, and processing time and failure rate were reduced. However, no standards were designed for the LAN protocols, and protocol security was not guaranteed [30,33].

- Networked (3rd Generation): an extension of the 2nd generation, and the one widely used today. It was introduced to overcome the protocol limitations of the previous generation. It consists of a centralized command center with multiple remote stations connected in one network, with a single application interface to access all the stations. The breakthrough of this generation is the integration of the IP and TCP protocols. This improvement increased network security and extended the accessibility of the I/O devices. The system's reliability has also been enhanced by duplicated/redundant components and strong WAN technology. Despite these improvements, the use of internet protocols brings a rising cyber-security risk [4,30,33].

- Internet of Things (IoT) (4th Generation): a generation still under research, which came with the so-called Industry 4.0 revolution. Researchers claim that it will be suitable for low voltage distribution networks and microgrids [34]. It has the advantage of handling big data using simpler communication equipment; it uses cloud infrastructure and Wi-Fi technology. It also proposes cheaper and simpler controllable devices to replace the complicated IEDs and measuring units. Although its proponents claim higher system stability and robustness, others oppose it due to privacy and security concerns. IoT is expected to add data aggregation, predictive/prescriptive analytics, and a unified data structure for the different SCADA applications [33,34,35].

2.2.1. Communication Mediums

- Fiber Optic cables: considered the best solid medium for long-distance communication (140+ km), and continuously improved since the 1970s. Commercial fiber has reached a signal attenuation of less than 0.3 dB/km, and optical detectors and data modulators have achieved higher accuracy [36]. A simplified fiber optic cable is built from three layers: a micrometer-diameter glass core, a glass cladding, and a protective sheath (plastic jacket). Cables are manufactured for one of the following light propagation technologies: multi-mode graded-index, multi-mode step-index, and single-mode step-index. Single-mode fiber can handle distances of 60+ km at speeds up to 10 Gbps, while multi-mode fiber covers shorter distances with data rates up to 40 Gbps [37,38]. Fiber optic cables are widely used in power system SCADA, mainly in three modified forms [36]:

- (a) Optical Power Ground Wire: is a cable used between transmission lines, either underground or overhead.

- (b) All-Dielectric Self-Supporting: is a completely dielectric cable designed to work alongside the conductor part of the HV transmission lines/towers.

- (c) Wrapped Optical Cable: is used in power transmission and distribution lines. Usually, it is wrapped around the grounding wire or the phase conductor.
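To put the attenuation figure in perspective, the fiber loss alone over a 140 km span at the 0.3 dB/km bound quoted above is as follows (splice and connector losses are excluded, so a real link budget is larger):

$$A = \alpha L = 0.3\ \mathrm{dB/km} \times 140\ \mathrm{km} = 42\ \mathrm{dB}, \qquad \frac{P_{\mathrm{rx}}}{P_{\mathrm{tx}}} = 10^{-A/10} = 10^{-4.2} \approx 6.3 \times 10^{-5}$$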

Fiber optic technology is considered a secure and straightforward medium, but with high installation and maintenance costs. Underground or offshore installations require more complicated permissions than other communication mediums. The two most common fiber optic standard technologies in power systems are Synchronous Optical Networking and Synchronous Digital Hierarchy [36,39].

- Power Line Carrier communication (PLCC) (analog/digital): a power-dependent communication technology that uses the power transmission lines as the communication medium. The analog version is still in use today; it can handle two communication channels and transmit voice, data, and SCADA commands. The medium is reliable and secure, and uses carrier frequencies between 30 kHz and 500 kHz, with a baud rate of 9600. PLCC has been installed and tested on 220/230, 110/115 and 66 kV power lines [40,41,42]. This technology can cover a distance of 200 m with a data rate >1 Mbps, or a distance >3 km with a data rate between 10 and 500 kbps [37,43]. Digital PLCC is still under research. It provides higher reliability and at least 4 channels with higher data rates. It is expected to be less affected by electrical noise, and more secure than analog PLCC [44].

- Satellites: considered the preferred communication technology for geographically separated receiver and transmitter terminals; they are widely used in remote access systems [30,32]. The signal is sent from one ground terminal through a special antenna (dish) pointing towards the satellite. The satellite receives, amplifies, and retransmits the signal towards another ground terminal. The antenna has a special low-noise amplifier and works on C-band and Ku-band frequencies. The C-band covers 5.2625–26.0265 GHz uplink and 3.26–4.8 GHz downlink, while the Ku-band covers 12.265–18.10 GHz uplink and 10.260–13.25 GHz downlink [30].

- Microwave Radio: part of the ultra-high frequency technologies that operate at frequencies above 1 GHz, or as a multichannel medium at lower frequencies. Microwave technology was introduced as an analog communication medium with high data rates and secure multichannel capabilities [25,30]. Although analog microwave has improved (e.g., reduced complexity), digital microwave leads in cost reduction and communication flexibility. It also provides higher data rates and new communication protocols and standards. WiMAX is one of the ultra-high frequency microwave technologies; it can cover distances up to 50 km at speeds up to 75 Mbps [30,37]. Microwave technology has two main network topologies: PTP and PTM. PTP is a directional long-distance communication link, while PTM is an omnidirectional communication that can be structured as a star or tree network [25,30]. PTM is more suitable for on-demand channels. Both topologies are power-independent, but the line of sight between the communication nodes must always be guaranteed.

- Omnidirectional Wireless/Cellular: part of the radio-based technologies that operate in specific frequency bands. Table 1 shows the most common Wireless/Cellular communication technologies along with their specifications and data speeds [39,45,46]. The advantage of ZigBee over traditional Wi-Fi is the ability to connect a much higher number of nodes (approx. 60k instead of 2k). Cellular networks can provide high-speed, low-latency, and stable communication for power systems; however, their security is still questionable [39,45]. A vision for the 6th generation (6G) is presented in [47].
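The data rates quoted for these media translate directly into transmission delay, i.e., the time needed to put one frame on the link. A minimal Python sketch, assuming a hypothetical 256-byte frame and treating the 9600 Bd PLCC figure as 9600 bit/s; the function name is illustrative:

```python
def transmission_delay_ms(frame_bytes: int, rate_bps: float) -> float:
    """Transmission delay = frame size in bits / link data rate, in ms."""
    return frame_bytes * 8 / rate_bps * 1000.0


# Rates taken from the media descriptions above; the 256-byte frame is hypothetical.
RATES_BPS = {
    "analog PLCC (9600 Bd)": 9_600,
    "WiMAX (75 Mbps)": 75e6,
    "single-mode fiber (10 Gbps)": 10e9,
}

for medium, rate in RATES_BPS.items():
    print(f"{medium}: {transmission_delay_ms(256, rate):.6f} ms")
```

This gives roughly 213 ms, 27 µs, and 0.2 µs, respectively. Asynchronous serial framing (start and stop bits) would add about 25 % to the PLCC figure, and propagation, queuing, and protocol delays come on top of all three.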

2.2.2. SCADA Communication Protocols

- Modbus: one of the most used protocols in SCADA systems; it provides real-time communication using the Open Systems Interconnection (OSI) seven-layer model [25,49]. The Modbus protocol has four operating modes between the main station and the remote station. It converts the transmitted requests into a protocol data unit (PDU: function code and data request). The data link layer of the OSI model converts the PDU into an application data unit (ADU), which the receiver side can understand. Modbus is primarily a serial communication protocol and usually uses RS-232 and RS-485 links [31]. It can also be extended to TCP/IP through an additional layer that encapsulates the PDU [49].
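To make the PDU/ADU distinction concrete, the Python sketch below (standard library only) builds a "read holding registers" request, function code 0x03, both as a serial RTU frame and as a Modbus/TCP frame. The framing reflects our reading of the public Modbus documents (serial ADU = address + PDU + CRC-16; TCP ADU = 7-byte MBAP header + PDU); the function names are illustrative and not taken from any library:

```python
import struct


def crc16_modbus(data: bytes) -> int:
    """CRC-16/MODBUS: reflected polynomial 0xA001, initial value 0xFFFF."""
    crc = 0xFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 1:
                crc = (crc >> 1) ^ 0xA001
            else:
                crc >>= 1
    return crc


def read_holding_registers_pdu(start: int, count: int) -> bytes:
    """PDU = function code 0x03 + starting address + quantity (big-endian)."""
    return struct.pack(">BHH", 0x03, start, count)


def rtu_adu(unit_id: int, pdu: bytes) -> bytes:
    """Serial (RTU) ADU = unit address + PDU + CRC, CRC sent low byte first."""
    frame = bytes([unit_id]) + pdu
    return frame + crc16_modbus(frame).to_bytes(2, "little")


def tcp_adu(transaction_id: int, unit_id: int, pdu: bytes) -> bytes:
    """Modbus/TCP ADU = MBAP header + PDU, with no CRC.

    MBAP fields: transaction id, protocol id (0 = Modbus), length of the
    bytes that follow (unit id + PDU), unit id.
    """
    mbap = struct.pack(">HHHB", transaction_id, 0, len(pdu) + 1, unit_id)
    return mbap + pdu


pdu = read_holding_registers_pdu(start=0, count=10)
print(rtu_adu(1, pdu).hex(" "))
print(tcp_adu(1, 1, pdu).hex(" "))  # 00 01 00 00 00 06 01 03 00 00 00 0a
```

The same PDU is carried unchanged in both cases; only the wrapper differs. On TCP the CRC is dropped, while the unit identifier is kept so that a gateway can forward the request to a serial device.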

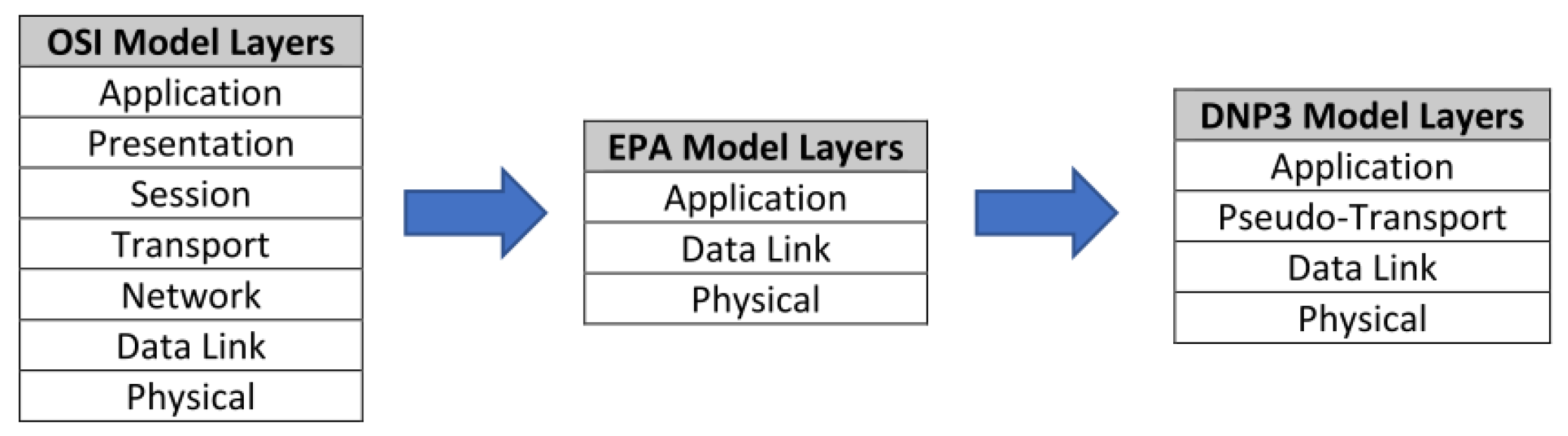

- DNP3: a protocol based on early IEC 60870-5 work and standardized as IEEE Std 1815. It uses a simplified version of the OSI model called the Enhanced Performance Architecture (EPA) model, as shown in Figure 3. DNP3 is widely used in SCADA systems to establish connections between multiple MTUs and RTUs. DNP3 can handle low-bandwidth serial and IP communication modems, or TCP/IP over an internet connection (WAN) [7,50].

- IEC 60870-5: the protocol is based on the EPA model and was developed by IEC. An additional layer, called the User Application layer, is added. It is a front-end layer that can be used to set up the functions of the telecontrol system to interact with the SCADA hardware devices [30]. There are several parts of the protocol, IEC 60870-5-101 to -104, each with different data objects, function codes, and specifications. The most generic one is 101, which has basic definitions of the data objects, the geographical areas, and the WAN technology. The 104 version adds two layers from the OSI model to handle TCP/IP (Figure 4) [25,30]. This protocol is used in power transmission and distribution SCADA systems, with a data transfer speed of 64 kbit/s depending on the interface of the IEC 60870-5 [30].

- IEC 60870-6: known as the Inter-Control Center Communications Protocol (ICCP) and used globally for SCADA telecontrol. The TASE.2 version of the standard uses layers 5–7 of the OSI model. It is used to connect SCADA command centres and to exchange measurements, time-tagged data, and events [51].

- IEC 61850: considered the most widely adopted protocol in substation automation [27], especially for connecting multiple RTUs/IEDs. The protocol is based on the OSI model, and it improves on DNP3 by providing higher-bandwidth communication and real-time protection and control [27]. The communication architecture of IEC 61850 contains 3 hierarchical levels [7]:

- (a) Station Level: contains the main station HMI and computers;

- (b) Bay Level: represented by the different RTUs, such as IEDs and PMUs. This level is connected to the upper level through the Station Bus;

- (c) Process Level: the RTU's terminals, such as sensors (CT/VT) and actuators (circuit breakers). This level uses the Process Bus to communicate.

IEC 61850 provides five Ethernet/IP communication services, as shown in Figure 5, which makes it superior to IEC 60870-5: (1) Abstract Communication Service Interface (ACSI), (2) Generic Object Oriented Substation Event (GOOSE), (3) Generic Substation Status Event (GSSE), (4) Sampled Measured Value multicast (SMV), and (5) Time Synchronization (TS). GOOSE and GSSE are the two main services for data and event exchange. GOOSE provides publisher/subscriber multicast messaging and allows the transfer of binary, integer, and analog values from the publishing IEDs, whereas GSSE is restricted to binary status data. The TS service ensures that all IEDs share a synchronized time base; this can be implemented through GPS or the IEEE 1588 Precision Time Protocol [27,52]. ACSI is responsible for system reports and status logging, while the SMV service transfers sampled analog data and binary status to the bay-level IEDs via the Process Bus [27].

- Profibus or the "Process field bus": an OSI-model protocol used in industrial monitoring environments. It has a bus control unit that establishes the connection between the different hardware equipment. Usually, it has a D-type connector, up to 127 addresses, speeds up to 12 Mbps, and a data message size up to 244 bytes per node [31]. There are three standard versions of Profibus: Fieldbus Message Specification, Distributed Peripheral, and Process Automation. The data speed of the Distributed Peripheral version varies between 93.75 kbps and 12 Mbps, for distances of 1.2 km and 100 m, respectively [31].

- Highway Addressable Remote Transducer (HART): a protocol developed by Rosemount for smart communication with sensors and actuators. It provides digital techniques to collect data and send commands. HART is a hybrid protocol, since its physical layer uses the 4–20 mA current loop for analog communication and frequency-shift keying superimposed on it for digital communication. Therefore, it is commonly used between PLCs and RTUs [31,53]. The protocol has provided higher speed and efficiency, and simplified maintenance and diagnostics. HART can be operated in two topologies: PTP and PTM [31].

- Modbus+ (plus): was proposed to overcome the limitations of the Modbus protocol, and was developed to establish a LAN connection between master stations. It uses a "token" (token-passing) approach, with a unique address for each station in the network, up to 64 addresses. An additional special cable is needed to transmit data, and the price is that Modbus+ is not suitable for real-time communication. Modbus+ transceivers are polarity-independent, and twisted-pair cables with an additional shield wire are usually used [31].

- Data Highway (DH)-485 and DH+: standard Allen-Bradley protocols. Their general specifications are Peer-to-Peer (P2P), half-duplex LAN, up to 64 nodes, and 57.6 kbaud. DH+ supports the "token" approach (floating master), and it is based on 3 layers of the OSI model: the application, data link, and physical layers. DH-485 is an RS-485 serial master/slave protocol [31].

- Foundation Fieldbus: a protocol based on the OSI model with an extra user application layer, which provides a convenient interface for users. Fieldbus has lower wiring costs, higher data integrity, and compatibility with several industrial vendors. In addition, it uses the HART protocol, making it compatible with analog and digital signals. The protocol can handle up to 32 self-powered or 12 bus-powered devices per communication link, at a transfer speed of 31.25 kbit/s [31].
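The Profibus DP trade-off between data rate and segment length, described earlier in this list, can be expressed as a simple lookup. Only the two end points (93.75 kbps at 1.2 km, 12 Mbps at 100 m) come from the text; the intermediate limits are the commonly tabulated values for type A cable and are included as an assumption to be checked against the Profibus installation guidelines. The function name is hypothetical:

```python
# Maximum RS-485 segment length (m) per Profibus DP baud rate (kbit/s) for
# type A cable. Only the 93.75 kbit/s and 12 Mbit/s end points come from
# the text; the other values are commonly tabulated figures (assumption).
MAX_SEGMENT_M = {
    9.6: 1200,
    19.2: 1200,
    93.75: 1200,
    187.5: 1000,
    500.0: 400,
    1500.0: 200,
    3000.0: 100,
    6000.0: 100,
    12000.0: 100,
}


def fastest_baud_kbps(segment_m: float) -> float:
    """Highest listed baud rate whose segment limit still covers segment_m."""
    usable = [baud for baud, limit in MAX_SEGMENT_M.items() if limit >= segment_m]
    if not usable:
        raise ValueError(f"{segment_m} m exceeds one segment; repeaters are needed")
    return max(usable)


for length_m in (100, 300, 1200):
    print(length_m, "m ->", fastest_baud_kbps(length_m), "kbit/s")
```

Longer installations are normally split into several segments joined by repeaters, which is why the function raises an error instead of returning a slower rate.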

2.3. Operator Room

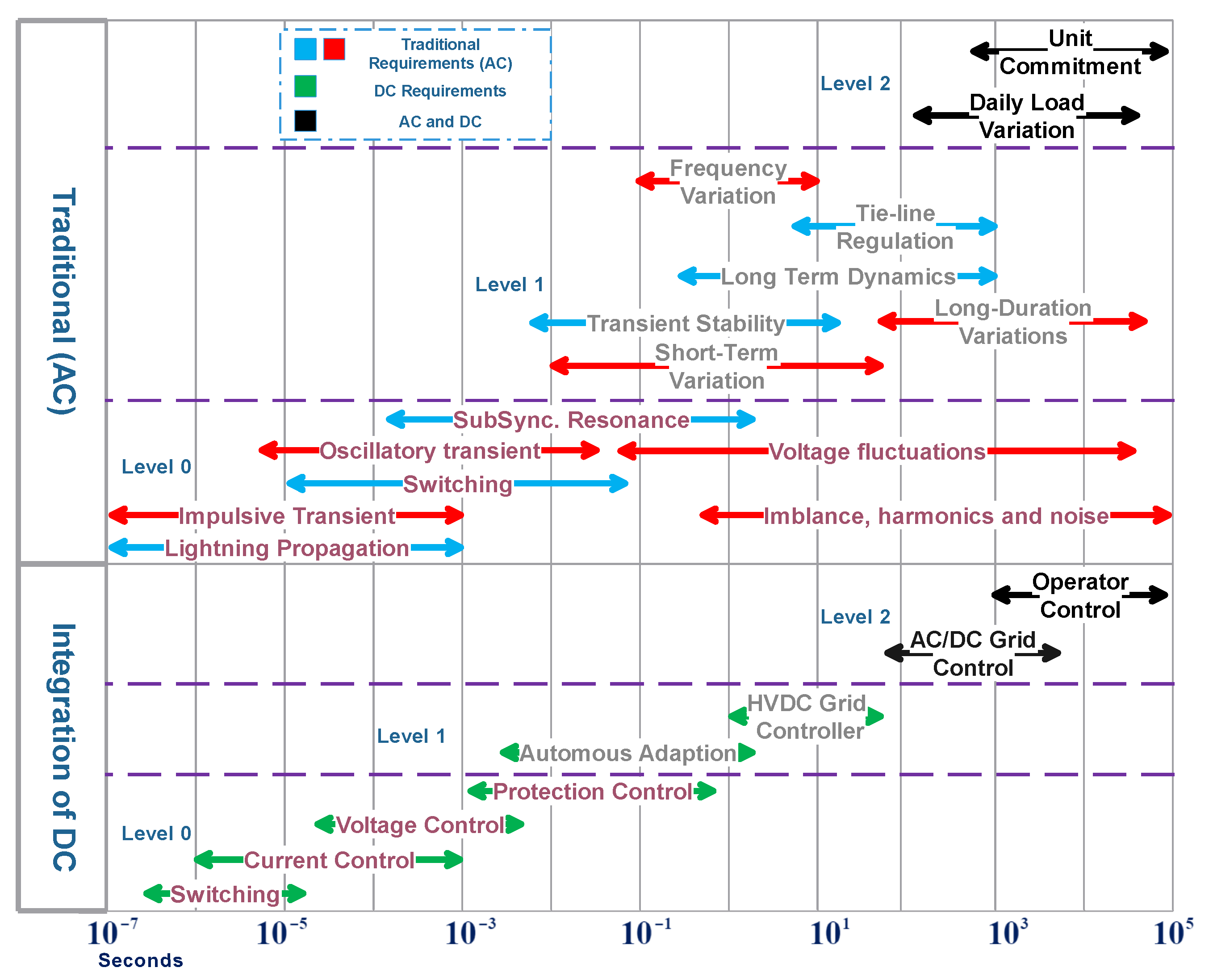

3. RTUs and Communication Networks Requirements for HVDC/AC

3.1. The HVDC/AC RTUs

- User-friendly interface, flexible and expandable to meet future requirements.

- State-of-the-art HVDC protection with a fast reaction. The cited literature requires DC-side circuit breakers to act within 2 ms, with a sampling rate >50 kHz.

- I/O ports: 16 analog and 26 digital inputs; 28 digital outputs.
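The protection figures above also fix the minimum number of samples taken within the 2 ms action time of the DC breaker. At the 50 kHz lower bound:

$$N = f_s \, t_{\mathrm{act}} = 50 \times 10^{3}\ \mathrm{s^{-1}} \times 2 \times 10^{-3}\ \mathrm{s} = 100\ \text{samples}$$

Any sampling rate above 50 kHz therefore yields more than 100 samples within a 2 ms interval.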

3.2. The HVDC/AC Communication Network

3.2.1. Inter-Control Center Communications Protocol ICCP

- The DNP3 protocol is used at its maximum data rate;

- The communication network is serial optical fiber with an RS-232 master/slave architecture;

- The data exchange scheme is defined as shown in Table 4.

3.2.2. Time Requirements

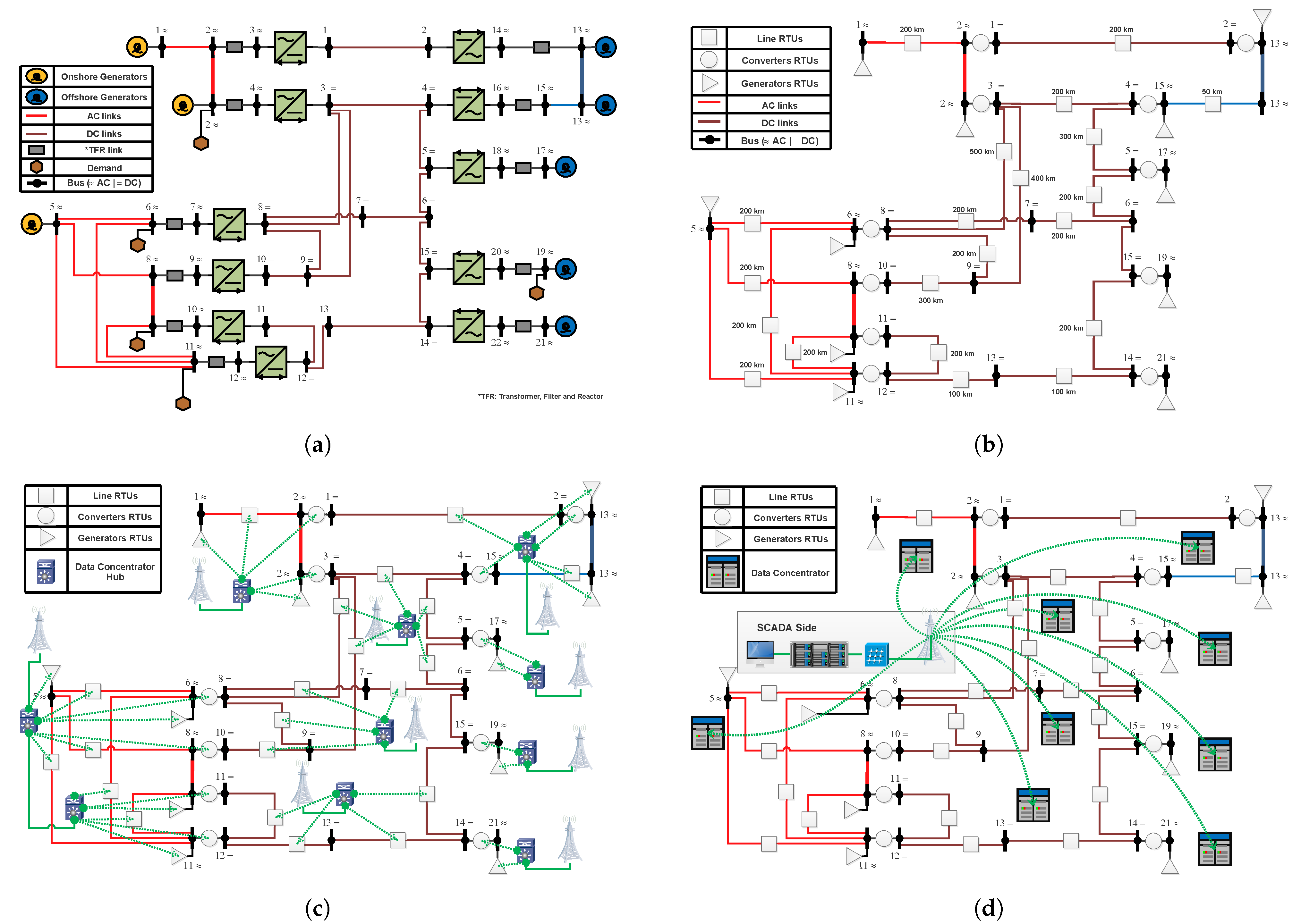

4. Case Study: HVDC/AC State Estimation Time Requirements

- : the measuring time from the sensor to the buffer of the RTUs;

- and : the time elapsed in data acquisition, from RTUs to the SCADA;

- : the state estimation processing time of the unified WLS from the moment of receiving the data.

- Propagation delay (): theoretically, it can be calculated as the link length divided by the signal propagation speed; for example, a 1 km fiber optic link has a delay of 3.33 µs. Further simulations are carried out for comparison.
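The relation described above (delay equals link length divided by propagation speed) is sketched below in Python. The 3.33 µs/km figure corresponds to a propagation speed of 3 × 10^8 m/s. The slower in-glass speed used in the second call is an illustrative assumption, not a value used in the study, and the function name is hypothetical:

```python
# Propagation speed implied by the 3.33 µs-per-km figure (speed of light in vacuum).
C_M_PER_S = 3.0e8


def propagation_delay_us(length_km: float, speed_m_per_s: float = C_M_PER_S) -> float:
    """Propagation delay = link length / signal propagation speed, in µs."""
    return length_km * 1000.0 / speed_m_per_s * 1e6


print(round(propagation_delay_us(1.0), 2))  # 3.33

# Light in silica travels more slowly; with a refractive index of about
# 1.47 (an assumption, not a value from the study) 1 km takes about 4.9 µs.
print(round(propagation_delay_us(1.0, C_M_PER_S / 1.47), 2))
```

Because the delay scales linearly with length, the same function applies directly to the RTU-to-concentrator and concentrator-to-SCADA distances listed in Appendix A.1.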

4.1. The 1st Layer: From Sensors to RTUs

4.2. The 2nd Layer: RTU to Data Concentrator

- D9: The maximum transmission and propagation delays in D9 are 0.759 ms and 0.01320355 ms, respectively. D9 has 6 links, leading to overall muxponder and protocol delays of 0.02023 ms and 0.2244 ms, respectively. Therefore, the total D9 delay is 1.01683 ms.

- D10: The maximum transmission and propagation delays in D10 are 0.7345087 ms and 0.053371 ms, respectively. D10 has 8 links, leading to overall muxponder and protocol delays of 0.026975 ms and 0.2992 ms, respectively. Therefore, the total D10 delay is 1.1140547 ms.
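The D9 and D10 totals are plain sums of the four reported delay components. The Python sketch below reproduces them from the reported values and also shows that the overall protocol delay corresponds to 0.0374 ms per link in both cases (0.2244 ms / 6 and 0.2992 ms / 8); the class and field names are illustrative:

```python
from dataclasses import dataclass


@dataclass
class LayerDelays:
    """Reported delay components (ms) between RTUs and one data concentrator."""

    transmission_ms: float  # maximum transmission delay
    propagation_ms: float   # maximum propagation delay
    muxponder_ms: float     # overall muxponder delay over all links
    protocol_ms: float      # overall protocol delay over all links
    n_links: int

    def total_ms(self) -> float:
        return (self.transmission_ms + self.propagation_ms
                + self.muxponder_ms + self.protocol_ms)

    def protocol_ms_per_link(self) -> float:
        return self.protocol_ms / self.n_links


d9 = LayerDelays(0.759, 0.01320355, 0.02023, 0.2244, n_links=6)
d10 = LayerDelays(0.7345087, 0.053371, 0.026975, 0.2992, n_links=8)

for name, layer in (("D9", d9), ("D10", d10)):
    print(f"{name}: total {layer.total_ms():.5f} ms, "
          f"protocol {layer.protocol_ms_per_link():.4f} ms per link")
```

In both concentrators the transmission delay dominates, accounting for roughly 66 % to 75 % of the total.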

4.3. The 3rd Layer: DC to SCADA

4.4. The 4th Layer: State Estimation Processing Time

4.5. Outcomes and Results

- The total state estimation cycle time is less than 100 ms, which meets the time requirements for hybrid HVDC/AC systems.

- Changing the communication medium to Wi-Fi or microwave affects the propagation delay.

- Static (snapshot) state estimation can be carried out at a higher rate (in the seconds range) instead of the traditional 5–15 min. Furthermore, dynamic state estimation can be implemented at the local level, since the accumulated delays are only a few milliseconds.

5. Main Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| A/D | Analog-to-Digital |
| AC | Alternating Current |
| CT | Current Transformer |
| D/A | Digital-to-Analog |
| DNP3 | Distributed Network Protocol 3 |
| EPA | Enhanced Performance Architecture |
| FPGA | Field-Programmable Gate Array |
| GPS | Global Positioning System |
| HART | Highway Addressable Remote Transducer |
| HMI | Human Machine Interface |
| HVDC | High Voltage Direct Current |
| I/O | Input/Output |
| ICCP | Inter-Control Center Communications Protocol |
| IEC | International Electrotechnical Commission |
| IED | Intelligent Electronic Device |
| IoT | Internet of Things |
| IP | Internet Protocol |
| LAN | Local Area Network |
| MTM | Multiple to Multiple |
| MTU | Master Terminal Unit |
| OSI | Open Systems Interconnection |
| P2P | Peer to Peer |
| PDC | Phasor Data Concentrator |
| PLC | Programmable Logic Controller |
| PLCC | Power Line Carrier communication |
| PMU | Phasor Measurement Unit |
| PTM | Point to Multiple |
| PTP | Point to Point |
| RTU | Remote Terminal Unit |
| SCADA | Supervisory Control and Data Acquisition |
| TCP | Transmission Control Protocol |
| VSC | Voltage Source Converter |
| VT | Voltage Transformer |
| WAN | Wide Area Network |
| WLS | Weighted Least Squares |

Appendix A

Appendix A.1. Communication Medium Lengths for Cigre B4 Network

| Data Conc. # | RTU # | Distance (km) |
|---|---|---|
| #1 | #1G | 100 |
| | #2G | 100 |
| | #3C | 100 |
| | #4C | 100 |
| | #5L | 100 |
| #2 | #1G | 100 |
| | #2G | 100 |
| | #3C | 100 |
| | #4C | 100 |
| | #5L | 100 |
| | #6L | 160 |
| #3 | #1G | 0.5–1 |
| | #2C | 0.5–1 |
| #4 | #1G | 0.5–1 |
| | #2C | 0.5–1 |
| #5 | #1G | 0.5–1 |
| | #2C | 0.5–1 |
| #6 | #1L | 100 |
| | #2L | 100 |
| | #3L | 100 |
| | #4L | 315 |
| | #5L | 320 |
| #7 | #1L | 100 |
| | #2L | 100 |
| | #3L | 100 |
| | #4L | 100 |
| #8 | #1L | 150 |
| | #2L | 100 |
| | #3L | 100 |
| | #4L | 150 |
| #9 | #1L | 100 |
| | #2L | 100 |
| | #3G | 155 |
| | #4G | 155 |
| | #5C | 155 |
| | #6C | 155 |
| #10 | #1G | 150 |
| | #2G | 50 |
| | #3L | 50 |
| | #4L | 100 |
| | #5L | 100 |
| | #6C | 155 |
| | #7C | 155 |
| | #8 (DC) | 150 |

| Data Concentrator # | Distance to SCADA (km) |
|---|---|
| #1 | 250 |
| #2 | 300 |
| #3 | 220 |
| #4 | 200 |
| #5 | 400 |
| #6 | 200 |
| #7 | 300 |
| #8 | 350 |
| #10 | 250 |

References

- Kim, T.H. Securing Communication of SCADA Components in Smart Grid Environment. Int. J. Syst. Appl. Eng. Dev. 2011, 5, 135–142.

- Wood, A.J.; Wollenberg, B.F.; Sheblé, G.B. Power Generation, Operation, and Control; Wiley: Hoboken, NJ, USA, 2012; p. 632.

- Roy, R.B. Controlling of Electrical Power System Network by using SCADA. Int. J. Sci. Eng. Res. 2012, 3, 1–6.

- Miceli, R. Energy Management and Smart Grids. Energies 2013, 6, 2262–2290.

- Pan, X.; Zhang, L.; Xiao, J.; Choo, F.H.; Rathore, A.K.; Wang, P. Design and implementation of a communication network and operating system for an adaptive integrated hybrid AC/DC microgrid module. CSEE J. Power Energy Syst. 2018, 4, 19–28.

- Northcote-Green, J.; Wilson, R. Control and Automation of Electrical Power Distribution Systems; CRC Press: Boca Raton, FL, USA, 2017; pp. 1–464.

- Khan, R.H.; Khan, J.Y. A comprehensive review of the application characteristics and traffic requirements of a smart grid communications network. Comput. Netw. 2013, 57, 825–845.

- Laursen, O.; Björklund, H.; Stein, G. Modern Man-Machine Interface for HVDC Systems; Technical Report; ABB Power Systems: Ludvika, Sweden, 2002.

- Castello, P.; Ferrari, P.; Flammini, A.; Muscas, C.; Rinaldi, S. A New IED With PMU Functionalities for Electrical Substations. IEEE Trans. Instrum. Meas. 2013, 62, 3209–3217.

- Wenge, C.; Pelzer, A.; Naumann, A.; Komarnicki, P.; Rabe, S.; Richter, M. Wide area synchronized HVDC measurement using IEC 61850 communication. In Proceedings of the 2014 IEEE PES General Meeting | Conference Exposition, National Harbor, MD, USA, 27–31 July 2014; pp. 1–5.

- Ahmed, M.A.; Kim, C.H. Communication Architecture for Grid Integration of Cyber Physical Wind Energy Systems. Appl. Sci. 2017, 7, 1034.

- Tielens, P.; Hertem, D.V. The relevance of inertia in power systems. Renew. Sustain. Energy Rev. 2016, 55, 999–1009.

- Babazadeh, D.; Hertem, D.V.; Rabbat, M.; Nordstrom, L. Coordination of Power Injection in HVDC Grids with Multi-TSOs and Large Wind Penetration. In Proceedings of the 11th IET International Conference on AC and DC Power Transmission, Birmingham, UK, 10–12 February 2015; pp. 1–7.

- Egea-Alvarez, A.; Beerten, J.; Van Hertem, D.; Bellmunt, O. Hierarchical power control of multiterminal HVDC grids. Electr. Power Syst. Res. 2015, 121, 207–215.

- IEEE PC37.247/D2.48. IEEE Approved Draft Standard for Phasor Data Concentrators for Power Systems; IEEE: New York, NY, USA, 2019.

- IEEE Std C37.1-2007. IEEE Standard for SCADA and Automation Systems; Revision of IEEE Std C37.1-1994; IEEE: New York, NY, USA, 2008; pp. 1–143.

- Ali, I.; Hussain, S.S. Control and management of distribution system with integrated DERs via IEC 61850 based communication. Eng. Sci. Technol. Int. J. 2017, 20, 956–964.

- IEEE Std 1646-2004. IEEE Standard Communication Delivery Time Performance Requirements for Electric Power Substation Automation; IEEE: New York, NY, USA, 2005; pp. 1–36.

- IEC/IEEE 60255-118-1:2018. IEEE/IEC International Standard—Measuring Relays and Protection Equipment—Part 118-1: Synchrophasor for Power Systems-Measurements; IEEE: New York, NY, USA, 2018; pp. 1–78.

- Boyd, M. High-Speed Monitoring of Multiple Grid-Connected Photovoltaic Array Configurations and Supplementary Weather Station. J. Sol. Energy Eng. 2017, 139.

- Ahmed, M.A.; Kim, Y.C. Communication Network Architectures for Smart-Wind Power Farms. Energies 2014, 7, 3900–3921.

- Pettener, A.L. SCADA and communication networks for large scale offshore wind power systems. In Proceedings of the IET Conference on Renewable Power Generation (RPG 2011), Edinburgh, UK, 6–8 September 2011; pp. 1–6.

- Ayiad, M.M.; Katti, A.; Fatta, G. Agreement in Epidemic Information Dissemination. In International Conference on Internet and Distributed Computing Systems, Proceedings of the IDCS 2016: Internet and Distributed Computing Systems, Wuhan, China, 28–30 September 2016; Springer: Cham, Switzerland, 2016; Volume 9864, pp. 95–106.

- Herrera, J.; Mingarro, M.; Barba, S.; Dolezilek, D.; Calero, F.; Kalra, A.; Waldron, B. New Deterministic, High-Speed, Wide-Area Analog Synchronized Data Acquisition-Creating Opportunities for Previously Unachievable Control Strategies. In Proceedings of the Power and Energy Automation Conference, Spokane, WA, USA, 10 March 2016.

- Thomas, M.; McDonald, J. Power System SCADA and Smart Grids; CRC Press: Boca Raton, FL, USA, 2017; pp. 1–329.

- Boyer, S.A. Scada: Supervisory Control And Data Acquisition, 4th ed.; International Society of Automation: Pittsburgh, PA, USA, 2009.

- Communication Networks and Systems for Power Utility Automation Part 1–2: Guidelines on Extending IEC 61850; Technical Report; IEC: London, UK, 2020.

- Das, R.; Kanabar, M.; Adamiak, M.; Antonova, G.; Apostolov, A.; Brahma, S.; Dadashzadeh, M.; Hunt, R.; Jester, J.; Kezunovic, M.; et al. Centralized Substation Protection and Control; Technical Report; IEEE PES Power System Relaying Committee: Bethlehem, PA, USA, 2015.

- Jahn, I.; Hohn, F.; Chaffey, G.; Norrga, S. An Open-Source Protection IED for Research and Education in Multiterminal HVDC Grids. IEEE Trans. Power Syst. 2020, 35, 2949–2958.

- Marihart, D.J. Communications technology guidelines for EMS/SCADA systems. IEEE Trans. Power Deliv. 2001, 16, 181–188.

- Reynders, D.; Mackay, S.; Wright, E.; Mackay, S. Practical Industrial Data Communications. In Practical Industrial Data Communications; Butterworth-Heinemann: Oxford, UK, 2004.

- Morreale, P.A.; Terplan, K. (Eds.) The CRC Handbook of Modern Telecommunications, 2nd ed.; CRC Press: Boca Raton, FL, USA, 2010.

- Koushik, A.; Bs, R. 4th Generation SCADA Implementation for Automation. Int. J. Adv. Res. Comput. Commun. Eng. 2016, 5, 629.

- Mlakić, D.; Baghaee, H.; Nikolovski, S.; Vukobratović, M.; Balkić, Z. Conceptual Design of IoT-based AMR Systems based on IEC 61850 Microgrid Communication Configuration using Open-Source Hardware/Software IED. Energies 2019, 12, 4281.

- Sajid, A.; Abbas, H.; Saleem, K. Cloud-Assisted IoT-Based SCADA Systems Security: A Review of the State of the Art and Future Challenges. IEEE Access 2016, 4, 1375–1384.

- Bonaventura, G.; Hanson, T.; Tomita, S.; Shiraki, K.; Cottino, E.; Teichmann, B.; Stassar, S.C.P.; Anslow, P.; Murakami, M.; Shiraki, K.; Solina, P.; Araki, N. Optical Fibres, Cables and Systems; International Telecommunication Union: Geneva, Switzerland, 2010; p. 319.

- Khan, A.R.; Mahmood, A.; Safdar, A.; Khan, Z.; Khan, N. Load forecasting, dynamic pricing and DSM in smart grid: A review. Renew. Sustain. Energy Rev. 2015. [Google Scholar] [CrossRef]

- The Future Is 40 Gigabit Ethernet. Technical Report, Cisco Systems, 2016, Ref.: C11-737238-00. Available online: https://www.cisco.com/c/dam/en/us/products/collateral/switches/catalyst-6500-series-switches/white-paper-c11-737238.pdf (accessed on 19 February 2021).

- Lo, C.; Ansari, N. The Progressive Smart Grid System from Both Power and Communications Aspects. IEEE Commun. Surv. Tutorials 2012, 14, 799–821. [Google Scholar] [CrossRef]

- IEEE Std 643-2004. IEEE Guide for Power-Line Carrier Applications; Revision of IEEE Std 643-1980; IEEE: New York, NY, USA, 2005; pp. 1–134. [Google Scholar] [CrossRef]

- Ahmed, M.; Soo, W.L. Power line carrier (PLC) based communication system for distribution automation system. In Proceedings of the 2008 IEEE 2nd International Power and Energy Conference, Johor Bahru, Malaysia, 1–3 December 2008; pp. 1638–1643. [Google Scholar] [CrossRef]

- Greer, R.; Allen, W.; Schnegg, J.; Dulmage, A. Distribution automation systems with advanced features. In Proceedings of the 2011 Rural Electric Power Conference, Chattanooga, TN, USA, 10–13 April 2011. [Google Scholar]

- Yousuf, M.S.; El-Shafei, M. Power Line Communications: An Overview-Part I. In Proceedings of the 2007 Innovations in Information Technologies (IIT), Dubai, United Arab Emirates, 18–20 November 2007; pp. 218–222. [Google Scholar] [CrossRef]

- Merkulov, A.G.; Adelseck, R.; Buerger, J. Wideband digital power line carrier with packet switching for high voltage digital substations. In Proceedings of the 2018 IEEE International Symposium on Power Line Communications and its Applications (ISPLC), Manchester, UK, 8–11 April 2018; pp. 1–5. [Google Scholar] [CrossRef]

- Eluwole, O.; Udoh, N.; Ojo, M.; Okoro, C.; Akinyoade, A. From 1G to 5G, what next? IAENG Int. J. Comput. Sci. 2018, 45, 413–434. [Google Scholar]

- Baronti, P.; Pillai, P.; Chook, V.W.C.; Chessa, S.; Gotta, A.; Hu, Y.F. Wireless Sensor Networks: A Survey on the State of the Art and the 802.15.4 and ZigBee Standards. Comput. Commun. 2007, 30, 1655–1695. [Google Scholar] [CrossRef]

- David, K.; Berndt, H. 6G Vision and Requirements: Is There Any Need for Beyond 5G? IEEE Veh. Technol. Mag. 2018, 13, 72–80. [Google Scholar] [CrossRef]

- Gao, J.; Liu, J.; Rajan, B.; Nori, R.; Fu, B.; Xiao, Y.; Liang, W.; Chen, C. SCADA communication and security issues. Secur. Commun. Netw. 2014, 7. [Google Scholar] [CrossRef]

- MODBUS Messaging on TCP/IP Implementation Guide V1.0b; Modbus-IDA: Hopkinton, MA, USA, 24 October 2006; Available online: https://modbus.org/docs/Modbus_Messaging_Implementation_Guide_V1_0b.pdf (accessed on 19 February 2021).

- East, S.; Butts, J.; Papa, M.; Shenoi, S. Chapter 5 a Taxonomy of Attacks on the DNP 3 Protocol; Springer: Berlin, Germany, 2014. [Google Scholar]

- Schwarz, K. Telecontrol Standard IEC 60870-6 TASE.2 Globally Adopted. In Fieldbus Technology; Dietrich, D., Schweinzer, H., Neumann, P., Eds.; Springer: Vienna, Austria, 1999; pp. 38–45.

- IEEE C37.237. IEEE Standard for Requirements for Time Tags Created by Intelligent Electronic Devices; IEEE: New York, NY, USA, 5 December 2018.

- Park, J.; Mackay, S.; Wright, E. Practical Data Communications for Instrumentation and Control. In Practical Data Communications for Instrumentation and Control; Newnes: Oxford, UK, 2003; pp. xi–xiii.

- Roberson, D.; Kim, H.C.; Chen, B.; Page, C.; Nuqui, R.; Valdes, A.; Macwan, R.; Johnson, B.K. Improving Grid Resilience Using High-Voltage dc: Strengthening the Security of Power System Stability. IEEE Power Energy Mag. 2019, 17, 38–47.

- IEEE Std C37.244-2013. IEEE Guide for Phasor Data Concentrator Requirements for Power System Protection, Control, and Monitoring; IEEE: New York, NY, USA, 2013; pp. 1–65.

- Adamiak, M.; Premerlani, W.; Kasztenny, B. Synchrophasors: Definition, Measurement, and Application; Technical Report; GE Grid Solutions: Atlanta, GA, USA, 2015.

- Wang, M.; Abedrabbo, M.; Leterme, W.; Van Hertem, D.; Spallarossa, C.; Oukaili, S.; Grammatikos, I.; Kuroda, K. A Review on AC and DC Protection Equipment and Technologies: Towards Multivendor Solution. In Cigrè Winnipeg 2017 Colloquium; Cigrè: Winnipeg, MB, Canada, 2017; pp. 1–11.

- TPU D500 IED Datasheet; Technical Report; EFACEC: Maia, Portugal, 2016.

- Schmid, J.; Kunde, K. Application of non conventional voltage and currents sensors in high voltage transmission and distribution systems. In Proceedings of the 2011 IEEE International Conference on Smart Measurements of Future Grids (SMFG) Proceedings, Bologna, Italy, 14–16 November 2011; pp. 64–68.

- Xiang, Y.; Chen, K.; Xu, Q.; Jiang, Z.; Hong, Z. A Novel Contactless Current Sensor for HVDC Overhead Transmission Lines. IEEE Sens. J. 2018, 18, 4725–4732.

- Zhu, K.; Lee, W.K.; Pong, P.W.T. Non-Contact Voltage Monitoring of HVDC Transmission Lines Based on Electromagnetic Fields. IEEE Sens. J. 2019, 19, 3121–3129.

- Leterme, W.; Beerten, J.; Van Hertem, D. Nonunit Protection of HVDC Grids With Inductive DC Cable Termination. IEEE Trans. Power Deliv. 2016, 31, 820–828.

- Pirooz Azad, S.; Van Hertem, D. A Fast Local Bus Current-Based Primary Relaying Algorithm for HVDC Grids. IEEE Trans. Power Deliv. 2017, 32, 193–202.

- Zhang, Y.; Ma, Y.; Xing, F. A prototype optical fibre direct current sensor for HVDC system. Trans. Inst. Meas. Control 2016, 38, 55–61.

- Michalski, J.; Lanzone, A.J.; Trent, J.; Smith, S. Secure ICCP Integration Considerations and Recommendations; Sandia Report; Sandia National Laboratories: Albuquerque, NM, USA, 2007.

- PJM Manual 01: Control Center and Data Exchange Requirements; Technical Report; PJM System Operations Division: Columbia, SC, USA, 2020.

- Ayiad, M.; Leite, H.; Martins, H. State Estimation for Hybrid VSC Based HVDC/AC Transmission Networks. Energies 2020, 13, 4932.

- Customer ICCP Interconnection Policy; Technical Report 4; Transpower: Wellington, New Zealand, 2019.

- Abedrabbo, M.; Van Hertem, D. A Primary and Backup Protection Algorithm based on Voltage and Current Measurements for HVDC Grids. In Proceedings of the International High Voltage Direct Current Conference, Shanghai, China, 25–27 October 2016.

- Commission Regulation (EU) 2016/1447 of 26 August 2016 Establishing a Network Code on Requirements for Grid Connection of High Voltage Direct Current Systems and Direct Current-Connected Power Park Modules; Technical Report; Official Journal of the European Union: Brussels, Belgium, 2016.

- Requirements for Grid Connection of High Voltage Direct Current Systems and Direct current-Connected Power Park Modules (HVDC), Articles 11-54; Technical Report; Energinet: Fredericia, Denmark, 2019.

- Coffey, J. Latency in Optical Fiber Systems; Technical Report; CommScope: Hickory, NC, USA, 2017.

- OptiSystems and OptiSPICE; Technical Report; Optiwave Systems Inc.: Ottawa, ON, Canada, 2021.

- Bobrovs, V.; Spolitis, S.; Ivanovs, G. Latency causes and reduction in optical metro networks. In Optical Metro Networks and Short-Haul Systems VI; Weiershausen, W., Dingel, B.B., Dutta, A.K., Srivastava, A.K., Eds.; International Society for Optics and Photonics, SPIE: San Francisco, CA, USA, 2014; Volume 9008, pp. 91–101.

- Leon, H.; Montez, C.; Stemmer, M.; Vasques, F. Simulation models for IEC 61850 communication in electrical substations using GOOSE and SMV time-critical messages. In Proceedings of the IEEE World Conference on Factory Communication Systems (WFCS), Aveiro, Portugal, 3–6 May 2016; pp. 1–8.

- Juárez, J.; Rodríguez-Morcillo, C.; Mondejar, J. Simulation of IEC 61850-based substations under OMNeT++. In Proceedings of the 5th International ICST Conference on Simulation Tools and Techniques, Sirmione-Desenzano, Italy, 12–23 March 2012; pp. 319–326.

- Bezanson, J.; Edelman, A.; Karpinski, S.; Shah, V.B. Julia: A Fresh Approach to Numerical Computing. SIAM Rev. 2017, 59, 65–98.

- Mouco, A.; Abur, A. A robust state estimator for power systems with HVDC components. In Proceedings of the North American Power Symposium (NAPS), Morgantown, WV, USA, 17–19 September 2017; pp. 1–5.

| Technology | Channel Bandwidth | Latency | Data Rate | Cell Size |
|---|---|---|---|---|
| ZigBee | 0.3–2 MHz | <100 ms | 250 kbps | <100 m |
| Wi-Fi | 22 MHz | <100 ms | 54 Mbps | <100 m |
| 2G | 0.2–1.25 MHz | 300–750 ms | 64 kbps–2 Mbps | <20 km |
| 3G | 1.25–20 MHz | 40–400 ms | 2.4–300 Mbps | <10 km |
| 4G | <100 MHz | 40–50 ms | <3 Gbps | <10 km |
| 5G | <100 GHz | ≤1 ms | >3 Gbps | <1 km |

| System | Characteristics | Measurements |
|---|---|---|
| AC | I/O: 8 digital inputs and 8 to 32 analog inputs with 16–24-bit A/D resolution and a sampling frequency of 4 or 8 kHz for a 50 Hz system [57]; 16 to 264 digital outputs for a large-scale protection RTU [58]. | Voltages and currents; active and reactive power/energy; power factor; frequency, rate of change of frequency (ROCOF), total vector error (TVE) and frequency error (FE) [19]; digital inputs (e.g., circuit breaker status). |
| | IEC 61131-3-based or FPGA processor. | |
| | Transfer rate: 64 kbps to 2 Mbps. | |
| | Time synchronization based on a global navigation satellite system (e.g., GPS); the acceptable error under the standard is within ±500 ns [19]. | |
| | Reporting rates per IEC/IEEE 60255-118-1: {10, 25, 50, 100} frames per second (fps) for 50 Hz systems and {10, 12, 15, 20, 30, 60, 120} fps for 60 Hz systems [19]. | |
| DC | I/O: 32 analog inputs with 24-bit A/D resolution; 16 to 264 digital outputs for a large-scale protection RTU (ABB [8], Open Source [29]). | Voltages and currents; real power/energy; digital inputs (e.g., circuit breaker status). |
| | Sensors with a very high sampling frequency (around 100 kHz) [57,59,60,61,62,63], owing to voltage fluctuations (approx. 1.6% per minute [13]). | |
| | Sensing time interval within 30 ms; transmission time interval between RTU and MTU within 10 ms [24]. | |
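The table lists TVE among the AC quantities and a ±500 ns synchronization tolerance. As a minimal sketch, assuming the TVE definition of IEC/IEEE 60255-118-1 (magnitude of the phasor error relative to the reference phasor), the Python below computes TVE and the share of it caused purely by a timestamp offset, which links the two requirements. The function names are hypothetical illustrations, not part of any standard or library.

```python
import cmath
import math


def tve(estimated: complex, reference: complex) -> float:
    """Total vector error as a fraction (multiply by 100 for percent).

    |X_est - X_ref| / |X_ref| is the same as
    sqrt(((Xr_est - Xr)^2 + (Xi_est - Xi)^2) / (Xr^2 + Xi^2)).
    """
    return abs(estimated - reference) / abs(reference)


def tve_from_time_error(time_error_s: float, frequency_hz: float) -> float:
    """TVE produced only by a time-tag error on an otherwise exact phasor.

    A time error dt rotates the phasor by theta = 2*pi*f*dt, so
    TVE = |exp(j*theta) - 1| = 2*sin(theta/2).
    """
    theta = 2 * math.pi * frequency_hz * time_error_s
    return abs(cmath.exp(1j * theta) - 1)


# A 500 ns synchronization error on a 50 Hz phasor
print(f"{100 * tve_from_time_error(500e-9, 50.0):.4f} %")  # 0.0157 %
```

Under these assumptions, timing alone at the ±500 ns bound contributes roughly 0.016% TVE, well inside the 1% TVE limit commonly applied to steady-state phasor measurements, leaving most of the error budget to the sensors and A/D chain.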

| Category | Type | Bandwidth | Suitable for |
|---|---|---|---|
| Current transformers/sensors | Electromagnetic (iron-core) | kHz range | AC |
| | Rogowski coil integrated with optical signal | MHz range | AC/DC |
| | Fibre-optic CT | MHz range | AC/DC |
| | Zero-flux (direct-current or Hall-effect CT) | Hundreds of kHz | DC |
| Voltage transformers/sensors | Inductive/capacitive VT | kHz range | AC |
| | Compensated RC divider | MHz range | AC/DC |
| | Fibre-optic VT | MHz range | AC/DC |

| I/O Type | Data Slots | Description |
|---|---|---|
| Analog | 5 | Voltages, currents, active and reactive power, and fault location |
| Flags | 10–17 | Protection and trips |
| Control | 2 | Circuit breaker control |
| Status | 10–11 | Circuit breaker status |

| Protocol | Message Application | Delay Tolerance (ms) |
|---|---|---|
| GOOSE | Fast trips | 3–10 |
| | Fast commands/messages | 20–100 |
| | Measurements/parameters | 100–500 |
| SMV | Raw data | 3–10 |
| TS | Station bus | 1 |
| | Process bus | 0.004–0.025 |
| (Reset) | File transfer | ≥1000 |
| | Low–medium speed | 100–500 |

| Data Type | AC: Within Substation (ms) | AC: Between Substations (ms) | DC: Within Substation (ms) | DC: Between Substations (ms) | Data Size [7] |
|---|---|---|---|---|---|
| Time synchronization error | <0.1 [52], <2 [19] | - | <0.020 [10] | <1000 [10] | Bytes |
| Protection | 5/4 for 50/60 Hz (1/4 cycle) | 8–12, 5–10 [28] | 0.1–0.5 [62,69] | 3–4 [62,69] | Tens of bytes |
| Monitoring and control | 16 | 1000 | 10 [70] | 250–500 [70,71] | Tens of bytes |
| Operation and maintenance | 1000 | 10k | 1000 | 10k | Hundreds of bytes |
| Text data | 2000 | 10k | 2000 | 10k | KB to MB |
| Files | 10k–60k | 30k–600k | 10k–60k | 30k–600k | KB to MB |
| Data streams | 1000 | 1000 | 1000 | 1000 | KB to MB |
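The intra-substation protection limit for AC is a quarter of the fundamental period, t = 1/(4f). The helper below is a hypothetical illustration of how a computed end-to-end delay could be checked against that budget; it is a sketch, not the authors' procedure. Note that the table rounds the 60 Hz value (about 4.17 ms) down to 4 ms.

```python
def quarter_cycle_ms(frequency_hz: float) -> float:
    """Quarter of the fundamental period in milliseconds: 1 / (4 f)."""
    return 1e3 / (4.0 * frequency_hz)


def within_protection_budget(delay_ms: float, frequency_hz: float) -> bool:
    """True if a (hypothetical) end-to-end delay fits inside a quarter cycle."""
    return delay_ms <= quarter_cycle_ms(frequency_hz)


print(quarter_cycle_ms(50.0), round(quarter_cycle_ms(60.0), 2))  # 5.0 4.17
print(within_protection_budget(4.5, 50.0), within_protection_budget(4.5, 60.0))  # True False
```

The 4.5 ms delay in the usage lines is an arbitrary example value; it shows that the same latency can satisfy a 50 Hz system yet violate a 60 Hz one.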

| Time Delay Type | Theoretical (ns) | Simulation (ns) |
|---|---|---|
| Propagation | 489.66 | 491.959 |
| Transmission | 306 | 326.6521 |
| Amplification | - | 0.0258 |
| Total | 795.66 | 818.6111 |

| Message Application | Packet Size | Sampling Rate (Hz) | Data Rate |
|---|---|---|---|
| Protection signals | 50 bytes | 1 | 400 bps |
| Measurement data | 102–198 bytes | 1440 @ non-sync AC | 1147.5–2227.5 Kbps |
| | | 4096 @ 50 Hz AC | 3264–6336 Kbps |
| Interlocks | 150 bytes | 250 | 293 Kbps |
| Control signals | 200 bytes | 10 | 15.26 Kbps |
| File transfer | 1 Mb | 1/3600 | 248 bps |
| Total traffic | | with 1440 Hz measurements | 1.422–2.477 Mbps |
| | | with 4096 Hz measurements | 3.489–6.489 Mbps |
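Each data rate in the table follows from packet size × 8 × sampling rate. The Python sketch below assumes that Kbps and Mbps are taken with a binary prefix (1 K = 1024), the convention that reproduces the measurement and interlock rows and the totals; the protection, interlock, control, and file-transfer rates in the total are reused exactly as tabulated. Function names are hypothetical.

```python
# Assumption: binary prefixes (1 K = 1024), which reproduces the tabulated figures.
KILO = 1024


def data_rate_kbps(packet_bytes: float, rate_hz: float) -> float:
    """Data rate in Kbps: bytes per packet x 8 bits x packets per second."""
    return packet_bytes * 8 * rate_hz / KILO


def total_mbps(measurement_kbps: float) -> float:
    """Aggregate traffic in Mbps for a given measurement-data rate (Kbps)."""
    # Protection (400 bps), interlocks, control signals, file transfer (248 bps),
    # taken from the table and expressed in Kbps.
    others_kbps = 400 / KILO + 293 + 15.26 + 248 / KILO
    return (measurement_kbps + others_kbps) / KILO


print(data_rate_kbps(102, 1440), data_rate_kbps(198, 4096))  # 1147.5 6336.0
print(round(total_mbps(1147.5), 3), round(total_mbps(6336), 3))  # 1.422 6.489
```

The measurement stream dominates the aggregate, so the choice between 1440 Hz and 4096 Hz sampling is what moves the total from the 1.4–2.5 Mbps band into the 3.5–6.5 Mbps band.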

| RTU Location/Type | Generated Data | Theoretical (ms) | Simulated (ms) |
|---|---|---|---|
| Line | 0.623 MB | 0.004867 | 0.005226 |
| Converter | 1.574 MB | 0.012296 | 0.01320355 |
| Generator (50 Hz) | 1.027 MB | 0.008023 | 0.008615 |
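The theoretical column is consistent with the serialization (transmission) delay T = L/R. In the sketch below, R = 128 MB per ms (in the table's own units) is an assumption inferred from the ratio between the "Generated Data" and "Theoretical" columns, not a link rate stated in this excerpt; with it, the function reproduces the theoretical values to within rounding of the last digit.

```python
# Assumption: R inferred from the table (Generated Data / Theoretical = 128 MB/ms).
RATE_MB_PER_MS = 128.0


def transmission_delay_ms(data_mb: float, rate_mb_per_ms: float = RATE_MB_PER_MS) -> float:
    """Serialization delay T = L / R, in milliseconds."""
    return data_mb / rate_mb_per_ms


for name, size_mb in [("Line", 0.623), ("Converter", 1.574), ("Generator (50 Hz)", 1.027)]:
    print(f"{name}: {transmission_delay_ms(size_mb):.6f} ms")
```

Because T scales linearly with L at fixed R, the converter RTU, which generates the most data, sees roughly 2.5 times the transmission delay of the line RTU.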

| Distance (km) | Theoretical (ms) | Simulated (ms) | Distance (km) | Theoretical (ms) | Simulated (ms) |
|---|---|---|---|---|---|
| 50 | 0.244834 | 0.24483625 | 250 | 1.224170 | 1.2241813 |
| 100 | 0.489668 | 0.4896725 | 300 | 1.469004 | 1.4690175 |
| 150 | 0.734502 | 0.73450875 | 350 | 1.713838 | 1.7138538 |
| 200 | 0.979336 | 0.979345 | 400 | 1.958672 | 1.958690 |
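Propagation delay in optical fibre is t = d·n/c, so it grows linearly with distance (about 4.897 µs per km in the table). The sketch assumes a group index n = 1.468 (roughly typical of standard single-mode fibre around 1550 nm; the exact value used by the authors is not restated here) and c = 299 792 458 m/s. With these assumptions the function agrees with the table to within about 2×10⁻⁵ ms.

```python
# Assumptions: vacuum speed of light and a group index typical of single-mode fibre.
C_M_PER_S = 299_792_458.0
GROUP_INDEX = 1.468


def propagation_delay_ms(distance_km: float, n: float = GROUP_INDEX) -> float:
    """Fibre propagation delay t = d * n / c, returned in milliseconds."""
    return distance_km * 1e3 * n / C_M_PER_S * 1e3


for d in (50, 100, 200, 400):
    print(d, round(propagation_delay_ms(d), 6))
```

Since propagation delay depends only on distance and refractive index, it is the term that grows fastest as RTUs and data concentrators are spread over hundreds of kilometres, whereas transmission delay can be reduced with faster links.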

| Reference | Message Type | Delay Range (µs) |
|---|---|---|
| 2016 [75] | GOOSE | 24–37.4 |
| | SMV | 24 |
| 2012 [76] | GOOSE | 14–35 |
| | SMV | 23–26 |

| Type | D1 | D2 | D3 | D4 | D5 | D6 | D7 | D8 | D9 | D10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Prop. | 2.44836 | 3.23183 | 0.004896 | 0.004896 | 0.004896 | 4.57843 | 1.95869 | 2.44836 | 4.01531 | 4.45602 |
| Tran. | 0.0485 | 0.05337 | 0.02181 | 0.02181 | 0.02181 | 0.02433 | 0.01946 | 0.01946 | 0.05337 | 0.11161 |
| Prot. | 0.187 | 0.2244 | 0.0748 | 0.0748 | 0.0748 | 0.187 | 0.1496 | 0.1496 | 0.2244 | 0.2992 |

| Data Concentrator | Generated Data | Theoretical (ms) | Simulated (ms) |
|---|---|---|---|
| #1 | 5.825 MB | 0.045507 | 0.048863 |
| #2 | 6.448 MB | 0.050375 | 0.054089 |
| #3 | 2.601 MB | 0.02032 | 0.021818 |
| #4 | 2.601 MB | 0.02032 | 0.021818 |
| #5 | 2.601 MB | 0.02032 | 0.021818 |
| #6 | 3.115 MB | 0.024336 | 0.0261302 |
| #7 | 2.492 MB | 0.019468 | 0.020904 |
| #8 | 2.492 MB | 0.019468 | 0.020904 |
| #10 | 13.519 MB | 0.105617 | 0.1134045 |

| Type | D1 | D2 | D3 | D4 | D5 | D6 | D7 | D8 | D10 |
|---|---|---|---|---|---|---|---|---|---|
| Prop. | 1.22418 | 1.46901 | 1.07728 | 0.97934 | 1.95869 | 0.97934 | 1.46901 | 1.71385 | 1.22418 |

| Data Set | Measurement Count | Elapsed Time (ms) |
|---|---|---|
| Power injection only | 89 | 23.3709 |
| Power flow and injection | 140 | 26.3246 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Ayiad, M.; Maggioli, E.; Leite, H.; Martins, H. Communication Requirements for a Hybrid VSC Based HVDC/AC Transmission Networks State Estimation. Energies 2021, 14, 1087. https://doi.org/10.3390/en14041087
