Development and Validation of a Data-Driven Fault Detection and Diagnosis System for Chillers Using Machine Learning Algorithms

Abstract

1. Introduction

- Complex process: Many researchers combine several FDD methods and use data collected from both the overall system and specific components. Building and analyzing such models is time-consuming and costly. For example, the PCA-based residual method is suitable for chiller sensors but not for individual components. A PCA-based FDD model also requires an appropriate mathematical model of the data to distinguish normal data from fault data.

- Excessive data usage: A large data set improves FDD performance, but fault data are difficult to obtain because they can be collected only when an actual fault occurs. Performance is sensitive not only to the amount of data but also to its composition. It is therefore better to select carefully the fault severity levels and fault types that are needed. However, several methods overuse fault data without determining the exact severity level and type required.

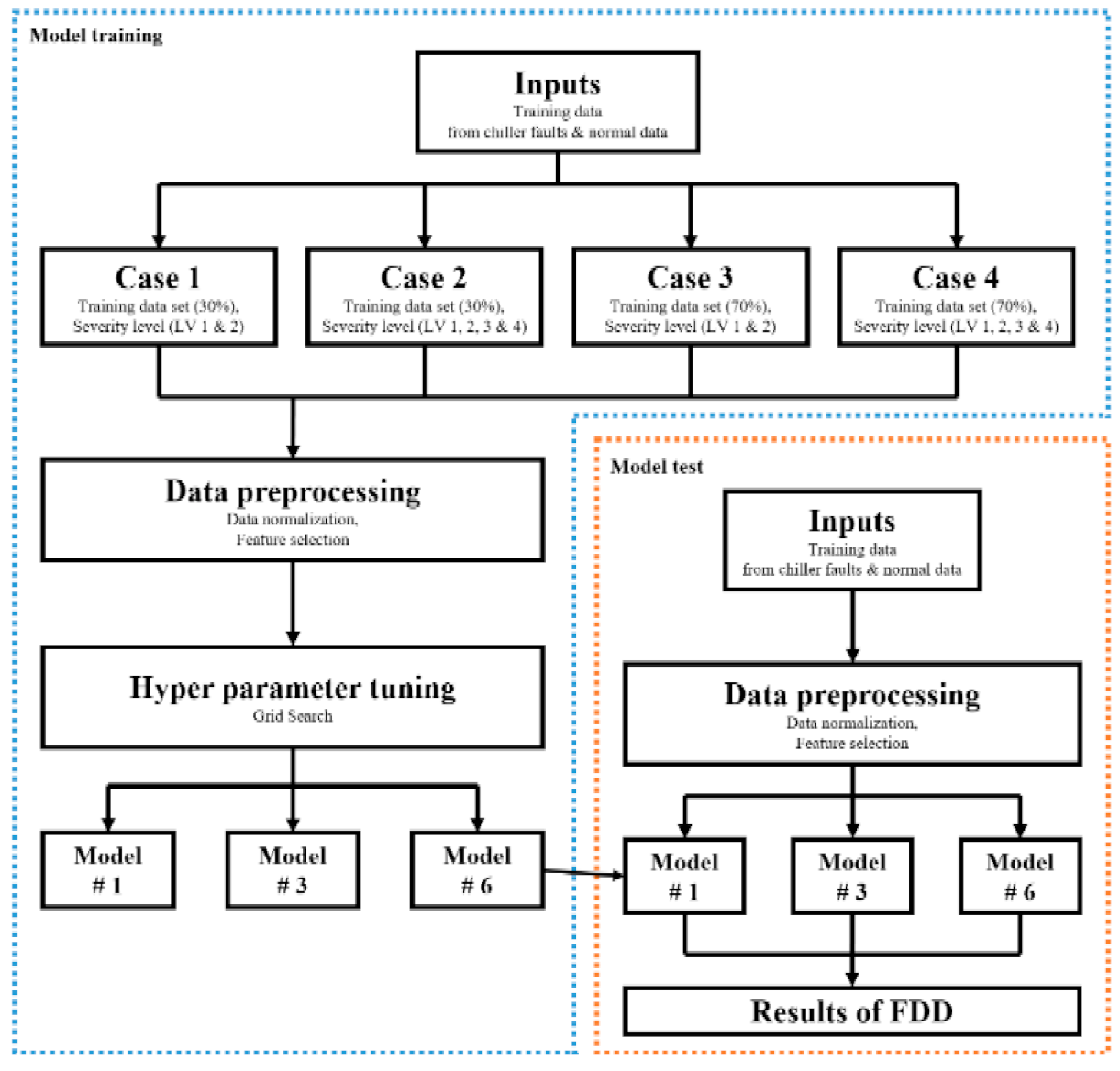

2. Methodology

2.1. Support Vector Machine

2.2. Logistic Regression

2.3. Random Forest

2.4. Extreme Gradient Boosting

3. System Description

4. Results

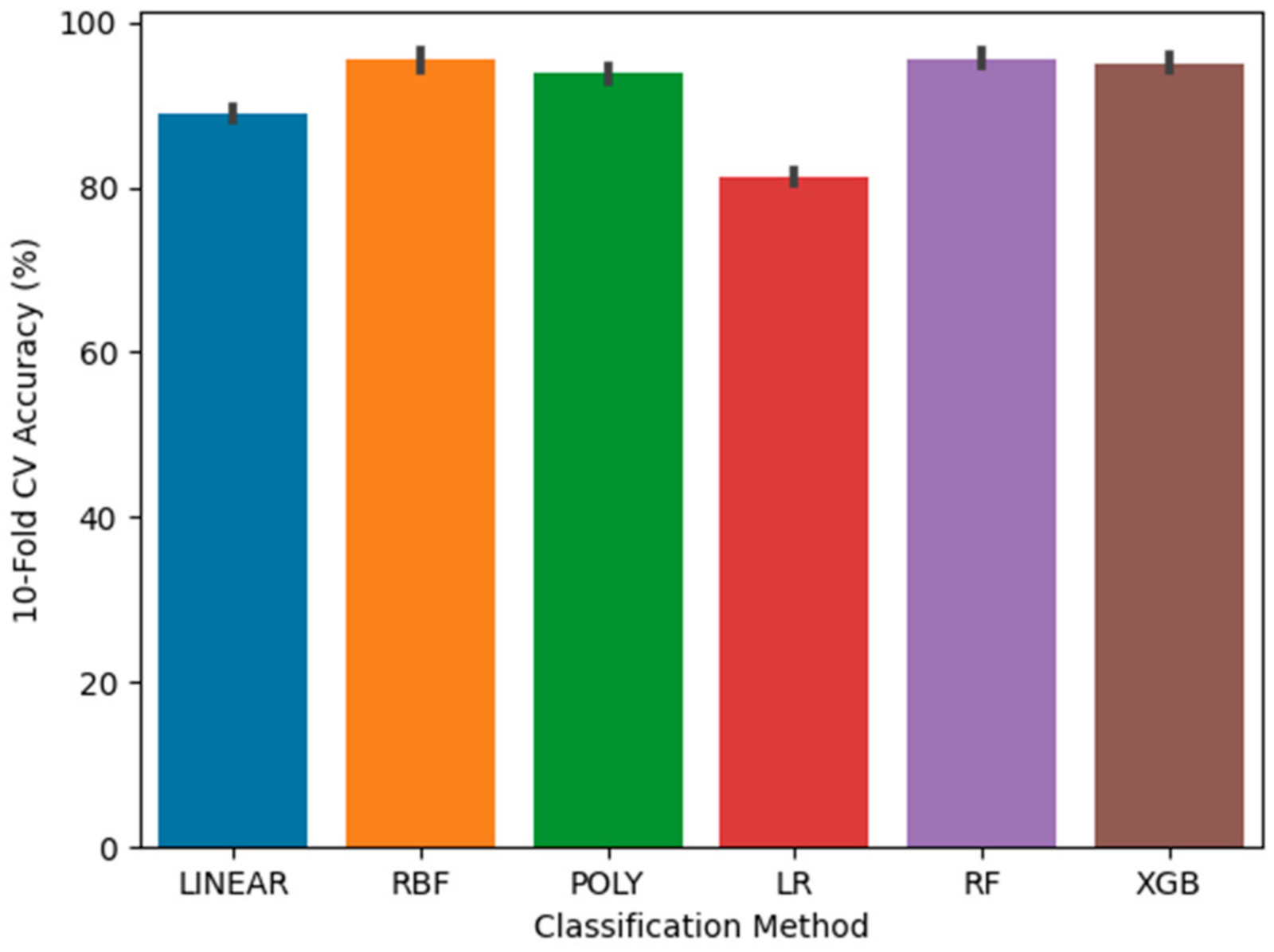

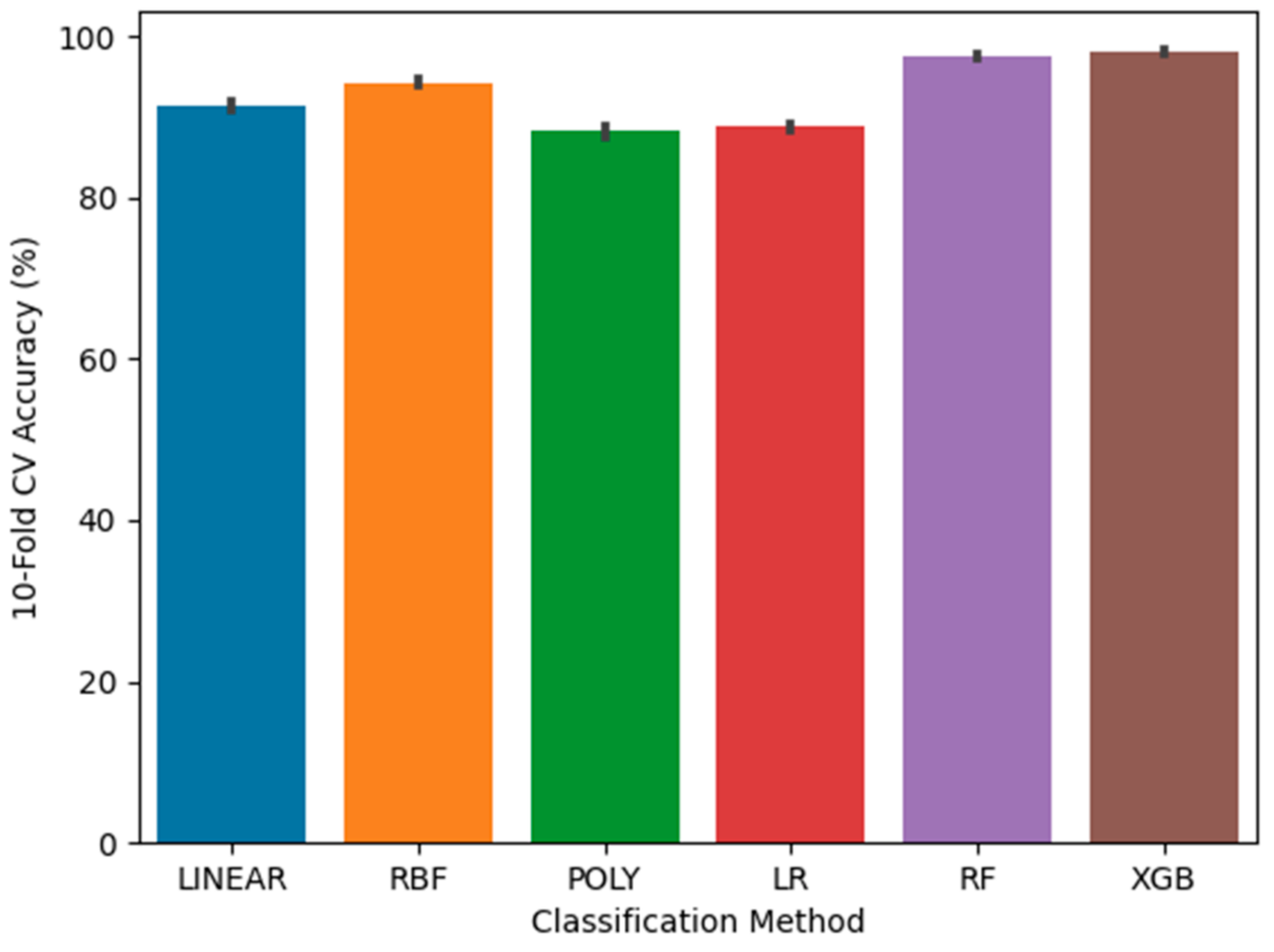

- Approach 1. First, we apply each classification method with default parameters to the chiller data of the ASHRAE 1043-RP Project and compare the 10-fold CV accuracy of the methods.

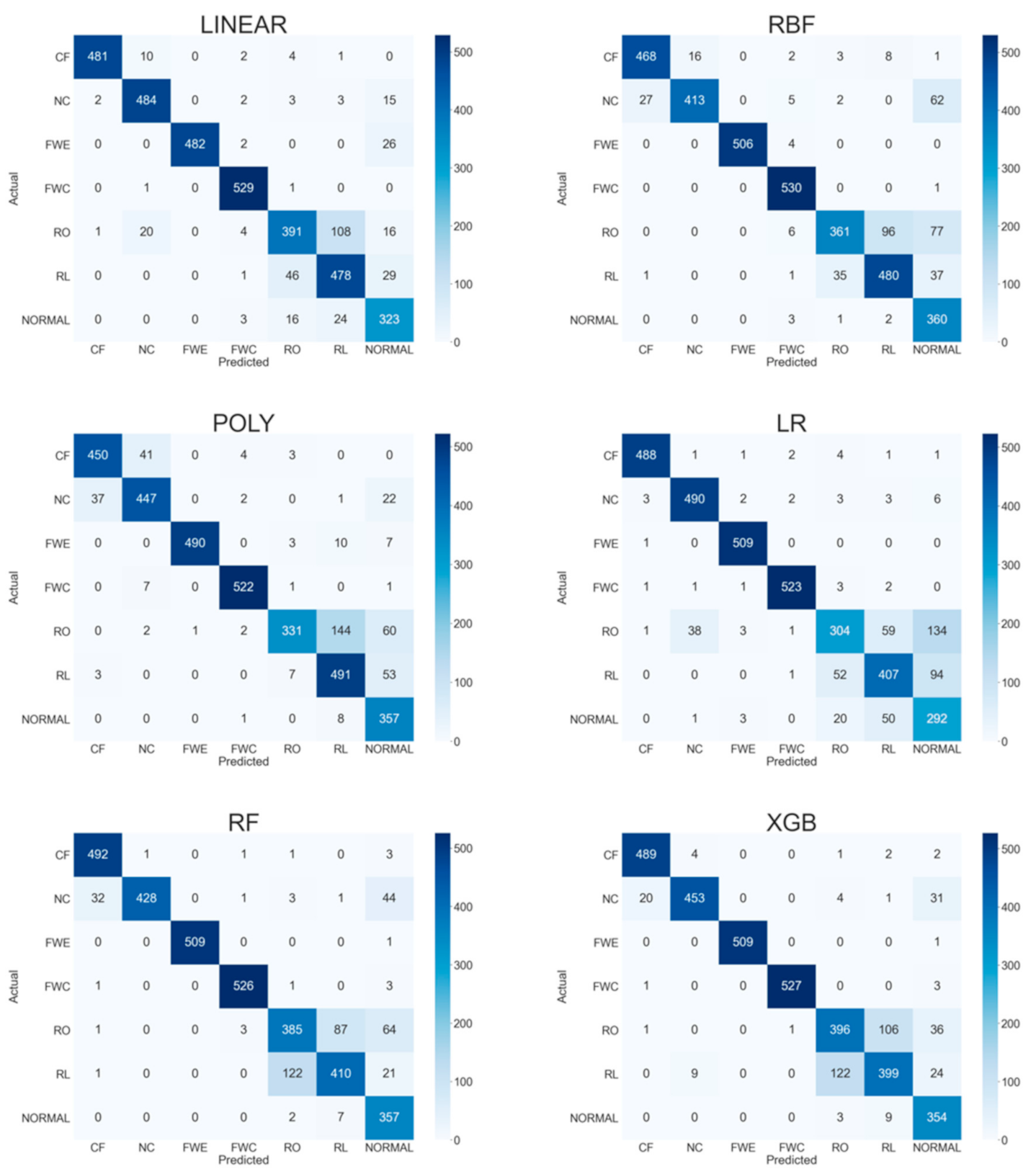

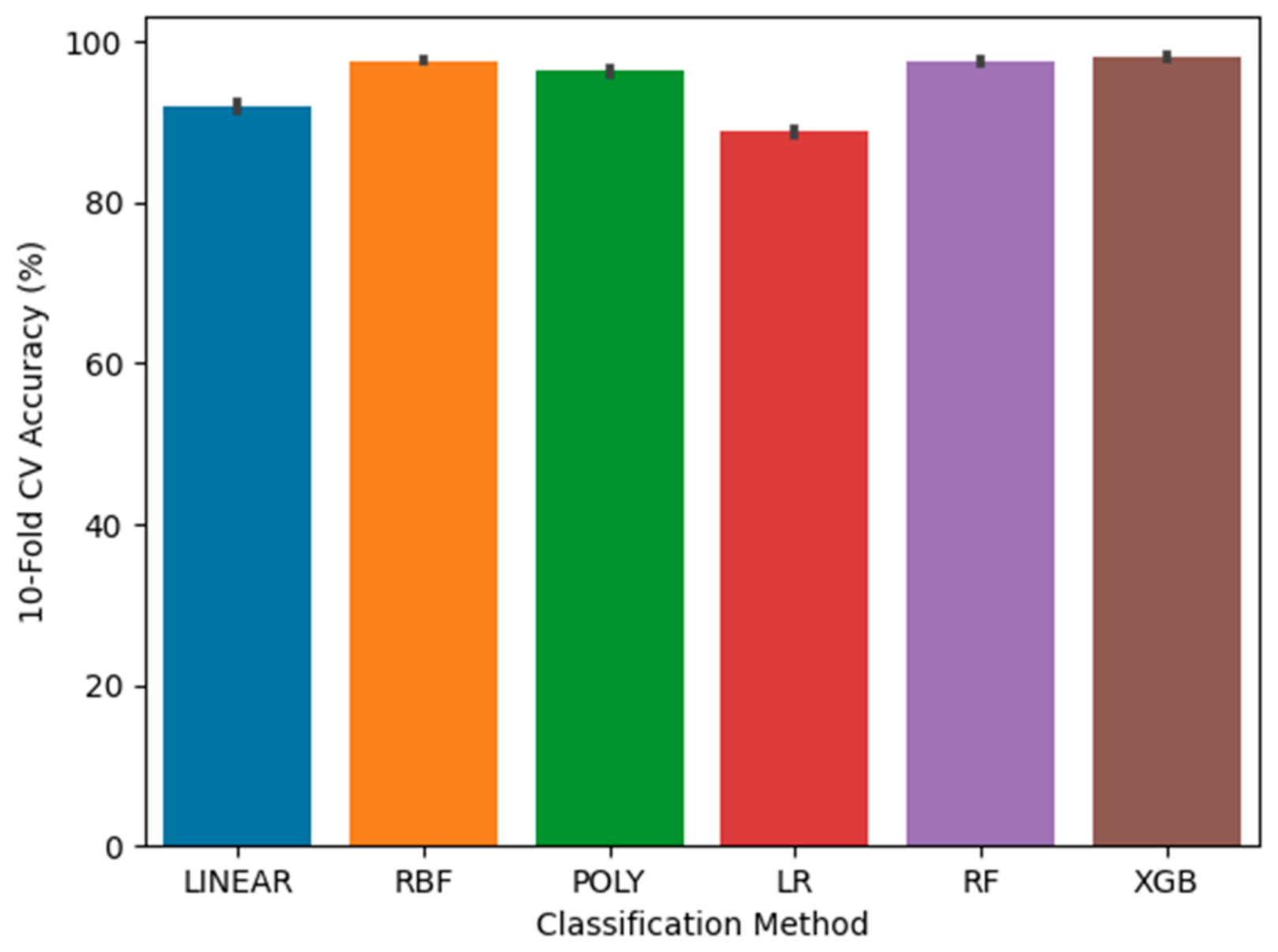

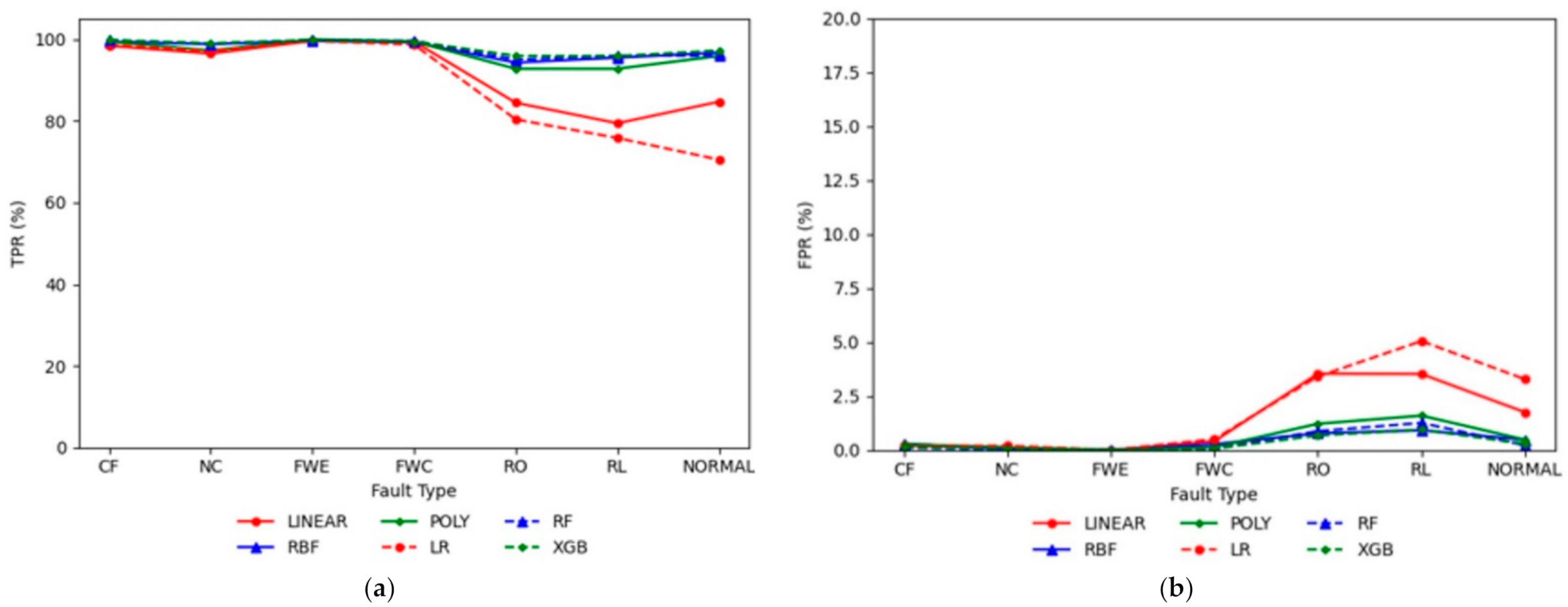

- Approach 2. Second, we apply each classification method with tuned parameters to the chiller data and compare the 10-fold CV accuracy of the methods. The test results are discussed based on the confusion matrix, TPR, and FPR.

- Approach 3. Finally, we determine the best classification method for each case based on the results of Approaches 1 and 2.
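The comparison in Approach 1 can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes scikit-learn, uses a synthetic data set from `make_classification` as a hypothetical stand-in for the ASHRAE 1043-RP chiller data (8 features, 7 classes), and omits XGB to avoid an extra dependency. The only non-default setting is `max_iter=1000` for logistic regression, chosen here to avoid convergence warnings.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.svm import SVC

# Hypothetical stand-in for the chiller data: 8 input features, 7 classes.
X, y = make_classification(
    n_samples=700, n_features=8, n_informative=6, n_redundant=0,
    n_classes=7, n_clusters_per_class=1, random_state=0,
)

# Classifiers with library defaults (names follow the labels used in the text).
models = {
    "Linear": SVC(kernel="linear"),
    "RBF": SVC(kernel="rbf"),
    "Poly": SVC(kernel="poly"),
    "LR": LogisticRegression(max_iter=1000),  # assumption: raised iteration cap
    "RF": RandomForestClassifier(random_state=0),
}

# 10-fold cross-validation; stratification keeps class proportions per fold.
cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=0)
cv_accuracy = {
    name: cross_val_score(model, X, y, cv=cv, scoring="accuracy").mean()
    for name, model in models.items()
}

# Report the mean CV accuracy, best method first.
for name, acc in sorted(cv_accuracy.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:>6}: {acc:.3f}")
```

The same loop serves Approach 2 if each default estimator is replaced by its tuned counterpart.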

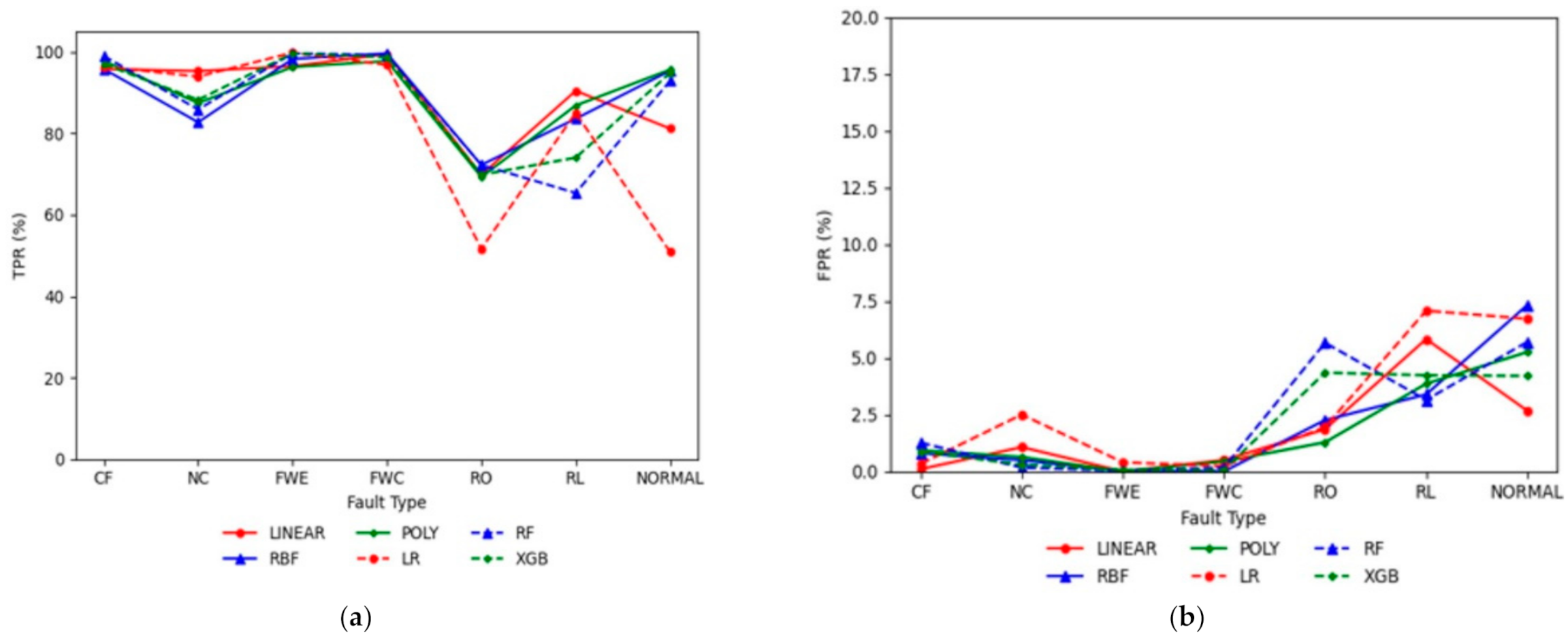

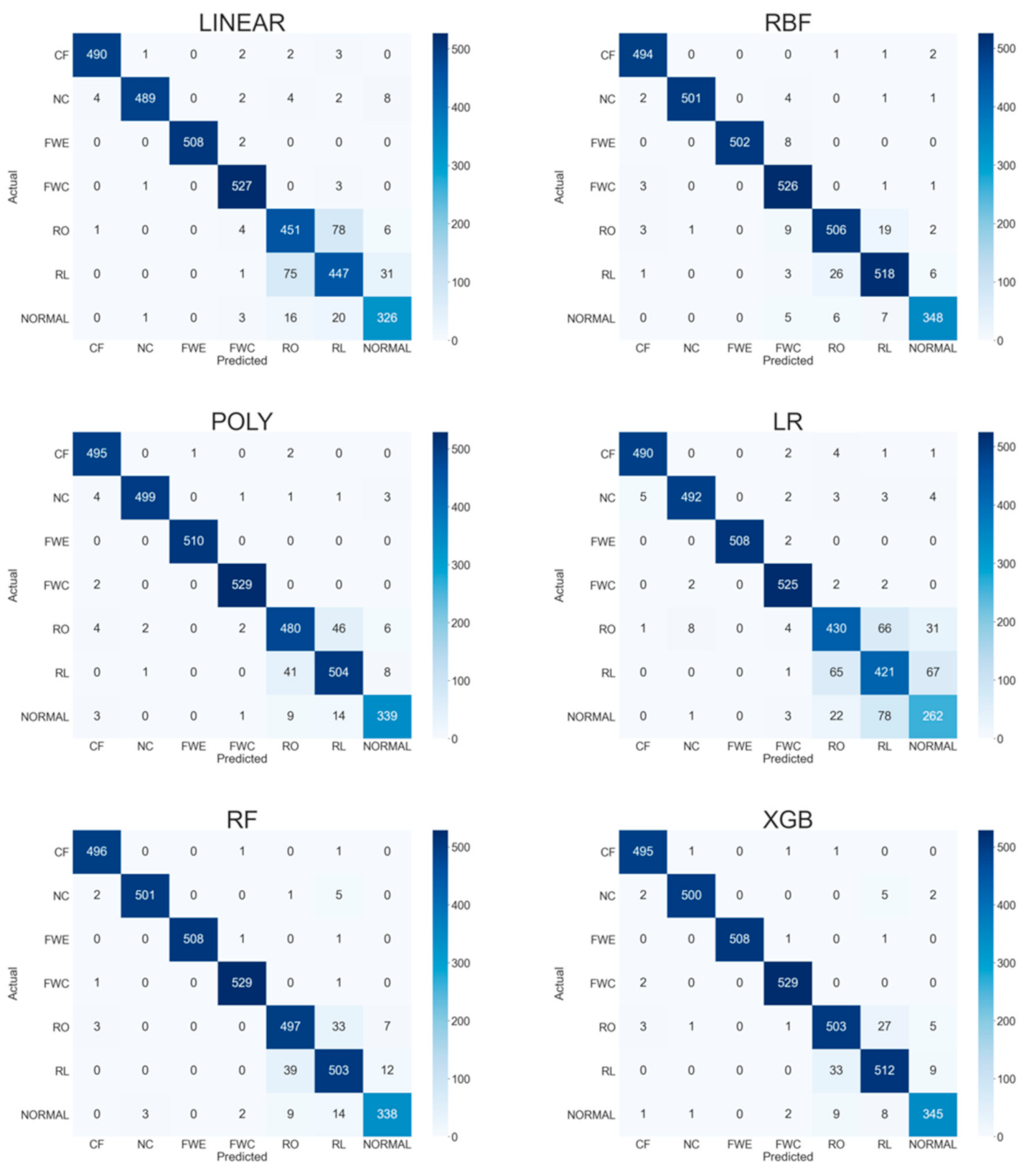

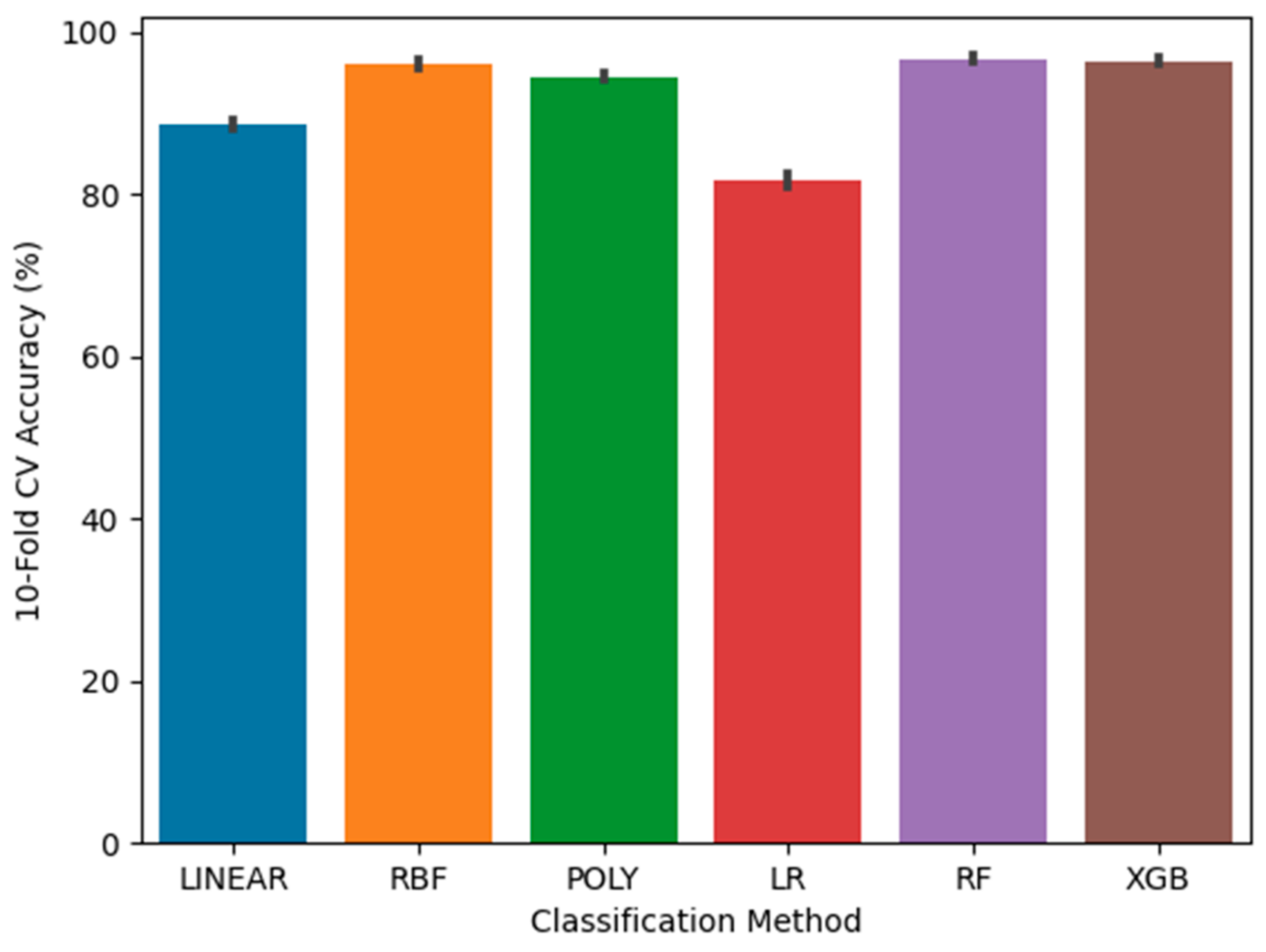

4.1. Case 1

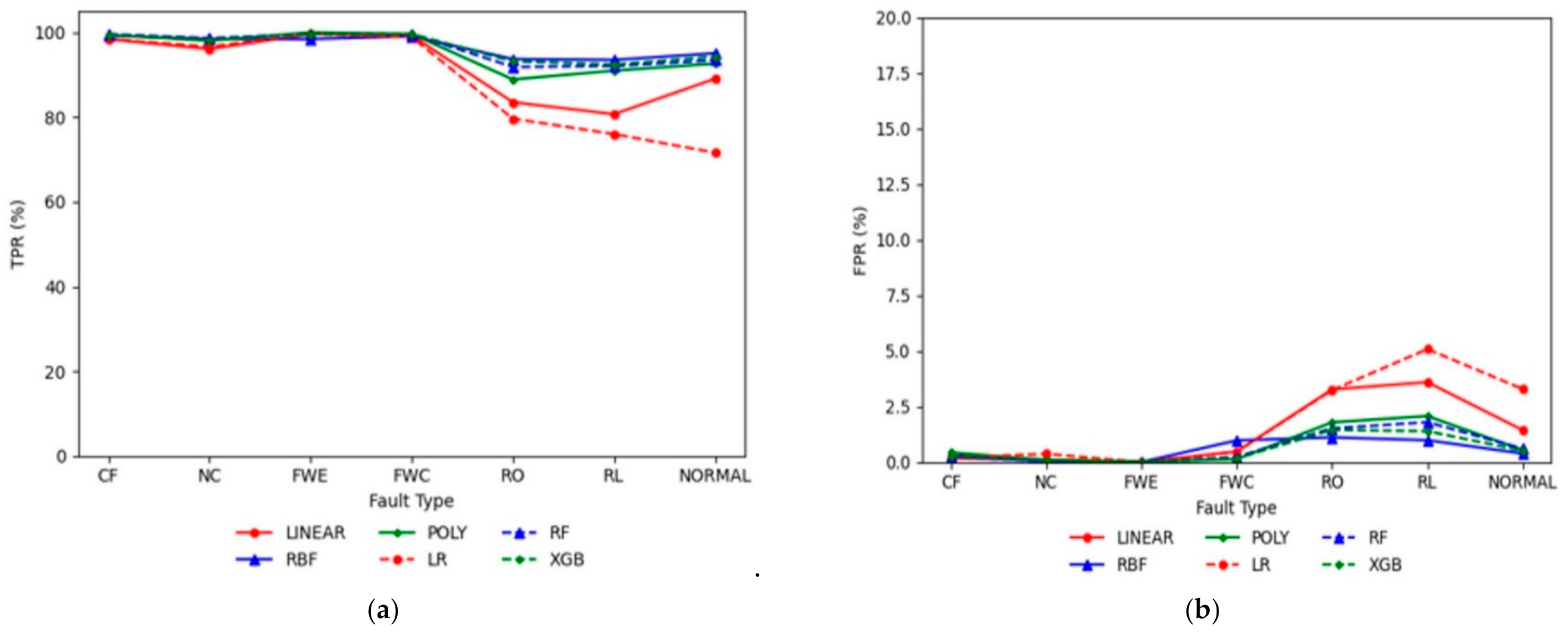

4.2. Case 2

4.3. Case 3

4.4. Case 4

5. Conclusions


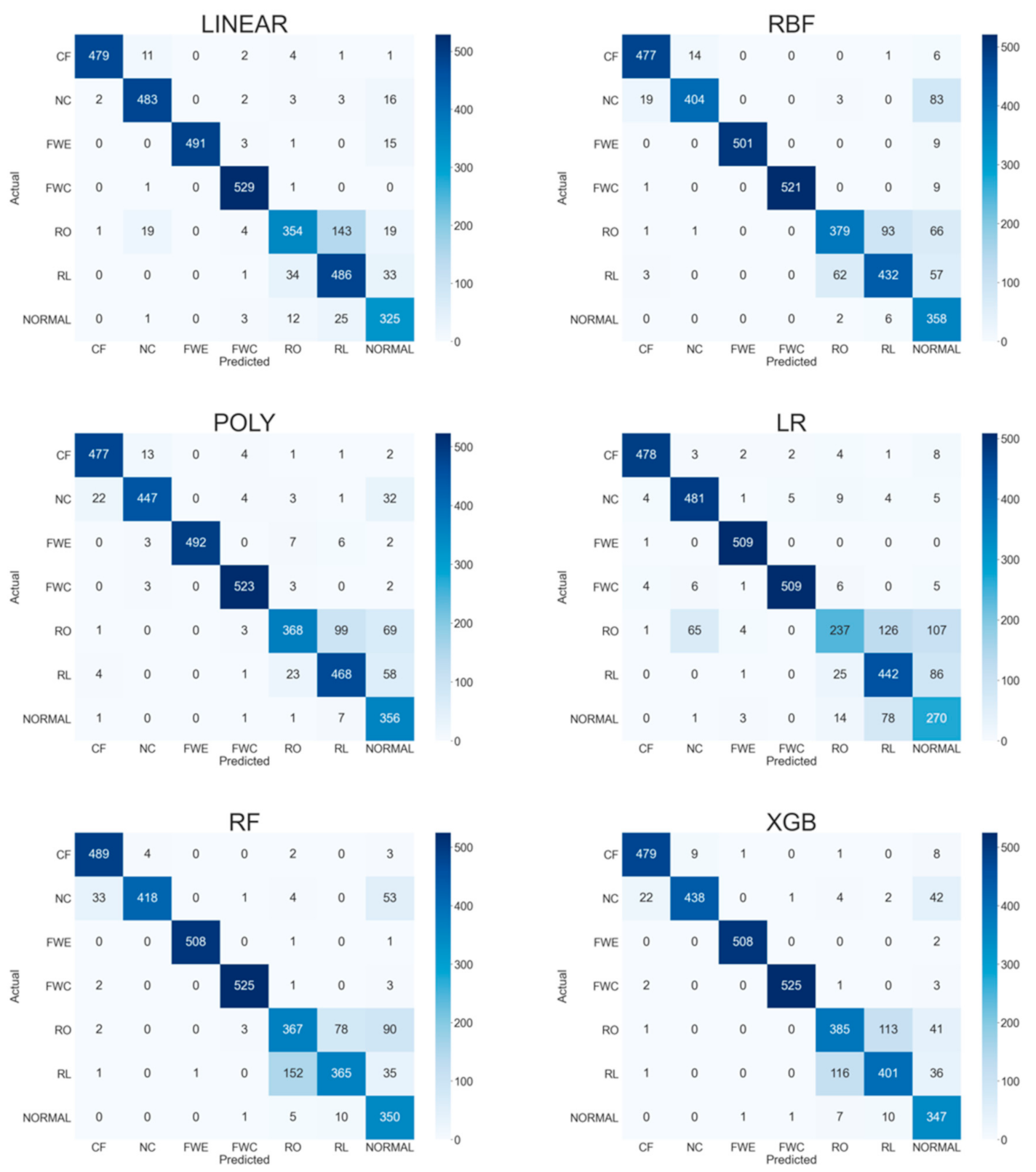

- Case 1: RBF and RF are the best classification methods, with average accuracy rates of 95.43% and 95.64%, respectively. For the local fault, the TPR values of Linear and LR are greater than 95%, and the FPR values of RBF, Poly, RF, and XGB are less than 2.0%. For fault detection, RBF, Poly, RF, and XGB predicted correctly, and for fault diagnostics, Linear and LR predicted correctly. For the system fault, the TPR and FPR values are poor for all the classification methods, and all of them predicted incorrectly in both fault detection and diagnostics.


- Case 2: XGB is the best classification method, with an average accuracy rate of 96.55%. For the local fault, the TPR values of all the classification methods are greater than 95%, and the FPR values of all the classification methods are lower than 2.0%. For fault detection and diagnostics, all the classification methods predicted correctly. For the system fault, the TPR values of RBF, Poly, RF, and XGB are greater than 90%, and their FPR values are less than 2.5%. For fault detection and diagnostics, RBF, Poly, RF, and XGB predicted correctly.


- Case 3: RF is the best classification method, with an average accuracy rate of 96.7%. For the local fault, the TPR values of Linear and LR are greater than 94%, and the FPR values of all the classification methods are lower than 2.5%. In fault detection, all the classification methods predicted correctly, and in fault diagnostics, Linear and LR predicted correctly. For the system fault, the TPR and FPR values of all the classification methods are poor.


- Case 4: XGB is the best classification method, with an average accuracy rate of 98.08%. For the local fault, the TPR values of all the classification methods are greater than 95%, and the FPR values of all the classification methods are ~0%, lower than those in Case 2. In fault detection and diagnostics, all the classification methods predicted correctly. For the system fault, the TPR values of RBF, Poly, RF, and XGB are greater than 94%, and their FPR values are less than 1.6%. In fault detection and diagnostics, RBF, Poly, RF, and XGB predicted correctly.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Nomenclature

| TP | True positive |

| TN | True negative |

| FP | False positive |

| FN | False negative |

| TPR | True positive rate |

| FPR | False positive rate |

| level for severity | |

| sign function | |

| weight vector for SVM method | |

| training data | |

| input vector for training data | |

| bias of hyperplane in SVM method | |

| control tradeoff in SVM method | |

| distance from decision boundary | |

| hyperparameter gamma in RBF and Polynomial methods | |

| degree of polynomial method | |

| parameter for polynomial method | |

| kernel function for input vector in SVM method | |

| sigmoid logistic function for logistic regression method | |

| output data for logistic regression method | |

| prediction value for XGB method | |

| regularization function for XGB method | |

| functional space for XGB method | |

| loss function for XGB method | |

| TEO | Temperature of evaporator water out |

| TCO | Temperature of condenser water out |

| FWC | Flow rate of condenser water |

| TRC | Saturated Refrigerant temperature in condenser |

| TR_dis | Refrigerant discharge temperature |

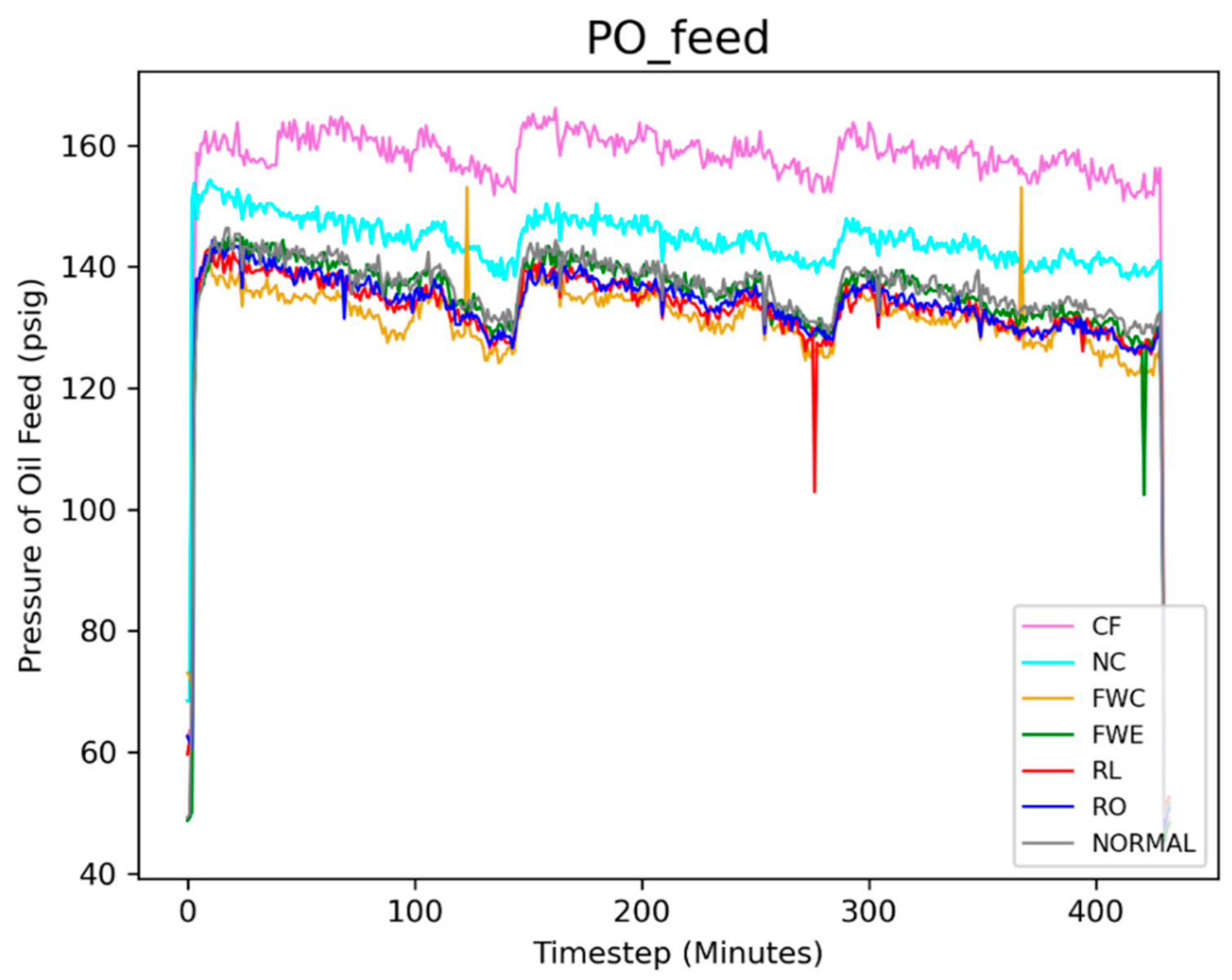

| PO_feed | Pressure of oil feed |

| VE | Evaporator valve position |

| TWI | Temperature of city water in |


| Actual Class \ Predicted Class | CF | NC | FWE | FWC | RO | RL | NORMAL |
|---|---|---|---|---|---|---|---|
| CF | TN | TN | TN | FP | TN | TN | TN |
| NC | TN | TN | TN | FP | TN | TN | TN |
| FWE | TN | TN | TN | FP | TN | TN | TN |
| FWC | FN | FN | FN | TP | FN | FN | FN |
| RO | TN | TN | TN | FP | TN | TN | TN |
| RL | TN | TN | TN | FP | TN | TN | TN |
| NORMAL | TN | TN | TN | FP | TN | TN | TN |
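The one-vs-rest reading of the confusion matrix above (here with FWC as the target class) leads directly to the TPR and FPR used in the results. The sketch below applies the standard definitions TPR = TP / (TP + FN) and FPR = FP / (FP + TN) to each class in turn. The counts in `cm` are hypothetical values invented for illustration and are not results from the paper; the function name `one_vs_rest_rates` is likewise an assumption.

```python
from typing import Dict, List, Tuple

LABELS = ["CF", "NC", "FWE", "FWC", "RO", "RL", "NORMAL"]

def one_vs_rest_rates(
    cm: List[List[int]], labels: List[str]
) -> Dict[str, Tuple[float, float]]:
    """Return {label: (TPR, FPR)} from a matrix with rows=actual, cols=predicted."""
    total = sum(sum(row) for row in cm)
    rates = {}
    for k, label in enumerate(labels):
        tp = cm[k][k]                           # target class predicted correctly
        fn = sum(cm[k]) - tp                    # target class predicted as another
        fp = sum(row[k] for row in cm) - tp     # other classes predicted as target
        tn = total - tp - fn - fp               # everything else
        tpr = tp / (tp + fn) if (tp + fn) else 0.0
        fpr = fp / (fp + tn) if (fp + tn) else 0.0
        rates[label] = (tpr, fpr)
    return rates

# Hypothetical counts: 100 samples per actual class.
cm = [
    [95, 0, 0, 5, 0, 0, 0],     # CF: 5 samples misclassified as FWC
    [0, 100, 0, 0, 0, 0, 0],    # NC
    [0, 0, 100, 0, 0, 0, 0],    # FWE
    [0, 0, 0, 90, 0, 0, 10],    # FWC: 10 samples misclassified as NORMAL
    [0, 0, 0, 0, 100, 0, 0],    # RO
    [0, 0, 0, 0, 0, 100, 0],    # RL
    [0, 0, 0, 0, 0, 0, 100],    # NORMAL
]

rates = one_vs_rest_rates(cm, LABELS)
for label, (tpr, fpr) in rates.items():
    print(f"{label:>6}: TPR={tpr:.3f}  FPR={fpr:.4f}")
```

With these illustrative counts, FWC has TP = 90, FN = 10, FP = 5, and TN = 595, so its TPR is 0.9 and its FPR is 5/600.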

| Fault Types | LV 1 | LV 2 | LV 3 | LV 4 | Methods |
|---|---|---|---|---|---|
| RO | 10% | 20% | 30% | 40% | Increasing the refrigerant charge by weight |
| RL | 10% | 20% | 30% | 40% | Reducing the refrigerant charge by weight |
| NC | 1% | 2% | 3% | 5% | Adding nitrogen by volume |
| FWE | 10% | 20% | 30% | 40% | Reducing water flow by percentage |
| FWC | 10% | 20% | 30% | 40% | Reducing water flow by percentage |
| CF | 12% | 20% | 30% | 45% | Plugging tubes by percentage |

| Dataset | Features |
|---|---|
| Input (x value) | TEO, TCO, FWC, TRC, TR_dis, PO_feed, VE, and TWI |
| Output (y value) | Fault types (RO, RL, NC, FWE, FWC, CF, and NORMAL) |

| Dataset | Case 1 | Case 2 | Case 3 | Case 4 |
|---|---|---|---|---|
| Training data | 1948 | 3507 | 4546 | 8183 |
| Test data | 3508 | 3508 | 3508 | 3508 |
| Total data | 5456 | 7015 | 8054 | 11,691 |

| Models | Hyperparameters | Range of Values | Optimal Values |
|---|---|---|---|
| Linear | Regularization parameter, C | [0.001, 0.01, …, 1000, 10,000] | 100 |
| RBF | Regularization parameter, C | [1, 10, 100, 1000, 10,000] | 100 |
| | Kernel coefficient, gamma | [0.0001, 0.001, 0.01, 0.1, 1] | 1 |
| Poly | Regularization parameter, C | [0.001, 0.01, …, 1000, 10,000] | 1000 |
| | Kernel coefficient, gamma | [0.0001, 0.001, 0.01, 0.1, 1] | 0.1 |
| LR | Inverse of regularization strength, C | [0.001, 0.01, …, 1000, 10,000] | 10 |
| RF | Maximum depth of the tree, max_depth | [10, 20, …, 80, 90, 100] | 80 |
| | Number of trees, n_estimators | [100, 200, …, 800, 900, 1000] | 1000 |
| XGB | Boosting learning rate, learning_rate | [0.001, 0.005, …, 0.1, 0.5, 1] | 1 |
| | Maximum depth of the tree, max_depth | [1, 2, 3, 4, 5, 6, 7, 8, 9, 10] | 7 |
| | Minimum sum of instance weight, min_child_weight | [1, 10, 100, 1000] | 1 |
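A search over the RBF ranges listed in the table above could look like the following sketch. The source does not state which search procedure was used, so the exhaustive grid search via scikit-learn's `GridSearchCV` is an assumption, as is the synthetic data set, which is a hypothetical stand-in for the chiller data. Only the candidate values for `C` and `gamma` are taken from the table.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, StratifiedKFold
from sklearn.svm import SVC

# Hypothetical stand-in for the chiller data: 8 input features, 7 classes.
X, y = make_classification(
    n_samples=350, n_features=8, n_informative=6, n_redundant=0,
    n_classes=7, n_clusters_per_class=1, random_state=0,
)

# Candidate values for the RBF kernel, copied from the hyperparameter table.
param_grid = {
    "C": [1, 10, 100, 1000, 10000],
    "gamma": [0.0001, 0.001, 0.01, 0.1, 1],
}

# Every (C, gamma) pair is scored by 10-fold CV accuracy.
search = GridSearchCV(
    SVC(kernel="rbf"),
    param_grid,
    cv=StratifiedKFold(n_splits=10, shuffle=True, random_state=0),
    scoring="accuracy",
)
search.fit(X, y)

print("best parameters:", search.best_params_)
print("best 10-fold CV accuracy:", round(search.best_score_, 3))
```

The other models in the table follow the same pattern with their own estimator and parameter names, for example `max_depth` and `n_estimators` for `RandomForestClassifier`.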

| Models | Hyperparameters | Range of Values | Optimal Values |
|---|---|---|---|
| Linear | Regularization parameter, C | [0.001, 0.01, …, 1000, 10,000] | 10 |
| RBF | Regularization parameter, C | [1, 10, 100, 1000, 10,000] | 1000 |
| | Kernel coefficient, gamma | [0.0001, 0.001, 0.01, 0.1, 1] | 1 |
| Poly | Regularization parameter, C | [0.001, 0.01, …, 1000, 10,000] | 1000 |
| | Kernel coefficient, gamma | [0.0001, 0.001, 0.01, 0.1, 1] | 0.1 |
| LR | Inverse of regularization strength, C | [0.001, 0.01, …, 1000, 10,000] | 1 |
| RF | Maximum depth of the tree, max_depth | [10, 20, …, 80, 90, 100] | 20 |
| | Number of trees, n_estimators | [100, 200, …, 800, 900, 1000] | 200 |
| XGB | Boosting learning rate, learning_rate | [0.001, 0.005, …, 0.1, 0.5, 1] | 0.5 |
| | Maximum depth of the tree, max_depth | [1, 2, 3, 4, 5, 6, 7, 8, 9, 10] | 7 |
| | Minimum sum of instance weight, min_child_weight | [1, 10, 100, 1000] | 1 |

| Models | Hyperparameters | Range of Values | Optimal Values |
|---|---|---|---|
| Linear | Regularization parameter, C | [0.001, 0.01, …, 1000, 10,000] | 100 |
| RBF | Regularization parameter, C | [1, 10, 100, 1000, 10,000] | 100 |
| | Kernel coefficient, gamma | [0.0001, 0.001, 0.01, 0.1, 1] | 1 |
| Poly | Regularization parameter, C | [0.001, 0.01, …, 1000, 10,000] | 100 |
| | Kernel coefficient, gamma | [0.0001, 0.001, 0.01, 0.1, 1] | 1 |
| LR | Inverse of regularization strength, C | [0.001, 0.01, …, 1000, 10,000] | 1 |
| RF | Maximum depth of the tree, max_depth | [10, 20, …, 80, 90, 100] | 50 |
| | Number of trees, n_estimators | [100, 200, …, 800, 900, 1000] | 600 |
| XGB | Boosting learning rate, learning_rate | [0.001, 0.005, …, 0.1, 0.5, 1] | 0.5 |
| | Maximum depth of the tree, max_depth | [1, 2, 3, 4, 5, 6, 7, 8, 9, 10] | 9 |
| | Minimum sum of instance weight, min_child_weight | [1, 10, 100, 1000] | 1 |

| Models | Hyperparameters | Range of Values | Optimal Values |
|---|---|---|---|
| Linear | Regularization parameter, C | [0.001, 0.01, …, 1000, 10,000] | 10,000 |
| RBF | Regularization parameter, C | [1, 10, 100, 1000, 10,000] | 1000 |
| | Kernel coefficient, gamma | [0.0001, 0.001, 0.01, 0.1, 1] | 1 |
| Poly | Regularization parameter, C | [0.001, 0.01, …, 1000, 10,000] | 10 |
| | Kernel coefficient, gamma | [0.0001, 0.001, 0.01, 0.1, 1] | 1 |
| LR | Inverse of regularization strength, C | [0.001, 0.01, …, 1000, 10,000] | 1 |
| RF | Maximum depth of the tree, max_depth | [10, 20, …, 80, 90, 100] | 40 |
| | Number of trees, n_estimators | [100, 200, …, 800, 900, 1000] | 100 |
| XGB | Boosting learning rate, learning_rate | [0.001, 0.005, …, 0.1, 0.5, 1] | 0.5 |
| | Maximum depth of the tree, max_depth | [1, 2, 3, 4, 5, 6, 7, 8, 9, 10] | 8 |
| | Minimum sum of instance weight, min_child_weight | [1, 10, 100, 1000] | 1 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kim, I.; Kim, W. Development and Validation of a Data-Driven Fault Detection and Diagnosis System for Chillers Using Machine Learning Algorithms. Energies 2021, 14, 1945. https://doi.org/10.3390/en14071945
