Data-Driven Voltage Prognostic for Solid Oxide Fuel Cell System Based on Deep Learning

Abstract

1. Introduction

2. Experimental Scheme and Data Analysis

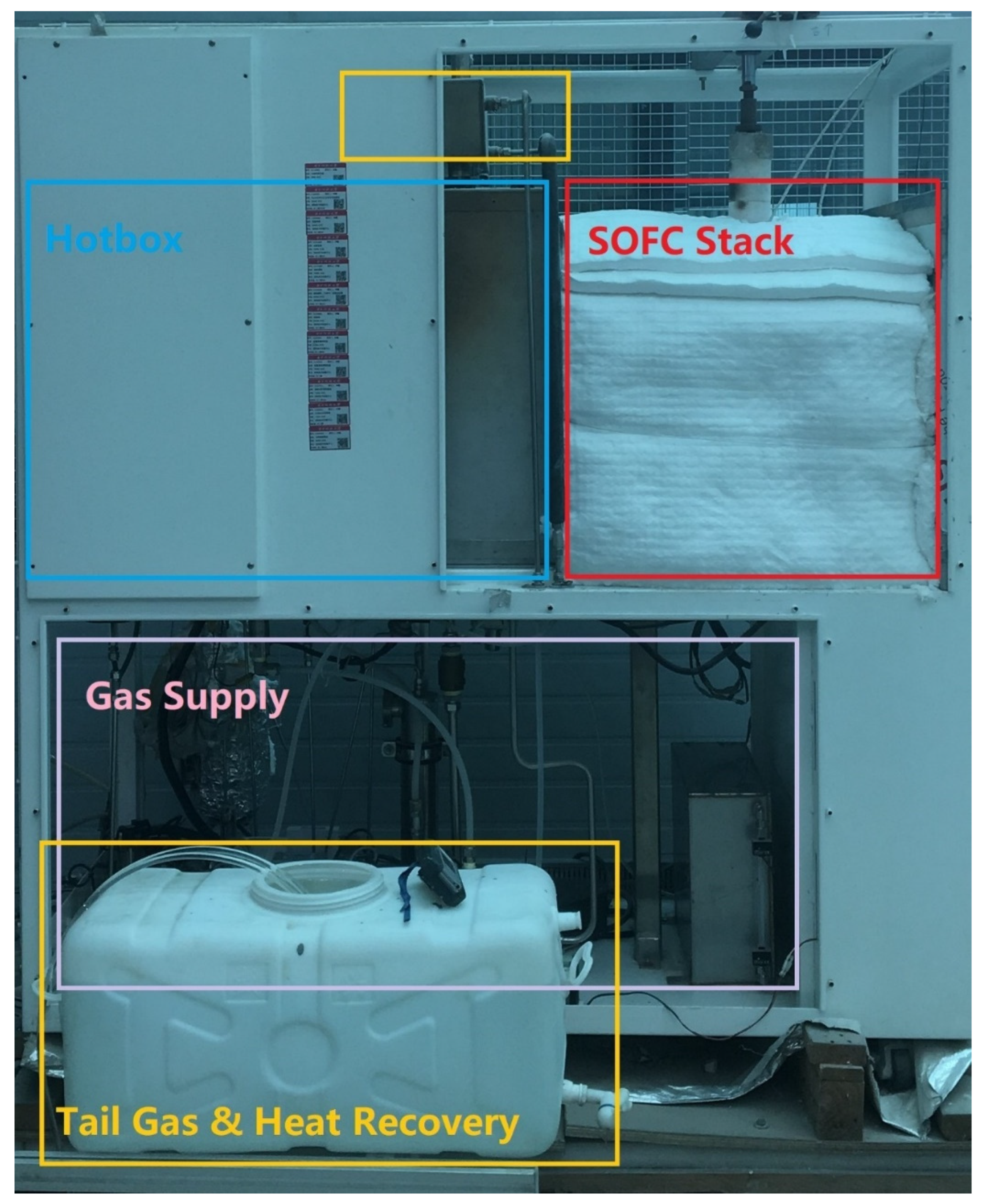

2.1. System Structure

2.2. Experimental Scheme and Data Analysis

- The stack anode inlet temperature showed high-frequency jitter between 150,000 and 300,000 s (Figure 4b);

- The reformer temperature fluctuated slightly throughout the operation (Figure 4c);

- After 600,000 s, the heat-exchanger cathode inlet temperature dropped suddenly and significantly, even though the gas supply did not change appreciably and only the gas pressure fluctuated (Figure 4c);

- The exhaust combustion chamber temperature rose and fell steeply, and its inlet temperature jittered at high frequency between 100,000 and 300,000 s (Figure 4c).
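The observations above amount to locating intervals of high-frequency jitter in logged temperature signals. A minimal sketch of one way to flag such intervals, assuming uniformly sampled readings, is shown below: first differences suppress slow drift, and windows whose standard deviation exceeds a threshold are marked and merged into spans. The function name `flag_jitter` and the `window` and `threshold` values are hypothetical; the text does not describe how the jitter was identified.

```python
import statistics
from typing import List, Tuple


def flag_jitter(times: List[float], values: List[float],
                window: int = 5, threshold: float = 1.0) -> List[Tuple[float, float]]:
    """Hypothetical helper: return (start, end) time spans in which the rolling
    population standard deviation of first differences exceeds `threshold`."""
    # First differences emphasize fast changes and remove slow drift.
    diffs = [b - a for a, b in zip(values, values[1:])]
    spans: List[Tuple[float, float]] = []
    for i in range(len(diffs) - window + 1):
        if statistics.pstdev(diffs[i:i + window]) <= threshold:
            continue
        # diffs[i:i + window] spans the samples values[i] .. values[i + window].
        start, end = times[i], times[i + window]
        if spans and start <= spans[-1][1]:
            spans[-1] = (spans[-1][0], end)  # merge with the previous span
        else:
            spans.append((start, end))
    return spans


# Toy signal (not data from the paper): flat, then alternating +/-5, then flat.
times = list(range(60))
values = [800.0] * 20 + [800.0 + (5.0 if k % 2 else -5.0) for k in range(20)] + [800.0] * 20
print(flag_jitter(times, values))
```

Because each flag covers a whole window, a reported span can extend up to one window beyond the jitter itself on each side.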

3. Prognostic Method for the Degradation of the SOFC System

3.1. Neural Network

3.1.1. Recurrent Neural Network

3.1.2. Long Short-Term Memory

3.1.3. Gated Recurrent Unit

3.2. RNN-Based Encoder–Decoder

3.3. Data Processing

3.4. Prognostic Method Framework

- Raw data from short-term degradation experiments on the SOFC system were collected and pre-processed by data culling, feature selection, and normalization, among other steps.

- The processed data were split chronologically: the first 7500 min formed the training set and the last 7500 min the test set, with 20% of the training set randomly selected as the validation set.

- The architecture of the encoder–decoder LSTM/GRU was selected. Since there were four input features, the input layer had four nodes, and the hidden layer had 32 nodes. A fully connected layer of 10 nodes was placed between the hidden layer and the output layer. Because the model output was the stack voltage, the output layer had a single node.

- The training hyperparameters were determined, including the time step, batch size, and number of epochs.

- The optimizer and loss function were selected, and the model was trained on the training set. The predicted test-set voltage was then compared with the measured values to evaluate the model.
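The data-preparation steps above can be sketched as follows. This is a minimal pure-Python illustration under stated assumptions, not the authors' implementation: one sample per minute (so 7500 samples correspond to 7500 min), min-max normalization fitted on the training portion only, and windows of `time_step` consecutive feature rows that each predict the stack voltage at the following step. All function names and the default `time_step=10` are hypothetical; the actual time step is not given in this section.

```python
import random
from typing import List, Sequence, Tuple

Row = List[float]


def fit_min_max(rows: Sequence[Row]) -> Tuple[Row, Row]:
    """Per-feature minimum and maximum, computed on training rows only."""
    cols = list(zip(*rows))
    return [min(c) for c in cols], [max(c) for c in cols]


def apply_min_max(rows: Sequence[Row], lo: Row, hi: Row) -> List[Row]:
    # Scale each feature with the training bounds; constant features map to 0.0.
    return [[(x - l) / (h - l) if h > l else 0.0 for x, l, h in zip(r, lo, hi)]
            for r in rows]


def make_windows(features: List[Row], target: List[float], time_step: int):
    """Pair each run of `time_step` feature rows with the next target value."""
    xs, ys = [], []
    for i in range(len(features) - time_step):
        xs.append(features[i:i + time_step])
        ys.append(target[i + time_step])
    return xs, ys


def prepare(features: List[Row], voltage: List[float], n_train: int = 7500,
            time_step: int = 10, val_frac: float = 0.2, seed: int = 0):
    # Chronological split: first n_train samples for training, the rest for testing.
    train_f, test_f = features[:n_train], features[n_train:]
    train_v, test_v = voltage[:n_train], voltage[n_train:]
    lo, hi = fit_min_max(train_f)
    train_f, test_f = apply_min_max(train_f, lo, hi), apply_min_max(test_f, lo, hi)
    x_train, y_train = make_windows(train_f, train_v, time_step)
    x_test, y_test = make_windows(test_f, test_v, time_step)
    # Randomly hold out val_frac of the training windows as the validation set.
    idx = list(range(len(x_train)))
    random.Random(seed).shuffle(idx)
    n_val = int(len(idx) * val_frac)
    val_idx, fit_idx = idx[:n_val], idx[n_val:]
    return ([x_train[i] for i in fit_idx], [y_train[i] for i in fit_idx],
            [x_train[i] for i in val_idx], [y_train[i] for i in val_idx],
            x_test, y_test)


# Synthetic stand-in for four features over 15,000 one-minute samples.
features = [[float(t), float(t % 7), 1.0, float(2 * t)] for t in range(15000)]
voltage = [0.8 - 1e-6 * t for t in range(15000)]
fit_x, fit_y, val_x, val_y, test_x, test_y = prepare(features, voltage)
```

Fitting the normalization bounds on the training portion alone keeps test-set statistics out of training; test values may therefore fall slightly outside [0, 1].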

4. Results and Discussion

4.1. Evaluation Criteria

4.2. Results of the LSTM-Based Model

4.3. Results of the GRU-Based Model

5. Conclusions

- The results show that the proposed encoder–decoder model achieves high prediction accuracy under realistic fuel cell operating conditions. The encoder–decoder LSTM and encoder–decoder GRU models had test-set MSEs of 0.014966 and 0.015121, respectively, whereas the plain LSTM and GRU models had corresponding values of 0.017050 and 0.017456, indicating that the encoder–decoder RNN performed better.

- The proposed model retained some ability to track large changes in the data. When the training data varied less, the model's predictions were more accurate and reliable than those of existing work.

- The predictive performance of the proposed model can be further tested by varying the sliding time step and the number of input sequences, so that it can be adapted to other SOFC systems and even to other types of fuel cell systems.
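The size of the improvement cited in the first conclusion can be checked directly from the test-set MSE values in the results table. The snippet below computes the relative MSE reduction of each encoder–decoder model over its plain counterpart; the helper name `relative_reduction` is illustrative.

```python
# Test-set MSE values as listed in the results table
test_mse = {
    "LSTM": 0.017050,
    "Encoder–Decoder LSTM": 0.014966,
    "GRU": 0.017456,
    "Encoder–Decoder GRU": 0.015121,
}


def relative_reduction(baseline: float, candidate: float) -> float:
    """Fraction by which `candidate` lowers the error relative to `baseline`."""
    return (baseline - candidate) / baseline


lstm_gain = relative_reduction(test_mse["LSTM"], test_mse["Encoder–Decoder LSTM"])
gru_gain = relative_reduction(test_mse["GRU"], test_mse["Encoder–Decoder GRU"])
print(f"LSTM: {lstm_gain:.1%}, GRU: {gru_gain:.1%}")  # prints: LSTM: 12.2%, GRU: 13.4%
```

Both encoder–decoder variants lower the test-set MSE by roughly 12% to 13% relative to the plain recurrent models.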

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Features | | |
|---|---|---|
| Output voltage | Cathode air pressure | Reformer temperature |
| Output current | Bypass air pressure | Anode inlet temperature |
| Cathode air-flow rate | Anode input pressure | Cathode inlet temperature |
| Bypass air-flow rate | Cathode input pressure | Anode outlet temperature |
| Methane flow rate | Anode output pressure | Cathode outlet temperature |
| Input methane pressure | Cathode output pressure | Burner temperature |
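The framework in Section 3.4 uses four input features, but the criterion for choosing them from the candidate signals listed above is not given here. One common filter approach, shown only as a hypothetical sketch and not as the paper's method, ranks each candidate by its absolute Pearson correlation with the output voltage and keeps the top `k`. The function names and toy data are illustrative. Correlation ranking captures only linear dependence and can retain redundant signals, so it is a starting point at best.

```python
import math
from typing import Dict, List


def pearson(x: List[float], y: List[float]) -> float:
    """Pearson correlation coefficient; returns 0.0 for a constant input."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0  # a constant signal carries no linear information
    return sxy / math.sqrt(sxx * syy)


def rank_features(candidates: Dict[str, List[float]], target: List[float],
                  k: int = 4) -> List[str]:
    """Hypothetical filter: the k candidates with the largest |r| against target."""
    scores = {name: abs(pearson(col, target)) for name, col in candidates.items()}
    return sorted(scores, key=scores.get, reverse=True)[:k]


# Toy series (not measured data); the target stands in for the output voltage.
target = [float(i) for i in range(10)]
candidates = {
    "Output current": [2.0 * i for i in range(10)],
    "Reformer temperature": [-1.0 * i for i in range(10)],
    "Burner temperature": [float(i % 2) for i in range(10)],
    "Bypass air pressure": [1.0] * 10,
}
print(rank_features(candidates, target, k=2))
```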

| Metric | LSTM (training) | Encoder–Decoder LSTM (training) | GRU (training) | Encoder–Decoder GRU (training) | LSTM (test) | Encoder–Decoder LSTM (test) | GRU (test) | Encoder–Decoder GRU (test) |
|---|---|---|---|---|---|---|---|---|
| MSE | 0.013956 | 0.011820 | 0.013129 | 0.011521 | 0.017050 | 0.014966 | 0.017456 | 0.015121 |
| MAE | 0.082145 | 0.059687 | 0.078887 | 0.057455 | 0.094432 | 0.084220 | 0.097976 | 0.086195 |
| R² | 0.963418 | 0.981198 | 0.964254 | 0.982704 | 0.936420 | 0.964618 | 0.933110 | 0.961665 |
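Section 4.1 lists the evaluation criteria, and the table above reports MSE, MAE, and R². The sketch below uses the standard textbook definitions of these metrics; this is an assumption, since the exact formulas are not reproduced here, and the function names are illustrative.

```python
import math
from typing import Sequence


def mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean squared error, the square root of MSE."""
    return math.sqrt(mse(y_true, y_pred))


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r2(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Toy example (not data from the paper)
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.0, 2.0, 3.0, 5.0]
print(mse(y_true, y_pred), mae(y_true, y_pred), rmse(y_true, y_pred))  # prints: 0.25 0.25 0.5
```

RMSE is never smaller than MAE on the same data, which gives a quick consistency check when reading tabulated error values.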

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, M.; Wu, J.; Chen, Z.; Dong, J.; Peng, Z.; Xiong, K.; Rao, M.; Chen, C.; Li, X. Data-Driven Voltage Prognostic for Solid Oxide Fuel Cell System Based on Deep Learning. Energies 2022, 15, 6294. https://doi.org/10.3390/en15176294
