Weighting Key Performance Indicators of Smart Local Energy Systems: A Discrete Choice Experiment

Abstract

1. Introduction

1.1. Multi-Criteria Decision Making

- Structure the decision problem and identify output;

- Specify the relevant criteria or indicators;

- Measure the performance of alternatives;

- Score the alternatives according to their impact on the criteria;

- Weight the individual criteria;

- Rank the alternatives based on scores and weights;

- Apply the outputs to support decision making.

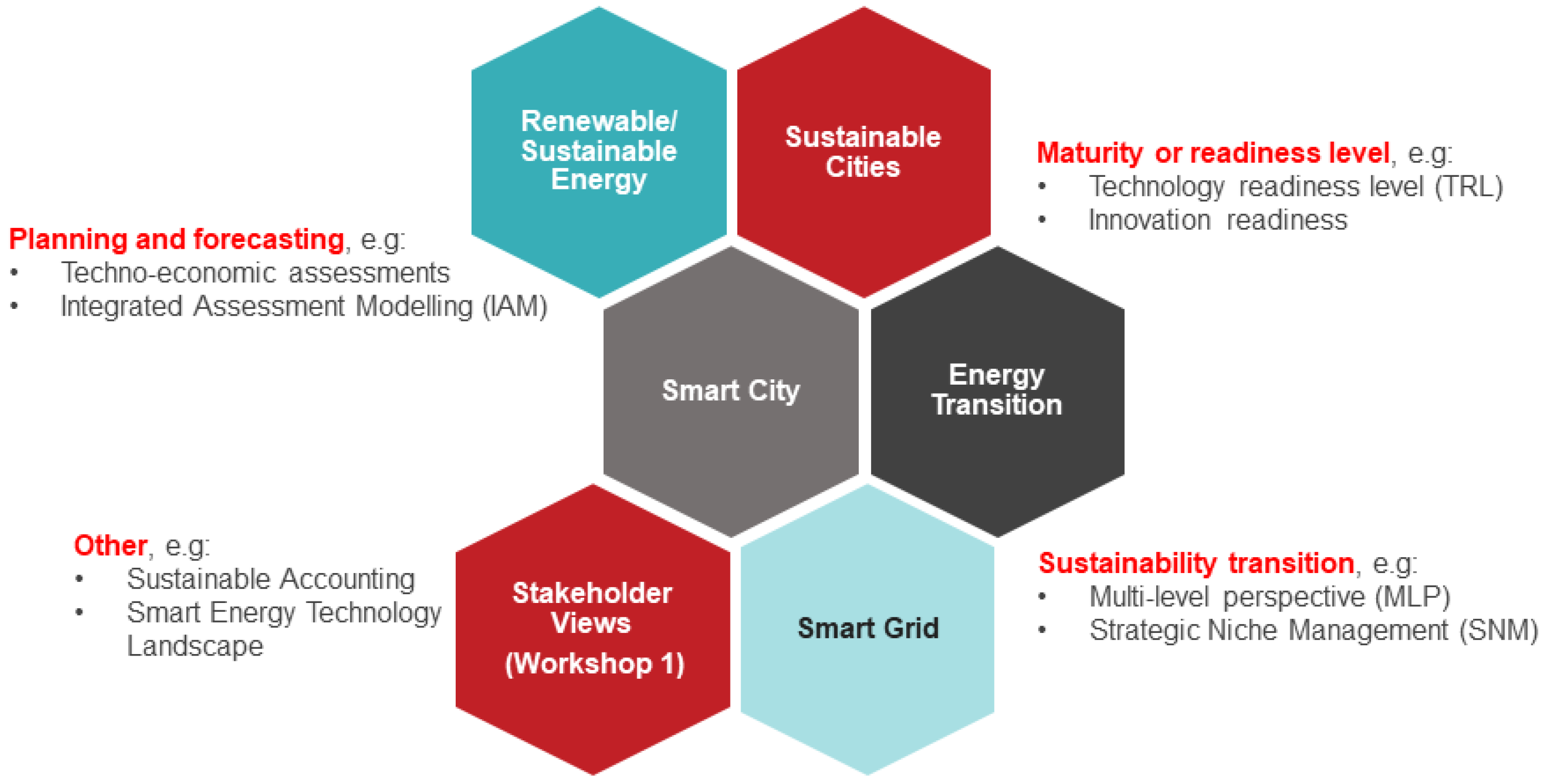
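The score, weight and rank steps listed above can be illustrated with a simple additive (weighted-sum) value model. The sketch below is a hypothetical illustration under stated assumptions, not the procedure used in this study. The function name `rank_alternatives`, the criteria, the scores (on an assumed 0 to 100 scale) and the weights are all invented.

```python
def rank_alternatives(scores, weights):
    """Rank alternatives with an additive (weighted-sum) value model.

    scores:  {alternative: {criterion: score on an assumed 0-100 scale}}
    weights: {criterion: weight}; the weights must sum to 1
    Returns a list of (alternative, total) pairs, best first.
    """
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    # Total value of each alternative = sum of weight x score over criteria.
    totals = {
        alt: sum(weights[c] * s for c, s in crit_scores.items())
        for alt, crit_scores in scores.items()
    }
    # Rank: highest total first.
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical weights and scores, invented for illustration only.
    weights = {"cost": 0.5, "emissions": 0.3, "acceptance": 0.2}
    scores = {
        "Option A": {"cost": 80, "emissions": 40, "acceptance": 60},
        "Option B": {"cost": 50, "emissions": 90, "acceptance": 70},
    }
    for alt, total in rank_alternatives(scores, weights):
        print(f"{alt}: {total:.1f}")
```

With these invented numbers, Option B (66.0) ranks above Option A (64.0): its weaker cost score is outweighed by its stronger emissions and acceptance scores, which is exactly the kind of trade-off the weights are meant to capture.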

1.2. Criteria for a Smart Local Energy System

- Maturity or Readiness Level: Considering the readiness or maturity of a product and/or service, including the Technology Readiness Level, a de facto standard assessment tool used in aerospace, defence and technology [29]; the Technology Performance Level, used to assess wave energy converters; or the Energy Transition Readiness Index, used to assess and compare electricity flexibility markets and determine their preparedness for energy transformation [30].

- Planning and Forecasting: Incorporating multiple criteria, such as the technical, economic, environmental and social influences of a product and/or service, for planning or forecasting. Examples include integrated assessment modelling (MCDA, optimisation models and software tools) for evaluating sustainable energy systems [31], and the techno-ecological synergy (TES) framework, implemented to improve the sustainability of solar energy across four environments: land, food, water and built-up systems [32].

- Sustainability Transition: Considering the sustainability transition of products, services, processes, people and overall networked systems in their environments across multiple objectives. These include socio-technical transition frameworks, namely the multi-level perspective, which considers the alignment of the incumbent regime, radical “niche innovations” and the “socio-technical landscape” [33], and strategic niche management, which facilitates the creation of protected spaces for experimenting with the co-evolution of technology, user practices and regulatory structures [34].

- Other: Miscellaneous tools and indicators that have been used to measure the smartness and/or sustainability of homes, the electricity grid [35], cities [36,37,38,39] and integrated community energy systems (ICES) [40], as well as procedures for the sustainable accounting of six capitals (financial, manufactured, intellectual, social and relationship, human and natural) to assess the long-term viability of an organisation's business model [41], which could also be applied to the assessment of SLES.

- Data Management: Data gathering and security, and the provision of ICT and data infrastructure, including issues such as ICT accessibility and penetration.

- Technical Performance: Technical performance across all energy vectors (heat, power and transport), including indicators such as resilience, efficiency and innovation.

- Business and Economics: Financial and economic performance, such as benefit-to-cost ratio, rate of return, financing, job creation and socio-economic impacts.

- Governance: The political and regulatory environment, including alignment with existing regulations and their interface with policy.

- People and Living: The impact on end users (education, ICT skills, engagement or acceptance) and the associated benefits for communities and social interactions (equity, housing conditions, culture or behaviour).

- Environment: Environmental performance, namely the impacts on climate change, human health and resource availability, and the use of waste energy.

2. Methodology

2.1. The PAPRIKA Method

2.2. Overview of Surveys

2.3. Main Survey

- Technical Performance;

- Data Management;

- Governance;

- People and Living;

- Business and Economics;

- Environment.

- Poor;

- Fair;

- Good;

- Very good;

- Excellent.
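In an additive points system of the kind PAPRIKA produces, each level of each criterion carries a point value, the lowest level is usually set to zero, and a criterion's weight is the point value of its highest level relative to the sum of all the highest-level values. The sketch below assumes that structure for the six themes and the five-level scale above. The point values in `POINTS` and the helper names `criterion_weights` and `total_score` are hypothetical; in the survey, point values are derived from participants' pairwise choices rather than set by hand.

```python
# Hypothetical additive points system: six KPI themes, each rated on the
# five levels used in the main survey. Point values are invented; in
# PAPRIKA they are derived from a participant's pairwise choices.
LEVELS = ["Poor", "Fair", "Good", "Very good", "Excellent"]

POINTS = {
    "Technical Performance": [0, 6, 11, 15, 18],
    "Data Management": [0, 3, 6, 8, 10],
    "Governance": [0, 4, 8, 11, 14],
    "People and Living": [0, 5, 10, 15, 19],
    "Business and Economics": [0, 4, 9, 13, 16],
    "Environment": [0, 8, 15, 20, 23],
}


def criterion_weights(points):
    """Weight = highest-level points / sum of highest-level points over all criteria."""
    for criterion, values in points.items():
        # Assumes levels are ordered worst to best and the worst level scores zero.
        if values[0] != 0 or any(b < a for a, b in zip(values, values[1:])):
            raise ValueError(f"points for {criterion} must start at 0 and be non-decreasing")
    top_total = sum(values[-1] for values in points.values())
    return {criterion: values[-1] / top_total for criterion, values in points.items()}


def total_score(points, ratings):
    """Total score = sum of the points for the level achieved on each criterion."""
    return sum(points[criterion][LEVELS.index(level)] for criterion, level in ratings.items())


if __name__ == "__main__":
    for criterion, weight in criterion_weights(POINTS).items():
        print(f"{criterion}: {weight:.0%}")
    example = {
        "Technical Performance": "Good",
        "Data Management": "Fair",
        "Governance": "Good",
        "People and Living": "Very good",
        "Business and Economics": "Poor",
        "Environment": "Excellent",
    }
    print("Total score:", total_score(POINTS, example))
```

Because the highest-level values here were chosen to sum to 100, each weight can be read directly as a percentage, and the example alternative scores 60 out of a possible 100.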

2.4. Thematic Surveys

- Greenhouse Gas Emissions or Fuel Poverty:
  - Increased;
  - Remains the same;
  - Decreased;
  - Significantly decreased;
  - Eliminated (for Greenhouse Gas Emissions, this was termed “Achieves net zero (eliminated)”).

- Revenue from Decarbonisation Activities:
  - None;
  - £;
  - ££;
  - £££;
  - ££££.

- Local Renewable Energy Generation:
  - None;
  - A little;
  - Moderate;
  - Quite a lot;
  - Extensive.

- Competitive Energy Pricing (note the four-point scale):
  - More expensive energy;
  - Parity with today’s prices;
  - Slightly cheaper energy;
  - Significantly cheaper energy.
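PAPRIKA asks participants to choose between pairs of hypothetical alternatives defined on two criteria at a time, where each alternative is better on one criterion and worse on the other (an "undominated" pair). Under an additive model, choosing x over y implies that the points for x's two levels exceed those for y's, which becomes an inequality on the unknown point values. The sketch below enumerates every such pair for two of the thematic-survey criteria, with levels listed from worst to best as above; the function name `undominated_pairs` is hypothetical. PAPRIKA uses transitivity to infer many of these answers, so participants are asked only a subset.

```python
from itertools import combinations

# Levels listed from worst to best, as in the thematic survey scales above.
LOCAL_RENEWABLE_GENERATION = ["None", "A little", "Moderate", "Quite a lot", "Extensive"]
COMPETITIVE_ENERGY_PRICING = [
    "More expensive energy",
    "Parity with today's prices",
    "Slightly cheaper energy",
    "Significantly cheaper energy",
]


def undominated_pairs(levels_a, levels_b):
    """Return pairs (x, y) of two-criterion alternatives in which neither dominates.

    x is better than y on criterion A but worse on criterion B, so choosing
    between them requires a trade-off. Each unordered pair appears once.
    """
    pairs = []
    for worse_a, better_a in combinations(range(len(levels_a)), 2):
        for worse_b, better_b in combinations(range(len(levels_b)), 2):
            x = (levels_a[better_a], levels_b[worse_b])
            y = (levels_a[worse_a], levels_b[better_b])
            pairs.append((x, y))
    return pairs


if __name__ == "__main__":
    pairs = undominated_pairs(LOCAL_RENEWABLE_GENERATION, COMPETITIVE_ENERGY_PRICING)
    print(len(pairs))  # C(5, 2) * C(4, 2) = 10 * 6 = 60
    print(pairs[0])
```

Pairing a five-level scale with the four-point pricing scale gives 10 × 6 = 60 trade-off questions for this one pair of criteria, which is why inferring answers through transitivity matters when a theme has six or seven criteria.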

3. Results and Discussion

3.1. Main Survey

3.2. Thematic Surveys

4. Conclusions and Policy Implications

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Taxonomy for Smart Local Energy System Assessment [12]

| No. | Theme | Sub-Theme | Previous Application |
|---|---|---|---|
| 1 | Data Security | Security | Smart-grid [35], Smart city [39] |
| | | Privacy | Smart-grid [35] |
| | | Trust | Smart-grid [35], Stakeholder consultation (1) [47] |
| 2 | Data Connectivity | Technology Enablers | Energy Transition [30] |
| | | ICT Infrastructure | Smart city [38,39], Smart-grid [35] |
| | | ICT Management | Smart city [38,39] |
| | | ICT Accessibility | Smart city [38,39] |
| 3 | Technical | Renewable fraction | RE [48], RE-Hybrid [49] |
| | | Reliability | Stakeholder consultation (1) [47], Solar-energy [32], Smart energy [50], Smart-grid [35], Sustainable energy [51], Wave & tidal energy [52] |
| | | Resilience | Stakeholder consultation (1) [47], Solar-energy [32], Smart-grid [35], Sustainable micro-grid [31] |
| | | Flexibility | Stakeholder consultation (1) [47], Smart-grid [35] |
| | | Scalability | Smart-grid [35], Sustainable micro-grid [31] |
| | | Efficiency | Energy [53], Stakeholder consultation (1) [47], Energy storage [54], Smart city [39], Smart energy [50], Smart-grid [35], Solar-energy [32] |
| | | Maturity | Energy storage [54], Sustainable micro-grid [31] |
| | | Lifespan | Energy [53], Sustainable micro-grid [31] |
| | | Grid accessibility | Energy Transition [30] |
| | | Innovation adaptation | Energy Transition [30], Smart city [39], Smart-grid [35], Sustainable energy [51] |
| 4 | Transport | Management | Smart city [38,39] |
| | | EV Infrastructure | Energy Transition [30], Smart city [38,39] |
| 5 | Economics | CBR | RE-Hybrid [49] |
| | | Cost | Energy [53], RE-Hybrid [49], Smart energy [50], Sustainable micro-grid [31], Waste management [55], Wave & tidal energy [52] |
| | | IRR | RE [48], RE-Hybrid [49] |
| | | LCOE | RE [48], RE-Hybrid [49], Energy [53] |
| | | Payback period | RE-Hybrid [49] |
| 6 | Business/Finance | Regulation | Energy Transition [30] |
| | | Compensation structures | Energy Transition [30] |
| | | Competitive cost | Stakeholder consultation (1) [47] |
| | | Investable | Stakeholder consultation (1) [47], Waste management [55], Wave & tidal energy [52] |
| | | Employment | RE-Hybrid [56], Smart city [39], Sustainable energy [51], Sustainable micro-grid [31] |
| 7 | Governance | Transparency | Energy Transition [30], Smart-grid [35] |
| | | Socioeconomic impact | Energy Transition [30] |
| | | Integrated management | Smart city [38] |
| | | Regulatory alignment | Energy Transition [30], Smart energy [50], Sustainable energy [51] |
| 8 | People | Education & Gender | Smart city [38,39], Smart-grid [35], Sustainable micro-grid [31], Waste management [55] |
| | | ICT Skills | Stakeholder consultation (1) [47], Smart energy [50] |
| | | Participation | Stakeholder consultation (1) [47], Smart city [38,39], Sustainable energy [51] |
| | | Acceptance | Wave & tidal energy [52], Energy storage [54], Smart energy [50], Sustainable micro-grid [51] |
| | | User friendliness | Stakeholder consultation (1) [47], Smart energy [50], Smart-grid [50] |
| | | Inclusion | Smart-grid [35], Waste management [55], Smart city [39], Sustainable energy [51] |
| | | Consumer protection | Smart energy [50], Smart-grid [35] |
| 9 | Living | Housing | Smart city [39] |
| | | Equity | Stakeholder consultation (1) [47], Solar-energy [32], Smart city [38], Smart-grid [35], Sustainable energy [51] |
| | | Culture | Smart city [38,39], Smart-grid [35], Energy storage [54] |
| | | Livelihood | Smart-grid [35] |
| | | Convenience | Smart city [39] |
| 10 | Environment | Decarbonisation, Ecosystem, Human health, Resources, Other | Stakeholder consultation (1) [47], RE [48], RE-Hybrid [49], Smart city [38,39], Smart energy [50], Smart-grid [35], Solar-energy [32], Sustainable energy [51], Sustainable micro-grid [31], Waste management [55], Wave & tidal energy [52], LCIA ReCiPe model |

References

1. Rae, C.; Kerr, S.; Maroto-Valer, M.M. Upscaling smart local energy systems: A review of technical barriers. Renew. Sustain. Energy Rev. 2020, 131, 110020.

2. Ford, R.; Maidment, C.; Vigurs, C.; Fell, M.J.; Morris, M. Smart local energy systems (SLES): A framework for exploring transition, context, and impacts. Technol. Forecast. Soc. Chang. 2021, 166, 120612.

3. Francis, C.; Sierra Costa, A.; Thomson, R.C.; Ingram, D.M. EnergyREV Workshop Report SLES Benefits—Optimizing Performance Indicators; EnergyREV Internal Report: Unpublished; University of Edinburgh: Edinburgh, UK, 2020.

4. Desa, U. Transforming Our World: The 2030 Agenda for Sustainable Development. 2016. Available online: https://documents-dds-ny.un.org/doc/UNDOC/GEN/N15/291/89/PDF/N1529189.pdf (accessed on 15 May 2022).

5. Heo, E.; Kim, J.; Boo, K.J. Analysis of the assessment factors for renewable energy dissemination program evaluation using fuzzy AHP. Renew. Sustain. Energy Rev. 2010, 14, 2214–2220.

6. Štreimikienė, D.; Šliogerienė, J.; Turskis, Z. Multi-criteria analysis of electricity generation technologies in Lithuania. Renew. Energy 2016, 85, 148–156.

7. Resniova, E.; Ponomarenko, T. Sustainable Development of the Energy Sector in a Country Deficient in Mineral Resources: The Case of the Republic of Moldova. Sustainability 2021, 13, 3261.

8. Barney, A.; Petersen, U.R.; Polatidis, H. Energy scenarios for the Faroe Islands: A MCDA methodology including local social perspectives. Sustain. Future 2022, 4, 100092.

9. Vassoney, E.; Mammoliti Mochet, A.; Comoglio, C. Use of multicriteria analysis (MCA) for sustainable hydropower planning and management. J. Environ. Manag. 2017, 196, 48–55.

10. Bączkiewicz, A.; Kizielewicz, B. Towards Sustainable Energy Consumption Evaluation in Europe for Industrial Sector Based on MCDA Methods. Procedia Comput. Sci. 2021, 192, 1334–1346.

11. Sahabuddin, M.; Khan, I. Multi-criteria decision analysis methods for energy sector’s sustainability assessment: Robustness analysis through criteria weight change. Sustain. Energy Technol. Assess. 2021, 47, 101380.

12. Francis, C.; Sierra Costa, A.; Thomson, R.C.; Ingram, D.M. Developing the framework for multi-criteria assessment of smart local energy systems. In Proceedings of the Energy Evaluation Europe 2021 Conference, London, UK, 29 June–1 July 2020; p. 13.

13. Francis, C.; Sierra Costa, A.; Thomson, R.C.; Ingram, D.M. Developing a Multi-Criteria Assessment Framework for Smart Local Energy Systems; EnergyREV Outputs; University of Strathclyde Publishing: Glasgow, UK, 2020; ISBN 978-1-909522-63-3.

14. Naegler, T.; Becker, L.; Buchgeister, J.; Hauser, W.; Hottenroth, H.; Junne, T.; Lehr, U.; Scheel, O.; Schmidt-Scheele, R.; Simon, S.; et al. Integrated Multidimensional Sustainability Assessment of Energy System Transformation Pathways. Sustainability 2021, 13, 5217.

15. Schmidt-Scheele, R.; Hauser, W.; Scheel, O.; Minn, F.; Becker, L.; Buchgeister, J.; Hottenroth, H.; Junne, T.; Lehr, U.; Naegler, T.; et al. Sustainability assessments of energy scenarios: Citizens’ preferences for and assessments of sustainability indicators. Energy Sustain. Soc. 2022, 12, 41.

16. Hottenroth, H.; Sutardhio, C.; Weidlich, A.; Tietze, I.; Simon, S.; Hauser, W.; Naegler, T.; Becker, L.; Buchgeister, J.; Junne, T.; et al. Beyond climate change. Multi-attribute decision making for a sustainability assessment of energy system transformation pathways. Renew. Sustain. Energy Rev. 2022, 156, 111996.

17. Schleich, J.; Tu, G.; Faure, C.; Guetlein, M.C. Would you prefer to rent rather than own your new heating system? Insights from a discrete choice experiment among owner-occupiers in the UK. Energy Policy 2021, 158, 112523.

18. Chen, Q. District or distributed space heating in rural residential sector? Empirical evidence from a discrete choice experiment in South China. Energy Policy 2021, 148, 111937.

19. Azarova, V.; Cohen, J.; Friedl, C.; Reichl, J. Designing local renewable energy communities to increase social acceptance: Evidence from a choice experiment in Austria, Germany, Italy, and Switzerland. Energy Policy 2019, 132, 1176–1183.

20. Van Oijstaeijen, W.; Van Passel, S.; Back, P.; Cools, J. The politics of green infrastructure: A discrete choice experiment with Flemish local decision-makers. Ecol. Econ. 2022, 199, 107493.

21. Ananda, J.; Herath, G. A critical review of multi-criteria decision making methods with special reference to forest management and planning. Ecol. Econ. 2009, 68, 2535–2548.

22. Hansen, P.; Devlin, N. Multi-criteria decision analysis (MCDA) in healthcare decision-making. In Oxford Research Encyclopedia of Economics and Finance; Oxford University Press: Oxford, UK, 2019; ISBN 9780190625979.

23. Daim, T.U.; Li, X.; Kim, J.; Simms, S. Evaluation of energy storage technologies for integration with renewable electricity: Quantifying expert opinions. Environ. Innov. Soc. Transit. 2012, 3, 29–49.

24. Hajkowicz, S.; Higgins, A. A comparison of multiple criteria analysis techniques for water resource management. Eur. J. Oper. Res. 2008, 184, 255–265.

25. Yang, W.; Zhang, J. Assessing the performance of gray and green strategies for sustainable urban drainage system development: A multi-criteria decision-making analysis. J. Clean. Prod. 2021, 293, 126191.

26. Zanakis, S.H.; Solomon, A.; Wishart, N.; Dublish, S. Multi-attribute decision making: A simulation comparison of select methods. Eur. J. Oper. Res. 1998, 107, 507–529.

27. Yi, L.; Li, T.; Zhang, T. Optimal investment selection of regional integrated energy system under multiple strategic objectives portfolio. Energy 2021, 218, 119409.

28. Ford, R.; Maidment, C.; Fell, M.; Vigurs, C.; Morris, M. A Framework for Understanding and Conceptualising Smart Local Energy Systems; EnergyREV; University of Strathclyde Publishing: Strathclyde, UK, 2019.

29. Mankins, J.C. Technology Readiness Level—A White Paper; NASA, Advanced Concepts Office, Office of Space Access and Technology: Washington, DC, USA, 1995; Available online: https://www.researchgate.net/publication/247705707_Technology_Readiness_Level_-_A_White_Paper (accessed on 3 February 2022).

30. Hull, R. Energy Transition Readiness Index; Technical Report; Association for Renewable Energy and Clean Technology (REA): London, UK, 2019.

31. Kumar, A.; Singh, A.R.; Deng, Y.; He, X.; Kumar, P.; Bansal, R.C. Integrated assessment of a sustainable microgrid for a remote village in hilly region. Energy Convers. Manag. 2019, 180, 442–472.

32. Hernandez, R.R.; Armstrong, A.; Burney, J.; Ryan, G.; Moore-O’Leary, K.; Diédhiou, I.; Grodsky, S.M.; Saul-Gershenz, L.; Davis, R.; Macknick, J.; et al. Techno–ecological synergies of solar energy for global sustainability. Nat. Sustain. 2019, 2, 560–568.

33. Geels, F.W.; Sovacool, B.K.; Schwanen, T.; Sorrell, S. The Socio-technical dynamics of low-carbon transitions. Joule 2017, 1, 463–479.

34. Schot, J.; Geels, F.W. Strategic niche management and sustainable innovation journeys: Theory, findings, research agenda, and policy. Technol. Anal. Strategy Manag. 2008, 20, 537–554.

35. Hargreaves, N.; Chilvers, J.; Hargreaves, T. “What’s the Meaning of ‘Smart’? A Study of Smart Grids”: Sociotechnical Report; School of Environmental Sciences, University of East Anglia: Norwich, UK, 2015.

36. Huovila, A.; Bosch, P.; Airaksinen, M. Comparative analysis of standardized indicators for Smart sustainable cities: What indicators and standards to use and when? Cities 2019, 89, 141–153.

37. Marchetti, D.; Oliveira, R.; Figueira, A.R. Are global north smart city models capable to assess Latin American cities? A model and indicators for a new context. Cities 2019, 92, 197–207.

38. Sharifi, A. A critical review of selected smart city assessment tools and indicator sets. J. Clean. Prod. 2019, 233, 1269–1283.

39. Sharifi, A. A typology of smart city assessment tools and indicator sets. Sustain. Cities Soc. 2019, 53, 101936.

40. Koirala, B.P.; Koliou, E.; Friege, J.; Hakvoort, R.A.; Herder, P.M. Energetic communities for community energy: A review of key issues and trends shaping integrated community energy systems. Renew. Sustain. Energy Rev. 2016, 56, 722–744.

41. Adams, C.; Coulson, A.B.; Emmelkamp, T.; Greveling, R.; Klüth, G.; Nugent, M. CAPITALS Background Paper for <IR>; Technical Report; International Integrated Reporting Council: London, UK, 2013.

42. Whiting, R.H.; Hansen, P.; Sen, A. A tool for measuring SMEs’ reputation, engagement and goodwill: A New Zealand exploratory study. J. Intellect. Cap. 2017, 18, 170–188.

43. Deyshappriya, N.P.R.; Feeny, S. Weighting the Dimensions of the Multidimensional Poverty Index: Findings from Sri Lanka. Soc. Indic. Res. 2021, 156, 1–19.

44. Hansen, P.; Ombler, F. A new method for scoring additive multi-attribute value models using pairwise rankings of alternatives. J. Multi-Criteria Decis. Anal. 2008, 15, 87–107.

45. Green, P.E.; Krieger, A.M.; Wind, Y. Thirty years of conjoint analysis: Reflections and prospects. INFORMS J. Appl. Anal. 2001, 31, S56–S73.

46. Kaya, T.; Kahraman, C. Multicriteria renewable energy planning using an integrated fuzzy VIKOR & AHP methodology: The case of Istanbul. Energy 2010, 35, 2517–2527.

47. Francis, C.; Ingram, D.M.; Thomson, R.C. Defining Success of Smart Local Energy System (SLES); EnergyREV stakeholder consultation workshop (1) technical report; University of Edinburgh: Edinburgh, UK, 2019.

48. Liu, G.; Li, M.; Zhou, B.; Chen, Y.; Liao, S. General indicator for techno-economic assessment of renewable energy resources. Energy Convers. Manag. 2018, 156, 416–426.

49. Ma, W.; Xue, X.; Liu, G.; Zhou, R. Techno-economic evaluation of a community-based hybrid renewable energy system considering site-specific nature. Energy Convers. Manag. 2018, 171, 1737–1748.

50. Snodin, H. Smart Energy—Technology Landscaping, Scotland’s Energy Efficiency Programme; ClimateXChange: Edinburgh, UK, 2017; Available online: https://www.climatexchange.org.uk/media/5500/technology-landscaping-report-smart-energy.pdf (accessed on 15 May 2022).

51. Gallego Carrera, D.; Mack, A. Sustainability assessment of energy technologies via social indicators: Results of a survey among European energy experts. Energy Policy 2010, 38, 1030–1039.

52. Bull, D.; Costello, R.; Babarit, A.; Nielsen, K.; Kennedy, B.; Bittencourt, C.; Roberts, J.; Weber, J. Scoring the Technology Performance Level (TPL) Assessment; Technical Report SAND2017-4560C; Sandia National Laboratories: Albuquerque, NM, USA, 2017.

53. Krey, V.; Guo, F.; Kolp, P.; Zhou, W.; Schaeffer, R.; Awasthy, A.; Bertram, C.; de Boer, H.S.; Fragkos, P.; Fujimori, S.; et al. Looking under the hood: A comparison of techno-economic assumptions across national and global integrated assessment models. Energy 2019, 172, 1254–1267.

54. REEM Project. Methodology for Linking Technology to Energy System Models; Technical Report; Compiled by KIC InnoEnergy, The Netherlands, Universität Stuttgart, Germany, and KTH Royal Institute of Technology, Sweden on behalf of the European Commission: Brussels, Belgium, 2017.

55. Rodrigues, A.P.; Fernandes, M.L.; Rodrigues, M.F.F.; Bortoluzzi, S.C.; Gouvea da Costa, S.E.; Pinheiro de Lima, E. Developing criteria for performance assessment in municipal solid waste management. J. Clean. Prod. 2018, 186, 748–757.

56. Ma, W.; Xue, X.; Liu, G. Techno-economic evaluation for hybrid renewable energy system: Application and merits. Energy 2018, 159, 385–409.

| KPI Theme | Criterion 1 | Criterion 2 | Criterion 3 | Criterion 4 | Criterion 5 | Criterion 6 | Criterion 7 | Pairwise Comparisons |
|---|---|---|---|---|---|---|---|---|
| Governance | Governance Strategy | Integrated Management & Digital Planning | Accountability & Decision Making | Transparency & Consumer Redress | Knowledge Exchange & Experience | Standards & Regulation | | 20 |
| Environment | Greenhouse Gas Emissions | Biodiversity | Human Health | Resilience to Environment | Noise Levels | Other Ecosystem Impacts | | 20 |
| Data Management | Digital Technology Enablers | ICT Infrastructure | Visibility | Privacy | Grid & Capacity Management | Investment Decisions | | 18 |
| People & Living | Community Engagement | Fuel Poverty | Cost of Energy | Thermal Comfort | Access to Services | Carbon Reduction | Job Opportunities | 17 |
| Business & Economics | Market Design | Attractive to Investors | Competitive Energy Pricing | Promoting Growth | Revenue from Decarbonisation | Techno-Economic Metrics | | 34 |
| Technical Performance | Robustness | Reproducibility | System Performance | Maturity | Energy & Infrastructure | Local Renewable Generation | | 15 |

| Main Involvement in the Sector | Quantity | Percentage (%) |
|---|---|---|
| Research Organisation or University | 111 | 47.4 |
| Small End User | 37 | 15.8 |
| Non-Governmental Organisation (NGO) or Non-Profit Organisation (NPO) | 16 | 6.8 |
| Local Authority | 15 | 6.4 |
| Energy Industry | 14 | 6.0 |
| Consultant | 12 | 5.1 |
| Community Energy | 9 | 3.8 |
| Other | 9 | 3.8 |
| Product Manufacturer and Retailer | 5 | 2.1 |
| Government | 2 | 0.9 |
| Finance Sector | 1 | 0.4 |
| Large End User | 1 | 0.4 |
| Network Operators and Advisors | 1 | 0.4 |
| Regulators | 1 | 0.4 |

| KPI Theme | Rank 1 (Weight) | Rank 2 (Weight) | Rank 3 (Weight) | Rank 4 (Weight) | Rank 5 (Weight) | Rank 6 (Weight) | Rank 7 (Weight) | Participants Included |
|---|---|---|---|---|---|---|---|---|
| Governance | Governance Strategy (23.3%) | Accountability & Decision Making (19.7%) | Standards & Regulation (16%) | Integrated Management & Digital Planning (15.2%) | Knowledge Exchange & Experience (13.4%) | Transparency & Consumer Redress (12.4%) | | 30 |
| Environment | Greenhouse Gas Emissions (32.1%) | Other Ecosystem Impacts (20.3%) | Biodiversity (20.2%) | Human Health (17.1%) | Resilience to Environment (8.8%) | Noise Levels (1.5%) | | 56 |
| Data Management | Grid & Capacity Management (20.6%) | Digital Technology Enablers (19.5%) | Investment Decisions (19.1%) | ICT Infrastructure (18.9%) | Visibility (13.2%) | Privacy (8.8%) | | 16 |
| People & Living | Fuel Poverty (19.4%) | Carbon Reduction (16.5%) | Cost of Energy (15.1%) | Thermal Comfort (14.2%) | Community Engagement (12.6%) | Access to Services (11.7%) | Job Opportunities (10.5%) | 51 |
| Business & Economics | Market Design (22.3%) | Promoting Growth (21.4%) | Techno-Economic Metrics (15.5%) | Competitive Energy Pricing (14.8%) | Attractive to Investors (13%) | Revenue from Decarbonisation (13%) | | 31 |
| Technical Performance | Robustness (26.6%) | Energy & Infrastructure (18.6%) | Local Renewable Generation (18.5%) | Reproducibility (13.0%) | System Performance (12.2%) | Maturity (11.1%) | | 44 |
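To show how the reported criterion weights might be applied, the sketch below scores a hypothetical SLES on the Environment theme as a weighted mean of single-criterion scores. The weights are copied from the Environment row of the table above; the 0 to 100 criterion scores and the helper name `theme_score` are invented. Dividing by the sum of the weights is an assumption made so that rows whose rounded weights do not total exactly 100% (the Data Management row sums to 100.1%) still give a score on the 0 to 100 scale.

```python
# Environment-theme weights (%) as reported in the results table above.
ENVIRONMENT_WEIGHTS = {
    "Greenhouse Gas Emissions": 32.1,
    "Other Ecosystem Impacts": 20.3,
    "Biodiversity": 20.2,
    "Human Health": 17.1,
    "Resilience to Environment": 8.8,
    "Noise Levels": 1.5,
}


def theme_score(weights, criterion_scores):
    """Weighted mean of single-criterion scores (each on a 0-100 scale).

    Dividing by the sum of the weights keeps the result on the 0-100 scale
    even when the rounded weights do not add up to exactly 100.
    """
    missing = set(weights) - set(criterion_scores)
    if missing:
        raise KeyError(f"missing scores for: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(w * criterion_scores[c] for c, w in weights.items()) / total_weight


if __name__ == "__main__":
    # Hypothetical single-criterion scores for an imaginary SLES (invented).
    scores = {
        "Greenhouse Gas Emissions": 75,
        "Other Ecosystem Impacts": 50,
        "Biodiversity": 50,
        "Human Health": 100,
        "Resilience to Environment": 25,
        "Noise Levels": 100,
    }
    print(f"Environment score: {theme_score(ENVIRONMENT_WEIGHTS, scores):.1f}")
```

Because Greenhouse Gas Emissions carries almost a third of the weight while Noise Levels carries 1.5%, a change in the emissions score moves the theme score roughly twenty times as much as the same change in the noise score.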

| Article | Theme 1 (No. of Criteria) | Theme 2 (No. of Criteria) | Theme 3 (No. of Criteria) | Theme 4 (No. of Criteria) | Theme 5 (No. of Criteria) | Theme 6 (No. of Criteria) |
|---|---|---|---|---|---|---|
| This study | Data Management (6) | Technical Performance (6) | Business & Economics (6) | Environment (6) | People & Living (7) | Governance (6) |
| Heo et al. [5] | Technological (4) | Market (3) | Economic (3) | Environmental (3) | Policy (4) | |
| Kaya and Kahraman [46] | Technical (7) | Economics (9) | Environmental (9) | Social (4) | | |
| Daim et al. [23] | Technical (6) | Economic (3) | Environmental (3) | Social (1) | | |
| Štreimikienė et al. [6] | Technological (4) | Economical (4) | Environment protection (4) | Social ethics (3) | Institutional & political (5) | |
| Sahabuddin and Khan [11] | Economics (3) | Environmental (3) | Social (6) | | | |
| Barney et al. [8] | Technical (2) | Economics (2) | Environmental (2) | Social (2) | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Francis, C.; Hansen, P.; Guðlaugsson, B.; Ingram, D.M.; Thomson, R.C. Weighting Key Performance Indicators of Smart Local Energy Systems: A Discrete Choice Experiment. Energies 2022, 15, 9305. https://doi.org/10.3390/en15249305

Francis C, Hansen P, Guðlaugsson B, Ingram DM, Thomson RC. Weighting Key Performance Indicators of Smart Local Energy Systems: A Discrete Choice Experiment. Energies. 2022; 15(24):9305. https://doi.org/10.3390/en15249305

Chicago/Turabian Style: Francis, Christina, Paul Hansen, Bjarnhéðinn Guðlaugsson, David M. Ingram, and R. Camilla Thomson. 2022. "Weighting Key Performance Indicators of Smart Local Energy Systems: A Discrete Choice Experiment" Energies 15, no. 24: 9305. https://doi.org/10.3390/en15249305