Multiparameter Regression of a Photovoltaic System by Applying Hybrid Methods with Variable Selection and Stacking Ensembles under Extreme Conditions of Altitudes Higher than 3800 Meters above Sea Level

Abstract

1. Introduction

- The implementation of a photovoltaic system under the extreme conditions found at altitudes higher than 3800 m above sea level;

- The implementation of four hybrid variable-selection models that combine regularization with a sequential feature selector;

- The implementation and validation of a multiparameter regression meta-model based on super-learning for a photovoltaic system using hyperparameter optimization techniques.

2. Materials and Methods

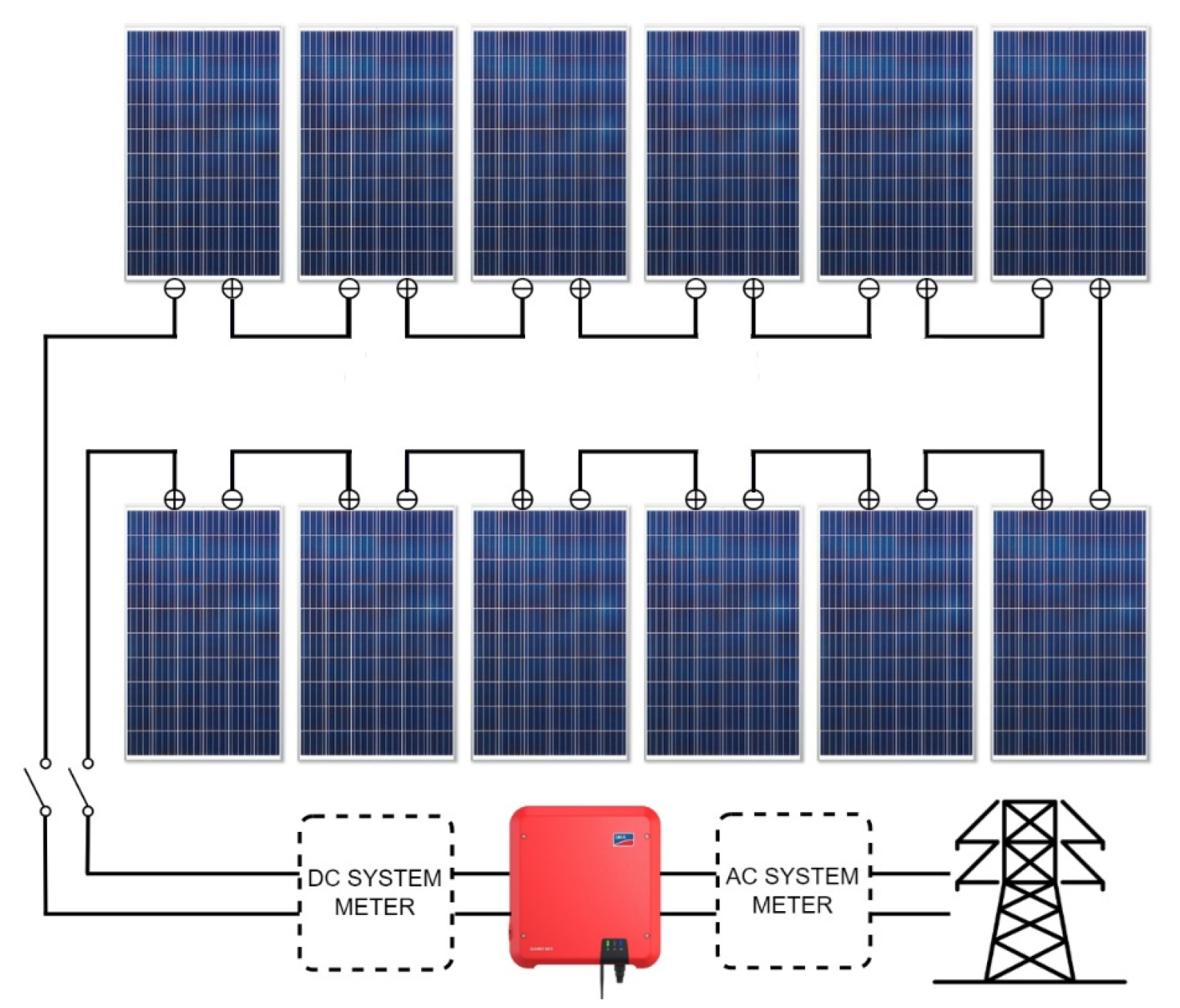

2.1. Dataset

2.2. Basic Model

ElasticNet

2.3. Stacking

2.4. Optimization

- Bayesian method: this method seeks a global optimum by iteratively building a probabilistic (surrogate) model that maps the values of the hyperparameters to the objective function. The surrogate captures beliefs about the behavior of the objective function through its posterior distribution. An acquisition function is then built from this posterior to choose the next point to evaluate, for example the point with the highest probability of improvement. The main difficulty of this type of optimization is the trade-off between exploration and exploitation; that is, finding a balance between a global search for the best solution in all the available space and a local search that refines the results without wasting resources. Examples of this type of algorithm are the tree-structured Parzen estimator, Gaussian process regression, and kriging.

- Early stop method: using statistical searches, this method discards early the regions of the search space that offer the worst results and are unlikely to contain the global minimum. The decision is based on comparing the intermediate scores that the model obtains with each set of hyperparameters. Examples are successive halving and Hyperband. Their main disadvantage is that they have to go through the entire space to deliver their final result.

- Evolutionary method: based on Darwin's principles of evolution, this method begins by drawing an initial sample of hyperparameter sets from the search space and evaluating each set according to its relative fitness. The worst-performing sets are discarded, and new sets of hyperparameters are generated through crossover and mutation. This process is repeated until no further improvement is observed in the results or the process stops due to processing time.

- Bayesian optimization methods: to address the exploration and exploitation trade-off, two strategies are used: sampling, which concentrates the search in the areas where better results are obtained, and pruning, which stops a trial early when its intermediate results show that it is unlikely to be competitive.

The flowchart used for optimization in this investigation is shown in Figure 8.
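Independently of the flowchart, the early stop and evolutionary strategies listed above can be sketched in a few lines of plain Python. Everything in this example is a hypothetical illustration: the search space `SPACE`, the stand-in objective `toy_loss` (which replaces a real cross-validated model score), and the default population sizes and budgets are assumptions, not the settings used in this study. The Bayesian surrogate model is omitted for brevity.

```python
import math
import random

# Hypothetical search space: (low, high) bounds of two hyperparameters.
SPACE = {"log_alpha": (-4.0, 1.0), "l1_ratio": (0.0, 1.0)}


def toy_loss(cfg, budget=None):
    """Stand-in for a validation loss; minimum at log_alpha=-2, l1_ratio=0.5.

    With a budget, a term that shrinks as the budget grows mimics a model
    that has only been partially trained.
    """
    loss = (cfg["log_alpha"] + 2.0) ** 2 + (cfg["l1_ratio"] - 0.5) ** 2
    if budget is not None:
        loss += 1.0 / budget
    return loss


def sample(rng):
    """Draw one hyperparameter set uniformly from the search space."""
    return {k: rng.uniform(lo, hi) for k, (lo, hi) in SPACE.items()}


def successive_halving(rng, n_configs=27, eta=3, min_budget=1):
    """Early stop: keep the best 1/eta of the candidates at each round."""
    configs = [sample(rng) for _ in range(n_configs)]
    budget = min_budget
    while len(configs) > 1:
        configs.sort(key=lambda c: toy_loss(c, budget))  # intermediate scores
        configs = configs[: max(1, len(configs) // eta)]  # discard the worst
        budget *= eta  # survivors receive more resources
    return configs[0]


def evolutionary_search(rng, pop_size=20, max_generations=50,
                        patience=5, sigma=0.1):
    """Evolutionary search with truncation selection, crossover, mutation."""
    population = [sample(rng) for _ in range(pop_size)]
    best, best_loss, stale = None, math.inf, 0
    for _ in range(max_generations):
        population.sort(key=toy_loss)  # rank by fitness (lower loss is fitter)
        gen_loss = toy_loss(population[0])
        if gen_loss < best_loss - 1e-12:
            best, best_loss, stale = dict(population[0]), gen_loss, 0
        else:
            stale += 1
            if stale >= patience:  # stop when no further improvement is seen
                break
        survivors = population[: pop_size // 2]  # worst half is discarded
        children = []
        while len(survivors) + len(children) < pop_size:
            a, b = rng.sample(survivors, 2)
            child = {}
            for k, (lo, hi) in SPACE.items():
                gene = rng.choice((a[k], b[k]))            # crossover
                gene += rng.gauss(0.0, sigma * (hi - lo))  # mutation
                child[k] = min(hi, max(lo, gene))          # stay in bounds
            children.append(child)
        population = survivors + children
    return best, best_loss


if __name__ == "__main__":
    print("halving winner:", successive_halving(random.Random(0)))
    print("evolutionary best:", evolutionary_search(random.Random(1)))
```

In this toy objective the budget term does not change the ranking of the candidates, so successive halving returns the best of the sampled sets; with a real model, low-budget scores are noisy and a good candidate can be discarded too early.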

2.5. Performance Evaluation

- R2 score: indicates how well the model fits the data in terms of residual distance; it plays a role in regression comparable to that of accuracy in classification models. Its main advantage is that it allows models to be compared more easily, but its disadvantage is that it never decreases when more variables are added, so a model with many variables can overfit and still obtain a very high R2 value. R2 is given by Equation (22):

  $$R^2 = 1 - \frac{\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}{\sum_{i=1}^{n} (y_i - \bar{y})^2}$$

  where $y_i$ is the actual value, $\hat{y}_i$ is the predicted value, $\bar{y}$ is the mean of the actual values, and $n$ is the number of data points.

- Mean absolute error (MAE): this is the sum of the absolute differences between the actual and predicted values divided by the total number of data points. It indicates how close the predictions are, on average, to the actual values. It can be used as a loss function to be minimized, although it is not differentiable at zero error. Its disadvantage is that it is affected by outliers, and it is difficult to interpret in isolation; that is, it is hard to say within which range the result is acceptable. MAE is given by the following equation:

  $$\mathrm{MAE} = \frac{1}{n} \sum_{i=1}^{n} \left| y_i - \hat{y}_i \right|$$

- Mean squared error (MSE): this is defined as the mean of the squared differences between the actual and predicted values. It works like MAE, but squaring makes large prediction errors more noticeable in the total value. Its main advantage is that it is differentiable everywhere, which makes it convenient as a loss function. Its main disadvantage is that it is greatly affected by outliers. Its equation is as follows:

  $$\mathrm{MSE} = \frac{1}{n} \sum_{i=1}^{n} (y_i - \hat{y}_i)^2$$

- Adjusted R2 score: this is used to penalize a model that has too many independent variables that are not significant for the prediction. The main advantage is that it can indicate overfitting in the model, but the disadvantage is that it is affected by highly biased models. Its equation is as follows:

  $$\bar{R}^2 = 1 - (1 - R^2)\,\frac{n - 1}{n - p - 1}$$

  where $p$ is the number of independent variables.
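The four metrics above can be computed directly from their standard definitions. The following is a minimal sketch in plain Python; the function name `regression_metrics` and the sample values are illustrative assumptions and are not taken from the study.

```python
def regression_metrics(y_true, y_pred, n_features):
    """Return MAE, MSE, R2 and adjusted R2 for paired actual/predicted values.

    n_features is the number of independent variables used by the model.
    Assumes y_true is not constant (otherwise R2 is undefined).
    """
    n = len(y_true)
    if n == 0 or n != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and equally long")
    if n - n_features - 1 <= 0:
        raise ValueError("adjusted R2 needs more samples than features + 1")

    mean_true = sum(y_true) / n
    residuals = [t - p for t, p in zip(y_true, y_pred)]

    ss_res = sum(r * r for r in residuals)               # residual sum of squares
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)   # total sum of squares

    mae = sum(abs(r) for r in residuals) / n
    mse = ss_res / n
    r2 = 1.0 - ss_res / ss_tot
    adj_r2 = 1.0 - (1.0 - r2) * (n - 1) / (n - n_features - 1)
    return {"mae": mae, "mse": mse, "r2": r2, "adj_r2": adj_r2}


# Made-up example values (not from the measured dataset).
metrics = regression_metrics(
    y_true=[3.0, 5.0, 7.0, 9.0, 11.0],
    y_pred=[2.5, 5.0, 7.5, 9.0, 11.0],
    n_features=1,
)
print(metrics)
```

For these example values the function gives MAE = 0.2, MSE = 0.1, R2 = 0.9875, and an adjusted R2 of about 0.9833; the adjusted value is always at most R2 because of the penalty on the number of variables.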

3. Results

3.1. Dataset

3.2. Feature Selection

3.3. Stacking: Level One

3.4. Stacking: Level Two

3.5. Stacking Meta-Model

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| | Count | Mean | Std | Min | 25% | 50% | 75% | Max |
|---|---|---|---|---|---|---|---|---|
| AC voltage (V) | 119,753.0 | 235.440155 | 2.940976 | 223.900000 | 233.400000 | 235.400000 | 237.600000 | 247.900000 |
| AC current (A) | 119,753.0 | 6.964209 | 2.931848 | 0.580000 | 4.633000 | 7.558000 | 9.430000 | 12.416000 |
| Active power (W) | 119,753.0 | 1621.629792 | 708.451947 | 0.000000 | 1070.000000 | 1762.500000 | 2219.200000 | 2879.200000 |
| Apparent power (VA) | 119,753.0 | 1642.922003 | 696.644029 | 135.000000 | 1089.800000 | 1777.900000 | 2232.500000 | 2898.000000 |
| Reactive power (var) | 119,753.0 | 220.097268 | 66.293499 | −843.900000 | 196.300000 | 228.500000 | 256.300000 | 485.100000 |
| Frequency (Hz) | 119,753.0 | 60.002564 | 0.046009 | 59.500000 | 60.000000 | 60.000000 | 60.000000 | 60.500000 |
| Power factor | 119,753.0 | 0.951686 | 0.188032 | −0.990000 | 0.983000 | 0.991000 | 0.994000 | 0.998000 |
| Total energy (Wh) | 119,753.0 | 5224.543496 | 1013.532902 | 3894.300000 | 4183.400000 | 5904.500000 | 6171.200000 | 6427.600000 |
| Daily energy (Wh) | 119,753.0 | 127.544866 | 86.504498 | 0.000209 | 56.418152 | 113.251184 | 189.551443 | 342.905747 |
| DC voltage (V) | 119,753.0 | 334.812376 | 17.338490 | 220.800000 | 321.900000 | 332.800000 | 346.100000 | 420.800000 |
| DC current (A) | 119,753.0 | 5.556741 | 2.390009 | 0.000000 | 3.620000 | 5.890000 | 7.650000 | 10.780000 |
| DC power (W) | 119,753.0 | 1831.112472 | 737.393141 | 0.000000 | 1260.304000 | 1972.189000 | 2450.420000 | 3142.272000 |
| Irradiance (W/m2) | 119,753.0 | 668.877765 | 292.047458 | 0.000000 | 432.000000 | 706.000000 | 926.000000 | 1522.000000 |
| Module temp (°C) | 119,753.0 | 35.115793 | 11.256891 | 2.400000 | 27.600000 | 37.000000 | 44.200000 | 60.300000 |
| Ambient temp (°C) | 119,753.0 | 16.611160 | 3.769045 | −2.000000 | 14.500000 | 17.400000 | 19.400000 | 27.700000 |

| | Base model | Ridge | LASSO | ElasticNet | Bayesian |
|---|---|---|---|---|---|
| MSE | 17.48539 | 10.63716 | 14.40150 | 18.33607 | 10.63832 |
| R2 | 0.99939239 | 0.99977513 | 0.99958782 | 0.99933183 | 0.99977508 |
| Adjusted R2 | 0.99939232 | 0.99977512 | 0.99958778 | 0.99933178 | 0.99977507 |

| | Base model | ElasticNet | XGBoost |
|---|---|---|---|
| MSE | 17.48539 | 10.63704 | 10.06582 |
| R2 | 0.99939239 | 0.99977514 | 0.99979864 |
| Adjusted R2 | 0.99939232 | 0.99977512 | 0.99979862 |

| | Base model | ElasticNet | XGBoost |
|---|---|---|---|
| MSE | 17.48539 | 10.63704 | 10.63698 |
| R2 | 0.99939239 | 0.999775137 | 0.999775140 |
| Adjusted R2 | 0.99939232 | 0.999775116 | 0.999775118 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cruz, J.; Romero, C.; Vera, O.; Huaquipaco, S.; Beltran, N.; Mamani, W. Multiparameter Regression of a Photovoltaic System by Applying Hybrid Methods with Variable Selection and Stacking Ensembles under Extreme Conditions of Altitudes Higher than 3800 Meters above Sea Level. Energies 2023, 16, 4827. https://doi.org/10.3390/en16124827
