Abstract

Digital twin is often viewed as a technology that can assist engineers and researchers make data-driven system and network-level decisions. Across the scientific literature, digital twins have been consistently theorized as a strong solution to facilitate proactive discovery of system failures, system and network efficiency improvement, system and network operation optimization, among others. With their strong affinity to the industrial metaverse concept, digital twins have the potential to offer high-value propositions that are unique to the energy sector stakeholders to realize the true potential of physical and digital convergence and pertinent sustainability goals. Although the technology has been known for a long time in theory, its practical real-world applications have been so far limited, nevertheless with tremendous growth projections. In the energy sector, there have been theoretical and lab-level experimental analysis of digital twins but few of those experiments resulted in real-world deployments. There may be many contributing factors to any friction associated with real-world scalable deployment in the energy sector such as cost, regulatory, and compliance requirements, and measurable and comparable methods to evaluate performance and return on investment. Those factors can be potentially addressed if the digital twin applications are built on the foundations of a scalable and interoperable framework that can drive a digital twin application across the project lifecycle: from ideation to theoretical deep dive to proof of concept to large-scale experiment to real-world deployment at scale. This paper is an attempt to define a digital twin open architecture framework that comprises a digital twin technology stack (D-Arc) coupled with information flow, sequence, and object diagrams. Those artifacts can be used by energy sector engineers and researchers to use any digital twin platform to drive research and engineering. This paper also provides critical details related to cybersecurity aspects, data management processes, and relevant energy sector use cases.

1. Introduction

Modernization and automation of the connected electromechanical control systems are pivotal to Industry 4.0 architectures [1]. Such modernization through digitization can provide invaluable system information and capabilities needed to ensure economic viability, enhance efficiency, increase service reliability, improve resiliency, and expand the performance envelope of the facilities that host and operate these control systems. The purpose of such facilities can range from energy generation, transmission, and distribution to manufacturing of systems including distributed energy resources (DERs) and associated peripheral components. Despite the benefits of digitization and automation, such increased digitization equals increased system complexity, requiring a more systematic approach to reap the benefits of the new capabilities that digitization brings. Digital twin is seen as a powerful tool across many sectors/industries [2,3,4] for exercising the full capabilities of a system by using the system’s native sensing capabilities, combined with the mathematical processes, system dynamics, and physics-driven predictions, to provide system-specific insights and advanced analytics [5]. This can also balance capabilities with the incremental costs of system maintenance and the risk of component failures. The development of digital twins in the energy sector [6] is a unique opportunity to incorporate data streams (resource availability, temperature, dissolved gases, pressure, min or max flows, ramping rate limits, etc.) that account for dynamic external factors that rapidly alter the flexibility available for the generation facilities to respond to grid signals.

The concept of digital twins is not new [7], nor is its potential value proposition in advancing many of our antiquated infrastructures and operations. Despite the concept of digital twins being discussed and experimental for nearly two decades [7], the majority of the advancements have been a mix bag of early-stage research explorations, tabletop experiments, and ad hoc deployments. With cross-sector industries heading toward infrastructure advancements and modernization [8], it is vital for the industry players, including manufacturing entities and energy producing utilities, to use a scalable and interoperable digital twin framework for design, development, testing, and deployment. Furthermore, digital twins will enable industry players to operate with greater flexibility, improve physical process performance [9], and pursue additional revenue streams with a better understanding of the tradeoffs with operation and maintenance costs. Management of risks and costs will be more effective because digital twins will improve predictive maintenance and the safety of plant operation; inform health of equipment operation and system performance; and support staff training, engineering design, development of procedures, and effective emergency preparedness planning by being able to simulate drills and plant response (procedural and human factors). As with any technology, factors such as scalability, interoperability, security, trustworthiness, determination of information/data sources, and hosting infrastructure are critical for long-term design and deployment of digital twins [10].

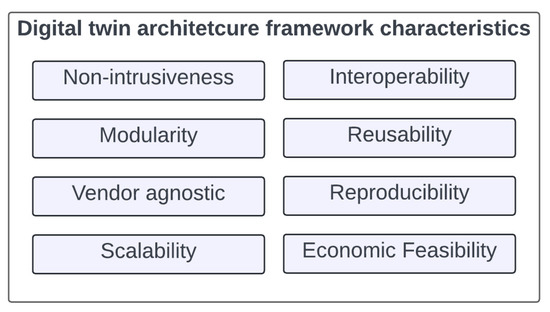

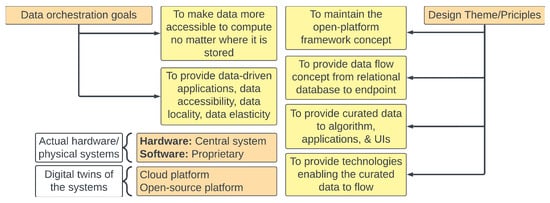

Building scalable digital twins for power grid applications requires answering some fundamental questions: (1) What are the peripheral and integral building blocks of a digital twin for power systems applications? (2) What are the needed interactions and expected relationships between the physical grid systems and the respective digital twin models? (3) What level of granular modeling is required for digital twin’s comparative and reasonable behavior as compared to the physical counterparts? (4) What is the role of data in the end-to-end digital twin design and deployment process? (5) What are the critical cybersecurity aspects and network engineering consideration for a digital twin design process? (6) How should one model system level, subsystem level, network and security level, protocol level attributes, and parameters for a digital twin of the entire network? (7) What are the physical dependencies, software dependencies, and data dependencies for a power system network’s digital twin? and (8) What are the required underlying data orchestration processes? This paper attempts to provide insights into the above critical questions, supports the arguments by presenting a digital twin open architecture framework (D-Arc) with the characteristics shown in Figure 1, and expands on a streamlined set of processes pertaining to data flows and data orchestration that are at the core of a successful digital twin development.

Figure 1.

Necessary characteristics of a digital twin architecture framework.

2. Novel Contributions and Structural Overview of the Paper

Novel contributions of this paper are as follows: (1) a D-Arc technology stack that is agnostic to any digital twin platform and grid systems; (2) process flows and pertinent definitions critical to design accurate and usable digital twins; and (3) a well-defined data orchestration model that is compatible with on-premises and cloud infrastructure. The paper supports these contributions through a detailed deep-dive into relationship models and depicts high-value use cases in the energy sector. This paper is organized with Section 2 providing an in-depth analysis of digital twins; their characteristics, types, and phases; and a handshake illustration between integral and peripheral components; and presents an overview of potential digital twin architectures. Section 3 is dedicated to a technical deep-dive into the D-Arc technology stack design, detailed analysis of the stack’s various layers and their relationships, sequential information flow and system relationship analysis using D-Arc for power grid digital twin modeling effort, sequence of steps to assist the digital twin designers in operationalizing D-Arc and its usability, and important cybersecurity risks and related mitigations for consideration during the design process. Based on the evidence from Section 3 regarding the data orchestration relationship and the importance of data, Section 4 is dedicated to the overall data orchestration process and provides in-depth analysis of various phases involved in the process and offers guidance on handling potential data anomalies and artifacts that are expected during the digital twin development process. Section 5 identifies high-value power grid use cases that have the potential to benefit from digital twins, and Section 6 provides the conclusion to the paper, identifying gaps in the current methodology that are yet to be addressed.

3. Digital Twins

Digital twin technology involves replicating a plant system design, operation, or process using mathematical models. Elements of a digital twin framework accommodate methods to obtain data needed to duplicate a virtual “twin” system, equipment, or process (e.g., sensor data and information) and methods to develop, deploy, and operate the digital twin model (DTM). Using these models, industry players can: (1) discover underlying problems and predict failures by monitoring parameters such as vibration and temperature; (2) get a real-time overview of system status, estimate remaining value of life using data from sensors, virtual sensors, etc., and initiate measures to address forecasted system challenges; (3) perform flow analysis and electromagnetic simulations, and provide other insights that could lead to change in operating conditions and discover ideal operating modes, from an economic standpoint, based on system status; (4) develop and study various “what if” scenarios on a digital twin component and discover system response; (5) gain information about the state of the system and implement predictive maintenance measures to avoid unplanned downtime and maximize availability; (6) explore abstract factory/plant/utility topologies and develop coupled multiphysics equations to understand site-specific customization with the physical system; (7) develop a dynamic view of the ability to operate flexibly in response to grid signals and identify constraints that cause bottlenecks; and (8) perform stress analysis and develop responses to:

- (a)

- subject digital twin components to varying stresses that cannot be predicted in advance, and predict wear and tear or system degradation due to excessive stress, which will enable timely replacement of the component before damage can occur and impede the downstream processes.

- (b)

- perform strength calculations to find critical points with maximum stresses on the system components.

- (c)

- determine current stress at the hot spots with a high degree of certainty at any time, ultimately enabling a robust service life calculation on that basis.

The process of digital twin development involves system modeling, modular system development, and decentralized sensor network technologies such as Cymbiote [11], VOLTTRON [12], and SerialTap [13], to satisfy DTM data requirements. Those technologies were designed to interface with physical and software systems to non-intrusively collect data. For instance, Cymbiote is a utility-scale tested system that can be connected to a physical system to collect network traffic, environmental parameters, and device-level characteristics. On a similar note, the VOLTTRON platform enables distributed sensing and controls and can be integrated with any control system. VOLTTRON has been used in power grid and smart building research to collect measurement data from hardware control systems such as water heaters, HVAC systems, photovoltaic systems, batteries and microgrid generation systems, and many more. Finally, SerialTap was developed to bridge the gap between older serial-based devices and modern networks in industrial control systems.

In the realm of algorithmic models that consume data from the above data acquisition systems and generate value-added information artifacts, researchers have been developing machine learning (ML) systems to use granular models from platforms such as Energy+ [14] and Modelica [15] to leverage partial knowledge of system dynamics and constraint enforcing multiobjective loss functions to effectively use small and static datasets. On-going DOE-funded research successfully demonstrated the effectiveness of a novel unifying framework for constrained optimal control of linear systems to provide stability guarantees for learned dynamics, robustness in the face of uncertainty, and high sampling efficiency [16]. In another DOE-funded effort, researchers have been developing physics-based sensor network modeling to predict failures [17]. Lessons learned from such existing research can be used to address challenges related to data gaps and physics-based modeling in DTM development.

The synchronicity between the building blocks of the DTM is critical for accurate functionality and deployability. Some of these types of challenges were addressed by proprietary digital twin research and solutions in thermal power and oil and gas domains [18]. It is evident that mature research exists across connecting elements of digital twin development, ranging from data acquisition to ML modeling. However, the disparate or ad hoc nature of how these research artifacts are connected to produce scalable, tangible, and operational digital twins is widely unexplored. Expanding upon the aforementioned limitations, this paper introduces a comprehensive framework that meticulously documents a universal backbone that facilitates the data orchestration processes for the research and development of digital twins. The remainder of this section will provide an overview of digital twin design and development processes and introduce a relevant framework in the context of the energy systems.

3.1. Types and Phases of Digital Twins

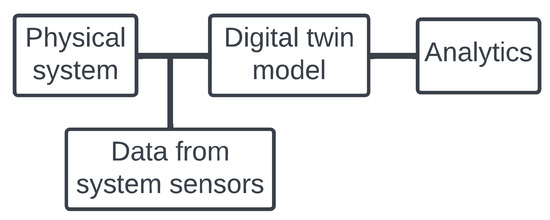

Digital twin signifies the digital representation through a model of a physical system, process, or any piece of equipment [19]. Figure 2 shows the primary components of a digital twin. It is evident from Figure 2 that the DTM can be developed using:

Figure 2.

Digital twin components.

- Virtual representations of the hardware components and their corresponding connections within a physical system.

- Real-time data from the system sensors that are integral or peripheral (example: Internet of Things) to the physical system.

The resultant model will facilitate estimating the performance evaluation and data analytics of the physical system, forecasting/predicting failures, and detecting anomalies. Since data are a critical component of an efficient DTM (or digital twin), the data orchestration process is inherent to the twin development process. There are two types of digital twins: project digital twin and performance digital twin.

- The goal of a project digital twin is to facilitate the design, simulation, and engineering of a physical asset/process or to modernize an existing physical asset.

- The goal of a performance digital twin is to facilitate efficient operations and maintain needed performance of an industrial system.

It is paramount to have good quality and well-managed data to have an effective and successful operation of a digital twin. In most cases that involve electromechnical systems that are at least partially network connected, it might be required to achieve a combination of a project digital twin and a performance digital twin.

Designing the appropriately sized and scalable digital twin archetypes for the physical systems will require the twin to have the following attributes:

- Accurate digital representation of the physical components that are integral to the physical system.

- Ability to simulate the industrial or network-wide operations of the physical system.

- Ability to facilitate smooth maintenance and operations of the physical system.

To fulfill the above attributes, the digital twin design and development process should be categorized into three phases [20]: (a) Design, (b) Build, and (c) Operate. The Design and Build phases are part of project digital twin, whereas the Operate phase is part of the performance digital twin.

- In the Design phase, the physical elements and virtual elements (software) will coordinate and collaborate in a single operation-oriented design of the system. This phase will involve architecting the virtual representation of the system through digital counterparts of the physical system’s hardware subcomponents. The Design phase can be performed on cloud or a physical system/server.

- The Build phase pertains to understanding the performance and behavioral elements of the system. These include attributes such as system tolerance, stress, and design. This phase is related to the simulation aspects of the digital twin. Simulation will provide an estimate of digital twin operations with respect to the physical system operations. Based on the simulated operations, system-level influencing factors and parameters can be determined. These controllable factors and parameters will be critical toward making performance and operational improvement decisions on the physical system.

- The Operate phase corresponds to the actual operations of the physical system and its attributes such as age, operational constraints, etc. In this phase, the digital twin is expected to run in real time alongside the physical system.

3.2. Potential Digital Twin Architectures

Data (such as measurements, system states, etc.) from the physical systems (such as sensors) and digital models (such as CAD-based, granular spice, etc.) are the main virtual/digital components of the digital twin. There are at least five possible architectures based on the digital twin location, data location, and sensor location:

- Data and digital twin on physical (on-premises) device

- Data on cloud (such as AWS) and digital twin on a physical (on-premises) device

- Data and digital twin on the same cloud service (such as AWS)

- Data on a physical (on-premises) device and digital twin on cloud (such as AWS)

- Data and digital twin on different cloud services (such as data: Azure; digital twin: AWS)

These architectures are driven by possible locations of the data repository and the digital twin. Irrespective of the architecture chosen for implementation, the following handshake diagram is valid for all architectures discussed here. It is important to note that the most important differences between the architectures are the data location (where the data repository is stored/located) and the location of the DTM.

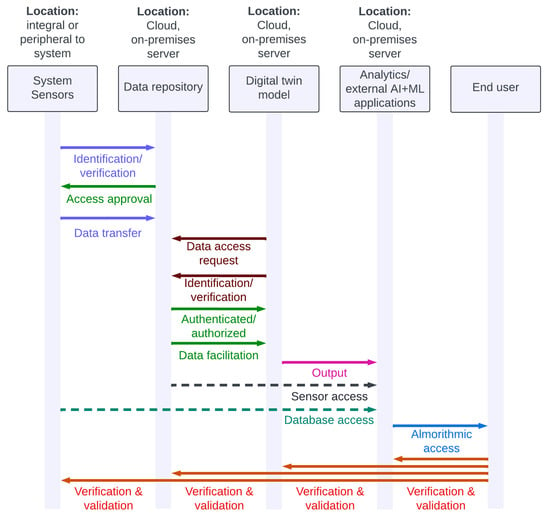

As shown in Figure 3, there are several handshakes expected to happen in the digital twin environment. Note that the figure only provides a high-level overview of these handshakes. Cryptographic components such as data encryption and hashing are critical toward secure operations and accurate performance of the digital twin. Here is the walkthrough of Figure 3.

Figure 3.

Theoretical handshake between digital twin components.

- System sensors, components, or the system as a whole, when possible, may be expected to send the identification/verification information to the data repository to access and write to the data repository.

- Once the validation happens at the repository level, the physical systems are approved with write access privileges so the system sensors can periodically transfer measurement data to the repository.

- When the client/end user deploys the digital twin (application), the digital twin first requests the repository to gain read access to the measurement data. The digital twin will be required to send its identification information toward the authorization process.

- Once the repository validates and approves the read access, the digital twin can start accessing the data and activate its processes. These processes could be arithmetic, logical, or transcendental operations in nature.

- External applications such as ML peripheral software to perform detection, classification, forecasting, etc., will access the digital twin’s output. Note that the identification, authentication, and authorization steps pertaining to the external application is not discussed here. The external application may require going through standalone identification, authentication, and authorization steps with the digital twin (application) and potentially the data repository and sensors (based on the need and possibility). This will be required if the external application wants to verify the digital twin’s output and correlate with the input. This can be necessary to mitigate a man-in-the-middle attack.

- Depending on the digital twin’s environmental feasibility, there could also be a verification and validation process between the client/end user and the rest of the environmental components: external application, digital twin, data repository, and system sensors block. Such intricate security features can mitigate data corruption and related cyberattacks. However, this level of verification and validation may result in latencies and be nonfeasible with certain systems. Therefore, security enhancements should be weighed against the cost of implementation prior to designing the digital twin environment.

Implementing any of the above architectures would require a framework or software platform to facilitate such development and deployment. The following sections present such framework called D-Arc and provide a theoretical depiction of using it to architect, design, and develop scalable DTMs.

4. D-Arc: Technology Stack and Usable Approach

The D-Arc framework presented in this section is designed to address the challenges discussed in the previous sections and it will allow the industry stakeholders to:

- identify software components, communication network parameters, and data requirements to develop a DTM and perform replicable static and dynamic analysis on the DTM;

- develop data pipelines between the DTM and external software to forecast failures, detect anomalies, etc.; and

- manage, enhance, and patch the DTM without causing irreparable downstream impacts.

4.1. D-Arc Technology Stack

The D-Arc technology stack is designed to facilitate these three objectives. D-Arc allows a plug-and-play approach for various DTMs, including those available from open libraries, such as from Modelica, Energy+, PyTorch, TensorFlow, and from proprietary systems. Note that the application and usability of D-Arc is not restricted to energy and power sectors. The stack is agnostic enough to apply for any sectors. However, this paper is particularly scoped to expand on D-Arc’s application to energy and power sector through the information models and use cases presented. The presented D-Arc technology stack facilitates:

- High-fidelity modeling of the underlying physical processes: Tools such as Modelica can be used to perform simulations of the processes that will be suitable for offline analytics, long-term forecasting, and planning.

- Data-driven modeling and approximation with simplified mathematics and physics: Tools and libraries such as PyTorch or TensorFlow can be used for real-time applications such as short-term forecasting, fault detection, and control.

- Interconnection of high-fidelity emulators with data-driven methods: This hybrid approach depends on domain-specific applications. Emerging ML concepts can be used in future adoptions of D-Arc to develop DTMs for any industrial control system (ICS) or electromechanical system.

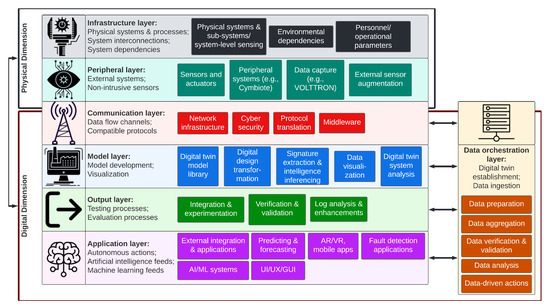

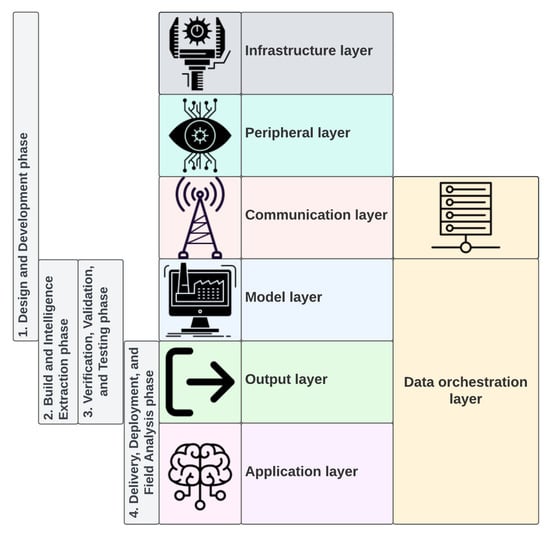

The D-Arc technology stack is categorized into two dimensions (physical and digital) that consist of six horizontal layers and a vertical layer (see Figure 4). The remainder of this section will provide a detailed analysis of the dimensions and layers of the D-Arc technology stack.

Figure 4.

D-Arc Technology Stack.

- 1.

- The physical dimension corresponds to the infrastructure and physical systems/devices. This also includes environmental and operational entities such as personnel or personnel interactions with the physical system, and built-in and external data acquisition systems such as sensors and actuators. Following are the two layers in the physical dimension:

- (a)

- The infrastructure layer pertains to physical systems: their subsystems and associated processes, interconnections between the systems (such as communications), and other system dependencies. The components that fall into this layer are the built-in sensors (pressure sensor, vibration monitoring sensor, etc.), external and environment dependencies, upstream and downstream system components and data, personnel/operators, operational parameters, and other physical operational technology/ICS resources needed for the digital twin design. The elements of this layer are the critical aspects of the digital twin: inaccuracies, inconsistencies, lack of trust, and visibility gaps at the hardware electromechanical system level that result in digital twins that are incapable of producing tangible outcomes.

- (b)

- The peripheral layer includes third-party, external, and peripheral data acquisition systems (such as Cymbiote, Volttron, SerialTap) that can be used to procure system-wide, network-wide, and environmental data that are otherwise impossible to gain by only relying on the components from the infrastructure layer. Depending on data requirements and gaps, use of this layer may be optional, and external interfacing for data acquisition may not be needed if sufficient observability is acquired from the infrastructure layer.

- 2.

- The digital dimension corresponds to the sequential processes that will lead to efficient digital twins and their ability to interface with external user applications, such as a ML failure forecasting system, artificial intelligence (AI)-based anomaly detection system, etc. The digital dimension layers can be established in cloud infrastructure or on-premises servers. This dimension is categorized into three layers:

- (a)

- The communication layer facilitates the flow of needed information between the physical and digital dimensions. This layer corresponds to the needed network systems and protocols that will allow the transfer of data and information from the physical dimension to the digital dimension (and vice-versa). Protocol examples may include Ethernet-based, TCP-IP, UDP, and serial in combination of common ICS protocols (e.g., OPC, Modbus, DNP3, IEC 61850, CIP).

- (b)

- The model layer pertains to the process of digital twin establishment and data ingestion from the physical systems to feed into the digital twins. Furthermore, data analysis and visualization are integral to evaluate the effectiveness of DTMs. Finally, preliminary signature extraction and inferencing intelligence from the collected data using logical filters are also part of this layer; this is critical to ensure that necessary feedback loops are in place to improve DTMs. The following functions are part of this layer: (1) DTMs (e.g., model library); (2) digital design transformation (e.g., information models, software needed for digital model transformations and mathematical functions), which is necessary for accurate digital representation of the physical system with provisions for expected/required inputs and outputs, and includes accurately capturing the control logic and physics-based mathematical models; (3) signature extraction and intelligence inference; (4) data visualization; and (5) digital twin system analysis by coupling the data aggregation with the DTMs developed. Digital twin evaluation to compare against the physical systems begins in this layer. The functional DTM can be established to work in two or more modes, in parallel to the physical system or independent of the physical system.

- (c)

- The output layer includes the testing and validation processes. This layer provides DTM logs and postprocessing analysis to ensure expected behavior, and modules to perform static and dynamic testing to evaluate its efficacy under extreme boundary conditions. These conditions or edge cases will include operating the digital twin in conditions that approach a failure or alarm while still functioning within the bounds of operational acceptance.

- (d)

- The application layer will connect DTMs with AI/ML detection/forecast, graphical user interfaces, etc. The application programming interfaces (APIs) can be open-source and vendor-agnostic or proprietary. Systems or modules in this layer will use the digital twin to perform failure forecasting, anomaly detection, etc.

- (e)

- The data orchestration layer is a vertical layer that spans across the entire digital dimension. In other words, data orchestration and related processes are underlying backend infrastructure for a well-functioning digital twin platform or ecosystem. The functions of this layer include (1) data preparation, (2) corruption-free data aggregation within acceptable error thresholds, (3) data verification and validation, (4) data analysis, and (5) data-driven actions. The following subsection provides an in-depth analysis of the data orchestration layer.

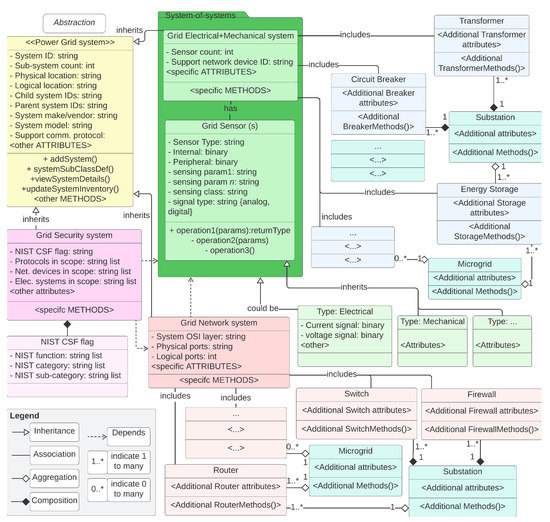

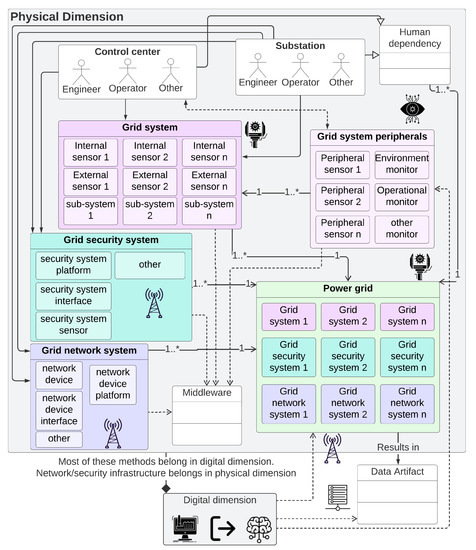

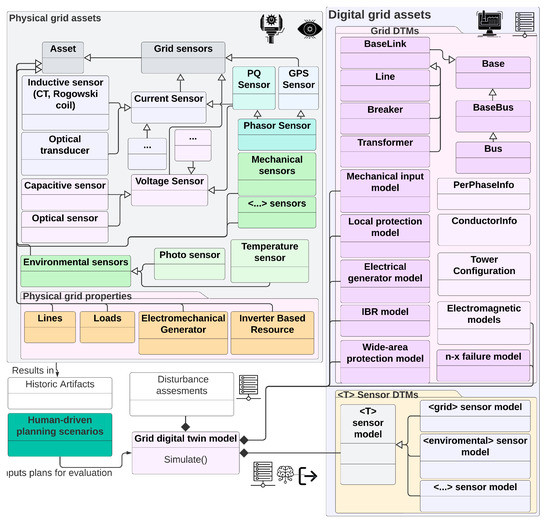

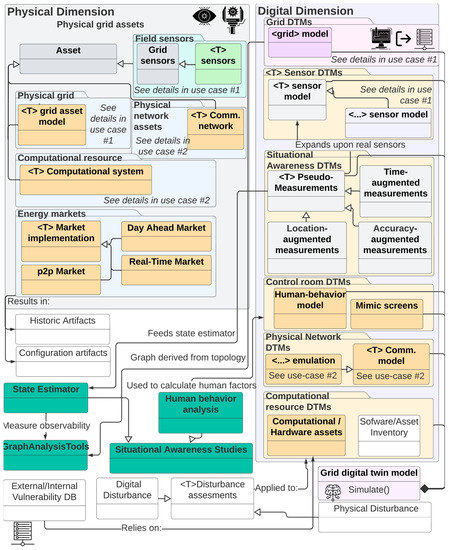

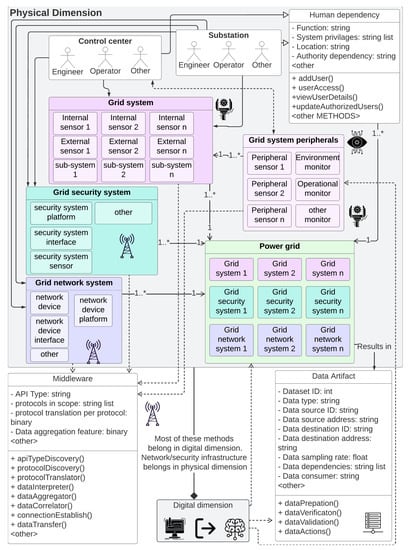

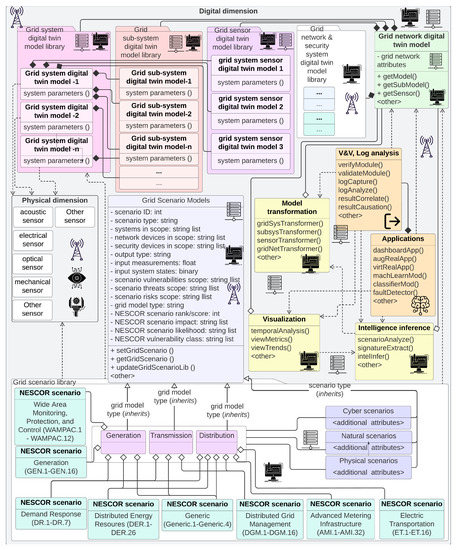

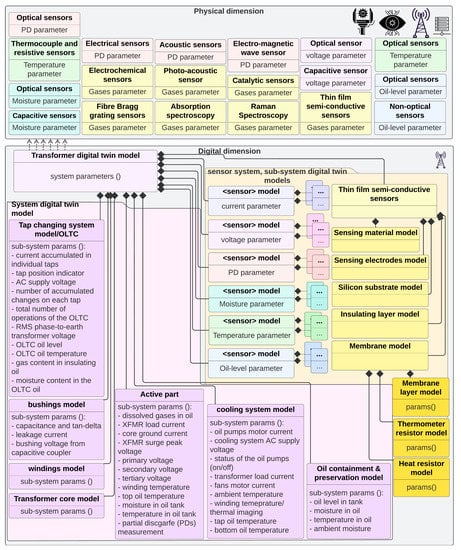

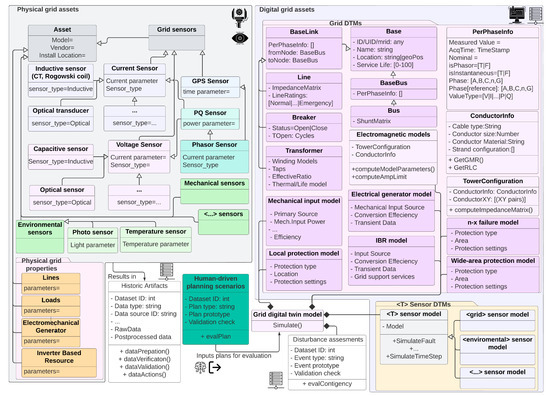

4.2. System and Network Information Flow per D-Arc

Operationalzing D-Arc requires intricate definitions of systems, networks, and interdependencies. Detailed illustrative attempts are made to define such relationships in a power grid environment (see Figure 5—Note that under “Grid Security system”, NIST CSF is the abbreviation of the NIST Cyber Security Framework. In practice, depending on the use case, this can be replaced with other standards such as the NIST 800-53, ISA 62443, NERC CIP, etc.). A real-world, end-to-end grid network relationship representation will be significantly more complicated than this representation. The intent of this illustration is to show the level of information needed from systems, subsystems (including sensors), support systems such as the network and security infrastructure, etc. Modeling these system-level intricacies combined with modeling the network intricacies are crucial to achieve a realistic digital twin for energy infrastructure. An illustrative information flow, dependencies, and relationships of the physical dimension D-Arc technology stack layers in the context of a power grid is shown in Figure 6. Transfer of data, sequence of activities, and the relationship between system and subsystem models are also shown. For ease of readability, the icons from the D-Arc technology stack are denoted next to respective components.

Figure 5.

Applicability and usability depiction of D-Arc technology stack in a phased approach.

Figure 6.

Information flow, dependencies, and relationships of the physical dimension D-Arc technology stack layers in the context of a power grid operations. For additional details, please see Figure A5.

All information flow and object model diagrams are depicted in this paper are inspired by the Unified Modeling Language (UML) Class diagram standard. The UML class diagrams are largely used in software development processes. Digital twins are hybrid technology with closely coupled hardware and software systems and subsystems. Therefore, the UML class diagram standard is chosen to represent the diagrams. In these diagrams, the rectangular boxes represent classes. Although classes are building blocks of the software-related objects, similar parallel can be drawn to diagrammatically represent the hardware components. In other words, in a software/digital portion of the diagrams, the rectangular objects represent the building blocks of the software/programmatic objects; but in the hardware-related portions of the diagrams, the rectangular boxes represent building blocks of a system or subsystem. Nomenclature of the rectangular box is as follows:

- The top row of the rectangular boxes represents the building block name.

- The middle row of the rectangular boxes represents attributes and potential data types. This representation follows <attribute: datatype> (e.g., System ID: string).

- The bottom half of the rectangular boxes represents methods otherwise known as operations. In the diagrams, one operation is defined per line.

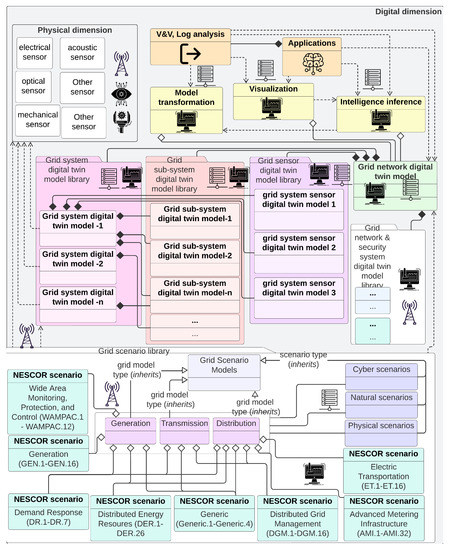

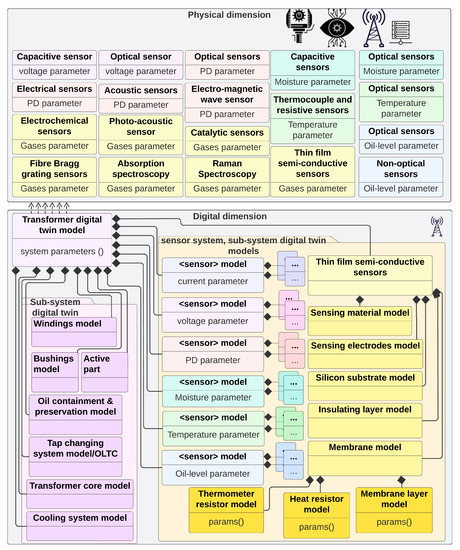

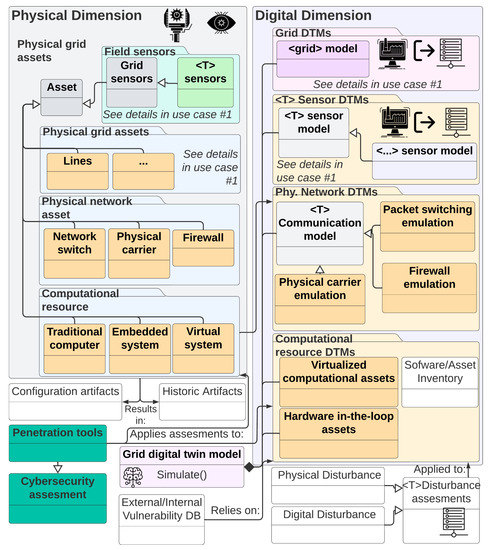

Figure 5, Figure 6, Figure 7 and Figure 8 show illustrative building blocks or classes, attributes, and methods or operations. The purpose here is to use the UML class diagram standard to demonstrate the expandability of D-Arc, generalize the framework, and demonstrate its applicability to Energy sector use cases (Section 5). This fulfills the purpose of demonstrating the usability of the framework and a methodical approach to apply D-Arc for any use case in the depicted fashion.

Figure 7.

Information flow, dependencies, and relationships of the digital dimension D-Arc technology stack layers in the context of a NESCOR scenarios. For added detail, please see Figure A6.

Figure 8.

Illustration of the D-Arc and UML sequence flow process using Transformer system as an example. For added detail, please see Figure A7.

Figure 6 is being used to illustrate the following: (1) physical elements of a potential grid system; (2) peripheral components that may interact with the grid system; (3) network and security system subelements; (4) expected means of interactions between those systems through middleware; (5) depiction of how these systems belong to the power grid and require accurate digital twin representation; (6) expected human interaction with the systems and potential human/user classes or categories; (7) data artifacts and elements that would define a dataset; and finally, (8) the relationship between everything above in a physical dimension to the digital dimension. Although Figure 6 is used for illustration proposes, it is intended to show how the relationship diagrams can be architected using D-Arc prior to building the digital twins. Lack of understanding of such interdependencies and relationships will result in an unrealistic set of digital twins that cannot contribute to any intended high-value use cases.

Note that the purpose behind defining methods in the physical dimension’s UML entity class elements is to depict three important possibilities: (1) there may be system-level software methods that are responsible for the attribute’s definition; (2) there may be peripheral or interfacing software components (e.g., through API/middleware) that would be responsible for the attribute’s definition; and (3) it may indicate that, in the absence of physical system’s inherent or peripheral software components where the identified methods can contribute to the attribute’s definitions, such software development would need to be done as integral to the digital dimension. This is shown in Figure 7 by illustrating the Middleware class object as “crossing the physical dimension boundaries”. This figure provides a deep dive into the digital dimension and is meant to show the probably level of granularity needed in the digital dimension modeling efforts with D-Arc technology stack as reference. In addition to the grid systems, subsystems, sensors, and pertinent grid network model, which includes all of those software models and network, security, and protocol models/representations, Figure 7 also shows an architectural view of the scenario library. This scenario library uses NESCOR failure scenarios [21] as reference to demonstrate the process. Finally, a typical transformer system is used as an example to put all of the above in the context of the complexity that may be involved in creating a transformer DTM. This illustration is shown in Figure 8 and is meant to show the level of granularity that can be obtained for each of the grid systems. To model this example, system design parameters that are identified in [22] are used. The granularity of a system model, however, depends on the available information about the system itself. If the information about a physical system is not readily available, some assumptions about the subsystem relationship should be made that could result in behavior discrepancies between the physical system and digital twin.

In summary, the above sequence of illustrations are meant to visualize the end-to-end process involved in the design, development, and deployment of a power system and power grid network digital twins through UML sequence diagrams. In the process, the illustrations are offered to show the operational nature of the D-Arc technology stack and provide a method that can be applied to any grid system digital twin design.

4.3. Use of D-Arc Technology Stack

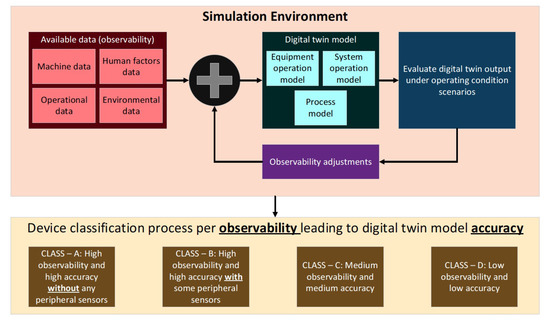

D-Arc can be used to develop DTMs in a sequential process that spans across the following phases (see Figure 9): (1) design and development; (2) build and intelligence extraction; (3) verification, validation, and testing; and (4) delivery, deployment, and field analysis.

Figure 9.

Applicability and usability depiction of D-Arc technology stack in a phased approach.

- Design and development: The first phase of DTM development spans across the infrastructure, peripheral, communication, and DTM D-Arc layers. This phase involves identifying system components, data, communication systems, and sensors to address data gaps (note that the data gaps may vary for different right-sized archetype models that can be designed). Furthermore, key differences regarding calibrated data versus uncalibrated data should be considered. This phase pertains to aggregating machine data, human factors data, operational data, and environmental data using built-in and peripheral sensors (e.g., Cymbiote and SerialTap). This phase also corresponds to converting the analog outputs from the system to digital form to feed into the DTM using systems such as VOLTTRON and SerialTap.

- Build and intelligence extraction: This phase spans across D-Arc’s integration and process layers and corresponds to model and output layers. This phase is largely focused on the DTM development process. Connected equipment operations, system operations, and system processes will be part of the digital twin. In this phase, data from the previous phase will be used by the DTM to run its processes.

- Verification, validation, and testing: This phase spans across D-Arc’s model and output layers. DTMs from the model library will be simulated through test cases to evaluate behavior under anomalous and data-constrained conditions (“what ifs”). Using those tests, behavioral accuracy of the DTMs will be evaluated and error rate, operating boundaries based on available data, minimum and maximum data needs, etc. will be determined. DTM outputs will be compared against physical system outputs to evaluate the performance and accuracy of the DTM and to optimize it.

- Delivery, deployment, and field analysis: This phase spans across D-Arc’s output and application layers. AI/ML forecasting, detection, prediction systems, etc. will be part of this layer. Data output from the DTM will be fed into AI/ML software. Testing and verification procedures from the previous phase will be used to perform boundary testing on the AI/ML software. Integrated testing between the previous and current phase will involve development of estimation or approximation software systems to fill the data gaps and attempt to fulfill the data requirements.

The remainder of this section illustrates a mechanism of applying D-Arc to a digital twin proof-of-concept simulation. The purpose of this illustrative approach is to present a reasonable methodology that can scale and mature over time based on data availability for the digital twins to grow. Furthermore, the approach below also presents a method to classify the digital twins based on factors such as observability, accuracy, and the availability of sensors/data acquisition systems, both peripheral and integral to the physical system.

- Step 1: Initially, a proof-of-concept quasi-DTM of a physical system/subsystem should be developed. The model is referred to as “quasi” because the model, in the current initial state, can only involve mathematical and physics-driven process approximations bounded to the simulation engine instead of an accurate digital replica of a physical system in production.

- Step 2: Data generated in the simulation engine can be used to drive the quasi-DTM. Data generation and availability are categorized as “from system” and “from peripheral sensors” as the data feeds into the model. In this process, the quasidigital twin would be simulated with total observability to capture the outputs and should be used as a simulation baseline.

- Step 3: Data availability should be adjusted followed by the simulation of the DTM. The DTM output under varying data consistency conditions should be compared against the baseline to estimate data requirement boundaries (minimum and maximum data required). Parameters such as error rate can be calculated in this step.

- Step 4: Based on the above simulation loop, four or more device classifications can be defined as shown in Figure 10. Data availability and DTM output accuracy are among the leading factors that should be used to determine the class of the physical system. Through this classification, the digital twin architect/designer will be able to determine the “true value” of a digital twin in their environment. Furthermore, they can determine the needed peripheral systems to deploy to improve DTM accuracy based on return on investment. The proposed classification system allows the digital twin architect to understand the limitations and define different archetype frameworks that can be customized. In retrospect,

Figure 10. Quasi-DTM in simulation environment for device classifications.

Figure 10. Quasi-DTM in simulation environment for device classifications.- (a)

- advanced/latest systems with built-in sensing capabilities fall under class A.

- (b)

- systems with a mix of built-in sensing and the ability to interface with peripheral sensors fall under class B.

- (c)

- older systems with limited built-in sensing and limited ability to interface with peripheral sensors will fall under class C.

- (d)

- legacy systems with least data availability and inability to interface with peripherals will fall under class D.

The D-Arc technology stack is architected to be vendor agnostic. Therefore, irrespective of using any available commercial-off-the-shelf platforms/software tools or custom-built tools to build digital twins [18,23,24,25,26,27], the stack can be used to methodically build, use, and transfer the models between platforms as needed.

4.4. Model Accuracy Implications and Considerations

In the world of digital twins, the accuracy in behavior of digital artifacts versus physical artifacts is crucial. Building on the D-Arc stack discussed earlier, factors that would impact the accuracy should be estimated. This estimation can be computed by determining the contextual losses in between the real-world system outputs versus the behavior calculated by the digital artifact. This will be mostly influenced by the digital model’s fidelity among other factors, but it will also be influenced by the physical system’s ability to accurately capture and represent the real-world state. For example, the data generated from a sensor may go through several stages of processing and handling before it reaches the digital twin model, with each stage resulting in some contextual loss. Depending on where the artifact belongs in the D-Arc stack, factors that influence the contextual losses and the artifact accuracy will vary. Detailed analysis of such mathematical relationships is beyond the scope of this paper and will be strongly considered for future work. However, we will briefly define the above mathematical relationship for a set of preconceived conditions.

Theorem definition: The artifact accuracy (A) is a function of the contextual loss (C) (see Equation (1)).

Generally speaking, can represent a combination of linear, nonlinear, continuous, or discontinuous functions that reflect on the model’s accuracy, simulation operational range, and ability to capture real-world data (e.g., bandwidth and quantization limits). An example of a nonlinear function is given in Equation (2).

where is the displacement or proportionality factor and x is a nonlinearity factor. Both and x are used to represent the nonlinear relationship between A and C. Note that for , A and C bear a linear relationship and a nonlinear relation when . For the sake of simplicity, we can assume that is a linear function and thus:

where is the displacement or proportionality factor and used to represent the nonlinear relationship the nonlinear relationship between A and C.

Theorem analysis: Mathematical representation of the above definition when applied to the D-Arc stack is as follows (see Table A3 for additional explanation):

Assume that the artifact accuracy at a given layer is denoted as where represents an artifact at a given stack layer L. Within the layer or between the layers, every transformation of will result in a contextual loss pertaining to at layer L: . This relationship is defined as follows:

where i and j represent the artifact transformation in horizontal (e.g., within layer L) and vertical (between layers) transformations.

Note: In Equation (10), the context losses due to model limitations could be additive, multiplicative, or other combinatorial forms. Comparisons between different combinatorial forms is beyond the scope of this paper and will be evaluated in future work. For the sake of simplicity, the rest of this section will assume that losses can be computed using an additive approach. Therefore, the expanded form of the artifact accuracy can be written as follows:

If , will be at its maximum value (≈). Therefore, the objective to achieve during artifact transformations is to minimize the losses—this is represented as follows:

The aforementioned equation clarifies the relationship between i, j, and L by further representing L as two components:

where is any transformation of an artifact.

As indicated earlier, the artifact transformation is influenced by varying factors in a layer or between the layers. For, instance, factors pertinent to infrastructure, peripheral, and communication layers include network throughput (N), data sampling rate (S), and latency (Y). With those three factors in scope, their relationship with and for N is as follows:

In other words, if the network throughput is lower than the necessary throughput to accommodate the instrumentation, the losses could increase due to potential loss of data/packets. Therefore, the relationship between the network throughput and the losses is inversely proportional.

Losses due to insufficient network throughput are as follows:

where is a proportionality factor and is the maximum possible threshold beyond which improving N can no longer minimize . Therefore, another relationship for N can be shown as:

- ⊳

- Value of N to is unchanged for

- ⊳

- Value of N to is at maximum for

Because of the inverse proportionality relationship between N and , N is related to the artifact accuracy as follows:

Similarly, the relationship between S and , is as follows:

- ⊳

- Value of S to is unchanged for

- ⊳

- Value of S to is at maximum for

The relationship between Y and , is slightly different and is as follows:

- ⊳

- Value of Y to is the maximum for

Unlike network throughput and data sampling rate, latency should be lower to minimize losses. A lower latency implies lower losses while a higher latency leads to higher losses. Therefore, the relationship between latency and losses is directly proportional.

Note that the above relationship illustrations are to demonstrate that the artifact accuracy and contextual loss calculations would involve analyzing individual factors. The computations or mathematical representations of such influencing factors may significantly vary as compared to the above relationships. As indicated previously, the presented mathematical analysis is in early stages and a detailed analysis will be the focus of future research.

The relationship between model sufficiency is directly proportional to the artifact accuracy.

Model sufficiency depends on the physical instrumentation from which the data are acquired. Therefore, the built-in and peripheral instrumentation of a device or system serves as a strong dependency for model sufficiency. Such hardware dependency analysis might have to be performed based on the measurands, types (or classes) of sensors, and system characteristics. These analyses could be used to identify conditions where either an insufficient number of sensors exist or too many sensors report on the same measurand (e.g., sensor overcrowding). Sensor classes could include acoustic, thermal, vibration, frequency, electrical (voltage and current), mechanical, etc. Mathematically, this can be estimated as follows:

For a given set of sensors/instrumentation, if is achieved, further increase in instrumentation will result in diminishing returns. If is not achieved, additional peripheral instrumentation can be added to increase local observability data until is achieved.

The above logic can also be understood by using a more traditional approach in control systems theory. Specifically, the observability test requires that sensors are placed in such a way that a nonsingular determinant exists. Putting it in simpler terms, there should be enough sensors to assemble an independent set of vector spaces that make the system observable. If the vector spaces are repeated (e.g., due to sensor overcrowding), no additional information will be gained, while empty vector spaces will yield unobservable states until specific sensors are installed within the problem area to fill this void.

4.5. Cybersecurity Implication and Consideration

Security risks: Hearn et al. [28] emphasizes the criticality of securing the digital twin’s platform and its software for the safety of digital twins and the corresponding physical system. An example of a security gap between the digital twin and physical system could be the presence of an advanced security feature such as securing the microcontrollers in the hardware of the physical system but absence of it in the digital twin platform. Another security concern is the ability of an adversary to obtain access to the digital twin. The digital twin will serve as a blueprint of the physical system, enabling the adversary to not only learn the piping and instrumentation diagrams but also to use that knowledge to craft and execute a tailored or targeted attack on the physical system. Compromising a digital twin exposes the organization to backend system attacks through the server [29]. Code analysis of the API calls will enable the hacker to deliver backend systems attack. Remainder of this section will present various steps to securing digital twin implementation.

Security mitigations: Begin with a clear and well-designed secure software development lifecycle management process that includes all aspects of the lifecycle. The security requirements should be defined prior to design and development. To maintain security of the software source code, the organization must automate a few processes such as (1) peer code review, (2) secure coding methodology, (3) good repository control to scan source code for language conformance, (4) flaws and known vulnerabilities, and (5) open-source compliance to organization policy.

Software and data can be locked to specific devices (computers) through data and copy protection technologies and hardening APIs. In regard to security of the overall digital twin development process, digital twins, peripheral applications, and securing the data while at rest, in transit, and in use are some considerations and requirements.

- Perform vulnerability analysis and threat modeling on the software systems and models.

- Compute and identify the qualitative and quantitative risks associated with digital twin and peripheral software deployments. Architect the vulnerability and risk mitigations.

- Identify the overall cybersecurity posture of the facility with and without digital twin deployments. Based on the comparative analysis, take necessary measures to minimize the attack surface. For example, use the outcomes of self-assessment tools to drive system- and network-level actions.

- Identify the threat actors or related information that can exploit digital twins (for example, Diamond model and MITE ATT&CK ICS matrix).

- Perform a consequence-based analysis on the twins to identify critical areas of concern. For example, to perform various scenario-based testing and analysis on the twin, and not risk damaging the hardware equivalent, first identify the resilience and security scenarios that could impact the hardware systems, then run those scenarios on the twin. In the process, identify the consequences in a situation where the twin is corrupted. Another critical aspect is to ensure that data at rest, in transit, and in use are secure.

- Ensure the communications/interactions and interoperability between two or more digital twins are consistent with their hardware counterparts. Simplifying protocol-based communications in the digital world will result in missing critical gaps that need to be addressed.

For secure development and implementation, NIST guidance [30,31] that includes testing and experimental analysis should be used throughout the development lifecycle.

5. Data Orchestration for Digital Twins

Among all the D-Arc technology stack layers discussed in the previous section, the inherent nature of the data orchestration process is shown through its vertical alignment and depiction in Figure 4. Data orchestration can be defined [32] as automation of data-driven processes from end to end. This section focuses on a technical deep-dive of the data orchestration process.

As discussed previously, the physical dimension components that involve physical/hardware electromechanical systems and associated sensors are connected to the digital dimension through the communication architecture. The OSI reference model can be used to architect the network and pertaining cybersecurity measures depending on the sensors involved and the method of communication used.

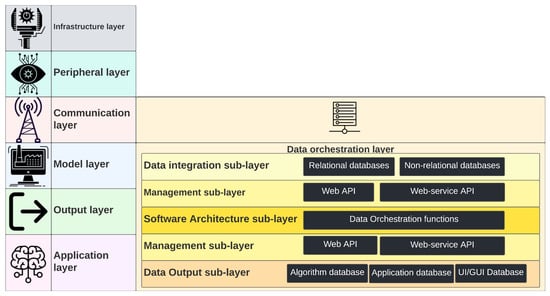

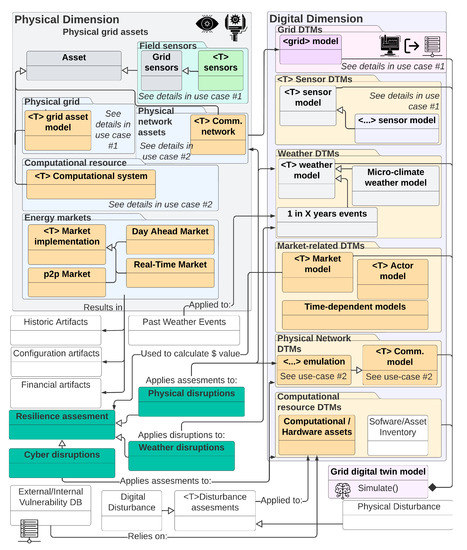

Data orchestration is the only vertical layer within the digital dimension that can be further broken down into five sublayers (see Figure 11): (1) data integration, (2) management, (3) software architecture, (4) management-II, and (5) data output. The data flows through these sublayers as part of processing and filtering (discussed in later sections). Finally, the DTM and other layers consume the data to perform data-driven actions.

Figure 11.

Inherent sublayers of the data orchestration layer and associated processes.

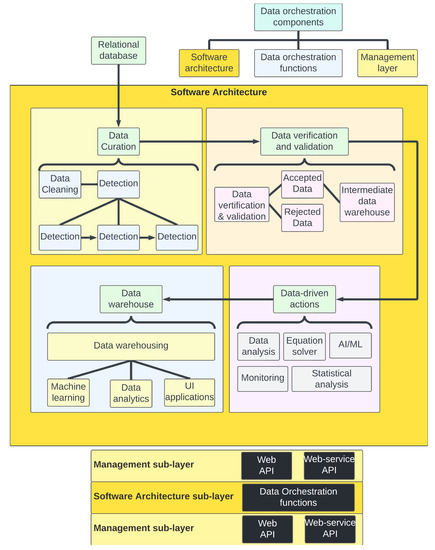

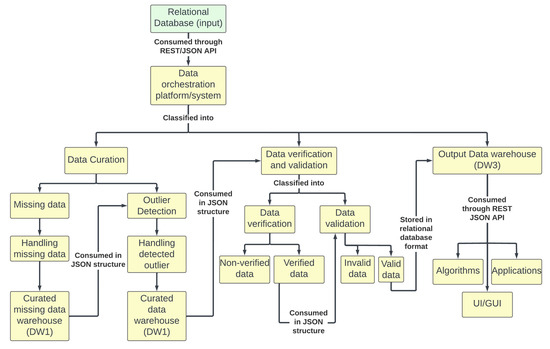

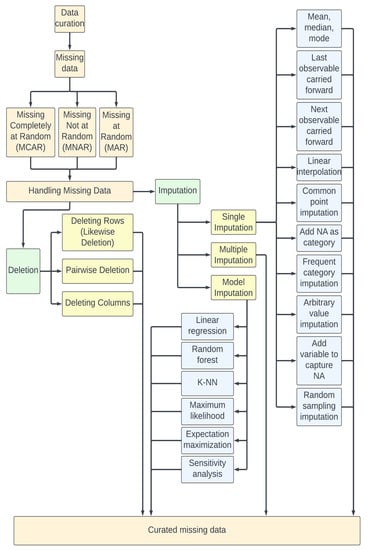

The complete process of data orchestration is sequentially represented in Figure 12. As shown in the figure, the three main sequential components are (1) data preparation or curation, (2) data verification and validation, and (3) data-driven actions. Although data warehousing is shown at the end, warehousing could serve at an intermediate level (between the three components); therefore, data orchestration may involve multiple data warehouses.

Figure 12.

Sequential representation of data orchestration process.

Data preparation pertains to curation before the data go through validation and are stored in a data warehouse. A commonly agreed-upon, functionality-based definition of a data warehouse is that it centralizes and consolidates large amounts of heterogeneous data from multiple sources [33].

Data verification and validation involves the decision-making process to determine whether to accept or reject the curated data, followed by storing the accepted data in another data warehouse.

Data-driven actions are the operations that can be conducted on the accepted data procured from the data warehouse based on the user needs.

As discussed earlier, the goals of the data orchestration process are to make data more accessible to DTMs for compute and mathematical modeling, and to provide data to the data-driven peripheral applications to perform data-driven actions. The data themselves might originate from the electromechanical systems or ICS and may use cloud or open-source platforms (see Figure 13). See Appendix A for a deep dive on the data orchestration processes. Since data orchestration is not a new field, the authors provided pertinent details in Appendix A.

Figure 13.

Data orchestration goals.

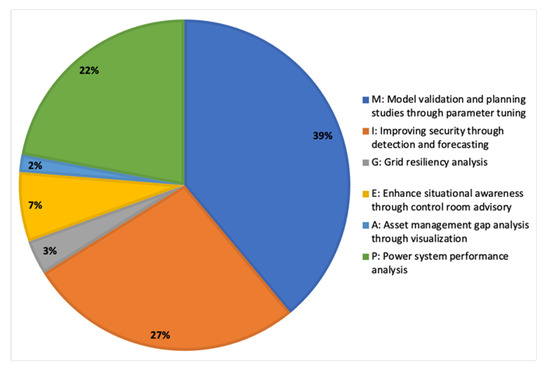

6. Digital Twin Use Cases

Digital twin has applications in multiple sectors such as energy, critical manufacturing, supply chain, etc. Since the focus of this paper has been energy sector applications, this section identifies some critical use cases and pertinent value propositions. Digital twin has the potential to assist the energy sector stakeholders (utilities, transmission owner, reliability coordinator, etc.) to understand how the grid is performing in real time or near real time. Such in-depth analysis could help stakeholders achieve situational awareness and evaluate change settings and impact in virtualized environments to achieve optimal efficiency and help future planning. Some high-level power grid applications are described below that can be supported by digital twins in the future. Table 1 maps the use cases to the D-Arc framework at a high level. This section focuses on six high-value energy sector use cases. However, the application of digital twins and D-Arc to the energy sector is beyond these six use cases. A wider set of relevant use cases that can use D-Arc are depicted in Appendix B.

Table 1.

Mapping power grid DT use case with DT D0.

6.1. Use Case 1: Model Validation and Planning Studies through Parameter Tuning

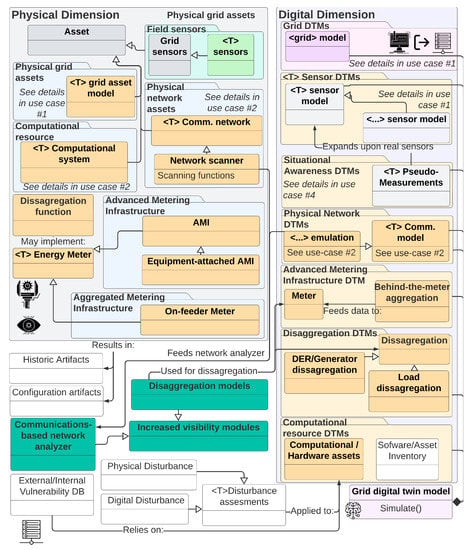

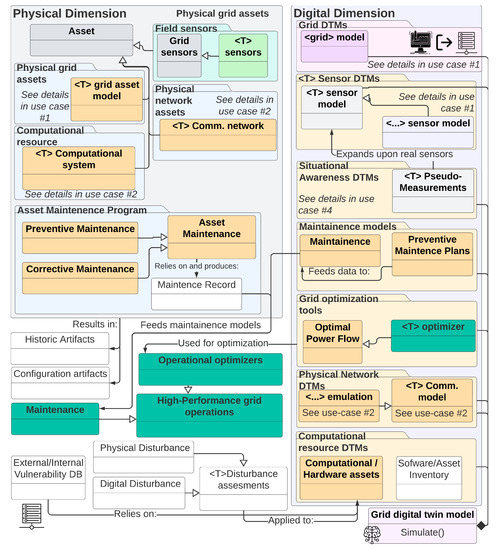

The electrical grid relies on physical infrastructure to transport and distribute energy across its service area. Although much of this infrastructure is static in nature (e.g., lines and transformers), a mixture of actuators and control mechanisms work together to dynamically change the behavior depending on the operational needs (see Figure 14). These decisions depend on the sensor’s reported data, the control logic, and the network models. It is imperative that grid operators have accurate network models that can represent a wide range of operational scenarios (e.g., emergency vs. normal conditions). However, this is not always possible due to a multitude of issues that range from incorrect or obsolete asset parameters to a lack of mathematical models. Bad network models have been in part responsible for events such as the 1996 Western Interconnect blackout [34] and can routinely lead to cases where engineers unintentionally operate the grid in an insecure state. To mitigate these risks, digital twin models can be used to constantly compare the physical grid state against a simulated world state. At a minimum, grid components should be able to replicate the steady-state properties, but more advanced use cases could benefit from being able to replicate the system behavior when frequency or weather conditions change. Relevant asset parameters will be dependent on the simulation engine being used, but could range from those used in power flow studies [35,36] to those used for electromagnetic event modeling [37,38]. In addition to the improved grid models, sensor-level digital twins can be used to ensure that field measurements are consistent with past and current behavior (e.g., by implementing event recorders). It is expected that with these types of DTMs, engineers will be able to assert the validity of future expansion studies. They will also be able to assert the system behavior when abnormal or emergency operational conditions arise (e.g., by relying on hypothetical disturbance scenarios). For instance, engineers will be able to simulate the real-world implications of deploying microgrids and connecting to privately owned DER farms, and utility DER deployments for energy management studies (see [39]) as well as being able to evaluate the system’s resiliency after a significant weather event occurs (e.g., a hurricane or wildfire).

Figure 14.

Information flow and object modeling for use case 1. For added detail, please see Figure A8.

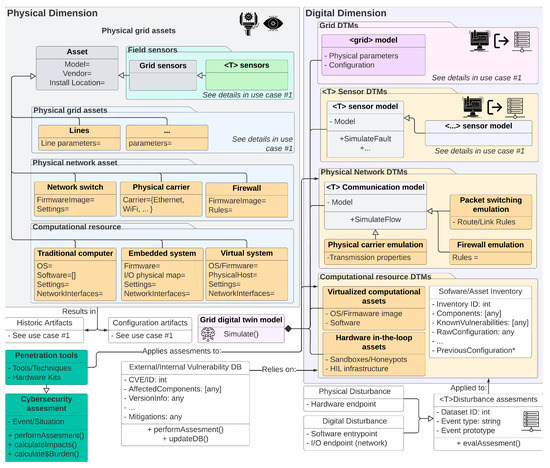

6.2. Use Case 2: Improving Security through Detection and Forecasting

By definition, and as described in use case 1, digital twins for the power grid are required (and expected) to collect and compare data from different grid sensors and the underlying physical infrastructure in real-time. However, the modern grid is also dependent on its interactions with information and communications technology assets. As such, it becomes necessary for system operators to know and understand the digital equipment attached to the grid (e.g., digital devices, network links, and the software that runs on top of them) to attain a holistic system view. It is important to note that these devices have become so ubiquitous in our modern world that their presence may be ignored, but include assets such as digital protection devices, automated/smart meter readers, digital sensors, digital actuators, dedicated control systems, and remote-control room systems. In addition to their ubiquity, the majority (if not all) of these devices may be vulnerable to cyberattacks that could lead to physical impacts. To better understand these interactions, a DTM should try to replicate as many of the digital functions and attributes as possible (see Figure 15 for an example architecture). Depending on the extent and depth of the cybersecurity assessments that are taking place, the DTM should mimic:

Figure 15.

Information flow and object modeling for use case 2. For added detail, please see Figure A9.

- Input/output characteristics: These capture the digital and grid interfaces that are used or accessible to the device in question. A DTM should attempt to replicate or at least describe these interactions.

- Digital systems: These may include low-level hardware models or be abstracted in the form of virtual appliances. These systems will typically run firmware or operating systems that dictate their behavior.

- Software components: This includes any piece of software that runs on top of a digital system that can take decisions, process data, and communicate with input/output interfaces. In an ideal DTM, a full replica may be desirable, but if this is not possible, at least up-to-date inventories and vulnerability databases must be maintained.

- Communication paths: These capture the communication topology and can be used to identify topological vulnerabilities (e.g., single points of failure) and entry/exit points (which may be used to deploy an attack).

- Traffic management devices:This includes routers, switches, and any other device that can alter or set a message transversal path.

- Security and monitoring devices: This often includes firewalls but may also include network sniffers and any other systems that help an operator or system to gain visibility.

- Supervisory systems:This may include tools or systems that collect data and perform health evaluations.

Based on the above characteristics, a successful DTM will therefore enable cybersecurity engineers to find vulnerabilities, assert defense strategies, and overall increase the cybersecurity posture of their system.

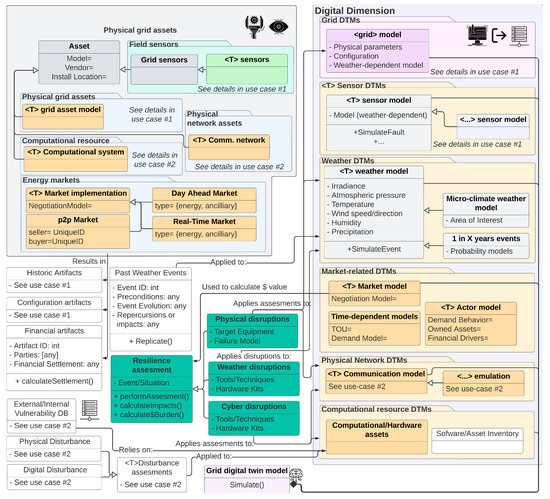

6.3. Use Case 3: Grid Resiliency Analysis:

Based on the capabilities outlined by use cases 1 and 2, it becomes possible for grid operators to simulate a sequence of well-orchestrated cyber and physical events to assess a system’s resiliency attributes. However, analyzing the consequences of natural events (such as those caused by extreme weather conditions) can remain a challenge unless the DTM considers these as part of its model. For this example, Figure 16 adds considerations for analyzing the economic impact of markets due to weather events. This is done by (1) adding historical weather datasets, (2) modifying grid models to account for weather variables, and (3) adding an economic model that captures the market behavior. By adding these two dimensions (one serving as input, while the economic perspective serves as an output), a DTM can help operators to not only plan for the actual outages but also to ensure that tariffs remain acceptable to their customers [40].

Figure 16.

Information flow and object modeling for use case 3. For added detail, please see Figure A10.

The outcomes of such scenarios can then be used to augment the grid’s ability to operate and maintain customer expectations even under severe circumstances. Some countries are already using DTM to do large-scale modeling to study infrastructural enhancement scenarios and implications [41], whereas in [42], they have focused on the energy market studies.

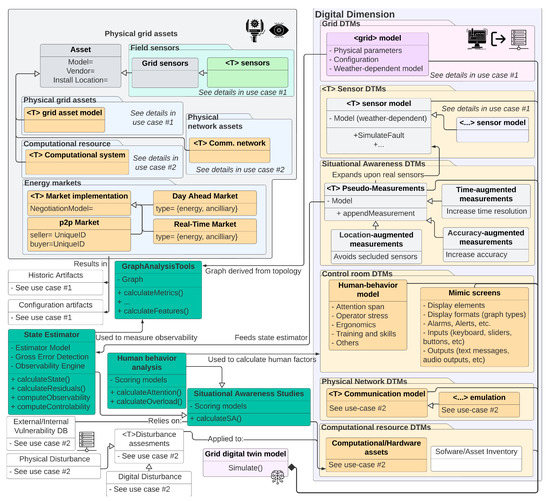

6.4. Use Case 4: Enhance Situational Awareness through Control Room Advisor

Although grid automation has and continues to advance, control center operators are ultimately responsible for a continuous system operation. However, the amount of data they must process to maintain situational awareness sometimes exceeds the limits of the human brain. DTMs could be used to test different techniques and tools to ensure human operators are not overloaded and thus can safely operate the system. Furthermore, techniques under test can be evaluated by automated behavioral models to ensure the results are replicable and remain valid under all circumstances (see Figure 17).

Figure 17.

Information flow and object modeling for use case 4. For added detail, please see Figure A11.

DTMs can also be used to complement existing situation awareness platforms by offering enhanced state estimators and other time or resolution augmentation tools that can eliminate data voids or other artifacts that distract or confuse operators. This, for example, may be used to generate visualizations or diagrams that complement and summarize information (thereby reducing the mental burden).

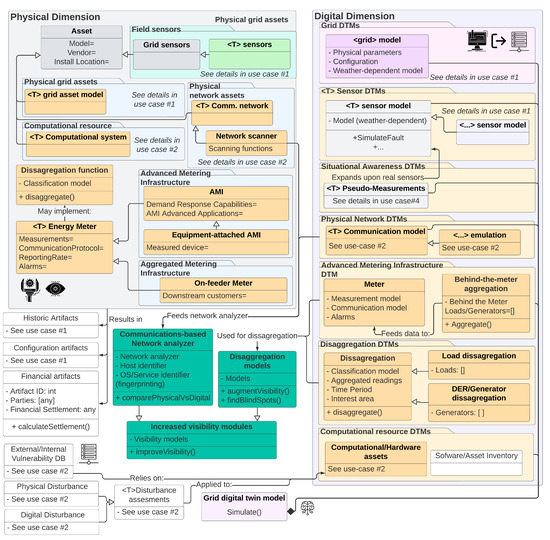

6.5. Use Case 5: Increased Behind-the-Meter Visibility and Digital Asset Management Gap Analysis

Digital twins can also be used to increase system visibility where no data are available due to a lack of sensing capability or technical limitations (e.g., unable to measure a large customer-owned load). This can largely be done by performing measurement disaggregation (via Amazon Machine Image (AMI)-hosted functions or centralized approaches) but can be difficult to generalize when larger behind-the-meter systems are in place (e.g., commercial photovoltaic (PV) deployments). By implementing these functions on a DTM-based platform, engineers can increase their situational awareness and be able to more easily identify areas that require increased visibility and segments of the grid with poor quality measurements, helping them to take physical actions to mitigate these issues. It is important to note that this increase in visibility is not limited to measurements but can also be extended to the physical domain by enabling grid operators to identify missing or unaccounted assets that are present in the field (by comparing reported measurements and doing periodic network scans). This can enable engineers to have a higher degree of certainty about the devices deployed within the service region. An example architecture of such a system is presented in Figure 18.

Figure 18.

Information flow and object modeling for use case 5. For added detail, please see Figure A12.

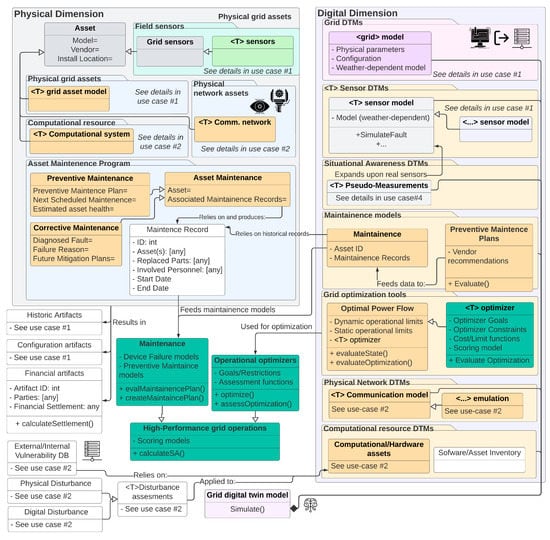

6.6. Use Case 6: Power System Performance Analysis

Based on use cases 1 and 2, it is clear that a digital twin has the ability to support holistic system views, which accurately mimic the system behavior. These features can be used to test new tools and techniques that seek to increase operational performance without having to experience the risks associated with field deployments. Furthermore, it can accelerate the deployment of new or novel algorithms by allowing researchers to safely evaluate new test tools, enabling them to identify shortcomings and improvements early in the design process, and helping them increase their technology readiness levels.

Another benefit of DTMs is their ability to identify faults early on (by evaluating device-level discrepancies). This can be further complemented by adding fault and maintenance data that can be used to develop better preventive maintenance programs that ensure a continuous and reliable grid operation. Although individual applications will need to tailor the design to their needs, Figure 19 illustrates a performance-oriented platform that can be used to optimize system operations and performance activities.

Figure 19.

Information flow and object modeling for use case 6. For added detail, please see Figure A13.

7. Comparative Analysis

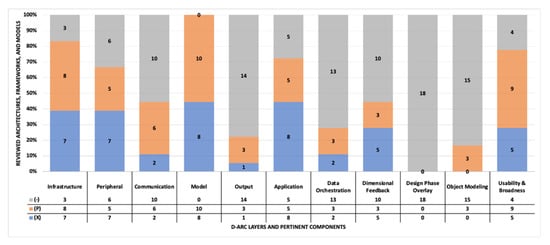

To evaluate and demonstrate the efficacy of D-Arc, the framework is compared against 18 different existing frameworks, architectures, and models (collectively referred to as frameworks for the remainder of this section) that are highly cited or used. The reviewed frameworks are largely focused on Energy and Power use cases with a small percentage applied to additional sectors such as advanced manufacturing. Table A2 shows the comprehensive comparative analysis and pertinent observations. The table is formatted to assign a rating for each of the columns (excluding the final column) that represent D-Arc related components and layers. The final column of the table provides noteworthy observations and justification behind the assigned rating. The legend explaining the ratings for the columns is defined at the bottom of the table. The analysis and data from Table A2 is plotted in Figure 20 for simplified analysis and to provide inferences. According to Figure 20, the bulk of the existing frameworks focused on identifying physical systems and designing their respective digital models, which is reasonable in proof-of-concept and experimental studies. However, thorough analysis combined with the design and relationship definitions are needed at communication, output, and data orchestration layers to build, deploy, and scale real-world digital twins. Furthermore, none of the reviewed frameworks depicted design phases of the digital twin development process that can be reused or replicated by other research groups.

Figure 20.

Comprehensive view of the comparative analysis presented in Table A1.

Another noteworthy observation is that most of the reviewed frameworks did not present information and object modeling. The digital twin development process involves relying on software tools and artifacts; the lack of information/object/data modeling would make it nearly impossible to operationalize a framework or an experiment. Only three of the studied frameworks alluded to some level of object modeling. Ranking across the above factors is used to subjectively estimate the usability of the frameworks outside the scope of the use cases within the 18 papers. Thorough analysis of the frameworks further solidified the need for D-Arc. As discussed in the previous sections, D-Arc is designed to serve as a detailed framework that is complemented by modeling artifacts. These artifacts combined with the framework can be used to ideate, design, and deploy digital twins for any use case. D-Arc itself is not designed with any intended use case except for the driving philosophy to primarily apply D-Arc to the Energy sector and secondarily apply D-Arc to other sectors such as advanced manufacturing and others. In conclusion, D-Arc is compatible with all four levels of digital twins that are described in [43,44]

- Level-1—Predigital Twin: This level involves physics-based simulation without any emphasis on the physical system, supporting applications/GUIs, and machine learning systems. The digital twins that are included in this level would mainly focus on maturing the Model Layer of the D-Arc framework with necessary network connections to the Infrastructure and Peripheral Layers for needed data flows. In terms of D-Arc phases, the modeling process involved in Level-1 digital twins primarily stay within the Design and Development Phase with need-basis emphasis or expansion into the Build & Intelligence Extraction Phase; Verification, Validation, and Testing Phase.

- Level-2—Digital Twin: In addition to physics-based simulation, this level involves thorough understanding and integration with the physical system. Therefore, the digital twins that are included in this level would focus (at least partially) on maturing the Infrastructure, Peripheral, Communication, Model, and Data Orchestration Layers. In terms of D-Arc phases, the modeling process involved in Level-2 digital twins mainly stay within the Design and Development Phase; Build & Intelligence Extraction Phase with need-basis emphasis or expansion into the Verification, Validation, and Testing Phase.

- Level-3—Adaptive Digital Twin: The digital twins in this this level would emphasize everything a Level-2 digital twin would emphasize, as well as fully maturing Data Orchestration, Output Layers and Dashboards/GUIs related components under the Application Layer. In terms of D-Arc phases, the modeling process involved in Level-3 digital twins primarily stay within the Design and Development Phase; Build & Intelligence Extraction Phase; Verification, Validation, and Testing Phase with limited emphasis or expansion into the Delivery, Deployment, and Field Analysis Phase.

- Level-4—Intelligent Digital twins: To achieve the digital twins that are included in this level, elements from all layers of the D-Arc framework should be highly matured (including the design of matured machine learning components). In terms of D-Arc phases, the modeling process involved in Level-4 digital twins would go through all four phases of the D-Arc framework (i.e., a complete end-to-end digital twin).

8. Potential Challenges and Limitations with Digital Twins and D-Arc

The concept of digital twins has promising benefits in rapidly evolving energy infrastructures. However, building a true digital twin is nontrivial. There is existing literature where researchers synonymously used granular modeling as digital twins. However, based on the technical details and justification discussed in this paper, for a physics-based granular model to be a called a digital twin, the twin should have some level of (near) real-time interaction with the physical systems. As the technology and its applications evolve, there are several noteworthy challenges that should be addressed with the digital twin development:

- Digital twins could be subject to cyberphysical attacks such as denial of service, false data injection, or network infrastructure attacks leading to man-in-the-middle or adversary-in-the-middle attacks, etc. [43].

- It is not uncommon for energy utilities to have some differences between planning and operational grid models. Moreover, feeder maintenance efforts are always ongoing. In such changing ecosystems, it can be problematic to ensure that the planning and operational models are synchronized in real time. This challenge extends to the digital twin modeling of the grid feeder because it is often a common practice to use planning models or “near-to-real” operational models for simulation and modeling efforts including digital twins.

- Digital twin development and pertinent end-to-end processes would involve large datasets. To ensure (near) real-time synchronization between the digital twins and physical systems, data processing and orchestration, data transfer and handling should happen at a high speed with minimal temporal overhead. This may involve relying on cloud infrastructure or expensive on-premises infrastructure that support extremely low latency and high throughput. In addition to security of the digital twins, it is important to ensure the security of physical systems. Adversaries could compromise a subsystem and laterally move into more critical systems. If the physical infrastructure is compromised, the integrity and usability of the digital twin outputs can be compromised. Furthermore, strict data handling processes should be in place because the exchange of information between the physical systems and the digital twin, including the feeder models, may be deemed as business critical/sensitive information. Therefore, data and model confidentiality are of high importance [45].

D-Arc framework is designed from a technological/engineering perspective with very minimal emphasis on nontechnical aspects such as correlation to business needs. Here are the noteworthy limitations of D-Arc, and future work will involve addressing these limitations:

- Lack of governance layer: In its current state, D-Arc does not have a mechanism to incorporate governance rules and policies. This will be considered in D-Arc’s expansion efforts.

- Lack of business and social layers: Social/societal implications and correlation to business needs/policies are not defined in D-Arc. These may be nontechnical in nature, but it is vital to build and deploy digital twins that solve specific organizational needs and risks.

- Lack of technology/tool mapping across layers: The current state of D-Arc is technology-agnostic and does not emphasize or recommend a particular open-source or proprietary solution. A potentially useful expansion of D-Arc could map verified existing tools and technologies that can support achieving goals/requirements across each of the D-Arc layers and design phases. The risk associated with addressing the tool mapping limitation is that such mapping lists become outdated quickly.

- Cybersecurity testing: An important next step for D-Arc involves thorough cyberphysical security and cyber and physical resiliency testing using security frameworks such as MITRE ATT&CK. Such an exercise can assist in evaluating the resiliency and security of the framework and respective use cases that leverage the framework. Such an exploration would be followed by a list of security and resiliency mitigations/guidance.