A Data-Driven Kernel Principal Component Analysis–Bagging–Gaussian Mixture Regression Framework for Pulverizer Soft Sensors Using Reduced Dimensions and Ensemble Learning

Abstract

1. Introduction

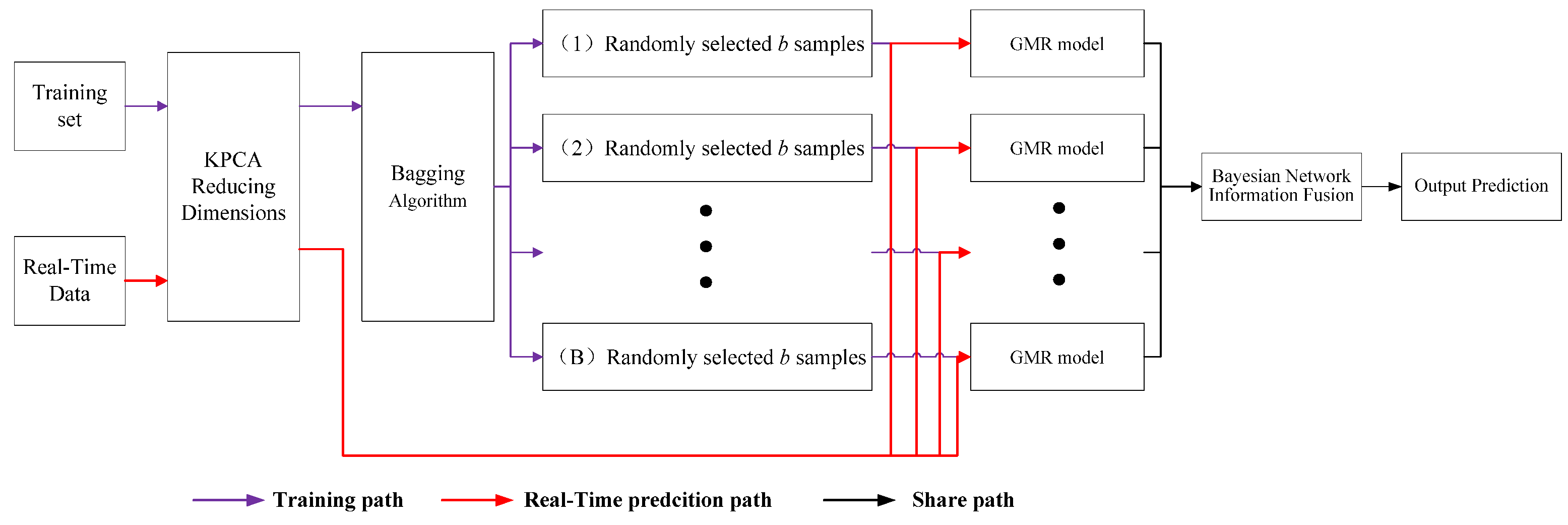

2. Principle and Method

2.1. Dimensionality Reduction with KPCA

2.2. Bagging Algorithm

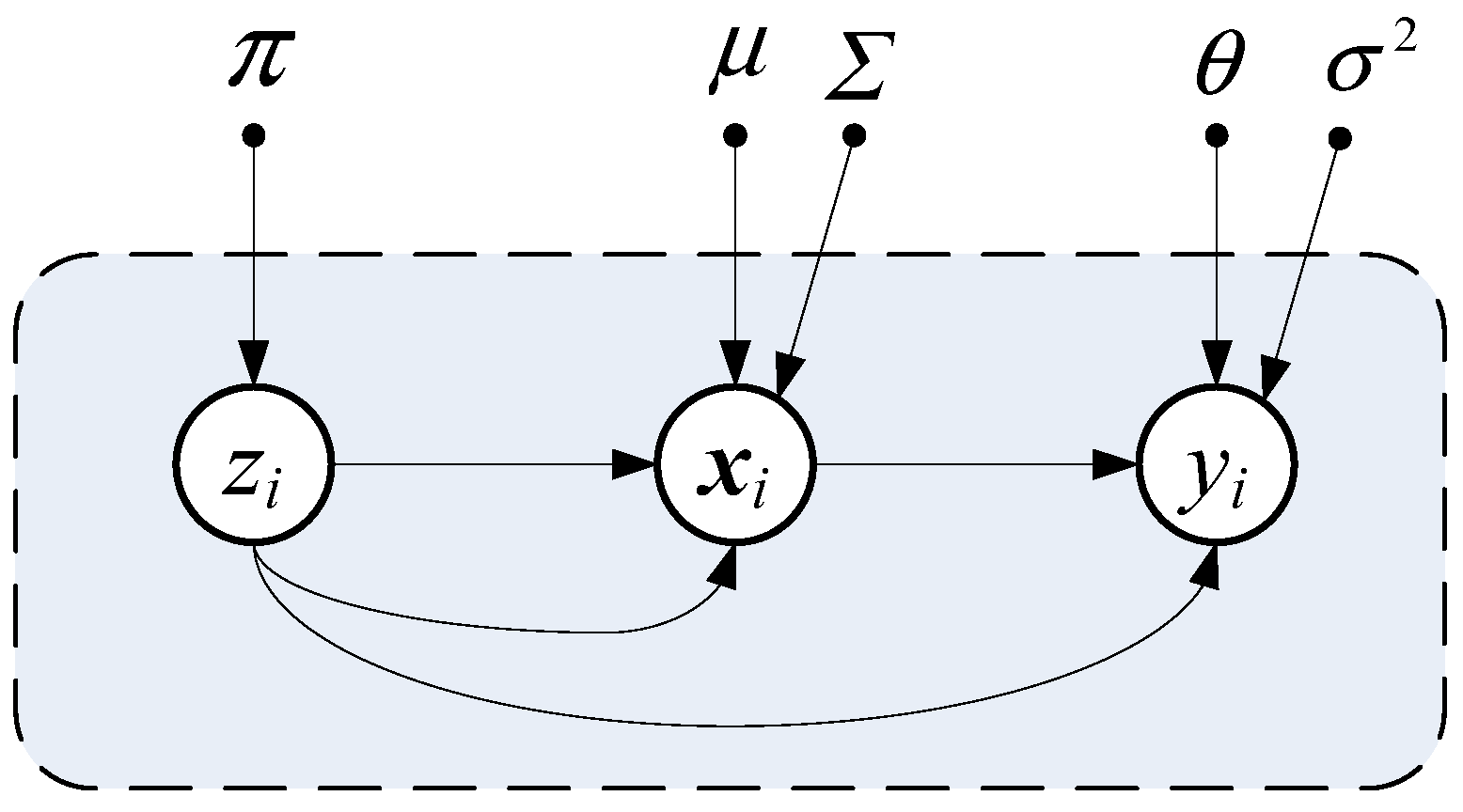

2.3. Gaussian Mixture Regression Model

2.4. Bayesian Network Information Fusion

3. Steps Based on KPCA–Bagging–GMR

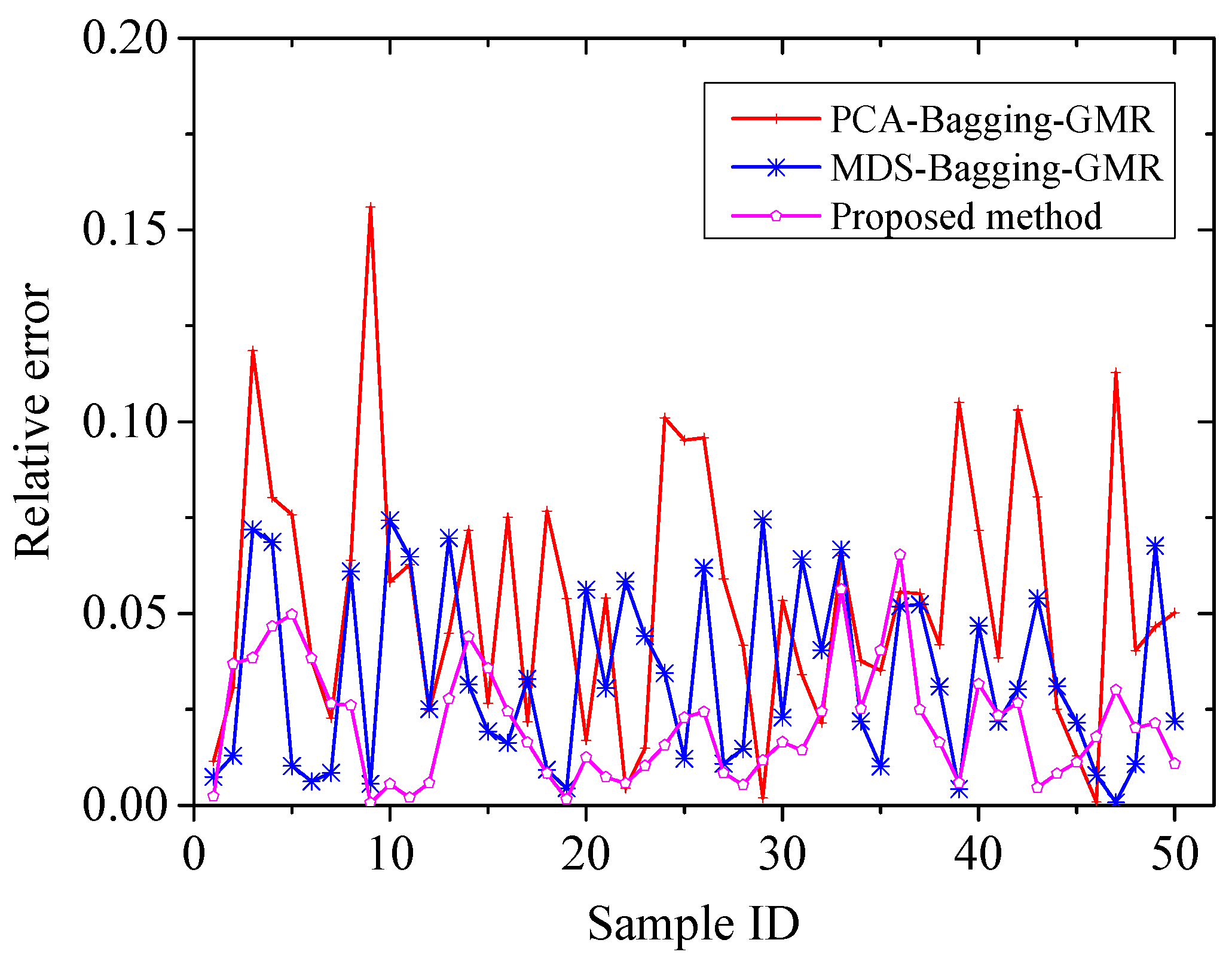

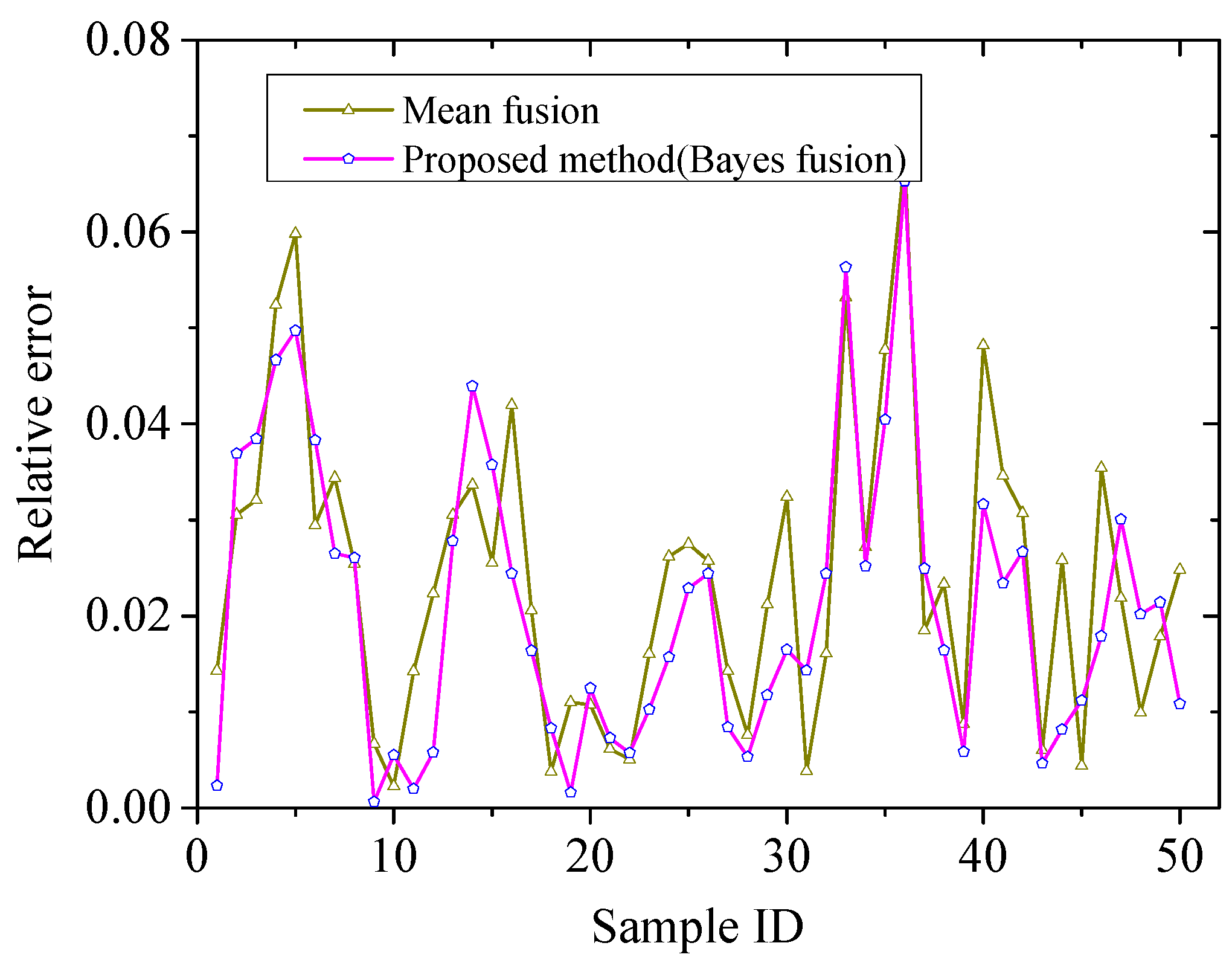

4. Results and Discussion

4.1. Research Object

4.2. Simulation Experiment of Pulverizer

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Location | Auxiliary Variables |
|---|---|
| Pulverizer Inlet | inlet primary air flow, inlet primary air pressure, inlet primary air temperature, and coal feed |
| Pulverizer Outlet | separator air powder mixture temperature and separator outlet pressure |
| Pulverizer classifying | seal air and primary air pressure difference |
| Hydraulic power unit | loaded oil pressure and hydraulic oil temperature |
| Pulverizer motor | motor bearing temperature and thrust bearing oil groove oil temperature |
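The eleven auxiliary variables in the table above form the input vector of the soft sensor. As a minimal sketch of the resampling step behind the bagging algorithm of Section 2.2, the code below draws bootstrap index sets (sampling rows with replacement) and lists the out-of-bag rows each base learner never sees. The snake_case variable names, the function names, and the sample counts are illustrative assumptions, not identifiers or settings taken from the paper.

```python
import random

# Hypothetical column labels for the eleven auxiliary variables in the table.
AUXILIARY_VARIABLES = [
    "inlet_primary_air_flow",
    "inlet_primary_air_pressure",
    "inlet_primary_air_temperature",
    "coal_feed",
    "separator_mixture_temperature",
    "separator_outlet_pressure",
    "seal_air_primary_air_pressure_difference",
    "loaded_oil_pressure",
    "hydraulic_oil_temperature",
    "motor_bearing_temperature",
    "thrust_bearing_oil_groove_oil_temperature",
]


def bootstrap_indices(n_samples, n_learners, seed=0):
    """Return one list of row indices per base learner, drawn with replacement."""
    rng = random.Random(seed)
    return [
        [rng.randrange(n_samples) for _ in range(n_samples)]
        for _ in range(n_learners)
    ]


def out_of_bag(indices, n_samples):
    """Return the rows that a given bootstrap sample never selected."""
    drawn = set(indices)
    return [i for i in range(n_samples) if i not in drawn]


# Illustrative sizes only: 200 training rows, 10 base learners.
bags = bootstrap_indices(n_samples=200, n_learners=10, seed=42)
for k, bag in enumerate(bags[:3]):
    print(f"learner {k}: {len(set(bag))} distinct rows, "
          f"{len(out_of_bag(bag, 200))} out-of-bag rows")
```

Each index list would select the rows used to fit one base model, and the out-of-bag rows give a held-out set for checking that model without a separate validation split.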

| Number | I | II | III | IV | V |
|---|---|---|---|---|---|
| Modeling approach | KPCA–GMR | PCA–Bagging–GMR | MDS–Bagging–GMR | KPCA–Bagging–GMR | Proposed Method |
| Fusion mode | Bayes fusion | Bayes fusion | Bayes fusion | Mean fusion | Bayes fusion |
| RSEM | 2.6667 | 4.7652 | 2.7653 | 0.6979 | 0.6045 |
| COR | 91.34% | 85.23% | 78.45% | 99.34% | 99.12% |
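Schemes IV and V in the table above differ only in how the base-learner outputs are combined. The sketch below contrasts an equal-weight mean with inverse-variance weighting, which is the posterior mean for independent Gaussian estimates under a flat prior. It is a simple stand-in for a Bayes-style combination and is not claimed to be the fusion rule of Section 2.4; the function names, the assumption that each base learner reports a predictive variance, and the numbers are all illustrative.

```python
from statistics import fmean


def mean_fusion(predictions):
    """Combine base-learner outputs with equal weights."""
    return fmean(predictions)


def precision_weighted_fusion(predictions, variances):
    """Combine Gaussian estimates by inverse-variance weighting.

    Assumption: every base learner supplies a positive predictive variance.
    Returns the fused estimate and its variance.
    """
    if len(predictions) != len(variances):
        raise ValueError("predictions and variances must have the same length")
    if any(v <= 0 for v in variances):
        raise ValueError("variances must be positive")
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    fused = sum(w * p for w, p in zip(weights, predictions)) / total
    return fused, 1.0 / total


# Made-up outputs of three base learners; the third is the least certain.
preds = [10.0, 12.0, 20.0]
variances = [1.0, 1.0, 4.0]

print("mean fusion:", mean_fusion(preds))
print("precision-weighted fusion:", precision_weighted_fusion(preds, variances))
```

With these made-up inputs the equal-weight mean is pulled toward the uncertain third learner, while the weighted estimate stays closer to the two confident ones. That is the general motivation for weighting by confidence rather than averaging.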


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jin, S.; Si, F.; Dong, Y.; Ren, S. A Data-Driven Kernel Principal Component Analysis–Bagging–Gaussian Mixture Regression Framework for Pulverizer Soft Sensors Using Reduced Dimensions and Ensemble Learning. Energies 2023, 16, 6671. https://doi.org/10.3390/en16186671
