Real-Time Multi-Home Energy Management with EV Charging Scheduling Using Multi-Agent Deep Reinforcement Learning Optimization

Abstract

1. Introduction

- This paper presents a multi-home energy management solution with optimal EV charging and discharging scheduling that improves the load profile of a group of prosumers supervised by an aggregator. It also incorporates an energy trading strategy based on Real-Time Pricing (RTP) in which the four prosumers and the aggregator are all treated as profit-making entities, a setting that previous research has not addressed. The strategy encourages prosumers to time their power consumption from, and injection into, the grid appropriately.

- This paper proposes a multi-agent optimization solution based on the Deep Deterministic Policy Gradient (DDPG) algorithm for the multi-home energy management problem with EV charging and discharging scheduling. The formulation accounts for the uncertainties in power consumption, solar PV generation, and EV usage of all prosumers. The proposed method trains adaptable agents that find optimal solutions efficiently under uncertainty, and the trained agents need less time to find the optimal solution than existing methods, particularly metaheuristic methods [32]. In addition, this paper treats each prosumer's EV battery as its battery energy storage system (BESS). This makes the uncertainty of EV usage, especially departure and arrival times, a significant challenge that previous research has not explored.

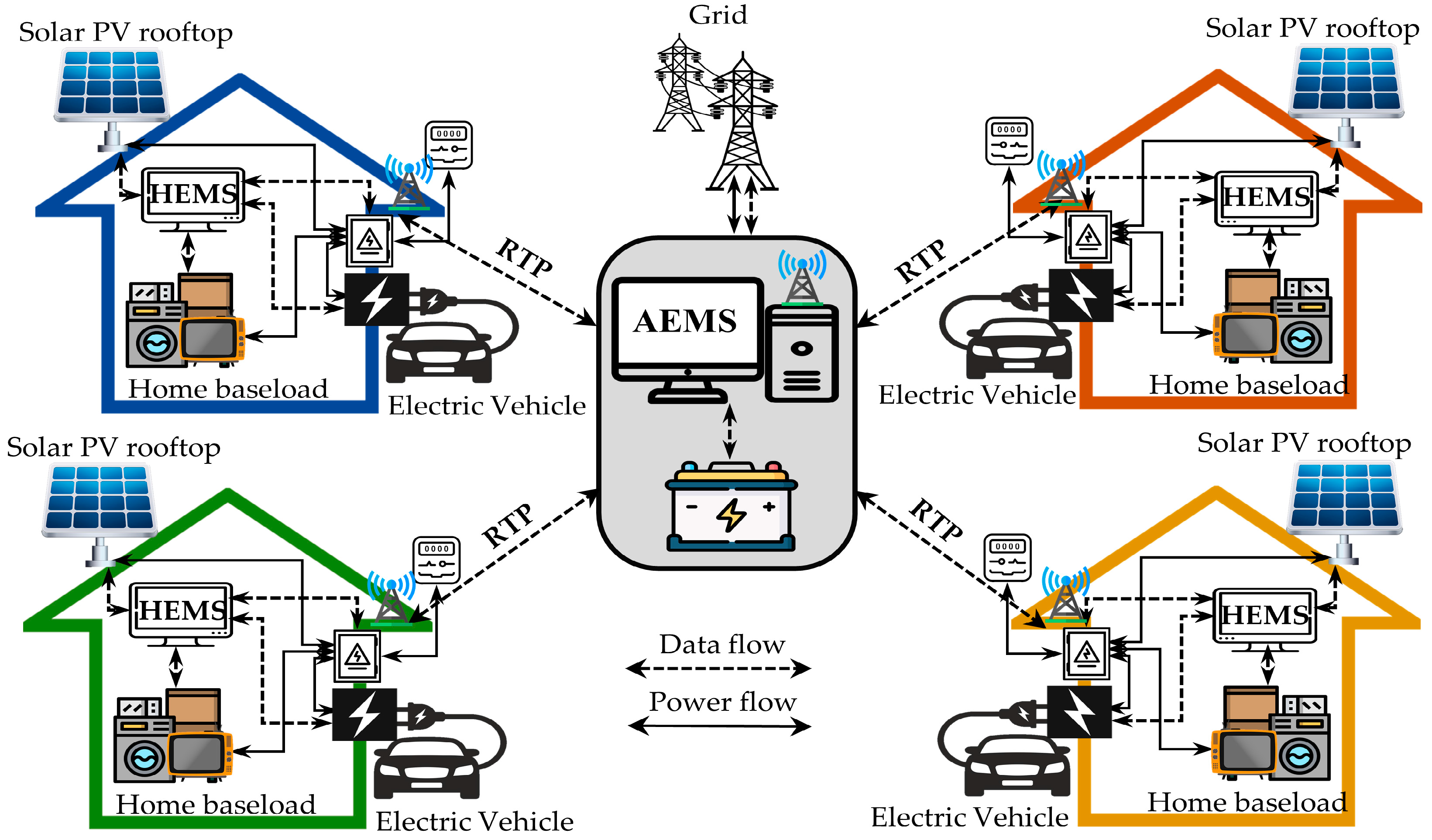

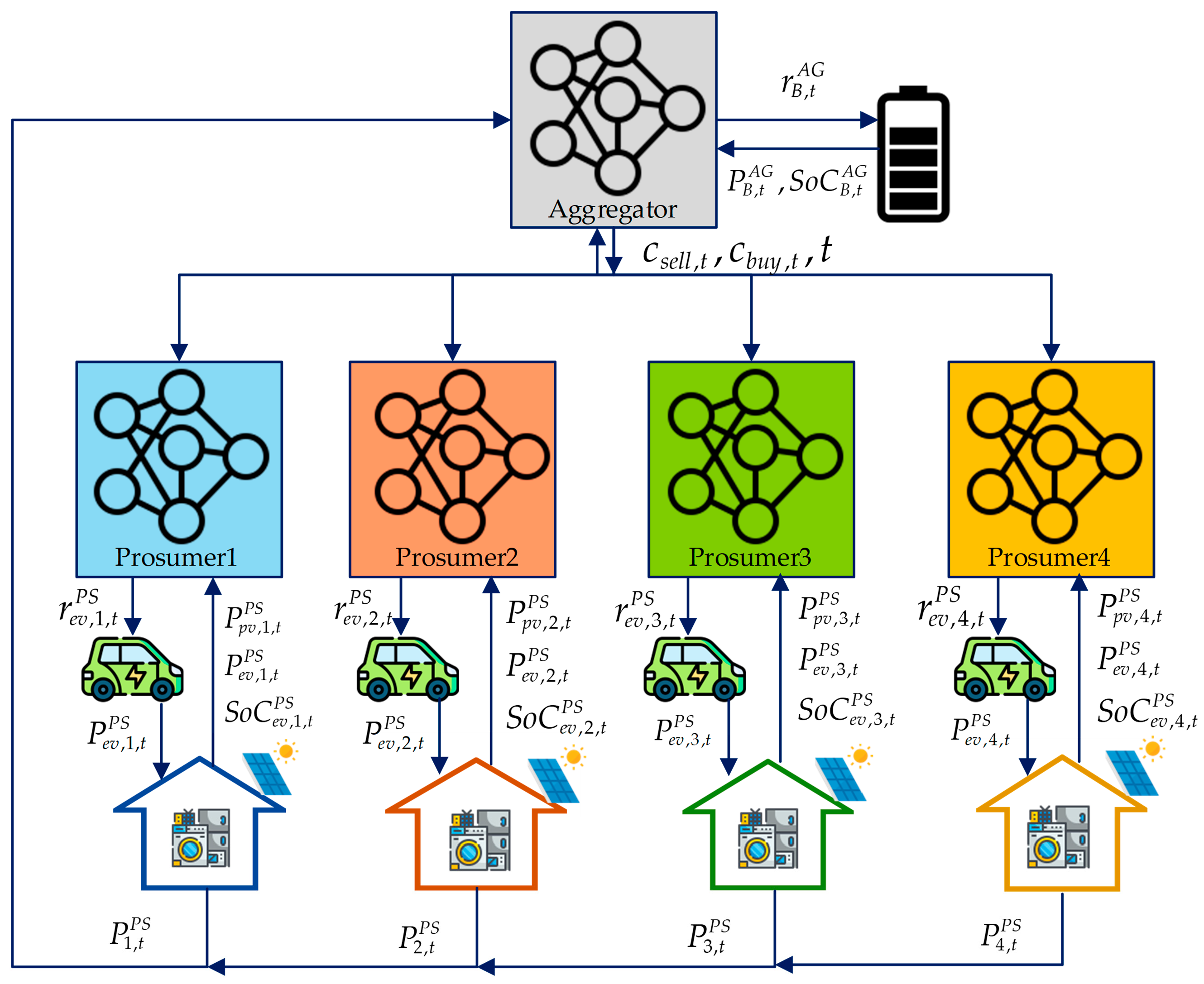

2. Proposed Energy Management Framework

3. Problem Formulation

3.1. Objective Functions

3.1.1. Revenue/Cost for Selling/Buying Energy

3.1.2. Battery Degradation Cost

3.2. Constraints

3.2.1. Operating Limits of the Battery

3.2.2. Power Balance

3.2.3. Power Consumption of All Prosumers

3.3. Multi-Agent Problem Transformation

3.3.1. DDPG Variables at the Home Level

3.3.2. DDPG Variables at the Aggregator Level

4. Proposed Method

4.1. Stochastic Model Construction

4.1.1. The Stochastic Model of EV Usage

4.1.2. Solar PV Generation and Home Baseload

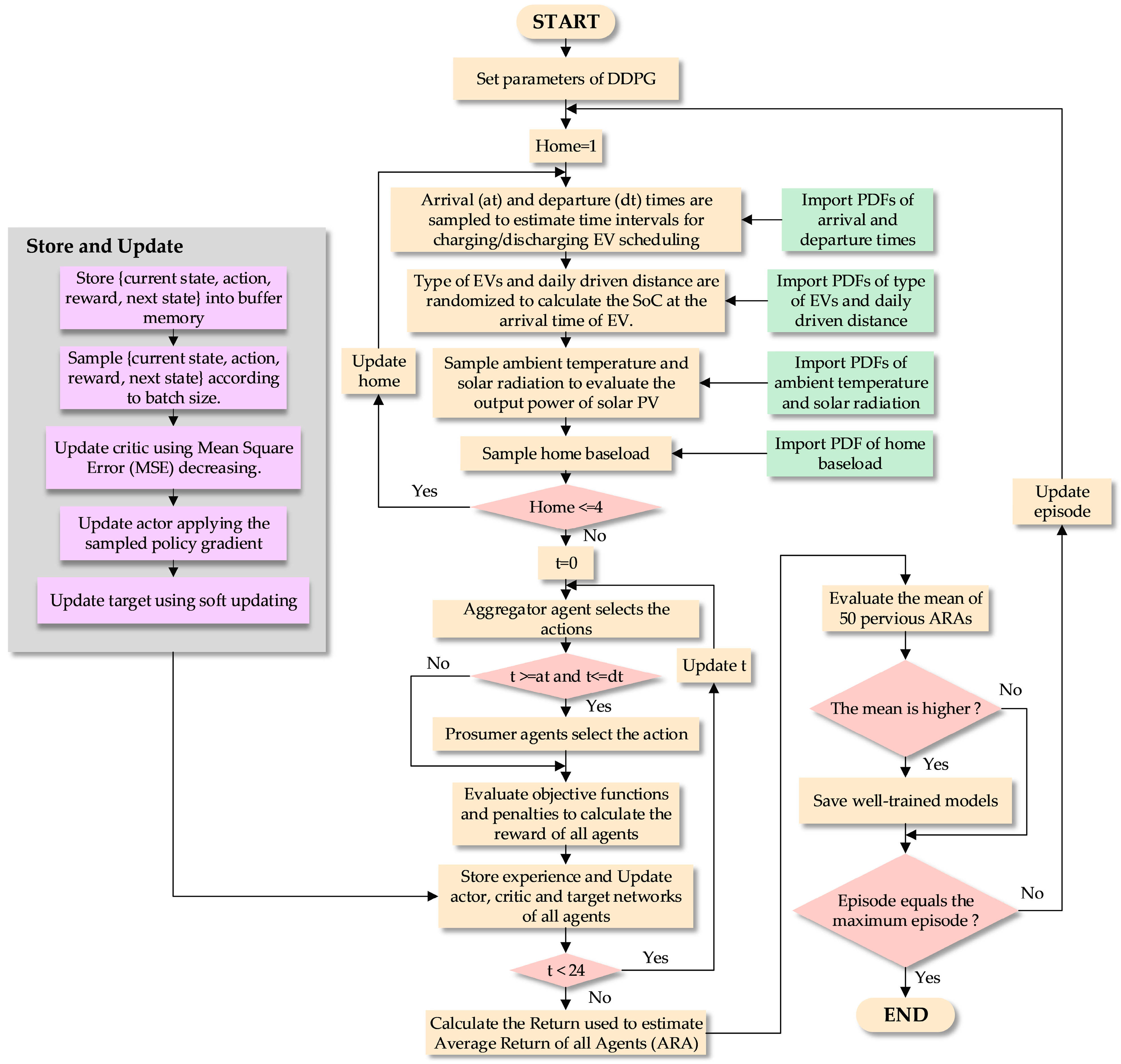

4.2. Training Procedure

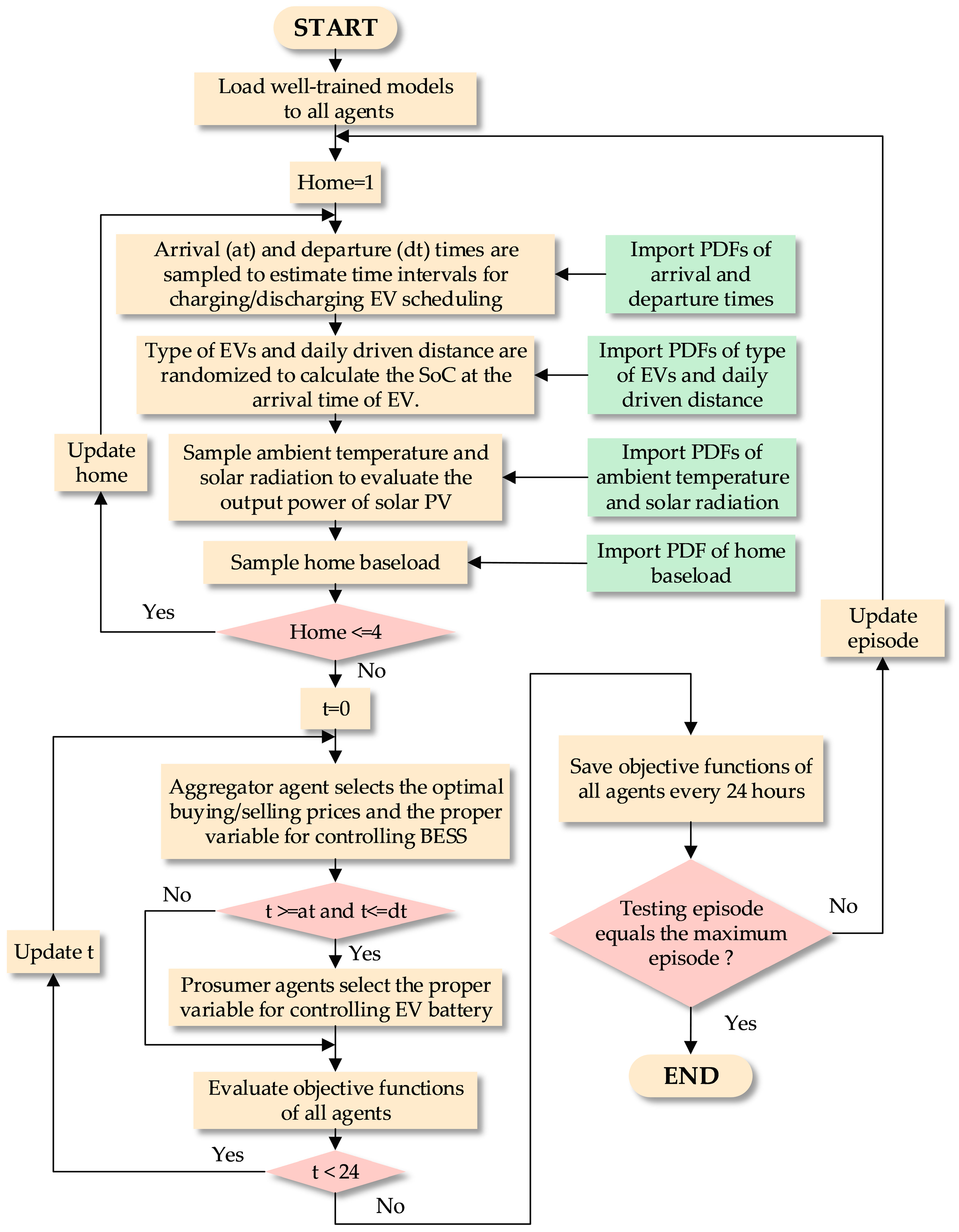

4.3. Testing Procedure

5. Simulation Results and Discussions

5.1. Assumption and Case Studies

- Case I: TOU & FIT energy trading. The aggregator sells energy to the four prosumers at the TOU rate and buys energy from them at the FIT rate, which is offered to the four prosumers throughout each 24 h period. The aggregator and the prosumers can control only their batteries, and each maximizes its reward through multi-agent optimization using the DDPG algorithm.

- Case II: RTP energy trading (proposed method). The aggregator sets both the selling and the buying energy prices offered to the four prosumers over 24 h using the RTP concept. The aggregator controls these prices and its BESS, whereas the four prosumers control their EV batteries to maximize their rewards. Multi-agent optimization using the DDPG algorithm is employed to obtain the optimal decisions of both the aggregator and the prosumers.

5.2. Comparison Results

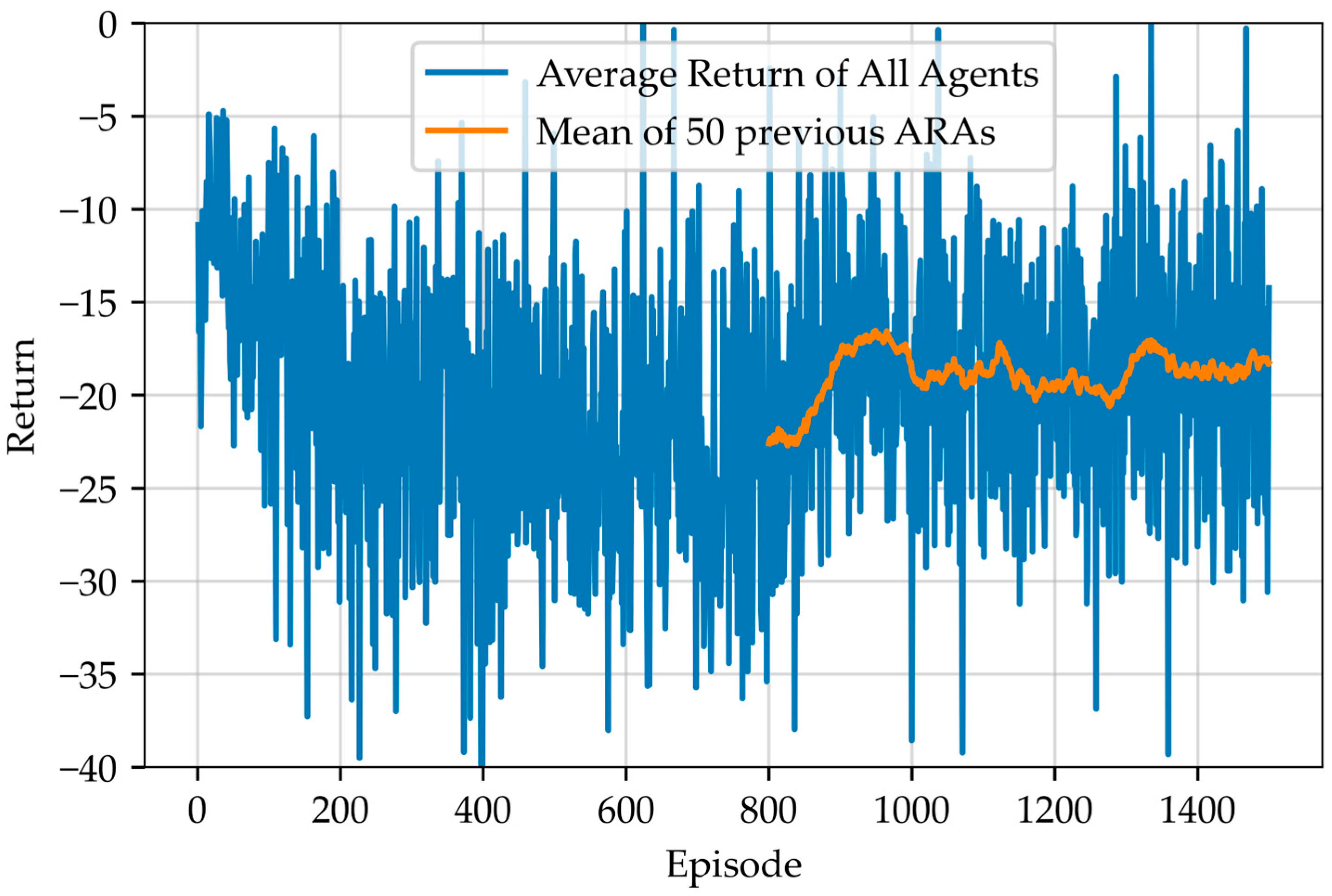

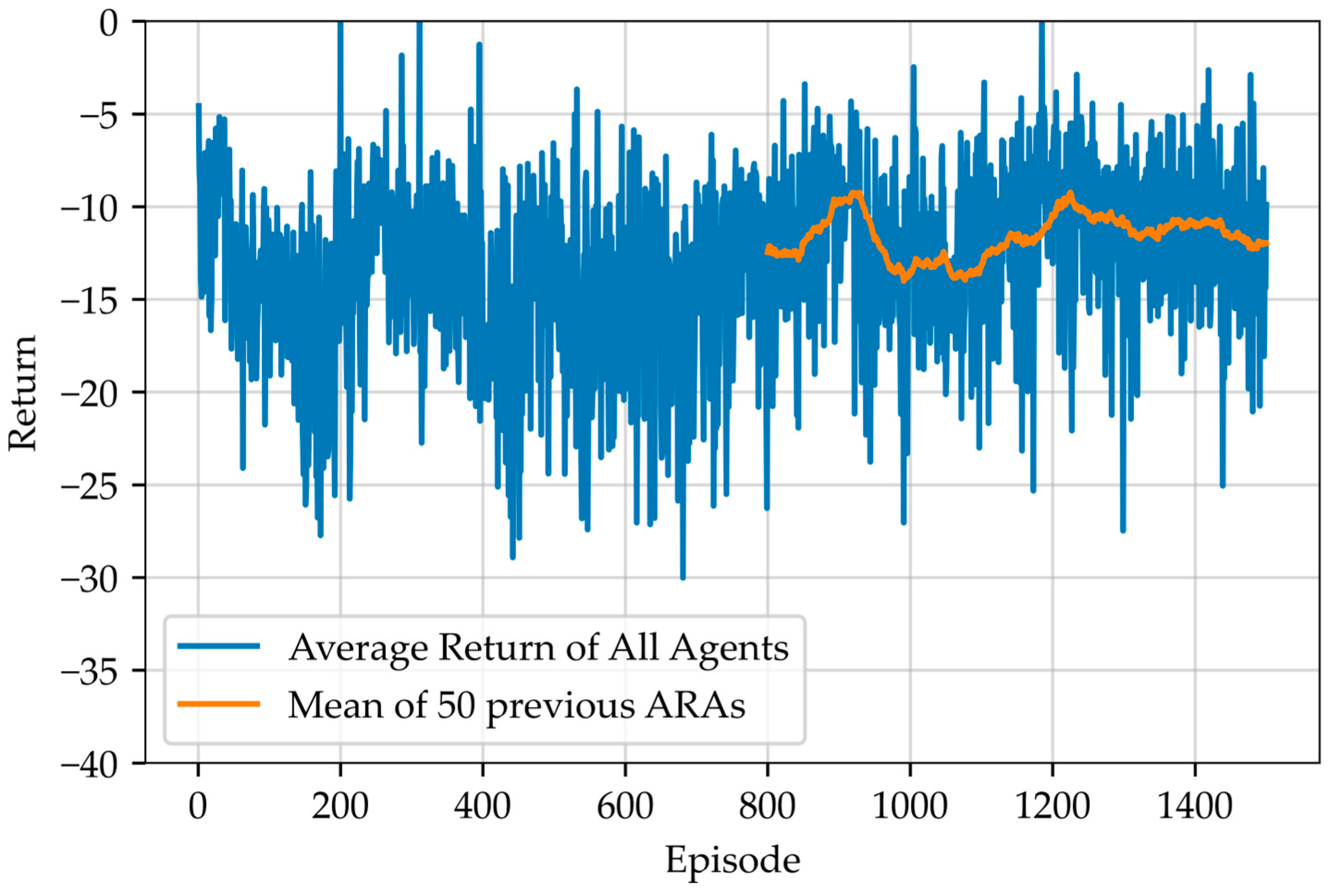

5.2.1. Training Results

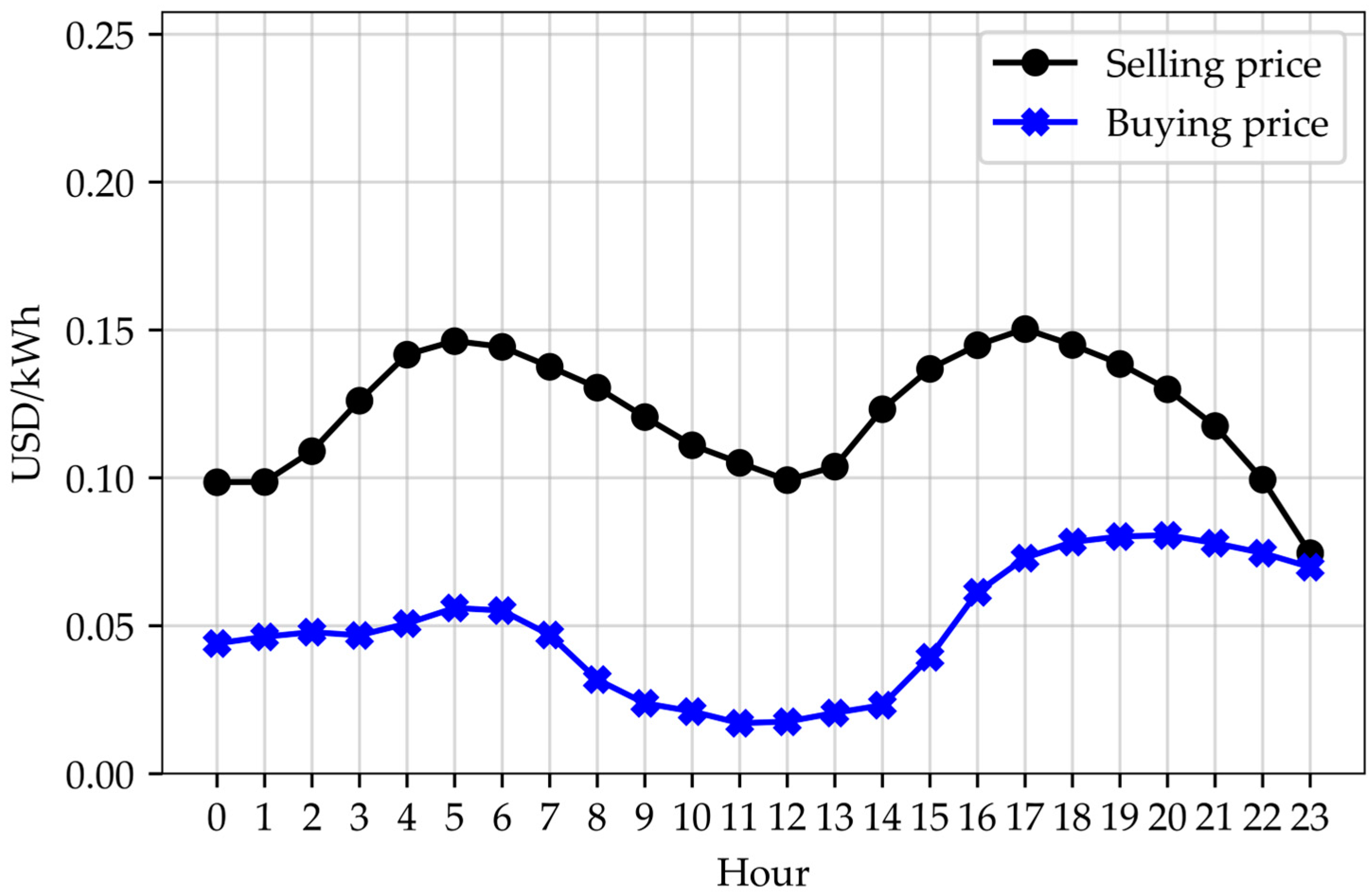

5.2.2. Energy Pricing Results

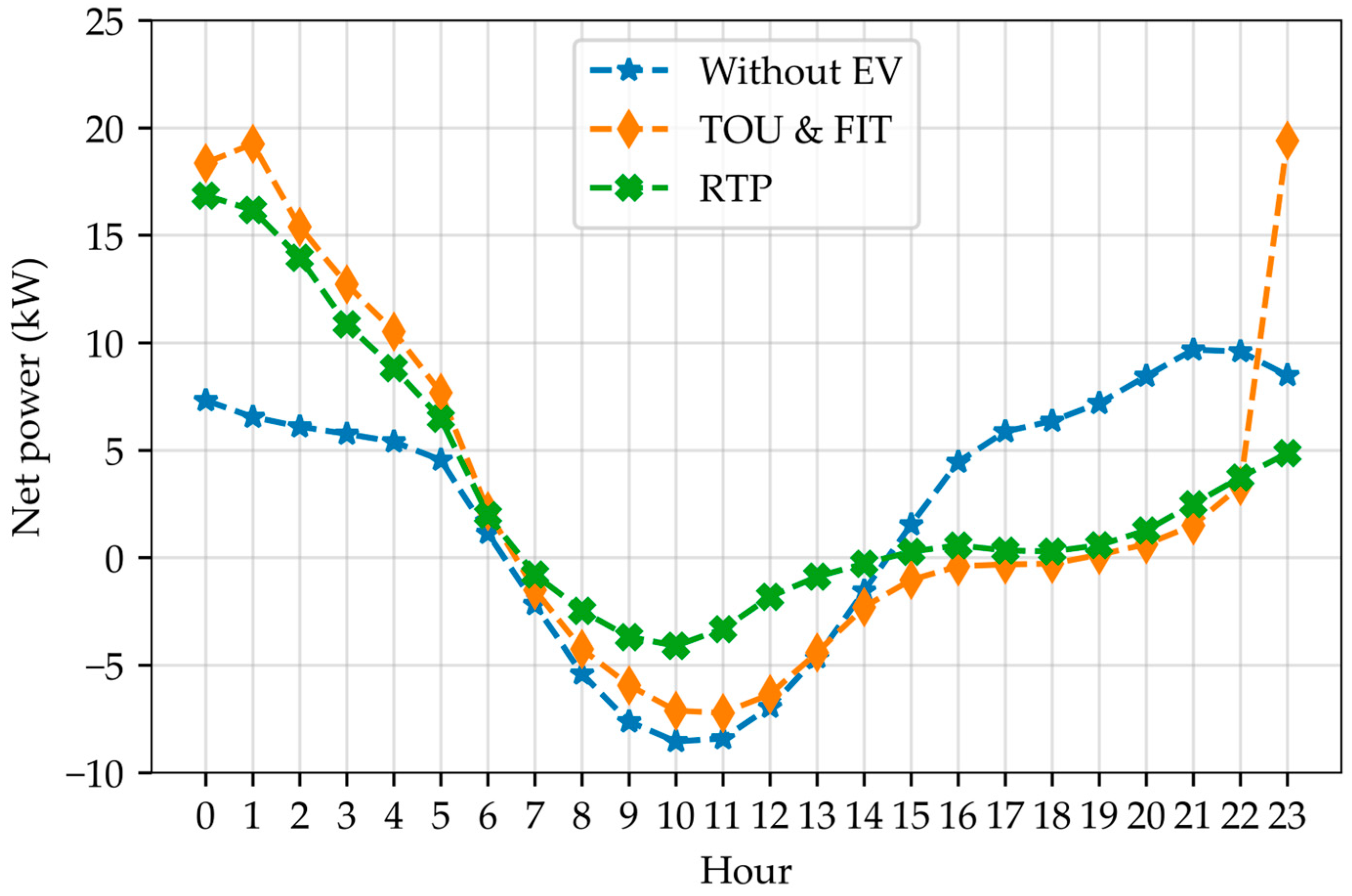

5.2.3. Power State Results

5.2.4. Objective Evaluation Results

5.3. Discussions

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Aslam, S.; Iqbal, Z.; Javaid, N.; Khan, Z.; Aurangzeb, K.; Haider, S. Towards Efficient Energy Management of Smart Buildings Exploiting Heuristic Optimization with Real Time and Critical Peak Pricing Schemes. Energies 2017, 10, 2065.

2. Vahidinasab, V.; Ardalan, C.; Mohammadi-Ivatloo, B.; Giaouris, D.; Walker, S.L. Active Building as an Energy System: Concept, Challenges, and Outlook. IEEE Access 2021, 9, 58009–58024.

3. Nguyen, H.T.; Nguyen, D.T.; Le, L.B. Energy Management for Households With Solar Assisted Thermal Load Considering Renewable Energy and Price Uncertainty. IEEE Trans. Smart Grid 2015, 6, 301–314.

4. Mahmud, K.; Hossain, M.J.; Ravishankar, J. Peak-Load Management in Commercial Systems With Electric Vehicles. IEEE Syst. J. 2019, 13, 1872–1882.

5. Liemthong, R.; Srithapon, C.; Ghosh, P.K.; Chatthaworn, R. Home Energy Management Strategy-Based Meta-Heuristic Optimization for Electrical Energy Cost Minimization Considering TOU Tariffs. Energies 2022, 15, 537.

6. Srithapon, C.; Ghosh, P.; Siritaratiwat, A.; Chatthaworn, R. Optimization of Electric Vehicle Charging Scheduling in Urban Village Networks Considering Energy Arbitrage and Distribution Cost. Energies 2020, 13, 349.

7. Park, K.; Moon, I. Multi-Agent Deep Reinforcement Learning Approach for EV Charging Scheduling in a Smart Grid. Appl. Energy 2022, 328, 120111.

8. Hussain, S.; El-Bayeh, C.Z.; Lai, C.; Eicker, U. Multi-Level Energy Management Systems Toward a Smarter Grid: A Review. IEEE Access 2021, 9, 71994–72016.

9. Srithapon, C.; Fuangfoo, P.; Ghosh, P.K.; Siritaratiwat, A.; Chatthaworn, R. Surrogate-Assisted Multi-Objective Probabilistic Optimal Power Flow for Distribution Network With Photovoltaic Generation and Electric Vehicles. IEEE Access 2021, 9, 34395–34414.

10. Deb, S.; Tammi, K.; Kalita, K.; Mahanta, P. Impact of Electric Vehicle Charging Station Load on Distribution Network. Energies 2018, 11, 178.

11. Awadallah, M.A.; Singh, B.N.; Venkatesh, B. Impact of EV Charger Load on Distribution Network Capacity: A Case Study in Toronto. Can. J. Electr. Comput. Eng. 2016, 39, 268–273.

12. Satarworn, S.; Hoonchareon, N. Impact of EV Home Charger on Distribution Transformer Overloading in an Urban Area. In Proceedings of the 2017 14th International Conference on Electrical Engineering/Electronics, Computer, Telecommunications and Information Technology (ECTI-CON), Phuket, Thailand, 27–30 June 2017; pp. 469–472.

13. Singh, R.; Tripathi, P.; Yatendra, K. Impact of Solar Photovoltaic Penetration In Distribution Network. In Proceedings of the 2019 3rd International Conference on Recent Developments in Control, Automation & Power Engineering (RDCAPE), Noida, India, 10–11 October 2019; pp. 551–556.

14. Rastegar, M.; Fotuhi-Firuzabad, M.; Moeini-Aghtaie, M. Developing a Two-Level Framework for Residential Energy Management. IEEE Trans. Smart Grid 2018, 9, 1707–1717.

15. Joo, I.-Y.; Choi, D.-H. Distributed Optimization Framework for Energy Management of Multiple Smart Homes With Distributed Energy Resources. IEEE Access 2017, 5, 15551–15560.

16. Mak, D.; Choi, D.-H. Optimization Framework for Coordinated Operation of Home Energy Management System and Volt-VAR Optimization in Unbalanced Active Distribution Networks Considering Uncertainties. Appl. Energy 2020, 276, 115495.

17. Sarker, M.R.; Olsen, D.J.; Ortega-Vazquez, M.A. Co-Optimization of Distribution Transformer Aging and Energy Arbitrage Using Electric Vehicles. IEEE Trans. Smart Grid 2017, 8, 2712–2722.

18. Mak, D.; Choi, D.-H. Smart Home Energy Management in Unbalanced Active Distribution Networks Considering Reactive Power Dispatch and Voltage Control. IEEE Access 2019, 7, 149711–149723.

19. Althaher, S.; Mancarella, P.; Mutale, J. Automated Demand Response From Home Energy Management System Under Dynamic Pricing and Power and Comfort Constraints. IEEE Trans. Smart Grid 2015, 6, 1874–1883.

20. Killian, M.; Zauner, M.; Kozek, M. Comprehensive Smart Home Energy Management System Using Mixed-Integer Quadratic-Programming. Appl. Energy 2018, 222, 662–672.

21. Gonçalves, I.; Gomes, Á.; Henggeler Antunes, C. Optimizing the Management of Smart Home Energy Resources under Different Power Cost Scenarios. Appl. Energy 2019, 242, 351–363.

22. Ma, K.; Hu, S.; Yang, J.; Xu, X.; Guan, X. Appliances Scheduling via Cooperative Multi-Swarm PSO under Day-Ahead Prices and Photovoltaic Generation. Appl. Soft Comput. 2018, 62, 504–513.

23. Jordehi, A.R. Optimal Scheduling of Home Appliances in Home Energy Management Systems Using Grey Wolf Optimisation (GWO) Algorithm. In Proceedings of the 2019 IEEE Milan PowerTech, Milan, Italy, 23–27 June 2019; pp. 1–6.

24. Battula, A.R.; Vuddanti, S.; Salkuti, S.R. Review of Energy Management System Approaches in Microgrids. Energies 2021, 14, 5459.

25. Nakabi, T.A.; Toivanen, P. Deep Reinforcement Learning for Energy Management in a Microgrid with Flexible Demand. Sustain. Energy Grids Netw. 2021, 25, 100413.

26. Ji, Y.; Wang, J.; Xu, J.; Fang, X.; Zhang, H. Real-Time Energy Management of a Microgrid Using Deep Reinforcement Learning. Energies 2019, 12, 2291.

27. Gao, G.; Li, J.; Wen, Y. Energy-Efficient Thermal Comfort Control in Smart Buildings via Deep Reinforcement Learning. arXiv 2019, arXiv:1901.04693.

28. Wan, Z.; Li, H.; He, H. Residential Energy Management with Deep Reinforcement Learning. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–7.

29. Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; MIT Press: Cambridge, MA, USA, 2018; p. 352.

30. Arulkumaran, K.; Deisenroth, M.P.; Brundage, M.; Bharath, A.A. A Brief Survey of Deep Reinforcement Learning. IEEE Signal Process. Mag. 2017, 34, 26–38.

31. Guo, C.; Wang, X.; Zheng, Y.; Zhang, F. Optimal Energy Management of Multi-Microgrids Connected to Distribution System Based on Deep Reinforcement Learning. Int. J. Electr. Power Energy Syst. 2021, 131, 107048.

32. Kaewdornhan, N.; Srithapon, C.; Chatthaworn, R. Electric Distribution Network With Multi-Microgrids Management Using Surrogate-Assisted Deep Reinforcement Learning Optimization. IEEE Access 2022, 10, 130373–130396.

33. Lee, J.-O.; Kim, Y.-S. Novel Battery Degradation Cost Formulation for Optimal Scheduling of Battery Energy Storage Systems. Int. J. Electr. Power Energy Syst. 2022, 137, 107795.

34. Zhou, C.; Qian, K.; Allan, M.; Zhou, W. Modeling of the Cost of EV Battery Wear Due to V2G Application in Power Systems. IEEE Trans. Energy Convers. 2011, 26, 1041–1050.

35. Li, Y.; Xie, K.; Wang, L.; Xiang, Y. The Impact of PHEVs Charging and Network Topology Optimization on Bulk Power System Reliability. Electr. Power Syst. Res. 2018, 163, 85–97.

36. Affonso, C.d.M.; Kezunovic, M. Technical and Economic Impact of PV-BESS Charging Station on Transformer Life: A Case Study. IEEE Trans. Smart Grid 2019, 10, 4683–4692.

37. Wang, C.; Liu, C.; Tang, F.; Liu, D.; Zhou, Y. A Scenario-Based Analytical Method for Probabilistic Load Flow Analysis. Electr. Power Syst. Res. 2020, 181, 106193.

38. Gupta, N. Gauss-Quadrature-Based Probabilistic Load Flow Method With Voltage-Dependent Loads Including WTGS, PV, and EV Charging Uncertainties. IEEE Trans. Ind. Appl. 2018, 54, 6485–6497.

39. Reddy, S.S.; Abhyankar, A.R.; Bijwe, P.R. Market Clearing for a Wind-Thermal Power System Incorporating Wind Generation and Load Forecast Uncertainties. In Proceedings of the 2012 IEEE Power and Energy Society General Meeting, San Diego, CA, USA, 22–26 July 2012; pp. 1–8.

40. Baghaee, H.R.; Mirsalim, M.; Gharehpetian, G.B.; Talebi, H.A. Application of RBF Neural Networks and Unscented Transformation in Probabilistic Power-Flow of Microgrids Including Correlated Wind/PV Units and Plug-in Hybrid Electric Vehicles. Simul. Model. Pract. Theory 2017, 72, 51–68.

41. Park, J.; Liang, W.; Choi, J.; El-Keib, A.A.; Shahidehpour, M.; Billinton, R. A Probabilistic Reliability Evaluation of a Power System Including Solar/Photovoltaic Cell Generator. In Proceedings of the 2009 IEEE Power & Energy Society General Meeting, Calgary, AB, Canada, 26–30 July 2009; pp. 1–6.

42. Lillicrap, T.P.; Hunt, J.J.; Pritzel, A.; Heess, N.; Erez, T.; Tassa, Y.; Silver, D.; Wierstra, D. Continuous Control with Deep Reinforcement Learning. arXiv 2019, arXiv:1509.02971.

43. Silver, D.; Lever, G.; Heess, N.; Degris, T.; Wierstra, D.; Riedmiller, M. Deterministic Policy Gradient Algorithms. In Proceedings of the 31st International Conference on Machine Learning, Beijing, China, 21–26 June 2014; Volume 32.

44. Average Annual Prices of Lithium-Ion Battery Packs from 2010 to 2022. Available online: https://www.statista.com/statistics/1042486/india-lithium-ion-battery-packs-average-price/ (accessed on 23 November 2022).

| Energy Rate | Peak (9.00–22.00) | Off-Peak (22.00–9.00) |
|---|---|---|
| Time-of-Use (TOU) | 0.1855 USD/kWh | 0.0843 USD/kWh |
| Feed-in-Tariff (FIT) | 0.0574 USD/kWh | 0.0574 USD/kWh |
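The tariff table above can be read as a simple price lookup. The sketch below is only an illustration of that reading, not the paper's implementation: the function names, the 1 h time step, the treatment of 9.00 as the first peak hour and 22.00 as the first off-peak hour, and the sample net-load profile are all assumptions.

```python
# Rates copied from the tariff table. Everything else (names, hourly steps,
# boundary handling, the sample profile) is a hypothetical illustration.
TOU_PEAK = 0.1855      # USD/kWh, 9.00-22.00
TOU_OFF_PEAK = 0.0843  # USD/kWh, 22.00-9.00
FIT = 0.0574           # USD/kWh, flat rate for energy sold back


def tou_price(hour):
    """Return the TOU buying price for an hour of day in 0..23."""
    return TOU_PEAK if 9 <= hour < 22 else TOU_OFF_PEAK


def daily_cost(net_load_kw):
    """Net daily cost (USD) for 24 hourly net-load values in kW.

    Positive values are imports billed at the TOU rate; negative values
    are exports credited at the FIT rate.
    """
    cost = 0.0
    for hour, power in enumerate(net_load_kw):
        if power >= 0:
            cost += power * tou_price(hour)
        else:
            cost += power * FIT  # power is negative, so this lowers the cost
    return cost


# Hypothetical profile: 1 kW import all day, except a 2 kW export at noon.
profile = [1.0] * 24
profile[12] = -2.0
print(round(daily_cost(profile), 4))
```

Because the FIT rate is lower than both TOU rates, a prosumer billed this way gains more from offsetting its own imports than from exporting the same energy.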

| Agent | Network | Learning Rate | Activation Function (Hidden, Output) | Number of Hidden Layers (Number of Neurons) |
|---|---|---|---|---|
| Aggregator | Actor | 0.001 | ReLU, Sigmoid | 2 (512, 512) |
| Aggregator | Critic | 0.01 | ReLU, Sigmoid | 2 (512, 512) |
| Prosumer | Actor | 0.001 | ReLU, Tanh | 2 (512, 512) |
| Prosumer | Critic | 0.01 | ReLU, Tanh | 2 (512, 512) |
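To make the network table concrete, here is a minimal pure-Python sketch of an actor forward pass with two hidden layers of 512 ReLU units and a configurable output activation. It is not the authors' code: the state and action dimensions, the weight initialization, and all function names are hypothetical, and a real implementation would normally use a deep learning framework.

```python
import math
import random


def make_layer(n_in, n_out, rng):
    """Random weights and zero biases for one dense layer (illustrative init)."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(x, layer, activation):
    """Apply one dense layer followed by an element-wise activation."""
    weights, biases = layer
    return [activation(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, biases)]


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def make_actor(state_dim, action_dim, output_activation, seed=0):
    """Build an actor with 2 hidden layers of 512 neurons, as in the table."""
    rng = random.Random(seed)
    layers = [make_layer(state_dim, 512, rng),
              make_layer(512, 512, rng),
              make_layer(512, action_dim, rng)]

    def actor(state):
        hidden = dense(state, layers[0], relu)
        hidden = dense(hidden, layers[1], relu)
        return dense(hidden, layers[2], output_activation)

    return actor


# Hypothetical dimensions: 4 state features, 1 prosumer action, 3 aggregator actions.
prosumer_actor = make_actor(4, 1, math.tanh)
aggregator_actor = make_actor(4, 3, sigmoid)
state = [0.5, 0.1, 0.3, 0.8]
print(prosumer_actor(state), aggregator_actor(state))
```

One plausible reading of the two output activations is that Tanh yields a signed action in [-1, 1], which suits a charge or discharge command, while Sigmoid yields values in [0, 1], which suits quantities scaled between a lower and an upper bound. The source table does not state this mapping, so treat it as an interpretation.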

| Procedure | Episode | Decay Rate | Discount Factor | Soft Update Factor | Batch Size | | | |
|---|---|---|---|---|---|---|---|---|
| Training | 1500 | 0.0005 | 0.9 | 0.005 | 512 | 0.2 | 0.002 | 1 |
| Testing | 1000 | - | - | - | - | - | - | - |
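The discount factor and soft update factor in the table above enter DDPG through the critic target and the target-network update. The sketch below shows the standard forms of both steps as given in the DDPG literature [42]. It assumes the table's "Discount Factor" and "Soft Update Factor" are the usual γ and τ, and the function names are hypothetical.

```python
GAMMA = 0.9   # discount factor from the table (assumed to be the usual gamma)
TAU = 0.005   # soft update factor from the table (assumed to be the usual tau)


def td_target(reward, next_q, done, gamma=GAMMA):
    """Critic target y = r + gamma * Q'(s', mu'(s')).

    next_q is the target critic's value at the next state and target action.
    No bootstrapping is applied on the final step of an episode.
    """
    return reward + (0.0 if done else gamma * next_q)


def soft_update(target_params, online_params, tau=TAU):
    """Polyak averaging: theta_target <- tau * theta + (1 - tau) * theta_target."""
    return [tau * w + (1.0 - tau) * w_target
            for w, w_target in zip(online_params, target_params)]


# Hypothetical numbers, for illustration only.
print(td_target(reward=1.0, next_q=2.0, done=False))
print(soft_update(target_params=[0.0, 1.0], online_params=[1.0, 1.0]))
```

With τ = 0.005 the target networks move only 0.5% of the way toward the online networks per update, which keeps the critic targets slowly varying during training.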

| Case Study | The Mean of the Positive Net Power (kW) | Decrease (%) Compared with the Power without EV | Decrease (%) Compared with the Power from Case I |
|---|---|---|---|
| Without EV | 6.152 | - | 33.56 |
| Case I | 9.260 | −50.52 | - |
| Case II (proposed) | 5.596 | 9.04 | 39.57 |
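The percentage columns above can be reproduced from the mean positive net power values with a relative-decrease formula, (baseline − value) / baseline × 100. The short check below assumes that formula; the function name is hypothetical and the inputs are the table values.

```python
def percent_decrease(baseline, value):
    """Relative decrease of value with respect to baseline, in percent."""
    return (baseline - value) / baseline * 100.0


# Mean positive net power (kW) from the table.
without_ev, case_i, case_ii = 6.152, 9.260, 5.596

print(round(percent_decrease(without_ev, case_i), 2))   # -50.52
print(round(percent_decrease(without_ev, case_ii), 2))  # 9.04
print(round(percent_decrease(case_i, without_ev), 2))   # 33.56
print(round(percent_decrease(case_i, case_ii), 2))      # 39.57
```

The negative entry for Case I means that its mean positive net power is higher than in the scenario without EVs, whereas Case II lowers it against both baselines.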

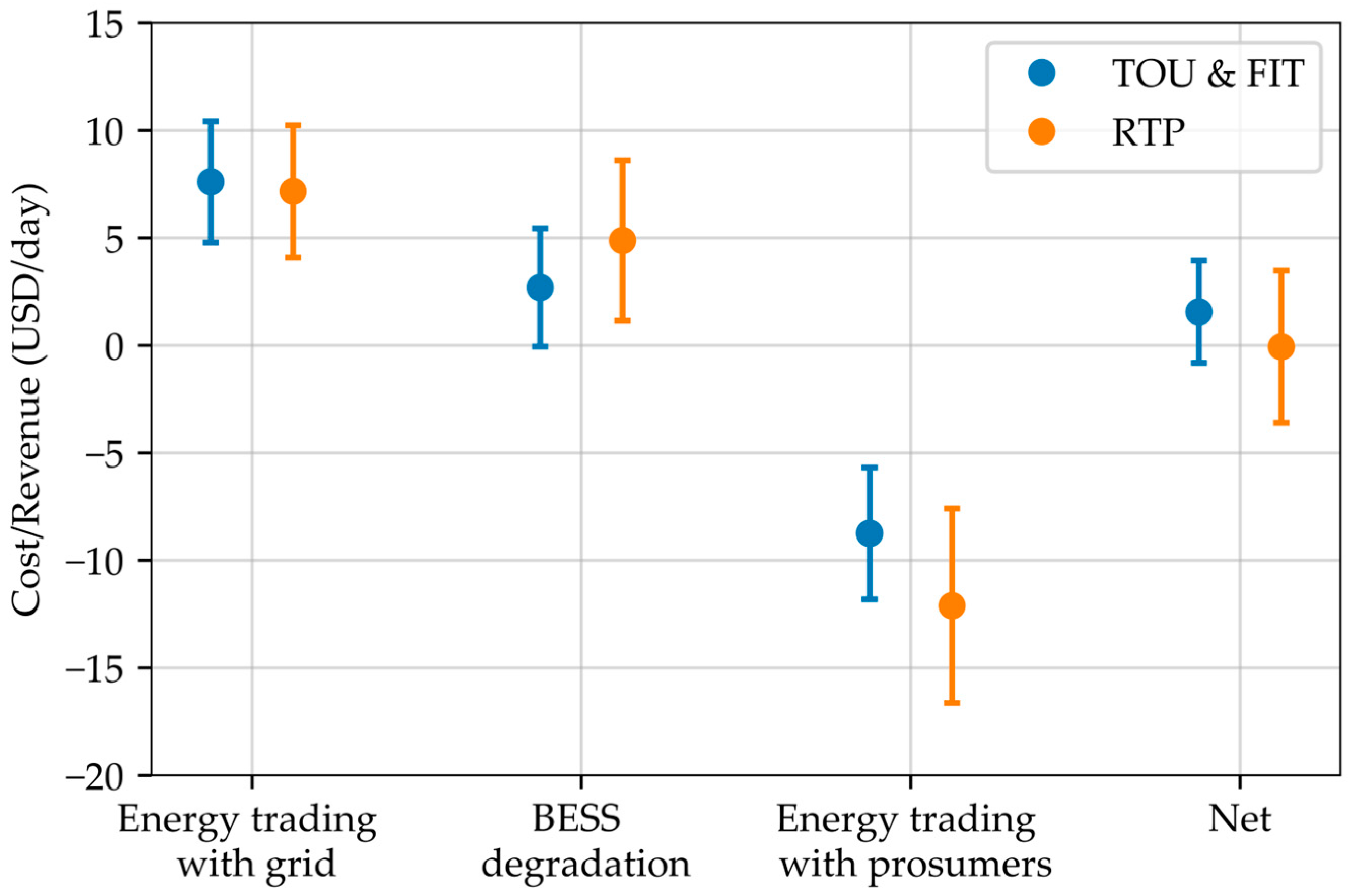

Revenue/Cost (USD/day)

| Case Study | Energy Trading with the Grid (Mean) | Energy Trading with the Grid (Std) | BESS Degradation (Mean) | BESS Degradation (Std) | Energy Trading with Prosumers (Mean) | Energy Trading with Prosumers (Std) | Net (Mean) | Net (Std) |
|---|---|---|---|---|---|---|---|---|
| Case I | 7.610 | 1.409 | 2.699 | 1.375 | −8.745 | 1.535 | 1.564 | 1.192 |
| Case II (proposed) | 7.160 | 1.537 | 4.886 | 1.863 | −12.111 | 2.262 | −0.065 | 1.770 |
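The mean values in the Net column are consistent with a plain sum of the three component means. The symbols below are introduced here only for illustration, and the sign convention (positive for cost, negative for revenue) is an assumption inferred from the numbers rather than stated in the table.

$$
\begin{aligned}
C_{\text{net}} &= C_{\text{grid}} + C_{\text{deg}} + C_{\text{pros}} \\
\text{Case I:}\quad & 7.610 + 2.699 + (-8.745) = 1.564 \ \text{USD/day} \\
\text{Case II:}\quad & 7.160 + 4.886 + (-12.111) = -0.065 \ \text{USD/day}
\end{aligned}
$$

Under that convention, Case II moves the net mean from a cost of 1.564 USD/day to a small revenue of 0.065 USD/day, mainly because the revenue from trading with prosumers grows by more than the BESS degradation cost does.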

Net Cost (USD/day)

| Case Study | Prosumer1 (Mean) | Prosumer1 (Std) | Prosumer2 (Mean) | Prosumer2 (Std) | Prosumer3 (Mean) | Prosumer3 (Std) | Prosumer4 (Mean) | Prosumer4 (Std) |
|---|---|---|---|---|---|---|---|---|
| Case I | 3.458 | 1.209 | 4.732 | 0.975 | 6.565 | 1.168 | 7.390 | 1.706 |
| Case II (proposed) | 3.098 | 0.915 | 4.653 | 1.145 | 6.325 | 1.554 | 5.574 | 1.313 |
| Decreased (%) | 10.41 | - | 1.67 | - | 3.66 | - | 24.57 | - |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kaewdornhan, N.; Srithapon, C.; Liemthong, R.; Chatthaworn, R. Real-Time Multi-Home Energy Management with EV Charging Scheduling Using Multi-Agent Deep Reinforcement Learning Optimization. Energies 2023, 16, 2357. https://doi.org/10.3390/en16052357
