Automated Quantification of Wind Turbine Blade Leading Edge Erosion from Field Images

Abstract

1. Introduction

2. Methodology

- Pitting (shallow): intermittent perforations in the outer blade coating. Pits are generally categorized as shallow, circular cavities. Pits do not expose underlying blade material and generally have minimal impact on aerodynamic performance, particularly compared to more severe damage types such as delamination. However, studies of an S809 airfoil with pitting leading edge erosion indicated that pits can have a non-negligible impact on aerodynamic performance, depending on pit depth, density and distribution [53]. Pitting erosion may progress into more severe types of erosion (marring, gouges, delamination) with increased numbers of hydrometeor impacts. Pitting may also occur along the chord at short distances from the leading edge.

- Marring (shallow): surface-level scratches along the outer blade coating that damage the outermost layers of the coating but do not expose underlying blade material. Marring is generally more severe than pitting. Its erosion patterns most closely match what past research describes as Stage 2 erosion, and it has been shown to cause greater degradation in power production than pitting [14].

- Gouges (deep): deep, circular cavities with removal of the outer blade coating leading to exposure of underlying material. Gouges generally have larger depths and diameters than pits but are not as expansive or deep as delamination [54]. Studies of a DU 96-W-180 airfoil in a wind tunnel showed substantial lift reduction and drag increases for LEE cases with gouges and pits compared to cases with just pits [54]. Gouges may also occur along the chord at short distances from the leading edge.

- Delamination (deep): the final and most severe stage of leading edge erosion. Delamination exposes substantial areas of underlying material. Compared to other leading edge erosion types, delamination generally produces the most severe reductions in aerodynamic performance and may lead to total blade structural failure [14,54,55].

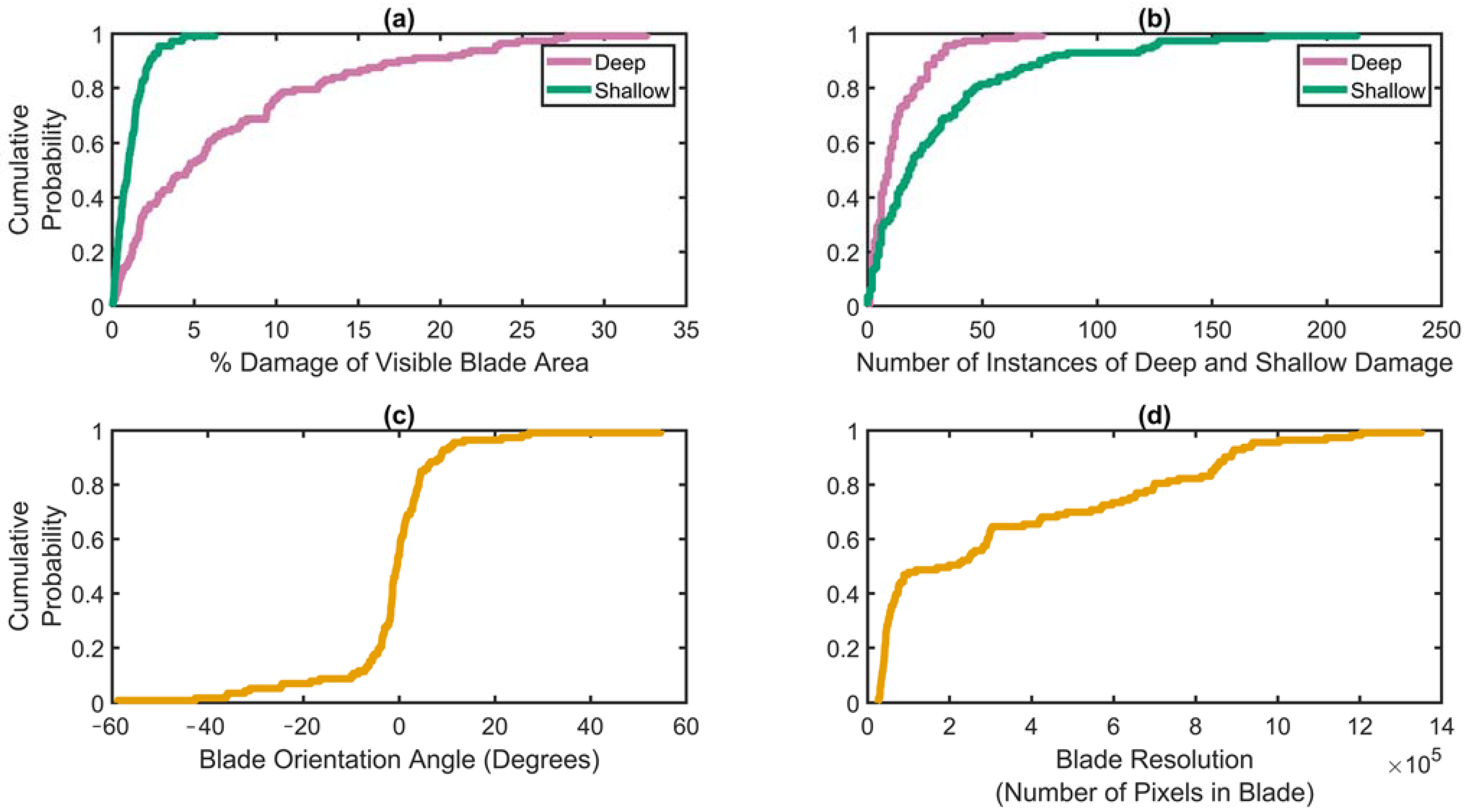

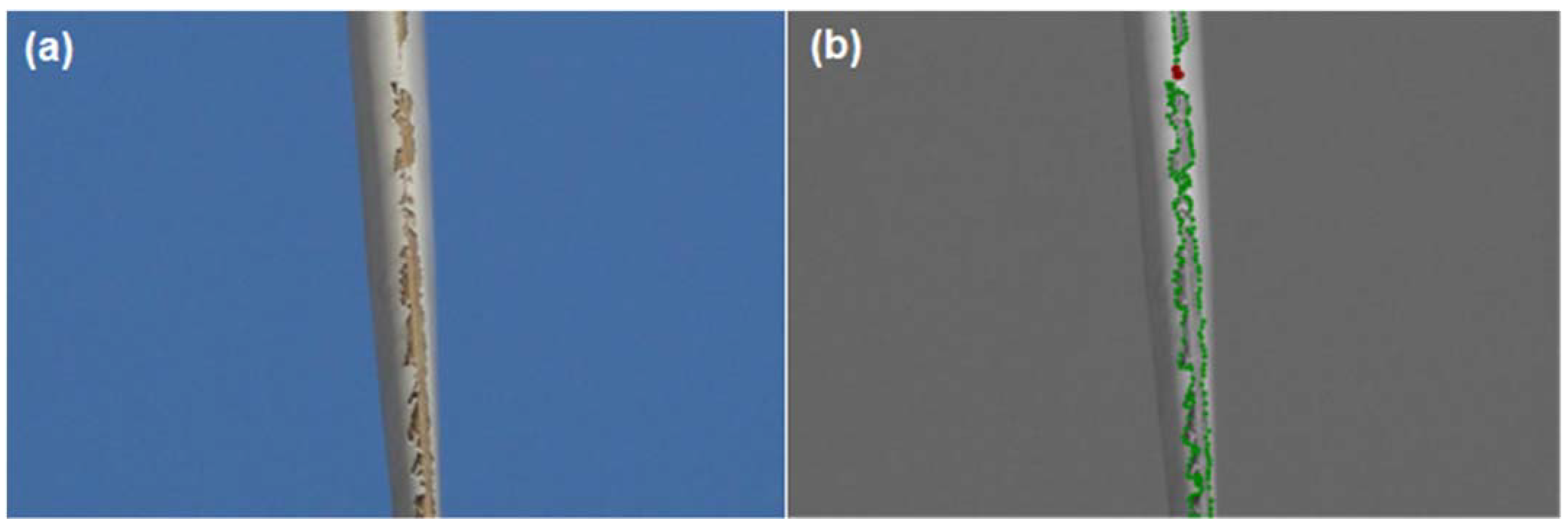

2.1. Description of Field Images

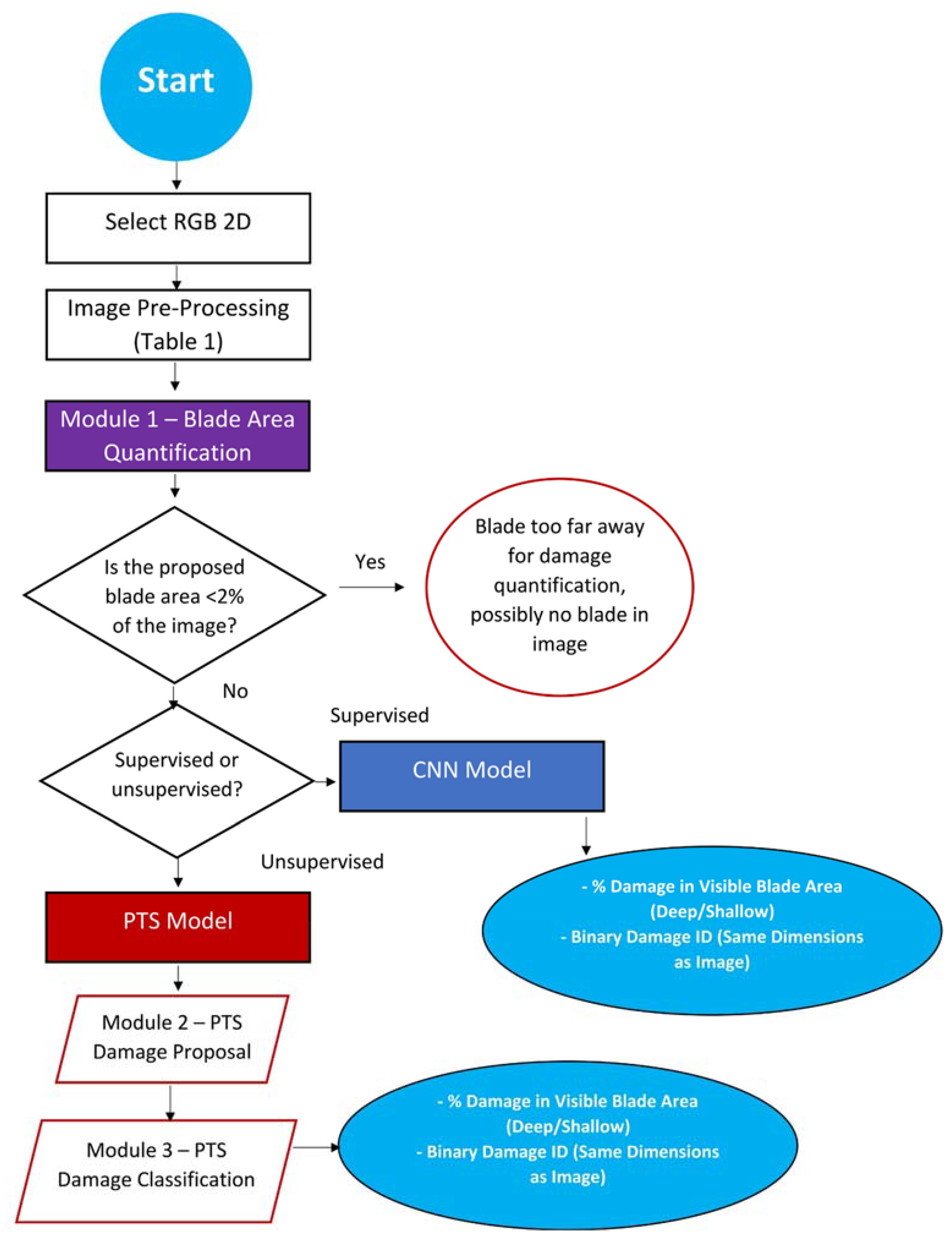

2.2. Workflow

2.2.1. Image Preprocessing

2.2.2. Blade Area Quantification Module

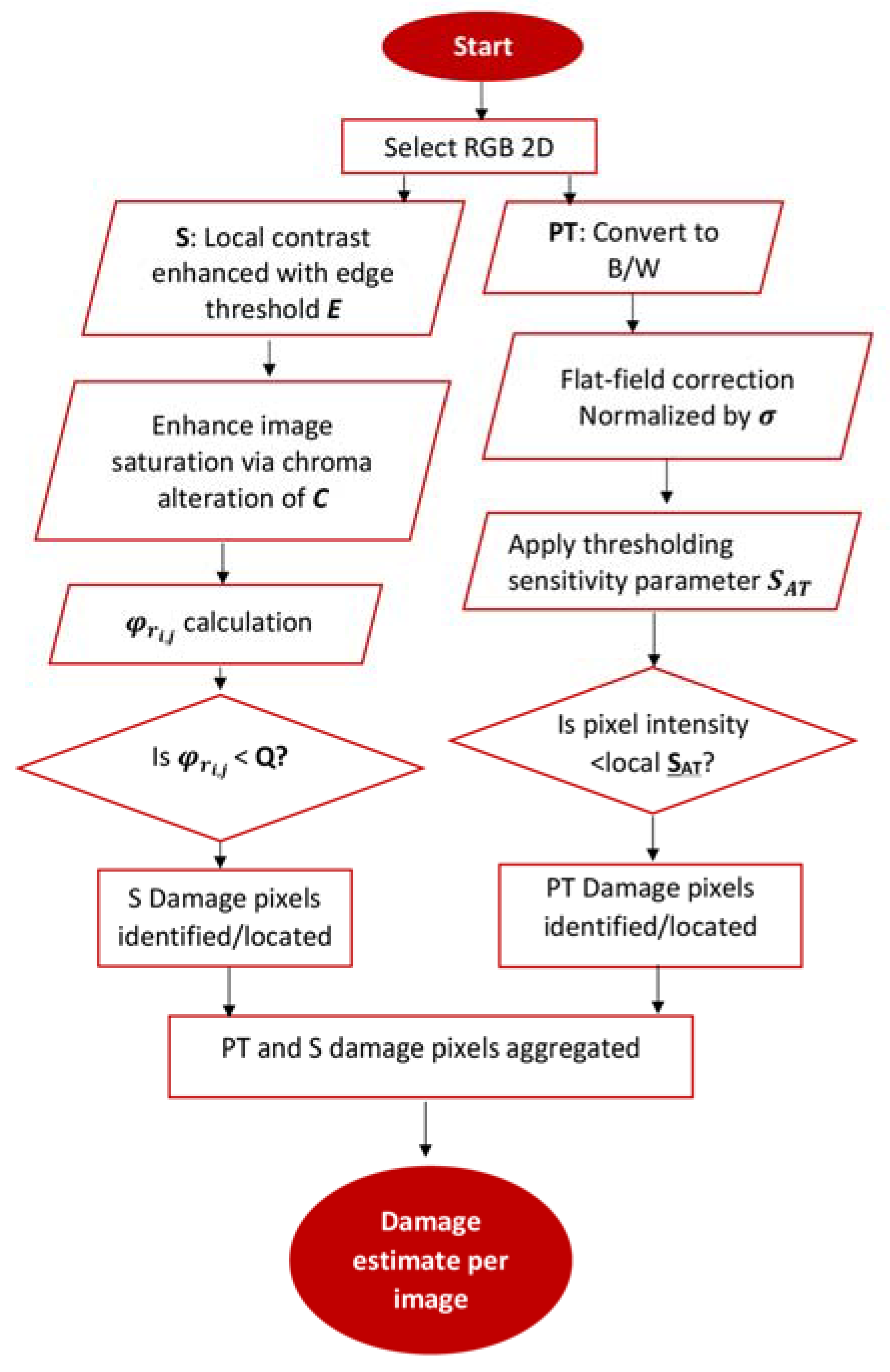

- Image details are enhanced by applying a local contrast operation, with contrast increased to sharpen edges subject to an edge threshold. The edge threshold E specifies the minimum intensity amplitude of strong edges to leave unchanged.

- Image saturation is enhanced by increasing the saturation value within the HSV (hue, saturation, value) color space, applying a chroma alteration by a factor C. Enhancing the image saturation increases the intensity of blue hues within the image, which aids discrimination between blade and sky.

- The pixel-by-pixel illumination invariant shadow ratio is calculated [50,51]. Note that this parameter is used both in the blade area quantification module and in PTS. The shadow ratio is calculated from per-pixel (where i, j denotes the pixel location in the image) median-filtered (for noise reduction) green (G) and blue (B) color channel values. Calculating the illumination invariant shadow ratio allows detection of shadows (the darkest pixels) throughout a given image, while eliminating ambiguity due to variations in illumination across the image. Illumination invariant color spaces are widely used in image processing applications and have been shown to reduce image variations due to lighting conditions and shadow, resulting in image color spaces that better describe the material properties of objects [61].

- Shadow ratio values are clustered into two classes (blade or sky) using k-means segmentation.

- The pixel-by-pixel RGB distance from the RGB pure blue color triplet is calculated using the CIE94 standard and averaged for each proposed class [62]. The class with the lowest/highest average color difference from the blue RGB triplet is designated as the sky/blade, respectively.
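The last three steps of the list above (shadow ratio, two-class clustering, sky/blade labeling) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the shadow ratio is assumed to take the form used by Sirmacek and Unsalan [50], (4/π)·arctan((B − G)/(B + G)); the 3×3 median window is an assumption; a plain Euclidean RGB distance stands in for the CIE94 color difference; and all function names are hypothetical.

```python
import numpy as np

def median_filter3(channel):
    """3x3 median filter with edge replication (window size is an assumption)."""
    padded = np.pad(channel, 1, mode="edge")
    h, w = channel.shape
    windows = np.stack([padded[r:r + h, c:c + w]
                        for r in range(3) for c in range(3)])
    return np.median(windows, axis=0)

def shadow_ratio(rgb):
    """Assumed form: (4/pi) * arctan((B - G) / (B + G)), evaluated per pixel."""
    g = median_filter3(rgb[..., 1].astype(float))
    b = median_filter3(rgb[..., 2].astype(float))
    # arctan2 matches arctan((B-G)/(B+G)) when B+G > 0 and gives 0 for black pixels.
    return (4.0 / np.pi) * np.arctan2(b - g, b + g)

def kmeans_two_class(values, iterations=50):
    """Cluster scalar values into two classes with a basic 1-D k-means."""
    flat = values.ravel()
    centers = np.array([flat.min(), flat.max()], dtype=float)
    labels = np.zeros(flat.shape, dtype=int)
    for _ in range(iterations):
        # Assign each value to the nearer of the two centers.
        labels = (np.abs(flat - centers[1]) < np.abs(flat - centers[0])).astype(int)
        new_centers = centers.copy()
        for k in (0, 1):
            if np.any(labels == k):
                new_centers[k] = flat[labels == k].mean()
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels.reshape(values.shape)

def label_sky(rgb, labels):
    """Return a boolean mask that is True for the class closer to pure blue.

    Euclidean RGB distance is used here as a stand-in for CIE94.
    """
    blue = np.array([0.0, 0.0, 255.0])
    dist = np.linalg.norm(rgb.astype(float) - blue, axis=-1)
    mean_dist = [dist[labels == k].mean() for k in (0, 1)]
    return labels == int(np.argmin(mean_dist))
```

Given an `H × W × 3` RGB array `img`, `label_sky(img, kmeans_two_class(shadow_ratio(img)))` yields a sky mask, and the blade area fraction is one minus its mean. Swapping in a perceptual color difference would only change the `dist` line.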

2.2.3. Unsupervised Method: Pixel Intensity Thresholding and Shadow (PTS) Ratio

2.2.4. Supervised Method—Region-Based Convolutional Neural Network

3. Results

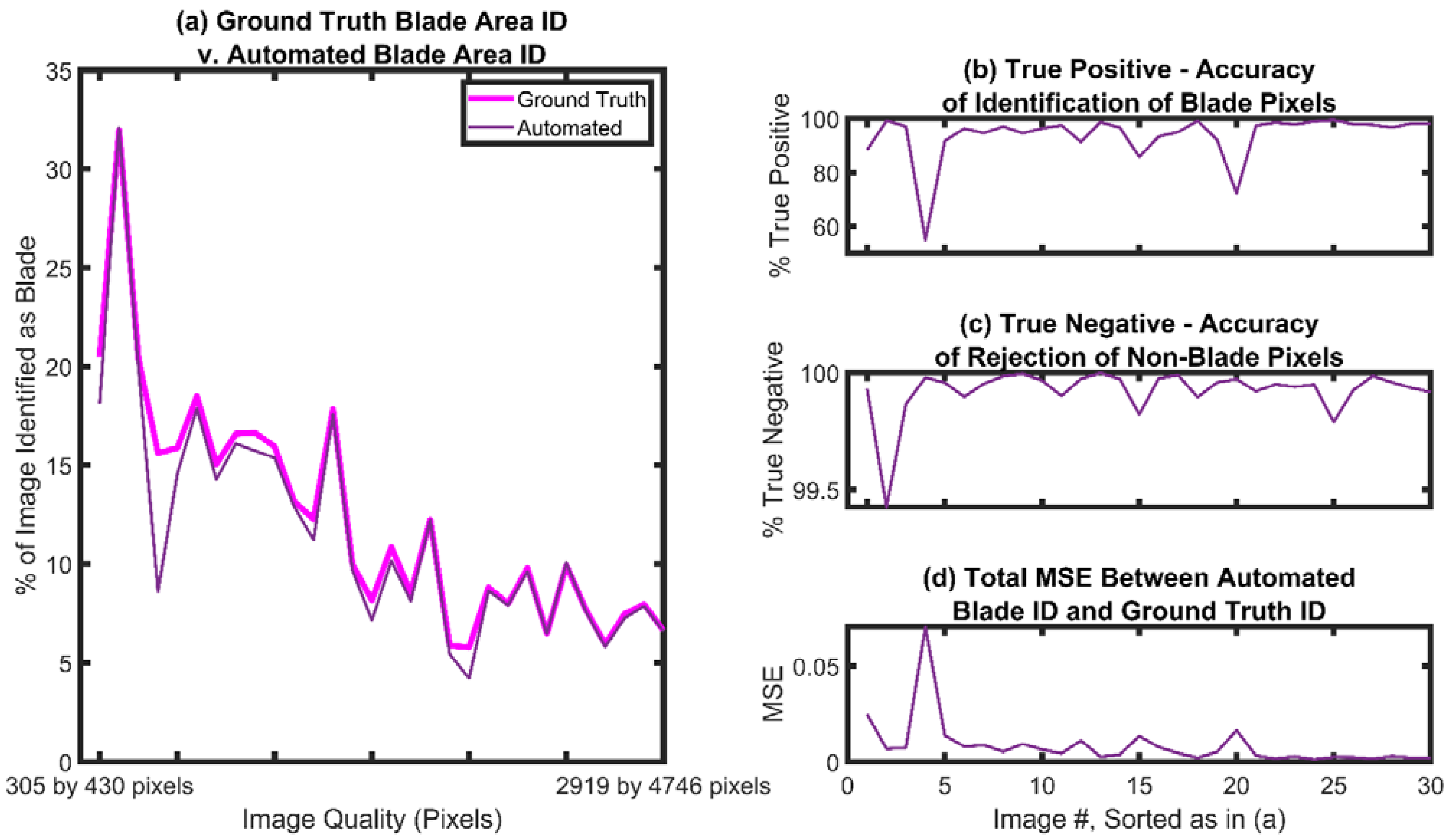

3.1. Blade Area Quantification

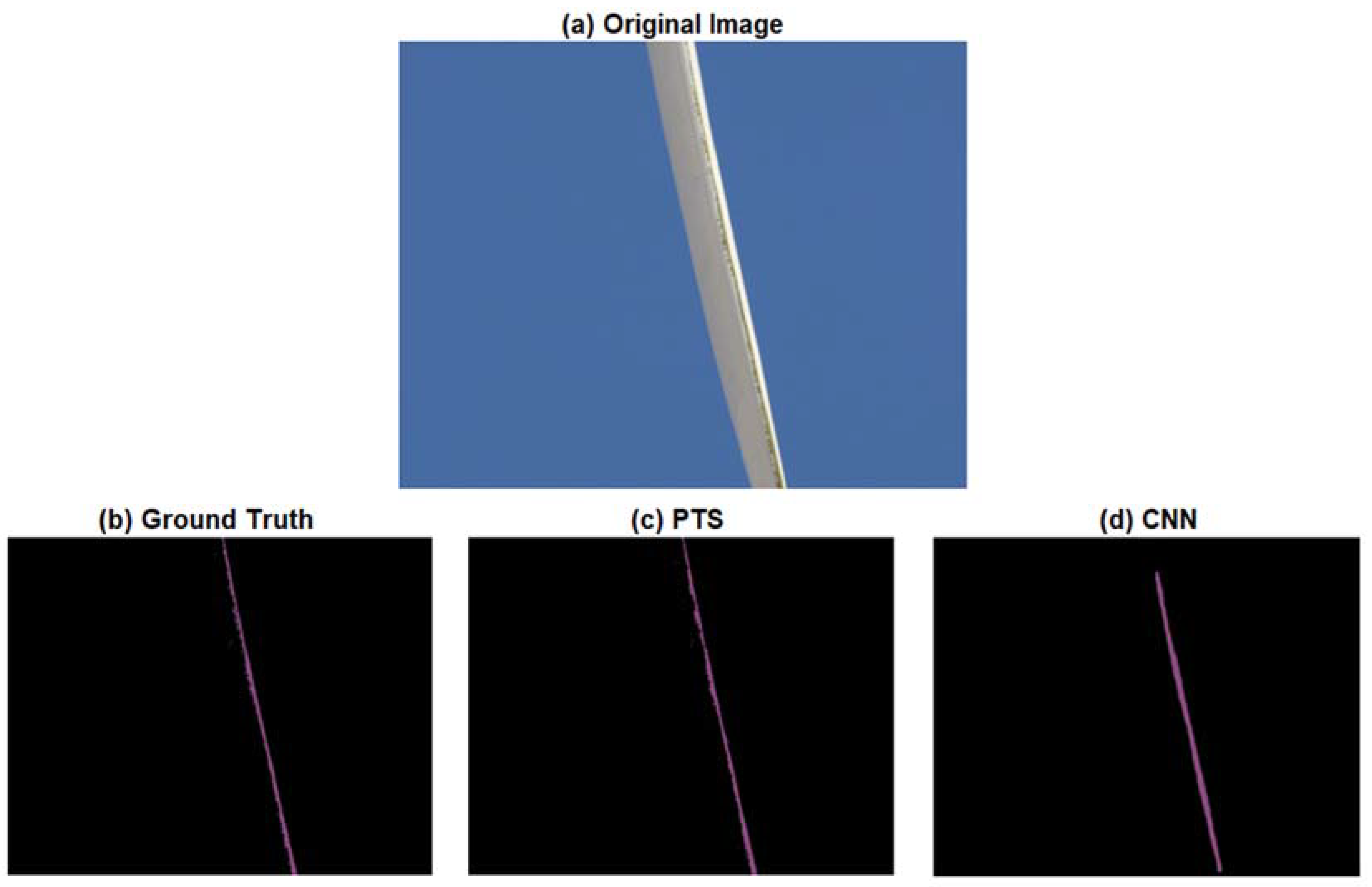

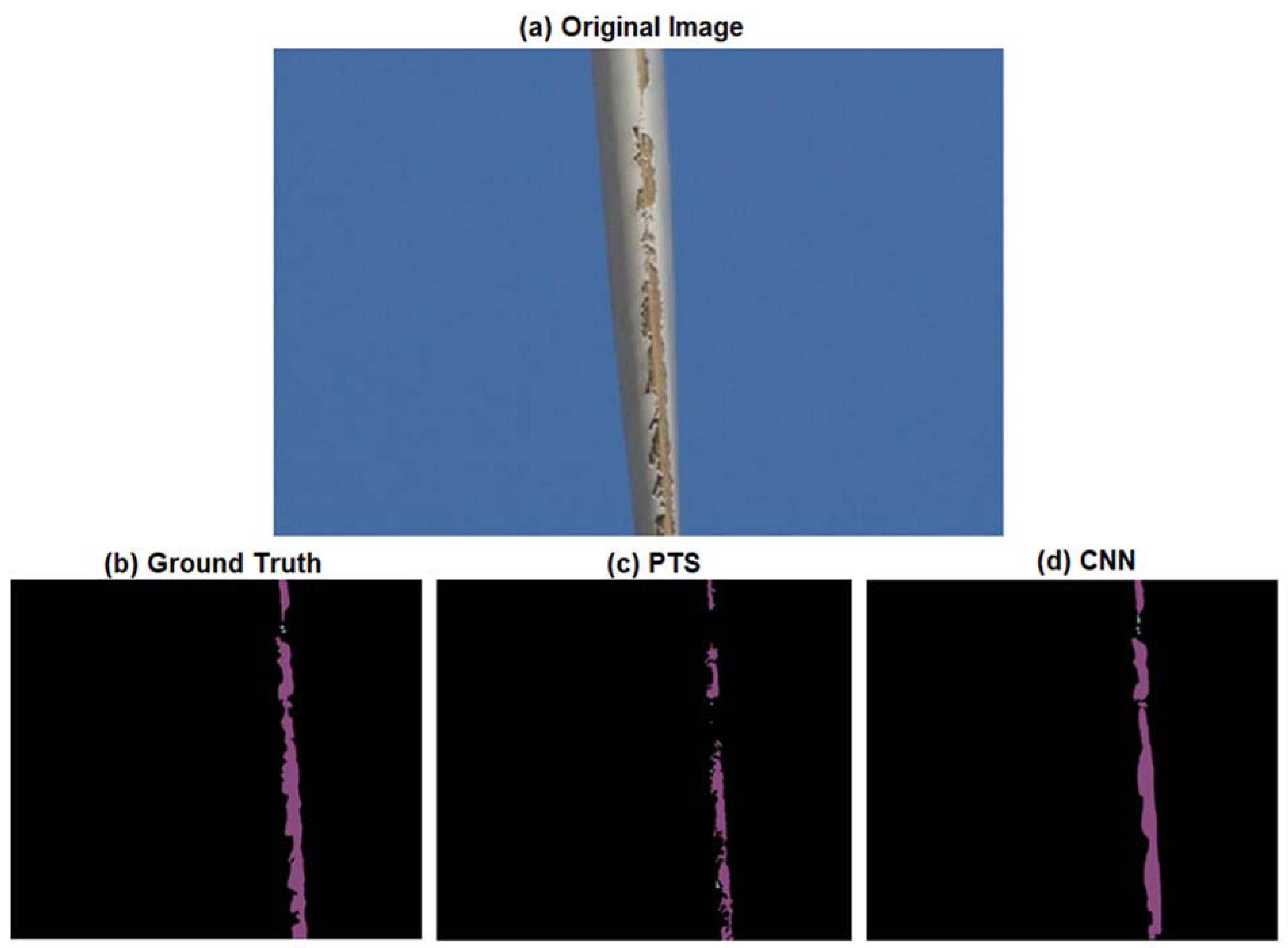

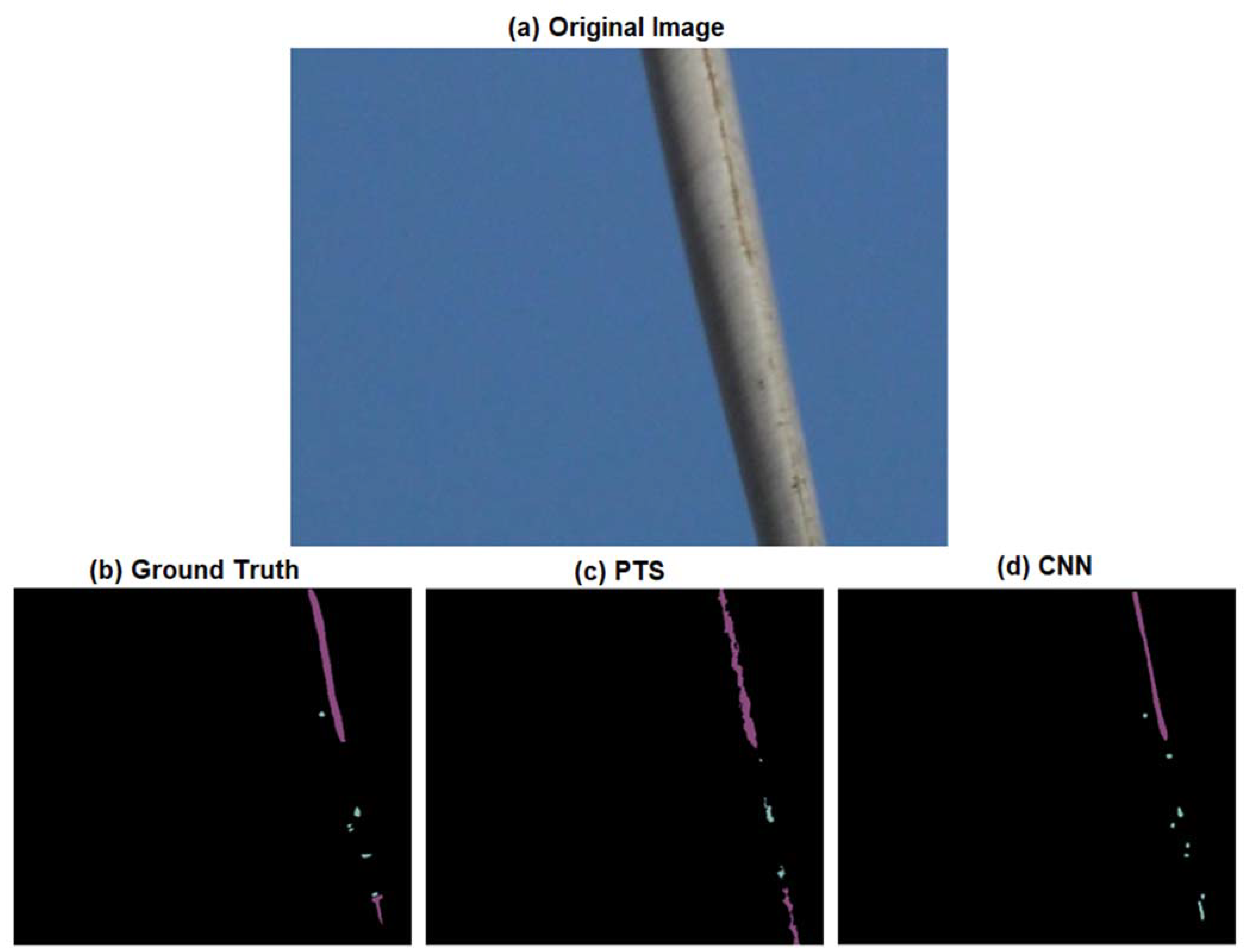

3.2. Illustrative Examples of the Representation of Damage Areas: Comparison between CNN and PTS Models

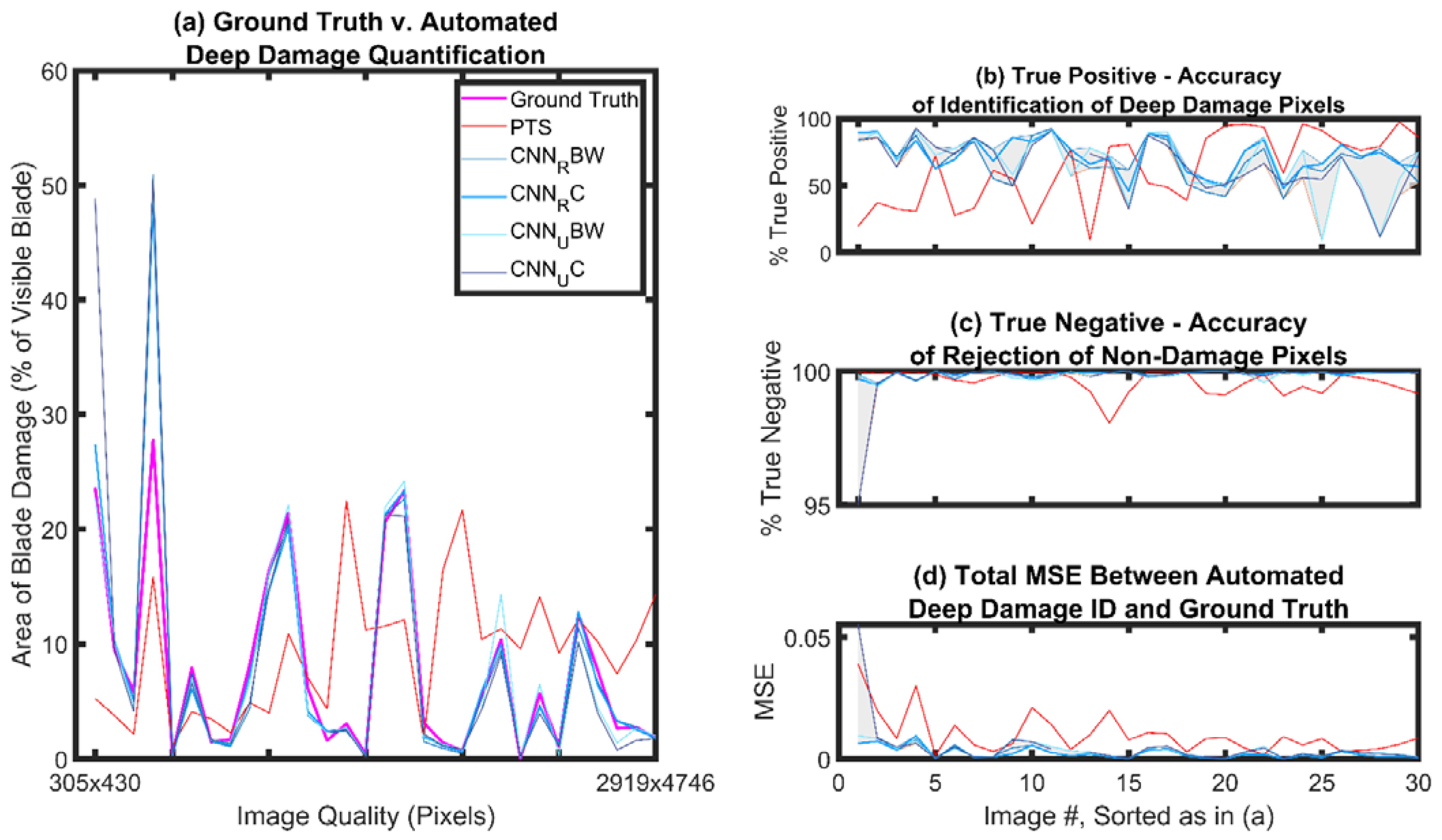

3.3. Damage Quantification

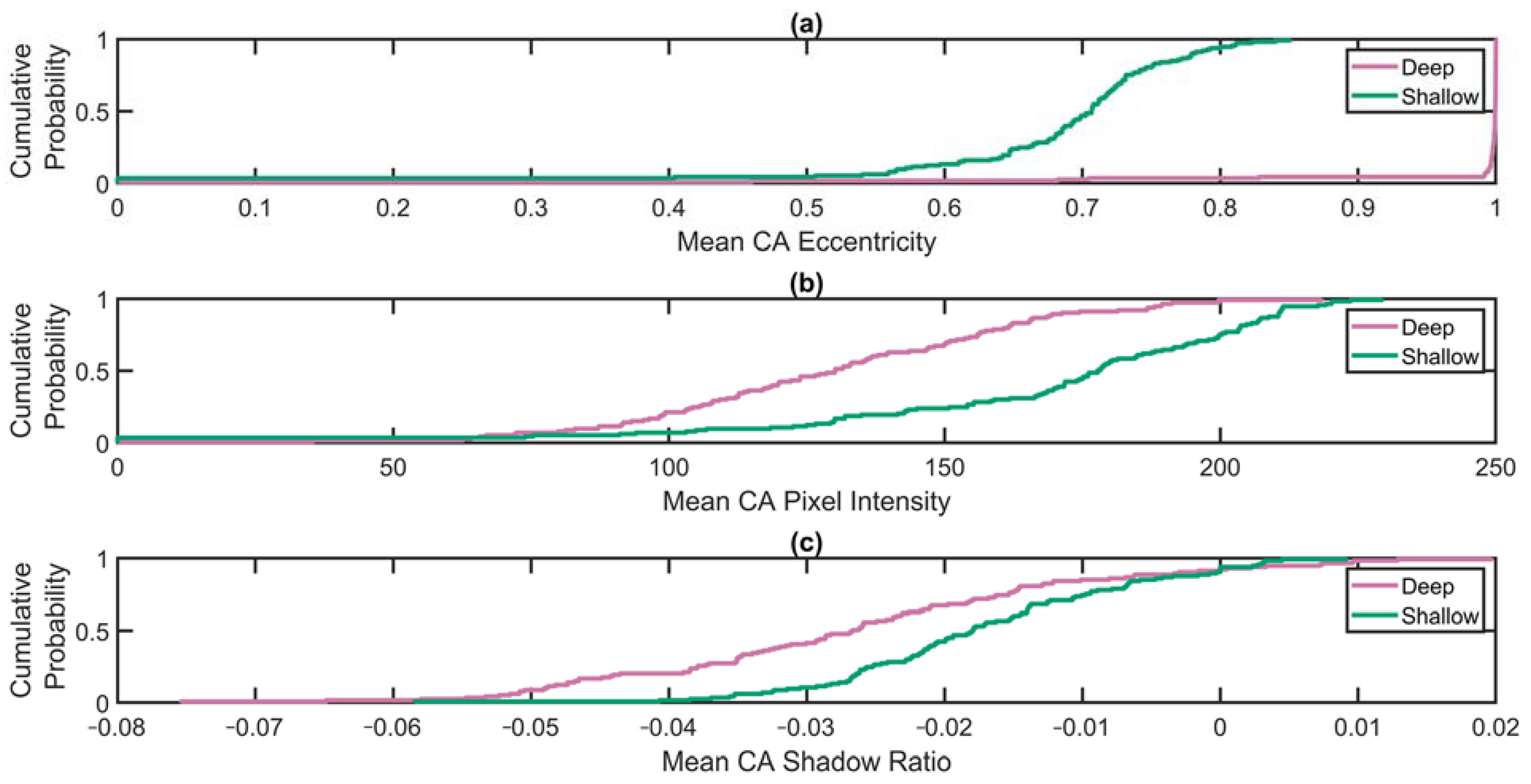

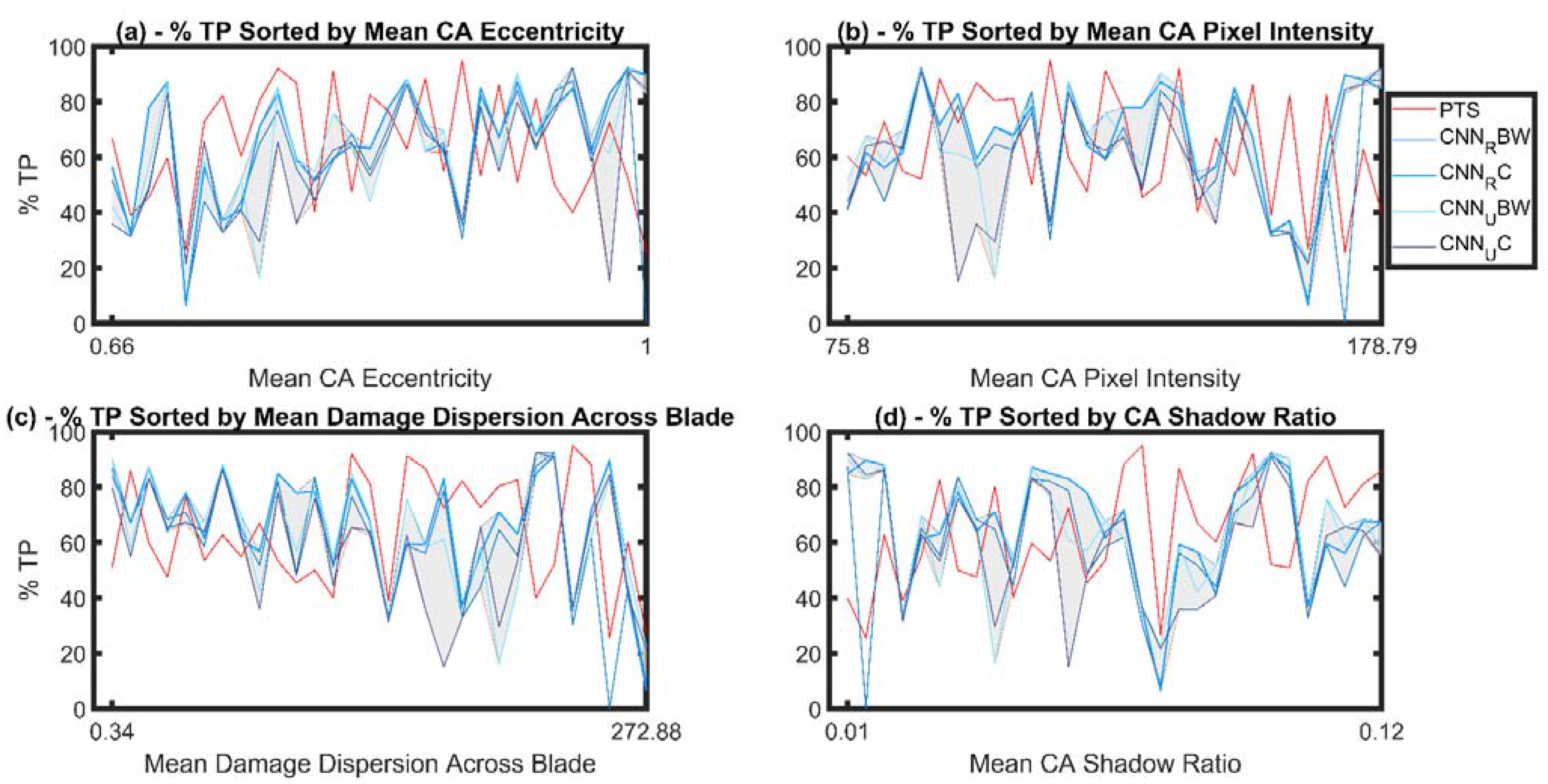

3.4. Damage Classification

4. Discussion and Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Wiser, R.; Bolinger, M.; Hoen, B.; Millstein, D.; Rand, J.; Barbose, G.; Darghouth, N.; Gorman, W.; Jeong, S.; Paulos, B. Land-Based Wind Market Report: 2022 Edition; Lawrence Berkeley National Lab: Berkeley, CA, USA, 2022.

- Barthelmie, R.J.; Shepherd, T.J.; Aird, J.A.; Pryor, S.C. Power and wind shear implications of large wind turbine scenarios in the US Central Plains. Energies 2020, 13, 4269.

- Musial, W.; Spitsen, P.; Duffy, P.; Beiter, P.; Marquis, M.; Hammond, R.; Shields, M. Offshore Wind Market Report: 2022 Edition; (No. NREL/TP-5000-83544); National Renewable Energy Lab: Golden, CO, USA, 2022.

- Alsaleh, A.; Sattler, M. Comprehensive life cycle assessment of large wind turbines in the US. Clean Technol. Environ. Policy 2019, 21, 887–903.

- Du, Y.; Zhou, S.; Jing, X.; Peng, Y.; Wu, H.; Kwok, N. Damage detection techniques for wind turbine blades: A review. Mech. Syst. Signal Process. 2020, 141, 106445.

- Pryor, S.C.; Barthelmie, R.J.; Cadence, J.; Dellwik, E.; Hasager, C.B.; Kral, S.T.; Reuder, J.; Rodgers, M.; Veraart, M. Atmospheric Drivers of Wind Turbine Blade Leading Edge Erosion: Review and Recommendations for Future Research. Energies 2022, 15, 8553.

- Mishnaevsky, L., Jr.; Hasager, C.B.; Bak, C.; Tilg, A.M.; Bech, J.I.; Rad, S.D.; Fæster, S. Leading edge erosion of wind turbine blades: Understanding, prevention and protection. Renew. Energy 2021, 169, 953–969.

- Herring, R.; Dyer, K.; Martin, F.; Ward, C. The increasing importance of leading edge erosion and a review of existing protection solutions. Renew. Sustain. Energy Rev. 2019, 115, 109382.

- Mishnaevsky, L., Jr.; Thomsen, K. Costs of repair of wind turbine blades: Influence of technology aspects. Wind Energy 2020, 23, 2247–2255.

- Ravishankara, A.K.; Özdemir, H.; Van der Weide, E. Analysis of leading edge erosion effects on turbulent flow over airfoils. Renew. Energy 2021, 172, 765–779.

- Amirzadeh, B.; Louhghalam, A.; Raessi, M.; Tootkaboni, M. A computational framework for the analysis of rain-induced erosion in wind turbine blades, part I: Stochastic rain texture model and drop impact simulations. J. Wind Eng. Ind. Aerodyn. 2017, 163, 33–43.

- Fraisse, A.; Bech, J.I.; Borum, K.K.; Fedorov, V.; Johansen, N.F.J.; McGugan, M.; Mishnaevsky, L., Jr.; Kusano, Y. Impact fatigue damage of coated glass fibre reinforced polymer laminate. Renew. Energy 2018, 126, 1102–1112.

- Carraro, M.; De Vanna, F.; Zweiri, F.; Benini, E.; Heidari, A.; Hadavinia, H. CFD modeling of wind turbine blades with eroded leading edge. Fluids 2022, 7, 302.

- Han, W.; Kim, J.; Kim, B. Effects of contamination and erosion at the leading edge of blade tip airfoils on the annual energy production of wind turbines. Renew. Energy 2018, 115, 817–823.

- Schramm, M.; Rahimi, H.; Stoevesandt, B.; Tangager, K. The influence of eroded blades on wind turbine performance using numerical simulations. Energies 2017, 10, 1420.

- Papi, F.; Cappugi, L.; Salvadori, S.; Carnevale, M.; Bianchini, A. Uncertainty quantification of the effects of blade damage on the actual energy production of modern wind turbines. Energies 2020, 13, 3785.

- Gaudern, N. A practical study of the aerodynamic impact of wind turbine blade leading edge erosion. J. Phys. Conf. Ser. 2014, 524, 012031.

- Letson, F.; Shepherd, T.J.; Barthelmie, R.J.; Pryor, S.C. WRF modeling of deep convection and hail for wind power applications. J. Appl. Meteorol. Climatol. 2020, 59, 1717–1733.

- Verma, A.S.; Castro, S.G.; Jiang, Z.; Teuwen, J.J. Numerical investigation of rain droplet impact on offshore wind turbine blades under different rainfall conditions: A parametric study. Compos. Struct. 2020, 241, 112096.

- Knobbe-Eschen, H.; Stemberg, J.; Abdellaoui, K.; Altmikus, A.; Knop, I.; Bansmer, S.; Balaresque, N.; Suhr, J. Numerical and experimental investigations of wind-turbine blade aerodynamics in the presence of ice accretion. In Proceedings of the AIAA Scitech 2019 Forum, San Diego, CA, USA, 7–11 January 2019; p. 0805.

- Lau, B.C.P.; Ma, E.W.M.; Pecht, M. Review of offshore wind turbine failures and fault prognostic methods. In Proceedings of the IEEE 2012 Prognostics and System Health Management Conference, Beijing, China, 23–25 May 2012; pp. 1–5.

- Wood, R.J.; Lu, P. Leading edge topography of blades—A critical review. Surf. Topogr. 2021, 9, 023001.

- Slot, H.M.; Gelinck, E.R.M.; Rentrop, C.; Van Der Heide, E. Leading edge erosion of coated wind turbine blades: Review of coating life models. Renew. Energy 2015, 80, 837–848.

- Springer, G.S.; Yang, C.I.; Larsen, P.S. Analysis of rain erosion of coated materials. J. Compos. Mater. 1974, 8, 229–252.

- Pryor, S.C.; Letson, F.W.; Shepherd, T.J.; Barthelmie, R.J. Evaluation of WRF simulation of deep convection in the US Southern Great Plains. J. Appl. Meteorol. Climatol. 2023, 62, 41–62.

- Letson, F.; Barthelmie, R.J.; Pryor, S.C. Radar-derived precipitation climatology for wind turbine blade leading edge erosion. Wind Energy Sci. 2020, 5, 331–347.

- Keegan, M.H. Wind Turbine Blade Leading Edge Erosion, an Investigation of Rain Droplet and Hailstone Impact Induced Damage Mechanisms. Ph.D. Thesis, University of Strathclyde, Glasgow, UK, 2014.

- Springer, G.S. Erosion by Liquid Impact; Springer: Berlin, Germany, 1976.

- Castorrini, A.; Venturini, P.; Corsini, A.; Rispoli, F. Machine learnt prediction method for rain erosion damage on wind turbine blades. Wind Energy 2021, 24, 917–934.

- Hoksbergen, N.; Akkerman, R.; Baran, I. The Springer model for lifetime prediction of wind turbine blade leading edge protection systems: A review and sensitivity study. Materials 2022, 15, 1170.

- Eisenberg, D.; Laustsen, S.; Stege, J. Wind turbine blade coating leading edge rain erosion model: Development and validation. Wind Energy 2018, 21, 942–951.

- Hoksbergen, T.H.; Baran, I.; Akkerman, R. Rain droplet erosion behavior of a thermoplastic based leading edge protection system for wind turbine blades. IOP Conf. Ser. Mater. Sci. Eng. 2020, 942, 012023.

- Tobin, E.F.; Young, T.M. Analysis of incubation period versus surface topographical parameters in liquid droplet erosion tests. Mater. Perform. Charact. 2017, 6, 144–164.

- McGugan, M.; Mishnaevsky, L., Jr. Damage mechanism based approach to the structural health monitoring of wind turbine blades. Coatings 2020, 10, 1223.

- Stephenson, S. Wind blade repair: Planning, safety, flexibility. Composites World, 1 August 2011.

- Major, D.; Palacios, J.; Maughmer, M.; Schmitz, S. Aerodynamics of leading-edge protection tapes for wind turbine blades. Wind Eng. 2021, 45, 1296–1316.

- Bech, J.I.; Hasager, C.B.; Bak, C. Extending the life of wind turbine blade leading edges by reducing the tip speed during extreme precipitation events. Wind Energy Sci. 2018, 3, 729–748.

- Rempel, L. Rotor blade leading edge erosion-real life experiences. Wind Syst. Mag. 2012, 11, 22–24.

- Amirat, Y.; Benbouzid, M.E.H.; Al-Ahmar, E.; Bensaker, B.; Turri, S. A brief status on condition monitoring and fault diagnosis in wind energy conversion systems. Renew. Sustain. Energy Rev. 2009, 13, 2629–2636.

- Yan, Y.J.; Cheng, L.; Wu, Z.Y.; Yam, L.H. Development in vibration-based structural damage detection technique. Mech. Syst. Signal Process. 2007, 21, 2198–2211.

- Juengert, A.; Grosse, C.U. Inspection techniques for wind turbine blades using ultrasound and sound waves. In Proceedings of the NDTCE, Nantes, France, 30 June–3 July 2009; Volume 9.

- Van Dam, J.; Bond, L.J. Acoustic emission monitoring of wind turbine blades. Smart Mater. Non-Destr. Eval. Energy Syst. 2015, 9439, 55–69.

- Xu, D.; Wen, C.; Liu, J. Wind turbine blade surface inspection based on deep learning and UAV-taken images. J. Renew. Sustain. Energy 2019, 11, 053305.

- Sørensen, B.F.; Lading, L.; Sendrup, P. Fundamentals for Remote Structural Health Monitoring of Wind Turbine Blades-A Pre-Project; U.S. Department of Energy: Washington, DC, USA, 2002.

- Shihavuddin, A.S.M.; Chen, X.; Fedorov, V.; Nymark Christensen, A.; Andre Brogaard Riis, N.; Branner, K.; Bjorholm Dahl, A.; Reinhold Paulsen, R. Wind turbine surface damage detection by deep learning aided drone inspection analysis. Energies 2019, 12, 676.

- Yu, Y.; Cao, H.; Liu, S.; Yang, S.; Bai, R. Image-based damage recognition of wind turbine blades. In Proceedings of the 2017 2nd International Conference on Advanced Robotics and Mechatronics (ICARM), Hefei and Tai’an, China, 27–31 August 2017; pp. 161–166.

- Yang, P.; Dong, C.; Zhao, X.; Chen, X. The surface damage identifications of wind turbine blades based on ResNet50 algorithm. In Proceedings of the 2020 39th Chinese Control Conference (CCC), Shenyang, China, 27–30 July 2020; pp. 6340–6344.

- Bradley, D.; Roth, G. Adaptive thresholding using the integral image. J. Graph. Tools 2007, 12, 13–21.

- Zulpe, N.; Pawar, V. GLCM textural features for brain tumor classification. Int. J. Comput. Sci. Issues 2012, 9, 354.

- Sirmacek, B.; Unsalan, C. Damaged building detection in aerial images using shadow information. In Proceedings of the 2009 4th International Conference on Recent Advances in Space Technologies, Istanbul, Turkey, 11–13 June 2009; pp. 249–252.

- Unsalan, C.; Boyer, K.L. Linearized vegetation indices based on a formal statistical framework. IEEE Trans. Geosci. Remote Sens. 2004, 42, 1575–1585.

- Maniaci, D.C.; MacDonald, H.; Paquette, J.; Clarke, R. Leading Edge Erosion Classification System; Technical Report from IEA Wind Task 46 Erosion of Wind Turbine Blades; Technical University of Denmark: Lyngby, Denmark, 2022; 52p.

- Wang, Y.; Hu, R.; Zheng, X. Aerodynamic analysis of an airfoil with leading edge pitting erosion. J. Sol. Energy Eng. 2017, 139, 061002.

- Sareen, A.; Sapre, C.A.; Selig, M.S. Effects of leading edge erosion on wind turbine blade performance. Wind Energy 2014, 17, 1531–1542.

- Mishnaevsky, L., Jr. Root causes and mechanisms of failure of wind turbine blades: Overview. Materials 2022, 15, 2959.

- McGugan, M.; Pereira, G.; Sørensen, B.F.; Toftegaard, H.; Branner, K. Damage tolerance and structural monitoring for wind turbine blades. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 2015, 373, 20140077.

- Peacock, J.A. Two-dimensional goodness-of-fit testing in astronomy. Mon. Notices Royal Astron. Soc. 1983, 202, 615–627.

- Alloghani, M.; Al-Jumeily, D.; Mustafina, J.; Hussain, A.; Aljaaf, A.J. A systematic review on supervised and unsupervised machine learning algorithms for data science. In Supervised and Unsupervised Learning for Data Science. Unsupervised and Semi-Supervised Learning; Berry, M., Mohamed, A., Yap, B., Eds.; Springer: Cham, Switzerland, 2020; pp. 3–21.

- Zheng, X.; Lei, Q.; Yao, R.; Gong, Y.; Yin, Q. Image segmentation based on adaptive K-means algorithm. EURASIP J. Image Video Process. 2018, 2018, 1–10.

- Burney, S.A.; Tariq, H. K-means cluster analysis for image segmentation. Int. J. Comput. Appl. 2014, 96, 872–878.

- Maddern, W.; Stewart, A.; McManus, C.; Upcroft, B.; Churchill, W.; Newman, P. Illumination invariant imaging: Applications in robust vision-based localisation, mapping and classification for autonomous vehicles. In Proceedings of the Visual Place Recognition in Changing Environments Workshop, IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; Volume 2, p. 5.

- Sharma, G.; Wu, W.; Dalal, E.N. The CIEDE2000 Color-Difference Formula: Implementation Notes, Supplementary Test Data, and Mathematical Observations. Color Res. Appl. 2005, 30, 21–30.

- Chaki, N.; Shaikh, S.H.; Saeed, K. A Comprehensive Survey on Image Binarization Techniques; Springer: New Delhi, India, 2014; pp. 5–15.

- Rawat, W.; Wang, Z. Deep convolutional neural networks for image classification: A comprehensive review. Neural Comput. 2017, 29, 2352–2449.

- Hu, G.; Yang, Y.; Yi, D.; Kittler, J.; Christmas, W.; Li, S.Z.; Hospedales, T. When face recognition meets with deep learning: An evaluation of convolutional neural networks for face recognition. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Santiago, Chile, 7–13 December 2015; pp. 142–150.

- Dhillon, A.; Verma, G.K. Convolutional neural network: A review of models, methodologies and applications to object detection. Prog. Artif. Intell. 2020, 9, 85–112.

- Hossain, T.; Shishir, F.S.; Ashraf, M.; Al Nasim, M.A.; Shah, F.M. Brain tumor detection using convolutional neural network. In Proceedings of the 2019 1st International Conference on Advances in Science, Engineering and Robotics Technology, Dhaka, Bangladesh, 3–5 May 2019; pp. 1–6.

- Aird, J.A.; Quon, E.W.; Barthelmie, R.J.; Debnath, M.; Doubrawa, P.; Pryor, S.C. Region-based convolutional neural network for wind turbine wake characterization in complex terrain. Remote Sens. 2021, 13, 4438.

- Aird, J.A.; Quon, E.W.; Barthelmie, R.J.; Pryor, S.C. Region-based convolutional neural network for wind turbine wake characterization from scanning lidars. J. Phys. Conf. Ser. 2022, 2265, 032077.

- Guo, W.; Yang, W.; Zhang, H.; Hua, G. Geospatial object detection in high resolution satellite images based on multi-scale convolutional neural network. Remote Sens. 2018, 10, 131.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Badilla-Solórzano, J.; Spindeldreier, S.; Ihler, S.; Gellrich, N.C.; Spalthoff, S. Deep-learning-based instrument detection for intra-operative robotic assistance. Int. J. Comput. Assist. Radiol. Surg. 2022, 17, 1685–1695.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Jensen, F.; Jerg, J.F.; Sorg, M.; Fischer, A. Active thermography for the interpretation and detection of rain erosion damage evolution on GFRP airfoils. NDT E Int. 2023, 135, 102778.

| Parameter | Usage | Module (See Figure 3) | Value |
|---|---|---|---|
| E | Local contrast operation to enhance edges within the image | 1: Blade Area Quantification; 2: PTS damage proposal; 3: PTS damage classification | 0.2 |
| C | Chroma alteration; image saturation is enhanced | 1: Blade Area Quantification; 2: PTS damage proposal; 3: PTS damage classification | 0.5 |
| | Flat-field correction; Gaussian smoothing, with the standard deviation given in the Value column, is utilized to correct image shading distortion | 2: PTS damage proposal | 8 |

| Parameter | Usage | Value |
|---|---|---|
| Q | Shadow ratio quantile | 0.009 |
| | Adjusts sensitivity of adaptive thresholding to luminance of foreground/background pixels (the damage is often distinguished as background pixels due to associated lower pixel intensity) | 0.3 |

| Parameter | Usage | Value |
|---|---|---|
| Learning Rate | Specifies the pace at which the machine learning model learns the input data | 0.001 |
| Batch Size | Number of training examples in one iteration | 2 |
| Epochs | Number of times the CNN processes the entire dataset during training | 225 |
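As a rough illustration of how the batch size and epoch count listed above translate into training iterations, the sketch below stores the three tabulated values and derives the iteration counts. The class name and the `n_training_images` argument are hypothetical; the size of the training set is not given in this section, so any number passed in is purely illustrative.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class TrainingConfig:
    """CNN hyperparameters as listed in the table above."""
    learning_rate: float = 0.001
    batch_size: int = 2
    epochs: int = 225

    def iterations_per_epoch(self, n_training_images: int) -> int:
        # One iteration processes one batch; a final partial batch still counts.
        return math.ceil(n_training_images / self.batch_size)

    def total_iterations(self, n_training_images: int) -> int:
        # Every epoch passes over the whole training set once.
        return self.epochs * self.iterations_per_epoch(n_training_images)
```

For a hypothetical set of 100 training images, this gives 50 iterations per epoch and 11,250 iterations over the full 225 epochs.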

| | Image rotated to ensure the blade is horizontal in the image | Unrotated Image |
|---|---|---|
| Black and White | | |
| Color | | |

| Task | Model | Accuracy (% of Pixels Correctly Identified Relative to Ground Truth) |
|---|---|---|
| Blade Area Quantification | Module 1 | 93.7 |
| Damage Quantification | PTS (Module 2) | 63.9 |
| Damage Quantification | CNN | 61.4 (mean CNN); [58.1, 65.9] (min, max) |
| Deep Damage Classification | PTS (Module 3) | 62.1 |
| Deep Damage Classification | CNN | 68.3 (mean CNN); [65.5, 72.5] (min, max) |
| Shallow Damage Classification | PTS (Module 3) | 6.6 |
| Shallow Damage Classification | CNN | 26.1 (mean CNN); [24.5, 28.5] (min, max) |
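The accuracy column is described as the percentage of pixels correctly identified relative to ground truth. This section does not spell out the exact formula, so the sketch below shows two plausible readings for binary masks: agreement over all pixels, and the share of ground-truth pixels that the model recovers. Both function names are hypothetical, and neither is confirmed as the metric used for the table.

```python
import numpy as np

def agreement_percent(predicted, truth):
    """Percentage of all pixels whose predicted label matches the ground truth."""
    predicted = np.asarray(predicted, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    if predicted.shape != truth.shape:
        raise ValueError("masks must have the same shape")
    return 100.0 * float(np.mean(predicted == truth))

def truth_recovered_percent(predicted, truth):
    """Percentage of ground-truth positive pixels that are also predicted positive."""
    predicted = np.asarray(predicted, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    if predicted.shape != truth.shape:
        raise ValueError("masks must have the same shape")
    n_truth = int(truth.sum())
    if n_truth == 0:
        return float("nan")  # undefined when the ground truth has no positives
    return 100.0 * float(np.sum(predicted & truth)) / n_truth
```

The two readings can differ widely when the target class covers only a small part of the image, which is why the choice of definition matters when comparing the PTS and CNN figures.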

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Aird, J.A.; Barthelmie, R.J.; Pryor, S.C. Automated Quantification of Wind Turbine Blade Leading Edge Erosion from Field Images. Energies 2023, 16, 2820. https://doi.org/10.3390/en16062820
