Abstract

In a previous research effort by this group, pseudo engine dynamometer data in multi-dimensional arrays were combined with dynamic equations to form a crank angle resolved engine model compatible with a real-time simulator. Combining the real-time simulator with external targets produced a software-in-the-loop (SIL) environment that supports near-real-time development of AI/ML and real-time deployment of the resulting AI/ML. Military applications, in particular, are unlikely to possess large quantities of non-sparse operational data that span the full operational range of the system, which hinders the development and deployment of AI/ML that is sufficient for near-real-time or real-time control. AI/ML has been shown to be well suited for predicting highly non-linear mathematical phenomena, and thus military systems could potentially benefit from the development and deployment of AI/ML acting as soft sensors. Given the sparse nature of the data, it becomes exceedingly important that AI/ML be developed and deployed in near-real-time or real-time in parallel to a real-time system to overcome the inadequacy of applicable data. This research effort used parallel processing to reduce the training duration of the shallow artificial neural networks (SANNs) and forest algorithms forming ensemble models. This research is novel in that the SIL environment enables pre-developed AI/ML to be adapted in near-real-time, or new AI/ML to be developed, in response to changes within the operation of the applied system, such as different load torques, engine speeds, and atmospheric conditions, to name a few. Over time, it is expected that the continued adaptation of the algorithms will lead to the development of AI/ML that is suitable for real-time control and energy management.

1. Introduction

Technology innovation is often at odds with corresponding improvements in energy efficiency. Often, military hardware is extremely effective, but if the technology provides an improvement in energy efficiency, it is likely a coincidence instead of a planned outcome. The United States Energy Information Administration (EIA) reported in its 2023 Annual Energy Outlook that total carbon emissions due to energy production in the United States have declined since 2005 and that, due to the rapid integration of renewables and an emphasis on electrification of certain energy-intensive industries, carbon emissions are projected to decline further through 2030 [1]. Further, the EIA reports that overall energy consumption by the Department of Defense has decreased by 54% since 1975 [2]. However, another report, written by the National Academies of Sciences, Engineering, and Medicine in 2021, states that since World War II, the Army alone has come to consume approximately 20 times more energy per soldier while conducting combat operations [3].

In 1947, the first practical semiconductor transistor [4] was developed by William Shockley, John Bardeen, and Walter Brattain. The prevalence of these semiconductor devices then gradually gave rise to the birth of modern-day electronics, ultimately coming to a head in 1979 [5], when General Motors introduced the first Engine Control Module (ECM). The ECM was used to control the fuel injection of the internal combustion engine. Up to this point, all vehicle systems were regulated and controlled by the operator, or through mechanical control system technologies.

By the mid to late 2000s, the term ECM was recast in the more general form of the Electronic Control Unit (ECU). The standard automobile manufactured during this period had anywhere from 20 to 40 individual ECUs required to improve the stability and control of modern-day automobiles. Presently, modern-day automobiles have anywhere from 60 to 80 or more ECUs, capable of controlling all aspects of the automobile, including comfort and infotainment-based systems. Civilian-based vehicles rely heavily on the fusion of electronic controls and sensing technologies to provide unparalleled safety and control capabilities. Modern-day military vehicles, on the other hand, have lagged significantly, largely because military vehicles must operate in extremely inhospitable and chaotic environments.

Environmental factors, combined with diversity of mission requirements, often require increased energy utilization, which is ultimately governed by combustible fuels. Boosting energy utilization demands higher engine output, but this escalates size, weight, and armor needs, posing a challenge for manufacturers to accommodate the U.S. Army’s strict design criteria. Another option is to manage onboard energy and power (E&P) resources selectively, disabling or enabling capabilities as needed, or to hybridize the vehicle’s electrical mechanical architecture, thus enabling diverse E&P resource sharing. According to Mittal et al. [6], tactical and combat vehicles are off or not in use for 60% to 80% of daily utilization, idle for between 15% and 30% of the time, and are in motion for between 0% and 10% of the time. While in operation, a substantial portion of vehicle usage involves idling, which is likely why engineers and scientists have carried auto start-stop technology, now commonly found within civilian vehicle variants, over into military vehicle variants. It is expected that this innovation could lead to a 20% reduction in total fuel utilization. A reduction in fuel utilization leads to reduced logistical support requirements and the ability to manage internal energy utilization based on active mission requirements.

A dynamic and chaotic potential battlefield environment combined with the increased introduction of electrification of weapons, vehicles, and systems, will likely further increase the thirst for energy in military formations. In a future potential scenario of contested lines of communication, environment/geographical limitations, and multi-dimensional conflict, simply moving more energy supply in the form of petroleum fuels may not be adequate. Energy supply, analogous to water, food, or ammunition on the battlefield, is a commodity that must be managed in near-real-time and real-time; due to the nature of evolving equipment, weapons, and tactics, failure to manage energy supply will severely degrade the effectiveness and efficiency of future military operations.

Energy and power (E&P) capabilities and requirements have risen sharply for military systems as the needs of the warfighter continue to change. Modern systems are pushed harder, faster, and for longer periods of time when compared to aging legacy systems, supporting a wider operational range. E&P is quickly becoming a separate and crucial logistical burden in which all multi-domain operations must compete for a finite number of resources over short time scales, i.e., a multi-variate optimization problem. Such resources could be constrained or contested in the future, resulting from environmental or adversarial effects. There is a growing need to understand the current and future E&P utilization from a bottom-up perspective which can provide both high granularity and a holistic understanding in an effective, efficient, and timely fashion (near-real-time, or real-time). This information could be elevated to understand the broader E&P requirements and capabilities of systems, systems of systems, and the battlefield, and further tied to both local and global mission requirements and capabilities.

E&P is a commodity that must be sensed, detected, tracked, and analyzed in near-real-time or real-time; failure to adequately understand the current and future energy utilization can severely degrade military operations, decreasing the efficiency or effectiveness of the warfighter. The development of knowledge or intelligence regarding a system’s energy utilization is known as energy awareness. Energy awareness can subsequently be used to aid in near-real-time and real-time optimization and control of complex systems or for preventative maintenance. Energy awareness is a capability that requires computationally efficient reduced order modeling techniques, application of AI/ML as soft sensors and as predictive analytics, atmospheric intelligence from which operational intelligence may be inferred, and the inclusion of interconnected, distributed, computational processing and communication resources capable of ingesting, transmitting, receiving, and analyzing large quantities of sparse un-labeled data, made possible by the internet of battlefield things (IoBT).

The U.S. Army’s climate and modernization priorities [7,8] necessitate major improvements to the network technologies required to command and control forces throughout multi-domain operations (MDO), enabled by the Internet of Battlefield Things (IoBT). IoBT provides an avenue for the development of new and improved energy management, control, and optimization policies capable of near-real-time or real-time execution. These capabilities will be enabled by reduced order modeling techniques, development and deployment of AI/ML, advanced predictive analytics, the fusion of near-real-time sensor data and AI/ML, deployment of distributed graphics processing units (GPUs), and cloud computing technologies leading to machine intelligence, improved energy solutions, and battlefield energy awareness.

One of the rapidly growing ways to tackle modern complex problems is through the exploitation of artificial intelligence and machine learning (AI/ML) for data ingestion, analysis, and as soft sensors. Artificial intelligence can be used to monitor activities and simulate them in real-time, making it a valuable method for research [9]. Additionally, machine learning is a useful way to aid AI in making predictions and testing technology. AI/ML is used for soft sensing and for building models that can predict real-world conditions and results. For energy to be managed effectively, one must understand, through modeling, where energy is being produced, consumed, and wasted. This “energy awareness”, at all scales from the soldier through the theater, is vital to making meaningful improvements. AI/ML technology, coupled with soft sensing, is used in many industries seeking to improve predictions and procedures that are focused on improving efficiency. One example of this is seen in the pharmaceutical industry. Rathore et al. demonstrated that AI/ML and soft sensing are decreasing the amount of human supervision required, while also creating more autonomous processes in the healthcare industry [10]. Additionally, these concepts are being used in bioprocessing. A team led by Khanal used AI/ML to predict and track pH, nutrients, temperature, and other important factors, gaining critical insight to assist in the management of soil in agricultural operations and, therefore, improving efficiency and effectiveness in the food supply chain [11]. Katare and colleagues studied how AI/ML is being implemented in autonomous driving services to improve energy efficiency by tracking fuel usage and environmental factors [12]. Other AI/ML soft sensing applications are realized in the areas of smart homes [13] and vehicular battery state of charge [14]. Examples like these indicate that there is substantial potential for AI/ML and smart sensing to dramatically improve efficiency and effectiveness in manned, semi-autonomous, and autonomous systems.

Improving the accuracy of modeling in energy systems is vital to increasing efficiency. Gaining an understanding of critical system state variables in areas where they are difficult to measure, yet which reside in an area of the engine where most of the thermal energy is lost during combustion, improves the ability to model exergy losses and improve overall efficiency. Similar to recent work by Wiesner et al., this work also employs a software-in-the-loop methodology, leveraging a real-time simulator to improve the accuracy of the AI/ML algorithm in real-time [15]. Parallelism was also exploited to reduce computational duration by running the training of the individual algorithms simultaneously on separate threads.

Two different AI/ML architectures were deployed in parallel to the operation of the real-time simulator: (1) shallow artificial neural networks, which are computationally inexpensive and non-complex, yet capable of approximating nonlinear phenomena using various architectures with a minimum set of input features, and (2) random forest ensemble models, which are robust to disturbance perturbations, less likely to overfit, and considered to be one of the most accurate machine learning algorithms. On the other hand, random forest training algorithms suffer lengthier training durations and require large memory reserves to store algorithm trees; they could nevertheless be beneficial for near-real-time applications with careful selection of input features and the number of trees. Military systems are unlikely to be adequately sensed when compared to civilian vehicles, and they lack sufficient access to data that span the full operational capabilities of a system (access may also require increased scrutiny based on the sensitivity of a system). This limits the ability of military systems to benefit from technological improvements aided by AI/ML. Being able to develop AI/ML in near-real-time or real-time alleviates the need to access sensitive or incomplete data; instead, the AI/ML can simply be trained over the life cycle of a system or a contingent of systems, ultimately permitting more effective and efficient operation when adapted. The novelty of this paper lies in the rigorous use of AI/ML and the associated aforementioned techniques to improve energy efficiency in a dynamic environment; to the authors’ knowledge, this specific application of AI/ML is not documented in the literature. With new technology and improvements like these, the hope is to obtain more accurate energy loss predictions, so that energy conservation methods can be used to increase efficiency and reduce losses.

The remainder of the publication is organized as follows. Section 2 discusses the software-in-the-loop environment. Section 3 contains the results, which are separated into three subsections: Section 3.1 contains the results obtained from the shallow artificial neural network training phase, Section 3.2 contains the results obtained from the random forest ensemble model training phase, and Section 3.3 contains the results from the neural network testing phase. The document is then concluded by Section 4, highlighting the conclusions and future work.

2. Methodology

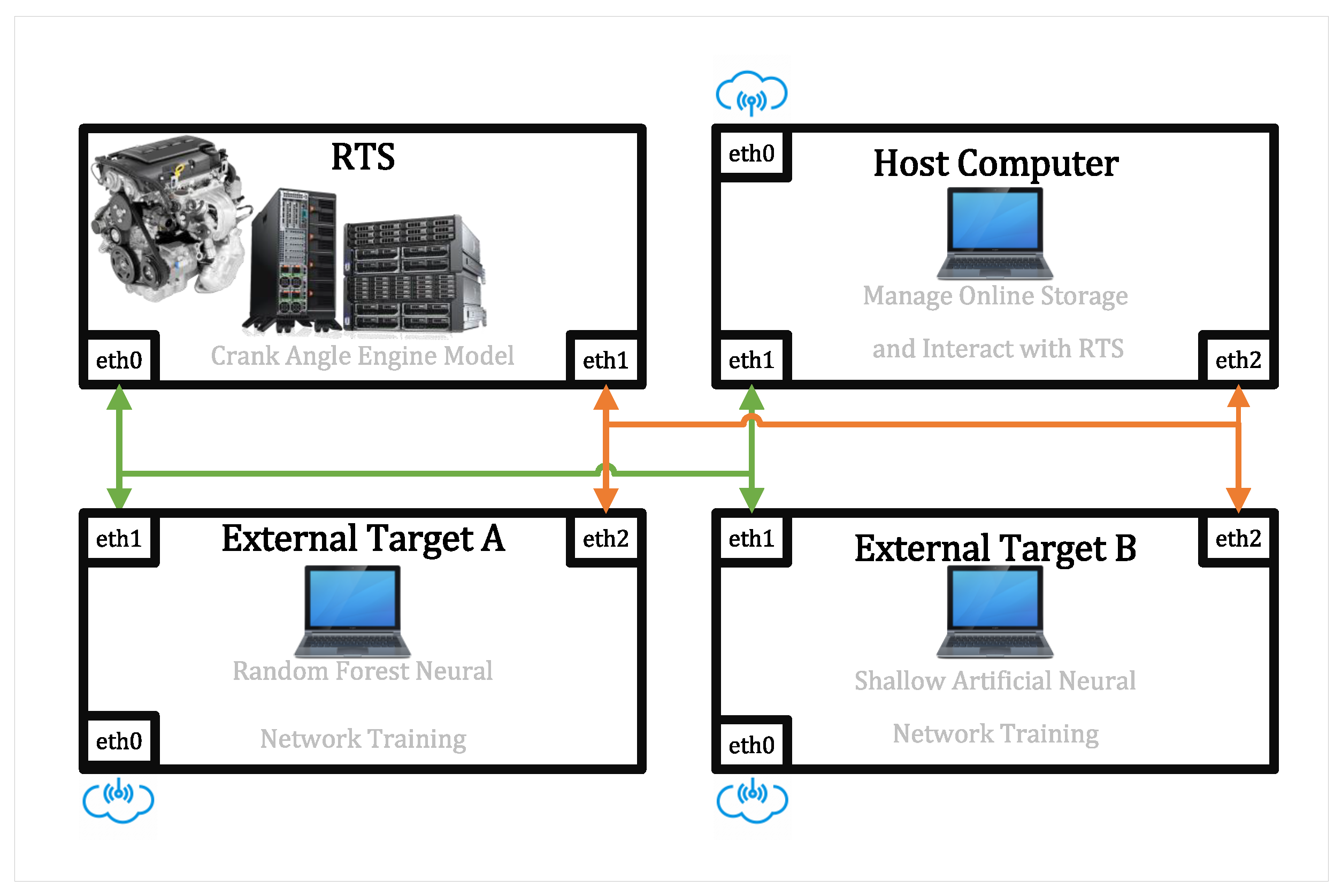

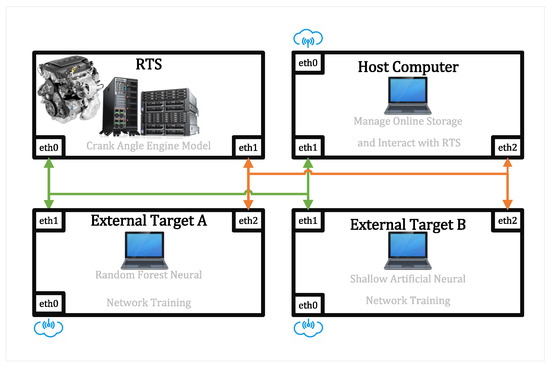

The software-in-the-loop (SIL) environment was made up of four separate components, as seen in Figure 1: (1) the OPAL real-time simulator, more commonly referred to as OPAL-RT and henceforth referred to as the real-time simulator (RTS), which contains a real-time compatible crank angle resolved engine model (Section 2.1); (2) the host computer, containing a console that enables the user to observe and interact with the RTS (Section 2.2); (3) external target A, configured to develop the shallow artificial neural networks (SANNs) forming ensemble models for dissimilar engine operating conditions (Section 2.3); and (4) external target B, which creates a forest ensemble model of the aggregate response of the in-cylinder conditions (Section 2.4). The advancement and improvement of the algorithms is then discussed in Section 2.5. The host computer and both external targets communicate using UDP/IP. Further, the host and external targets can access a cloud storage repository. The cloud storage repository contains data collected from the RTS, uploaded as they become available using the RTS’s built-in data resources. Each of the four components is described in greater detail within subsequent subsections of this manuscript.

Figure 1.

The SIL environment consists of the RTS, the host computer, and two external target machines connected through UDP/IP communication. The host computer and both external targets are all connected to a cloud storage repository.

2.1. RTS Compatible Model

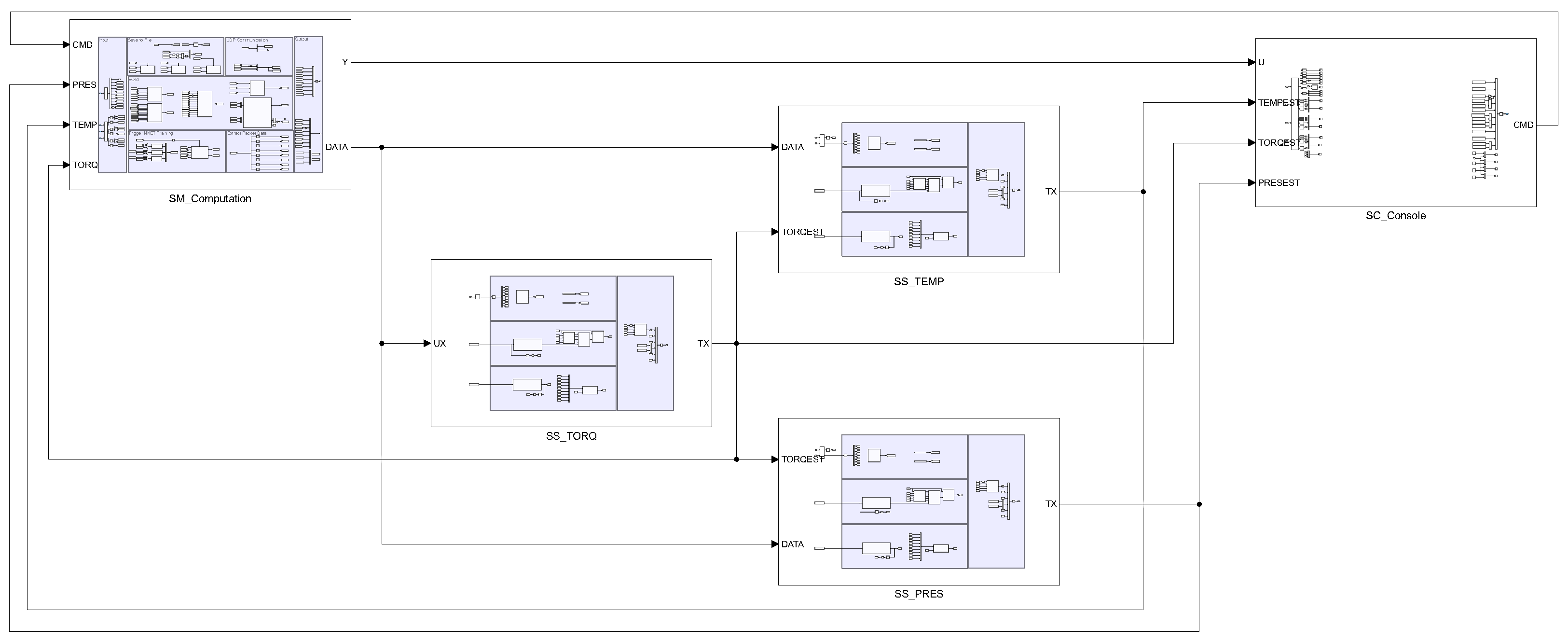

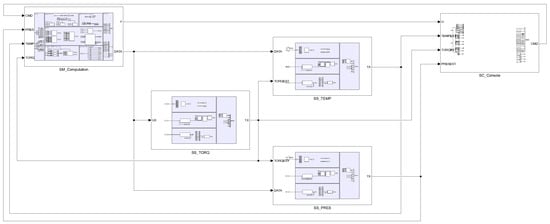

The RTS used within this study was designed and developed to be compatible with MATLAB/Simulink. The RTS-compatible model was separated into five subsystems, as shown in Figure 2: (1) the SM subsystem, (2) the SC subsystem, (3) the SS_TORQ subsystem, (4) the SS_TEMP subsystem, and (5) the SS_PRES subsystem. The SM subsystem contains the crank angle resolved engine model described by Jane et al. [16]. The SC subsystem contains the console, which is described in more detail in Section 2.2. Each of the remaining three subsystems contained multiple built-in blocks and custom system functions coded in C to implement each of the twenty-five shallow artificial neural networks and random forest ensemble models.

Figure 2.

The RTS compatible model includes five subsystems, the SC, SM, SS_TORQ, SS_TEMP, and SS_PRES subsystems. The SC subsystem contains the console, the SM subsystem contains the crank angle resolved engine model, and the SS_TORQ, SS_TEMP, and SS_PRES contain the per-cylinder generated torque and in-cylinder temperature and pressure AI/ML.

2.2. Host Computer

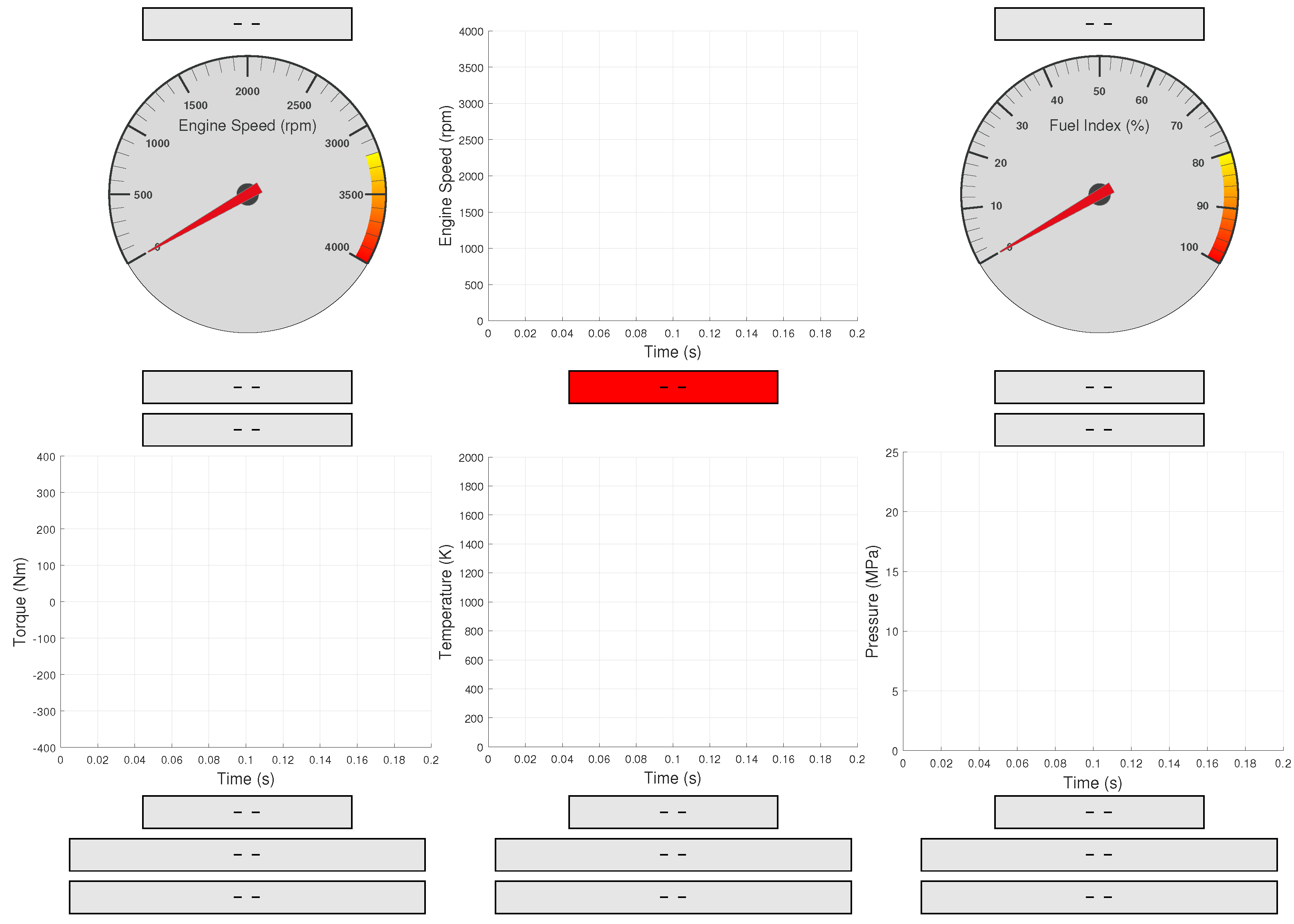

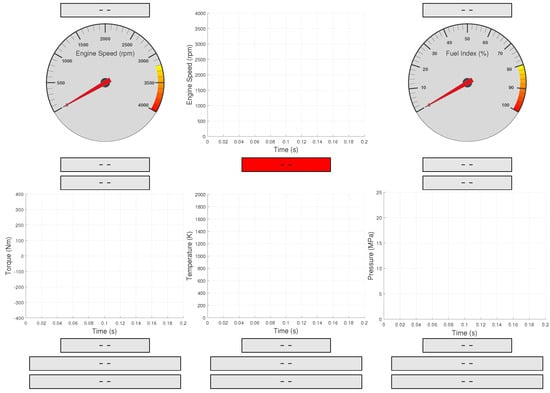

The host computer controls the RTS using the console, as shown in Figure 3. The console contains multiple figures, gauges, and displays to visually observe various RTS states. States of interest include the engine speed (leftmost gauge and the display immediately below the gauge) and the throttle/fuel index (rightmost gauge and the display immediately below the gauge). The topmost figure displays a time history comparing the reference engine speed command and the engine speed response. The bottom three figures display a time history comparing the actual engine state to the forecasted engine state produced by the SANN and the forest ensemble AI/ML model. From right to left, the per-cylinder generated torque, in-cylinder pressure, and temperature response would be observed when running. The topmost displays of the console show the amount of data transmitted to or received from the external targets and are used to ensure the correct number of bytes is transferred at any given time. The displays located immediately below the three side-by-side figures show an evaluation of the neural network via the performance function, the mean squared error. The bottommost displays highlight ten elements of data transmitted from external target B to the RTS; these displays were included to visually confirm that a UDP/IP message was received by the RTS. Finally, the red display counts down from 30 s to 0 s. This is the training duration, and the countdown ensures that conflicting training commands are ignored until the current training duration has been completed.

Figure 3.

The host computer console was used to visually observe and command or interact with the internal states of the RTS. Side portions of the console were removed, the portions that were removed are blocks that combine input signals into a vector signal or extract output elements contained in a vector signal which are then used to populate the console’s displays.

UDP/IP messages are exchanged between the RTS and the host computer, enabling the host computer to visually observe the selected internal states of the RTS and command the RTS. This UDP/IP message link is also used to download the saved simulation data as they become available on the RTS. The data are then uploaded directly to the cloud storage repository, enabling any external target with access to the cloud storage repository to utilize the RTS simulation data for developing the AI/ML algorithms.

2.3. External Target A-Ensemble Forest Model Generator

The computer designated as external target A was configured to send and receive UDP/IP messages using the client-server protocol, and operated in much the same way as external target B. The external target remains inactive for much of the simulation. It continuously observes the online data repository, looking for the presence of twenty-five separate data files, which are generated within the random forest artificial neural network data collection phase.

Once all data files exist within the data repository, the random forest ensemble model training phase begins. The individual data files were sequentially loaded into memory, and the input and target features were then extracted and down-selected. Each data file contained between 40 and 50 s worth of data sampled every s. An engine operating at 750 rpm or 3600 rpm completes 12.5 or 60 full revolutions every second, respectively, which corresponds to 6.25 or 30 complete engine cycles per second for a 4-stroke engine (one cycle spans two crankshaft revolutions). Thus, there will be 2000 samples representing separate engine cycles. If the engine is operating at a steady state condition, one or two engine cycles worth of data is sufficient to approximate the quasi-steady state behavior of the engine; thus, the input and target features are averaged for each distinctly different engine operating condition (load torque and engine speed), i.e., down-selected. Some additional preprocessing routines are then executed, and when concluded, the random forest ensemble AI/ML algorithms are trained in parallel using MATLAB’s Parallel Computing Toolbox [17].
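The engine-speed arithmetic and the averaging step in this paragraph can be checked with a few lines of code. The sketch below is in Python rather than the MATLAB used in the study, and the function names are hypothetical; it only illustrates the conversion from rpm to revolutions and 4-stroke cycles per second, and a column-wise mean as one plausible form of the down-selection.

```python
def revolutions_per_second(rpm: float) -> float:
    """Crankshaft revolutions completed each second."""
    return rpm / 60.0


def cycles_per_second(rpm: float, strokes: int = 4) -> float:
    """Complete engine cycles each second.

    A 4-stroke engine needs two crankshaft revolutions per cycle,
    a 2-stroke engine needs one.
    """
    revolutions_per_cycle = strokes / 2
    return revolutions_per_second(rpm) / revolutions_per_cycle


def average_features(rows):
    """Column-wise mean of a list of equally long feature rows.

    Assumption: down-selection is modeled here as a plain average of
    all samples recorded at one operating condition.
    """
    n = len(rows)
    return [sum(column) / n for column in zip(*rows)]


if __name__ == "__main__":
    for rpm in (750, 3600):
        print(rpm, revolutions_per_second(rpm), cycles_per_second(rpm))
```

Averaging collapses each operating condition to a single representative row, which is what keeps the later training set small.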

Three separate random forest ensemble AI/ML algorithms, or ensemble models, were then trained, one for each of the three engine states: per-cylinder generated torque, in-cylinder pressure, and in-cylinder temperature. Each of the algorithms was limited to one hundred individual trees. Due to the randomness of a random forest algorithm (the architecture will change depending on input feature values) and the nature of the RTS, the architecture of the random forest algorithm must be fixed or pre-allocated and then adapted once operational. Using the principles outlined in Zhang [18] and MATLAB’s documentation regarding the ensemble of learners for regression [19], each individual random forest tree could be converted into a selection matrix and a bit-vector matrix, in addition to a threshold vector and a categorical or numerical values vector. Reshaping the selection and bit-vector matrices into column vectors allows for concatenation with both the threshold and the categorical or numerical values vectors. Aggregating the resulting vector for each random forest tree effectively allows the entirety of the random forest ensemble AI/ML algorithm to be formalized into a column array. Each column array, a large double-precision vector representing one ensemble model, can be typecast into a sequence of unsigned integers and transmitted via UDP/IP to the RTS. This enables the pre-allocated memory of the RTS to be adapted, allowing the RTS to implement a random forest ensemble model. At this point, the training phase of the random forest algorithm concludes. The external target remains inactive until the end of the simulation, which concludes by subjecting the engine to a new set of dissimilar operating conditions that are included within the operational range of the engine but were not explicitly tested.
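The flatten-and-typecast step can be illustrated with a minimal sketch. This is not the authors' MATLAB code: the function names, the toy matrix shapes, the column-major reshape, and the little-endian byte order are all assumptions made for illustration, and Python's standard `struct` module stands in for MATLAB's typecast.

```python
import struct


def flatten_tree(selection, bit_vectors, thresholds, values):
    """Concatenate one tree's parameters into a flat list of doubles.

    The two matrices are reshaped column by column (as MATLAB's (:)
    would do) and followed by the threshold and value vectors.
    """
    flat = []
    for matrix in (selection, bit_vectors):
        n_rows, n_cols = len(matrix), len(matrix[0])
        flat.extend(float(matrix[r][c])
                    for c in range(n_cols) for r in range(n_rows))
    flat.extend(float(t) for t in thresholds)
    flat.extend(float(v) for v in values)
    return flat


def to_payload(doubles):
    """Reinterpret doubles as raw bytes (unsigned 8-bit integers).

    Assumption: little-endian IEEE 754 doubles, 8 bytes each.
    """
    return struct.pack(f"<{len(doubles)}d", *doubles)


def from_payload(payload):
    """Inverse of to_payload, as the receiving side would apply it."""
    count = len(payload) // 8
    return list(struct.unpack(f"<{count}d", payload))


if __name__ == "__main__":
    # Hypothetical toy tree; real shapes depend on the trained model.
    flat = flatten_tree([[1, 0], [0, 1]], [[1, 1], [0, 1]],
                        [0.5, 1.5], [10.0, 20.0, 30.0])
    payload = to_payload(flat)
    print(len(flat), len(payload), from_payload(payload) == flat)
```

Because every tree flattens to a vector whose length is known in advance, the receiver can reserve a fixed-size buffer before the simulation starts, which matches the pre-allocation constraint described above.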

The ensemble forest model parameters and optimization algorithm were configured to generate a regression forest ensemble model utilizing 18,000 observations, drawn from 720 samples for each of the 25 unique engine operating conditions. The model was trained using the Least-Squares Boosting (LSB) ensemble learning algorithm and was limited to 100 trees. Each model was configured to have a single floating-point scalar value as its input feature, namely the per-cylinder generated torque, in-cylinder temperature, or in-cylinder pressure prediction generated by the shallow artificial neural networks. Each ensemble model outputs a single floating-point scalar, namely the per-cylinder generated torque, in-cylinder temperature, or in-cylinder pressure.
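For readers unfamiliar with least-squares boosting, the sketch below shows the idea for a single scalar input: each new tree is fitted to the residual left by the trees before it. It is a simplified illustration in Python, not the MATLAB ensemble used in the study; the use of depth-one trees (stumps), the learning rate of 0.1, and all function names are assumptions.

```python
def fit_stump(x, residual):
    """Least-squares regression stump (one split) on a scalar input."""
    order = sorted(range(len(x)), key=lambda i: x[i])
    xs = [x[i] for i in order]
    rs = [residual[i] for i in order]
    n, total = len(xs), sum(rs)
    best, left_sum = None, 0.0
    for k in range(1, n):
        left_sum += rs[k - 1]
        if xs[k] == xs[k - 1]:
            continue  # cannot split between identical inputs
        left_mean = left_sum / k
        right_mean = (total - left_sum) / (n - k)
        # Maximising this score minimises the squared error of the split.
        score = k * left_mean ** 2 + (n - k) * right_mean ** 2
        if best is None or score > best[0]:
            best = (score, (xs[k - 1] + xs[k]) / 2, left_mean, right_mean)
    if best is None:  # all inputs identical: predict the mean everywhere
        mean = total / n
        return (float("inf"), mean, mean)
    return best[1:]


def predict_stump(stump, xi):
    threshold, left, right = stump
    return left if xi <= threshold else right


def fit_lsboost(x, y, n_trees=100, learn_rate=0.1):
    """Boost stumps on the residuals of the running prediction."""
    base = sum(y) / len(y)
    prediction = [base] * len(y)
    stumps = []
    for _ in range(n_trees):
        residual = [yi - pi for yi, pi in zip(y, prediction)]
        stump = fit_stump(x, residual)
        stumps.append(stump)
        prediction = [pi + learn_rate * predict_stump(stump, xi)
                      for pi, xi in zip(prediction, x)]
    return base, learn_rate, stumps


def predict(model, xi):
    base, learn_rate, stumps = model
    return base + learn_rate * sum(predict_stump(s, xi) for s in stumps)
```

Capping the number of trees, as the study does at 100, bounds both the training time and the size of the parameter vector that must later be transmitted.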

Originally, no limit was placed on the training duration (time complexity) of the ensemble forest model; however, preliminary development of representative ensemble forest models indicated that the algorithms’ training duration was too long for near-real-time or real-time implementation when using all available data samples. Using the average sampled response as described previously, the training duration (time complexity) of the implemented ensemble forest model was approximately twenty seconds.

2.4. External Target B-Ensemble Model Generator

The computer designated as external target B was also configured to send and receive UDP/IP messages and relied upon the client-server protocol, where the RTS represented the client and the external target the server. Prior to launching the RTS, the UDP/IP protocol was instantiated on the external target using MATLAB’s Instrument Control Toolbox [20], after which the external target remains inactive. The external target becomes active upon receipt of a UDP/IP packet containing a sequence of unsigned integers which, when typecast, form four double-precision values: the current RTS clock, the designated data file name that will be used to develop the AI/ML algorithms, and both the commanded engine speed and load torque. Once the data have been received, the server enters an additional waiting period, in which the online data repository is monitored until the required data file exists within the data repository. After the data file is generated on the RTS, transferred, and uploaded to the online data repository, the data are loaded into memory. Some preprocessing routines are then executed, and when completed, each of the three shallow artificial neural networks (SANNs) is trained in parallel using MATLAB’s Parallel Computing Toolbox [17].
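A minimal sketch of how such a trigger packet could be decoded is given below, in Python rather than MATLAB. Several details are assumptions, not statements from the study: the payload is taken to be exactly 32 bytes of little-endian doubles, the field order follows the order listed in the text, the data file name is assumed to be carried as a numeric identifier, and the dictionary keys are hypothetical.

```python
import struct

PACKET_FORMAT = "<4d"                      # assumed: four little-endian doubles
PACKET_SIZE = struct.calcsize(PACKET_FORMAT)  # 32 bytes


def decode_request(payload: bytes) -> dict:
    """Typecast a received byte sequence into the four request fields."""
    if len(payload) != PACKET_SIZE:
        raise ValueError(
            f"expected {PACKET_SIZE} bytes, received {len(payload)}")
    clock, file_id, speed, torque = struct.unpack(PACKET_FORMAT, payload)
    return {
        "rts_clock_s": clock,
        "data_file_id": int(file_id),   # assumed numeric file identifier
        "engine_speed_rpm": speed,
        "load_torque_nm": torque,
    }


if __name__ == "__main__":
    # Build a hypothetical packet the way a sender might, then decode it.
    packet = struct.pack(PACKET_FORMAT, 12.5, 3.0, 750.0, 0.0)
    print(decode_request(packet))
```

Checking the payload length before unpacking mirrors the byte-count displays on the console, which serve the same purpose of catching truncated or malformed messages.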

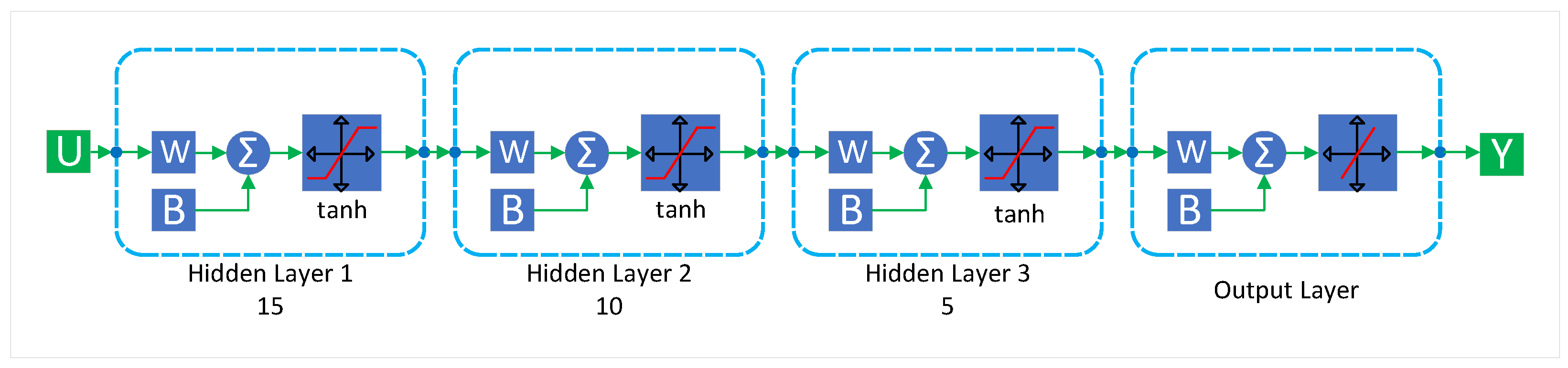

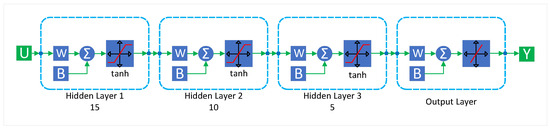

The selected architecture of the SANNs is provided in Figure 4. The three SANNs were developed to predict the per-cylinder generated torque, in-cylinder temperature, and in-cylinder pressure. These engine states were selected because they directly impact the performance and efficiency of the engine. If accurate predictions can be generated in near-real-time or real-time, such predictions could help in understanding the energy utilization of the engine or be utilized for real-time engine control and tuning. The selected input features utilized for the per-cylinder generated torque SANN include the crank angle and the cylinder’s swept position, area, and volume. The selected input features utilized for the in-cylinder temperature and pressure SANNs include all previously mentioned variables in addition to the forecasted per-cylinder generated torque.

Figure 4.

The selected architecture utilized for the shallow artificial neural networks (SANNs), where U is the input, Y is the desired output variable, W is the weight matrix, and B is the bias matrix.

Upon completion of the shallow artificial neural network training, the neural network weights and biases that define the network were extracted and concatenated within three separate double-precision vectors that were typecast into unsigned integers and transmitted back to the RTS using UDP/IP. The external target again becomes inactive until it receives another UDP/IP packet, at which point the process begins again. Each subsequent training of the AI/ML is initiated using the previously obtained architecture and respective neural network weights and biases, thus potentially benefiting from transfer learning principles to help reduce subsequent training durations. The processes described were contained within a custom class object, a MATLAB construct that utilized object-oriented programming techniques to implement UDP/IP communication and train the SANNs.

The shallow artificial neural network parameters and optimization algorithm were configured to utilize Bayesian Regularization Backpropagation (BRB). Each network was configured to have fifteen floating-point scalar values as the input features: (1) crank angle degrees, (2) cylinder sweep position, (3) cylinder sweep volume, (4) cylinder sweep area, (5) throttle or fuel index for a compression ignition engine, (6) engine speed, (7) the total number of cylinders, (8) the rated power produced per cylinder of the engine, (9) the rated power of the engine, (10) the rated engine speed, (11) the ambient temperature, (12) the ambient pressure, (13) the displacement of the engine, (14) the bore dimension, and (15) either the aggregate engine torque (per-cylinder generated torque SANN) or the per-cylinder generated torque (in-cylinder temperature and pressure SANNs). Each neural network output is a single floating-point scalar, namely the per-cylinder generated torque, the in-cylinder temperature, or the in-cylinder pressure. The network architecture included three hidden layers containing 15, 10, and 5 neurons, respectively, followed by an output layer, and tanh activation functions were utilized, as visualized in Figure 4. MATLAB’s dividerand function was utilized to divide targets into three sets using random indices for training, testing, and validation, using a standard 70%, 15%, and 15% split. Input and target features were normalized with respect to engine parameters, and the mean squared error (MSE) performance function was used to assess neural network performance.
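The layer sizes and data split described above can be made concrete with a small sketch. It is written in Python with only the standard library and is not the MATLAB network used in the study: the random weight initialization, the linear output layer, the rounding used in the split, and the function names are assumptions, and no training algorithm (such as Bayesian regularization) is implemented here.

```python
import math
import random


def build_sann(rng, sizes=(15, 15, 10, 5, 1)):
    """Create (W, b) pairs for 15 inputs, hidden layers 15-10-5, 1 output."""
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
                   for _ in range(n_out)]
        biases = [0.0] * n_out
        layers.append((weights, biases))
    return layers


def forward(layers, u):
    """Forward pass: tanh on hidden layers, linear output (assumed)."""
    activation = list(u)
    for index, (weights, biases) in enumerate(layers):
        z = [sum(w * a for w, a in zip(row, activation)) + b
             for row, b in zip(weights, biases)]
        is_output = index == len(layers) - 1
        activation = z if is_output else [math.tanh(v) for v in z]
    return activation[0]


def split_indices(n, rng, ratios=(0.70, 0.15, 0.15)):
    """Random 70/15/15 split of sample indices (train, validation, test)."""
    indices = list(range(n))
    rng.shuffle(indices)
    n_train = round(ratios[0] * n)
    n_val = round(ratios[1] * n)
    return (indices[:n_train],
            indices[n_train:n_train + n_val],
            indices[n_train + n_val:])


def mse(predictions, targets):
    """Mean squared error performance function."""
    return sum((p - t) ** 2
               for p, t in zip(predictions, targets)) / len(targets)
```

With these sizes the network holds 461 weights and biases in total, which gives a sense of how small the parameter vector sent back to the simulator can be.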

The training duration (time complexity) was limited to thirty seconds for each unique operational engine condition. This is the time allotted to train the neural network; training may be interrupted earlier if MATLAB’s internal network validation checks are reached, indicating that the measured performance is no longer improving significantly based on the computed gradient. The training duration (time complexity) limitation was implemented to develop “good enough” approximations of the actual engine states in near-real-time or real-time. A longer training duration would likely lead to more accurate predictions; however, in an ever-changing chaotic environment, the delay may invalidate the predictions.
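The combination of a fixed time budget and an early stop can be sketched as a generic training loop. The code below is an illustration in Python, not MATLAB's training routine: the patience-based stall check is a simplified stand-in for the internal validation checks mentioned above, and the function name, parameters, and the toy quadratic objective are all hypothetical.

```python
import time


def train_with_budget(step, budget_s=30.0, patience=6, min_delta=1e-9,
                      clock=time.monotonic):
    """Call step() until the time budget expires or the loss stalls.

    step() performs one training update and returns the current loss.
    Returns (best_loss, iterations, reason), where reason is either
    "budget" or "stalled".
    """
    start = clock()
    best = float("inf")
    stalled = 0
    iterations = 0
    while clock() - start < budget_s:
        loss = step()
        iterations += 1
        if loss < best - min_delta:
            best = loss
            stalled = 0
        else:
            stalled += 1
            if stalled >= patience:
                return best, iterations, "stalled"
    return best, iterations, "budget"


if __name__ == "__main__":
    # Toy objective: minimise (w - 3)^2 with plain gradient descent.
    state = {"w": 0.0}

    def gradient_step():
        gradient = 2.0 * (state["w"] - 3.0)
        state["w"] -= 0.1 * gradient
        return (state["w"] - 3.0) ** 2

    best, iterations, reason = train_with_budget(gradient_step, budget_s=0.5)
    print(reason, iterations, best)
```

The clock is passed in as a parameter so that the budget logic can be exercised deterministically without waiting for real time to elapse.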

2.5. Advancement and Improvement of Algorithms

In a previous research effort, a similar set of algorithms was utilized in combination with an RTS to train and deploy a set of AI/ML algorithms in near-real-time. In this research effort, the training and application of the AI/ML algorithms were re-investigated, first by identifying other potentially suitable machine learning candidate algorithms for continued research and application as shown in Appendix C. Because we lacked computational resources in the form of GPUs, and memory allocation for the RTS-supported software (OPAL-RT, RT LAB 2023.1) had to be completed in advance of running the RTS, we utilized machine learning algorithms that could be formalized into matrices, preallocated, and that could be trained and operated in real-time or near-real-time. Feed-forward artificial neural networks in addition to random forest ensemble models were selected to be deployed within this research effort. A comparison between the algorithms utilized in Jane et al. [16] and the algorithms utilized in this research effort is provided in Table 1.

Table 1.

Comparison between algorithms utilized in Jane et al. [16] and the algorithms utilized in this research effort.

3. Results

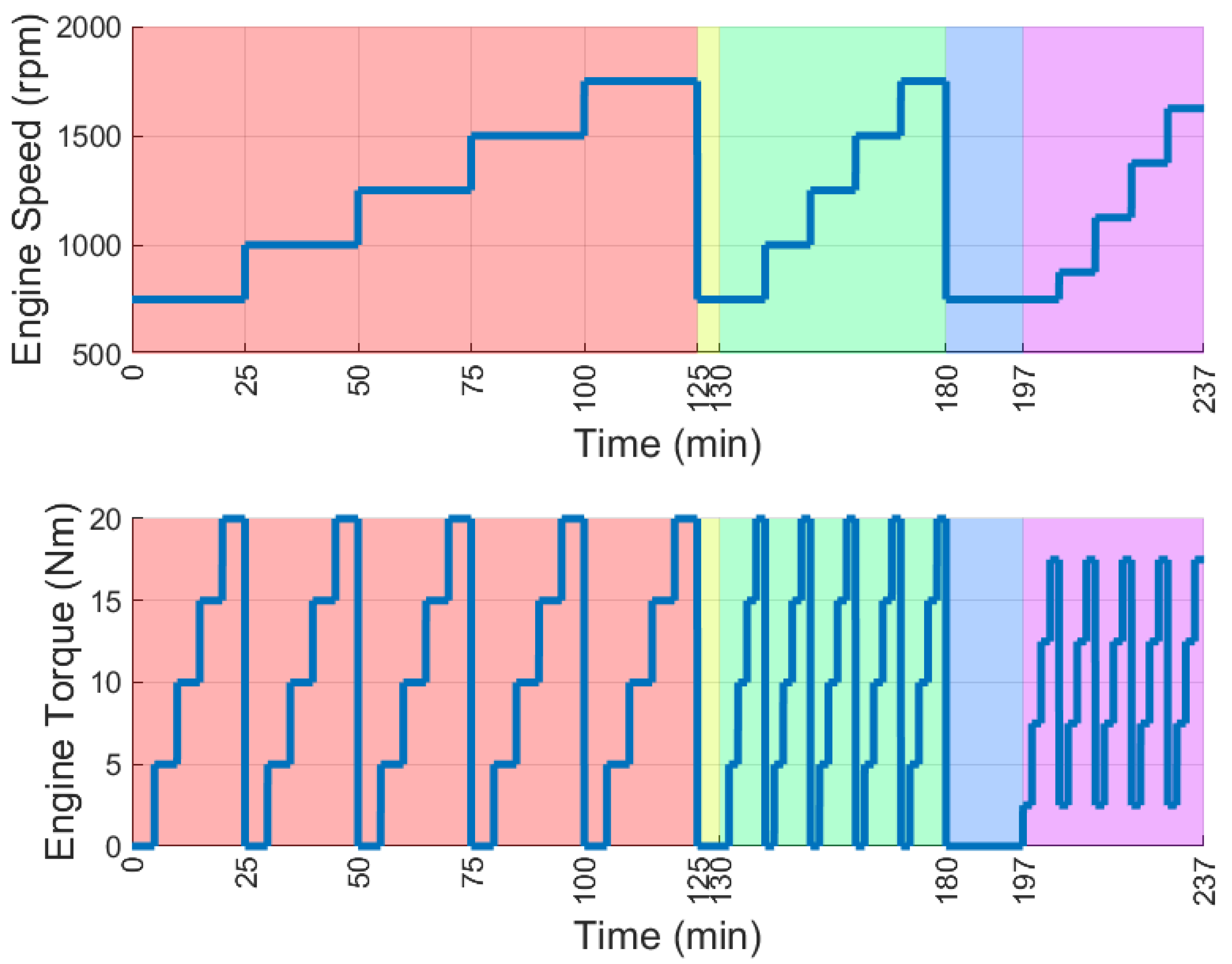

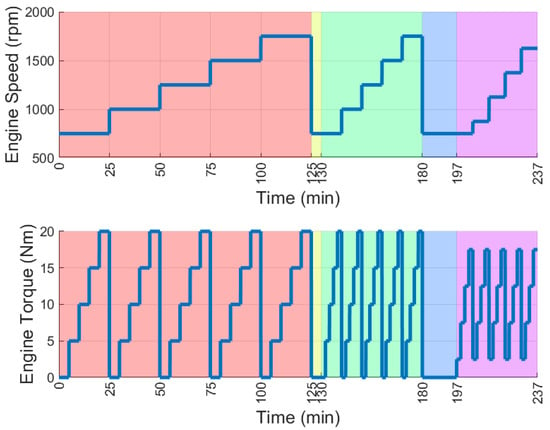

The results can be decomposed into five phases: the shallow artificial neural network training phase (phase I), an intermediate phase (phase II), the random forest artificial neural network data collection phase (phase III), the random forest ensemble model training phase (phase IV), and the neural network testing phase (phase V). During the different phases, the RTS, which was emulating the engine, was commanded to operate at different engine speeds and load torques, as seen in Figure 5. The red, yellow, green, blue, and purple-shaded regions represent the five phases. During the shallow artificial neural network training phase (phase I), each neural network was trained over a thirty-second period, consistent with the training duration limit described in Section 2.4, immediately following an increase or decrease in the engine operating conditions, i.e., load torque or engine speed. Next, during the intermediate phase (phase II), the RTS is commanded to operate at an engine speed of 750 rpm and a load torque of 0 Nm, which allows any unstabilized states to stabilize.

Figure 5.

The red, yellow, green, blue, and purple shaded regions represent the five phases: the shallow artificial neural network training phase (phase I), an intermediate phase (phase II), the shallow artificial neural network evaluation phase (phase III), the random forest ensemble model training phase (phase IV), and the neural network testing phase (phase V). During the different phases, the emulated engine on the RTS is commanded to operate at different engine speeds and load torques.

Immediately following this phase, the random forest data collection phase (phase III) begins. During this phase, the trained SANNs are again subjected to the engine speeds and load torques already emulated; because no further training occurs, the performance of the networks does not change. However, each network now produces a prediction, and these predictions are required for the subsequent phases. At the conclusion of phase III, the random forest ensemble model training phase (phase IV) begins. During this time, the random forest ensemble model was trained using parallelism on external target B, while the RTS was commanded to operate at an engine speed of 750 rpm and a load torque of 0 Nm, which again allowed any unsettled states to stabilize. After the training has concluded, all parameters that define the random forest ensemble AI/ML algorithms are transmitted to the RTS through UDP/IP.
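As a rough illustration of how trained parameters might be moved over UDP/IP, the sketch below splits a flat list of floating-point parameters into small datagrams, each carrying a chunk index so the receiver can reassemble them. The header layout, the chunk size, and the function names are hypothetical; the actual message format used between external target B and the RTS is not described in the text.

```python
import socket
import struct

HEADER = struct.Struct("<HH")  # assumed header: chunk index, values in chunk
MAX_VALUES = 64                # assumed number of float64 values per datagram

def pack_chunks(params):
    """Split a flat list of floats into self-describing datagram payloads."""
    chunks = []
    for index, start in enumerate(range(0, len(params), MAX_VALUES)):
        values = params[start:start + MAX_VALUES]
        body = struct.pack(f"<{len(values)}d", *values)
        chunks.append(HEADER.pack(index, len(values)) + body)
    return chunks

def unpack_chunk(payload):
    """Recover (chunk index, list of floats) from one datagram payload."""
    index, count = HEADER.unpack_from(payload, 0)
    values = struct.unpack_from(f"<{count}d", payload, HEADER.size)
    return index, list(values)

def send_params(params, host, port):
    """Send every chunk as its own UDP datagram to a hypothetical receiver."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        for payload in pack_chunks(params):
            sock.sendto(payload, (host, port))
```

Because UDP does not guarantee delivery or ordering, a receiver built along these lines would need to use the chunk index to detect missing or reordered datagrams before accepting a new parameter set.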

With the conclusion of phase IV, the testing phase (phase V) begins. During this time the SANNs and the random forest AI/ML will be subjected to dissimilar engine speeds and load torques that were not explicitly observed but rather implicitly observed throughout the previous training phases. The remainder of the results section is separated into three subsections illustrating the response of the SANN trained and evaluated during phase I (Section 3.1), the response of the offline random forest ensemble AI/ML algorithms immediately following the conclusion of phase IV (Section 3.2), and the response of both the SANN and the random forest ensemble AI/ML algorithms subjected to the dissimilar engine operating conditions of phase V (Section 3.3).

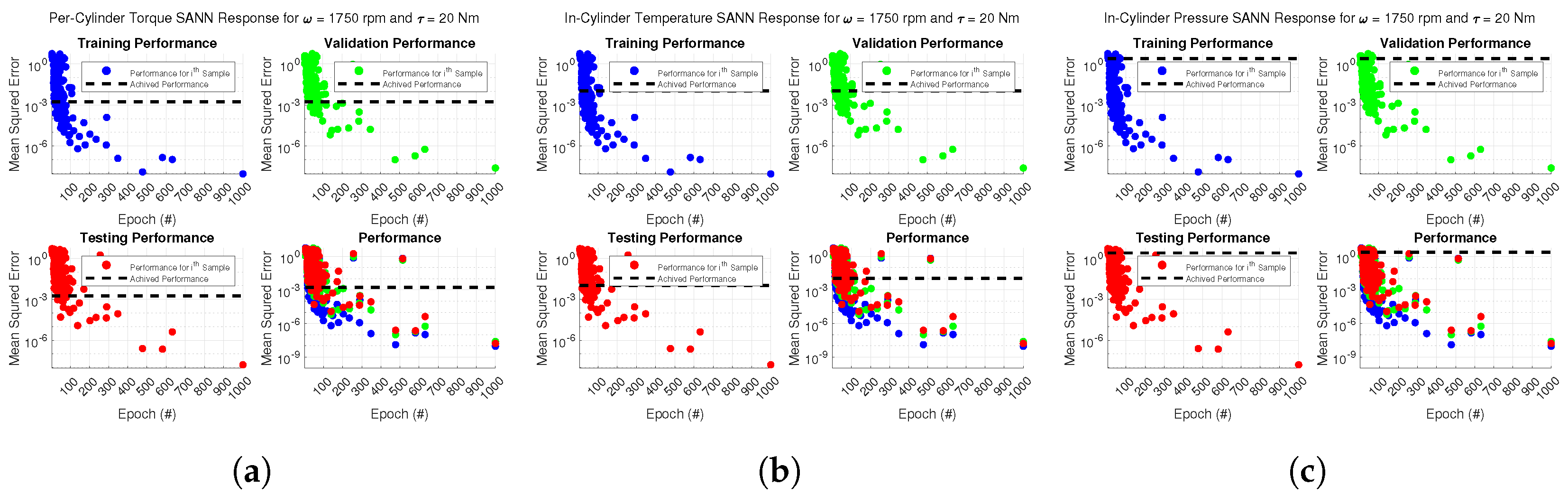

3.1. Shallow Artificial Neural Network Training Phase

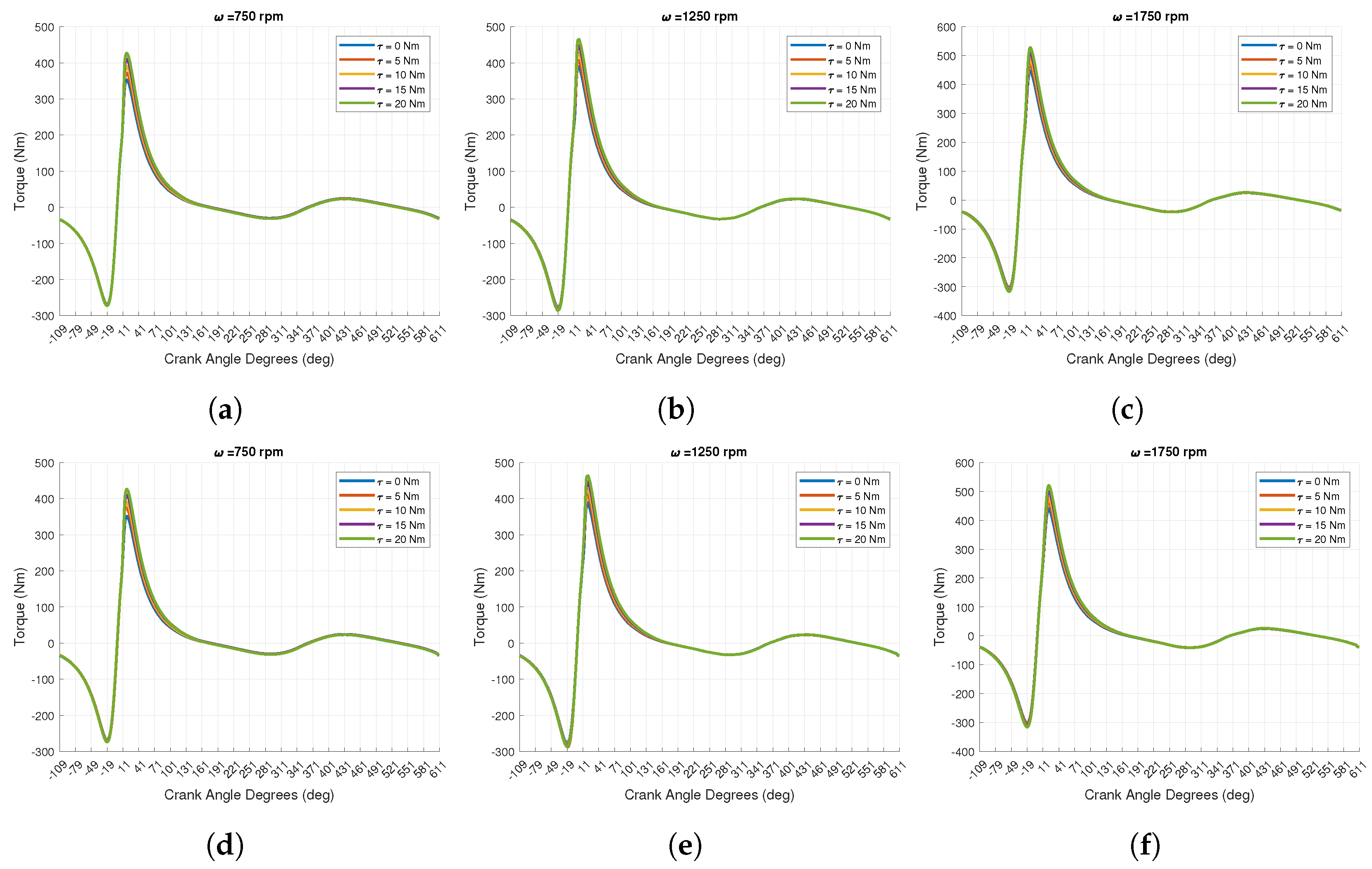

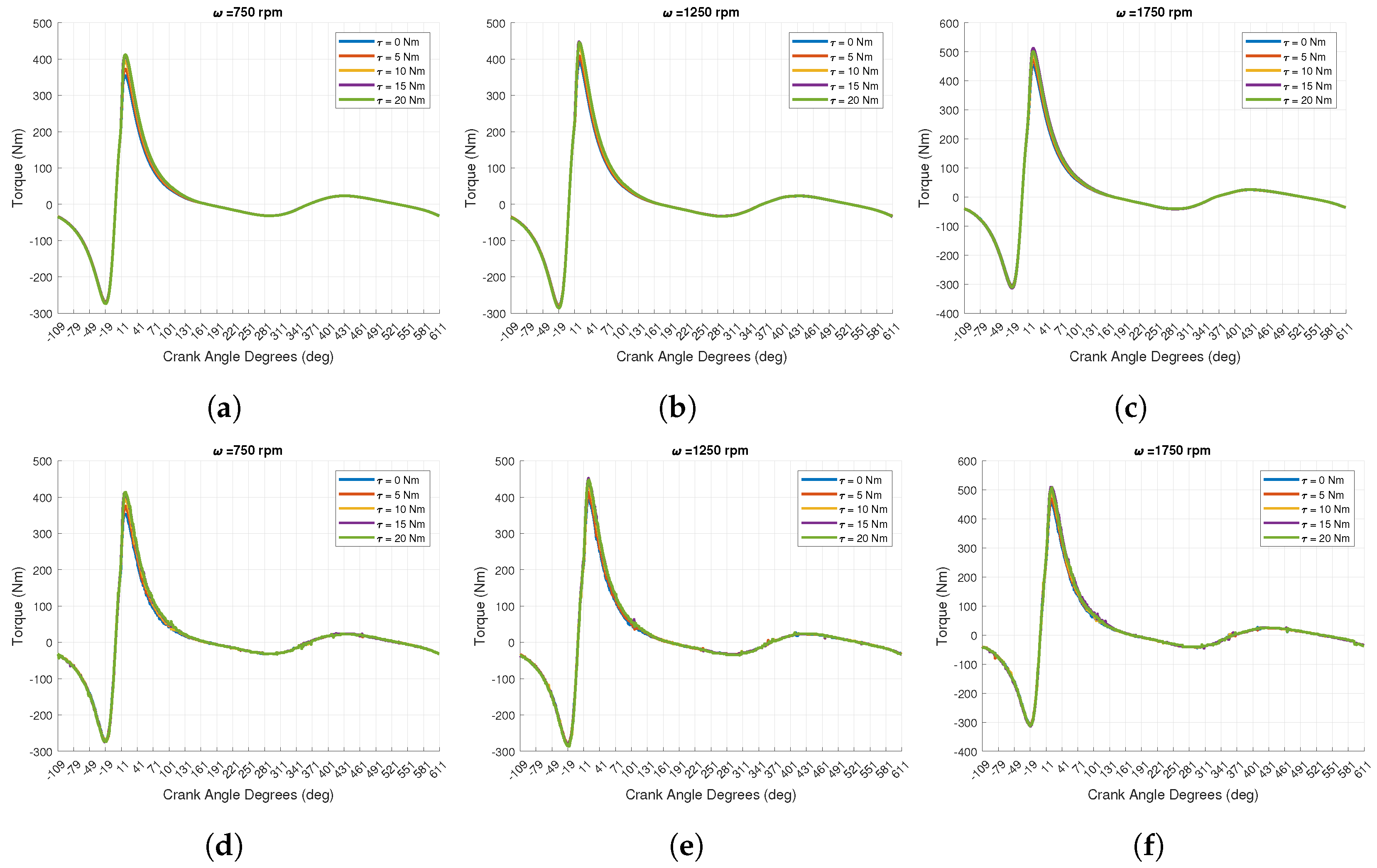

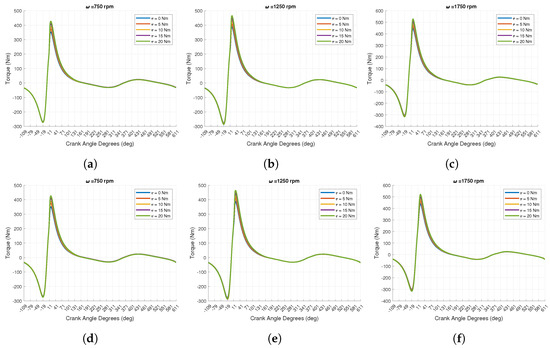

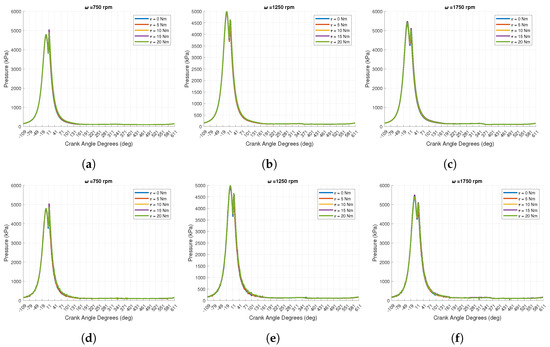

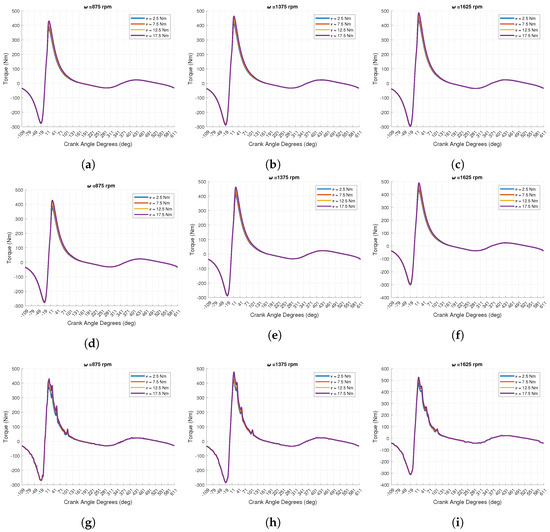

The results collected during the shallow artificial neural network training phase (phase I) are provided in Figure 6 and Table 2 for the per-cylinder generated torque, Figure 7 and Table 3 for the in-cylinder temperature, and Figure 8 and Table 4 for the in-cylinder pressure.

Figure 6.

A comparison between the expected (look-up table) per-cylinder generated torque (a–c) and the forecasted SANN per-cylinder generated torque (d–f) for engine speeds of 750, 1250, and 1750 rpm and load torques of 0, 5, 10, 15, and 20 Nm.

Table 2.

An evaluation of the SANN’s performance function (mean squared error (MSE)) to predict the per-cylinder generated torque.

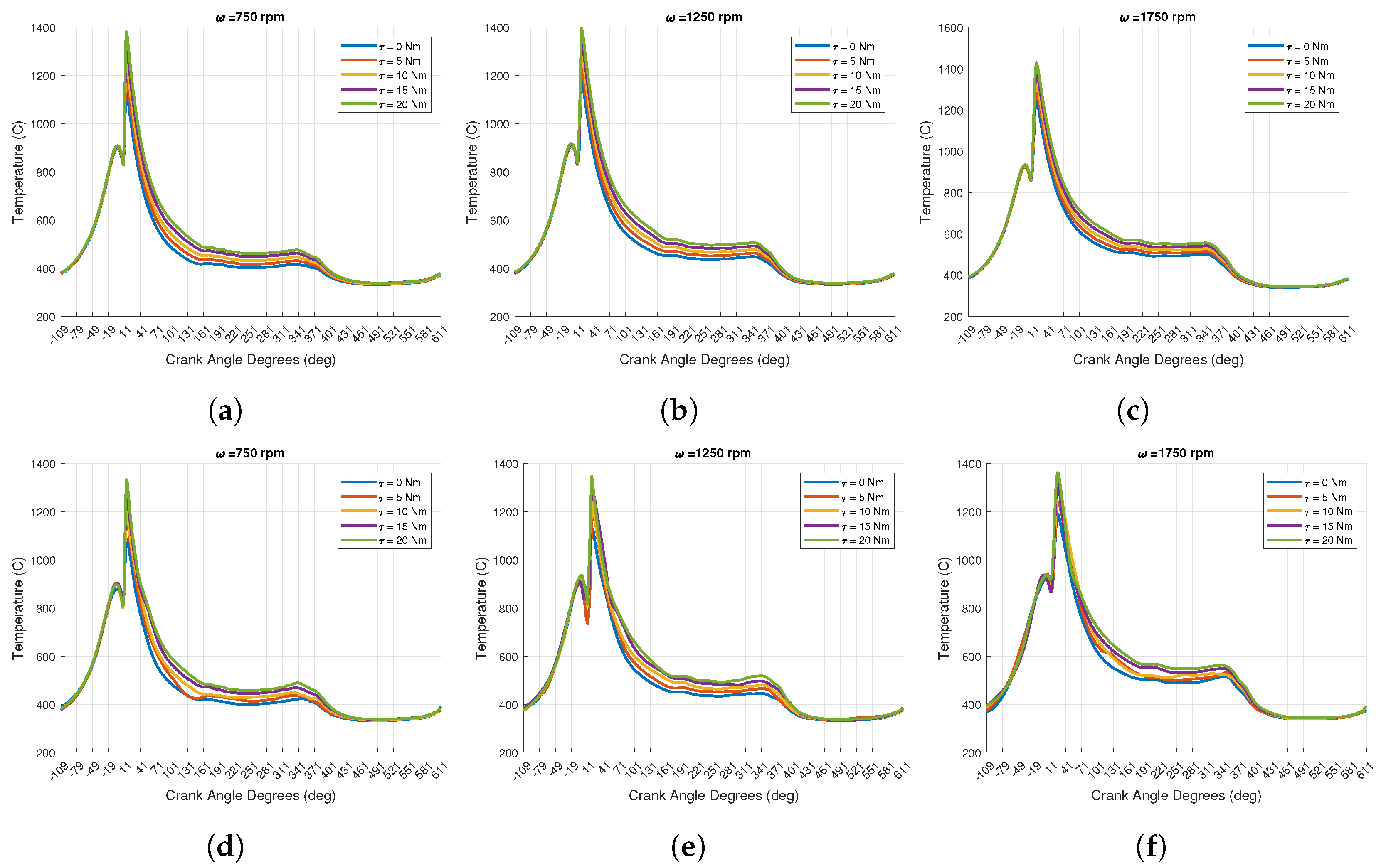

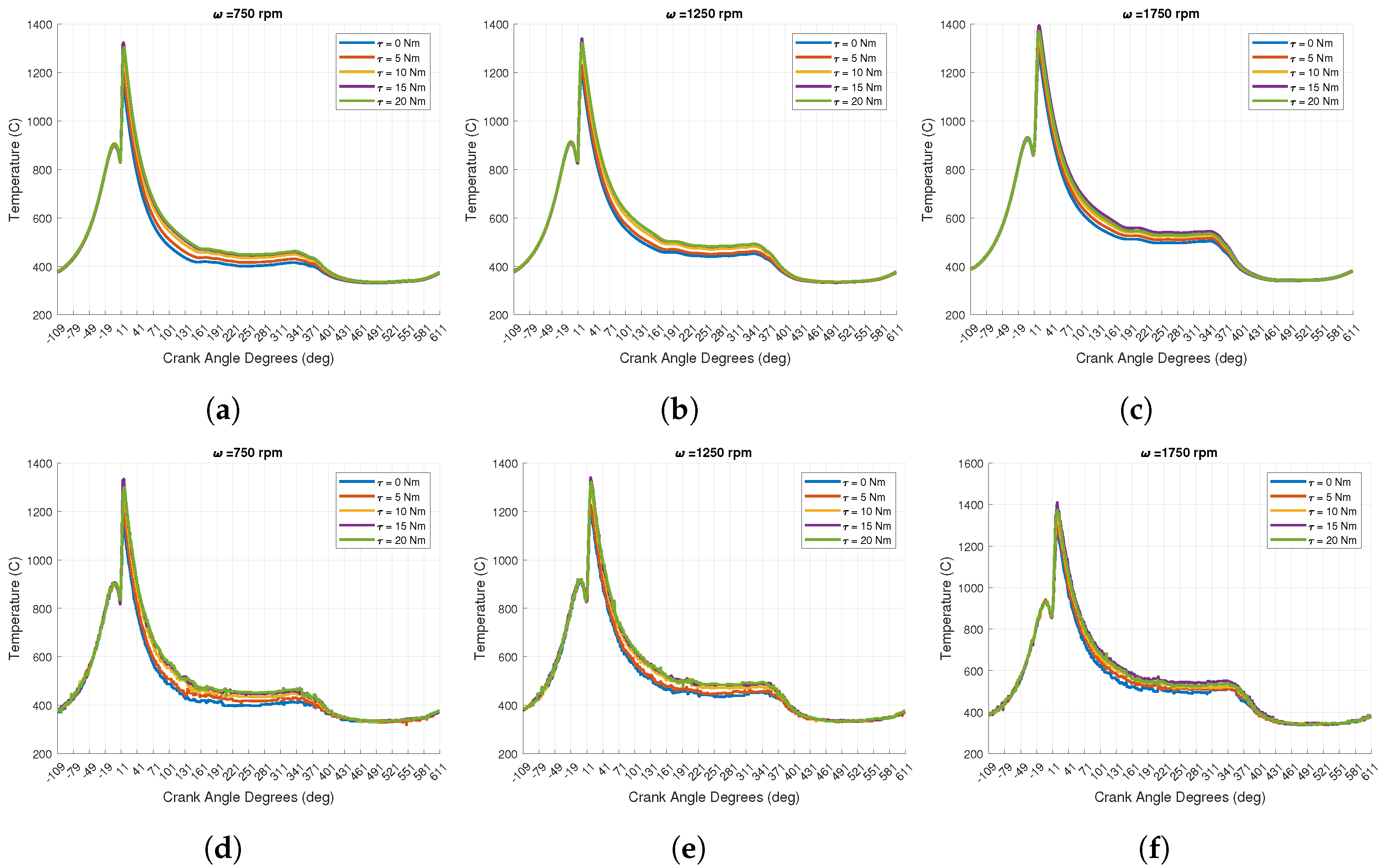

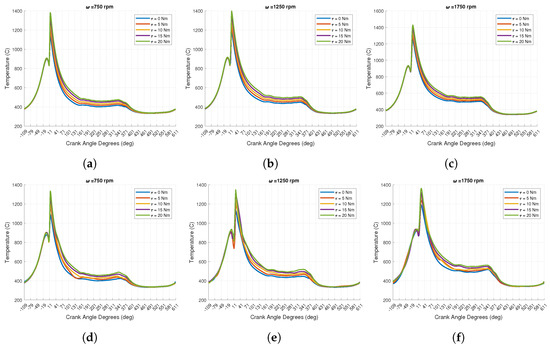

Figure 7.

A comparison between the expected (look-up table) in-cylinder temperature (a–c) and the forecasted SANN in-cylinder temperature (d–f) for engine speeds of 750, 1250, and 1750 rpm and load torques of 0, 5, 10, 15, and 20 Nm.

Table 3.

An evaluation of the SANN’s performance function (mean squared error (MSE)) to predict the in-cylinder temperature.

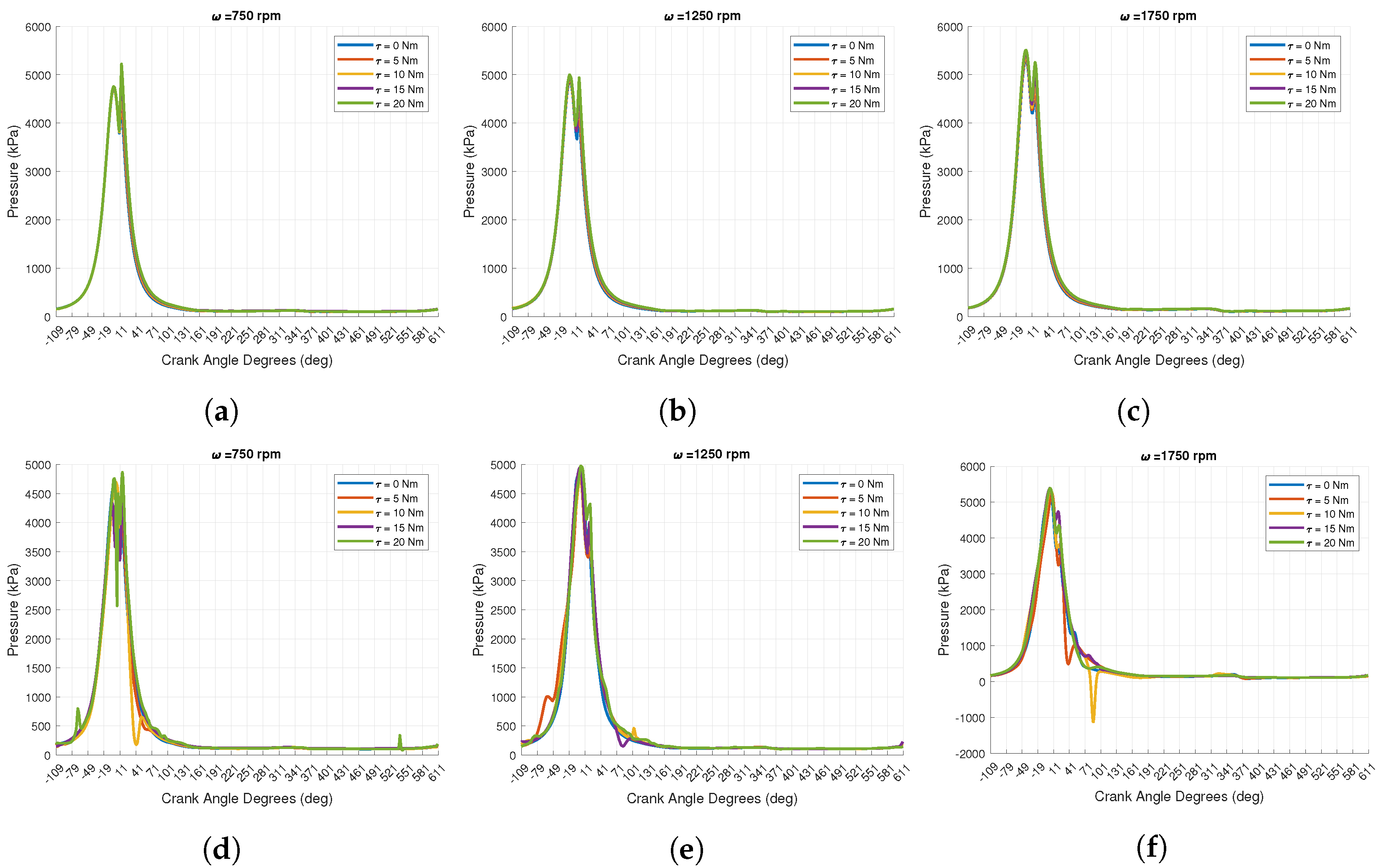

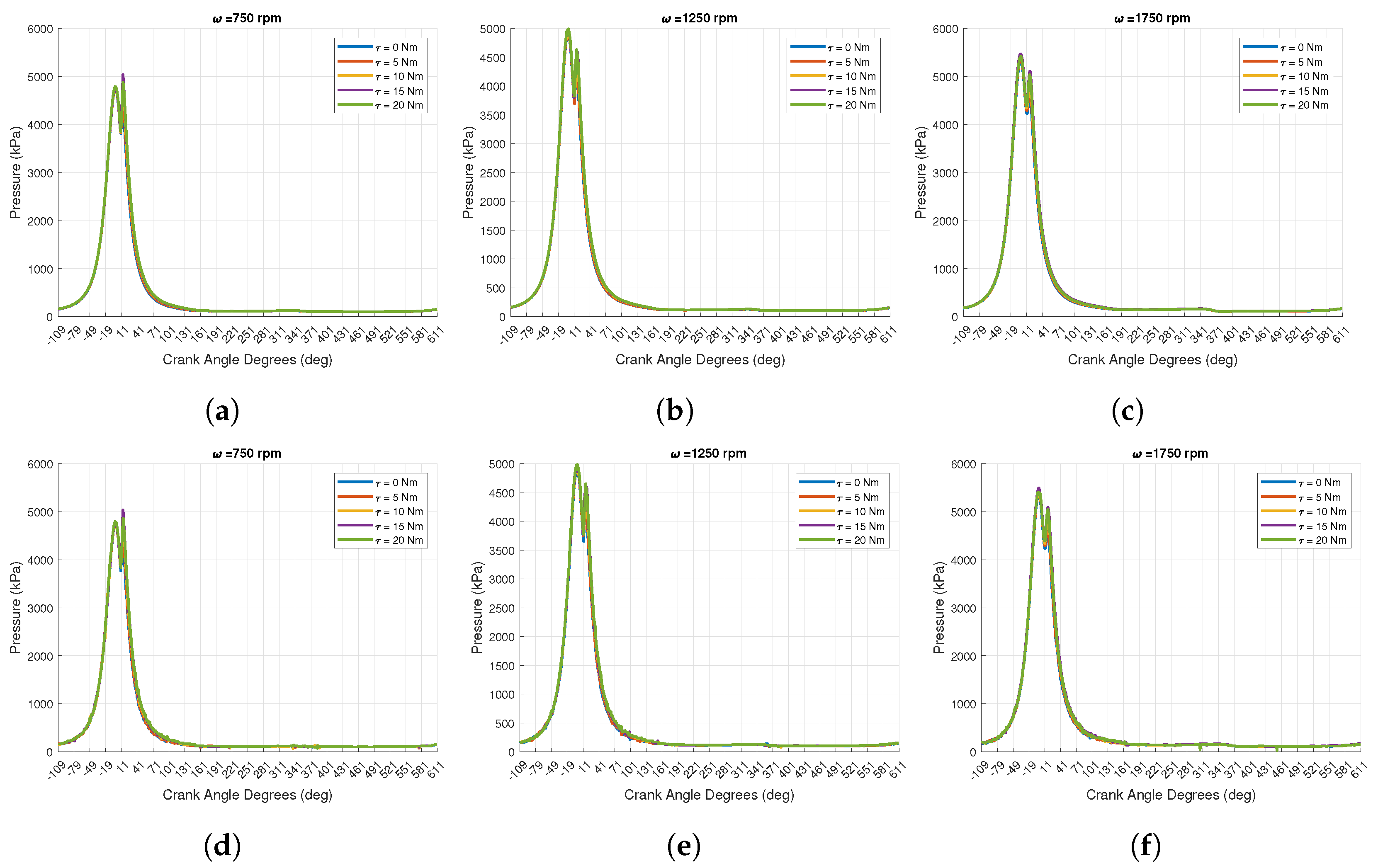

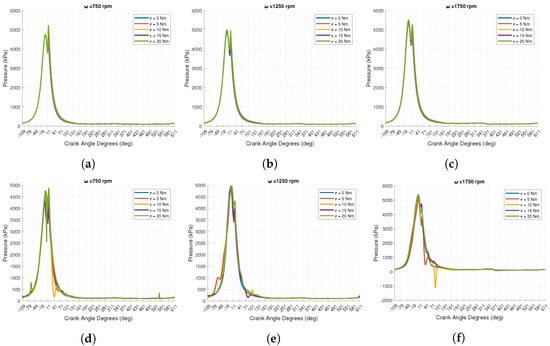

Figure 8.

A comparison between the expected (look-up table) in-cylinder pressure (a–c) and the forecasted SANN in-cylinder pressure (d–f) for engine speeds of 750, 1250, and 1750 rpm and load torques of 0, 5, 10, 15, and 20 Nm.

Table 4.

An evaluation of the SANN’s performance function (mean squared error (MSE)) to predict the in-cylinder pressure.

In total, seventy-five shallow artificial neural networks were trained to predict per-cylinder generated torque, in-cylinder temperature, and in-cylinder pressure at five engine speeds (including 750, 1250, and 1750 rpm) and five load torques (0, 5, 10, 15, and 20 Nm). At every simulation step, twenty-five separate SANN predictions are generated for each quantity and passed to a two-dimensional linear interpolation schema. For engine conditions that were explicitly used to train the SANNs, the interpolation schema returns the prediction from a single neural network. Conversely, for engine conditions that were only implicitly defined within the operational space, the interpolation schema returns a prediction that is a linear combination of the nearest-neighbor predictors.
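The interpolation step described above can be sketched as a bilinear blend over the grid of training conditions. In the example, `preds[i][j]` stands for the output of the SANN trained at `speeds[i]` and `loads[j]`; the evenly spaced speed grid is an assumed placeholder (only some of the training speeds are stated in the text), and the function names are hypothetical.

```python
from bisect import bisect_right

def bracket(grid, value):
    """Return (lower index, fraction) locating value on a sorted grid.

    Values outside the grid are clamped to the nearest edge.
    """
    if value <= grid[0]:
        return 0, 0.0
    if value >= grid[-1]:
        return len(grid) - 2, 1.0
    i = bisect_right(grid, value) - 1
    return i, (value - grid[i]) / (grid[i + 1] - grid[i])

def interpolate(speeds, loads, preds, speed, load):
    """Bilinearly blend the four SANN predictions surrounding (speed, load)."""
    i, u = bracket(speeds, speed)
    j, v = bracket(loads, load)
    return ((1 - u) * (1 - v) * preds[i][j]
            + u * (1 - v) * preds[i + 1][j]
            + (1 - u) * v * preds[i][j + 1]
            + u * v * preds[i + 1][j + 1])

speeds = [750.0, 1000.0, 1250.0, 1500.0, 1750.0]  # rpm, assumed grid
loads = [0.0, 5.0, 10.0, 15.0, 20.0]              # Nm
```

At a grid point, three of the four weights vanish and the result is the prediction of a single network; between grid points, the result is a weighted combination of the four nearest networks.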

The analysis of Figure 6 and Table 2 reveals that the shallow artificial neural networks are capable of producing accurate and characteristic per-cylinder generated engine torque estimates utilizing only crank angle and the cylinder’s swept position, area, and volume, with an average performance evaluation (MSE) of 1.64 (Nm)². This is an encouraging result, as the selected input features are not directly linked to a specific engine operating condition but only to a select set of engine parameters (crank radius, bore, number of engine cylinders, etc.). While encouraging, it is important to state that the results presented within this section were obtained by applying each trained neural network to the specific condition for which it was generated.
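Because the performance function is a mean of squared errors, its unit is the square of the predicted quantity's unit, which is why the torque result is reported in squared newton-metres. As a point of reference, an MSE of 1.64 (Nm)² corresponds to a root-mean-square error of roughly 1.28 Nm. A minimal sketch of the metric, with hypothetical sample values:

```python
import math

def mean_squared_error(targets, predictions):
    """Mean of squared differences; the unit is the target unit squared."""
    if len(targets) != len(predictions):
        raise ValueError("targets and predictions must have equal length")
    return sum((t - p) ** 2 for t, p in zip(targets, predictions)) / len(targets)

# Hypothetical torque samples in Nm, for illustration only.
expected = [0.0, 4.0, 9.0, 3.0]
forecast = [1.0, 4.0, 7.0, 3.0]
mse = mean_squared_error(expected, forecast)  # in (Nm)^2
rmse = math.sqrt(mse)                         # back in Nm
```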

The analysis of Figure 7 and Table 3 reveals that, on average, the shallow artificial neural networks are capable of yielding characteristic and semi-accurate predictions. Unlike the previous neural network variant, the SANN that predicts in-cylinder temperature utilizes crank angle, the cylinder’s swept position, area, volume, and the per-cylinder generated torque produced by the per-cylinder generated torque SANN. Consequently, forecasting error in the per-cylinder generated torque SANN will cascade into the in-cylinder temperature SANN predictions. For the specific engine conditions on which the SANNs were trained, the average performance evaluation of the predictors is 584.39 K². A visual analysis of the Figure 7 predictions indicates that a few of the temperature neural networks deviate from the target between crank angles of 71° and 271°. Additionally, some of the neural networks overshoot the peak temperature. It is believed that more accurate predictions could be obtained by altering the SANN architecture. A decision was made earlier within this research effort to reduce the computational complexity of the neural networks so that less data had to be transmitted between external target A and the RTS; this was primarily done to simplify the model and avoid compatibility-related issues with the RTS software.

The analysis of Figure 8 and Table 4 reveals that, on average, the shallow artificial neural networks are capable of yielding predictions that approximate the in-cylinder pressure trace; however, the neural networks exhibit considerably more error between a crank angle of ° and 131°. Within this range, the piston undergoes both the compression and expansion strokes. This range is difficult to predict accurately, as the peak in-cylinder pressure is a complex phenomenon impacted by the engine operating conditions, input and fuel temperature, input pressure, relative humidity, injection timing, the amount of fuel injected, and a host of other engine operating parameters. That being said, the SANNs produce predictions with an average performance evaluation of 15,429.31 kPa². We believe this network can be improved by utilizing more neurons within each hidden layer and by including a mathematical expression to estimate the in-cylinder pressure trace. In Jane et al. [16], we found that per-cylinder torque can be computed directly from the in-cylinder pressure using an equation. The equation, however, ultimately required both a scaling and a bias correction. With this amendment, each individual per-cylinder torque trace could be computed and aggregated to form the resulting engine torque trace. This trace was nearly identical to the aggregate engine torque trace contained within the original pseudo engine dynamometer data.
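The equation from Jane et al. [16] is not reproduced here, so the sketch below uses the textbook slider-crank relation as a stand-in: the gas force on the piston is multiplied by the rate of change of piston displacement with crank angle, and the result is then passed through a linear scaling and bias correction. Inertia and friction are neglected, and the geometry values and correction coefficients are hypothetical.

```python
import math

def torque_from_pressure(p_cyl, p_crankcase, bore, crank_radius, rod_length, theta):
    """Ideal single-cylinder torque in N*m from gas pressure alone.

    Pressures in Pa, lengths in m, theta in rad measured from top dead center.
    """
    area = math.pi * bore ** 2 / 4.0
    lam = crank_radius / rod_length
    # Derivative of piston displacement with respect to crank angle.
    ds_dtheta = crank_radius * math.sin(theta) * (
        1.0 + lam * math.cos(theta) / math.sqrt(1.0 - (lam * math.sin(theta)) ** 2)
    )
    return (p_cyl - p_crankcase) * area * ds_dtheta

def corrected_torque(raw_torque, scale, bias):
    """Apply the scaling and bias correction (coefficients are assumed)."""
    return scale * raw_torque + bias

raw = torque_from_pressure(5.0e6, 1.0e5, bore=0.08, crank_radius=0.04,
                           rod_length=0.13, theta=math.radians(20.0))
estimate = corrected_torque(raw, scale=0.9, bias=-1.5)
```

Summing the corrected traces of all cylinders, each shifted by its firing offset, would give an aggregate engine torque trace of the kind described above.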

Additionally, one or two of the SANNs, for specific engine operating conditions, exhibit momentary and noticeable deviations in which the neural network has failed to converge sufficiently to predict the target given the selected input features. Again, we expect this is due to the complexity of the in-cylinder pressure dynamics: the selected input features do not fully determine the pressure at the respective crank angles, which is also governed by the injection parameters.

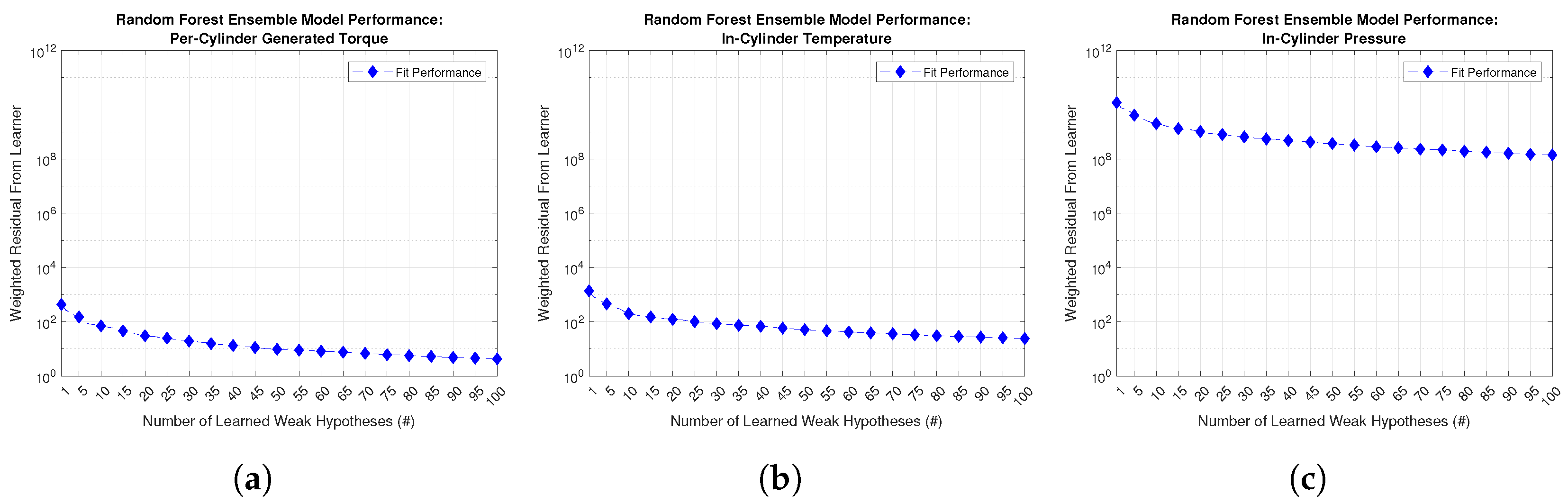

3.2. Random Forest Ensemble Model Training Phase

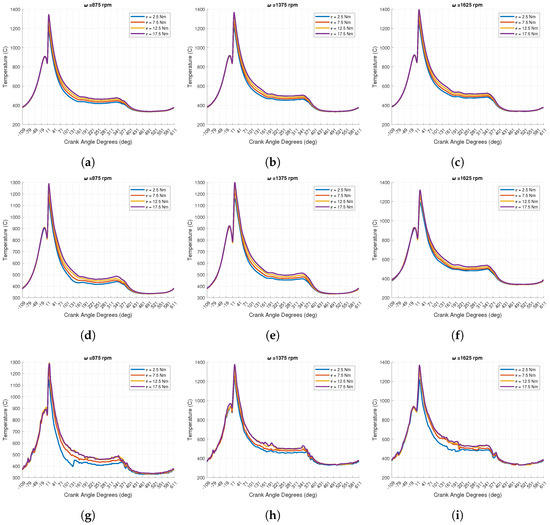

The results collected from the development and deployment of the random forest ensemble AI/ML algorithm training phase (phase IV) are provided in Figure 9 and Table 5 for the per-cylinder generated torque, Figure 10 and Table 6 for the in-cylinder temperature, and Figure 11 and Table 7 for the in-cylinder pressure.

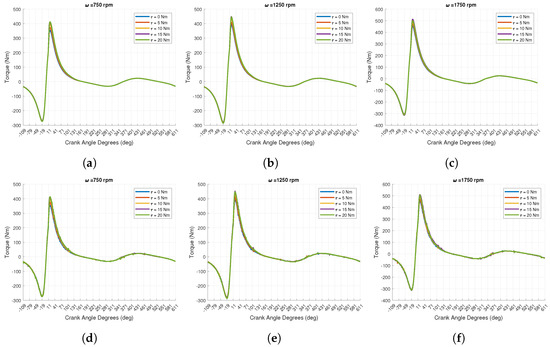

Figure 9.

A comparison between the expected (look-up table) per-cylinder generated torque (a–c) and the forecasted random forest ensemble model per-cylinder generated torque (d–f) for engine speeds of 750, 1250, and 1750 rpm and load torques of 0, 5, 10, 15, and 20 Nm.

Table 5.

An evaluation of the random forest AI/ML’s performance function (mean squared error (MSE)) to predict the per-cylinder generated torque.

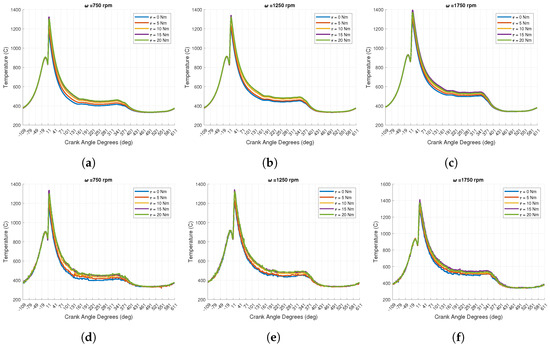

Figure 10.

A comparison between the expected (look-up table) in-cylinder temperature (a–c) and the forecasted random forest ensemble model in-cylinder temperature (d–f) for engine speeds of 750, 1250, and 1750 rpm and load torques of 0, 5, 10, 15, and 20 Nm.

Table 6.

An evaluation of the random forest AI/ML’s performance function (mean squared error (MSE)) to predict the in-cylinder temperature.

Figure 11.

A comparison between the expected (look-up table) in-cylinder pressure (a–c) and the forecasted random forest ensemble model in-cylinder pressure (d–f) for engine speeds of 750, 1250, and 1750 rpm and load torques of 0, 5, 10, 15, and 20 Nm.

Table 7.

An evaluation of the random forest AI/ML’s performance function (mean squared error (MSE)) to predict the in-cylinder pressure.

The analysis of Figure 9 and Table 5 reveals that the random forest ensemble AI/ML algorithms are capable of producing accurate and characteristic per-cylinder generated engine torque estimates. Compared to the SANNs, the random forest ensemble AI/ML algorithms utilized a different set of input features: all twenty-five per-cylinder generated torque SANN predictions, in addition to crank angle, the cylinder’s swept position, area, volume, throttle, engine speed, the reference engine speed, and load torque. The resulting average performance evaluation was 2.16 (Nm)². Recall that the performance of the SANN was 1.64 (Nm)²; thus, the random forest AI/ML algorithms produce predictions commensurate with the SANN, indicating that either algorithm may be sufficient to predict the desired engine state. By nature of the selected input features, this variant is more extensible than each individual SANN. As observed for many of the previous AI/ML algorithms deployed within this study, if the SANNs upstream of the random forest ensemble AI/ML algorithm have a significant error, then this error will cascade into the predictions; however, this error may be mitigated if the random forest is sufficiently trained. The random forest ensemble AI/ML algorithm predictions do, however, exhibit notable and frequent high-speed disturbances not observed within the SANNs. A random forest is an ensemble of binary decision trees. Each random forest ensemble AI/ML algorithm contains one hundred individual decision trees, the aggregation of which gives rise to the ensemble model. The frequent high-speed disturbance-like behavior is likely attributable to the presence of numerous individual decision trees. If the number of individual decision trees were reduced, we expect that the random forest ensemble AI/ML algorithm predictions would respond faster and potentially exhibit less frequent high-speed disturbance-like behavior.
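To make the structure of the ensemble concrete, the sketch below assembles the thirty-three input features listed above (twenty-five SANN predictions plus eight engine quantities) and averages the outputs of a set of binary decision trees. The nested-dictionary tree representation and all function names are assumptions made for illustration; they do not reflect how the trained models are stored on the RTS.

```python
def assemble_features(sann_predictions, crank_angle, position, area, volume,
                      throttle, speed, speed_ref, load_torque):
    """Concatenate the 25 SANN predictions with the 8 engine quantities."""
    if len(sann_predictions) != 25:
        raise ValueError("expected one prediction per SANN (25 in total)")
    return list(sann_predictions) + [crank_angle, position, area, volume,
                                     throttle, speed, speed_ref, load_torque]

def tree_predict(node, x):
    """Walk one binary decision tree (nested dicts) down to a leaf value."""
    while "value" not in node:
        branch = "left" if x[node["feature"]] <= node["threshold"] else "right"
        node = node[branch]
    return node["value"]

def forest_predict(trees, x):
    """Regression forest output: the mean of the individual tree outputs."""
    return sum(tree_predict(tree, x) for tree in trees) / len(trees)

# Two tiny hypothetical trees; feature index 25 is the crank angle.
trees = [
    {"feature": 25, "threshold": 5.0,
     "left": {"value": 1.0}, "right": {"value": 3.0}},
    {"value": 5.0},
]
x = assemble_features([0.0] * 25, 10.0, 0.0, 0.0, 0.0, 0.0, 750.0, 750.0, 0.0)
y = forest_predict(trees, x)
```

Each tree returns a constant value within each leaf, so the averaged output changes in small steps as the inputs vary; this piecewise-constant character may contribute to the disturbance-like behavior noted above.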

The analysis of Figure 10 and Table 6 reveals that the random forest ensemble AI/ML algorithm was capable of producing accurate and characteristic in-cylinder temperature estimates. Again, the random forest AI/ML models utilized a different set of input features: all twenty-five in-cylinder temperature SANN predictions, in addition to crank angle, the cylinder’s swept position, area, volume, throttle, engine speed, the reference engine speed, and load torque. The resulting average performance evaluation was 12.13 K². Recall that the performance of the SANN was 584.39 K². This is a significant improvement upon the SANN predictions, indicating that the random forest AI/ML is more accurate and potentially preferable, as it corrects predictive errors carried in the SANN predictions. This algorithm also exhibits frequent high-speed disturbance-like behavior, which is more apparent in magnitude; nevertheless, its predictions remain an improvement upon the SANN predictions. A large part of the improvement is attributed to a more accurate prediction of the peak in-cylinder temperature.

The analysis of Figure 11 and Table 7 reveals that the random forest ensemble AI/ML algorithms were capable of producing accurate and characteristic in-cylinder pressure estimates. Again, the random forest ensemble AI/ML algorithm utilized a different set of input features: all twenty-five in-cylinder pressure SANN predictions, in addition to crank angle, the cylinder’s swept position, area, volume, throttle, engine speed, the reference engine speed, and load torque. The resulting average performance evaluation was 73.25 kPa². Recall that the performance of the SANN was 15,429.31 kPa². This is a significant improvement upon the SANN, indicating that the random forest ensemble AI/ML algorithm is more accurate and potentially preferable, as it corrects predictive errors carried in the SANN predictions. As with the other random forest variants, frequent high-speed disturbance-like behavior is visible; however, it is less noticeable because the peak pressure is orders of magnitude larger than the ambient pressure of 50 kPa.

3.3. Real-Time Implementation of SANN and Random Forest AI/ML

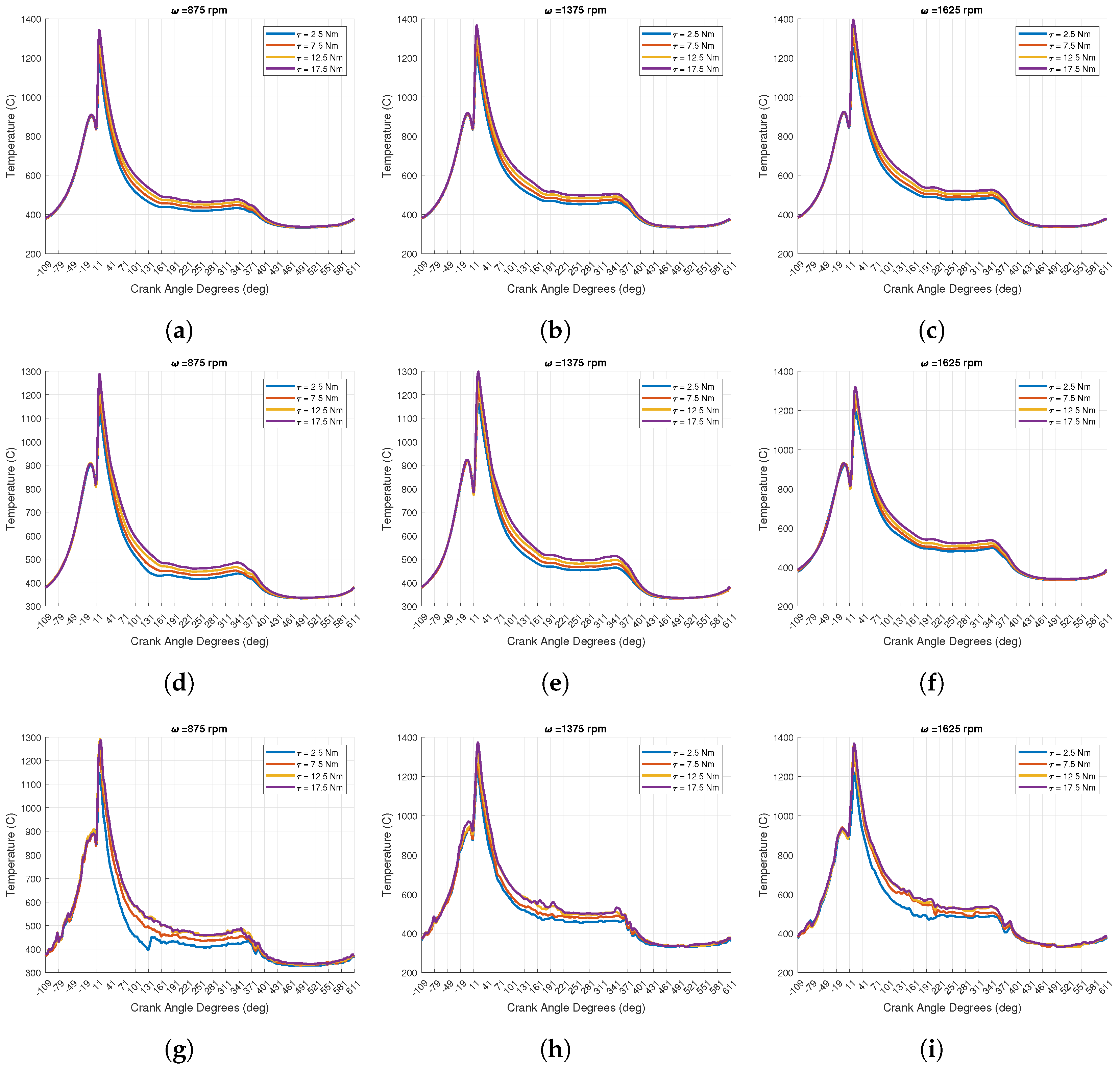

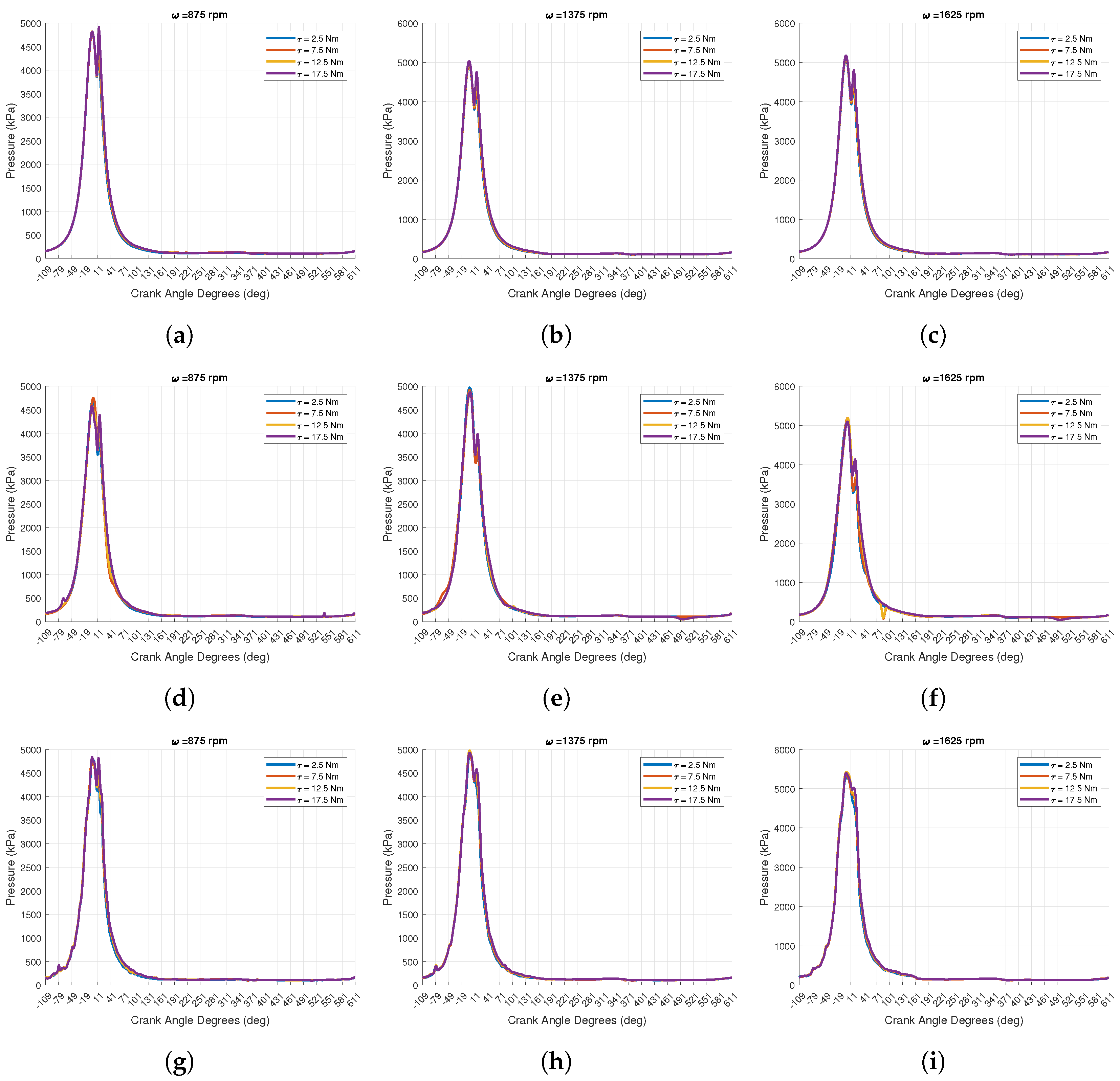

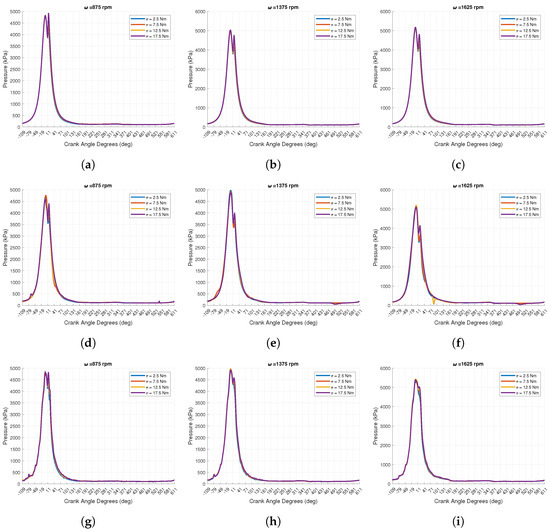

The results collected from the neural network testing phase (phase V) are provided in Figure 12 and Table 8 for the per-cylinder generated torque, Figure 13 and Table 9 for the in-cylinder temperature, and Figure 14 and Table 10 for the in-cylinder pressure.

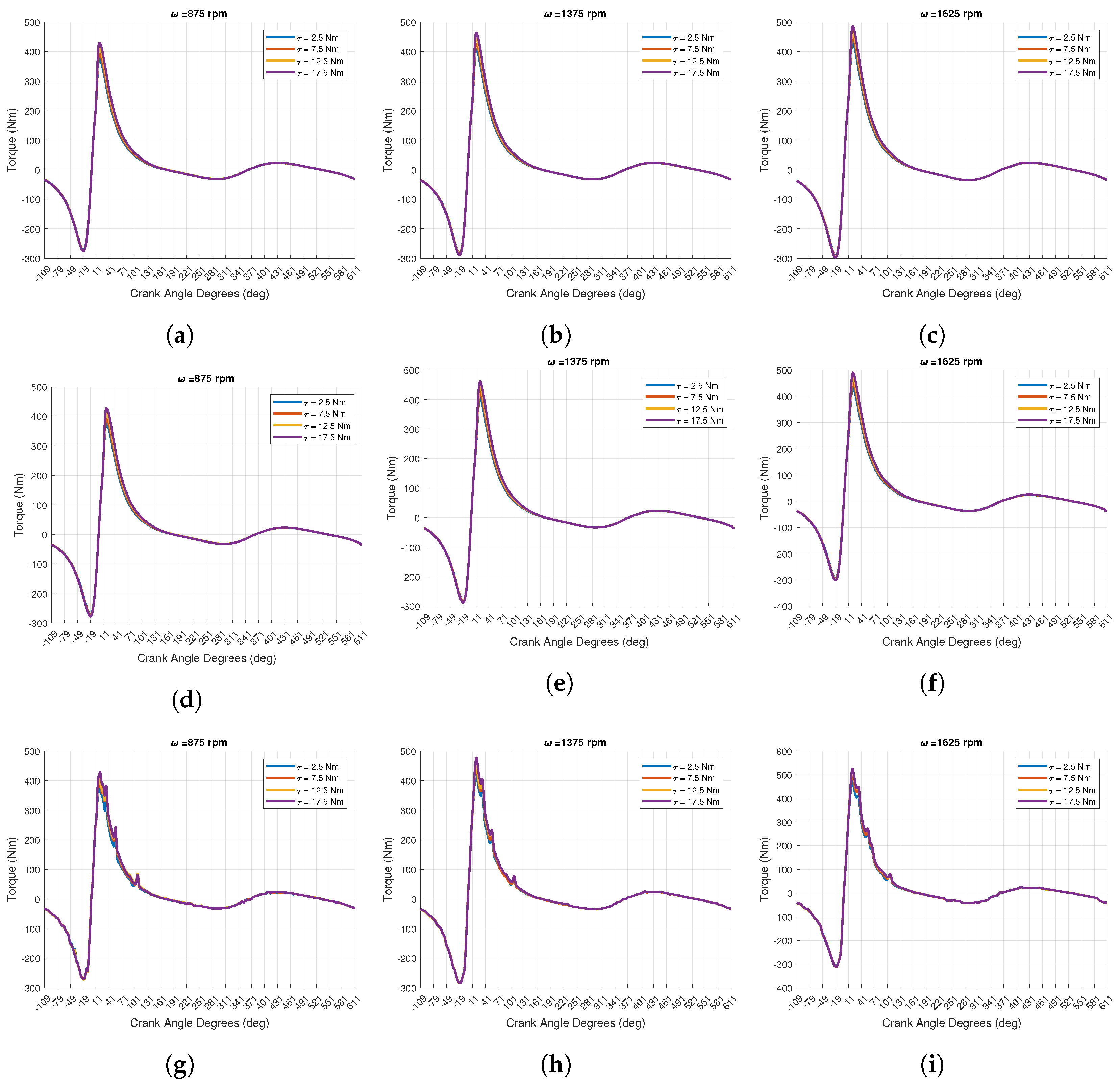

Figure 12.

A comparison between the expected (look-up table) per-cylinder generated torque (a–c), the forecasted SANN per-cylinder generated torque (d–f), and the forecasted random forest per-cylinder generated torque (g–i) for engine speeds of 875, 1375, and 1625 rpm and load torques of 2.5, 7.5, 12.5, and 17.5 Nm.

Table 8.

An evaluation of both the random forest and the SANN AI/ML algorithms, subjected to the dissimilar engine conditions, using the performance function (mean squared error (MSE)) to predict the per-cylinder generated torque.

Figure 13.

A comparison between the expected (look-up table) in-cylinder temperature (a–c), the forecasted SANN in-cylinder temperature (d–f), and the forecasted random forest in-cylinder temperature (g–i) for engine speeds of 875, 1375, and 1625 rpm and load torques of 2.5, 7.5, 12.5, and 17.5 Nm.

Table 9.

An evaluation of both the random forest and the SANN AI/ML algorithms, subjected to the dissimilar engine conditions, using the performance function (mean squared error (MSE)) to predict the in-cylinder temperature.

Figure 14.

A comparison between the expected (look-up table) in-cylinder pressure (a–c), the forecasted SANN in-cylinder pressure (d–f), and the forecasted random forest in-cylinder pressure (g–i) for engine speeds of 875, 1375, and 1625 rpm and load torques of 2.5, 7.5, 12.5, and 17.5 Nm.

Table 10.

An evaluation of both the random forest and the SANN AI/ML algorithms, subjected to the dissimilar engine conditions, using the performance function (mean squared error (MSE)) to predict the in-cylinder pressure.

The analysis of Figure 12 and Table 8 reveals that both the SANNs and the random forest AI/ML algorithms are capable of producing characteristic per-cylinder generated engine torque estimates for conditions not explicitly observed, but rather indirectly observed, with an average performance evaluation of 1.83 (Nm)² and 119.52 (Nm)², respectively. This indicates that the SANN outperforms the random forest AI/ML algorithms. As was observed during the training phase, all random forest AI/ML algorithm predictions exhibit frequent high-speed disturbance-like behavior. Upon further analysis of Figure 12g through Figure 12i, there are three notable areas in which the estimate unexpectedly deviates from the characteristic predictions, occurring around crank angles of 31°, 51°, and 101°. Visual analysis of the per-cylinder generated torque random forest ensemble AI/ML algorithm during phase IV indicates that there are several locations in which the predictions have small but notable deviations, three of which coincide with crank angles of 31°, 51°, and 101°. This would seem to indicate that the trained random forest contained multiple individual decision trees with conflicting predictions for these input features, and that the differences in the non-observed engine state features amplified the conflict, exacerbating the deviations.

The analysis of Figure 13 and Table 9 reveals that both the SANNs and the random forest ensemble AI/ML algorithms are capable of producing characteristic in-cylinder temperature estimates for conditions not explicitly observed, but rather indirectly observed, with an average performance evaluation of 480.14 K² and 301.42 K², respectively. By this metric, the random forest ensemble AI/ML algorithms outperform the SANNs. Further analysis reveals that the advantage arises because the random forest ensemble AI/ML algorithms predict the peak temperature more accurately than the SANNs, which tend to overpredict it. The SANN predictions are visually more continuous than the random forest ensemble AI/ML algorithm predictions, which continue to exhibit frequent high-speed disturbance-like behavior that is more noticeable for the dissimilar engine conditions. The random forest ensemble AI/ML algorithm predictions deviate notably from the actual in-cylinder temperature for crank angles of 71° through 401°; however, because of the closer approximation of the peak temperature, the random forest ensemble models have a lower MSE with respect to the actual engine state than the SANN predictions. While the average MSE suggests that the random forest predictions are more accurate than those of the SANNs, a visual inspection indicates that the SANNs yield more characteristic predictions than the random forest ensemble AI/ML algorithms. This is further supported by an inspection of the individual MSE values, where the MSE of the SANN predictions tends to be lower than that of the random forest ensemble AI/ML algorithms.

The analysis of Figure 14 and Table 10 reveals that both the SANNs and the random forest ensemble AI/ML algorithms are capable of producing characteristic in-cylinder pressure estimates for conditions not explicitly observed, with an average performance evaluation of 8018.45 kPa² and 8701.79 kPa², respectively. The SANN predictions are visually more continuous than the random forest ensemble model predictions, which again exhibit frequent high-speed disturbance-like behavior. However, the disturbance-like behavior is less noticeable because the peak pressure is approximately 5000 kPa, whereas the ambient pressure is closer to 50 kPa. Both AI/ML algorithms have difficulty predicting the in-cylinder pressure around peak pressure. The peak magnitude predicted by the random forest ensemble AI/ML algorithms appears to be more accurate than that of the SANN predictions; however, the random forest ensemble AI/ML algorithms underperform on the subsequent pressure peaks, which were observed to occur shortly after peak pressure. Neither algorithm predicts the in-cylinder pressure accurately and consistently.

4. Conclusions, Implications, Limitations, and Future Work

In this research effort, we advanced our ability to develop AI/ML in parallel to an RTS. Parallelism reduced the training duration of the shallow artificial neural networks and was used to generate multiple computationally simple SANNs capable of approximating per-cylinder generated torque, in-cylinder temperature, and in-cylinder pressure. The number of input features was also reduced to crank angle and the cylinder’s swept position, area, and volume for the per-cylinder generated torque SANNs. For in-cylinder temperature and pressure, the input features were crank angle, the cylinder’s swept position, area, volume, and the predicted per-cylinder generated torque. This enables the neural networks to predict the desired engine conditions without the need to observe and sense inlet and time-varying engine states directly. Because they lack input features that correspond to specific engine conditions, the SANNs are less extensible and thus require a two-dimensional interpolation routine to interpolate between dissimilar engine conditions.

Real-time training is difficult to achieve using the RTS’s built-in data management capabilities. One way this could be significantly improved would be to transmit all required sensor data from the RTS to a target that is real-time capable. Because internal combustion engines operate at tens to thousands of revolutions per minute, sufficient data to generate AI/ML could be sensed over a relatively short period of time. As an example, an engine operating at 750 rpm completes 12.5 revolutions every second, which equates to 6 or 12 complete engine cycles for a four-stroke or two-stroke engine, respectively. Thus, a real-time target could be triggered to record data for a short duration sufficient to capture any desired steady-state or transient response, and the recorded data could subsequently be used to generate AI/ML.
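The cycle count in the example above follows from the fact that each crankshaft revolution spans two piston strokes, so a four-stroke cycle takes two revolutions and a two-stroke cycle takes one. A small sketch of the arithmetic (the function name is illustrative):

```python
import math

def cycles_per_second(rpm, strokes_per_cycle):
    """Engine cycles completed per second for a 2- or 4-stroke engine."""
    revs_per_second = rpm / 60.0
    revs_per_cycle = strokes_per_cycle / 2.0  # two strokes per revolution
    return revs_per_second / revs_per_cycle

four_stroke = cycles_per_second(750, 4)  # 6.25 cycles per second
two_stroke = cycles_per_second(750, 2)   # 12.5 cycles per second
complete = (math.floor(four_stroke), math.floor(two_stroke))
```

At 750 rpm this gives 6.25 and 12.5 cycles per second, i.e., 6 and 12 complete cycles, so even a recording window of a few seconds would contain tens of engine cycles.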

A comparison of the predictive responses yielded by the SANNs and the random forest ensemble AI/ML algorithms indicated that, for the engine operating conditions on which the algorithms were trained (load torques of 0, 5, 10, 15, and 20 Nm at each of the five training engine speeds), the random forest AI/ML model outperformed the SANNs for in-cylinder temperature and pressure and was commensurate with them for per-cylinder generated torque. There are two notable caveats: (1) the random forest ensemble models are reliant on all SANN predictions; thus, if the SANNs were developed for the full operational range of the engine and combined with the random forest AI/ML algorithms, more accurate and characteristic predictions would be obtainable, and (2) this holds only for the load torques and engine speeds on which the SANNs and the random forest ensemble models were trained, which are the conditions expected to yield the most accurate results.

As was observed within the phase V results, the random forest ensemble AI/ML algorithms underperformed for the dissimilar engine conditions not explicitly contained within the simulated training conditions, whereas the SANN predictions outperformed those generated by the random forest ensemble AI/ML algorithm. It is believed that a combination of the SANNs and random forest ensemble AI/ML algorithms can lead to per-cylinder generated torque and in-cylinder temperature and pressure traces that are sufficiently accurate and characteristic to be utilized for internal engine analysis and control. This would, however, require that the in-cylinder temperature and pressure be measured on a production engine or obtained from a sufficiently detailed, computationally expensive non-linear dynamic model of an internal combustion engine. If the operational range of the engine were discretized more thoroughly, and the random forest ensemble AI/ML algorithms were made invariant to the engine conditions with the exception of the SANN predictions, as was achieved by the SANNs, then a two-dimensional interpolation schema could be used to interpolate between dissimilar engine conditions and lead to improved performance of the AI/ML.

Being able to predict in-cylinder conditions of the engine accurately and in a timely fashion could allow for more robust engine control and the development of energy awareness for the engine subject to its operational environment. As an example, if the conditions within the engine deteriorated or a component malfunctioned, causing a reduction in per-cylinder generated torque and thus in the in-cylinder pressure and temperature, then a comparison of the neural network prediction with the measured engine state could indicate a malfunctioning engine component or identify complex operational conditions that lead to inadequate combustion conditions.
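One simple way such a comparison might be realized is a residual check: flag a fault when the measured quantity departs from the soft-sensor prediction by more than a tolerance for several consecutive samples. The text does not specify a detection rule, so the threshold, the persistence count, and the function name below are purely hypothetical.

```python
def flag_anomaly(measured, predicted, threshold, min_consecutive):
    """Return True if |measured - predicted| stays above threshold
    for at least min_consecutive samples in a row."""
    run = 0
    for m, p in zip(measured, predicted):
        run = run + 1 if abs(m - p) > threshold else 0
        if run >= min_consecutive:
            return True
    return False

# Hypothetical per-cylinder torque samples in Nm.
predicted = [10.0] * 6
healthy = [10.2, 9.9, 10.1, 9.8, 10.0, 10.1]
degraded = [10.0, 10.0, 10.0, 4.0, 4.0, 4.0]
alarm_healthy = flag_anomaly(healthy, predicted, threshold=2.0, min_consecutive=3)
alarm_degraded = flag_anomaly(degraded, predicted, threshold=2.0, min_consecutive=3)
```

Requiring several consecutive exceedances is a design choice that keeps isolated spikes, such as the disturbance-like behavior of the random forest predictions, from raising false alarms.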

The results presented within this technical document illustrate how AI/ML can be utilized as soft sensors to begin to observe and develop energy awareness for internal combustion engines. Such capabilities can be used to improve the design and control of engines and further enable an understanding of critical internal engine conditions that directly impact the creation of acoustic, thermal, and kinetic energy via the combustion of chemical energy. They can also aid in the preventative maintenance of an engine. Military systems are unlikely to possess large, complete, and labeled datasets, limiting the ability to benefit from technological advancements, including AI/ML. There are two ways to permit the use of AI/ML for an internal combustion engine: (1) using high-powered computational fluid dynamics (CFD) simulation software (Realis Wave [21], Ansys [22], and GT-Power [23], to name a few), synthetic data for multiple representative engines may be developed offline and applied to the engine in near-real-time or real-time; alternatively, (2) AI/ML may be developed in near-real-time or real-time directly from a manufactured engine, either over the life cycle of the engine or during the initial development of a system, and adapted once deployed.

The proposed method has three primary limitations: (1) data ingestion and sensor fusion, (2) memory allocation and computational resources, and (3) adaptability and extensibility. Regarding data ingestion and sensor fusion, communication topologies and protocol utilization are not standardized across dissimilar vehicle manufacturers and OEMs for both civilian and military-type vehicles. This is especially true for different military vehicle classifications (tactical, combat, support, robotic). Ultimately, there is no guarantee that individual systems or platforms will provide the set of sensor data that AI/ML requires, which prevents a universal benefit across platforms. Regarding memory allocation and computational resources, not all vehicle types have sufficient memory or computational resources to exploit AI/ML. Larger systems have the space and energy capabilities to support these functionalities, while smaller platforms are constrained by both weight and power. Because some systems may have limited power resources, memory allocation becomes exceptionally important to ensure that the AI/ML algorithms can operate as intended. Regarding adaptability and extensibility, to run in real-time, the SANN architecture was fixed and could not be changed. The forest ensemble model architecture was not fixed; however, the cumulative memory required to implement the algorithm was fixed. Additionally, both algorithms’ input features were fixed; to be more extensible, the memory for the input features would need to be preallocated. Finally, for any particular AI/ML algorithm to be preallocated, its implementation should be configured to utilize matrix-supported operations, which would enable the algorithms to be adaptable and extensible.

Author Contributions

Conceptualization, R.J., S.R. and C.M.J.; Data curation, R.J. and S.R.; Formal analysis, R.J.; Funding acquisition, C.M.J.; Investigation, R.J. and S.R.; Methodology, R.J.; Project administration, C.M.J.; Resources, C.M.J.; Software, R.J.; Supervision, C.M.J.; Validation, R.J., S.R. and C.M.J.; Visualization, S.R. and C.M.J.; Writing—original draft, R.J., S.R. and C.M.J.; Writing—review and editing, S.R. and C.M.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to the nature of the application.

Acknowledgments

The authors of this technical article would like to acknowledge our external collaborators at the DEVCOM’s Ground Vehicle Systems Center (GVSC), Denise Rizzo and Matthew Castanier. We would also like to acknowledge our external collaborators at Clemson University, Benjamin Lawler and Dan Egan, for constructing the GT-Power models and simulating the various engine configurations forming the engine maps used in this research effort and those to come. Thanks to Constandinos Mitsingas of the DEVCOM Army Research Laboratory (ARL) in Aberdeen, MD for providing his technical expertise regarding various internal combustion engine modeling and behavior. We would also like to thank Brandon Hencey of the Air Force Research Laboratory (AFRL) in Dayton, OH for providing his technical expertise regarding AI/ML and general engineering practices which helped to develop predictive algorithms.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

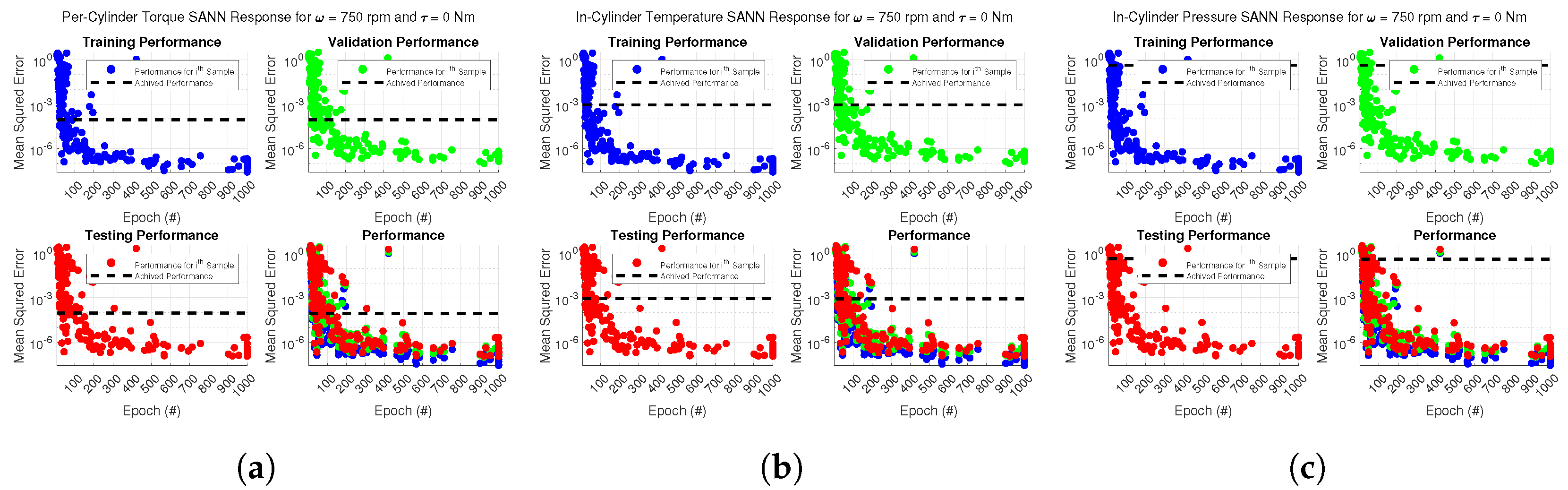

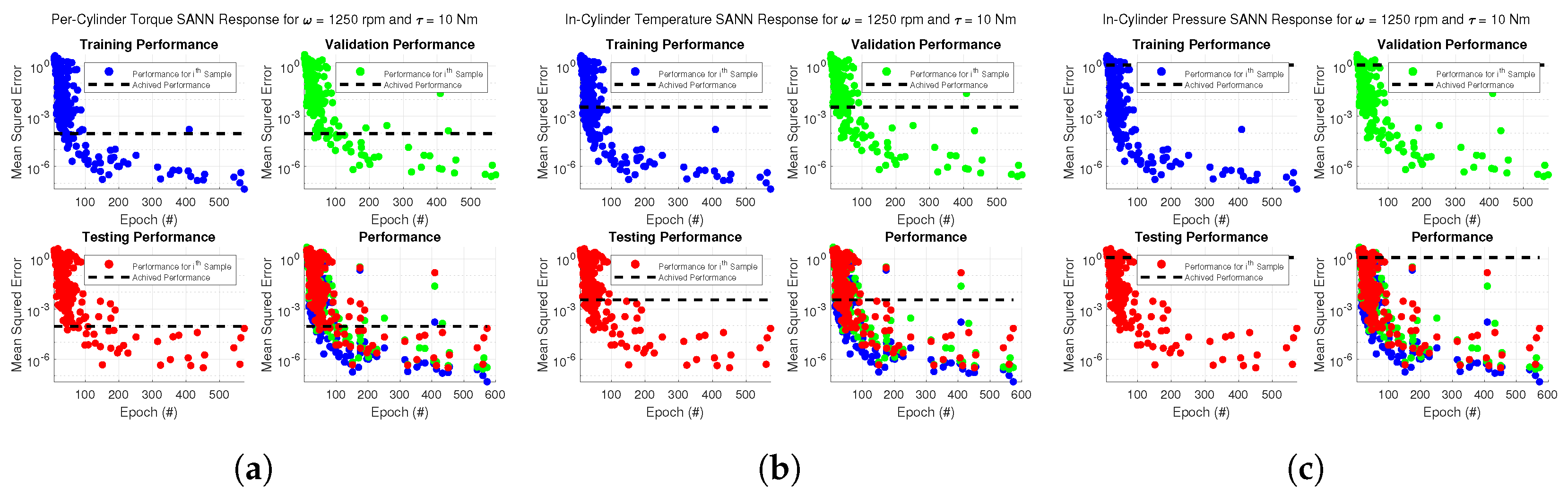

In the Shallow Artificial Neural Network (SANN) Training Phase, twenty-five separate neural networks were generated for each of the per-cylinder generated torque, in-cylinder temperature, and in-cylinder pressure. This resulted in seventy-five unique neural networks. Approximate training, testing, and validation loss/precision graphs were generated using two hundred repeated random training samples for a small subset of five engine speed and load torque combinations (Figures A1a–c through A5a–c).

Figure A1.

The approximate training, testing, and validation loss/precision for the shallow artificial neural networks subject to rpm and Nm for per-cylinder generated torque (a), for in-cylinder temperature (b), and for in-cylinder pressure (c).

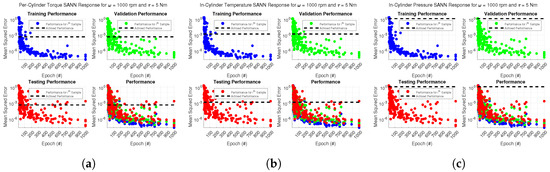

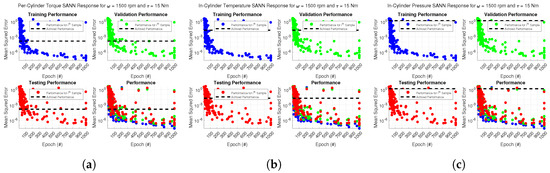

Figure A2.

The approximate training, testing, and validation loss/precision for the shallow artificial neural networks subject to rpm and Nm for per-cylinder generated torque (a), for in-cylinder temperature (b), and for in-cylinder pressure (c).

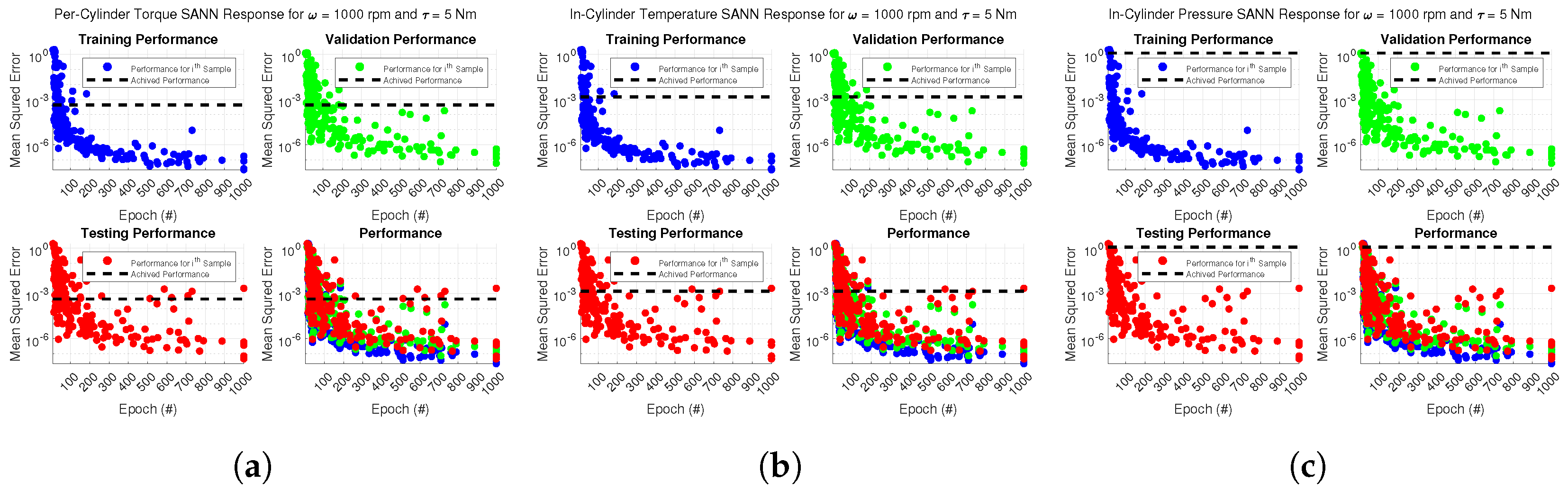

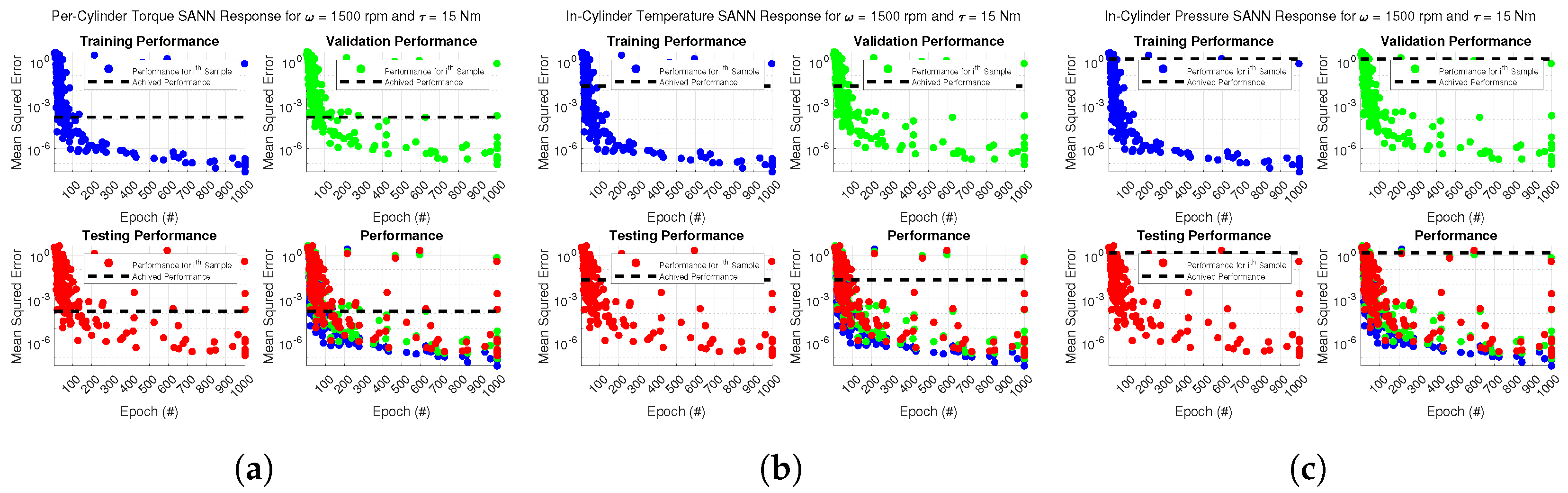

Figure A3.

The approximate training, testing, and validation loss/precision for the shallow artificial neural networks subject to rpm and Nm for per-cylinder generated torque (a), for in-cylinder temperature (b), and for in-cylinder pressure (c).

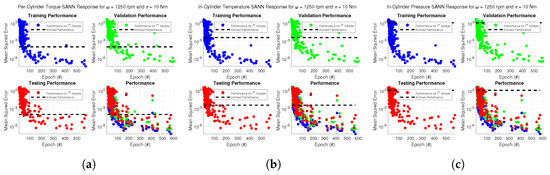

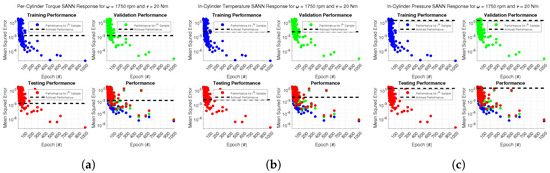

Figure A4.

The approximate training, testing, and validation loss/precision for the shallow artificial neural networks subject to rpm and Nm for per-cylinder generated torque (a), for in-cylinder temperature (b), and for in-cylinder pressure (c).

Figure A5.

The approximate training, testing, and validation loss/precision for the shallow artificial neural networks subject to rpm and Nm for per-cylinder generated torque (a), for in-cylinder temperature (b), and for in-cylinder pressure (c).

Appendix B

In the Random Forest Ensemble Model Training Phase, three separate models were generated, one each for the per-cylinder generated torque, in-cylinder temperature, and in-cylinder pressure. The testing and validation loss/precision are not retained or monitored by default. Recall/confusion graphs are not useful for a regression model such as this forest ensemble. The training loss/precision for each of the three models is provided in Figure A6.

Figure A6.

The training loss/precision for the random forest ensemble models subject to dissimilar engine speeds and load torques for per-cylinder generated torque (a), for in-cylinder temperature (b), and for in-cylinder pressure (c).

Appendix C

In this research effort, the training and application of the AI/ML algorithms were reinvestigated, first by identifying other suitable candidate machine learning algorithms for continued research and application, as shown in Tables A1 through A3. The primary reason for doing so was to identify candidate machine learning models that could be used for near-real-time and real-time training and operation.

Table A1.

Comparison of some of the advantages and disadvantages of multiple shallow artificial neural network types.

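| Advantages and Disadvantages | Feedforward Neural Networks | Feedforward Time Delay Neural Networks | Feedforward Nonlinear Autoregressive Neural Networks (NAR) | Feedforward Nonlinear Autoregressive Neural Networks with External Input (NARX) |
|---|---|---|---|---|
| Learned Complexity | Suitable for both linear and non-linear functional approximations, and sequence predictions. | Suitable for both linear and non-linear functional approximations, and sequence predictions. | Suitable for both linear and non-linear functional approximations, and sequence predictions. | Suitable for both linear and non-linear functional approximations, and sequence predictions. |
| Training Complexity | Low training complexity when compared to deep neural network training. Training complexity will increase with dense hidden layers. | Low training complexity when compared to deep neural network training. Training complexity will increase with dense hidden layers. | Low training complexity when compared to deep neural network training. Training complexity will increase with dense hidden layers. | Low training complexity when compared to deep neural network training. Training complexity will increase with dense hidden layers. |
| Multi-Threading | Multi-threading permitted but not required. | Multi-threading permitted but not required. | Multi-threading permitted but not required. | Multi-threading permitted but not required. |
| Feature Engineering | Not required but may improve convergence and performance. | Not required but may improve convergence and performance. | Not required but may improve convergence and performance. | Not required but may improve convergence and performance. |
| Suitability for Real-Time Training | Well suited for real-time training, with non-dense hidden layers. If using dense hidden layers, GPUs may be required to improve convergence. | Well suited for real-time training, with non-dense hidden layers. If using dense hidden layers, GPUs may be required to improve convergence. | Well suited for real-time training, with non-dense hidden layers. If using dense hidden layers, GPUs may be required to improve convergence. | Well suited for real-time training, with non-dense hidden layers. If using dense hidden layers, GPUs may be required to improve convergence. |
| Suitability for Real-Time Operation | Well suited for real-time operation except for exceptionally dense hidden layers. GPUs may be utilized to improve convergence. | Well suited for real-time operation except for exceptionally dense hidden layers. GPUs may be utilized to improve convergence. | Well suited for real-time operation except for exceptionally dense hidden layers. GPUs may be utilized to improve convergence. | Well suited for real-time operation except for exceptionally dense hidden layers. GPUs may be utilized to improve convergence. |
| Time Series Predictability | Well suited to function approximations and can be made to provide sequence predictions where the input features are also sequences. | Well suited for sequence predictions resulting from memory. | Well suited for sequence predictions resulting from memory. | Well suited for sequence predictions resulting from memory. |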
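The "memory" that distinguishes the time delay, NAR, and NARX variants in Table A1 comes from feeding delayed samples to an otherwise ordinary feedforward network. The sketch below shows one common way to build such delayed regressors. The function name `narx_regressors` and the delay counts `n_y` and `n_u` are hypothetical names chosen for illustration and are not taken from this work.

```python
def narx_regressors(y, u, n_y=2, n_u=2):
    """Build (features, target) pairs for a NARX-style feedforward model.

    Each feature row holds the previous n_y outputs followed by the
    previous n_u external inputs; the target is the current output y[t].
    Setting n_u=0 gives NAR regressors (past outputs only), and setting
    n_y=0 gives time-delay regressors (past inputs only).
    """
    if len(y) != len(u):
        raise ValueError("y and u must have the same length")
    start = max(n_y, n_u)  # first index with a full delay history
    rows, targets = [], []
    for t in range(start, len(y)):
        past_y = [y[t - k] for k in range(1, n_y + 1)]
        past_u = [u[t - k] for k in range(1, n_u + 1)]
        rows.append(past_y + past_u)
        targets.append(y[t])
    return rows, targets
```

Because the delays are handled entirely in the input construction, the network itself stays a plain feedforward model.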

Table A2.

Comparison of some of the advantages and disadvantages of multiple deep artificial neural network types.

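| Advantages and Disadvantages | Recurrent Neural Networks | Long Short Term Memory Neural Networks | Convolutional Neural Networks | Gated Recurrent Units Neural Networks |
|---|---|---|---|---|
| Learned Complexity | Suitable for both linear and non-linear functional approximations, sequence predictions, and image classification. | Suitable for both linear and non-linear functional approximations, sequence predictions, and image classification. | Suitable for both linear and non-linear functional approximations, sequence predictions, and image classification. | Suitable for both linear and non-linear functional approximations, sequence predictions, and image classification. |
| Training Complexity | Medium to high complexity. | Medium to high complexity. | High complexity. | Medium to high complexity. |
| Multi-Threading | Multi-threading is routinely utilized to improve convergence as the number of multiplications increases due to exotic layer operations. Not required for less dense networks. | Multi-threading is routinely utilized to improve convergence as the number of multiplications increases due to exotic layer operations. Not required for less dense networks. | Multi-threading is routinely utilized to improve convergence as the number of multiplications increases due to exotic layer operations. Not required for less dense networks. | Multi-threading is routinely utilized to improve convergence as the number of multiplications increases due to exotic layer operations. Not required for less dense networks. |
| Feature Engineering | Feature engineering is routinely utilized to improve performance and convergence. | Feature engineering is not required but may improve performance or convergence. | Feature engineering is routinely utilized to improve performance and convergence. | |
| Suitability for Real-Time Training | Not well suited for real-time training, as the number of multiplications increases due to exotic layer operations. Networks may suffer from vanishing gradients. GPUs are required to be able to train in near-real-time or real-time. | Not well suited for real-time training, as the number of multiplications increases due to exotic layer operations. Networks may suffer from vanishing gradients. GPUs are required to be able to train in near-real-time or real-time. | Not well suited for real-time training, as the number of multiplications increases due to exotic layer operations. Networks may suffer from vanishing gradients. GPUs are required to be able to train in near-real-time or real-time. | Not well suited for real-time training, as the number of multiplications increases due to exotic layer operations. Networks may suffer from vanishing gradients. GPUs are required to be able to train in near-real-time or real-time. |
| Suitability for Real-Time Operation | Not well suited for real-time operation. GPUs can be utilized to enable improved near-real-time or real-time performance. | Not well suited for real-time operation. GPUs can be utilized to enable improved near-real-time or real-time performance. | Not well suited for real-time operation. GPUs can be utilized to enable improved near-real-time or real-time performance. | Not well suited for real-time operation. GPUs can be utilized to enable improved near-real-time or real-time performance. |
| Time Series Predictability | Well suited for sequence predictions resulting from delays, memory, and more exotic layers. | Well suited for sequence predictions resulting from delays, memory, and more exotic layers. | Well suited for sequence predictions resulting from delays, memory, and more exotic layers. | Well suited for sequence predictions resulting from delays, memory, and more exotic layers. |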

Table A4 was then constructed to describe the computational complexity of common layers found within dissimilar AI/ML algorithms, which guided our decision on which types of architecture or algorithms may be suited for near-real-time and real-time training and operation. The quantities used in Table A4 are the number of rows of the input signal, the number of columns of the input signal, the number of channels of the input signal, the number of filters, the number of kernels, the number of neurons, the sequence length, the number of categorical variables, the embedding dimension, the number of trees, and the depth from roots to leaves. The complexity could change if the input signals passed between the dissimilar layers are matrices instead of vectors; the opposite is also true.

Table A3.

Comparison of some of the advantages and disadvantages of multiple deep artificial neural network types.

| Advantages and Disadvantages | Autoencoders Neural Networks | Generative Adversarial Neural Networks | Large Language Neural Networks | Binary Decision Tree Neural Network |
|---|---|---|---|---|
| Learned Complexity | Strength lies in learning complex, non-linear mapping. | Suitable for both linear and non-linear approximation tasks. | Not traditionally used for functional approximations or sequence predictions, but can learn both linear and non-linear function approximations. | Capable of learning both linear and non-linear relationships, heavily dependent on the complexity of data and tree structure. |
| Training Complexity | High to extremely high complexity. | High to extremely high complexity. | Extremely high complexity. | Medium to high complexity. |
| Multi-Threading | Multi-threading is almost always utilized to improve convergence, particularly because these neural network variations require excessive amounts of data. | Multi-threading is almost always utilized to improve convergence, particularly because these neural network variations require excessive amounts of data. | Multi-threading is almost always utilized to improve convergence, particularly because these neural network variations require excessive amounts of data. | Not required. Input feature complexity or dimensionality may require significant complexity and thus benefit from parallelism. |
| Feature Engineering | Autoencoders typically require less feature engineering compared to traditional machine learning models. | Typically do not require explicit feature engineering. | Feature engineering is not routinely used, in large part because the amount of data ingested is substantially larger than for traditional AI/ML algorithms. | Feature engineering may be used but is less common than for other machine learning models. |
| Suitability for Real-Time Training | Due to the data requirements of these types of machine learning models, none are suited for real-time training unless the model is sufficiently simple and multiple GPUs or dedicated processors are accessible. | Due to the data requirements of these types of machine learning models, none are suited for real-time training unless the model is sufficiently simple and multiple GPUs or dedicated processors are accessible. | Due to the data requirements of these types of machine learning models, none are suited for real-time training unless the model is sufficiently simple and multiple GPUs or dedicated processors are accessible. | Depending on the complexity and the number of input features, this machine learning model can be trained in near-real-time and real-time if using GPUs or for sufficiently simple models. |
| Suitability for Real-Time Operation | Near-real-time or real-time operation is supported; however, the use of GPUs and dedicated processors is required. | Near-real-time or real-time operation is supported; however, the use of GPUs and dedicated processors is required. | Near-real-time or real-time operation is supported; however, the use of GPUs and dedicated processors is required. | Depending on the complexity, near-real-time and real-time performance is achievable; overly complex systems likely require GPUs. |
| Time Series Predictability | Used for feature extraction and dimensionality reduction. | Particularly well suited for generating synthetic time series data or for data augmentation. | Primarily designed for natural language processing tasks; these machine learning models can be adapted for time series prediction tasks. | Depending on the input feature complexity, these types of models can be utilized for time series predictions. |

Table A4.

The computational complexity of common layers utilized for various AI/ML architectures.

| Layer Type | Complexity |
|---|---|
| Dense Layer or Fully Connected Layer | |
| Convolutional Layer with Single Filter | |
| Convolutional Layer with Multiple Filters | |
| Maximum Pooling Layer | |
| Average Pooling Layer | |
| Global Pooling Layer | |
| Recurrent Layer (Simple RNN) | |
| Long Short Term Memory (LSTM) Layer | |
| GRU (Gated Recurrent Unit) Layer | |
| Bidirectional Layer | |
| Attention Layer | Negligible Compared to Others |
| Normalization Layer | Negligible Compared to Others |
| Dropout Layer (Inference) | Negligible during Inference |
| Dropout Layer (Training) | Randomly Fractional Activation during Training |
| Activation Layer | Negligible Compared to Others |
| Embedding Layer | |
| Flatten Layer | Negligible Compared to Others |
| Concatenation Layer | Negligible Compared to Others |
| Lambda Layer | Negligible Compared to Others |
| Self-Attention Layer | |
| Skip Connection (Residual) Layer | Negligible Compared to Others |
| Upsampling Layer | |
| Drop Connect Layer | Negligible Compared to Others |
| Alpha Dropout Layer | Negligible Compared to Others |
| Graph Convolutional Layer | |
| Input Transformation Layer | |
| Gate Operations Layers | |
| Output Layers | |
| Residual Connections Layers | |
| Batch Layer | |
| Regression Forest Ensemble | |
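To give a feel for how the quantities associated with Table A4 (rows, columns, channels, filters, neurons, sequence length, trees, and depth) drive cost, the sketch below counts multiply-accumulate operations for a few layer types using widely used textbook estimates. These formulas, the function names, and the stride-1, same-padding, square-kernel assumptions are illustrative choices and are not necessarily the expressions the authors tabulated.

```python
def dense_macs(n_inputs, n_neurons):
    """Fully connected layer: one multiply-accumulate per weight."""
    return n_inputs * n_neurons


def conv2d_macs(rows, cols, channels, kernel_size, filters=1):
    """2-D convolution, assuming stride 1, 'same' padding, square kernel."""
    return rows * cols * kernel_size * kernel_size * channels * filters


def recurrent_macs(seq_len, n_inputs, n_neurons, gates=1):
    """Recurrent layer over a sequence.

    gates=1 approximates a simple RNN, gates=3 a GRU, and gates=4 an LSTM,
    since each gate has its own input and recurrent weight matrices.
    """
    return gates * seq_len * n_neurons * (n_inputs + n_neurons)


def forest_comparisons(n_trees, depth):
    """Regression forest inference: at most one comparison per tree level."""
    return n_trees * depth
```

Under these assumptions an LSTM layer costs roughly four times a simple recurrent layer of the same width, while forest inference grows only linearly in the number of trees and their depth. This is consistent with the suitability rankings in Tables A1 through A3.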

References

- U.S. Energy Information Administration (EIA). 2023 Annual Energy Outlook. 2023. Available online: https://www.eia.gov/outlooks/aeo/ (accessed on 15 November 2023).

- U.S. Energy Information Administration (EIA). U.S. Government Energy Consumption by Agency. 2023. Available online: https://www.eia.gov/totalenergy/data/browser/index.php?tbl=T02.07#/?f=M&start=200001 (accessed on 15 November 2023).

- National Academies of Sciences, Engineering, and Medicine. Powering the U.S. Army of the Future; Washington, DC, USA, 2021.

- Garrett, A. The Discovery of the Transistor: W. Shockley, J. Bardeen, and W. Brattain. J. Chem. Educ. 1963, 40, 302.

- Grimm, R.; Bremer, R.; Stonestreet, S. GM Micro-Computer Engine Control System. SAE Trans. 1980, 357–372.

- Konopa, B.; Miller, M.; Revnew, L.; Muraco, J.; Mayfield, L.; Rutledge, M.; Crocker, M.; Mittal, V. Optimal Use Cases for Electric and Hybrid Tactical Vehicles; Technical Report, SAE Technical Paper; SAE International: Warrendale, PA, USA, 2024.

- United States Army Climate Strategy, Office of the Assistant Secretary of the Army for Installations, Energy and Environment. 2022. Available online: https://www.army.mil/article/253754/us_army_releases_its_climate_strategy (accessed on 16 November 2023).

- 2019 Army Modernization Strategy: Investing in the Future, Department of the United States Army. 2019. Available online: https://www.army.mil/e2/downloads/rv7/2019_army_modernization_strategy_final.pdf (accessed on 16 November 2023).

- Sharma, A.; Sharma, V.; Jaiswal, M.; Wang, H.C.; Jayakody, D.N.K.; Basnayaka, C.M.W.; Muthanna, A. Recent trends in AI-based intelligent sensing. Electronics 2022, 11, 1661.

- Rathore, A.S.; Nikita, S.; Thakur, G.; Mishra, S. Artificial intelligence and machine learning applications in biopharmaceutical manufacturing. Trends Biotechnol. 2023, 41, 497–510.

- Khanal, S.K.; Tarafdar, A.; You, S. Artificial intelligence and machine learning for smart bioprocesses. Bioresour. Technol. 2023, 375, 128826.

- Katare, D.; Perino, D.; Nurmi, J.; Warnier, M.; Janssen, M.; Ding, A.Y. A Survey on Approximate Edge AI for Energy Efficient Autonomous Driving Services. IEEE Commun. Surv. Tutor. 2023, 25, 2714–2754.

- Dong, Z.; Ji, X.; Zhou, G.; Gao, M.; Qi, D. Multimodal neuromorphic sensory-processing system with memristor circuits for smart home applications. IEEE Trans. Ind. Appl. 2022, 59, 47–58.

- Dong, Z.; Ji, X.; Wang, J.; Gu, Y.; Wang, J.; Qi, D. ICNCS: Internal cascaded neuromorphic computing system for fast electric vehicle state of charge estimation. IEEE Trans. Consum. Electron. 2023, 70, 4311–4320.

- Wiesner, P.; Steinke, M.; Nickel, H.; Kitana, Y.; Kao, O. Software-in-the-loop simulation for developing and testing carbon-aware applications. Softw. Pract. Exp. 2023, 53, 2362–2376.