Research on Electric Hydrogen Hybrid Storage Operation Strategy for Wind Power Fluctuation Suppression

Abstract

1. Introduction

- (1) A deep reinforcement learning (DRL) algorithm is used to smooth on-grid wind power fluctuation (WPF). DRL agents quickly perceive the status of the electric hydrogen hybrid storage (EHHS) and formulate charging and discharging strategies, which significantly suppresses the on-grid WPF.

- (2) A wavelet packet power decomposition algorithm improved by variable frequency entropy is proposed. It addresses two drawbacks of the conventional wavelet packet decomposition (WPD) algorithm: the need for precise input conditions and the manual setting of response-time boundary points for the different energy storage components.

- (3) A modified proximal policy optimization (MPPO) algorithm based on wind power deviation is proposed to train and solve the WPF suppression model. Dynamically adjusting the clipping rate according to real-time WPF balances training efficiency against stability and improves the overall performance of the model.
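The clipping-rate idea in contribution (3) can be sketched in a few lines. The clipped surrogate below is the standard PPO objective for a single sample. The mapping from wind power deviation to clipping rate (`adaptive_clip_rate`, with its `ref_mw`, `eps_min`, and `eps_max` parameters) is a hypothetical rule chosen only for illustration, since the paper's own formula is not reproduced here. It assumes that a larger deviation should shrink the clipping range so that policy updates stay cautious.

```python
def adaptive_clip_rate(deviation_mw, ref_mw=10.0, eps_min=0.1, eps_max=0.3):
    """Map the absolute wind power deviation to a clipping rate.

    ASSUMPTION (not from the paper): the rate falls linearly from eps_max
    (no deviation) to eps_min (deviation >= ref_mw).
    """
    ratio = min(abs(deviation_mw) / ref_mw, 1.0)
    return eps_min + (eps_max - eps_min) * (1.0 - ratio)


def clipped_surrogate(prob_ratio, advantage, eps):
    """Standard PPO clipped surrogate objective for one sample.

    prob_ratio is pi_new(a|s) / pi_old(a|s); eps is the clipping rate.
    """
    clipped_ratio = max(1.0 - eps, min(prob_ratio, 1.0 + eps))
    return min(prob_ratio * advantage, clipped_ratio * advantage)


if __name__ == "__main__":
    for deviation in (0.0, 5.0, 20.0):
        eps = adaptive_clip_rate(deviation)
        objective = clipped_surrogate(prob_ratio=1.5, advantage=2.0, eps=eps)
        print(f"deviation={deviation:5.1f} MW  eps={eps:.2f}  objective={objective:.2f}")
```

With a fixed clipping rate the second function alone is conventional PPO; the only change in this sketch is that `eps` is recomputed from the current deviation before each update.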

2. Architecture of Electric Hydrogen Hybrid Storage System

3. Wind Power Fluctuation Suppression Strategy of EHHS

3.1. Wavelet Packet Power Decomposition Algorithm Based on Frequency Conversion Entropy Improvement

3.1.1. Traditional Wavelet Packet Decomposition Algorithm
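For orientation, a conventional wavelet packet decomposition can be sketched as follows. This is a minimal pure-Python version that assumes the Haar wavelet and a signal length divisible by `2 ** levels`; the function names are illustrative, and the wavelet basis used in the paper may differ. Unlike a plain wavelet transform, every node (approximation and detail) is split again at each level, so `levels` levels yield `2 ** levels` sub-bands.

```python
import math


def haar_split(x):
    """Split a sequence into Haar approximation and detail coefficients."""
    scale = 1.0 / math.sqrt(2.0)
    approx = [(x[i] + x[i + 1]) * scale for i in range(0, len(x), 2)]
    detail = [(x[i] - x[i + 1]) * scale for i in range(0, len(x), 2)]
    return approx, detail


def wavelet_packet_decompose(signal, levels):
    """Return the 2**levels leaf nodes of a Haar wavelet packet tree.

    Leaves are in natural (filter-bank) order, not sorted by frequency.
    """
    if levels < 0 or len(signal) % (2 ** levels) != 0:
        raise ValueError("signal length must be divisible by 2**levels")
    nodes = [list(signal)]
    for _ in range(levels):
        next_nodes = []
        for node in nodes:
            approx, detail = haar_split(node)
            next_nodes.extend([approx, detail])  # both halves are split again
        nodes = next_nodes
    return nodes


def band_energies(nodes):
    """Energy (sum of squared coefficients) of each sub-band."""
    return [sum(c * c for c in node) for node in nodes]


if __name__ == "__main__":
    # Made-up power samples in MW, for illustration only.
    power = [50.0, 52.0, 47.0, 55.0, 60.0, 58.0, 49.0, 51.0]
    leaves = wavelet_packet_decompose(power, levels=2)
    print(band_energies(leaves))
```

In a storage-allocation setting, the leaf sub-bands would then be assigned to the storage components by a boundary index, which is the hand-set step that the contribution list describes as a drawback of the conventional algorithm.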

3.1.2. Variable Frequency Entropy Strategy Based on WPD

3.2. Modeling of Wind Power Fluctuation Suppression

3.2.1. Objective Function

- A. The optimal effect of WPF suppression

- B. The optimal operating cost of EHHS

- A. Power balance

- B. Unit time exchange power of EES

- C. Electrolytic cell operation

- D. Hydrogen storage status

- E. Upper and lower limits of SOC for EES
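The items above (power balance, EES exchange power, SOC limits) read as operating constraints, and a minimal sketch can show how two of them might be enforced on a requested EES set-point. The power rating (60 MW) and capacity (150 MWh) are taken from the configuration table. The sign convention (positive means charging), the 0.25 h time step, the SOC bounds of 0.1 and 0.9, and the lossless storage model are all assumptions made for illustration, not values from the paper.

```python
def grid_power(p_wind_mw, p_ees_mw, p_electrolyzer_mw, p_fuel_cell_mw):
    """Power balance under the assumed sign convention.

    On-grid power = wind - EES charging - electrolyzer load + fuel cell output.
    """
    return p_wind_mw - p_ees_mw - p_electrolyzer_mw + p_fuel_cell_mw


def feasible_ees_power(p_request_mw, soc, dt_h=0.25, p_max_mw=60.0,
                       cap_mwh=150.0, soc_min=0.1, soc_max=0.9):
    """Project a requested EES power onto the power and SOC limits.

    Positive power charges the EES, negative power discharges it.
    Returns the feasible power and the resulting SOC (losses ignored).
    """
    # Limit on exchange power per time step.
    p = max(-p_max_mw, min(p_request_mw, p_max_mw))
    # Limits implied by the remaining energy headroom within [soc_min, soc_max].
    charge_limit = (soc_max - soc) * cap_mwh / dt_h
    discharge_limit = (soc - soc_min) * cap_mwh / dt_h
    p = max(-discharge_limit, min(p, charge_limit))
    new_soc = soc + p * dt_h / cap_mwh
    return p, new_soc


if __name__ == "__main__":
    p, soc = feasible_ees_power(p_request_mw=100.0, soc=0.5)
    print(p, soc)
    print(grid_power(80.0, p, 0.0, 0.0))
```

The electrolytic cell and hydrogen storage constraints could be handled with the same projection pattern, using their own ratings in place of the EES values.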

3.2.2. WPF Suppression Strategy Based on Markov Decision Process

- A. States

- B. Actions

- C. Rewards
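The three MDP elements above can be made concrete with a small sketch. The field names, the unit costs, and the weights `w_fluct` and `w_cost` are hypothetical; the reward is simply assumed to be the negative weighted sum of the two objectives named earlier (fluctuation suppression and operating cost), which may differ from the paper's exact definition.

```python
from typing import NamedTuple


class State(NamedTuple):
    wind_power_mw: float       # current wind power output
    prev_grid_power_mw: float  # on-grid power at the previous step
    ees_soc: float             # state of charge of the EES
    hydrogen_level: float      # fill level of the hydrogen storage (0 to 1)


class Action(NamedTuple):
    ees_power_mw: float  # > 0 charges the EES, < 0 discharges it
    hes_power_mw: float  # > 0 runs the electrolytic cell, < 0 the fuel cell


def reward(state, action, dt_h=0.25, ees_cost_cny_per_mwh=100.0,
           hes_cost_cny_per_mwh=150.0, w_fluct=1.0, w_cost=0.01):
    """Negative weighted sum of on-grid fluctuation and operating cost.

    ASSUMPTION: all cost coefficients and weights are illustrative.
    """
    grid_mw = state.wind_power_mw - action.ees_power_mw - action.hes_power_mw
    fluctuation = abs(grid_mw - state.prev_grid_power_mw)
    cost = (ees_cost_cny_per_mwh * abs(action.ees_power_mw)
            + hes_cost_cny_per_mwh * abs(action.hes_power_mw)) * dt_h
    return -(w_fluct * fluctuation + w_cost * cost)


if __name__ == "__main__":
    s = State(wind_power_mw=80.0, prev_grid_power_mw=70.0,
              ees_soc=0.5, hydrogen_level=0.5)
    print(reward(s, Action(0.0, 0.0)))   # no storage action
    print(reward(s, Action(10.0, 0.0)))  # EES absorbs the 10 MW step
```

In this example the agent is rewarded more for absorbing the 10 MW step with the EES than for doing nothing, because the fluctuation penalty outweighs the small operating cost under the assumed weights.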

4. Solution Based on the MPPO Algorithm

4.1. Basic Principles of PPO Algorithm

4.2. Adaptive Clipping Rate Mechanism Based on Power Fluctuations

4.3. The Training Process of the Improved PPO Algorithm

5. Case Study

5.1. Configuration and Parameter Setting of IPHS

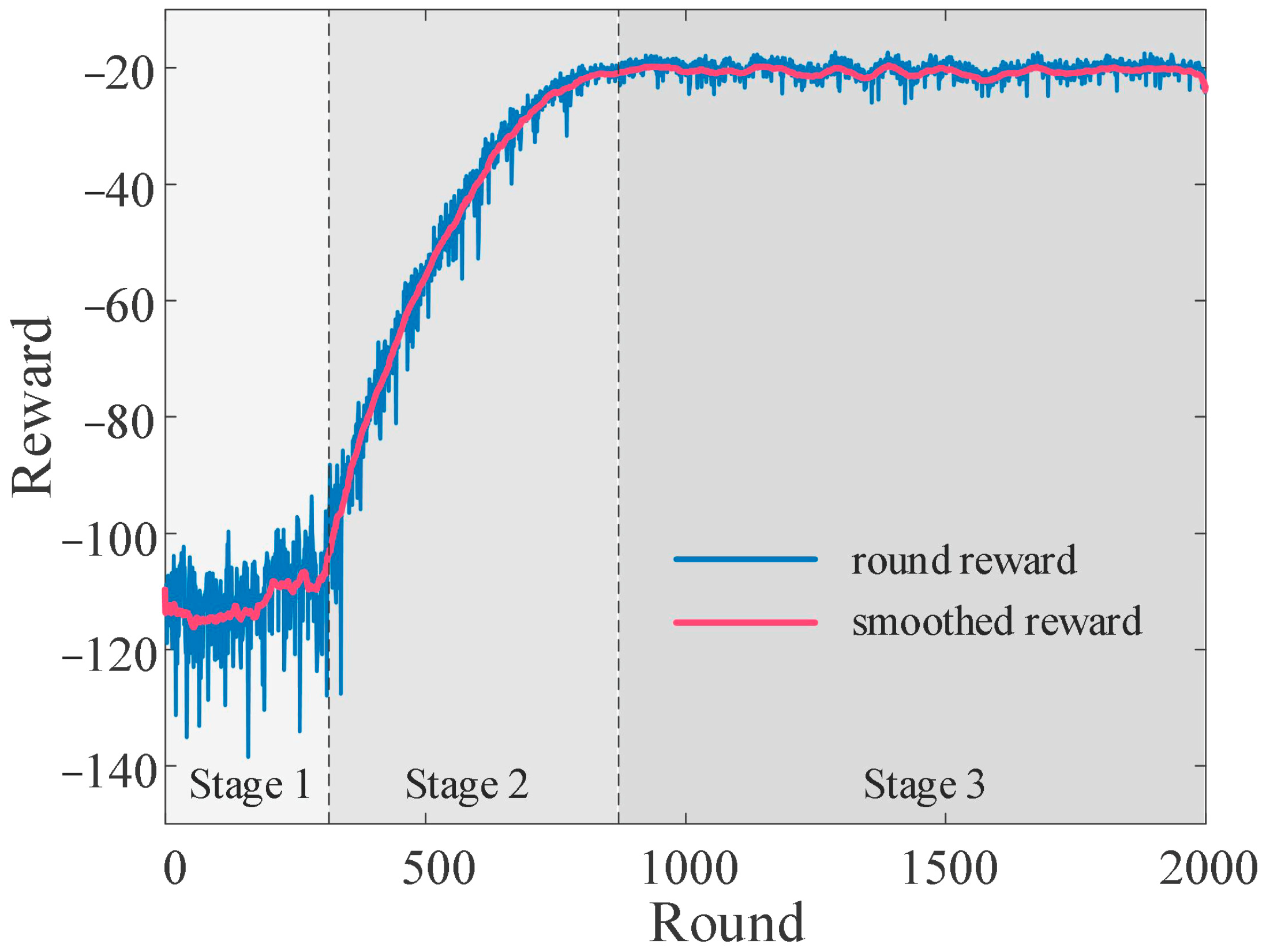

5.2. Analysis of Training Process

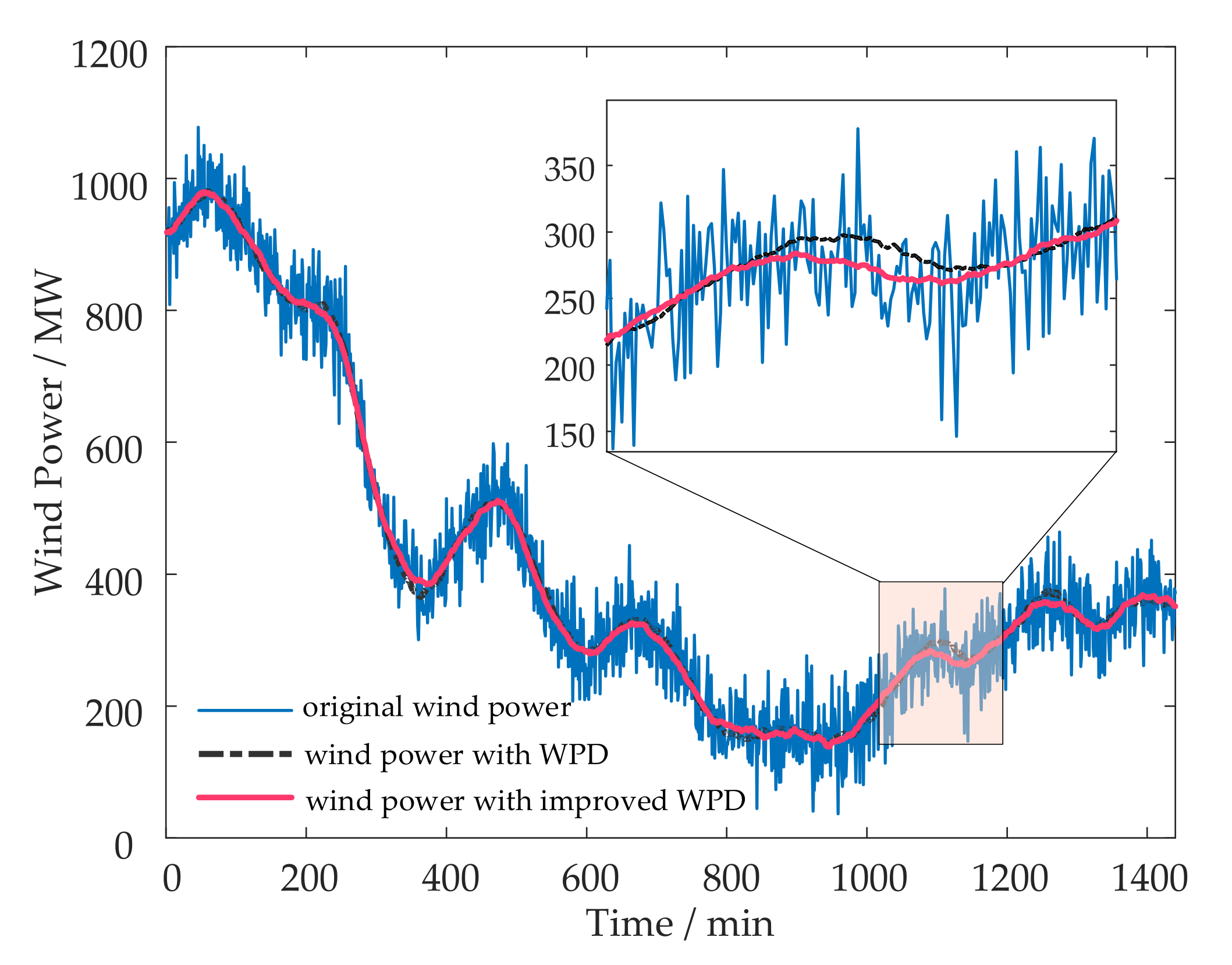

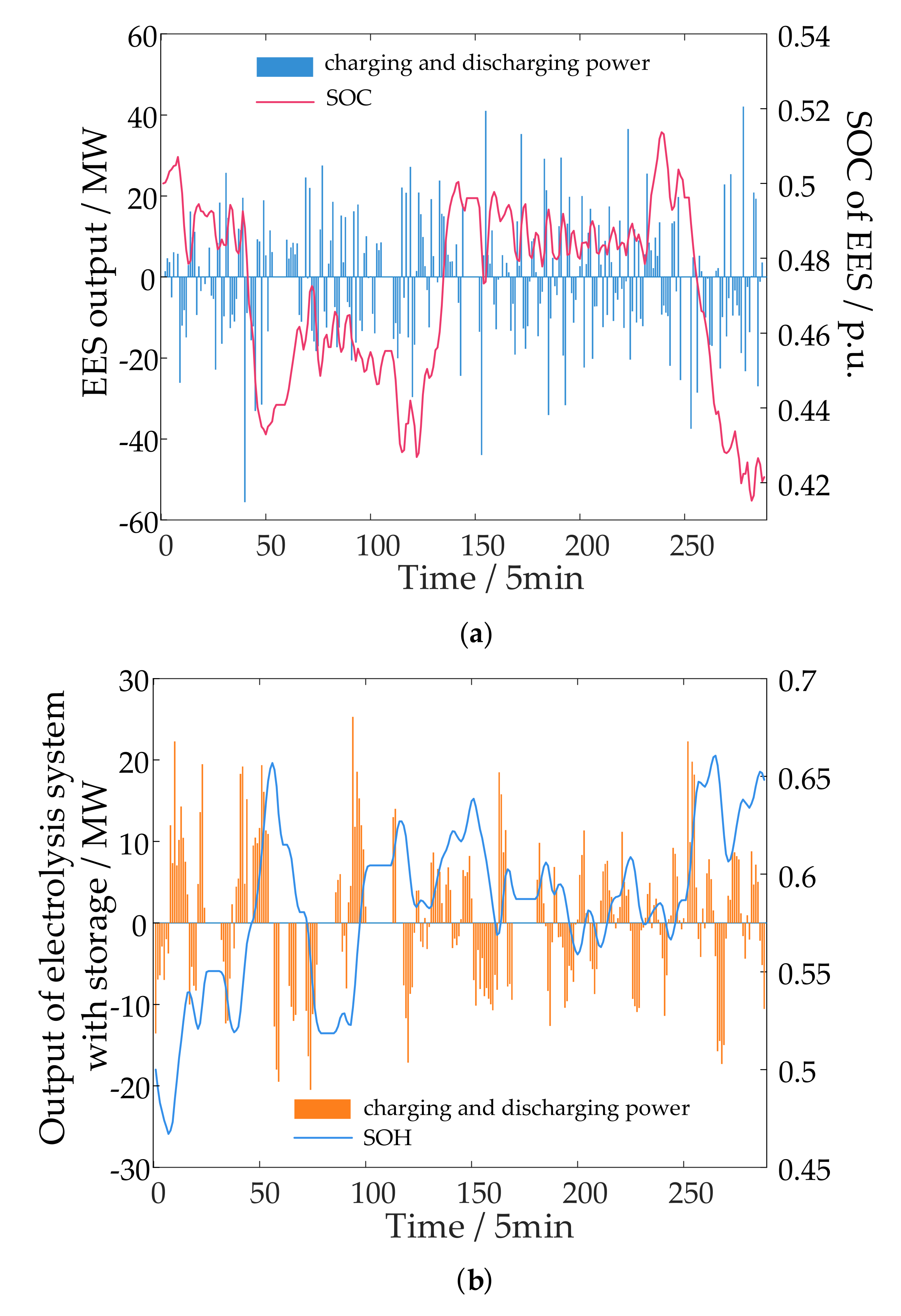

5.3. WPF Suppression Model Application and Results Analysis

5.4. Comparison of Different Algorithms

6. Conclusions

- (1) This paper explores the energy flow and complementary characteristics of EHHS with a DRL algorithm that perceives the system status in real time. By formulating charging and discharging strategies for EES and HES, WPF is effectively mitigated and the overall system cost is reduced.

- (2) The proposed modified WPD algorithm accurately characterizes the wind power and, on that basis, formulates high- and low-frequency power allocation plans. The average on-grid WPF was 5.74 MW, 71.46% lower than before suppression.

- (3) Unlike other DRL algorithms, the proposed MPPO algorithm dynamically adjusts the clipping rate according to WPF during training, effectively balancing training efficiency and convergence stability. Compared with the conventional PPO algorithm, the MPPO algorithm increased the training reward value by 21.25% and reduced the on-grid WPF by 16.81%.
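As a quick consistency check on the figures in conclusion (2), and assuming the 71.46% decrease is measured relative to the average on-grid WPF before suppression, the implied pre-suppression level follows directly. The symbol $\bar{P}_{\text{before}}$ is introduced here for illustration only.

$$
\bar{P}_{\text{before}} = \frac{5.74\ \text{MW}}{1 - 0.7146} \approx 20.11\ \text{MW}
$$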

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Parameter | Value |
|---|---|
| EES power (MW) | 60 |
| EES capacity (MWh) | 150 |
| Electrolytic cell power (MW) | 50 |
| HES capacity (m³) | 2100 |
| Fuel cell power (MW) | 50 |

| Metric | DDPG | PPO | MPPO |
|---|---|---|---|
| Cost of EES (CNY) | 1749.31 | 1387.16 | 1295.33 |
| Cost of HES (CNY) | 4127.88 | 3512.28 | 2869.12 |
| Average on-grid power fluctuation (MW) | 9.52 | 6.90 | 5.74 |
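The relative improvements implied by the comparison table can be recomputed with a short script. The numbers are copied from the table; treating the EES and HES costs as additive to form a total cost is an assumption made here, and the helper names are illustrative.

```python
# Values copied from the algorithm comparison table
# (costs in CNY, average on-grid fluctuation in MW).
results = {
    "DDPG": {"ees_cost": 1749.31, "hes_cost": 4127.88, "fluctuation": 9.52},
    "PPO": {"ees_cost": 1387.16, "hes_cost": 3512.28, "fluctuation": 6.90},
    "MPPO": {"ees_cost": 1295.33, "hes_cost": 2869.12, "fluctuation": 5.74},
}


def total_cost(name):
    """Sum of EES and HES cost (ASSUMPTION: the two costs are additive)."""
    row = results[name]
    return row["ees_cost"] + row["hes_cost"]


def reduction_pct(baseline, value):
    """Relative reduction of value with respect to baseline, in percent."""
    return (baseline - value) / baseline * 100.0


if __name__ == "__main__":
    for base in ("DDPG", "PPO"):
        fluct = reduction_pct(results[base]["fluctuation"],
                              results["MPPO"]["fluctuation"])
        cost = reduction_pct(total_cost(base), total_cost("MPPO"))
        print(f"MPPO vs {base}: fluctuation -{fluct:.2f}%, total cost -{cost:.2f}%")
```

The fluctuation reduction of MPPO relative to PPO comes out at about 16.81%, which agrees with the figure stated in the conclusions.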

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, D.; Qian, K.; Gao, C.; Xu, Y.; Xing, Q.; Wang, Z. Research on Electric Hydrogen Hybrid Storage Operation Strategy for Wind Power Fluctuation Suppression. Energies 2024, 17, 5019. https://doi.org/10.3390/en17205019
