A Three-Granularity Pose Estimation Framework for Multi-Type High-Voltage Transmission Towers Using Part Affinity Fields (PAFs)

Abstract

1. Introduction

- (1) A transmission tower pose estimation framework with good generalization capability is proposed. Unlike traditional object detection and image segmentation methods, it detects the keypoints and skeleton pose of multiple types of transmission towers and assists defect localization in videos captured by UAVs.

- (2) A transmission tower structure detection method combining classification coding and PAFs is proposed. It addresses the problem of extending the coding to multiple types of tower structures and uses the PAFs to select the correct skeleton connection from multiple candidate connections.

- (3) A three-granularity feature framework with an intermediate supervision mechanism is designed around the practical needs of defect detection in transmission towers. It progressively refines the keypoint predictions, addresses the problem of dense, similar, and overlapping keypoints, and adapts to different fields of view.

2. Related Work

2.1. Research on Inspection of Electrical Tower Based on Deep Learning

2.2. Pose Estimation

3. Proposed Framework

3.1. Data Annotation

3.1.1. Dataset Source

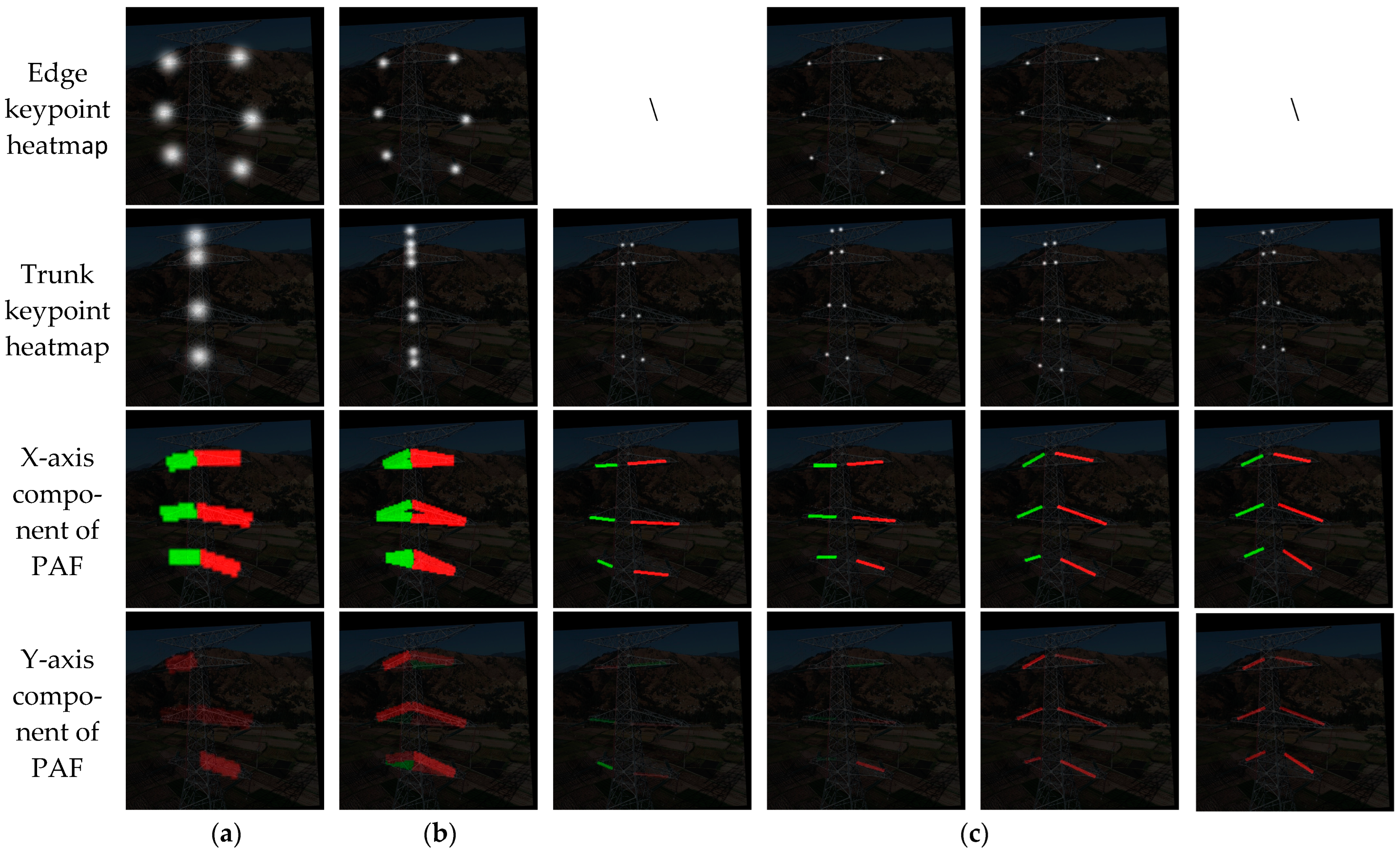

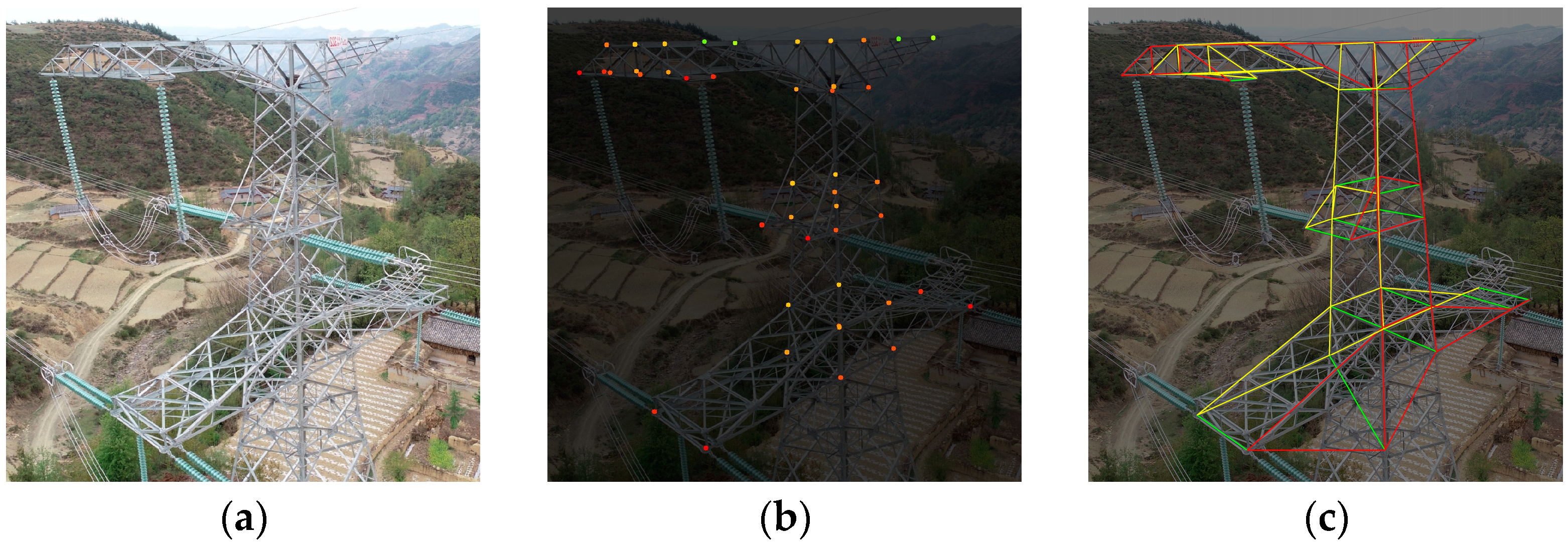

3.1.2. Gaussian Heatmaps for Representing Keypoints and PAFs for Representing Connections

- (1) Obtain the heatmaps of the two keypoints that form a given electrical tower connection.

- (2) Apply Gaussian filtering to these two heatmap channels to obtain their probability distribution maps.

- (3) Take the peak regions of the filtered heatmaps as candidate connection points, which yields multiple candidates for both the starting point and the ending point.

- (4) Iterate over all pairs of candidate starting and ending points. For each pair, sample positions along the segment between the two points, in a manner similar to a discretized line integral, and round each sampled position to a pixel. Take the dot product of the PAF value at each sampled position with the unit vector pointing from the starting point to the ending point, and sum these products to obtain a score for the connection. If the score exceeds a threshold, predict the pair as a skeleton connection; otherwise, discard it.
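Step (4) can be illustrated with a minimal sketch. It is not the authors' implementation: the names `paf_connection_score`, `select_connections`, `num_samples`, and `threshold` are hypothetical, the PAF of one connection type is assumed to be stored as two 2D arrays holding its x and y components, and the sampled products are averaged rather than summed so that the threshold does not depend on the number of samples.

```python
import math


def paf_connection_score(paf_x, paf_y, start, end, num_samples=10):
    """Average alignment between the PAF and the start-to-end direction.

    paf_x, paf_y: 2D lists indexed as [y][x] with the x and y components
    of the part affinity field (assumed storage layout).
    start, end: (x, y) pixel positions of the candidate keypoints.
    """
    dx, dy = end[0] - start[0], end[1] - start[1]
    length = math.hypot(dx, dy)
    if length == 0:
        return 0.0  # degenerate pair: no direction to compare against
    ux, uy = dx / length, dy / length  # unit vector from start to end
    total = 0.0
    for i in range(num_samples):
        t = i / (num_samples - 1) if num_samples > 1 else 0.0
        # Round the interpolated position to the nearest pixel.
        x = int(round(start[0] + t * dx))
        y = int(round(start[1] + t * dy))
        # Dot product of the PAF vector with the unit direction vector.
        total += paf_x[y][x] * ux + paf_y[y][x] * uy
    return total / num_samples


def select_connections(paf_x, paf_y, starts, ends, threshold=0.5):
    """Keep every (start, end) candidate pair whose score exceeds threshold."""
    kept = []
    for s in starts:
        for e in ends:
            score = paf_connection_score(paf_x, paf_y, s, e)
            if score > threshold:
                kept.append((s, e, score))
    return kept


# Toy 5x5 field: a horizontal connection along row y = 2, pointing in +x.
paf_x = [[1.0 if y == 2 else 0.0 for _ in range(5)] for y in range(5)]
paf_y = [[0.0 for _ in range(5)] for _ in range(5)]

# One candidate start and two candidate ends; only (4, 2) lies on the field.
kept = select_connections(paf_x, paf_y, starts=[(0, 2)], ends=[(4, 2), (4, 0)])
print(kept)
```

In this toy field, the pair ending at (4, 2) follows the field along its whole length and is kept, whereas the pair ending at (4, 0) leaves the field after a few samples and falls below the threshold.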

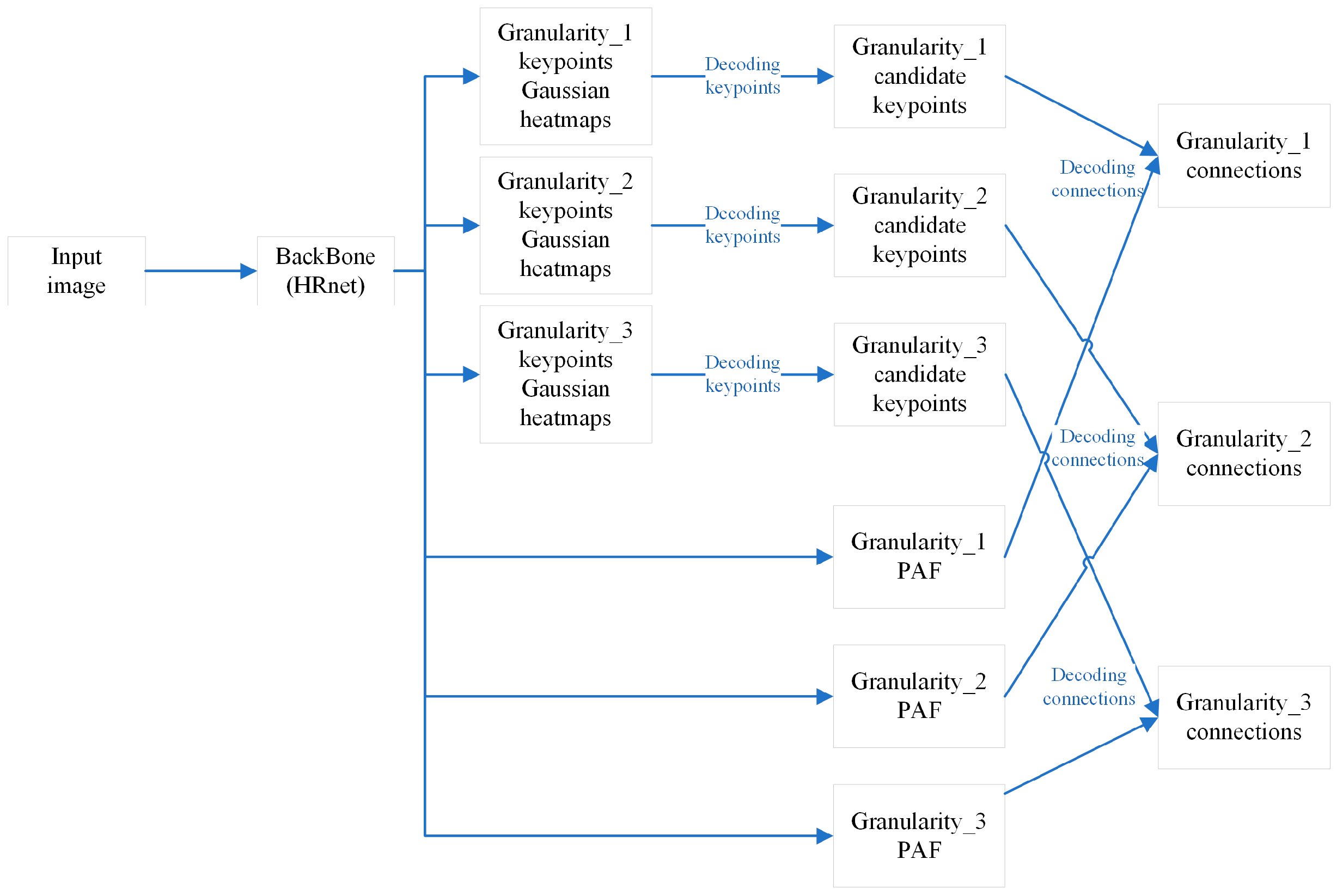

3.2. Network

3.2.1. HRNet Backbone

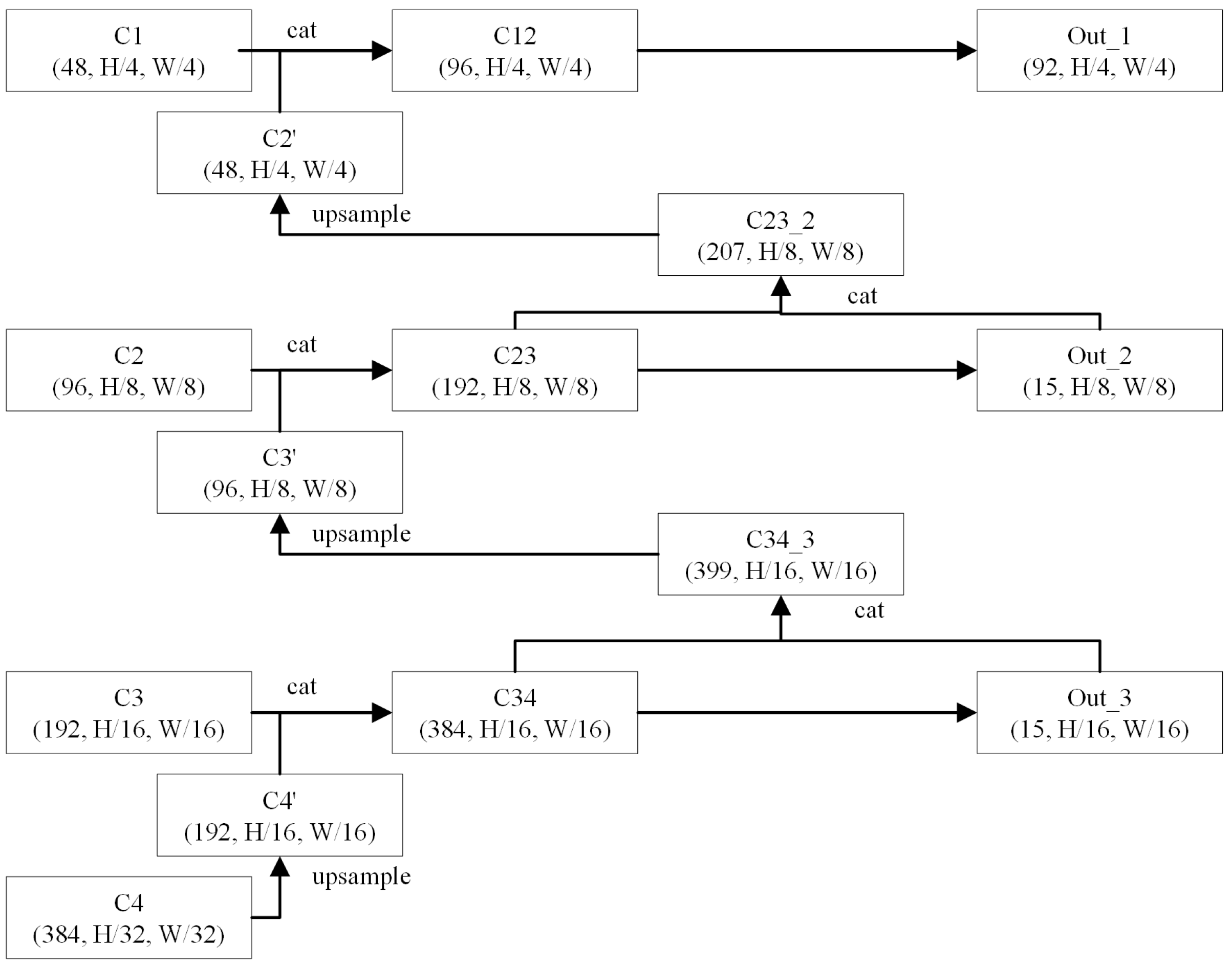

3.2.2. Network’s Neck

3.2.3. Loss Function

3.2.4. Parameters

4. Experiments and Analysis

4.1. Implementation Details

4.2. Evaluation Metrics

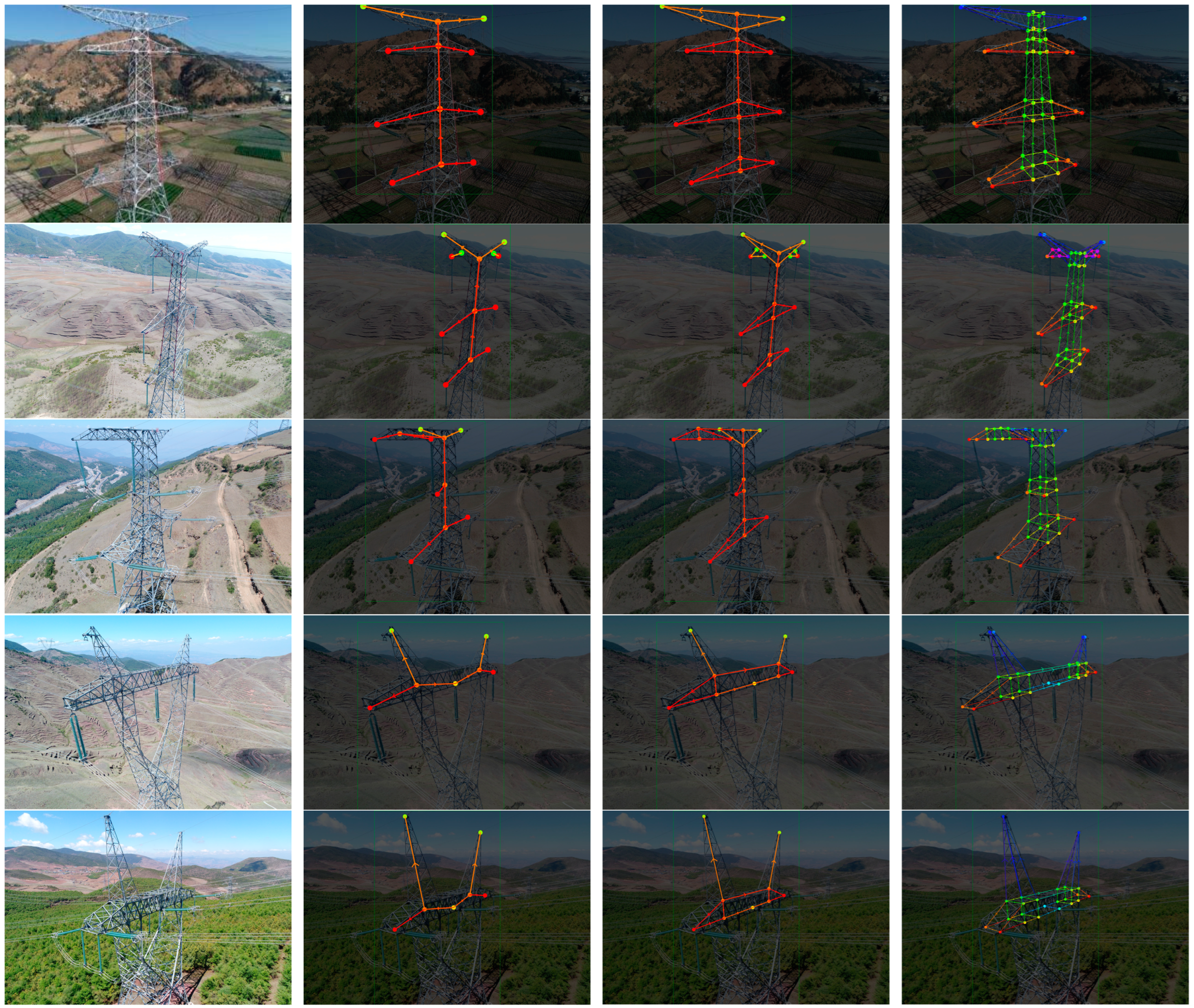

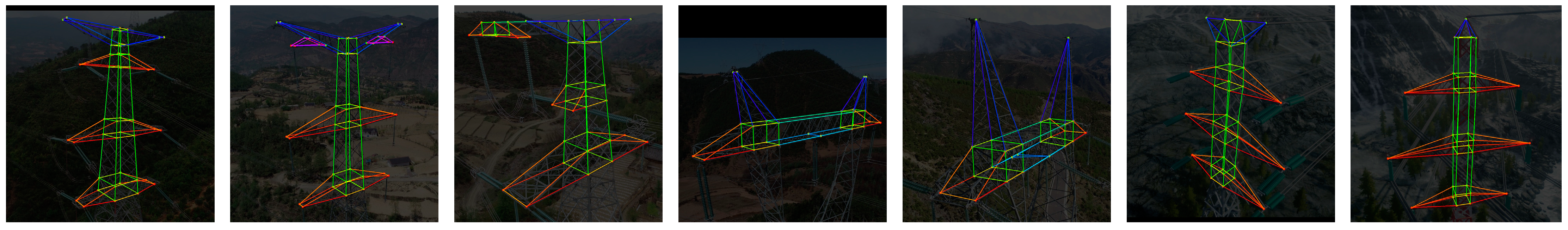

4.3. Experimental Results

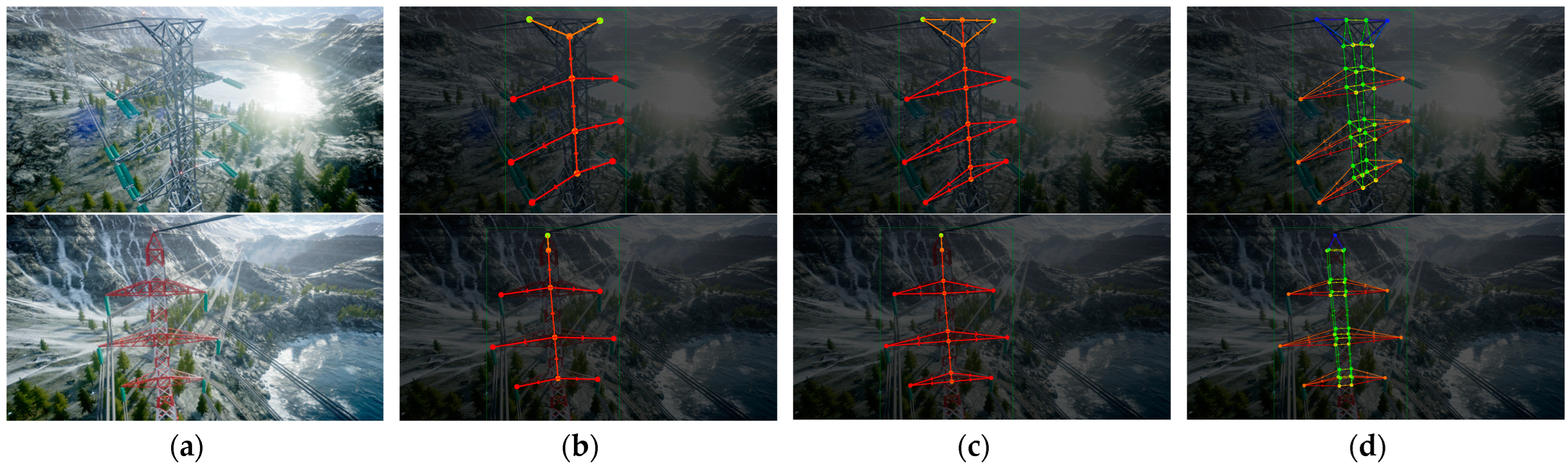

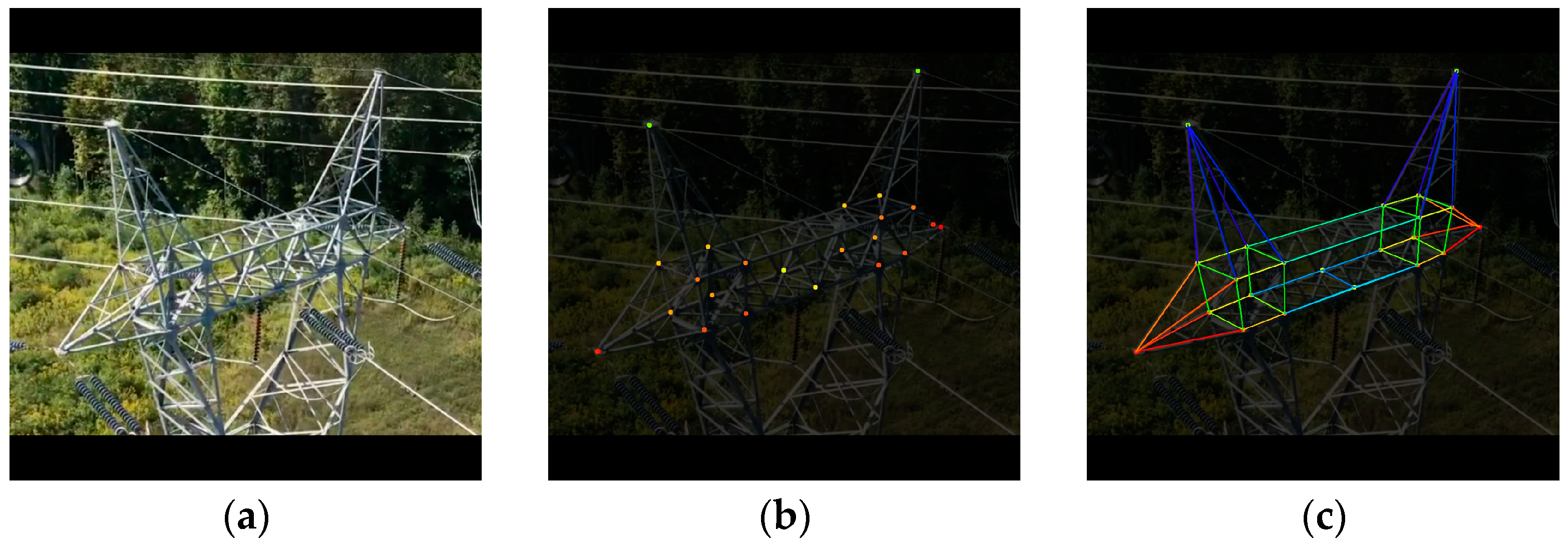

4.4. Test on Images Not Included in the Dataset

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Tower Type | Number of Images | Number of Partial Images | Granularity-1 Keypoints | Granularity-1 Connections | Granularity-2 Keypoints | Granularity-2 Connections | Granularity-3 Keypoints | Granularity-3 Connections |
|---|---|---|---|---|---|---|---|---|
| Drum Tower | 139 | 646 | 12 | 11 | 16 | 23 | 48 | 100 |
| Sheep Horn Tower | 245 | 473 | 13 | 10 | 18 | 21 | 48 | 92 |
| Zigzag Tower | 148 | 413 | 11 | 10 | 15 | 22 | 46 | 98 |
| Wine-Glass-1 Tower | 438 | \ | 7 | 6 | 9 | 11 | 26 | 54 |
| Wine-Glass-2 Tower | 173 | \ | 7 | 6 | 9 | 11 | 26 | 54 |
| UE-1 Tower | 142 | \ | 12 | 11 | 16 | 23 | 48 | 100 |
| UE-2 Tower | 100 | \ | 11 | 10 | 14 | 20 | 46 | 94 |

| OKS Threshold | AP1 | AR1 | AF1 | AP2 | AR2 | AF2 | AP3 | AR3 | AF3 |
|---|---|---|---|---|---|---|---|---|---|
| 0.5 | 0.9979 | 0.9969 | 0.9974 | 0.9927 | 0.9813 | 0.9870 | 0.9608 | 0.9719 | 0.9663 |
| 0.75 | 0.9961 | 0.9951 | 0.9956 | 0.9871 | 0.9757 | 0.9814 | 0.8712 | 0.8814 | 0.8763 |

| OKS Threshold | AP_CN | AR_CN | AF_CN |
|---|---|---|---|
| 0.5 | 0.9380 | 0.9228 | 0.9304 |
| 0.75 | 0.8049 | 0.7918 | 0.7983 |
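The AF values in the two OKS tables above are numerically consistent with the harmonic mean of the corresponding AP and AR values, that is, an F1-style score. This reading is inferred from the reported numbers and is an assumption rather than a definition taken from the text; the helper name `f_score` is hypothetical. A minimal check on a few of the reported rows:

```python
def f_score(precision, recall):
    """Harmonic mean of precision and recall (assumed definition of AF)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (AP, AR, reported AF) triples copied from the tables above.
reported = {
    "granularity-1, OKS 0.5": (0.9979, 0.9969, 0.9974),
    "granularity-3, OKS 0.75": (0.8712, 0.8814, 0.8763),
    "connections, OKS 0.5": (0.9380, 0.9228, 0.9304),
    "connections, OKS 0.75": (0.8049, 0.7918, 0.7983),
}

# Largest gap between the recomputed and the reported AF over these rows.
max_gap = max(abs(f_score(ap, ar) - af) for ap, ar, af in reported.values())

for name, (ap, ar, af) in reported.items():
    print(f"{name}: recomputed {f_score(ap, ar):.4f}, reported {af:.4f}")
```

For these rows the recomputed and reported values differ only in the fourth decimal place, which is compatible with rounding of the published AP and AR values.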

| Tower Type | AP1 | AR1 | AF1 | AP2 | AR2 | AF2 | AP3 | AR3 | AF3 | AP_CN | AR_CN | AF_CN |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Drum Tower | 0.9969 | 0.9976 | 0.9973 | 0.9959 | 0.9900 | 0.9929 | 0.9638 | 0.9749 | 0.9693 | 0.9434 | 0.9480 | 0.9457 |
| Sheep Horn Tower | 1.0000 | 1.0000 | 1.0000 | 0.9863 | 0.9863 | 0.9863 | 0.9912 | 0.9974 | 0.9943 | 0.9875 | 0.9904 | 0.9890 |
| Zigzag Tower | 0.9952 | 0.9904 | 0.9928 | 0.9902 | 0.9719 | 0.9810 | 0.9158 | 0.9381 | 0.9269 | 0.8888 | 0.8730 | 0.8808 |
| Wine-Glass-1 Tower | 1.0000 | 1.0000 | 1.0000 | 0.9962 | 0.9923 | 0.9942 | 0.9894 | 0.9929 | 0.9912 | 0.9857 | 0.9670 | 0.9763 |
| Wine-Glass-2 Tower | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 0.9967 | 0.9984 | 0.9652 | 0.9717 | 0.9684 | 0.9463 | 0.9020 | 0.9236 |
| UE-1 Tower | 1.0000 | 1.0000 | 1.0000 | 0.9941 | 0.9970 | 0.9955 | 0.8936 | 0.8919 | 0.8928 | 0.8407 | 0.7438 | 0.7893 |
| UE-2 Tower | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 0.9966 | 0.9983 | 0.8979 | 0.9099 | 0.9039 | 0.8443 | 0.7665 | 0.8035 |

| Methods | AF_0.5 | AF_0.75 | AF_CN_0.5 | AF_CN_0.75 |
|---|---|---|---|---|
| PCT | 0.9419 | 0.8123 | 0.8832 | 0.7020 |
| Soft-gated Skip Connections | 0.9323 | 0.8010 | 0.8764 | 0.6910 |
| Cascade Feature Aggregation | 0.9310 | 0.7888 | 0.8841 | 0.6902 |
| TransPose | 0.9252 | 0.7615 | 0.8632 | 0.6606 |
| Ours | 0.9663 | 0.8763 | 0.9304 | 0.7983 |

| HRNet | Three Granularity with PAF | Intermediate Supervision | AF_0.5 | AF_0.75 | AF_CN_0.5 | AF_CN_0.75 |
|---|---|---|---|---|---|---|
| √ | | | 0.9215 | 0.7853 | 0.8738 | 0.6836 |
| √ | √ | | 0.9459 | 0.8451 | 0.9127 | 0.7544 |
| √ | √ | √ | 0.9663 | 0.8763 | 0.9304 | 0.7983 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Huo, Y.; Dai, X.; Tang, Z.; Xiao, Y.; Zhang, Y.; Fang, X. A Three-Granularity Pose Estimation Framework for Multi-Type High-Voltage Transmission Towers Using Part Affinity Fields (PAFs). Energies 2025, 18, 488. https://doi.org/10.3390/en18030488
