Reinforcement Learning for Optimizing Renewable Energy Utilization in Buildings: A Review on Applications and Innovations

Abstract

1. Introduction

1.1. Motivation

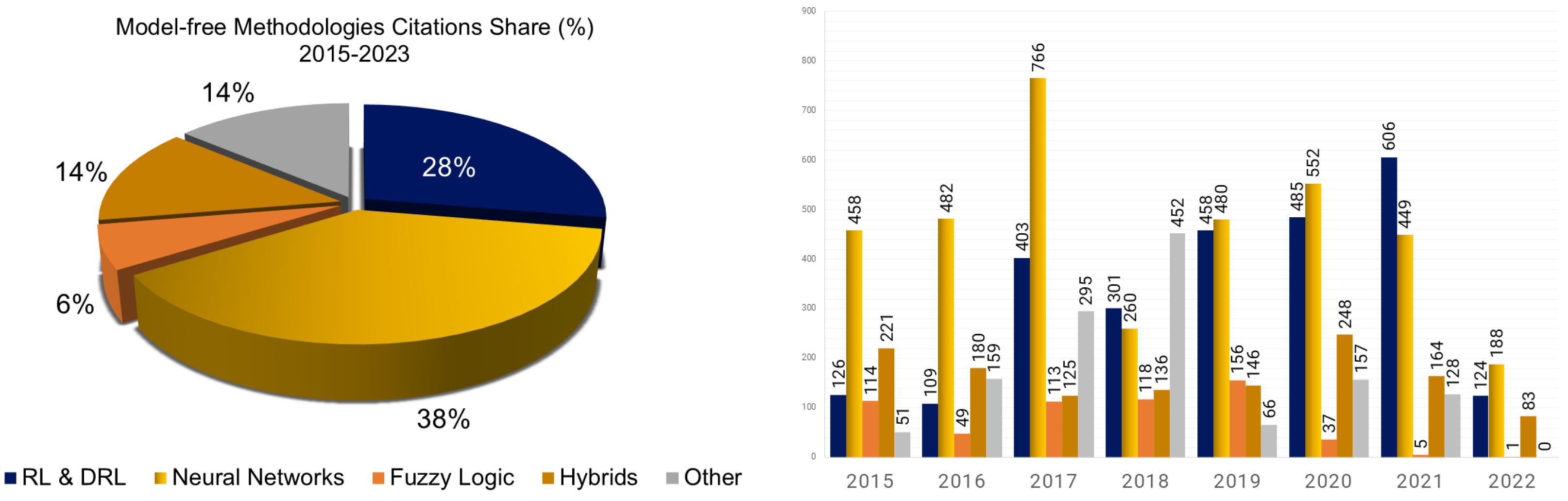

1.2. Literature Analysis Approach

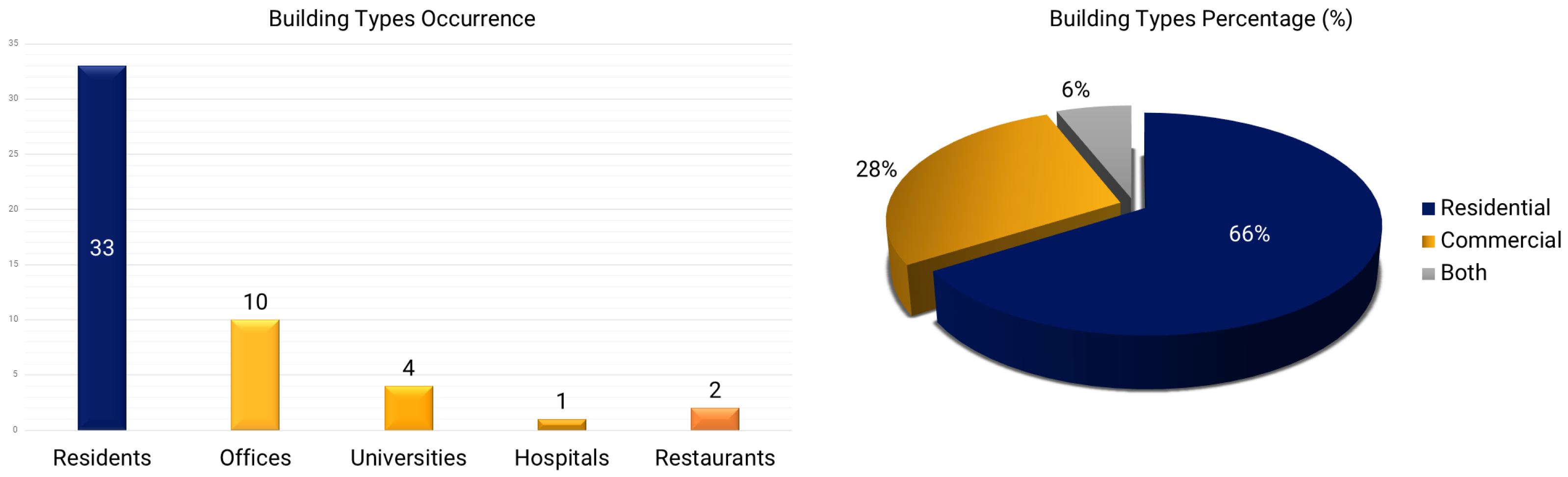

- Article Selection: A rigorous selection process was conducted over peer-reviewed journal articles and conference papers indexed in Scopus and Web of Science (WoS) to ensure quality and reliability. An initial pool of over 300 papers was screened based on their abstracts, and the most relevant studies were shortlisted for detailed analysis. More specifically, the work followed a multi-step quality assessment process based on the following criteria: Citation Impact (studies with at least 10 citations, excluding self-citations, were selected to ensure academic influence, as verified via Scopus at the time of selection); Relevance (only papers explicitly addressing RL-based control for RES-integrated BEMS were included, excluding studies on fault detection, generic demand-side management, or isolated RES control without RL); Peer Review (only peer-reviewed journal articles and high-quality conference proceedings, e.g., from IEEE, Elsevier, Springer, and MDPI, were considered, excluding preprints and non-peer-reviewed reports); Rigor (selected studies had to clearly describe the RL implementation, optimization objectives, and evaluation framework, with benchmark comparisons and experimental validation); Diversity (a balanced selection of value-based, policy-based, actor-critic, and hybrid RL methods was ensured, covering various building types, such as residential, commercial, university, and hospital buildings, as well as different RES-integrated BEMS setups).

- Keyword Research: A comprehensive keyword analysis was performed, incorporating terms such as “Reinforcement Learning in RES for buildings”, “RL control in BEMS”, and “RL-based energy management”, as well as other specific phrases related to RES integration. This approach aimed to capture the challenges and advancements in RL-based RES management both broadly and precisely.

- Data Collection: Each publication was systematically categorized based on the RL techniques applied to RES control, the integration of additional energy systems, the specific application context, and evaluations of the advantages, limitations, and practical implications within building energy management.

- Quality Assessment: A structured quality assessment was carried out, considering citation count, the academic contributions of the authors, and the methodological rigor of each study. More specifically, the citation threshold defined under Article Selection served as a measure of academic impact, ensuring the inclusion of studies with established influence; retrieving citation counts from Scopus and excluding self-citations improved the reliability of this measure. Preference was given to publications in high-impact journals and conferences. Moreover, the authors’ research background in RL, RES, and BEMS was evaluated based on their publications in top-tier journals, their contributions to widely adopted RL algorithms or methodological advancements in energy management, and their affiliations with leading research institutions or energy-focused labs. Studies from authors with a strong track record in RL-based energy optimization were prioritized to ensure credibility. This evaluation helped determine the relative significance of each work’s contribution to the field.

- Data Synthesis: Findings were synthesized into distinct categories, enabling clear comparisons across studies and facilitating a holistic understanding of the evolving landscape of RL-based control for RES in buildings.

1.3. Previous Work

1.4. Contribution and Novelty

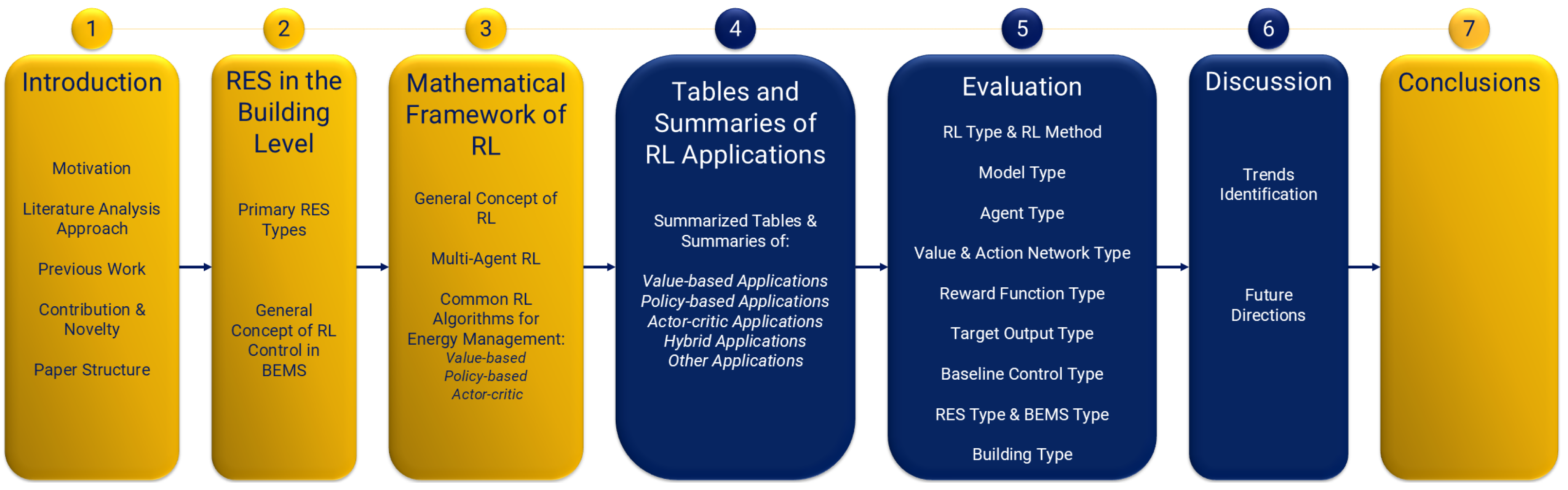

1.5. Paper Structure

2. Renewable Energy Systems at the Building Level

2.1. Primary RES Types

- Solar Photovoltaic Systems: PV systems convert sunlight directly into electricity through rooftop- or facade-mounted solar panels. Their widespread adoption is driven by decreasing costs and ease of installation.

- Solar Water Heating Systems: Utilizing solar energy to produce heat, SWH systems are commonly used for water and space heating. They operate through solar collectors that absorb sunlight and transfer heat to a working fluid, which then supplies thermal energy to the building’s water heating or HVAC system.

- Wind Turbine Systems: Small-scale WT can be installed near buildings to harness wind energy for electricity generation. However, their feasibility is highly dependent on local wind conditions, zoning restrictions, and structural integration.

- Geothermal Heat Pumps: Also known as ground-source heat pumps, these systems leverage the earth’s stable underground temperature for heating and cooling. By circulating a heat-exchange fluid through buried pipes, they provide an energy-efficient alternative to conventional HVAC systems.

- Biomass Energy Systems: Biomass systems convert organic materials—such as wood pellets, agricultural residues, or other bio-based fuels—into heat or electricity. They are particularly advantageous in regions with abundant biomass resources.

2.2. General Concept of Reinforcement Learning Control in BEMS

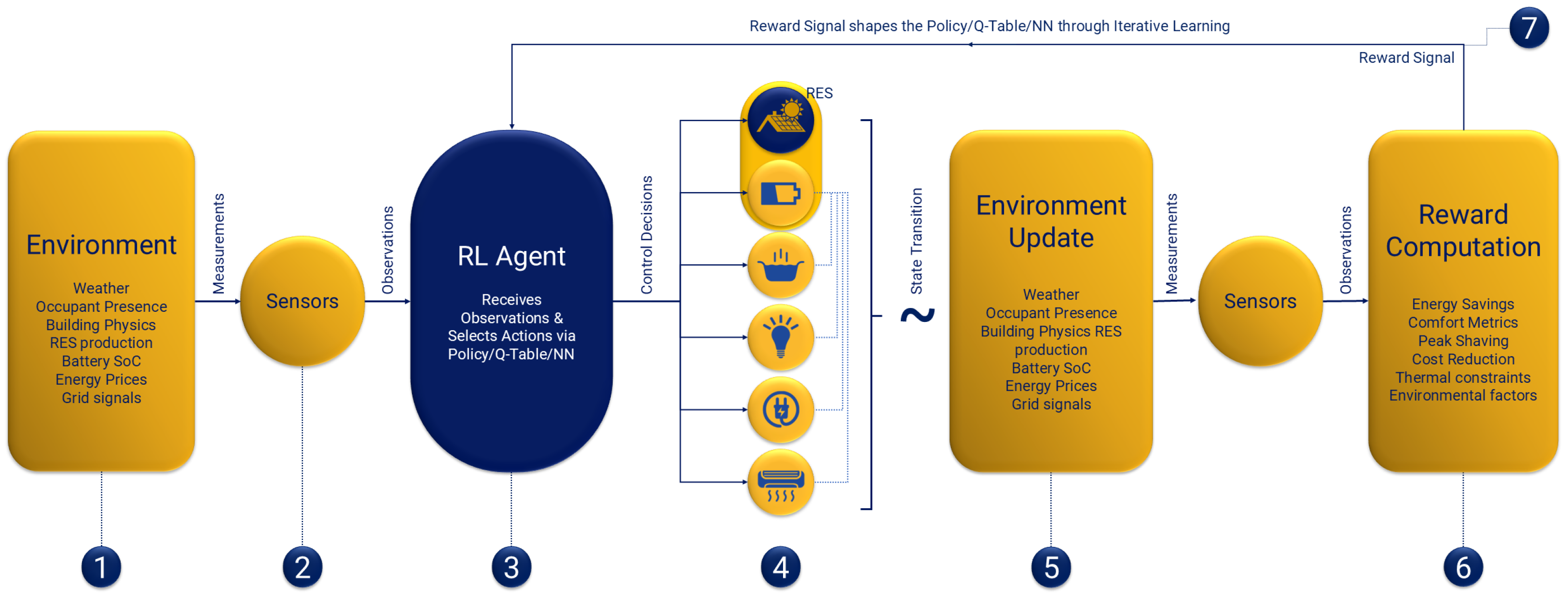

2.3. General Concept of Reinforcement Learning Control in BEMS

- Environment: The environment represents all external factors that the RL agent does not directly control but must respond to and account for. This includes both indoor and outdoor conditions, such as weather, occupant presence, building physics, PV/wind energy production, and battery states, as well as external signals like electricity prices and demand-response requests. Additionally, it encompasses constraints imposed by the power grid or regulatory frameworks. In essence, the environment encapsulates the entire building system and its dynamic interactions with external influences over time.

- Sensors: Sensors play a crucial role in collecting real-time data on the state of the environment, providing the RL agent with the necessary observations for decision-making. In a building context, this typically includes measurements of indoor temperature, occupancy, energy consumption, PV/wind energy output, and battery state of charge. Sensor inputs can originate from physical devices, such as temperature sensors, occupancy detectors, and power meters, or from virtual data streams, including electricity price signals and weather forecasts.

- RL Agent: The RL agent serves as the intelligent decision-making entity, processing environmental observations and selecting optimal control actions to enhance energy efficiency, reduce costs, and maintain occupant comfort. Depending on the RL approach, the agent’s learned policy may be represented using a Q-table (in traditional RL) or an artificial neural network (ANN) in DRL. The agent makes key operational decisions, such as adjusting HVAC setpoints, scheduling battery storage operations, managing EV charging, and optimizing renewable energy dispatch.

- Control Decision Application: Based on the learned policy, the RL agent applies its selected control actions in real time to optimize the building’s energy management. These decisions might involve regulating HVAC settings to maintain indoor comfort while minimizing energy consumption, scheduling battery charging and discharging to maximize renewable self-consumption and reduce peak loads, or managing PV output, determining whether to store excess solar energy, use it immediately, or feed it into the grid based on real-time electricity prices and demand.

- Environment Update: After the RL agent implements its decisions, the environment undergoes an update, reflecting the impact of the chosen actions. This includes changes in building physics, occupant behavior, weather conditions, and grid interactions, which collectively determine the new environmental state. Mathematically, this transition captures how the building and its systems evolve over time in response to both internal control strategies and external dynamics.

- Reward Computation: Following the environment update, a numerical reward is calculated to assess the effectiveness of the RL agent’s actions in the given timestep. The reward function may consider multiple factors, such as energy cost savings, occupant comfort levels, peak load reduction, adherence to thermal constraints, and environmental impact.

- Reward Signal Feedback: Finally, the computed reward is fed back into the RL algorithm, enabling the agent to refine its policy (whether through QL, an ANN, or another RL approach). By continuously interacting with the environment, learning from past actions, and adjusting its strategy accordingly, the RL agent progressively improves its decision-making to better achieve objectives such as minimizing costs, maintaining thermal comfort, and maximizing on-site renewable energy utilization.
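To make the seven-step interaction cycle above concrete, the following Python sketch wires a toy environment, a sensor snapshot, an agent, and a reward function into a single loop. Everything in it is a hypothetical illustration: the `ToyBuildingEnv` class, its crude single-zone thermal model, PV profile, tariff, and battery size, as well as the comfort band and weights in `compute_reward`, are assumed values not taken from any reviewed study, and `RandomAgent` merely stands in for a learning agent.

```python
import random
from dataclasses import dataclass


@dataclass
class BuildingState:
    hour: int           # time of day, 0..23
    indoor_temp: float  # degC
    soc: float          # battery state of charge, 0..1
    pv_kw: float        # current PV output, kW
    price: float        # electricity price, EUR/kWh


def compute_reward(grid_kwh, price, indoor_temp, comfort=(20.0, 23.0), w_cost=1.0, w_comfort=0.5):
    """Reward computation: negative weighted sum of energy cost and comfort violation (assumed weights)."""
    cost = grid_kwh * price
    low, high = comfort
    discomfort = max(0.0, low - indoor_temp) + max(0.0, indoor_temp - high)
    return -(w_cost * cost + w_comfort * discomfort)


class ToyBuildingEnv:
    """Hypothetical single-zone building with PV and a battery (illustrative numbers only)."""

    CAPACITY_KWH = 10.0
    BASE_LOAD_KW = 1.5

    def __init__(self, seed=0):
        self.rng = random.Random(seed)
        self.state = BuildingState(hour=0, indoor_temp=21.0, soc=0.5, pv_kw=0.0, price=0.20)

    def observe(self):
        # Sensors: return a snapshot (copy) of the current measurements.
        return BuildingState(**vars(self.state))

    def step(self, hvac_kw, battery_kw):
        s = self.state
        price_now = s.price
        # Control decision application: battery_kw > 0 charges, < 0 discharges,
        # clipped so that the state of charge stays within [0, 1].
        energy = max(-s.soc * self.CAPACITY_KWH, min(battery_kw, (1.0 - s.soc) * self.CAPACITY_KWH))
        s.soc = min(1.0, max(0.0, s.soc + energy / self.CAPACITY_KWH))
        # Grid import over a 1-hour step (kW == kWh); PV export is not credited in this toy.
        grid_kwh = max(0.0, self.BASE_LOAD_KW + hvac_kw + energy - s.pv_kw)
        # Environment update: thermal drift toward 15 degC plus HVAC heating,
        # then the exogenous signals (time, PV, price) advance.
        s.indoor_temp += 0.1 * (15.0 - s.indoor_temp) + 0.5 * hvac_kw
        s.hour = (s.hour + 1) % 24
        s.pv_kw = 4.0 * (1.0 - abs(s.hour - 12) / 6.0) if 6 <= s.hour <= 18 else 0.0
        s.price = 0.30 if 17 <= s.hour <= 21 else 0.15 + 0.05 * self.rng.random()
        # Reward computation on the updated state.
        reward = compute_reward(grid_kwh, price_now, s.indoor_temp)
        return self.observe(), reward


class RandomAgent:
    """Placeholder policy; a learning agent (e.g., QL or DRL) would refine its policy in learn()."""

    def __init__(self, seed=0):
        self.rng = random.Random(seed)
        self.total_reward = 0.0

    def act(self, obs):
        # Returns (HVAC power in kW, battery power in kW).
        return self.rng.choice([0.0, 1.0, 2.0]), self.rng.choice([-2.0, 0.0, 2.0])

    def learn(self, obs, action, reward, next_obs):
        # Reward signal feedback: here it is only accumulated.
        self.total_reward += reward


def run_episode(env, agent, steps=24):
    obs = env.observe()
    for _ in range(steps):
        action = agent.act(obs)                   # agent decides
        next_obs, reward = env.step(*action)      # apply, update, compute reward
        agent.learn(obs, action, reward, next_obs)
        obs = next_obs
    return agent.total_reward


env = ToyBuildingEnv(seed=1)
agent = RandomAgent(seed=2)
print(run_episode(env, agent, steps=48))  # cumulative (non-positive) reward over two days
```

Replacing `RandomAgent.learn` with a QL or DRL update is what turns this loop into RL; the environment and reward function stay unchanged.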

3. Mathematical Framework of Reinforcement Learning

3.1. The General Concept of RL

3.2. Multi-Agent Reinforcement Learning

- One centralized critic with separate policy networks: A single critic processes global or aggregated information, while each agent retains its own policy.

- Fully separate critics and policies: Each agent independently learns both value functions and policies, with no shared parameters or central critic.

- A shared critic and policy across all agents: All agents operate under the same critic and policy, effectively functioning as a unified controller.

- Hybrid/partially shared: Certain components (e.g., parts of the critic or specific layers in the policy networks) are shared, while others remain agent-specific.

- Centralized Training, Decentralized Execution (CTDE): Agents leverage global or centralized information during learning but act independently in real-time.

- Fully Decentralized Training: Each agent learns solely from its own local observations and experiences, with no shared critic or global oversight.

- Fully Centralized Training: A single entity holds and updates all agent parameters, effectively treating the multi-agent environment as one large system.

- Mixed/Hybrid Training: Combines centralized feedback with decentralized updates, or vice versa, to balance local autonomy and global coordination.

- Implicit Coordination: Agents rely on shared objectives, rewards, or a global critic but do not communicate directly.

- Explicit Coordination: Agents exchange information or messages, enabling direct negotiation or data sharing for coordinated decision-making.

- Emergent Coordination: Cooperative behavior arises through repeated interactions within the environment, without any explicit mechanism or communication.

- Hierarchical Coordination: Leader-follower roles or multi-level decision architectures establish structured control and optimize collaborative objectives.
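The architectural options above differ mainly in what information each network receives. The minimal Python sketch below, assuming three agents with hypothetical local observations (indoor temperature, battery state of charge, PV output, price) and one scalar action each, builds the input vectors for a decentralized actor, a fully separate critic, a centralized (CTDE) critic, and a shared policy that appends a one-hot agent ID (a common convention, assumed here). No learning is performed; only the information flow is shown.

```python
from typing import List, Sequence


def actor_input(local_obs: Sequence[float]) -> List[float]:
    # Decentralized execution: each actor acts on its own observation only.
    return list(local_obs)


def independent_critic_input(local_obs: Sequence[float], local_action: Sequence[float]) -> List[float]:
    # Fully separate critics: each agent's critic sees local data only.
    return list(local_obs) + list(local_action)


def centralized_critic_input(all_obs: Sequence[Sequence[float]],
                             all_actions: Sequence[Sequence[float]]) -> List[float]:
    # CTDE: during training, one critic sees every agent's observation and action.
    joint: List[float] = []
    for obs in all_obs:
        joint.extend(obs)
    for act in all_actions:
        joint.extend(act)
    return joint


def shared_policy_input(local_obs: Sequence[float], agent_id: int, n_agents: int) -> List[float]:
    # Shared policy: one network for all agents; a one-hot ID lets it tell agents apart.
    one_hot = [1.0 if i == agent_id else 0.0 for i in range(n_agents)]
    return list(local_obs) + one_hot


# Hypothetical observations: [indoor temp degC, battery SOC, PV kW, price EUR/kWh]
observations = [[21.0, 0.5, 3.2, 0.18], [22.5, 0.8, 0.0, 0.18], [20.1, 0.2, 1.1, 0.18]]
actions = [[1.0], [-0.5], [0.0]]  # e.g., battery power setpoints in kW

print(len(actor_input(observations[0])))                           # 4
print(len(independent_critic_input(observations[0], actions[0])))  # 5
print(len(centralized_critic_input(observations, actions)))        # 15
print(len(shared_policy_input(observations[2], 2, 3)))             # 7
```

The centralized critic's input grows linearly with the number of agents, which is one source of the scalability and communication overhead associated with centralized critics.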

3.3. Common RL Algorithms for Energy Management

3.3.1. Value-Based Algorithms

- Q-Learning: QL is an off-policy algorithm that learns the optimal action-value function regardless of the policy being followed. It is widely used for RES control due to its simplicity and its ability to converge to the optimal policy without requiring a model of the environment, provided that exploration is sufficient and the learning rate is suitably chosen [75]. However, QL struggles with high-dimensional state spaces, which leads to slow convergence [76]; it is therefore primarily applied in small-scale RES systems, such as individual building HVAC control or battery storage management. QL learns the value of state-action pairs, $Q(s,a)$, through iterative updates based on the Bellman equation:

  $$Q(s,a) \leftarrow Q(s,a) + \alpha \left[ r + \gamma \max_{a'} Q(s',a') - Q(s,a) \right]$$

  where $\alpha$ is the learning rate, $\gamma$ is the discount factor, $r$ is the reward, and $s'$ is the next state. QL performs well in discrete action spaces and has been applied to HVAC optimization in buildings [77].

- Deep Q-Networks: DQN addresses the limitations of QL by using deep ANNs to approximate the Q-function, which enables it to handle complex environments with large, high-dimensional state spaces [78]. DQN leverages experience replay and target networks to stabilize learning [66]. This makes DQN suitable for larger RES configurations where multi-variable control is required, such as managing distributed energy resources in commercial buildings. However, DQN is computationally intensive and requires significant tuning, making deployment in real-time systems challenging. The network parameters $\theta$ are updated by minimizing the following loss:

  $$L(\theta) = \mathbb{E}_{(s,a,r,s') \sim \mathcal{D}} \left[ \left( r + \gamma \max_{a'} Q(s',a';\theta^{-}) - Q(s,a;\theta) \right)^{2} \right]$$

  where $\gamma$ is the discount factor, $\mathcal{D}$ is the experience replay buffer, and $\theta^{-}$ denotes the parameters of the target network, which are periodically copied from $\theta$.
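The tabular QL update, $Q(s,a) \leftarrow Q(s,a) + \alpha[r + \gamma \max_{a'} Q(s',a') - Q(s,a)]$ with learning rate $\alpha$ and discount factor $\gamma$, can be exercised on a deliberately tiny battery-arbitrage problem. In this Python sketch, which is illustrative and not drawn from the cited studies, an episode has one cheap and one expensive hour, the building draws 1 kWh per hour, and a 1 kWh battery can idle, charge, or discharge; the tariff, load, and hyperparameters (`alpha`, `gamma`, `epsilon`) are assumed values. A DQN would keep the same target but replace the table with a neural network and a separate target network.

```python
import random

PRICES = [0.10, 0.50]   # hypothetical tariff: cheap hour, then expensive hour (EUR/kWh)
LOAD_KWH = 1.0          # building load in each hour
ACTIONS = ("idle", "charge", "discharge")


def step(hour, soc, action):
    """Return (next_soc, reward). The battery holds exactly 1 kWh; infeasible moves act as idle."""
    grid = LOAD_KWH
    if action == "charge" and soc == 0:
        soc, grid = 1, grid + 1.0
    elif action == "discharge" and soc == 1:
        soc, grid = 0, grid - 1.0
    return soc, -PRICES[hour] * grid   # reward = negative energy cost


def train(episodes=2000, alpha=0.1, gamma=1.0, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(h, s, a): 0.0 for h in range(len(PRICES)) for s in range(2) for a in ACTIONS}
    for _ in range(episodes):
        soc = 0
        for hour in range(len(PRICES)):
            # Epsilon-greedy exploration (QL is off-policy: it learns the greedy policy anyway).
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(hour, soc, a)])
            next_soc, reward = step(hour, soc, action)
            last = hour == len(PRICES) - 1
            best_next = 0.0 if last else max(q[(hour + 1, next_soc, a)] for a in ACTIONS)
            # Bellman update toward r + gamma * max_a' Q(s', a')
            q[(hour, soc, action)] += alpha * (reward + gamma * best_next - q[(hour, soc, action)])
            soc = next_soc
    return q


def greedy(q, hour, soc):
    return max(ACTIONS, key=lambda a: q[(hour, soc, a)])


q_table = train()
print(greedy(q_table, 0, 0), greedy(q_table, 1, 1))  # charge discharge
```

With these settings the greedy policy learns to charge in the cheap hour and discharge in the expensive one, for a cost of 0.2 instead of the 0.6 incurred by idling in both hours.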

3.3.2. Policy-Based Algorithms

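- Proximal Policy Optimization (PPO): PPO is primarily a policy-based approach because it directly optimizes the policy (the actor) by maximizing an objective function related to expected rewards. The policy is typically represented by a neural network that outputs action probabilities (for discrete actions) or the parameters of a distribution (for continuous actions). PPO improves stability by limiting the size of each policy update, thereby preventing drastic policy changes [79]. PPO has been effectively applied to BEMS, particularly for optimizing HVAC systems and renewable energy dispatch, and its balance between performance and computational efficiency makes it popular for large-scale applications [80]. Its clipped surrogate objective is:

  $$L^{CLIP}(\theta) = \mathbb{E}_t \left[ \min\left( \rho_t(\theta) \hat{A}_t,\ \mathrm{clip}\left(\rho_t(\theta), 1-\epsilon, 1+\epsilon\right) \hat{A}_t \right) \right], \qquad \rho_t(\theta) = \frac{\pi_\theta(a_t \mid s_t)}{\pi_{\theta_{old}}(a_t \mid s_t)}$$

  where $\rho_t(\theta)$ is the probability ratio between the new and old policies, $\hat{A}_t$ is the estimated advantage function, and $\epsilon$ is the clipping parameter.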
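PPO's clipped surrogate objective averages $\min(\rho_t \hat{A}_t, \mathrm{clip}(\rho_t, 1-\epsilon, 1+\epsilon)\hat{A}_t)$ over sampled steps, where $\rho_t$ is the ratio of the new to the old policy probability of the taken action and $\hat{A}_t$ its advantage estimate. The Python function below is a minimal, framework-free sketch of that computation from log-probabilities; the function name and the default $\epsilon = 0.2$ are assumptions, and in practice the objective is maximized with automatic differentiation in a deep learning library rather than evaluated by hand.

```python
import math
from typing import Sequence


def ppo_clip_objective(new_logp: Sequence[float], old_logp: Sequence[float],
                       advantages: Sequence[float], epsilon: float = 0.2) -> float:
    """Batch mean of the PPO clipped surrogate objective (a quantity to maximize)."""
    total = 0.0
    for lp_new, lp_old, adv in zip(new_logp, old_logp, advantages):
        ratio = math.exp(lp_new - lp_old)                        # rho_t(theta)
        clipped = min(max(ratio, 1.0 - epsilon), 1.0 + epsilon)  # clip(rho, 1-eps, 1+eps)
        total += min(ratio * adv, clipped * adv)                 # pessimistic (lower) bound
    return total / len(advantages)


# Two hypothetical samples: an action whose probability rose from 0.5 to 0.8 (advantage +1)
# and one whose probability fell from 0.5 to 0.25 (advantage -1).
new = [math.log(0.8), math.log(0.25)]
old = [math.log(0.5), math.log(0.5)]
print(round(ppo_clip_objective(new, old, [1.0, -1.0]), 6))  # 0.2
```

The example exercises both clipping cases: with a positive advantage the ratio is capped at $1+\epsilon$ (1.2 instead of 1.6), and with a negative advantage it is floored at $1-\epsilon$ (0.8 instead of 0.5), so the policy gains nothing from moving beyond the clip range in either direction.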

3.3.3. Actor-Critic Algorithms

- Deep Deterministic Policy Gradient: DDPG is an off-policy actor-critic algorithm that combines the strengths of policy gradient methods and QL. It is well suited for continuous action spaces, making it advantageous for applications such as battery energy storage control, where actions like charge/discharge rates are continuous [81]. DDPG’s major advantage is its ability to handle high-dimensional, continuous control tasks; however, it is sensitive to hyperparameters and prone to instability during training. The deterministic policy (actor) $\mu_\theta(s)$ is updated using gradients derived from the critic’s Q-function $Q_\phi(s,a)$:

  $$\nabla_\theta J(\theta) \approx \mathbb{E}_{s \sim \mathcal{D}} \left[ \nabla_a Q_\phi(s,a) \big|_{a=\mu_\theta(s)} \nabla_\theta \mu_\theta(s) \right]$$

  where $\mathcal{D}$ is the replay buffer from which states are sampled.

- Soft Actor-Critic (SAC): SAC maximizes the expected return augmented with a policy-entropy term, which encourages exploration and prevents premature convergence to suboptimal policies. SAC has proven effective in RES control for smart grids and microgrids, where the environment is highly dynamic and stochastic [82]. Its primary benefit lies in its robustness to environmental noise and its ability to achieve high performance across varying conditions, although it requires substantial computational resources. SAC is sample-efficient and well suited for real-time energy management applications, but it requires a careful choice of the entropy coefficient (temperature) $\alpha$, which weights the entropy term in the objective:

  $$J(\pi) = \sum_{t} \mathbb{E}_{(s_t,a_t) \sim \pi} \left[ r(s_t,a_t) + \alpha\, \mathcal{H}\left(\pi(\cdot \mid s_t)\right) \right]$$

- Advantage Actor-Critic: A2C is a synchronous, deterministic variant of the asynchronous advantage actor-critic (A3C) framework. It uses both a policy network (actor) to choose actions and a value network (critic) to evaluate them, optimizing both simultaneously to improve learning efficiency. In the context of RES control for buildings, A2C is particularly useful for tasks that involve sequential decision-making under uncertainty, such as demand-side energy management and dynamic load scheduling [83]. A2C’s key advantage is its training stability compared with purely policy-based or value-based methods: it leverages the advantage function to reduce the variance of policy-gradient estimates, which accelerates convergence. However, its performance may be sensitive to hyperparameter tuning, and it may struggle with very high-dimensional action spaces without additional enhancements. The advantage function is the difference between the value of taking action $a$ in state $s$ and the baseline value of that state:

  $$A(s,a) = Q(s,a) - V(s)$$

  The policy gradient is then used to maximize the expected reward with reduced variance:

  $$\nabla_\theta J(\theta) = \mathbb{E}_{\pi_\theta} \left[ \nabla_\theta \log \pi_\theta(a \mid s)\, A(s,a) \right]$$

  A2C is well suited for moderately complex RES applications but can require enhancements such as parallel training to scale to larger and more complex environments.
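The A2C advantage and policy-gradient update can be traced through single steps. The Python sketch below assumes a tabular softmax actor (`theta[s]` holds action logits) and a tabular critic (`values[s]`) instead of neural networks, and estimates the advantage with the common one-step temporal-difference form $A(s,a) \approx r + \gamma V(s') - V(s)$; the function names, learning rates, discount, and the two-action demo are illustrative assumptions. A full A2C implementation would batch such updates across synchronous parallel workers.

```python
import math
import random


def softmax(logits):
    m = max(logits)                       # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    z = sum(exps)
    return [e / z for e in exps]


def a2c_update(theta, values, s, a, r, s_next, done, gamma=0.99, lr_actor=0.1, lr_critic=0.5):
    """One-step A2C update for a tabular softmax actor theta[s] and a tabular critic values[s]."""
    # Advantage via the one-step TD estimate: A(s,a) ~ r + gamma * V(s') - V(s)
    target = r + (0.0 if done else gamma * values[s_next])
    advantage = target - values[s]
    # Actor: for a softmax policy, grad of log pi(a|s) w.r.t. the logits is onehot(a) - pi(.|s)
    probs = softmax(theta[s])
    for b in range(len(theta[s])):
        grad_logp = (1.0 if b == a else 0.0) - probs[b]
        theta[s][b] += lr_actor * advantage * grad_logp
    # Critic: move V(s) toward the TD target (semi-gradient step on the squared TD error)
    values[s] += lr_critic * advantage
    return advantage


rng = random.Random(0)
theta = {0: [0.0, 0.0]}     # logits for two actions in a single decision state
values = {0: 0.0, 1: 0.0}   # critic estimates; state 1 is terminal
for _ in range(200):
    probs = softmax(theta[0])
    a = 1 if rng.random() < probs[1] else 0   # sample on-policy
    r = float(a)                              # hypothetical: action 1 pays 1, action 0 pays 0
    a2c_update(theta, values, s=0, a=a, r=r, s_next=1, done=True)
print(softmax(theta[0]))    # probability mass shifts toward action 1
```

Because the critic's baseline lies between the two possible rewards, the rewarded action always receives a non-negative advantage and the other a non-positive one, so every update moves the policy toward action 1.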

4. Tables and Summaries of RL Applications

4.1. Summarized Tables

- Ref.: illustrating the reference application in the first column;

- Year: illustrating the publication year for each research application;

- Method: illustrating the specific RL algorithmic methodology applied in each work;

- Agent: illustrating the agent type of the concerned methodology (single-agent or multi-agent RL approach);

- BEMS: illustrating the combination of energy systems within the BEMS, including the RES technologies integrated into the energy mix (e.g., PV, ESS, TSS, SHW, WT, HVAC, HP, GHP, BIO);

- Residential: defining if the testbed application concerns a residential building control application with an “x”;

- Commercial: defining if the testbed application concerns a commercial building control application with an “x”;

- Simulation: defining if the testbed application concerns a simulative building control application with an “x”;

- Real-life: defining if the testbed application concerns a real-life building control application with an “x”;

- Citations: indicating the number of citations of each work, according to Scopus.

4.2. Summaries of Applications

5. Evaluation

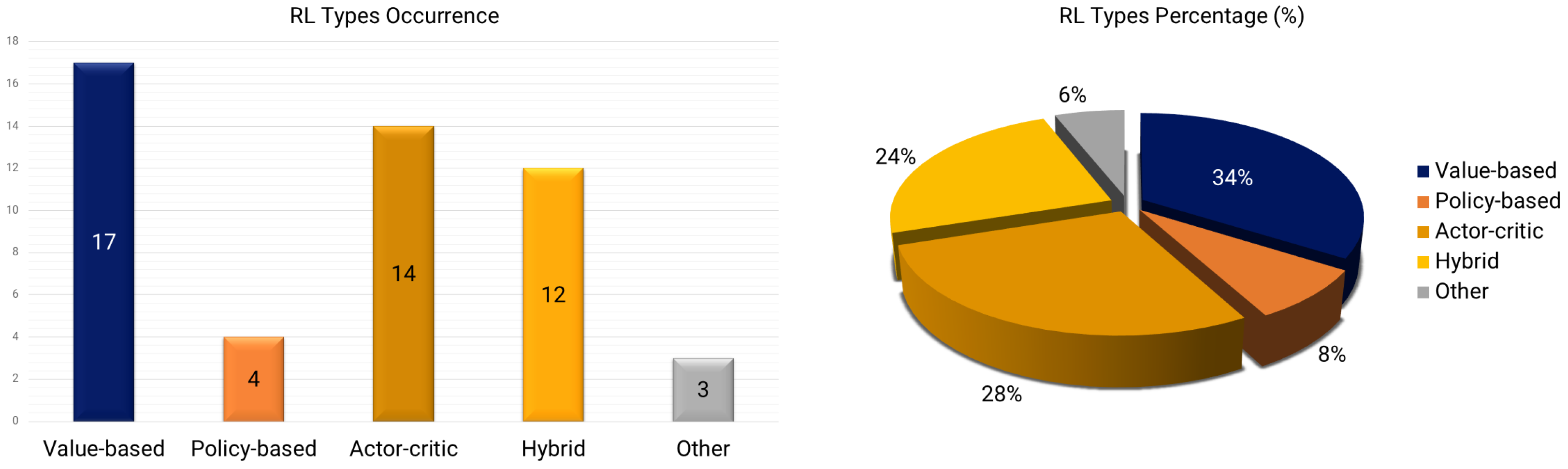

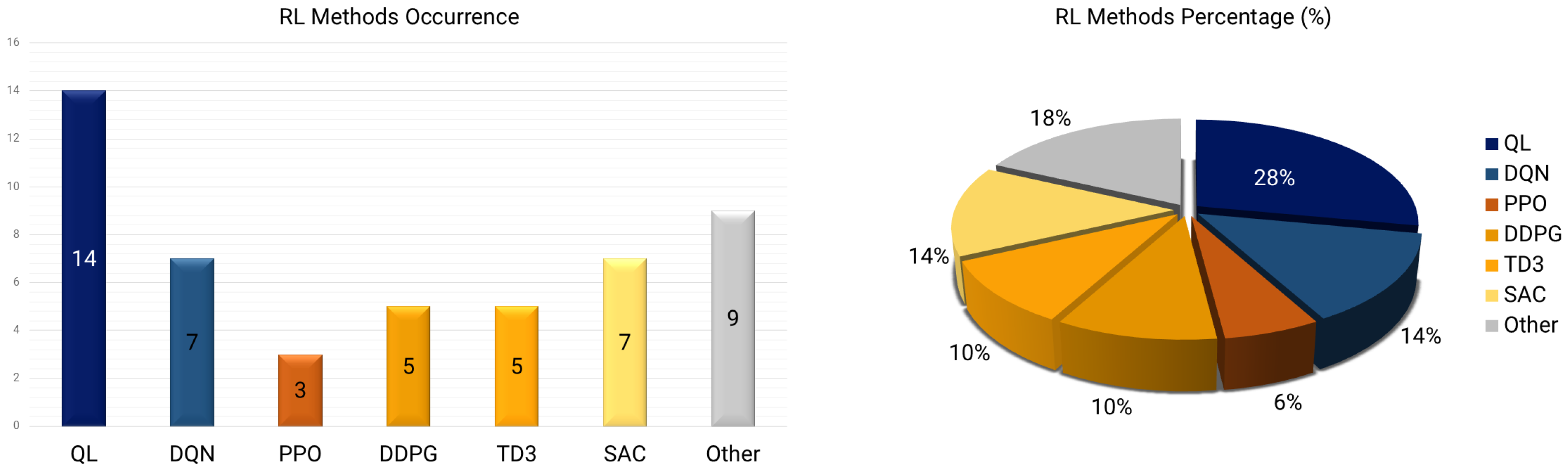

5.1. RL Types and RL Methods

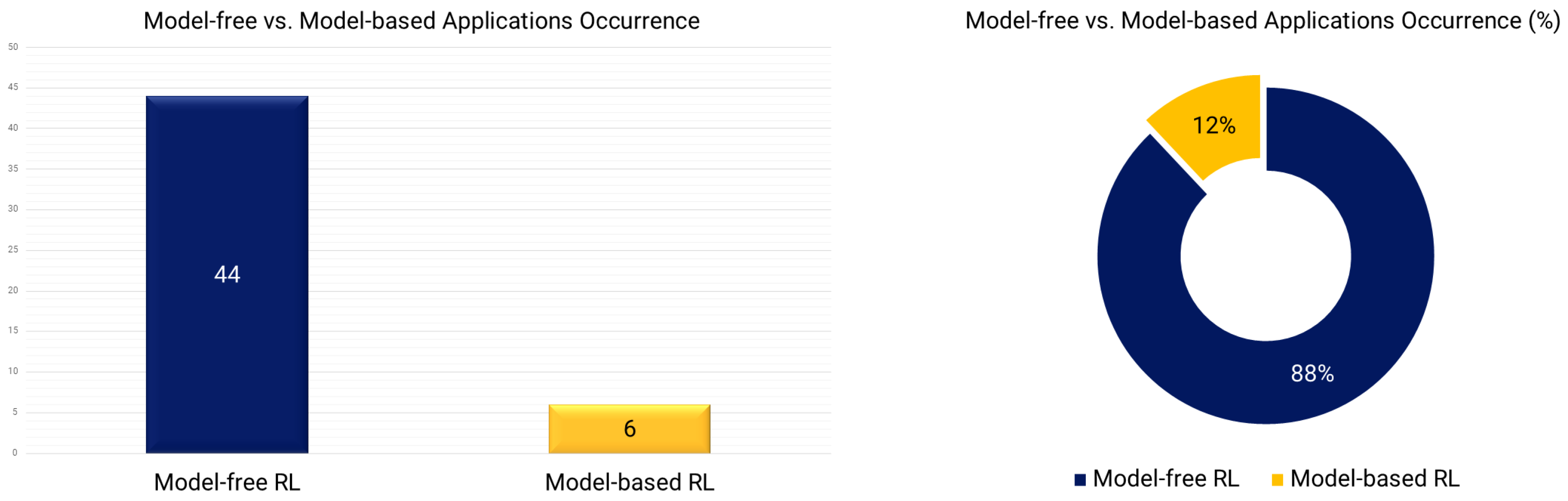

5.2. Model Types

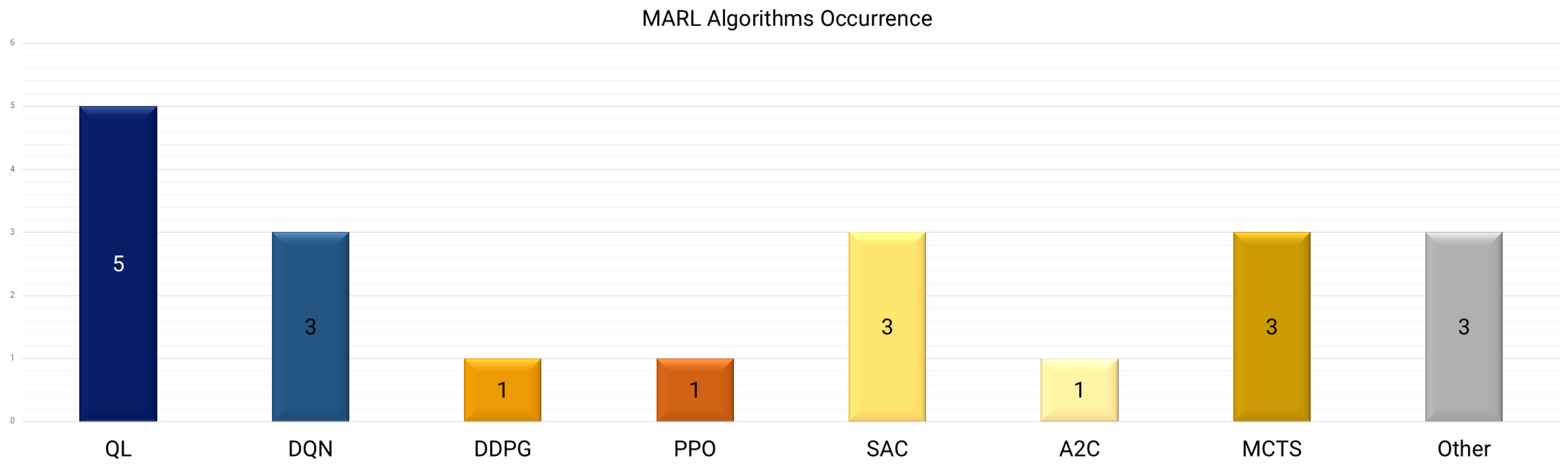

5.3. Agent Type

5.4. Value and Action Network Types

- Value Networks in MARL Applications: Value networks in MARL serve as the critic, helping agents estimate the expected return for state-action pairs. Two dominant approaches emerge in the existing research: fully decentralized critics, where each agent maintains its own independent Q-network, and centralized critics, which leverage global information to enhance coordination. Studies such as [84,90,91] demonstrate that fully separate critics are advantageous when agents operate independently with minimal reliance on global coordination. Such approaches enable robust, distributed learning but often suffer from non-stationarity, as agents adjust their strategies independently, causing instability in learning dynamics. In contrast, centralized critics, as employed in [85,102,109,116,130], integrate joint state-action evaluations to improve cooperation between agents. The ability to assess system-wide performance leads to more stable and coordinated energy management strategies. However, such practice may introduce scalability challenges, as sharing global state information may be computationally intensive and require significant communication overhead. The trade-off between decentralized and centralized critics is thus driven by the scale of deployment and the necessity for real-time agent collaboration.

- Action Networks in MARL Applications: Action networks, or policy networks, determine how agents select actions. The choice between independent and coordinated policy networks is crucial in defining MARL strategies for BEMS. In fully decentralized settings, as seen in [84,90,91,97,115], each agent learns and optimizes its policy independently, often through deep QL or actor-critic models. This method ensures resilience and local autonomy, allowing each agent to optimize its own objectives. However, the lack of explicit cooperation mechanisms may lead to conflicts in decision-making, where independent policies may not align with overall energy efficiency goals. On the other hand, coordinated policy networks, found in [85,102,116,131], introduce mechanisms to balance local autonomy with system-wide optimization. Such approaches often adopt a centralized training, decentralized execution (CTDE) paradigm, allowing agents to learn with shared knowledge while maintaining independent execution capabilities. The integration of federated RL [109] or shared reward functions [131] further enhances cooperative learning by aligning incentives among distributed agents without imposing direct communication constraints.

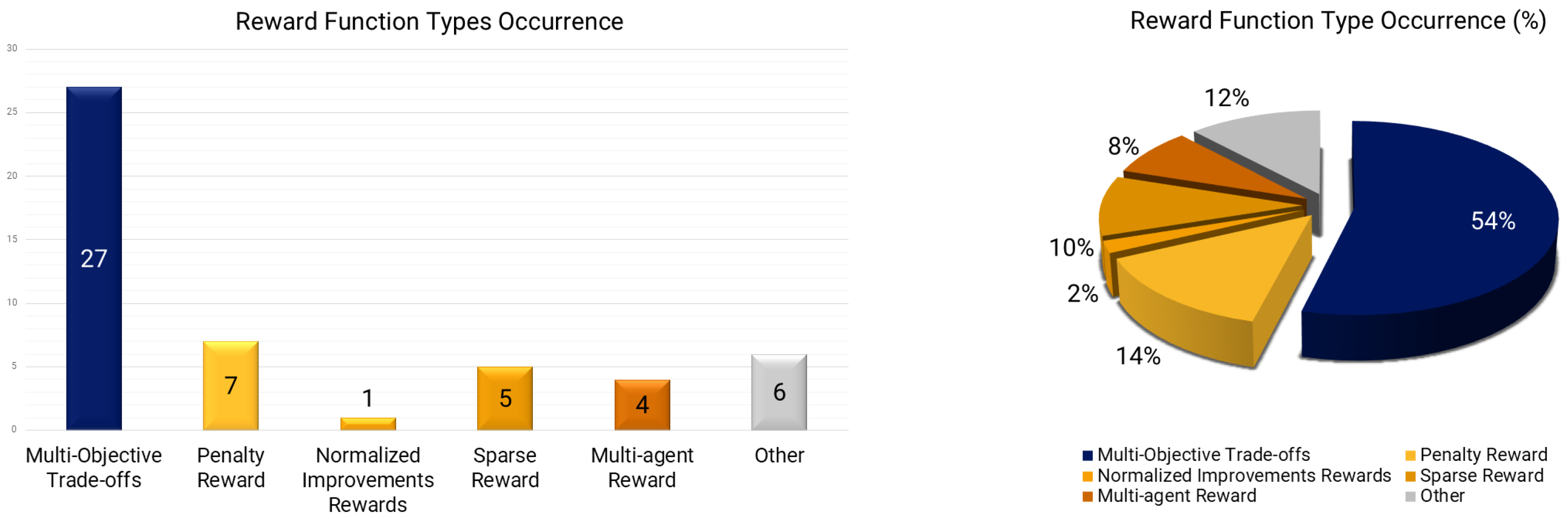

5.5. Reward Function Type

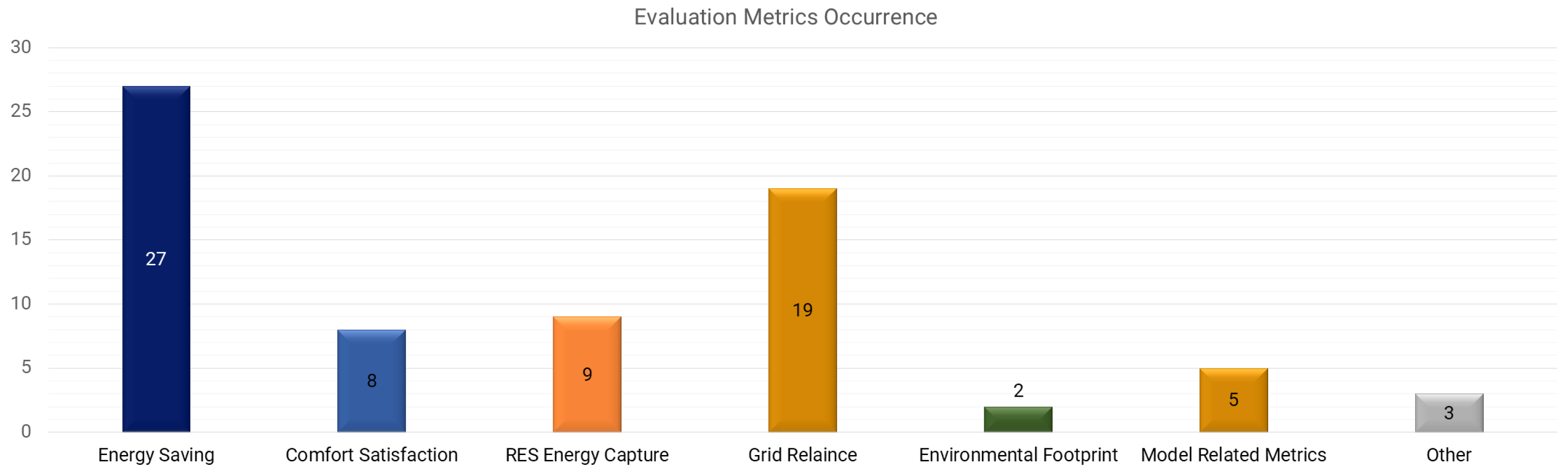

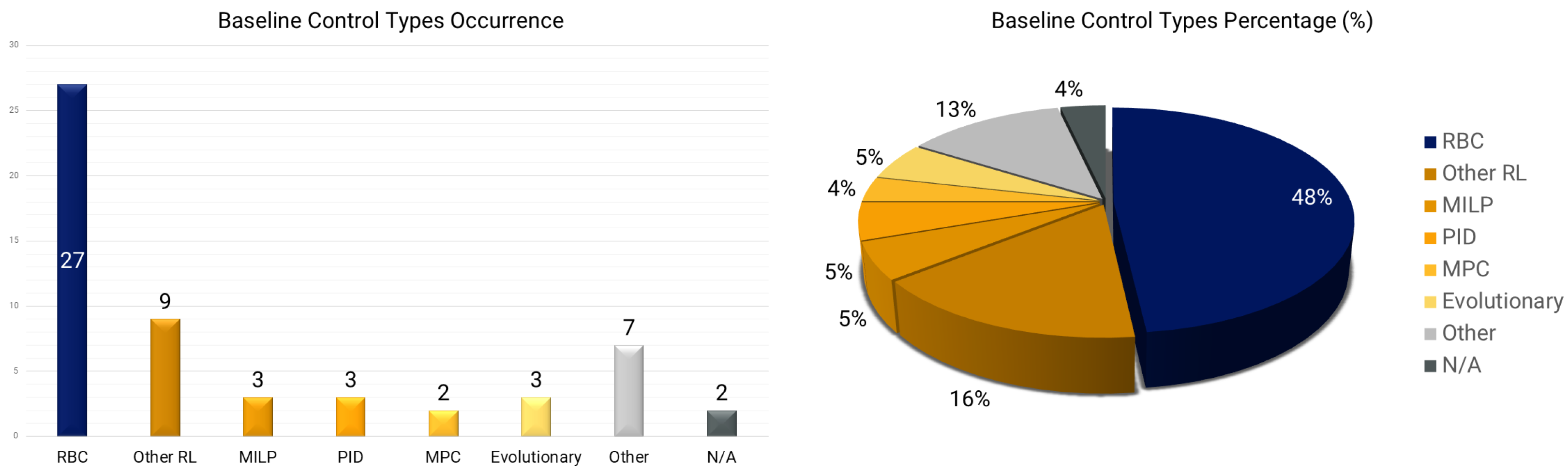

5.6. Target Output

5.7. Baseline Control

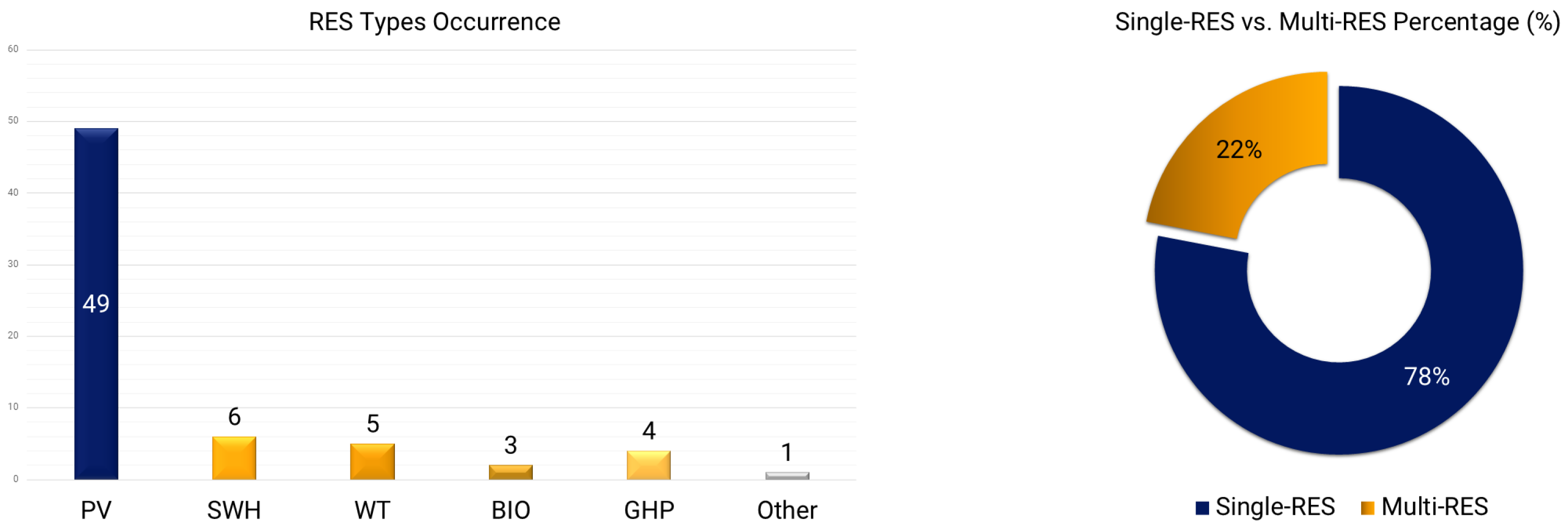

5.8. RES Type

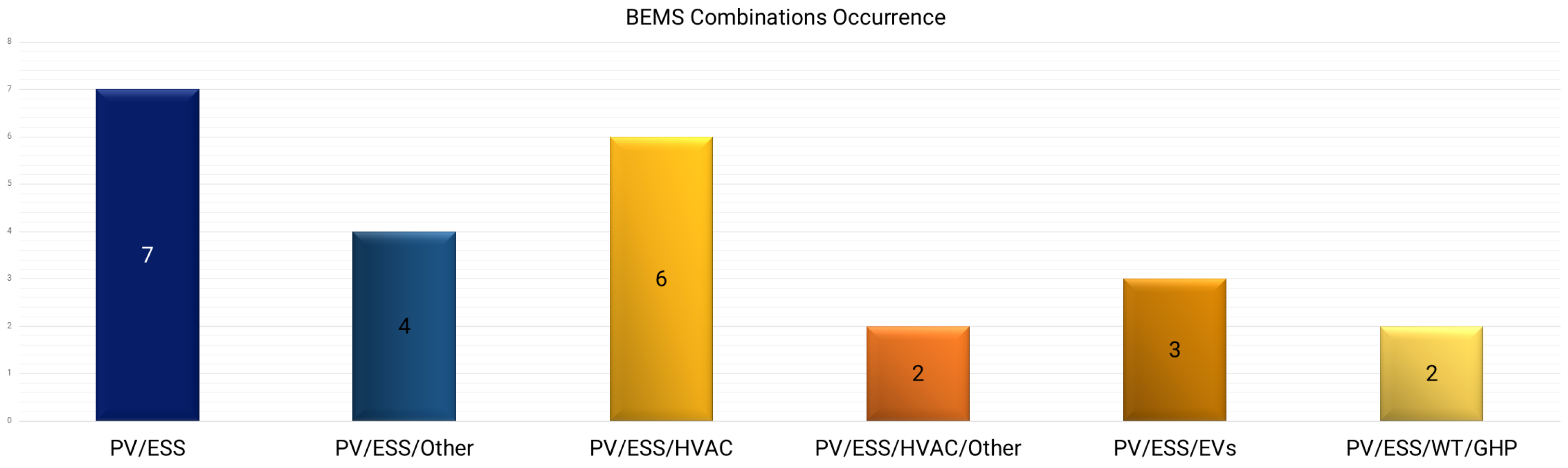

5.9. BEMS Type

5.10. Building Type

6. Discussion

6.1. Trends Identification

- Dominance of value-based RL and the rise of actor-critic methods: The evaluation indicates that value-based RL, particularly QL, has been the most applied method due to its simplicity and effectiveness in handling discrete state-action spaces [84,88,89,91,92,93,94,99]. However, as RL applications become more complex, there is a noticeable shift toward actor-critic methods, such as TD3, DDPG, and SAC, which are better suited for high-dimensional, continuous control problems [105,107,113,115,117,118]. These methodologies integrate elements of building physics to enhance learning efficiency and stability while reducing convergence failures [86,103,111]. Despite their higher computational demands, actor-critic frameworks are emerging as a promising alternative for real-time energy optimization.

- Hybrid RL for stability and reliability: A growing number of studies integrate RL with complementary control techniques, such as FLC, MPC, or heuristic RBC [104,121,127,128,131,134] (see Figure 4). This hybridization helps keep RL agents within safe and reliable operating limits, reducing the risk of failures in real-world scenarios. Hybrid RL approaches appear particularly effective in energy systems with slow thermal responses, where conventional control handles short-term fluctuations while RL optimizes long-term performance.

- Widespread adoption of multi-agent reinforcement learning (MARL) for complex systems: Nearly 40 percent of the reviewed studies employ MARL to manage complex energy ecosystems in which multiple subsystems require coordinated control [97,98,100,102,116,133] (see Figure 7). This decentralized approach enhances adaptability to fluctuating renewable generation and dynamic energy consumption patterns. However, MARL still faces challenges, such as increased communication overhead and coordination inefficiencies, which call for advanced mechanisms for agent interaction and stability [110,117,123].

- Multi-objective RL for balanced decision-making: RL applications increasingly incorporate multi-objective optimization to balance competing targets, such as energy cost, thermal comfort, carbon emissions, and peak demand reduction [93,101,107,110,117,118] (see Figure 12). Rather than optimizing a single reward function, recent studies introduce weighted trade-offs and explicit constraints to ensure practical feasibility. Some works embed predefined comfort or CO2 emission thresholds into the learning process, aligning RL policies with regulatory requirements.

- Focus on federated, privacy-preserving, and real-time RL: An increasing number of studies explore federated learning in RL-based BEMS, where multiple buildings train local models and share aggregated updates instead of raw data [106,109,116]. This approach enhances data privacy and security while supporting distributed learning. Additionally, there is a shift toward real-time RL applications capable of responding to changing conditions within minutes, moving beyond day-ahead scheduling toward sub-15-minute decision cycles [103,111,131].
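A common way to realize this kind of federated learning is a FedAvg-style round: each building updates a copy of the shared model on its own data, and only the resulting parameters are averaged, weighted by the number of local samples each building used. The Python sketch below assumes that scheme; `local_update` is a hypothetical stand-in for local RL training (a gradient step toward a building-specific target), and the reviewed studies may aggregate differently.

```python
from typing import List, Sequence


def federated_average(local_weights: Sequence[Sequence[float]], n_samples: Sequence[int]) -> List[float]:
    """FedAvg-style aggregation: sample-weighted mean of each model parameter."""
    total = sum(n_samples)
    dim = len(local_weights[0])
    return [sum(w[i] * n for w, n in zip(local_weights, n_samples)) / total for i in range(dim)]


def local_update(weights: Sequence[float], local_target: Sequence[float], lr: float = 0.5) -> List[float]:
    # Hypothetical stand-in for local RL training: one gradient step on
    # 0.5 * ||w - target||^2, where the target encodes the building's private data.
    return [w - lr * (w - t) for w, t in zip(weights, local_target)]


def federated_round(global_weights, private_targets, n_samples):
    # Each building trains locally; only parameters (never raw data) are sent for aggregation.
    local_models = [local_update(global_weights, t) for t in private_targets]
    return federated_average(local_models, n_samples)


weights = [0.0, 0.0]
for _ in range(3):
    weights = federated_round(weights, private_targets=[[2.0, 0.0], [0.0, 2.0]], n_samples=[100, 300])
print(weights)  # pulled toward the sample-weighted mix of the two buildings' targets
```

Weighting by sample count lets buildings with more operational data influence the shared model more, while the server never sees any building's raw measurements.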

6.2. Future Directions

- Advancing hybrid RL and algorithmic innovations: Future research should focus on more sophisticated hybrid RL approaches that blend model-based and model-free paradigms to enhance learning efficiency and decision robustness. Current studies highlight the trend of combining RL with predictive models, FLC, and MPC to mitigate convergence issues and accelerate policy adaptation [121,127,131,134]. Future advancements should ensure that RL agents leverage prior knowledge while maintaining adaptability to unforeseen conditions.

- Scalable and hierarchical MARL: As BEMS extend beyond individual buildings to microgrids and districts, flat MARL architectures may become inefficient due to increased communication overhead and coordination challenges. Hierarchical RL structures, where high-level policies coordinate multiple lower-level subsystems, can enhance scalability and stability [97,100].

- Expanding storage utilization beyond batteries: While battery storage is widely studied, other forms of ESS, such as thermal mass, phase-change materials, and distributed heating/cooling reserves, remain underexplored (see Figure 13, Left). Future research should focus on RL strategies that leverage thermal storage as a controllable resource, such as pre-cooling or intelligent heat storage management [98,133].

- Accelerating RL adaptation across buildings and climates: RL models trained for specific buildings often struggle when transferred to different environments due to variations in climate, occupancy, and infrastructure. Future research should develop meta-learning and domain-adaptation techniques to enable RL agents to rapidly adjust to new settings [90,101].

- Ensuring safety and compliance with constraints: For RL to be widely adopted in BEMS, safety assurances must be embedded directly into control policies. Techniques such as constrained RL, shielded RL, and Lyapunov-based critics will be essential to ensuring energy systems operate within predefined limits while preventing failures and regulatory non-compliance.

- Transition from simulations to real-world deployment: Despite significant progress in RL-driven energy management, most studies remain confined to simulation environments. Notably, only two works among the overall dataset implement real-life experiments considering RL in RES-integrated BEMS. Future research must prioritize real-world implementation with robust fallback mechanisms, ensuring safe operation under unexpected conditions [86].
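One simple form of shielded RL with a fallback mechanism is to pass every RL output through a safety layer before it reaches the equipment. The Python sketch below assumes a battery controller: the shield projects the requested charge/discharge power onto power and state-of-charge limits, and a rule-based controller takes over whenever the RL output is missing or not a finite number. The limits in `BatteryLimits` and the surplus-following fallback rule are hypothetical values chosen for illustration, not a method from the reviewed studies.

```python
import math
from dataclasses import dataclass


@dataclass
class BatteryLimits:
    capacity_kwh: float = 10.0  # hypothetical battery size
    max_power_kw: float = 3.0   # charge/discharge power rating
    soc_min: float = 0.1        # lower state-of-charge bound
    soc_max: float = 0.9        # upper state-of-charge bound


def shield_battery_action(action_kw, soc, lim, dt_h=1.0):
    """Project a requested battery power (positive = charge) onto the feasible range."""
    max_charge = min(lim.max_power_kw, max(0.0, (lim.soc_max - soc) * lim.capacity_kwh / dt_h))
    max_discharge = min(lim.max_power_kw, max(0.0, (soc - lim.soc_min) * lim.capacity_kwh / dt_h))
    return min(max_charge, max(-max_discharge, action_kw))


def rule_based_fallback(pv_kw, load_kw, lim):
    # Simple RBC: charge with PV surplus, discharge to cover a deficit.
    return max(-lim.max_power_kw, min(lim.max_power_kw, pv_kw - load_kw))


def safe_action(rl_action_kw, soc, pv_kw, load_kw, lim):
    """Shielded RL action; falls back to the RBC when the RL output is unusable."""
    if rl_action_kw is None or not math.isfinite(rl_action_kw):
        requested = rule_based_fallback(pv_kw, load_kw, lim)
    else:
        requested = rl_action_kw
    return shield_battery_action(requested, soc, lim)


lim = BatteryLimits()
print(safe_action(5.0, soc=0.5, pv_kw=0.0, load_kw=1.0, lim=lim))           # 3.0 (power limit)
print(safe_action(2.0, soc=0.95, pv_kw=0.0, load_kw=1.0, lim=lim))          # 0.0 (already above soc_max)
print(safe_action(float("nan"), soc=0.5, pv_kw=2.0, load_kw=1.0, lim=lim))  # 1.0 (RBC fallback)
```

Because the shield is applied after the fallback as well, even the rule-based action cannot violate the battery limits.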

7. Conclusions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| A2C | Advantage Actor-Critic |
| ANN | Artificial Neural Network |
| BEMS | Building Energy Management System |
| BIO | Biomass Energy Systems |
| DDPG | Deep Deterministic Policy Gradient |
| DDQN | Double Deep Q-Network |
| D3QN | Dueling Double Deep Q-Network |
| DHW | Domestic Hot Water |
| DQN | Deep Q-Network |
| DR | Demand Response |
| DRL | Deep Reinforcement Learning |
| ESS | Energy Storage System |
| EVs | Electric Vehicles |
| FLC | Fuzzy Logic Control |
| GA | Genetic Algorithm |
| GHP | Ground Heat Pump |
| HEMS | Home Energy Management System |
| HP | Heat Pump |
| HVAC | Heating, Ventilation, and Air Conditioning |
| LS | Lighting System |
| LSTM | Long Short-Term Memory |
| MARL | Multi-Agent Reinforcement Learning |
| MCTS | Monte Carlo Tree Search |
| MDP | Markov Decision Process |
| MPC | Model Predictive Control |
| NZEB | Net-Zero Energy Building |
| PAR | Peak-to-Average Ratio |
| PV | Photovoltaic |
| QL | Q-Learning |
| RBC | Rule-Based Control |
| RES | Renewable Energy System |
| RL | Reinforcement Learning |
| SAC | Soft Actor-Critic |
| SWH | Solar Water Heating |
| TD3 | Twin Delayed Deep Deterministic Policy Gradient |
| TSS | Thermal Storage System |
| V2G | Vehicle-to-Grid |
| V2H | Vehicle-to-Home |
| WT | Wind Turbine |

References

- Economidou, M.; Todeschi, V.; Bertoldi, P.; D’Agostino, D.; Zangheri, P.; Castellazzi, L. Review of 50 years of EU energy efficiency policies for buildings. Energy Build. 2020, 225, 110322.

- Pavel, T.; Polina, S.; Liubov, N. The research of the impact of energy efficiency on mitigating greenhouse gas emissions at the national level. Energy Convers. Manag. 2024, 314, 118671.

- Ye, J.; Fanyang, Y.; Wang, J.; Meng, S.; Tang, D. A Literature Review of Green Building Policies: Perspectives from Bibliometric Analysis. Buildings 2024, 14, 2607.

- Reddy, V.J.; Hariram, N.; Ghazali, M.F.; Kumarasamy, S. Pathway to sustainability: An overview of renewable energy integration in building systems. Sustainability 2024, 16, 638.

- Rehmani, M.H.; Reisslein, M.; Rachedi, A.; Erol-Kantarci, M.; Radenkovic, M. Integrating renewable energy resources into the smart grid: Recent developments in information and communication technologies. IEEE Trans. Ind. Inform. 2018, 14, 2814–2825.

- Harvey, L.D. Reducing energy use in the buildings sector: Measures, costs, and examples. Energy Effic. 2009, 2, 139–163.

- Chel, A.; Kaushik, G. Renewable energy technologies for sustainable development of energy efficient building. Alex. Eng. J. 2018, 57, 655–669.

- Farghali, M.; Osman, A.I.; Mohamed, I.M.; Chen, Z.; Chen, L.; Ihara, I.; Yap, P.S.; Rooney, D.W. Strategies to save energy in the context of the energy crisis: A review. Environ. Chem. Lett. 2023, 21, 2003–2039.

- Yudelson, J. The Green Building Revolution; Island Press: Washington, DC, USA, 2010.

- Cao, X.; Dai, X.; Liu, J. Building energy-consumption status worldwide and the state-of-the-art technologies for zero-energy buildings during the past decade. Energy Build. 2016, 128, 198–213.

- Gielen, D.; Boshell, F.; Saygin, D.; Bazilian, M.D.; Wagner, N.; Gorini, R. The role of renewable energy in the global energy transformation. Energy Strategy Rev. 2019, 24, 38–50.

- Mogoș, R.I.; Petrescu, I.; Chiotan, R.A.; Crețu, R.C.; Troacă, V.A.; Mogoș, P.L. Greenhouse gas emissions and Green Deal in the European Union. Front. Environ. Sci. 2023, 11, 1141473.

- Famà, R. REPowerEU. In Research Handbook on Post-Pandemic EU Economic Governance and NGEU Law; Edward Elgar Publishing: London, UK, 2024; pp. 128–143.

- Jäger-Waldau, A.; Bodis, K.; Kougias, I.; Szabo, S. The New European Renewable Energy Directive-Opportunities and Challenges for Photovoltaics. In Proceedings of the 2019 IEEE 46th Photovoltaic Specialists Conference (PVSC), Chicago, IL, USA, 16–21 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 0592–0594.

- Fetsis, P. The LIFE Programme–Over 20 Years Improving Sustainability in the Built Environment in the EU. Procedia Environ. Sci. 2017, 38, 913–918.

- Hassan, Q.; Algburi, S.; Sameen, A.Z.; Salman, H.M.; Jaszczur, M. A review of hybrid renewable energy systems: Solar and wind-powered solutions: Challenges, opportunities, and policy implications. Results Eng. 2023, 20, 101621.

- Kalogirou, S.A. Building integration of solar renewable energy systems towards zero or nearly zero energy buildings. Int. J. Low-Carbon Technol. 2015, 10, 379–385.

- Orikpete, O.F.; Ikemba, S.; Ewim, D.R.E. Integration of renewable energy technologies in smart building design for enhanced energy efficiency and self-sufficiency. J. Eng. Exact Sci. 2023, 9, 16423-01e.

- Saloux, E.; Teyssedou, A.; Sorin, M. Analysis of photovoltaic (PV) and photovoltaic/thermal (PV/T) systems using the exergy method. Energy Build. 2013, 67, 275–285.

- Michailidis, P.; Michailidis, I.; Kosmatopoulos, E. Review and Evaluation of Multi-Agent Control Applications for Energy Management in Buildings. Energies 2024, 17, 4835.

- Abdulraheem, A.; Lee, S.; Jung, I.Y. Dynamic Personalized Thermal Comfort Model: Integrating Temporal Dynamics and Environmental Variability with Individual Preferences. J. Build. Eng. 2025, 102, 111938.

- Lu, X.; Fu, Y.; O’Neill, Z. Benchmarking high performance HVAC Rule-Based controls with advanced intelligent Controllers: A case study in a Multi-Zone system in Modelica. Energy Build. 2023, 284, 112854.

- Drgoňa, J.; Picard, D.; Kvasnica, M.; Helsen, L. Approximate model predictive building control via machine learning. Appl. Energy 2018, 218, 199–216.

- Blinn, A.; Kue, U.R.S.; Kennel, F. A Comparison of Model Predictive Control and Heuristics in Building Energy Management. In Proceedings of the 2024 8th International Conference on Smart Grid and Smart Cities (ICSGSC), Shanghai, China, 25–27 October 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 275–285.

- Chen, Z.; Xiao, F.; Guo, F.; Yan, J. Interpretable machine learning for building energy management: A state-of-the-art review. Adv. Appl. Energy 2023, 9, 100123.

- Ukoba, K.; Olatunji, K.O.; Adeoye, E.; Jen, T.C.; Madyira, D.M. Optimizing renewable energy systems through artificial intelligence: Review and future prospects. Energy Environ. 2024, 35, 3833–3879.

- Shobanke, M.; Bhatt, M.; Shittu, E. Advancements and future outlook of Artificial Intelligence in energy and climate change modeling. Adv. Appl. Energy 2025, 17, 100211.

- Pergantis, E.N.; Priyadarshan; Al Theeb, N.; Dhillon, P.; Ore, J.P.; Ziviani, D.; Groll, E.A.; Kircher, K.J. Field demonstration of predictive heating control for an all-electric house in a cold climate. Appl. Energy 2024, 360, 122820.

- Manic, M.; Wijayasekara, D.; Amarasinghe, K.; Rodriguez-Andina, J.J. Building energy management systems: The age of intelligent and adaptive buildings. IEEE Ind. Electron. Mag. 2016, 10, 25–39.

- Zia, M.F.; Elbouchikhi, E.; Benbouzid, M. Microgrids energy management systems: A critical review on methods, solutions, and prospects. Appl. Energy 2018, 222, 1033–1055.

- Michailidis, P.; Michailidis, I.; Vamvakas, D.; Kosmatopoulos, E. Model-Free HVAC Control in Buildings: A Review. Energies 2023, 16, 7124.

- Mariano-Hernández, D.; Hernández-Callejo, L.; Zorita-Lamadrid, A.; Duque-Pérez, O.; García, F.S. A review of strategies for building energy management system: Model predictive control, demand side management, optimization, and fault detect & diagnosis. J. Build. Eng. 2021, 33, 101692.

- Pergantis, E.N.; Dhillon, P.; Premer, L.D.R.; Lee, A.H.; Ziviani, D.; Kircher, K.J. Humidity-aware model predictive control for residential air conditioning: A field study. Build. Environ. 2024, 266, 112093.

- Pergantis, E.N.; Premer, L.D.R.; Priyadarshan; Lee, A.H.; Dhillon, P.; Groll, E.A.; Ziviani, D.; Kircher, K.J. Latent and Sensible Model Predictive Controller Demonstration in a House During Cooling Operation. ASHRAE Trans. 2024, 130, 177–185. [Google Scholar]

- Michailidis, I.T.; Schild, T.; Sangi, R.; Michailidis, P.; Korkas, C.; Fütterer, J.; Müller, D.; Kosmatopoulos, E.B. Energy-efficient HVAC management using cooperative, self-trained, control agents: A real-life German building case study. Appl. Energy 2018, 211, 113–125. [Google Scholar]

- Michailidis, P.; Pelitaris, P.; Korkas, C.; Michailidis, I.; Baldi, S.; Kosmatopoulos, E. Enabling optimal energy management with minimal IoT requirements: A legacy A/C case study. Energies 2021, 14, 7910. [Google Scholar] [CrossRef]

- Michailidis, I.T.; Sangi, R.; Michailidis, P.; Schild, T.; Fuetterer, J.; Mueller, D.; Kosmatopoulos, E.B. Balancing energy efficiency with indoor comfort using smart control agents: A simulative case study. Energies 2020, 13, 6228. [Google Scholar] [CrossRef]

- Michailidis, P.; Michailidis, I.; Gkelios, S.; Kosmatopoulos, E. Artificial Neural Network Applications for Energy Management in Buildings: Current Trends and Future Directions. Energies 2024, 17, 570. [Google Scholar] [CrossRef]

- Li, S.E. Reinforcement Learning for Sequential Decision and Optimal Control; Springer: Berlin/Heidelberg, Germany, 2023. [Google Scholar]

- Vamvakas, D.; Michailidis, P.; Korkas, C.; Kosmatopoulos, E. Review and evaluation of reinforcement learning frameworks on smart grid applications. Energies 2023, 16, 5326. [Google Scholar] [CrossRef]

- Recht, B. A tour of reinforcement learning: The view from continuous control. Annu. Rev. Control Robot. Auton. Syst. 2019, 2, 253–279.

- Mohammadi, P.; Darshi, R.; Shamaghdari, S.; Siano, P. Comparative Analysis of Control Strategies for Microgrid Energy Management with a Focus on Reinforcement Learning. IEEE Access 2024, 12, 171368–171395.

- Ruelens, F.; Claessens, B.J.; Vandael, S.; De Schutter, B.; Babuška, R.; Belmans, R. Residential demand response of thermostatically controlled loads using batch reinforcement learning. IEEE Trans. Smart Grid 2016, 8, 2149–2159.

- Kadamala, K.; Chambers, D.; Barrett, E. Enhancing HVAC control systems through transfer learning with deep reinforcement learning agents. Smart Energy 2024, 13, 100131.

- Al Sayed, K.; Boodi, A.; Broujeny, R.S.; Beddiar, K. Reinforcement learning for HVAC control in intelligent buildings: A technical and conceptual review. J. Build. Eng. 2024, 95, 110085.

- Wang, Z.; Hong, T. Reinforcement learning for building controls: The opportunities and challenges. Appl. Energy 2020, 269, 115036.

- Gautam, M. Deep Reinforcement learning for resilient power and energy systems: Progress, prospects, and future avenues. Electricity 2023, 4, 336–380.

- Jiang, Z.; Risbeck, M.J.; Ramamurti, V.; Murugesan, S.; Amores, J.; Zhang, C.; Lee, Y.M.; Drees, K.H. Building HVAC control with reinforcement learning for reduction of energy cost and demand charge. Energy Build. 2021, 239, 110833.

- Yu, L.; Qin, S.; Zhang, M.; Shen, C.; Jiang, T.; Guan, X. A review of deep reinforcement learning for smart building energy management. IEEE Internet Things J. 2021, 8, 12046–12063.

- Liu, S.; Henze, G.P. Experimental analysis of simulated reinforcement learning control for active and passive building thermal storage inventory: Part 2: Results and analysis. Energy Build. 2006, 38, 148–161.

- Lazaridis, C.R.; Michailidis, I.; Karatzinis, G.; Michailidis, P.; Kosmatopoulos, E. Evaluating Reinforcement Learning Algorithms in Residential Energy Saving and Comfort Management. Energies 2024, 17, 581.

- Singh, Y.; Pal, N. Reinforcement learning with fuzzified reward approach for MPPT control of PV systems. Sustain. Energy Technol. Assess. 2021, 48, 101665.

- Wang, Z.; Xue, W.; Li, K.; Tang, Z.; Liu, Y.; Zhang, F.; Cao, S.; Peng, X.; Wu, E.Q.; Zhou, H. Dynamic combustion optimization of a pulverized coal boiler considering the wall temperature constraints: A deep reinforcement learning-based framework. Appl. Therm. Eng. 2025, 259, 124923.

- Perera, A.; Kamalaruban, P. Applications of reinforcement learning in energy systems. Renew. Sustain. Energy Rev. 2021, 137, 110618.

- Gaviria, J.F.; Narváez, G.; Guillen, C.; Giraldo, L.F.; Bressan, M. Machine learning in photovoltaic systems: A review. Renew. Energy 2022, 196, 298–318.

- Fu, Q.; Han, Z.; Chen, J.; Lu, Y.; Wu, H.; Wang, Y. Applications of reinforcement learning for building energy efficiency control: A review. J. Build. Eng. 2022, 50, 104165.

- Rezaie, B.; Esmailzadeh, E.; Dincer, I. Renewable energy options for buildings: Case studies. Energy Build. 2011, 43, 56–65.

- Lin, Y.; Yang, W.; Hao, X.; Yu, C. Building integrated renewable energy. Energy Explor. Exploit. 2021, 39, 603–607.

- Hayter, S.J. Integrating Renewable Energy Systems in Buildings (Presentation); Technical report; National Renewable Energy Lab. (NREL): Golden, CO, USA, 2011.

- Le, T.V.; ChuDuc, H.; Tran, Q.X. Optimized Integration of Renewable Energy in Smart Buildings: A Systematic Review from Scopus Data. In Proceedings of the 2024 9th International Conference on Applying New Technology in Green Buildings (ATiGB), Danang, Vietnam, 30–31 August 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 397–402.

- Chen, L.; Hu, Y.; Wang, R.; Li, X.; Chen, Z.; Hua, J.; Osman, A.I.; Farghali, M.; Huang, L.; Li, J.; et al. Green building practices to integrate renewable energy in the construction sector: A review. Environ. Chem. Lett. 2024, 22, 751–784.

- Hayter, S.J.; Kandt, A. Renewable Energy Applications for Existing Buildings; Technical report; National Renewable Energy Lab. (NREL): Golden, CO, USA, 2011.

- Vassiliades, C.; Agathokleous, R.; Barone, G.; Forzano, C.; Giuzio, G.; Palombo, A.; Buonomano, A.; Kalogirou, S. Building integration of active solar energy systems: A review of geometrical and architectural characteristics. Renew. Sustain. Energy Rev. 2022, 164, 112482.

- Bougiatioti, F.; Michael, A. The architectural integration of active solar systems. Building applications in the Eastern Mediterranean region. Renew. Sustain. Energy Rev. 2015, 47, 966–982.

- Canale, L.; Di Fazio, A.R.; Russo, M.; Frattolillo, A.; Dell’Isola, M. An overview on functional integration of hybrid renewable energy systems in multi-energy buildings. Energies 2021, 14, 1078.

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533.

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; MIT Press: Cambridge, MA, USA, 2018.

- Ahrarinouri, M.; Rastegar, M.; Seifi, A.R. Multiagent reinforcement learning for energy management in residential buildings. IEEE Trans. Ind. Inform. 2020, 17, 659–666.

- Hernandez-Leal, P.; Kartal, B.; Taylor, M.E. A survey and critique of multiagent deep reinforcement learning. Auton. Agents Multi-Agent Syst. 2019, 33, 750–797.

- Busoniu, L.; Babuska, R.; De Schutter, B. A comprehensive survey of multiagent reinforcement learning. IEEE Trans. Syst. Man Cybern. Part C (Appl. Rev.) 2008, 38, 156–172.

- Castellini, J.; Oliehoek, F.A.; Savani, R.; Whiteson, S. The representational capacity of action-value networks for multi-agent reinforcement learning. arXiv 2019, arXiv:1902.07497.

- Sheng, J.; Wang, X.; Jin, B.; Yan, J.; Li, W.; Chang, T.H.; Wang, J.; Zha, H. Learning structured communication for multi-agent reinforcement learning. Auton. Agents Multi-Agent Syst. 2022, 36, 50.

- Canese, L.; Cardarilli, G.C.; Di Nunzio, L.; Fazzolari, R.; Giardino, D.; Re, M.; Spanò, S. Multi-agent reinforcement learning: A review of challenges and applications. Appl. Sci. 2021, 11, 4948.

- Xie, H.; Song, G.; Shi, Z.; Zhang, J.; Lin, Z.; Yu, Q.; Fu, H.; Song, X.; Zhang, H. Reinforcement learning for vehicle-to-grid: A review. Adv. Appl. Energy 2025, 17, 100214.

- Mohammadi, P.; Nasiri, A.; Darshi, R.; Shirzad, A.; Abdollahipour, R. Achieving Cost Efficiency in Cloud Data Centers Through Model-Free Q-Learning. In Proceedings of the International Conference on Electrical and Electronics Engineering, Marmaris, Turkey, 22–24 April 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 457–468.

- Watkins, C.J.; Dayan, P. Q-learning. Mach. Learn. 1992, 8, 279–292.

- Zhang, H.; Seal, S.; Wu, D.; Bouffard, F.; Boulet, B. Building energy management with reinforcement learning and model predictive control: A survey. IEEE Access 2022, 10, 27853–27862.

- Wei, T.; Wang, Y.; Zhu, Q. Deep reinforcement learning for building HVAC control. In Proceedings of the 54th Annual Design Automation Conference 2017, Austin, TX, USA, 18–22 June 2017; pp. 1–6.

- Wang, Y.; He, H.; Tan, X. Truly proximal policy optimization. In Proceedings of the Uncertainty in Artificial Intelligence, PMLR, Virtual, 3–6 August 2020; pp. 113–122.

- Bolt, P.; Ziebart, V.; Jaeger, C.; Schmid, N.; Stadelmann, T.; Füchslin, R.M. A simulation study on energy optimization in building control with reinforcement learning. In Proceedings of the IAPR Workshop on Artificial Neural Networks in Pattern Recognition, Montréal, QC, Canada, 10–12 October 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 320–331.

- Sumiea, E.H.; Abdulkadir, S.J.; Alhussian, H.S.; Al-Selwi, S.M.; Alqushaibi, A.; Ragab, M.G.; Fati, S.M. Deep deterministic policy gradient algorithm: A systematic review. Heliyon 2024, 10, e30697.

- Ding, F.; Ma, G.; Chen, Z.; Gao, J.; Li, P. Averaged Soft Actor-Critic for Deep Reinforcement Learning. Complexity 2021, 2021, 6658724.

- Dong, J.; Wang, H.; Yang, J.; Lu, X.; Gao, L.; Zhou, X. Optimal scheduling framework of electricity-gas-heat integrated energy system based on asynchronous advantage actor-critic algorithm. IEEE Access 2021, 9, 139685–139696.

- Yang, L.; Nagy, Z.; Goffin, P.; Schlueter, A. Reinforcement learning for optimal control of low exergy buildings. Appl. Energy 2015, 156, 577–586.

- Raju, L.; Sankar, S.; Milton, R. Distributed optimization of solar micro-grid using multi agent reinforcement learning. Procedia Comput. Sci. 2015, 46, 231–239.

- De Somer, O.; Soares, A.; Vanthournout, K.; Spiessens, F.; Kuijpers, T.; Vossen, K. Using reinforcement learning for demand response of domestic hot water buffers: A real-life demonstration. In Proceedings of the 2017 IEEE PES Innovative Smart Grid Technologies Conference Europe (ISGT-Europe), Torino, Italy, 26–29 September 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–7.

- Ebell, N.; Heinrich, F.; Schlund, J.; Pruckner, M. Reinforcement learning control algorithm for a PV-battery-system providing frequency containment reserve power. In Proceedings of the 2018 IEEE International Conference on Communications, Control, and Computing Technologies for Smart Grids (SmartGridComm), Aalborg, Denmark, 29–31 October 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–6.

- Remani, T.; Jasmin, E.; Ahamed, T.I. Residential load scheduling with renewable generation in the smart grid: A reinforcement learning approach. IEEE Syst. J. 2018, 13, 3283–3294.

- Kim, S.; Lim, H. Reinforcement learning based energy management algorithm for smart energy buildings. Energies 2018, 11, 2010.

- Prasad, A.; Dusparic, I. Multi-agent deep reinforcement learning for zero energy communities. In Proceedings of the 2019 IEEE PES Innovative Smart Grid Technologies Europe (ISGT-Europe), Bucharest, Romania, 29 September–2 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–5.

- Xu, X.; Jia, Y.; Xu, Y.; Xu, Z.; Chai, S.; Lai, C.S. A multi-agent reinforcement learning-based data-driven method for home energy management. IEEE Trans. Smart Grid 2020, 11, 3201–3211.

- Correa-Jullian, C.; Droguett, E.L.; Cardemil, J.M. Operation scheduling in a solar thermal system: A reinforcement learning-based framework. Appl. Energy 2020, 268, 114943.

- Chen, S.J.; Chiu, W.Y.; Liu, W.J. User preference-based demand response for smart home energy management using multiobjective reinforcement learning. IEEE Access 2021, 9, 161627–161637.

- Lissa, P.; Deane, C.; Schukat, M.; Seri, F.; Keane, M.; Barrett, E. Deep reinforcement learning for home energy management system control. Energy AI 2021, 3, 100043.

- Raman, N.S.; Gaikwad, N.; Barooah, P.; Meyn, S.P. Reinforcement learning-based home energy management system for resiliency. In Proceedings of the 2021 American Control Conference (ACC), Online, 25–28 May 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1358–1364.

- Heidari, A.; Maréchal, F.; Khovalyg, D. Reinforcement Learning for proactive operation of residential energy systems by learning stochastic occupant behavior and fluctuating solar energy: Balancing comfort, hygiene and energy use. Appl. Energy 2022, 318, 119206.

- Lu, J.; Mannion, P.; Mason, K. A multi-objective multi-agent deep reinforcement learning approach to residential appliance scheduling. IET Smart Grid 2022, 5, 260–280.

- Shen, R.; Zhong, S.; Wen, X.; An, Q.; Zheng, R.; Li, Y.; Zhao, J. Multi-agent deep reinforcement learning optimization framework for building energy system with renewable energy. Appl. Energy 2022, 312, 118724.

- Wang, L.; Zhang, G.; Yin, X.; Zhang, H.; Ghalandari, M. Optimal control of renewable energy in buildings using the machine learning method. Sustain. Energy Technol. Assess. 2022, 53, 102534.

- Cordeiro-Costas, M.; Villanueva, D.; Eguía-Oller, P.; Granada-Álvarez, E. Intelligent energy storage management trade-off system applied to Deep Learning predictions. J. Energy Storage 2023, 61, 106784.

- Mocanu, E.; Mocanu, D.C.; Nguyen, P.H.; Liotta, A.; Webber, M.E.; Gibescu, M.; Slootweg, J.G. On-line building energy optimization using deep reinforcement learning. IEEE Trans. Smart Grid 2018, 10, 3698–3708.

- Vazquez-Canteli, J.R.; Henze, G.; Nagy, Z. MARLISA: Multi-agent reinforcement learning with iterative sequential action selection for load shaping of grid-interactive connected buildings. In Proceedings of the 7th ACM International Conference on Systems for Energy-Efficient Buildings, Cities, and Transportation, Virtual, 18–20 November 2020; pp. 170–179.

- Chen, B.; Donti, P.L.; Baker, K.; Kolter, J.Z.; Bergés, M. Enforcing policy feasibility constraints through differentiable projection for energy optimization. In Proceedings of the Twelfth ACM International Conference on Future Energy Systems, Torino, Italy, 28 June–2 July 2021; pp. 199–210.

- Jung, S.; Jeoung, J.; Kang, H.; Hong, T. Optimal planning of a rooftop PV system using GIS-based reinforcement learning. Appl. Energy 2021, 298, 117239.

- Yu, L.; Xie, W.; Xie, D.; Zou, Y.; Zhang, D.; Sun, Z.; Zhang, L.; Zhang, Y.; Jiang, T. Deep reinforcement learning for smart home energy management. IEEE Internet Things J. 2019, 7, 2751–2762.

- Lee, S.; Choi, D.H. Federated reinforcement learning for energy management of multiple smart homes with distributed energy resources. IEEE Trans. Ind. Inform. 2020, 18, 488–497.

- Ye, Y.; Qiu, D.; Wang, H.; Tang, Y.; Strbac, G. Real-time autonomous residential demand response management based on twin delayed deep deterministic policy gradient learning. Energies 2021, 14, 531.

- Touzani, S.; Prakash, A.K.; Wang, Z.; Agarwal, S.; Pritoni, M.; Kiran, M.; Brown, R.; Granderson, J. Controlling distributed energy resources via deep reinforcement learning for load flexibility and energy efficiency. Appl. Energy 2021, 304, 117733.

- Lee, S.; Xie, L.; Choi, D.H. Privacy-preserving energy management of a shared energy storage system for smart buildings: A federated deep reinforcement learning approach. Sensors 2021, 21, 4898.

- Pinto, G.; Piscitelli, M.S.; Vázquez-Canteli, J.R.; Nagy, Z.; Capozzoli, A. Coordinated energy management for a cluster of buildings through deep reinforcement learning. Energy 2021, 229, 120725.

- Pinto, G.; Deltetto, D.; Capozzoli, A. Data-driven district energy management with surrogate models and deep reinforcement learning. Appl. Energy 2021, 304, 117642.

- Gao, Y.; Matsunami, Y.; Miyata, S.; Akashi, Y. Operational optimization for off-grid renewable building energy system using deep reinforcement learning. Appl. Energy 2022, 325, 119783.

- Langer, L.; Volling, T. A reinforcement learning approach to home energy management for modulating heat pumps and photovoltaic systems. Appl. Energy 2022, 327, 120020.

- Pinto, G.; Kathirgamanathan, A.; Mangina, E.; Finn, D.P.; Capozzoli, A. Enhancing energy management in grid-interactive buildings: A comparison among cooperative and coordinated architectures. Appl. Energy 2022, 310, 118497.

- Nweye, K.; Sankaranarayanan, S.; Nagy, Z. MERLIN: Multi-agent offline and transfer learning for occupant-centric operation of grid-interactive communities. Appl. Energy 2023, 346, 121323.

- Qiu, D.; Xue, J.; Zhang, T.; Wang, J.; Sun, M. Federated reinforcement learning for smart building joint peer-to-peer energy and carbon allowance trading. Appl. Energy 2023, 333, 120526.

- Xie, J.; Ajagekar, A.; You, F. Multi-agent attention-based deep reinforcement learning for demand response in grid-responsive buildings. Appl. Energy 2023, 342, 121162.

- Qiu, Y.; Zhou, S.; Xia, D.; Gu, W.; Sun, K.; Han, G.; Zhang, K.; Lv, H. Local integrated energy system operational optimization considering multi-type uncertainties: A reinforcement learning approach based on improved TD3 algorithm. IET Renew. Power Gener. 2023, 17, 2236–2256.

- Deng, X.; Zhang, Y.; Jiang, Y.; Qi, H. A novel operation method for renewable building by combining distributed DC energy system and deep reinforcement learning. Appl. Energy 2024, 353, 122188.

- Kazmi, H.; D’Oca, S.; Delmastro, C.; Lodeweyckx, S.; Corgnati, S.P. Generalizable occupant-driven optimization model for domestic hot water production in NZEB. Appl. Energy 2016, 175, 1–15.

- Kofinas, P.; Dounis, A.I.; Vouros, G.A. Fuzzy Q-Learning for multi-agent decentralized energy management in microgrids. Appl. Energy 2018, 219, 53–67.

- Chasparis, G.C.; Pichler, M.; Spreitzhofer, J.; Esterl, T. A cooperative demand-response framework for day-ahead optimization in battery pools. Energy Inform. 2019, 2, 29.

- Gao, Y.; Matsunami, Y.; Miyata, S.; Akashi, Y. Multi-agent reinforcement learning dealing with hybrid action spaces: A case study for off-grid oriented renewable building energy system. Appl. Energy 2022, 326, 120021.

- Ashenov, N.; Myrzaliyeva, M.; Mussakhanova, M.; Nunna, H.K. Dynamic cloud and ANN based home energy management system for end-users with smart-plugs and PV generation. In Proceedings of the 2021 IEEE Texas Power and Energy Conference (TPEC), College Station, TX, USA, 2–5 February 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–6.

- Deltetto, D.; Coraci, D.; Pinto, G.; Piscitelli, M.S.; Capozzoli, A. Exploring the potentialities of deep reinforcement learning for incentive-based demand response in a cluster of small commercial buildings. Energies 2021, 14, 2933.

- Huang, C.; Zhang, H.; Wang, L.; Luo, X.; Song, Y. Mixed deep reinforcement learning considering discrete-continuous hybrid action space for smart home energy management. J. Mod. Power Syst. Clean Energy 2022, 10, 743–754.

- Nicola, M.; Nicola, C.I.; Selișteanu, D. Improvement of the control of a grid connected photovoltaic system based on synergetic and sliding mode controllers using a reinforcement learning deep deterministic policy gradient agent. Energies 2022, 15, 2392.

- Almughram, O.; Abdullah ben Slama, S.; Zafar, B.A. A reinforcement learning approach for integrating an intelligent home energy management system with a vehicle-to-home unit. Appl. Sci. 2023, 13, 5539.

- Zhou, X.; Du, H.; Sun, Y.; Ren, H.; Cui, P.; Ma, Z. A new framework integrating reinforcement learning, a rule-based expert system, and decision tree analysis to improve building energy flexibility. J. Build. Eng. 2023, 71, 106536.

- Binyamin, S.S.; Slama, S.A.B.; Zafar, B. Artificial intelligence-powered energy community management for developing renewable energy systems in smart homes. Energy Strategy Rev. 2024, 51, 101288.

- Wang, Z.; Xiao, F.; Ran, Y.; Li, Y.; Xu, Y. Scalable energy management approach of residential hybrid energy system using multi-agent deep reinforcement learning. Appl. Energy 2024, 367, 123414.

- Anvari-Moghaddam, A.; Rahimi-Kian, A.; Mirian, M.S.; Guerrero, J.M. A multi-agent based energy management solution for integrated buildings and microgrid system. Appl. Energy 2017, 203, 41–56.

- Tomin, N.; Shakirov, V.; Kurbatsky, V.; Muzychuk, R.; Popova, E.; Sidorov, D.; Kozlov, A.; Yang, D. A multi-criteria approach to designing and managing a renewable energy community. Renew. Energy 2022, 199, 1153–1175.

- Tomin, N.; Shakirov, V.; Kozlov, A.; Sidorov, D.; Kurbatsky, V.; Rehtanz, C.; Lora, E.E. Design and optimal energy management of community microgrids with flexible renewable energy sources. Renew. Energy 2022, 183, 903–921.

- Michailidis, I.T.; Manolis, D.; Michailidis, P.; Diakaki, C.; Kosmatopoulos, E.B. Autonomous self-regulating intersections in large-scale urban traffic networks: A Chania City case study. In Proceedings of the 2018 5th International Conference on Control, Decision and Information Technologies (CoDIT), Thessaloniki, Greece, 10–13 April 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 853–858.

- Michailidis, I.T.; Manolis, D.; Michailidis, P.; Diakaki, C.; Kosmatopoulos, E.B. A decentralized optimization approach employing cooperative cycle-regulation in an intersection-centric manner: A complex urban simulative case study. Transp. Res. Interdiscip. Perspect. 2020, 8, 100232.

- Michailidis, I.T.; Kapoutsis, A.C.; Korkas, C.D.; Michailidis, P.T.; Alexandridou, K.A.; Ravanis, C.; Kosmatopoulos, E.B. Embedding autonomy in large-scale IoT ecosystems using CAO and L4G-CAO. Discov. Internet Things 2021, 1, 8.

| Ref. | Year | Method | Agent | BEMS | Residential | Commercial | Simulation | Real-Life | Citations |
|---|---|---|---|---|---|---|---|---|---|
| [84] | 2015 | QL | Multi | PV/GHP/HVAC | x | x | 150 | ||
| [85] | 2015 | QL | Multi | PV/ESS | x | x | 37 | ||
| [86] | 2017 | FQI | Single | PV/HVAC/DWH | x | x | 19 | ||
| [87] | 2018 | SARSA | Single | PV/ESS | x | x | 16 | ||
| [88] | 2019 | QL | Single | PV/ESS/EVs | x | x | 84 | ||
| [89] | 2018 | QL | Single | PV | x | x | 128 | ||
| [90] | 2019 | DQN | Multi | PV/ESS | x | 39 | |||
| [91] | 2020 | QL | Multi | PV/HVAC/LS/EVs | x | x | 283 | ||
| [92] | 2020 | QL | Single | SWH/HVAC | x | x | 43 | ||
| [93] | 2021 | QL | Single | PV/ESS/Other | x | x | 24 | ||
| [94] | 2021 | QL | Single | PV/HVAC/DHW | x | x | 132 | ||
| [95] | 2021 | QL | Single | PV/ESS/Other | x | x | 11 | ||
| [96] | 2022 | DQN | Single | PV/SWH/HVAC | x | x | 33 | ||
| [97] | 2022 | DQN | Multi | PV/Other | x | x | 18 | ||
| [98] | 2022 | DQN | Single | PV/WT/GHP | x | x | 64 | ||
| [99] | 2022 | QL | Single | PV/WT/SWH/BIO | x | x | 15 | ||
| [100] | 2023 | DQN | Single | PV/ESS | x | x | 14 |
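
Most value-based entries above use tabular QL. SARSA [87] is the on-policy variant. The sketch below contrasts the two one-step temporal-difference updates on a NumPy Q-table, assuming a discretized state (for example, a hypothetical battery state-of-charge bin) and a small discrete action set. The function names, the ε-greedy helper, and the default `alpha`/`gamma` values are illustrative assumptions. They are not taken from any reviewed study.

```python
import numpy as np

def epsilon_greedy(Q, s, epsilon, rng):
    """Pick a random action with probability epsilon, otherwise the greedy one."""
    if rng.random() < epsilon:
        return int(rng.integers(Q.shape[1]))
    return int(np.argmax(Q[s]))

def q_learning_update(Q, s, a, r, s_next, alpha=0.1, gamma=0.95):
    """Off-policy TD(0) update (Watkins QL): bootstrap on the best action in s_next."""
    td_target = r + gamma * np.max(Q[s_next])
    Q[s, a] += alpha * (td_target - Q[s, a])
    return Q

def sarsa_update(Q, s, a, r, s_next, a_next, alpha=0.1, gamma=0.95):
    """On-policy TD(0) update: bootstrap on the action the policy actually takes next."""
    td_target = r + gamma * Q[s_next, a_next]
    Q[s, a] += alpha * (td_target - Q[s, a])
    return Q

# Toy example: 3 hypothetical SoC bins x 2 actions (0 = idle, 1 = charge from PV).
Q = np.zeros((3, 2))
Q[1] = [2.0, 4.0]
q_ql = q_learning_update(Q.copy(), s=0, a=1, r=1.0, s_next=1)         # Q[0, 1] ≈ 0.48
q_sarsa = sarsa_update(Q.copy(), s=0, a=1, r=1.0, s_next=1, a_next=0)  # Q[0, 1] ≈ 0.29
```

The two updates differ only in the bootstrap term. QL bootstraps on the maximum over next actions, so it learns the greedy policy even while exploring. SARSA bootstraps on the action actually taken, so its values reflect the exploratory behavior policy.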

| Ref. | Year | Method | Agent | BEMS | Residential | Commercial | Simulation | Real-Life | Citations |
|---|---|---|---|---|---|---|---|---|---|
| [101] | 2019 | DPG | Single | PV/EVs/Other | x | x | 464 | ||
| [102] | 2020 | MARLISA | Multi | PV/ESS/HVAC | x | x | 51 | ||
| [103] | 2021 | PPO | Single | PV/HVAC | x | x | 26 | ||
| [104] | 2021 | PPO | Single | PV | x | x | 40 |
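
Two of the policy-based studies above ([103], [104]) use PPO. Its central mechanism is the clipped surrogate objective, which limits how far the probability ratio between the new and old policies can move in a single update. The following is a minimal NumPy sketch. It assumes per-sample log-probabilities and advantage estimates are already available. The function name and the 0.2 clip value are illustrative defaults.

```python
import numpy as np

def ppo_clip_objective(logp_new, logp_old, advantages, clip_eps=0.2):
    """Mean clipped surrogate objective, to be maximized by gradient ascent."""
    ratio = np.exp(logp_new - logp_old)  # pi_new(a|s) / pi_old(a|s)
    unclipped = ratio * advantages
    clipped = np.clip(ratio, 1.0 - clip_eps, 1.0 + clip_eps) * advantages
    # Taking the elementwise minimum gives a pessimistic bound:
    # moving the ratio outside [1 - eps, 1 + eps] earns no extra credit.
    return float(np.mean(np.minimum(unclipped, clipped)))

# Ratio 1.5 with a positive advantage is capped at 1.2; ratio 0.5 with a
# negative advantage is evaluated at the more pessimistic clipped value -0.8.
obj = ppo_clip_objective(np.log([1.5, 0.5]), np.zeros(2), np.array([1.0, -1.0]))
```

In a full implementation this quantity would be computed on differentiable tensors. It is usually combined with a value-function loss and often with an entropy bonus.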

| Ref. | Year | Method | Agent | BEMS | Residential | Commercial | Simulation | Real-Life | Citations |
|---|---|---|---|---|---|---|---|---|---|
| [105] | 2019 | DDPG | Single | PV/ESS/HVAC | x | x | 304 | ||
| [106] | 2020 | A2C | Multi | PV/ESS/HVAC/Other | x | x | 112 | ||
| [107] | 2021 | TD3 | Single | PV/ESS/HVAC/EVs | x | x | 39 | ||
| [108] | 2021 | DDPG | Single | PV/ESS/HVAC | x | x | 56 | ||
| [109] | 2021 | - | Multi | PV/ESS/HVAC | x | x | 16 | ||
| [110] | 2021 | SAC | Single | PV/ESS/TSS/HVAC/DHW | x | x | 69 | ||
| [111] | 2021 | SAC | Single | PV/HVAC/TSS | x | x | 72 | ||
| [112] | 2022 | TD3 | Single | PV/ESS/BIO | x | x | 46 | ||
| [113] | 2022 | DDPG | Single | PV/SWH/ESS/HVAC/TSS | x | x | 25 | ||
| [114] | 2022 | SAC | Multi | PV/SWH/ESS | x | x | x | 26 | |
| [115] | 2023 | SAC | Multi | PV/ESS | x | x | 21 | ||
| [116] | 2023 | DDPG | Multi | PV/ESS/HVAC | x | x | x | 46 | |
| [117] | 2023 | SAC | Multi | PV/ESS/DHW/HVAC | x | x | x | 35 | |
| [118] | 2023 | TD3 | Single | PV/WT/ESS | x | x | 10 | ||
| [119] | 2024 | SAC | Single | PV/ESS/EVs | x | x | x | 12 |
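
TD3, used in [107], [112], and [118], differs from DDPG mainly in how it builds the critic target. It takes the minimum of two target critics (clipped double-Q) and adds clipped Gaussian noise to the target action (target policy smoothing). The sketch below shows only that target computation in a framework-agnostic way. `actor_target`, `q1_target`, and `q2_target` are hypothetical callables standing in for the target networks. The default hyperparameters are the commonly used TD3 settings, and the action bounds are assumed to be normalized to [-1, 1].

```python
import numpy as np

def td3_target(r, s_next, done, actor_target, q1_target, q2_target,
               gamma=0.99, policy_noise=0.2, noise_clip=0.5,
               a_low=-1.0, a_high=1.0, rng=None):
    """Critic regression target y = r + gamma * (1 - done) * min(Q1', Q2')."""
    rng = np.random.default_rng() if rng is None else rng
    a_next = actor_target(s_next)
    # Target policy smoothing: clipped noise on the target action.
    noise = np.clip(rng.normal(0.0, policy_noise, size=np.shape(a_next)),
                    -noise_clip, noise_clip)
    a_next = np.clip(a_next + noise, a_low, a_high)
    # Clipped double-Q: the smaller estimate curbs overestimation bias.
    q_next = np.minimum(q1_target(s_next, a_next), q2_target(s_next, a_next))
    return r + gamma * (1.0 - done) * q_next

# Toy usage with hypothetical linear "networks" and noise switched off.
y = td3_target(1.0, 1.0, 0.0,
               actor_target=lambda s: 0.5 * s,
               q1_target=lambda s, a: s + a,
               q2_target=lambda s, a: s + 2 * a,
               gamma=0.9, policy_noise=0.0)
```

A complete TD3 agent also delays actor and target-network updates relative to critic updates. That part is omitted here.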

| Ref. | Year | Method | Agent | BEMS | Residential | Commercial | Simulation | Real-Life | Citations |
|---|---|---|---|---|---|---|---|---|---|
| [120] | 2016 | HRL/ACO | Single | PV/DHW/HVAC | x | x | x | 69 | |
| [121] | 2018 | QL/FLC | Multi | PV/ESS/Other | x | x | 179 | ||
| [122] | 2019 | Monte Carlo/ADP | Multi | PV/ESS | x | x | 10 | ||
| [123] | 2020 | DQN/TD3 | Multi | PV/ESS/BIO | x | x | 21 | ||
| [124] | 2021 | QL/ANN | Single | PV/ESS/Other | x | x | 14 | ||
| [125] | 2021 | SAC/RBC | Single | PV/TSS | x | x | 24 | ||
| [126] | 2022 | DQN/DDPG | Single | PV/ESS/HVAC/Other | x | x | 45 | ||
| [127] | 2022 | TD3/MPC | Single | PV/Other | x | x | 11 | ||
| [128] | 2023 | QL/FLC | Single | PV/ESS/EVs/Other | x | x | 12 | ||
| [129] | 2023 | DDPG/RBC | Single | PV/ESS | x | x | 26 | ||
| [130] | 2024 | QL/ANN | Multi | PV/ESS/EVs | x | x | 12 | ||
| [131] | 2024 | PPO/IL | Multi | PV/ESS/HVAC | x | x | 15 |
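
Several hybrids above pair an RL agent with a rule-based controller (RBC) or fuzzy logic controller (FLC). The reviewed papers combine the two in different ways. One generic pattern is a rule-based safety layer that clips the agent's battery set-point so that rating and state-of-charge limits are never violated. It is shown here only as an assumption, not as the method of any specific study. The function name, the sign convention (positive = charging), the default limits, and the lossless SoC model are all hypothetical simplifications.

```python
def rbc_safety_filter(p_batt_kw, soc, capacity_kwh, dt_h=1.0,
                      soc_min=0.1, soc_max=0.9, p_max_kw=5.0):
    """Clip an RL battery set-point so the next SoC stays within [soc_min, soc_max].

    Positive power charges the battery, negative power discharges it
    (assumed convention). Conversion losses are ignored in this sketch.
    """
    # Rule 1: respect the converter power rating.
    p = max(-p_max_kw, min(p_max_kw, p_batt_kw))
    # Rule 2: respect the energy headroom in both directions over one step.
    p_charge_max = (soc_max - soc) * capacity_kwh / dt_h
    p_discharge_max = (soc - soc_min) * capacity_kwh / dt_h
    return max(-p_discharge_max, min(p_charge_max, p))

# The agent asks for 3 kW of charging with only 0.5 kWh of headroom left.
safe_p = rbc_safety_filter(3.0, soc=0.85, capacity_kwh=10.0)
```

Because the filter only acts at execution time, the agent can still learn freely while the rules guarantee that the physical limits are respected.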

| Ref. | Year | Method | Agent | BEMS | Residential | Commercial | Simulation | Real-Life | Citations |
|---|---|---|---|---|---|---|---|---|---|
| [132] | 2017 | Bayesian RL | Multi | PV/ESS/WT/SWH/HVAC | x | x | 37 | ||
| [133] | 2022 | MCTS | Multi | PV/ESS/WT/GHP | x | x | 23 | ||
| [134] | 2022 | MCTS | Multi | PV/ESS/WT/GHP | x | x | 116 |
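
The MCTS-based studies [133] and [134] plan by growing a search tree over candidate operating decisions. The most widely used tree policy for choosing which branch to expand is UCT, which applies the UCB1 bandit rule at each node by adding an exploration bonus to a child's mean return. Whether [133] and [134] use exactly this rule is not stated here. The sketch below, with hypothetical action labels, illustrates only the selection step.

```python
import math

def uct_score(value_sum, visits, parent_visits, c=math.sqrt(2)):
    """UCB1 applied to a tree node: mean return plus an exploration bonus."""
    if visits == 0:
        return math.inf  # unvisited children are always tried first
    exploitation = value_sum / visits
    exploration = c * math.sqrt(math.log(parent_visits) / visits)
    return exploitation + exploration

def select_child(children, parent_visits, c=math.sqrt(2)):
    """children maps an action label to (value_sum, visits); return the best label."""
    return max(children, key=lambda a: uct_score(*children[a], parent_visits, c))

children = {"charge": (9.0, 3), "discharge": (2.0, 1), "idle": (0.0, 0)}
choice = select_child(children, parent_visits=4)  # "idle": it has never been visited
```

The constant `c` sets the exploration trade-off. With the default value, the higher-mean "charge" branch wins once every child has been visited. A larger `c` favors the less-visited "discharge" branch.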

| Author [Ref.] | Summary |
|---|---|
| Yang et al. [84] | MARL QL optimized a PV/T-GHP system with floor heating in a Swiss residence. RL learned online to maximize net thermal and electrical output, ensuring GHP compensation and optimal operation. Achieved 100% heat demand coverage (vs. 97% for RBC) and 10% higher PV/T energy capture. |
| Raju et al. [85] | Multi-agent QL scheduled PV/ESS operations in a university microgrid, coordinating battery storage for optimal grid interactions. The approach reduced grid reliance by 15–20%, improved energy balancing, and enhanced battery utilization over a 10-year horizon. |
| De Somer et al. [86] | Model-based RL (FQI with ERT regression) optimized DR in DHW buffers of PV-integrated homes. Real-life tests in 6 smart homes showed a 20% increase in PV self-consumption and reduced grid dependence by dynamically scheduling heating cycles. |
| Ebell et al. [87] | SARSA-ANN-based RL managed a PV/ESS system providing frequency containment reserve. The agent minimized grid imports while ensuring real-time compliance with demand fluctuations. Simulations showed a 7.8% reduction in grid reliance compared to RBC. |
| Kim et al. [89] | Single-agent QL control optimized PV/ESS/V2G scheduling in a university building, minimizing costs through ToU pricing strategies. Reduced daily energy costs by 20–25% and grid reliance by 15–30% by dynamically shifting charging/discharging cycles. |
| Remani et al. [88] | QL-based RL optimized real-time load scheduling for residential PV-powered homes. Stochastic PV modeling enabled adaptive scheduling, reducing energy costs from 735 units to 16.25 units and enhancing self-sufficiency. |
| Prasad et al. [90] | Multi-agent DQN optimized peer-to-peer energy sharing in a PV/ESS residential community, with agents learning when to store, lend, or borrow power. Simulations showed 40–60 kWh lower grid reliance per household, achieving near-zero energy status. |
| Xu et al. [91] | A multi-agent QL-based HEMS integrated PV, HVAC, LS, EVs, and shiftable loads, with an Extreme Learning Machine predicting PV output and prices. Cost reductions reached 44.6%, and dynamic scheduling enhanced system flexibility. |
| Correa et al. [92] | Tabular QL optimized SWH scheduling in a university building with solar thermal collectors and heat pumps (HP). TRNSYS simulations revealed a 21% efficiency boost under low solar conditions, optimizing demand-side response. |
| Chen et al. [93] | Multi-objective QL optimized DR for PV/ESS smart homes, balancing cost against user satisfaction via dual Q-tables. Achieved 8.44% cost savings and a 1.37% increase in user satisfaction. |
| Lissa et al. [94] | A QL-based HEMS with ANN-approximated Q-values optimized a PV/ESS/DHW system. Dynamic setpoint adjustments improved PV self-consumption (+9.5%), energy savings (+16%), and load shifting (+10.2%). |
| Raman et al. [95] | Zap QL optimized PV/ESS scheduling during grid outages to ensure resilience. Computation time dropped to 0.14 ms (vs. 62.47 s for MPC) while maintaining 100% reliability for critical loads in disaster scenarios. |
| Heidari et al. [96] | DDQN optimized a PV/HP/DHW framework, incorporating Legionella control and user comfort constraints. Swiss home trials reported 7–60% energy savings over RBC while maintaining hygiene and adaptability. |
| Lu et al. [97] | MARL DQN optimized residential appliance scheduling with PV. Individual agents learned cooperative control policies, reducing costs by 30% and peak demand by 18.2% and improving punctuality by 37.3%. |
| Shen et al. [98] | D3QN-based MARL optimized PV/WT/GHP systems in office buildings. Prioritized experience replay and feasible action screening reduced discomfort by 84%, cut unconsumed renewable energy by 43%, and lowered energy costs by 8%. |
| Wang et al. [99] | The QL-based BEEL method optimized DHW/PV/BIO interactions in a multi-zone building, enhancing control over heating, cooling, and storage and reducing heating demand by 26% and cooling energy by 15%. |
| Cordeiro et al. [100] | DQN/SNN control optimized a PV/ESS framework in a university setting, boosting self-sufficiency (41.39% → 52.92%), reducing grid reliance (−17.85%), and achieving EUR 25,000 in annual savings with an 8.56 kg CO2 reduction. |
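
Most value-based studies in the table above (e.g., Raju et al. [85], Kim et al. [89], Remani et al. [88]) build on the tabular Q-learning update Q(s,a) ← Q(s,a) + α[r + γ·max_a′ Q(s′,a′) − Q(s,a)]. The following is a minimal sketch of that update on a toy single-battery PV/ESS scheduling problem under ToU prices. The 24-hour PV, load, and price profiles, the 5 kWh battery discretized in 1 kWh steps, and the import-cost reward are all hypothetical assumptions, not data or settings from any cited study.

```python
import random

# Hypothetical hourly profiles for one day (illustrative values, not from any cited study).
PV = [0.0] * 6 + [0.5, 1.5, 2.5, 3.5, 4.0, 4.5, 4.5, 4.0, 3.5, 2.5, 1.5, 0.5] + [0.0] * 6  # kWh
LOAD = [1.0] * 7 + [1.5] * 10 + [3.0] * 4 + [1.5] * 3                                      # kWh
PRICE = [0.10] * 7 + [0.20] * 10 + [0.40] * 4 + [0.10] * 3                                 # per kWh (ToU)

SOC_LEVELS = 6          # battery SOC discretized to 0..5 kWh
ACTIONS = (-1, 0, 1)    # discharge 1 kWh, idle, charge 1 kWh per hour


def step(hour, soc, action):
    """Apply one battery action; the reward is the negative cost of grid imports."""
    new_soc = min(max(soc + action, 0), SOC_LEVELS - 1)  # respect SOC limits
    net = LOAD[hour] + (new_soc - soc) - PV[hour]        # > 0 means importing from the grid
    return new_soc, -PRICE[hour] * max(net, 0.0)         # exports are unpaid in this toy model


def greedy(q, hour, soc):
    """Index of the action with the highest Q-value in (hour, soc)."""
    return max(range(len(ACTIONS)), key=lambda i: q[(hour, soc)][i])


def train(episodes=5000, alpha=0.1, gamma=0.99, eps=0.1, seed=0):
    rng = random.Random(seed)
    q = {(h, s): [0.0] * len(ACTIONS) for h in range(24) for s in range(SOC_LEVELS)}
    for _ in range(episodes):
        soc = 0
        for h in range(24):
            # Epsilon-greedy exploration
            a = rng.randrange(len(ACTIONS)) if rng.random() < eps else greedy(q, h, soc)
            nxt, r = step(h, soc, ACTIONS[a])
            # Q-learning target; the day ends after hour 23, so no bootstrapping there
            target = r if h == 23 else r + gamma * max(q[(h + 1, nxt)])
            q[(h, soc)][a] += alpha * (target - q[(h, soc)][a])
            soc = nxt
    return q


def daily_cost(policy):
    """Grid import cost of one day when policy(hour, soc) returns an action index."""
    soc, cost = 0, 0.0
    for h in range(24):
        soc, r = step(h, soc, ACTIONS[policy(h, soc)])
        cost -= r
    return cost
```

Usage: after `q = train()`, `daily_cost(lambda h, s: greedy(q, h, s))` gives the learned policy's daily import cost, which can be compared with the always-idle baseline `daily_cost(lambda h, s: 1)`. A lookup table is workable here only because the state space is 24 × 6; the DQN-family methods in the table replace it with a neural approximator for larger or continuous state spaces.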

| Author [Ref.] | Summary |
|---|---|
| Mocanu et al. [101] | Proposed energy management for PV-integrated residences using DPG and DQN. Trained on Pecan Street data, the model optimized real-time energy consumption and costs, achieving a 26.3% peak demand reduction, 27.4% energy cost savings, and improved PV utilization. |
| Vasquez et al. [102] | MARLISA MARL optimized urban energy systems with PV, TSS, HPs, and heaters. MARLISA reduced daily peak demand by 15% and ramping by 35% and increased the load factor by 10%. Combining MARLISA with RBC accelerated convergence within 2 years. |
| Chen et al. [103] | The PPO-based PROF method integrated ANN projection layers to enforce convex constraints in energy optimization. Applied to radiant heating on Carnegie Mellon’s campus, it enhanced thermal comfort and reduced energy use by 4%. |
| Jung et al. [104] | A GIS-PPO RL model for optimal rooftop PV planning in South Korea simulated 10,000 economic scenarios, optimizing panel allocation under future uncertainties. It achieved a USD 539,197 profit, outperforming RBC and GA by 4.4%, while reducing global warming potential by 91.8%. |
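
Chen et al. [103] and Jung et al. [104] both build on PPO, whose central ingredient is the clipped surrogate objective L = mean_t[min(ρ_t·A_t, clip(ρ_t, 1−ε, 1+ε)·A_t)], where ρ_t is the probability ratio between the new and old policies and A_t is the advantage estimate. The sketch below only evaluates that objective for an already-collected batch; it is a generic illustration of PPO-Clip, not the PROF or GIS-PPO implementation, and ε = 0.2 is merely a commonly used default assumed here.

```python
import math
from typing import Sequence


def ppo_clip_objective(logp_new: Sequence[float], logp_old: Sequence[float],
                       advantages: Sequence[float], eps: float = 0.2) -> float:
    """Mean PPO-Clip surrogate objective (to be maximized) over a batch of samples."""
    if not advantages or not (len(logp_new) == len(logp_old) == len(advantages)):
        raise ValueError("inputs must be non-empty and of equal length")
    total = 0.0
    for lp_new, lp_old, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(lp_new - lp_old)                  # pi_new(a|s) / pi_old(a|s)
        clipped = min(max(ratio, 1.0 - eps), 1.0 + eps)    # ratio clipped to [1-eps, 1+eps]
        # Pessimistic bound: keep the smaller of the unclipped and clipped terms
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)
```

In practice the same expression is written in an autodiff framework so that its gradient with respect to the policy parameters can be taken; the clipping removes the incentive to push ρ_t far outside [1−ε, 1+ε] in a single update.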

| Author [Ref.] | Summary |
|---|---|
| Yu et al. [105] | DDPG optimized HVAC scheduling and ESS management in a residence with integrated PV. The method handled thermal inertia and stochastic DR to minimize energy costs while ensuring comfort. Simulations using real-world data showed 8.10–15.21% lower costs than RBC. |
| Lee et al. [106] | Multi-agent A2C optimized PV-ESS and appliance scheduling across multiple residences. Each household trained locally and periodically updated a global model to improve efficiency. The approach reduced electricity costs while maintaining comfort and accelerated learning convergence. |
| Ye et al. [107] | TD3-based DR optimized a BEMS integrating PV, ESS, HVAC, and EVs with V2G. The algorithm addressed uncertainties in solar power generation and non-shiftable loads, achieving 12.45% and 5.93% lower energy costs than DQN and DPG, respectively, on real-world datasets. |
| Touzani et al. [108] | Developed a DDPG-based controller for DER management in a commercial building equipped with PV, ESS, and HVAC. Trained on synthetic data and deployed in FLEXLAB, the system demonstrated energy savings through optimized scheduling of RES and load shifting. |
| Lee et al. [109] | Proposed a hierarchical framework with federated learning to optimize shared ESS and HVAC scheduling in smart buildings. The approach preserved privacy by sharing only abstracted model parameters while reducing HVAC consumption by 24–32% and electricity costs by 18.6–20.6%. |
| Pinto et al. [110] | Proposed a centralized SAC for HVAC and TSS management in commercial buildings (offices, retail, and restaurant). The model balanced peak demand reduction, operational cost savings (4% reduction), and load profile smoothing (12% peak decrease, 6% improved load uniformity). |
| Pinto et al. [111] | Used SAC for HP and TSS coordination, integrating LSTM-based indoor temperature predictions. The approach optimized grid interaction and storage utilization, reducing peak demand by 23% and the Peak-to-Average Ratio (PAR) by 20%, with 20% faster training. |
| Gao et al. [112] | Investigated TD3 and DDPG for optimizing off-grid hybrid RES systems in a Japanese office building. The model ensured safe battery operation and grid stability, with TD3 outperforming DDPG by reducing the hourly grid power error to below 2 kWh and increasing battery safety by 7.72 h. |
| Langer et al. [113] | Examined DDPG for home energy management with PV, a modulating air-to-water HP, ESS, and TSS. The model transitioned from MILP to DRL, leveraging domain knowledge for stable learning. Simulations in Germany demonstrated 75% self-sufficiency and 39.6% cost savings over RBC. |
| Pinto et al. [114] | Developed a MARL-based SAC model for district energy management (three residential buildings and a restaurant). The decentralized approach optimized PV self-consumption and thermal storage, reducing energy costs by 7% and peak demand by 14% compared to RBC. |
| Qiu et al. [116] | Proposed a federated multi-agent DDPG approach for joint peer-to-peer energy and carbon trading in mixed-use buildings (residential, commercial, industrial). The Fed-JPC model reduced total energy and environmental costs by 5.87% and 8.02%, respectively, while ensuring data privacy. |
| Nweye et al. [115] | Introduced the MERLIN framework, using SAC for distributed PV-battery optimization in 17 zero-net-energy homes. Combining real-world smart meter data and simulations, it reduced training data needs by 50%, improved ramping by 60%, and lowered peak load by 35%. |
| Xie et al. [117] | Applied a MARL SAC framework with an attention mechanism for DR in grid-interactive buildings, controlling PV, ESS, and DHW in residential, office, and academic buildings. Achieved a 6–8% reduction in net demand and USD 92,158 in annual electricity savings at Cornell University. |
| Qiu et al. [118] | Proposed a TD3-based RL approach with dynamic noise balancing to optimize PV, wind, ESS, and hydrogen-based energy systems in commercial buildings. The model reduced operating costs by 18.46%, eliminated RES curtailment, and achieved higher accuracy than traditional methods. |
| Deng et al. [119] | Developed the DC-RL model, integrating a distributed DC energy system with SAC to optimize PV, ESS, EVs, and flexible loads in residential and office buildings. Increased PV self-consumption by 38% and user satisfaction by 9%, raised PV self-sufficiency to 93%, and reduced ESS reliance by 33%. |
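
Several actor-critic studies above (Ye et al. [107], Gao et al. [112], Qiu et al. [118]) use TD3, which modifies the DDPG critic target with clipped double-Q learning and target policy smoothing. A minimal sketch of that target computation follows, assuming a scalar action bounded to [−1, 1] and plain Python callables standing in for the target actor and the two target critics (hypothetical placeholders for neural networks). The noise settings 0.2 and 0.5 are common defaults, not values reported by the cited works.

```python
import random
from typing import Callable, Optional


def td3_target(reward: float, next_state, done: bool,
               actor_target: Callable, critic1_target: Callable, critic2_target: Callable,
               gamma: float = 0.99, noise_std: float = 0.2, noise_clip: float = 0.5,
               a_low: float = -1.0, a_high: float = 1.0,
               rng: Optional[random.Random] = None) -> float:
    """Bellman target y used to train both TD3 critics (scalar action for brevity)."""
    rng = rng or random.Random()
    # Target policy smoothing: add clipped Gaussian noise to the target actor's action
    noise = max(-noise_clip, min(noise_clip, rng.gauss(0.0, noise_std)))
    next_action = max(a_low, min(a_high, actor_target(next_state) + noise))
    # Clipped double-Q learning: take the smaller of the two target critics
    q_next = min(critic1_target(next_state, next_action),
                 critic2_target(next_state, next_action))
    # No bootstrapping past a terminal transition
    return reward + gamma * (1.0 - float(done)) * q_next
```

Taking the minimum of two critics counters the Q-value overestimation that DDPG is prone to, which is one commonly cited reason for TD3's advantage over DDPG, as in the comparison by Gao et al. [112].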

| Author [Ref.] | Summary |
|---|---|
| Kazmi et al. [120] | A hybrid approach combining RL, heuristics, and ACO optimized DHW production in 46 NZEBs in the Netherlands, integrating PV and ASHPs. Simulations showed 27% energy savings, while real-world tests yielded 61 kWh of savings over 3.5 months. |
| Kofinas et al. [121] | Proposed a MARL system using fuzzy QL to control a microgrid with PV, a diesel generator, a fuel cell, ESS, and an electrolyzer, with each device’s RL agent optimizing the energy balance independently. The system achieved 1.54% uncovered energy, lower diesel usage (0.87%), and fewer ESS discharges. |
| Chasparis et al. [122] | Developed a hierarchical ADP-based RL model in which an aggregator controlled residential PV/ESS systems for energy bidding, using Monte Carlo Least Squares ADP for flexibility forecasting. Real-world simulations in Austria (30 homes) improved revenues over 10 test days. |
| Gao et al. [123] | Employed a MARL framework using TD3 (continuous actions) and DQN (discrete actions) for off-grid energy optimization in a Japanese office building with PV, BIO, and ESS. Achieved a 64.93% improvement in off-grid operation and an 84% reduction in unsafe battery runtime. |
| Ashenov et al. [124] | Integrated ANNs for forecasting and QL for load scheduling in a HEMS with PV, ESS, and smart plugs, distinguishing non-shiftable from shiftable loads and dynamically optimizing scheduling. Achieved a 24% cost reduction and a 15% profit increase in a single-home case study. |
| Deltetto et al. [125] | Combined SAC with RBC for demand response in small commercial buildings (office, retail, restaurant) with PV. SAC alone reduced costs by 9% and energy use by 7% and improved peak shaving by 4%, but violated DR constraints; the hybrid SAC-RBC balanced cost and DR compliance. |
| Huang et al. [126] | Proposed a mixed DRL model integrating DQN and DDPG for discrete-continuous action spaces in HEMS (PV, ESS, HVAC, appliances). A safe mixed DRL version mitigated comfort violations. Achieved a 25.8% cost reduction and significant thermal comfort improvements. |
| Nicola et al. [127] | Combined Synergetic (SYN) and Sliding Mode Control (SMC) with a TD3 RL agent for MPPT in a 100 kW PV-grid system. The RL agent stabilized the DC-link voltage, reducing steady-state error (<0.02%) and overshoot under 30% load variations. |
| Almughram et al. [128] | Developed RL-HCPV, using fuzzy QL and deep learning (ANN, LSTM) for predictive PV generation, EV SOC, and energy trading in smart homes with V2H. Reduced grid reliance by 38% (sunny days) and 24% (cloudy days), cutting electricity bills to USD 3.92/day. |
| Zhou et al. [129] | Integrated DDPG with a rule-based expert system for energy flexibility in a net-zero energy office building with PV and ESS, using CART to analyze external influences. Achieved a 7% cost reduction, a 9.2% increase in PV self-consumption, and 10.6% lower grid reliance. |
| Binyamin et al. [130] | Combined multi-agent QL and ANN for smart home P2P energy trading with PV, ESS, and EVs, optimizing load scheduling, storage, and trading under real-time pricing. Achieved 9.3–16.07% household reward improvements and cost reductions under varying solar penetration. |
| Wang et al. [131] | Proposed MAPPO and Imitation Learning for scalable hybrid energy management (PV, ESS, HVAC) in ZEHs. Centralized training with decentralized execution improved PV self-consumption (18.47%) and energy self-sufficiency (46.10%) while ensuring thermal comfort. |
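
Hybrid schemes such as the SAC-RBC controller of Deltetto et al. [125] and the DDPG plus rule-based expert system of Zhou et al. [129] keep a rule layer around the learned policy. The sketch below illustrates one common pattern, a rule-based supervisor that filters an RL battery set-point before it is applied. The three rules, the `BatteryState` fields, and the 10% minimum SOC are hypothetical assumptions, not the rule sets used in the cited studies.

```python
from dataclasses import dataclass


@dataclass
class BatteryState:
    soc_kwh: float        # current state of charge
    capacity_kwh: float   # usable capacity
    max_power_kw: float   # inverter power rating


def rbc_supervisor(rl_power_kw: float, state: BatteryState, dr_event: bool,
                   dt_h: float = 1.0, soc_min_frac: float = 0.1) -> float:
    """Filter an RL battery set-point (positive = charge) through hypothetical rules.

    Rule 1: never exceed the inverter power rating.
    Rule 2: during a demand-response event, forbid charging (no extra grid draw).
    Rule 3: keep the SOC within [soc_min_frac * capacity, capacity] over the step.
    """
    p = max(-state.max_power_kw, min(state.max_power_kw, rl_power_kw))   # Rule 1
    if dr_event:
        p = min(p, 0.0)                                                   # Rule 2
    soc_min = soc_min_frac * state.capacity_kwh
    max_charge = max(0.0, (state.capacity_kwh - state.soc_kwh) / dt_h)
    max_discharge = max(0.0, (state.soc_kwh - soc_min) / dt_h)
    return max(-max_discharge, min(max_charge, p))                        # Rule 3
```

Because the supervisor only clips the RL proposal, the agent still pursues cost savings in normal operation, while SOC limits and the no-charging rule during DR events hold by construction; this is one way to address the DR constraint violations that Deltetto et al. [125] observed with SAC alone.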

| Author [Ref.] | Summary |
|---|---|
| Anvari et al. [132] | Developed an ontology-driven multi-agent system for energy management in residential microgrids integrating buildings, RES, and controllable loads. The system used Bayesian RL for real-time battery bank optimization, managing PV, WT, DHW, radiant floor systems, and micro-CHP. Achieved 5–13% cost reductions, improved occupant comfort, and enhanced system resilience under dynamic pricing schemes. |
| Tomin et al. [133] | Proposed a multi-criteria approach for designing and managing renewable energy communities using bi-level programming and RL, namely the Monte Carlo Tree Search (MCTS) approach. Optimized the operation of PV, WT, biomass gasifiers, and storage in remote Japanese villages. Results showed 75% reductions in electricity tariff costs, higher RES penetration, and balanced economic-environmental benefits. |
| Tomin et al. [134] | Applied bi-level optimization with RL (MCTS) for designing and managing community microgrids in Siberian settlements. Integrated PV, WT, biomass gasifiers, and storage, optimizing energy market operations. Demonstrated a 20–40% LCOE reduction, improved electricity reliability, and operational flexibility. |
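
Tomin et al. [133] and [134] rely on MCTS. In standard MCTS variants, the tree-policy step that trades off exploring untried design or operating options against exploiting promising ones is UCT selection, which scores each child by its mean value plus c·sqrt(ln N / n), with N the parent's and n the child's visit count. The following minimal sketch shows only that selection rule; the (value_sum, visits) tuple representation, the function names, and c = √2 are assumptions, and it is not the cited authors' implementation.

```python
import math
from typing import List, Tuple


def uct_score(value_sum: float, visits: int, parent_visits: int,
              c: float = math.sqrt(2)) -> float:
    """UCB1 score of one child node: mean value plus an exploration bonus."""
    if visits == 0:
        return math.inf   # unvisited children are always tried first
    exploit = value_sum / visits
    explore = c * math.sqrt(math.log(parent_visits) / visits)
    return exploit + explore


def select_child(children: List[Tuple[float, int]], parent_visits: int,
                 c: float = math.sqrt(2)) -> int:
    """Return the index of the child (value_sum, visits) with the highest UCT score."""
    return max(range(len(children)),
               key=lambda i: uct_score(children[i][0], children[i][1], parent_visits, c))
```

A larger c favors exploring rarely visited options, while a smaller c concentrates the search on branches that have already produced high returns.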

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Michailidis, P.; Michailidis, I.; Kosmatopoulos, E. Reinforcement Learning for Optimizing Renewable Energy Utilization in Buildings: A Review on Applications and Innovations. Energies 2025, 18, 1724. https://doi.org/10.3390/en18071724

Michailidis P, Michailidis I, Kosmatopoulos E. Reinforcement Learning for Optimizing Renewable Energy Utilization in Buildings: A Review on Applications and Innovations. Energies. 2025; 18(7):1724. https://doi.org/10.3390/en18071724

Chicago/Turabian Style: Michailidis, Panagiotis, Iakovos Michailidis, and Elias Kosmatopoulos. 2025. "Reinforcement Learning for Optimizing Renewable Energy Utilization in Buildings: A Review on Applications and Innovations" Energies 18, no. 7: 1724. https://doi.org/10.3390/en18071724