A Novel Camera-Based Measurement System for Roughness Determination of Concrete Surfaces

Abstract

1. Introduction

- Fully digital measurement system with reproducible results.

- Contactless and area-based measurement.

- Deployable on construction sites and highly mobile.

- Applicable to arbitrarily oriented surfaces.

- Easy to use.

- Lightweight.

- Low-cost.

2. State of the Art and Related Work

3. Theoretical Background

3.1. Defining Roughness

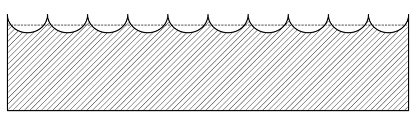

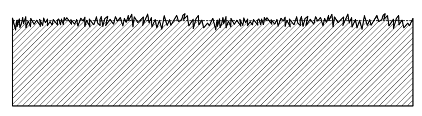

3.1.1. Shape Deviations

3.1.2. Parameters

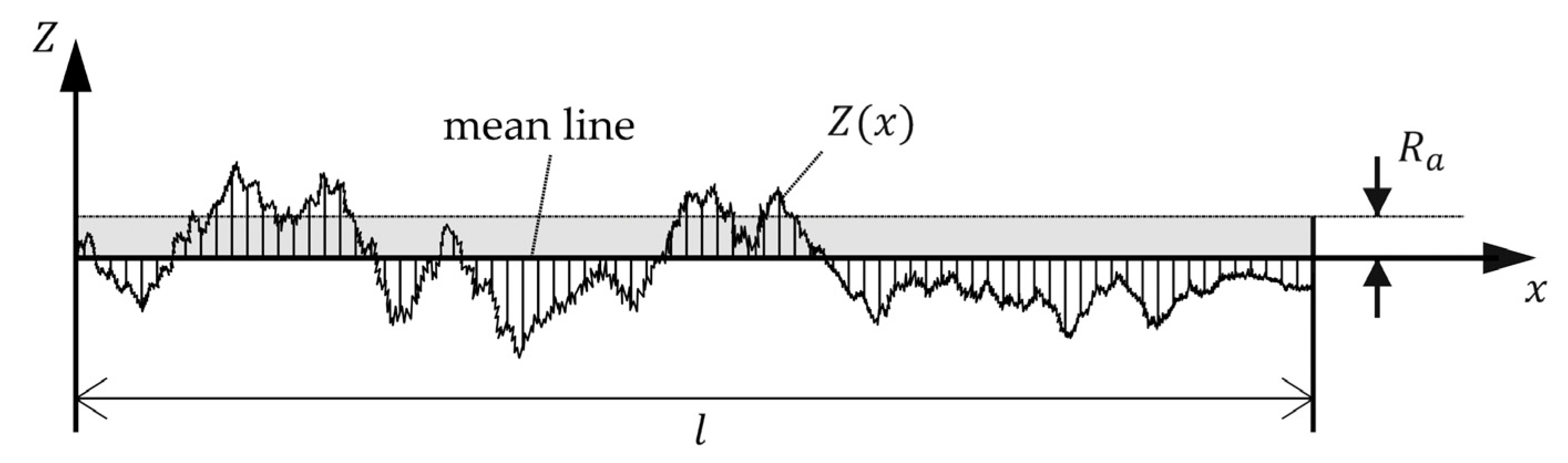

Arithmetical Mean Deviation of the Assessed Profile (Ra)

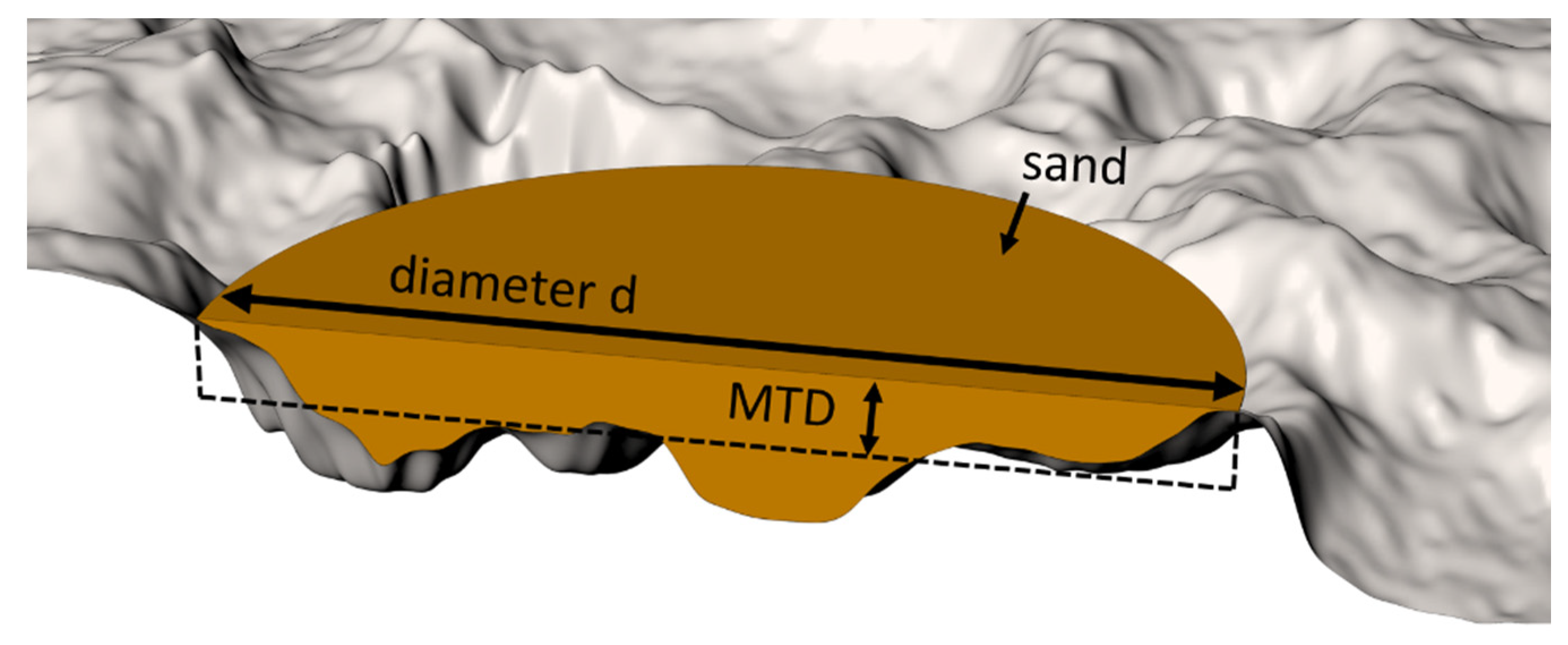
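
As a rough illustration of the parameter named above, the arithmetical mean deviation (commonly written Ra) is the mean absolute deviation of the profile heights from the mean line. The sketch below assumes equally spaced samples and uses the arithmetic mean of the heights as the mean line, which is a simplification of the filtered mean line used in the standards. The function name and the sample profile are hypothetical.

```python
def arithmetical_mean_deviation(heights):
    """Discrete Ra: mean absolute deviation of profile heights from their mean.

    Assumption: samples are equally spaced and the mean line is the plain
    arithmetic mean of the heights (no profile filtering is applied).
    """
    if not heights:
        raise ValueError("profile must contain at least one sample")
    mean_line = sum(heights) / len(heights)
    return sum(abs(z - mean_line) for z in heights) / len(heights)


# Hypothetical profile heights in millimetres.
profile_mm = [0.0, 1.0, 0.0, -1.0, 0.0, 1.0, 0.0, -1.0]
ra = arithmetical_mean_deviation(profile_mm)
print(f"Ra = {ra:.3f} mm")
```

For this synthetic profile the mean line is zero, so the result is simply the average of the absolute heights.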

Mean Texture Depth (MTD)
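
For orientation, the mean texture depth obtained with the sand patch method is usually computed as the applied sand volume divided by the area of the circular patch, MTD = 4V / (πD²). The sketch below assumes the volume is given in cubic millimetres and the patch diameter in millimetres. The function name and the example values are hypothetical and are not measurements from this study.

```python
import math


def mean_texture_depth(volume_mm3, diameter_mm):
    """Mean texture depth in mm: sand volume divided by circular patch area."""
    if volume_mm3 <= 0 or diameter_mm <= 0:
        raise ValueError("volume and diameter must be positive")
    patch_area_mm2 = math.pi * diameter_mm ** 2 / 4.0
    return volume_mm3 / patch_area_mm2


# Hypothetical example: 25 ml of sand (25,000 mm^3) spread to a 200 mm circle.
mtd = mean_texture_depth(25_000.0, 200.0)
print(f"MTD = {mtd:.3f} mm")
```

A larger patch diameter for the same volume means the sand fills shallower cavities, so the computed depth decreases with the square of the diameter.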

3.2. Digital Photogrammetry

3.2.1. Camera Model

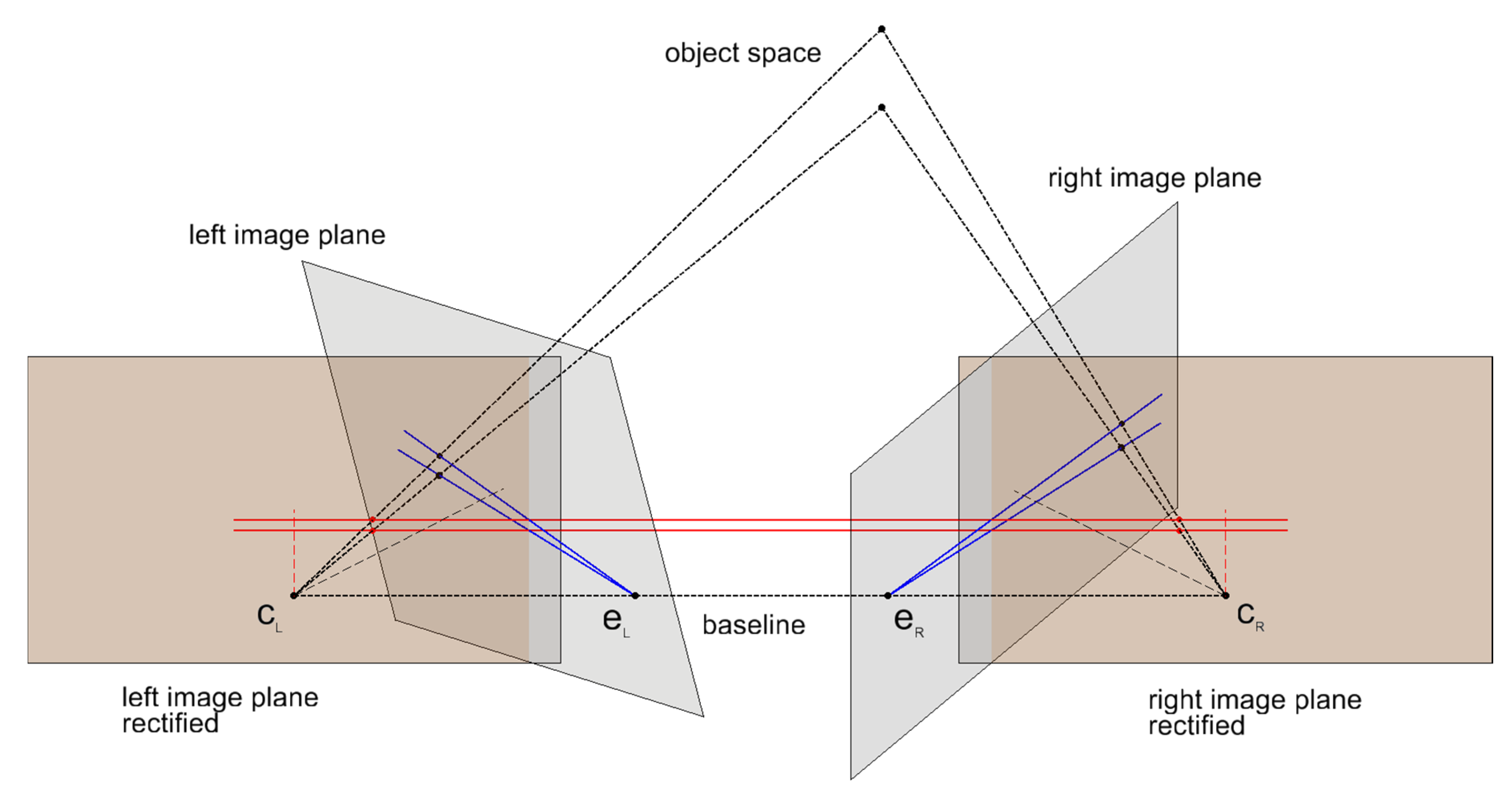
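
A minimal sketch of an ideal pinhole (central projection) camera model may help here. It assumes a camera looking along its positive z axis, a principal distance `c`, and a principal point `(x0, y0)`, and it omits lens distortion. Sign conventions differ between photogrammetry and computer vision texts, so this should be read as one possible convention rather than the one used in this work. All names and values are hypothetical.

```python
def project_point(point_world, rotation, camera_center, c, x0=0.0, y0=0.0):
    """Project a 3D point to image coordinates with an ideal pinhole model.

    rotation is a 3x3 matrix (list of rows) from world to camera axes and
    camera_center is the projection centre in world coordinates.
    """
    # Shift the point so the projection centre is the origin.
    shifted = [p - o for p, o in zip(point_world, camera_center)]
    # Rotate into the camera frame.
    xc, yc, zc = (sum(r * s for r, s in zip(row, shifted)) for row in rotation)
    if zc <= 0:
        raise ValueError("point lies behind the camera")
    # Central projection scaled by the principal distance.
    return (x0 + c * xc / zc, y0 + c * yc / zc)


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
# Hypothetical values: object point 200 mm in front of the camera, c = 8 mm.
x_img, y_img = project_point((10.0, 5.0, 200.0), identity, (0.0, 0.0, 0.0), c=8.0)
print(x_img, y_img)
```

In a calibrated system, distortion corrections would be applied to these ideal image coordinates before comparing them with measured ones.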

3.2.2. Epipolar Geometry
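
The epipolar constraint can be illustrated with a short sketch. For corresponding homogeneous image points x1 and x2 and a fundamental matrix F, the residual x2ᵀ F x1 should be zero. The example assumes an idealized rectified stereo pair (identity calibration, pure translation along the x axis), for which the constraint reduces to both points lying on the same image row. The matrix and the points are hypothetical.

```python
def epipolar_residual(fundamental, point1, point2):
    """Return x2^T F x1 for two image points given as (u, v) tuples."""
    x1 = (point1[0], point1[1], 1.0)
    x2 = (point2[0], point2[1], 1.0)
    # Epipolar line in image 2 that belongs to point1.
    line = [sum(f * x for f, x in zip(row, x1)) for row in fundamental]
    return sum(l * x for l, x in zip(line, x2))


# Fundamental matrix of an ideal rectified pair (translation along x only).
F_RECTIFIED = [
    [0.0, 0.0, 0.0],
    [0.0, 0.0, -1.0],
    [0.0, 1.0, 0.0],
]

same_row = epipolar_residual(F_RECTIFIED, (3.0, 2.0), (1.0, 2.0))
other_row = epipolar_residual(F_RECTIFIED, (3.0, 2.0), (1.0, 2.5))
print(same_row, other_row)
```

This is why matching in rectified images can be restricted to a one-dimensional search along the row.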

4. Measurement System

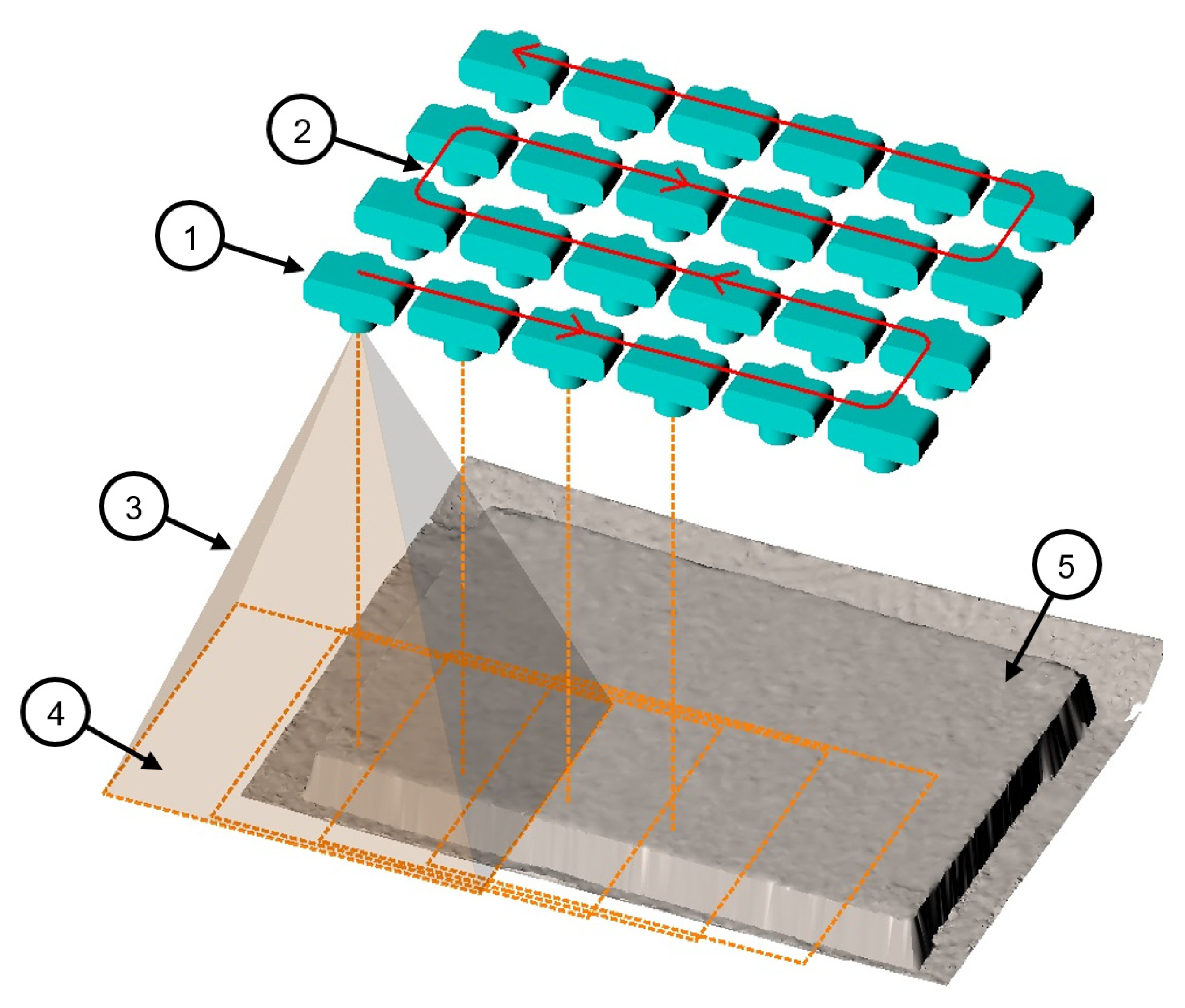

4.1. Concept for Image Capture

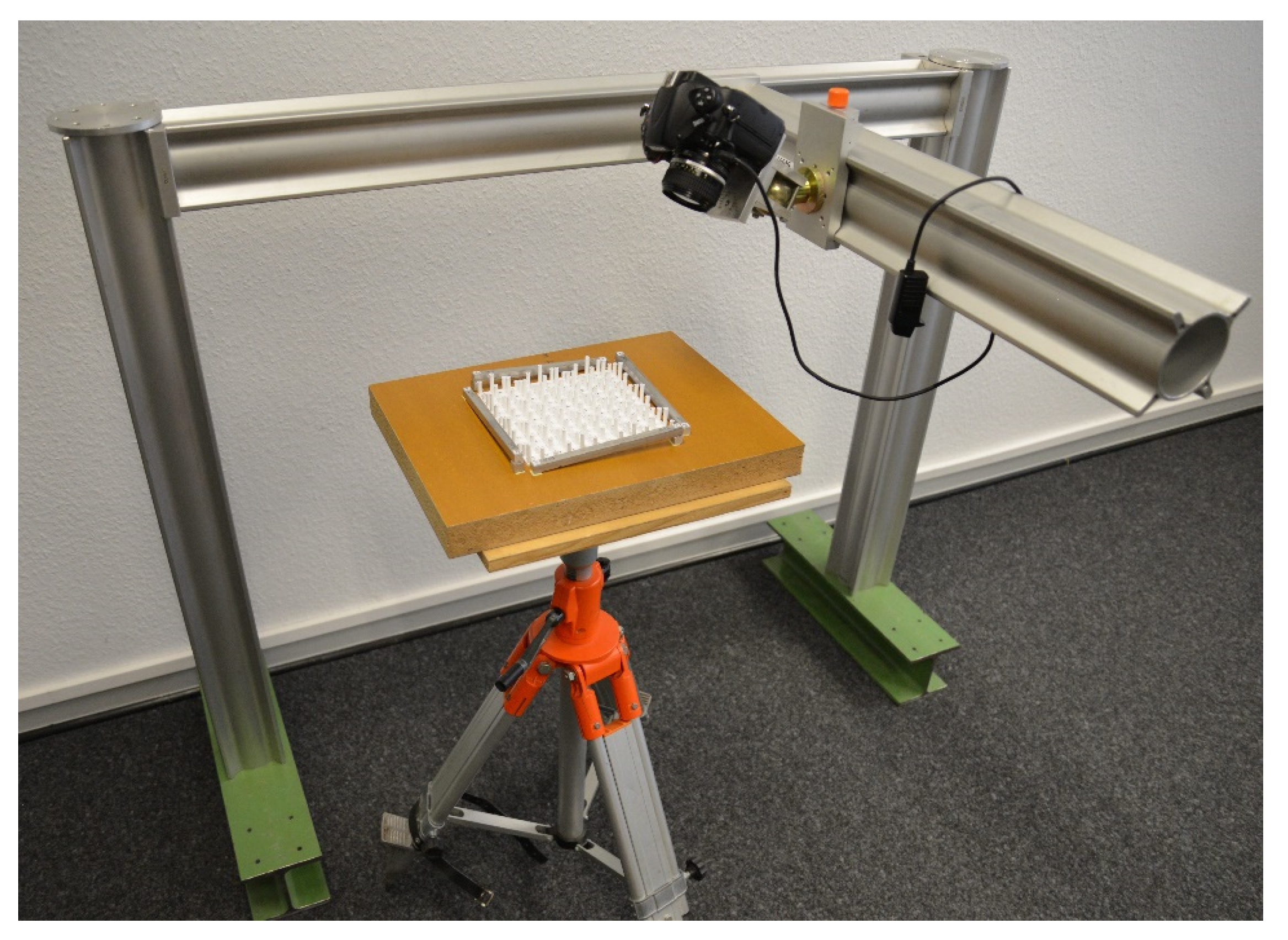

4.2. Apparatus

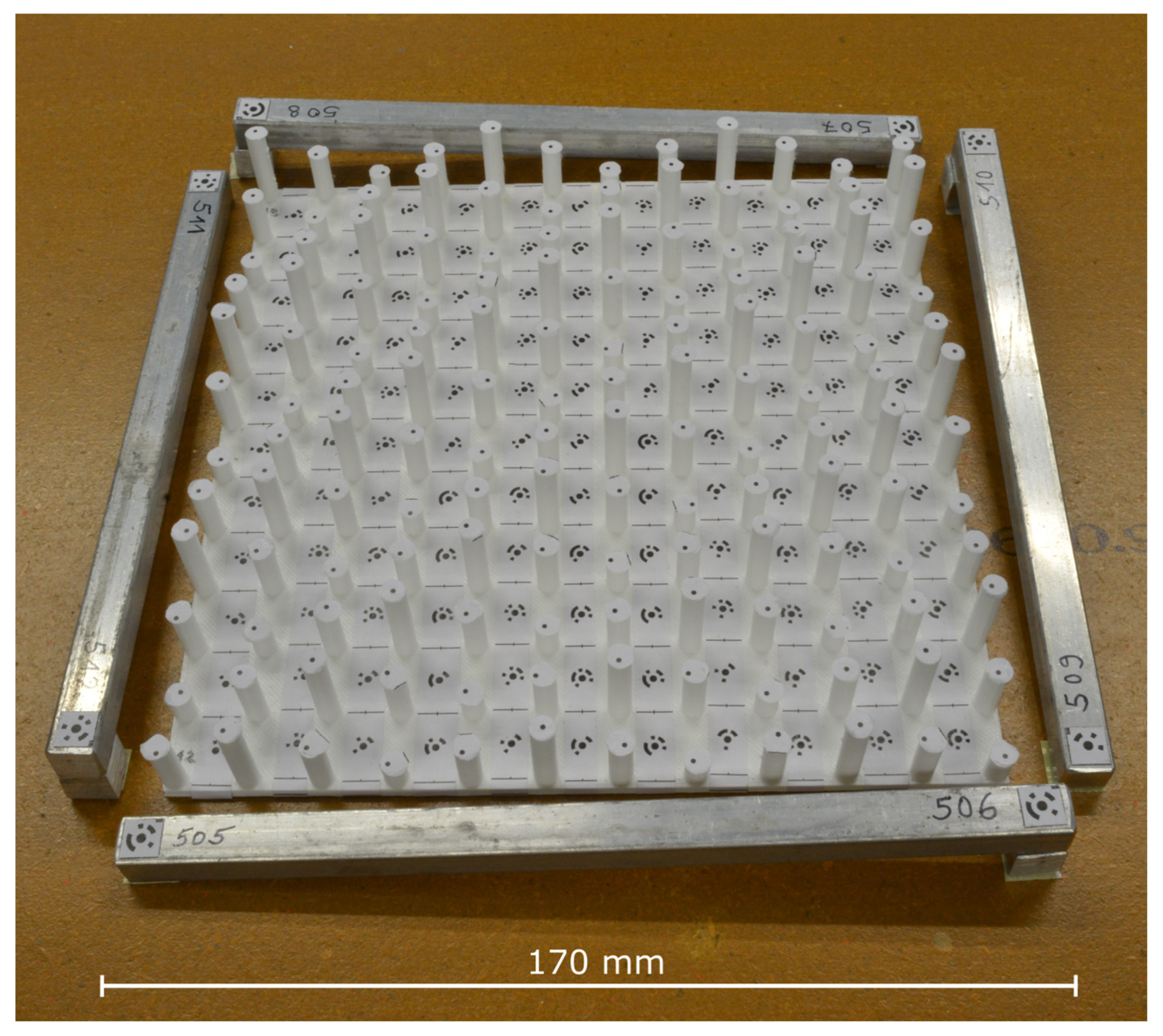

4.3. Custom-Built 3D Calibration Test-Field

5. Methodology

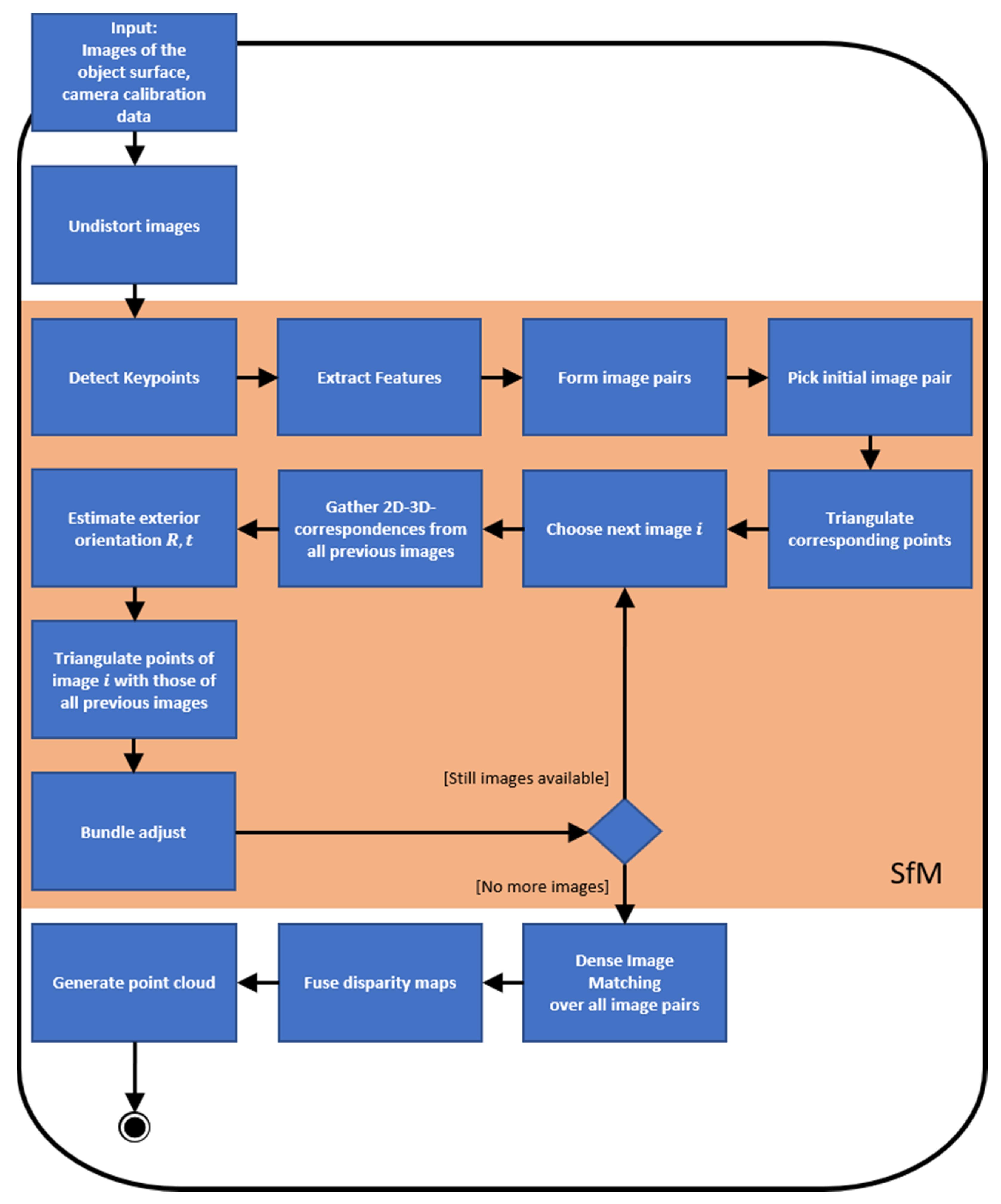

5.1. 3D Reconstruction Pipeline

5.1.1. Preprocessing

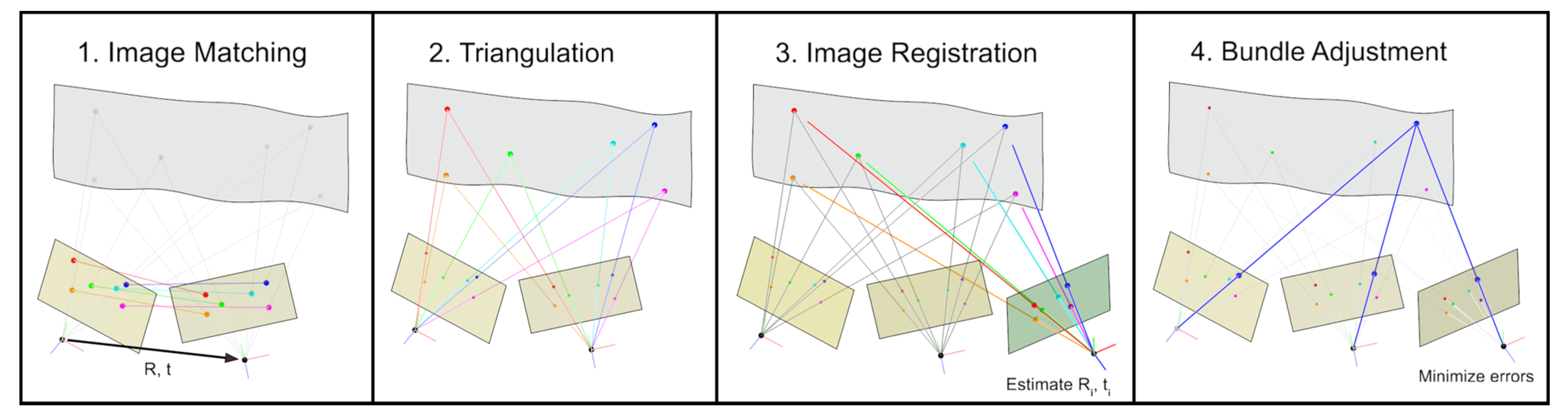

5.1.2. Structure from Motion

5.1.3. Dense Image Matching

- A. Cost Initialization

- B. Cost Aggregation

- C. Disparity Selection
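
The three steps listed above can be sketched on a single pair of scanlines. This is a simplified illustration, not the implementation used in this work: the matching cost is a plain absolute intensity difference (a stand-in for whatever cost is actually used), the aggregation follows only one path direction instead of several, and the penalties `p1` and `p2` as well as the test signal are hypothetical.

```python
def initial_costs(left, right, max_disp):
    """Step A: absolute intensity difference per pixel and disparity."""
    out_of_image = 255.0  # cost when the right pixel would fall outside
    costs = []
    for x in range(len(left)):
        row = []
        for d in range(max_disp + 1):
            row.append(abs(left[x] - right[x - d]) if x - d >= 0 else out_of_image)
        costs.append(row)
    return costs


def aggregate_left_to_right(costs, p1, p2):
    """Step B: aggregate costs along one path with smoothness penalties."""
    aggregated = [list(costs[0])]
    for x in range(1, len(costs)):
        prev = aggregated[-1]
        prev_min = min(prev)
        row = []
        for d, cost in enumerate(costs[x]):
            candidates = [prev[d], prev_min + p2]  # same disparity, large jump
            if d > 0:
                candidates.append(prev[d - 1] + p1)  # disparity change of one
            if d + 1 < len(prev):
                candidates.append(prev[d + 1] + p1)
            # Subtracting prev_min keeps the values bounded along the path.
            row.append(cost + min(candidates) - prev_min)
        aggregated.append(row)
    return aggregated


def select_disparities(cost_rows):
    """Step C: winner-takes-all, lowest cost per pixel (first one on ties)."""
    return [min(range(len(row)), key=row.__getitem__) for row in cost_rows]


# Hypothetical scanlines: the left signal equals the right one shifted by 2 px.
left = [10, 10, 50, 90, 50, 10, 10, 10]
right = [50, 90, 50, 10, 10, 10, 10, 10]

costs = initial_costs(left, right, max_disp=3)
raw = select_disparities(costs)
disparities = select_disparities(aggregate_left_to_right(costs, p1=5, p2=20))
print(raw)
print(disparities)
```

In the flat region at the end of the scanline the raw costs are ambiguous, whereas the aggregated costs carry the disparity found at the textured pixels forward, which is the purpose of the aggregation step.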

Disparity Map Fusion and Point Cloud Generation

5.2. Adapting Roughness Parameter to 3D Point Clouds

Arithmetical Mean Deviation

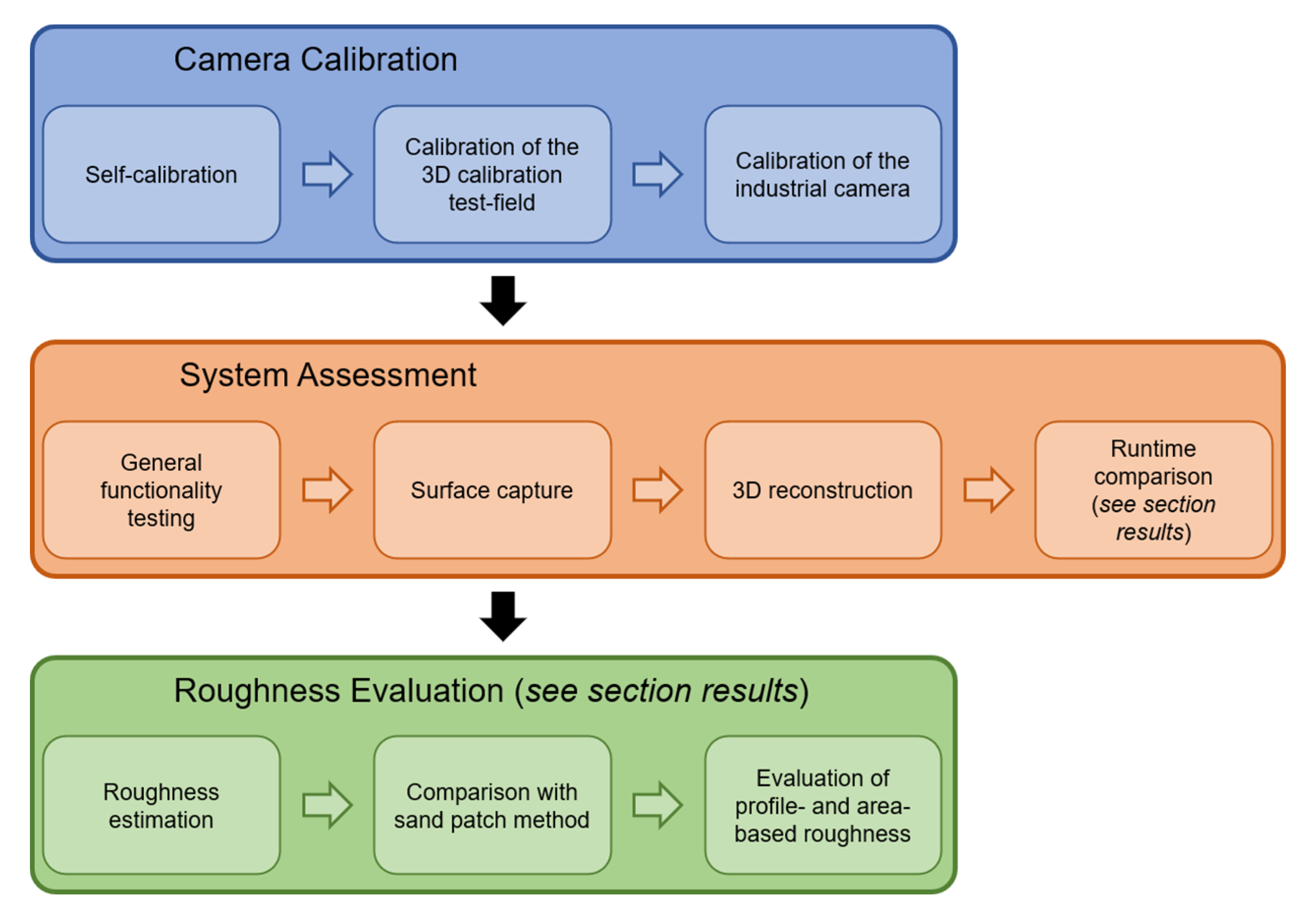
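
One plausible way to carry the arithmetical mean deviation over from profiles to a 3D point cloud is to fit a reference plane and average the absolute point-to-plane distances. The sketch below makes several assumptions that are not taken from this work: the plane is written as z = a·x + b·y + c and fitted by ordinary least squares on the z residuals, the surface is not close to vertical in the chosen coordinate frame, and no outlier filtering is applied. All names and the synthetic point cloud are hypothetical.

```python
import math


def _det3(m):
    """Determinant of a 3x3 matrix given as a list of rows."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_plane(points):
    """Least-squares plane z = a*x + b*y + c via the normal equations."""
    n = float(len(points))
    sx = sum(p[0] for p in points)
    sy = sum(p[1] for p in points)
    sz = sum(p[2] for p in points)
    sxx = sum(p[0] * p[0] for p in points)
    syy = sum(p[1] * p[1] for p in points)
    sxy = sum(p[0] * p[1] for p in points)
    sxz = sum(p[0] * p[2] for p in points)
    syz = sum(p[1] * p[2] for p in points)
    normal = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, n]]
    rhs = [sxz, syz, sz]
    det = _det3(normal)
    if abs(det) < 1e-12:
        raise ValueError("points do not define a unique plane")
    solution = []
    for col in range(3):  # Cramer's rule, one unknown per column
        replaced = [row[:] for row in normal]
        for r in range(3):
            replaced[r][col] = rhs[r]
        solution.append(_det3(replaced) / det)
    return tuple(solution)


def area_based_ra(points):
    """Mean absolute orthogonal distance of the points to the fitted plane."""
    a, b, c = fit_plane(points)
    scale = math.sqrt(a * a + b * b + 1.0)
    return sum(abs(a * x + b * y + c - z) for x, y, z in points) / (len(points) * scale)


# Synthetic cloud: tilted plane z = 0.2*x + 1 with a +/-0.5 checkerboard texture.
cloud = [(float(x), float(y), 0.2 * x + 1.0 + 0.5 * (-1) ** (x + y))
         for x in range(4) for y in range(4)]
ra_area = area_based_ra(cloud)
print(f"area-based Ra = {ra_area:.4f}")
```

Fitting the plane first removes the overall tilt of the surface relative to the sensor, so the result depends only on the texture and not on how the device was held.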

6. Experiments

6.1. Camera Calibration

6.1.1. Self-Calibration

6.1.2. Calibration of the Test-Field

6.1.3. Calibration of the Industrial Camera

6.2. System Assessment

6.2.1. Test Objects

6.2.2. Measurement Procedure

7. Results and Discussion

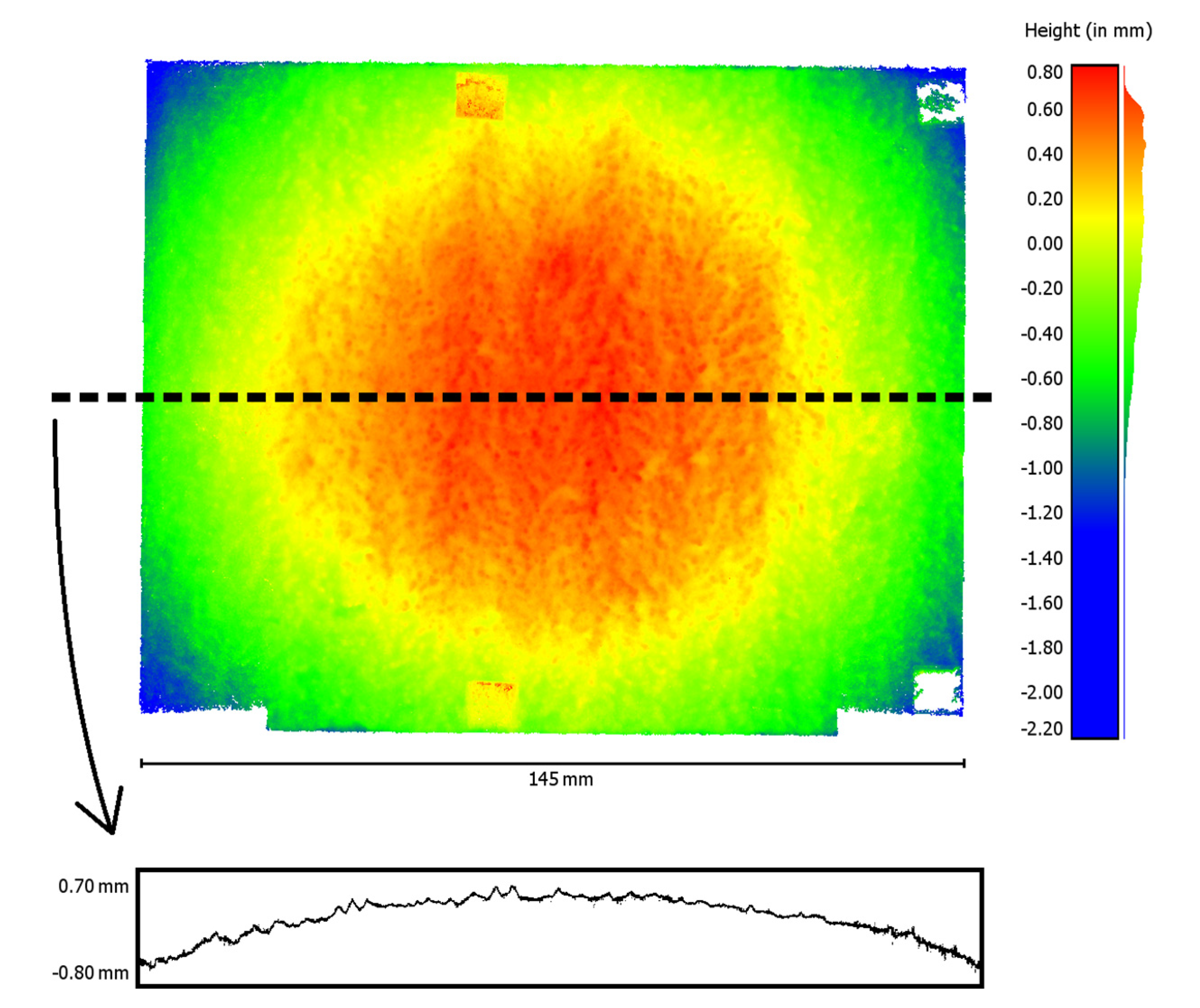

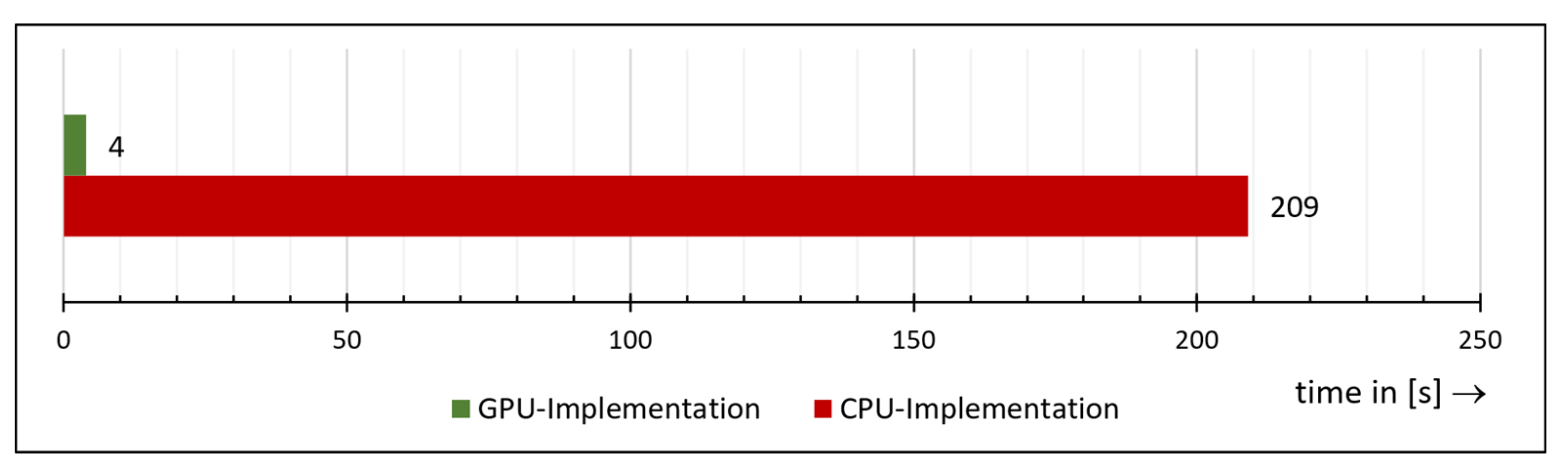

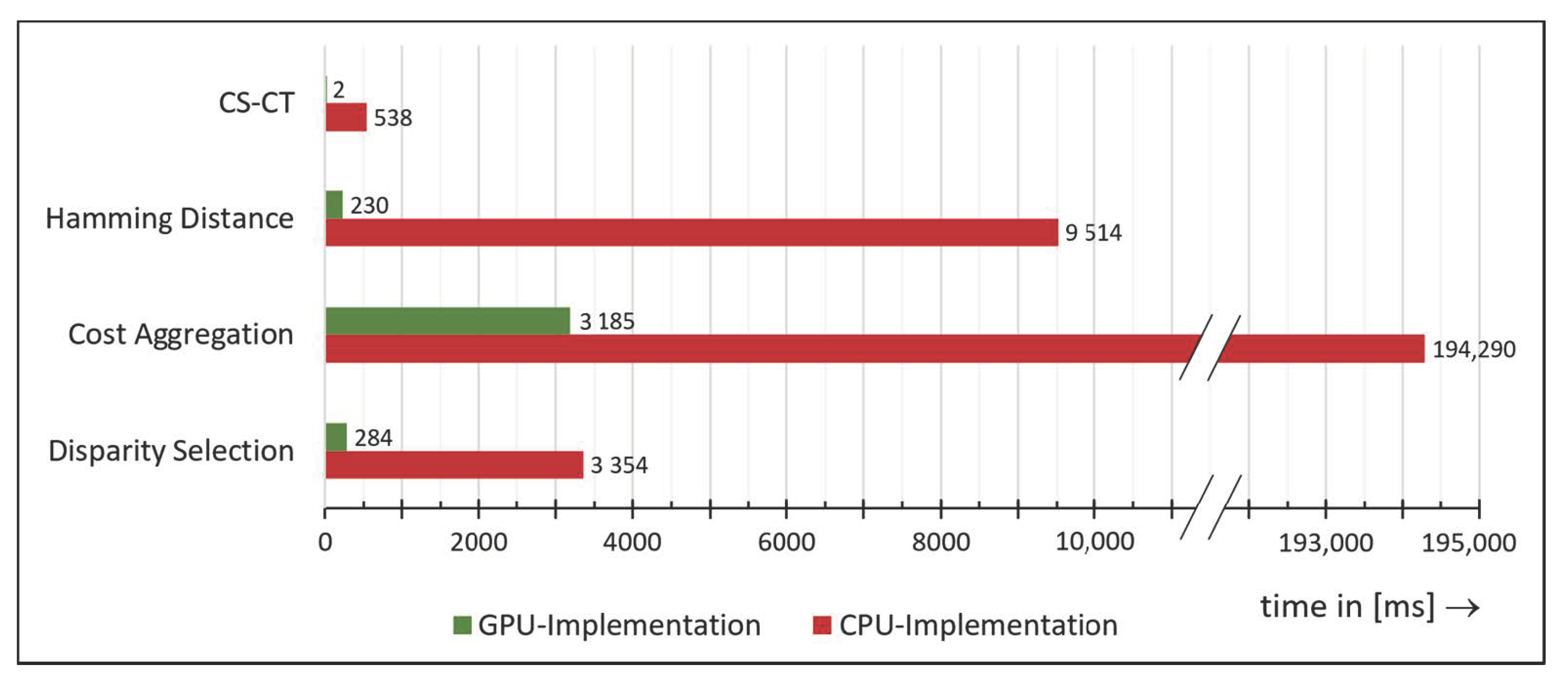

7.1. GPU Acceleration of SGM

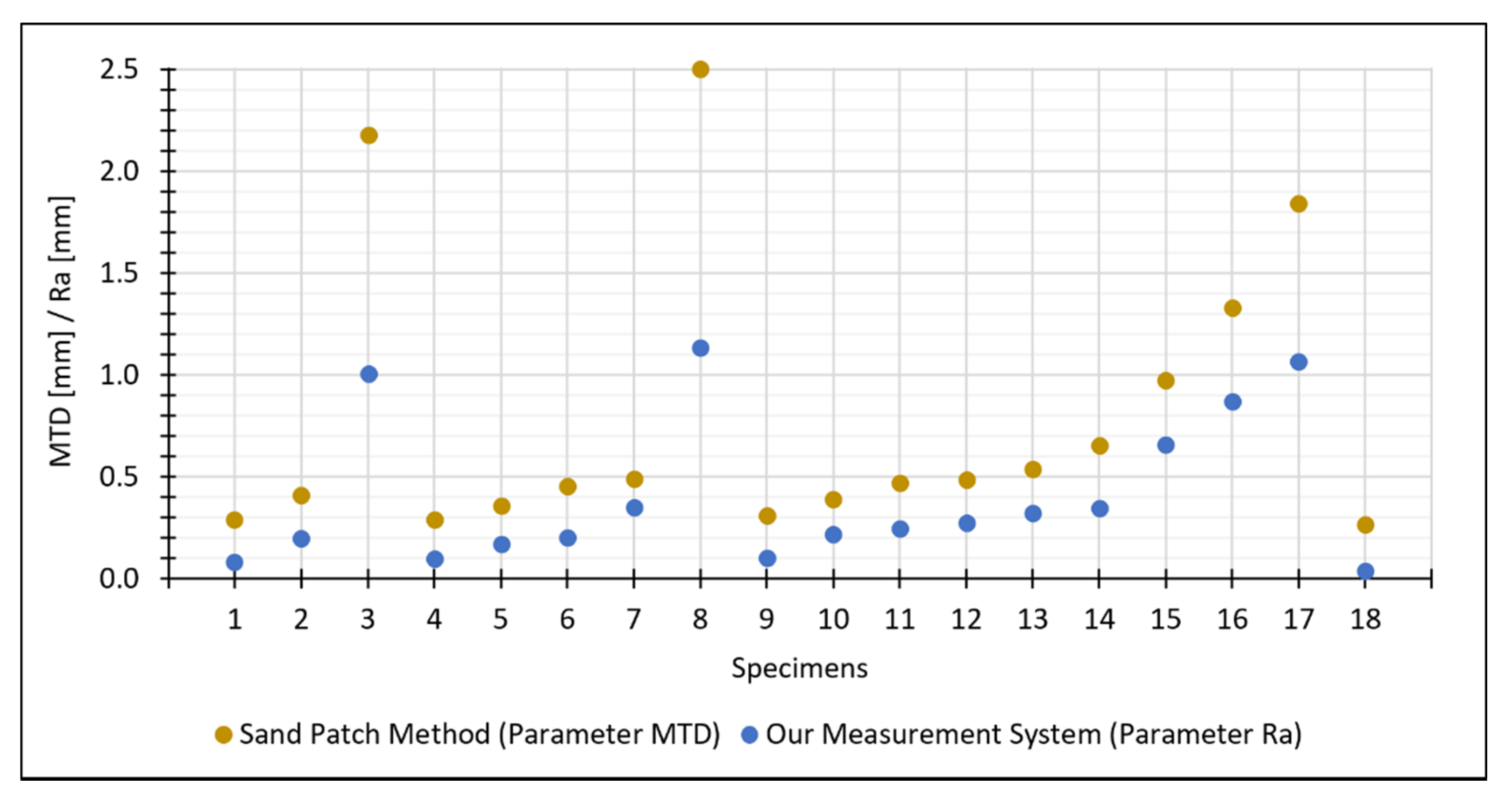

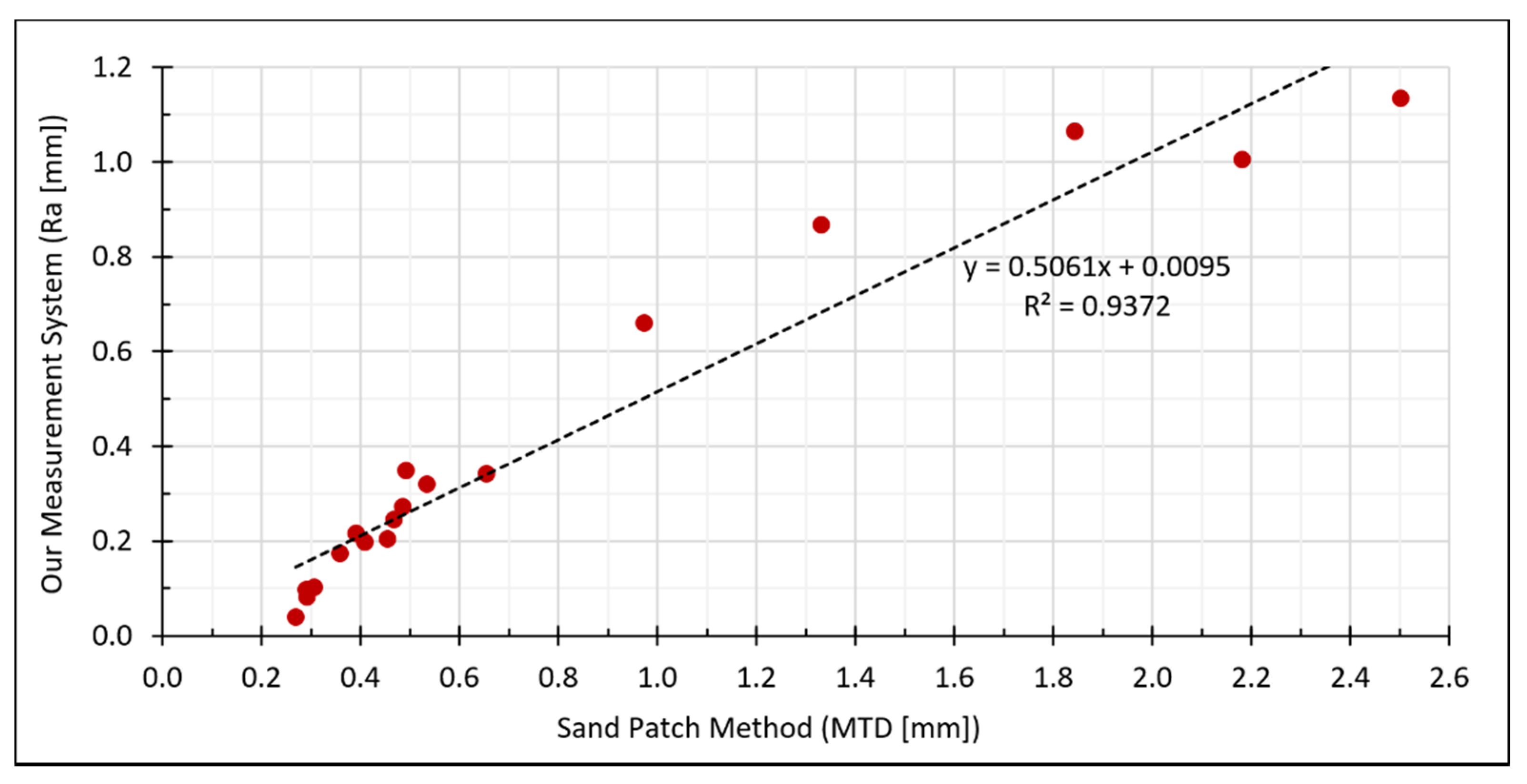

7.2. Comparison of the Results of Our Measurement System with the Sand Patch Method

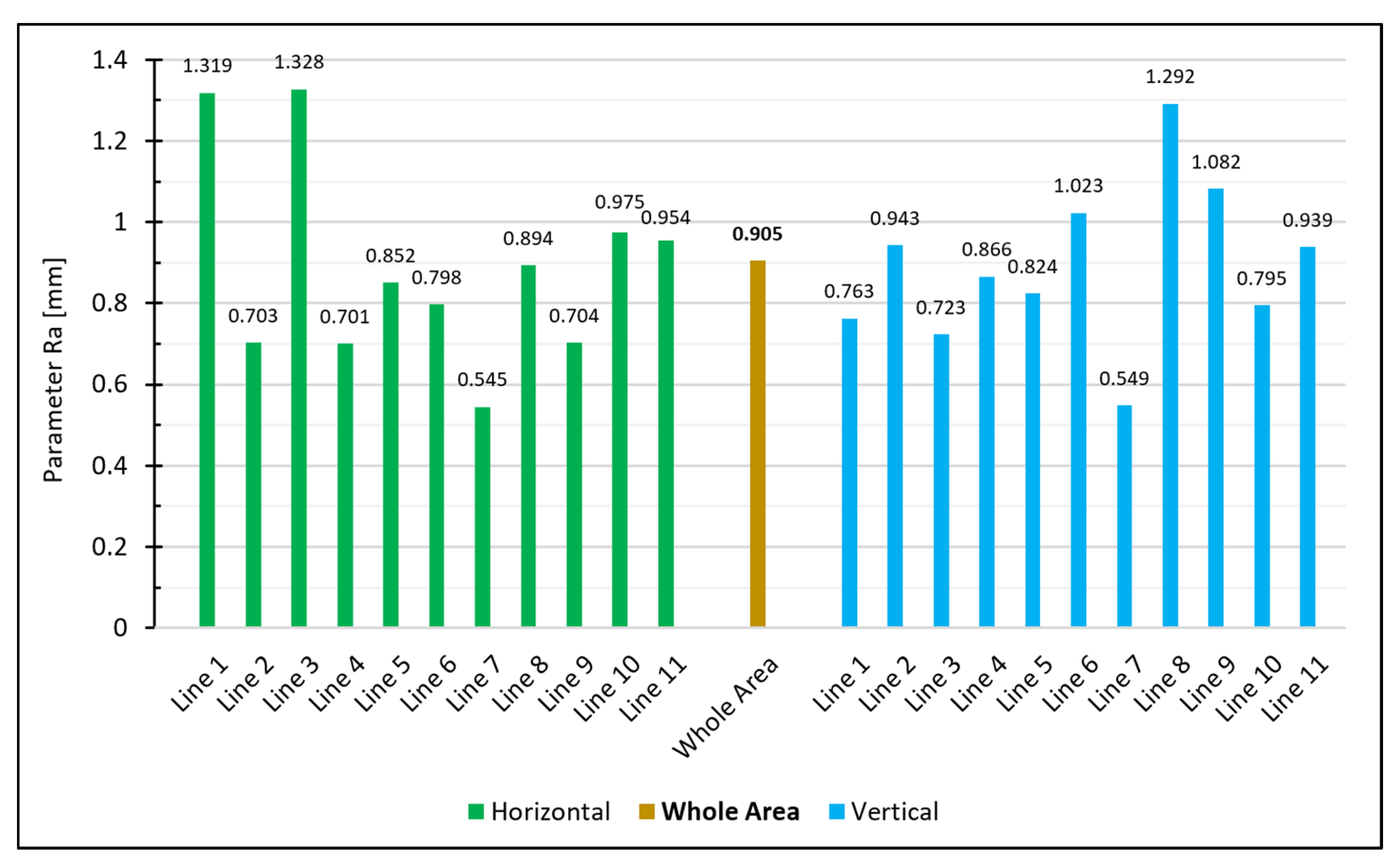

7.3. Area- vs. Line-Based Estimation of the Roughness

8. Conclusions

8.1. Summary

8.2. Outlook

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Shape Deviations | Examples | Illustration |
|---|---|---|
| 1st order: form deviation | Curvature, unevenness |  |
| 2nd order: waviness | Waves |  |
| 3rd order: roughness | Grooves |  |
| 4th order: roughness | Ridges, scales, crests |  |
| 5th order: roughness | Microstructure of the material | Not easily presentable in image form |
| 6th order | Lattice structure of the material | Not easily presentable in image form |

| Specification | Value |
|---|---|
| Resolution (H × V) | 3840 pixel × 2748 pixel |
| Pixel size (H × V) | 1.67 µm × 1.67 µm |
| Bit depth | 12 bits |
| Signal-to-noise ratio | 32.9 dB |
| Mono/Colour | Mono |
| Shutter technology | Rolling shutter |

|  |  |  |  |  |  |  |  |
|---|---|---|---|---|---|---|---|
| 1.00 | −0.06 | 0.15 | −1.00 | 0.99 | −0.97 | 0.22 | 0.13 |
|  | 1.00 | 0.05 | 0.06 | −0.06 | 0.06 | 0.04 | 0.01 |
|  |  | 1.00 | −0.15 | 0.14 | −0.13 | 0.04 | −0.02 |
|  |  |  | 1.00 | −0.99 | 0.97 | −0.22 | −0.13 |
|  |  |  |  | 1.00 | −0.99 | 0.22 | 0.13 |
|  |  |  |  |  | 1.00 | −0.21 | −0.13 |
|  |  |  |  |  |  | 1.00 | 0.03 |
|  |  |  |  |  |  |  | 1.00 |

| Parameter | Value | Std. Dev. |
|---|---|---|
|  | 8.2545 mm | 0.0007 mm |
|  | 0.0737 mm | 0.0012 mm |
|  | 0.0051 mm | 0.0007 mm |
|  | −43.8231 | 0.2449 |
|  | 565.8091 | 36.7678 |
|  | −10,555.4693 | 1665.3598 |
|  | 5.1708 | 0.2624 |
|  | 10.2455 | 0.2549 |

|  |  |  |  |  |  |  |  |
|---|---|---|---|---|---|---|---|
| 1.00 | 0.00 | 0.01 | 0.03 | −0.01 | 0.00 | 0.00 | 0.00 |
|  | 1.00 | 0.00 | −0.01 | 0.01 | −0.01 | 0.72 | 0.00 |
|  |  | 1.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.38 |
|  |  |  | 1.00 | −0.98 | 0.93 | 0.01 | 0.00 |
|  |  |  |  | 1.00 | −0.99 | −0.01 | 0.00 |
|  |  |  |  |  | 1.00 | 0.01 | 0.00 |
|  |  |  |  |  |  | 1.00 | 0.00 |
|  |  |  |  |  |  |  | 1.00 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Özcan, B.; Schwermann, R.; Blankenbach, J. A Novel Camera-Based Measurement System for Roughness Determination of Concrete Surfaces. Materials 2021, 14, 158. https://doi.org/10.3390/ma14010158