Surface Defect Detection for Automated Tape Laying and Winding Based on Improved YOLOv5

Abstract

1. Introduction

- (1) The CA attention mechanism is improved into an embedded Separate CA structure, which captures long-range dependencies along the spatial directions, strengthens positional information, and improves feature extraction. Embedding the module at intervals further increases detection speed.

- (2) A new bounding-box regression loss, SIoU_loss, replaces the original CIoU_loss. It takes the direction of the mismatch between the predicted and ground-truth boxes into account and uses an angle loss as a penalty term. This speeds up bounding-box regression and improves detection accuracy, especially for small objects.

- (3) Building on the SIoU_loss used in this paper and combining it with the Soft-NMS box-filtering method, a new non-maximum suppression method, Soft-SIoU-NMS, is proposed for model post-processing. Because it removes candidate boxes more gently, it discards redundant boxes while retaining more valid ones, which improves detection accuracy for defects that overlap or cover one another.

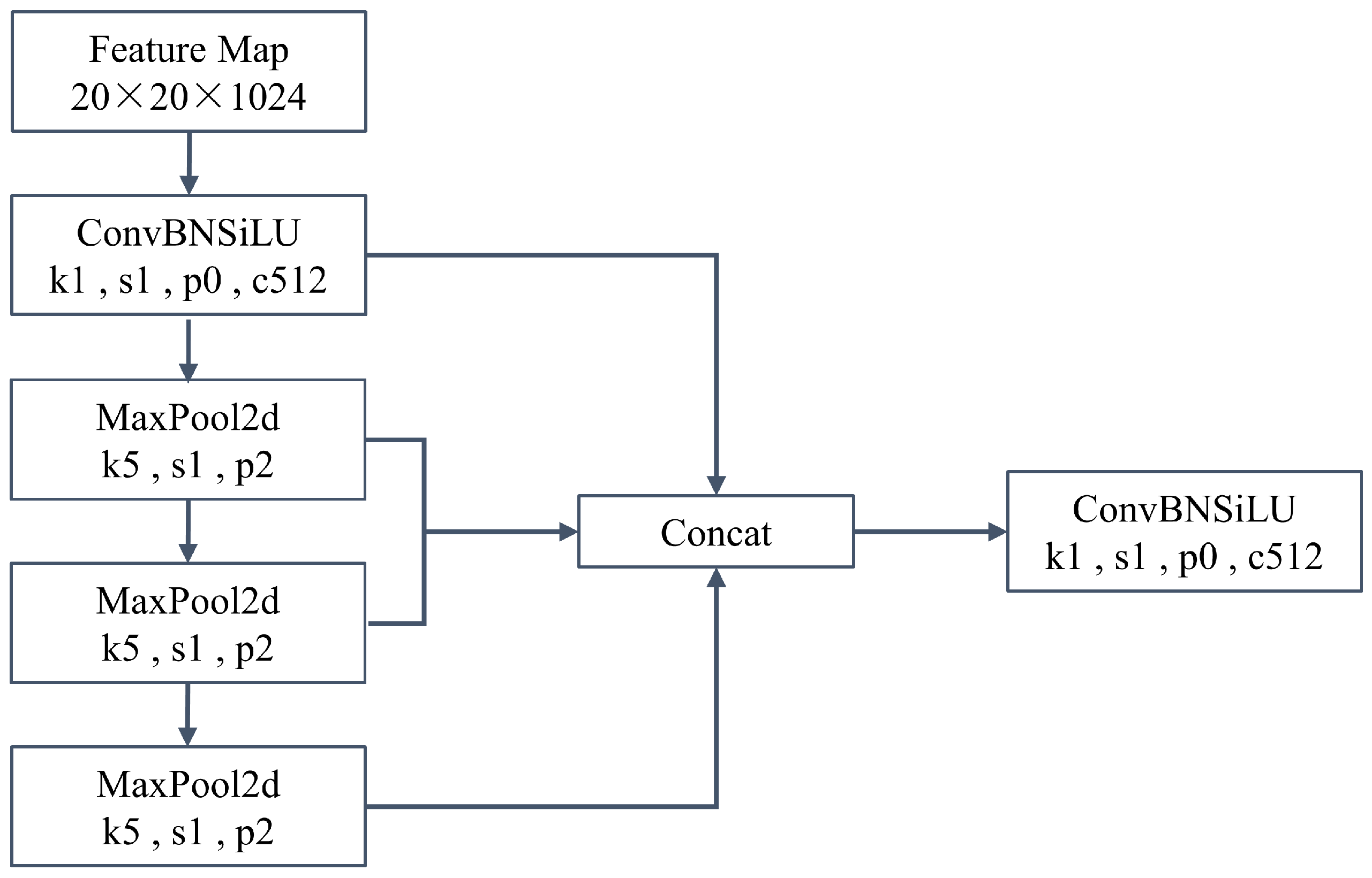

2. Detection Principle of YOLOv5

3. The Improvement of the Model Based on YOLOv5

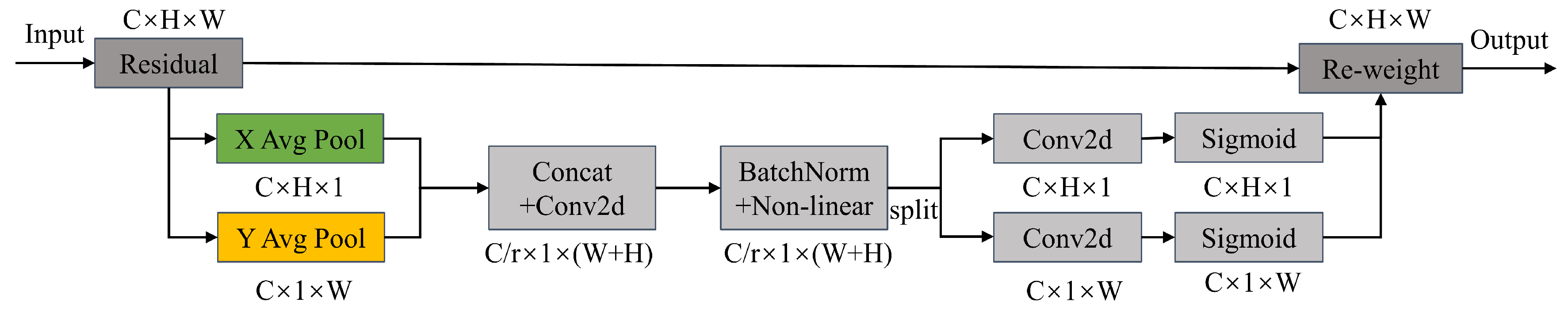

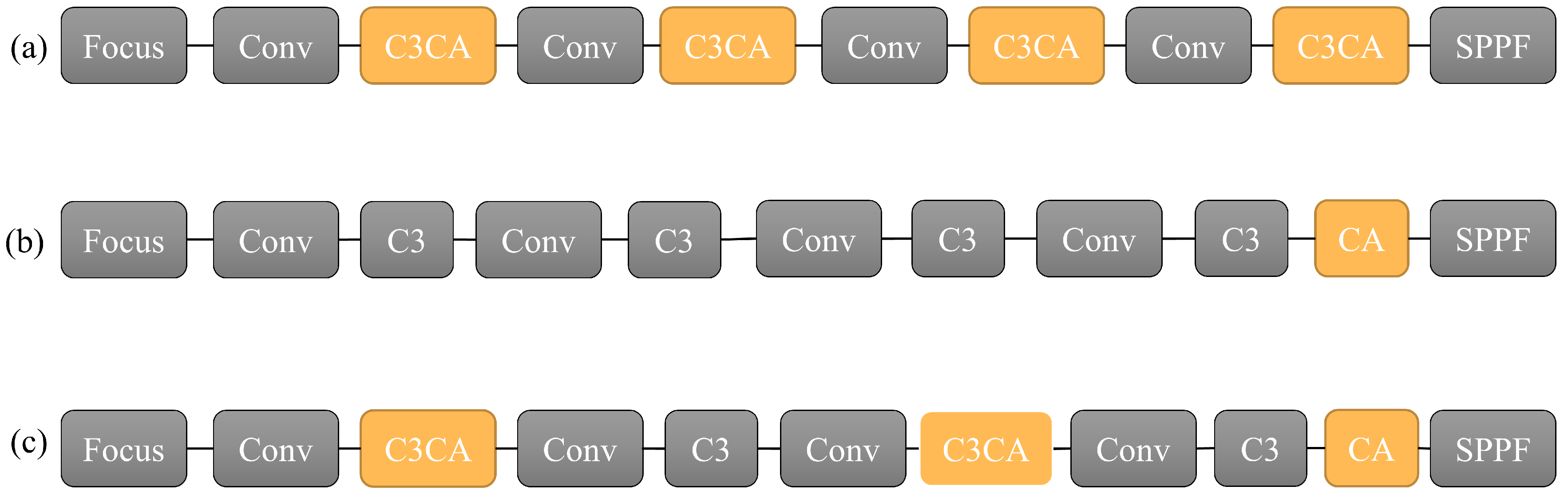

3.1. Separate CA-YOLOv5 Network Model

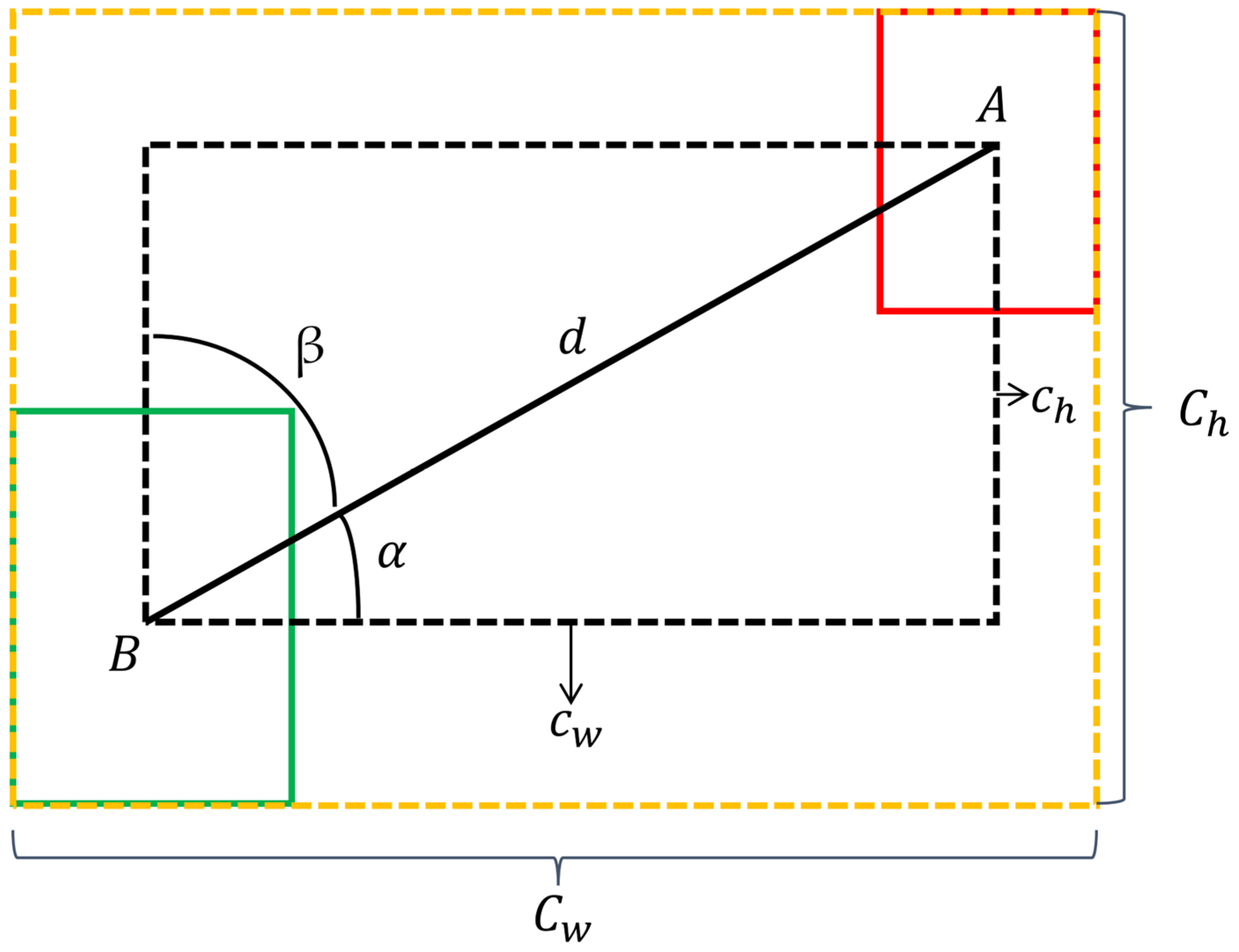

3.2. Regression Box Loss Function Improvement

- (1) Angle cost

- (2) Distance cost

- (3) Shape cost

- (4) IoU cost
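The four costs listed above can be combined into a single loss value. The sketch below follows the commonly published SIoU formulation, not necessarily the exact variant used in this paper, since the formulas are not reproduced in this section. The function name `siou_loss`, the `(x1, y1, x2, y2)` box layout, the shape exponent `theta=4.0`, and the small `eps` guard are all assumptions made for illustration.

```python
import math


def siou_loss(box, gt, theta=4.0, eps=1e-9):
    """Illustrative SIoU loss for two boxes given as (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = box
    gx1, gy1, gx2, gy2 = gt
    w, h = x2 - x1, y2 - y1
    gw, gh = gx2 - gx1, gy2 - gy1

    # IoU cost: overlap area divided by union area.
    iw = max(0.0, min(x2, gx2) - max(x1, gx1))
    ih = max(0.0, min(y2, gy2) - max(y1, gy1))
    inter = iw * ih
    union = w * h + gw * gh - inter
    iou = inter / (union + eps)

    # Width and height of the smallest box enclosing both boxes.
    cw = max(x2, gx2) - min(x1, gx1)
    ch = max(y2, gy2) - min(y1, gy1)

    # Offset between the two box centres.
    dx = (gx1 + gx2) / 2 - (x1 + x2) / 2
    dy = (gy1 + gy2) / 2 - (y1 + y2) / 2
    sigma = math.hypot(dx, dy)

    # Angle cost: 0 when the centres are axis-aligned, 1 at 45 degrees.
    sin_alpha = min(1.0, abs(dy) / sigma) if sigma > eps else 0.0
    angle = 1 - 2 * math.sin(math.asin(sin_alpha) - math.pi / 4) ** 2

    # Distance cost: centre offset normalised by the enclosing box,
    # weighted by the angle cost through gamma.
    gamma = 2 - angle
    rho_x = (dx / (cw + eps)) ** 2
    rho_y = (dy / (ch + eps)) ** 2
    distance = (1 - math.exp(-gamma * rho_x)) + (1 - math.exp(-gamma * rho_y))

    # Shape cost: relative mismatch in width and height.
    omega_w = abs(w - gw) / (max(w, gw) + eps)
    omega_h = abs(h - gh) / (max(h, gh) + eps)
    shape = (1 - math.exp(-omega_w)) ** theta + (1 - math.exp(-omega_h)) ** theta

    return 1 - iou + (distance + shape) / 2
```

In this sketch a perfectly matched box yields a loss close to zero, while boxes that do not overlap at all yield a loss above 1, because the distance and shape terms are added on top of `1 - IoU`.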

3.3. Post-Processing Method Improvements

3.3.1. Post-Processing Method for YOLOv5

3.3.2. Improving Non-Maximum Suppression Algorithm
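As a rough illustration of the idea behind Soft-SIoU-NMS, the sketch below implements standard Gaussian Soft-NMS with a pluggable overlap measure. It is not the authors' implementation. The default overlap is plain IoU, and the paper's variant would substitute a SIoU-based measure whose exact form is not given in this section. The names `soft_nms`, `iou`, and `overlap`, and the defaults `sigma=0.5` and `score_thresh=0.001`, are assumptions.

```python
import math


def iou(a, b):
    """Plain IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def soft_nms(boxes, scores, overlap=iou, sigma=0.5, score_thresh=0.001):
    """Gaussian Soft-NMS: decay the scores of overlapping boxes instead of
    deleting them. Returns (index, score) pairs in selection order."""
    remaining = [(s, i) for i, s in enumerate(scores)]
    kept = []
    while remaining:
        # Select the highest-scoring candidate still in play.
        best = max(remaining)
        remaining.remove(best)
        best_score, best_idx = best
        kept.append((best_idx, best_score))

        # Decay every other candidate according to its overlap with the pick.
        decayed = []
        for s, i in remaining:
            o = overlap(boxes[best_idx], boxes[i])
            s *= math.exp(-(o * o) / sigma)
            if s >= score_thresh:  # drop only boxes whose score has collapsed
                decayed.append((s, i))
        remaining = decayed
    return kept
```

For example, given two heavily overlapping boxes and one distant box, hard NMS with a 0.5 IoU threshold would delete the second overlapping box, whereas this sketch keeps it with a reduced score, so a genuine neighbouring defect can still be reported.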

4. Experimental Setup and Results Analysis

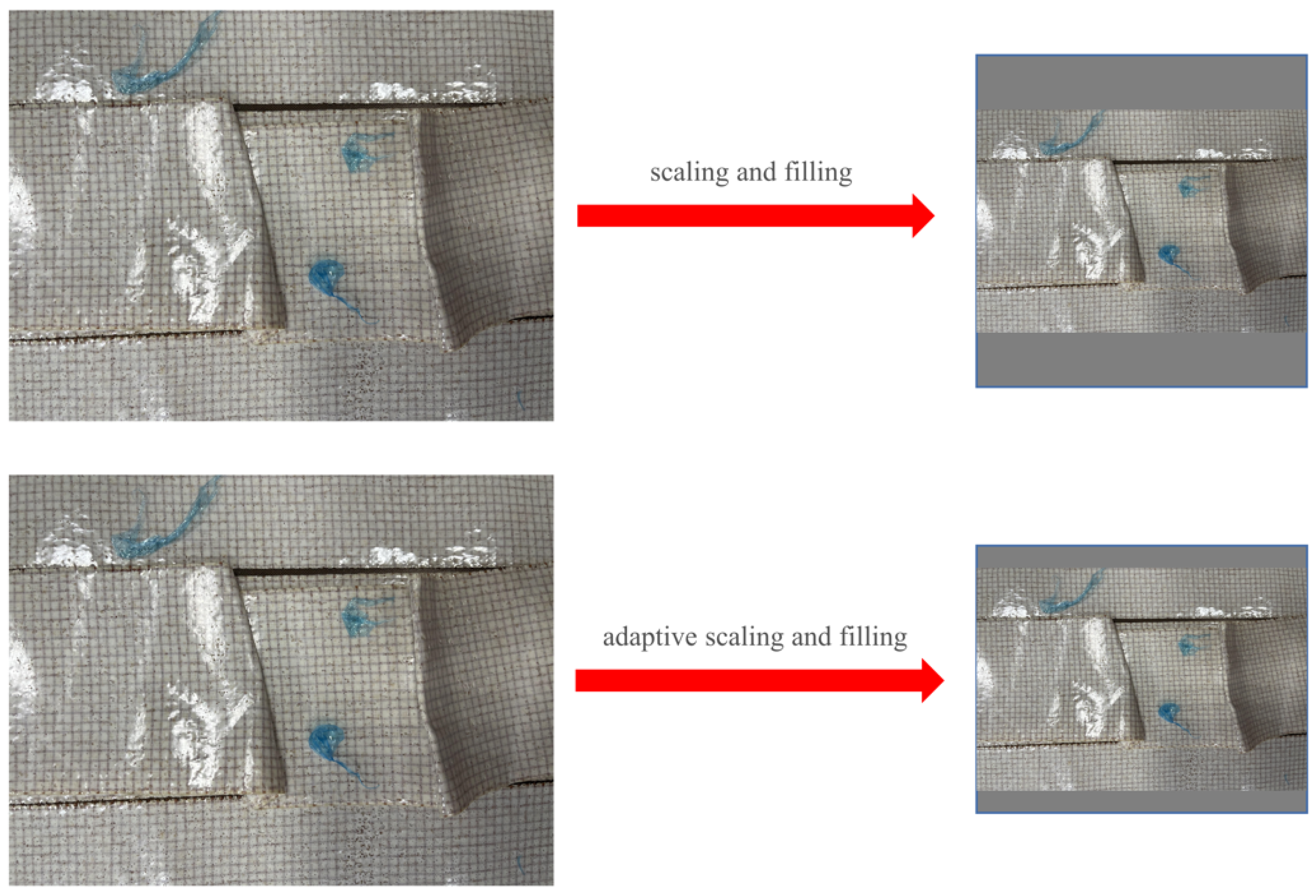

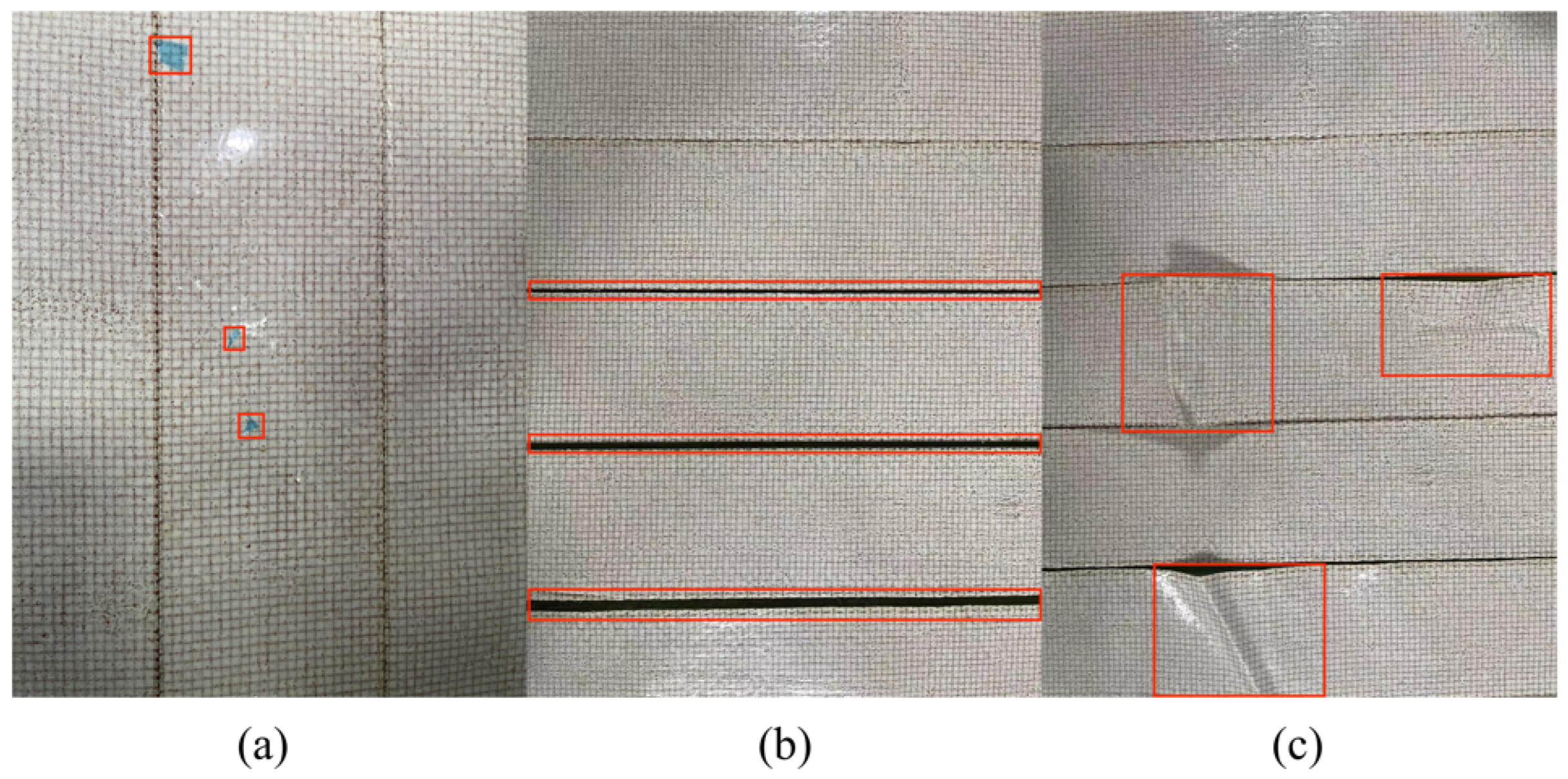

4.1. Dataset

4.2. Experimental Environment and Parameter Configuration

4.3. Evaluation Indicators

4.4. Experimental Results and Analysis

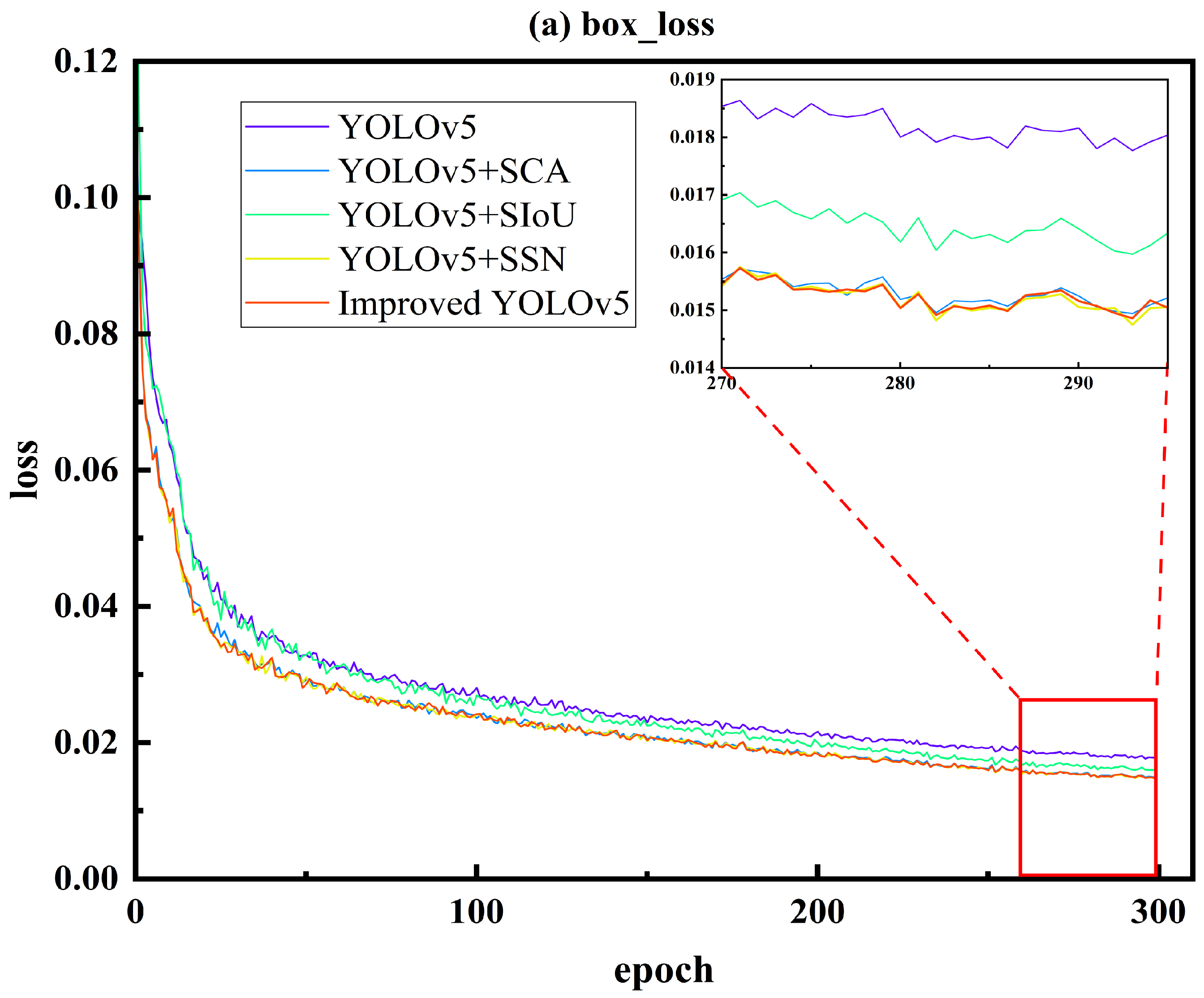

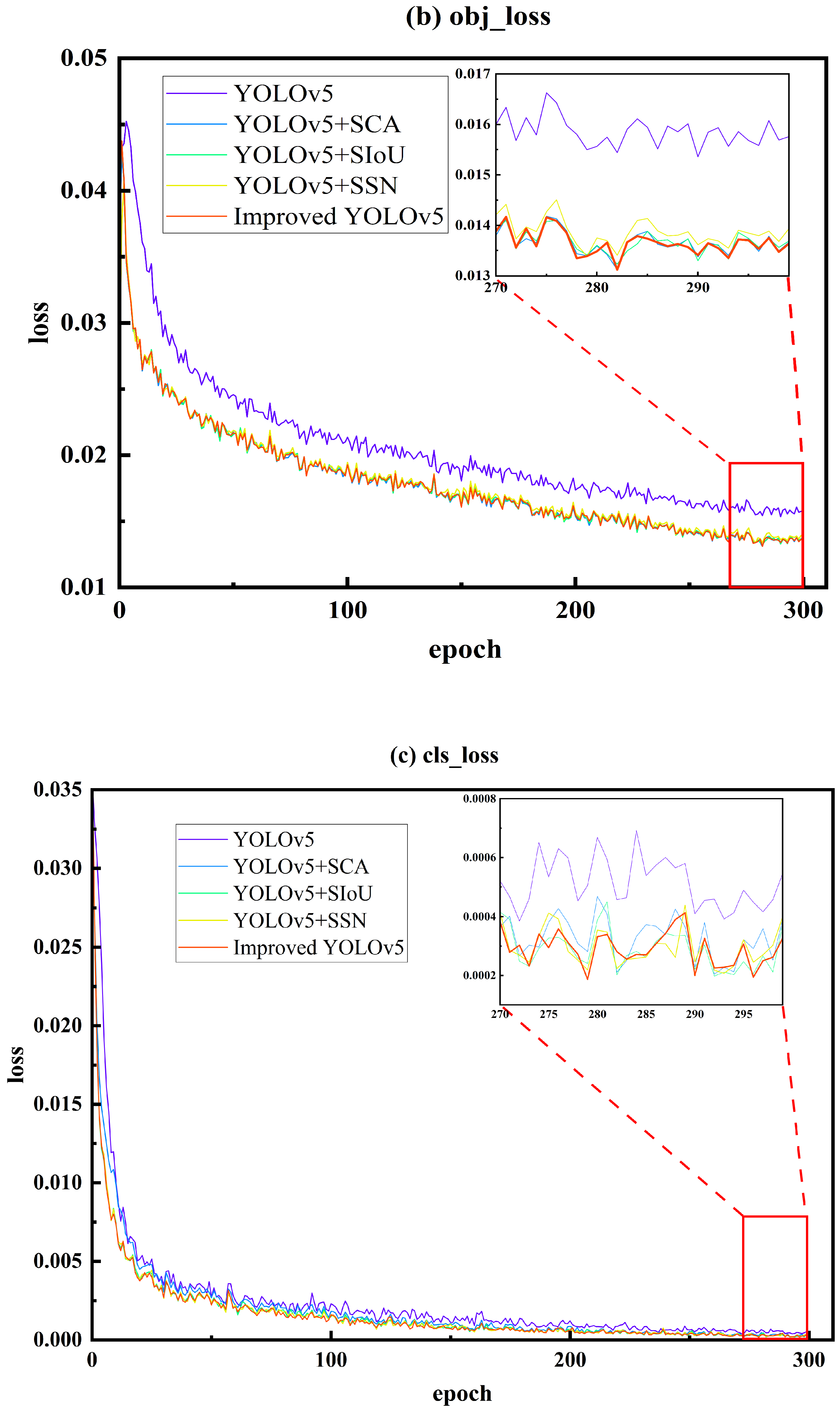

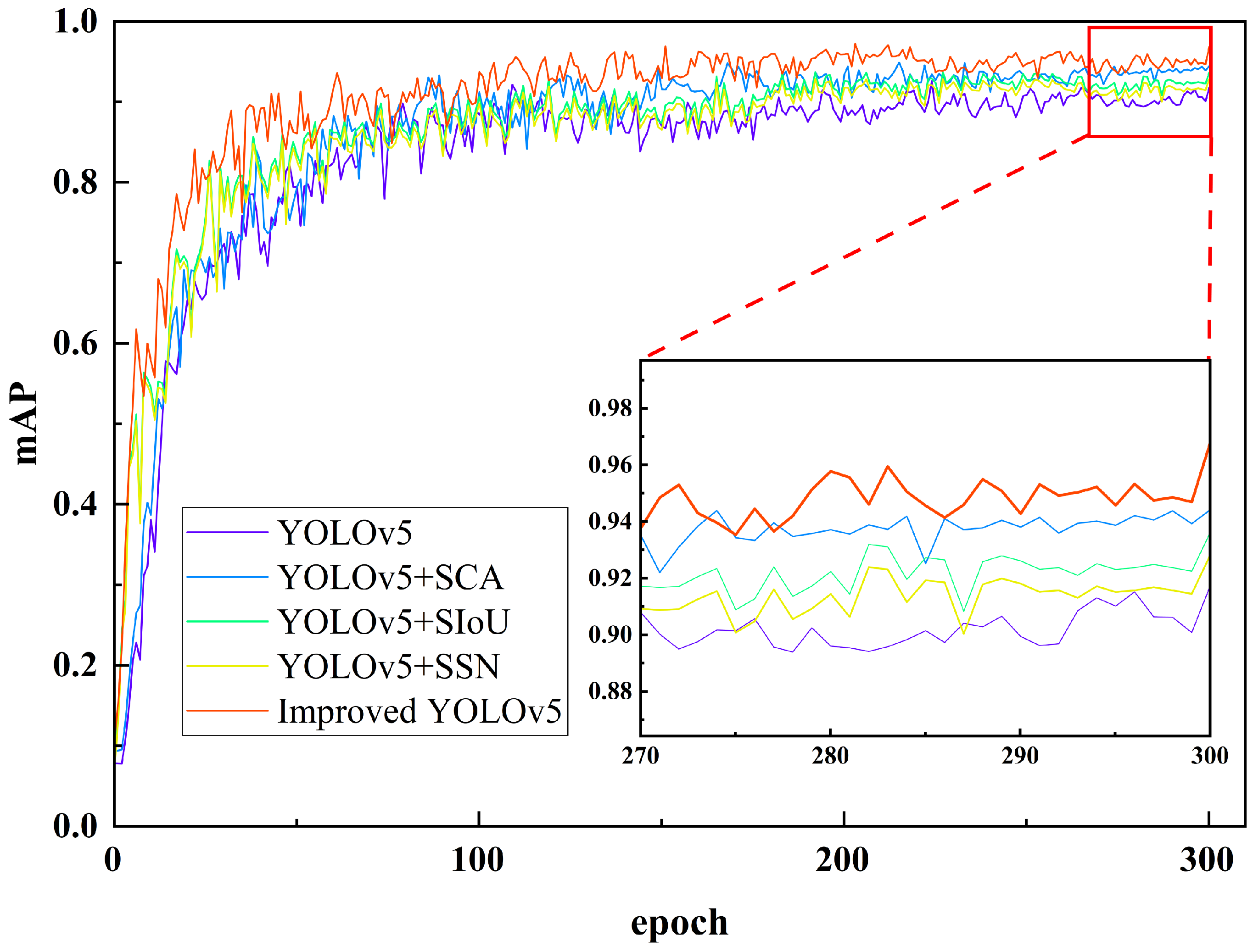

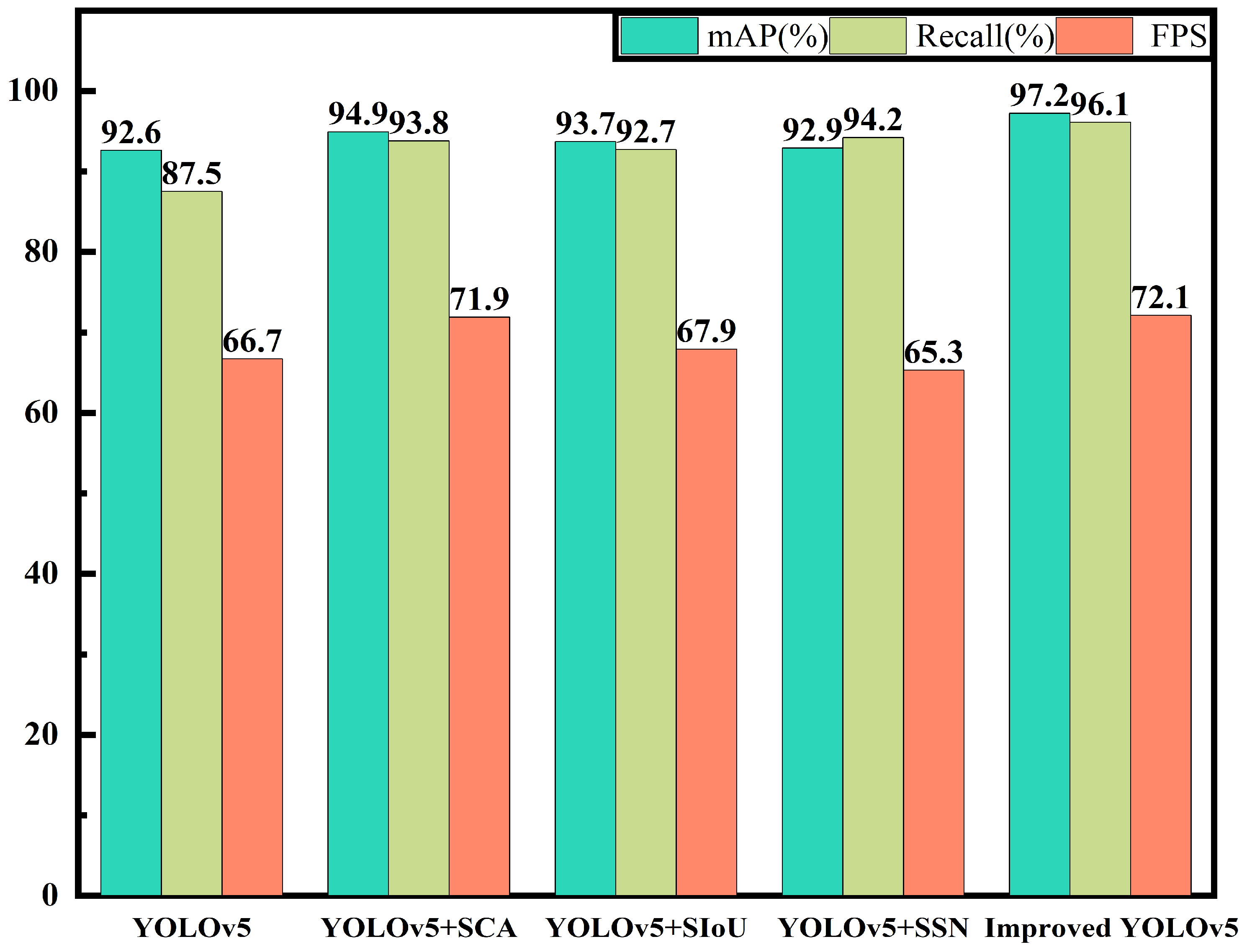

4.4.1. Training Results and Analysis

4.4.2. Test Results and Analysis

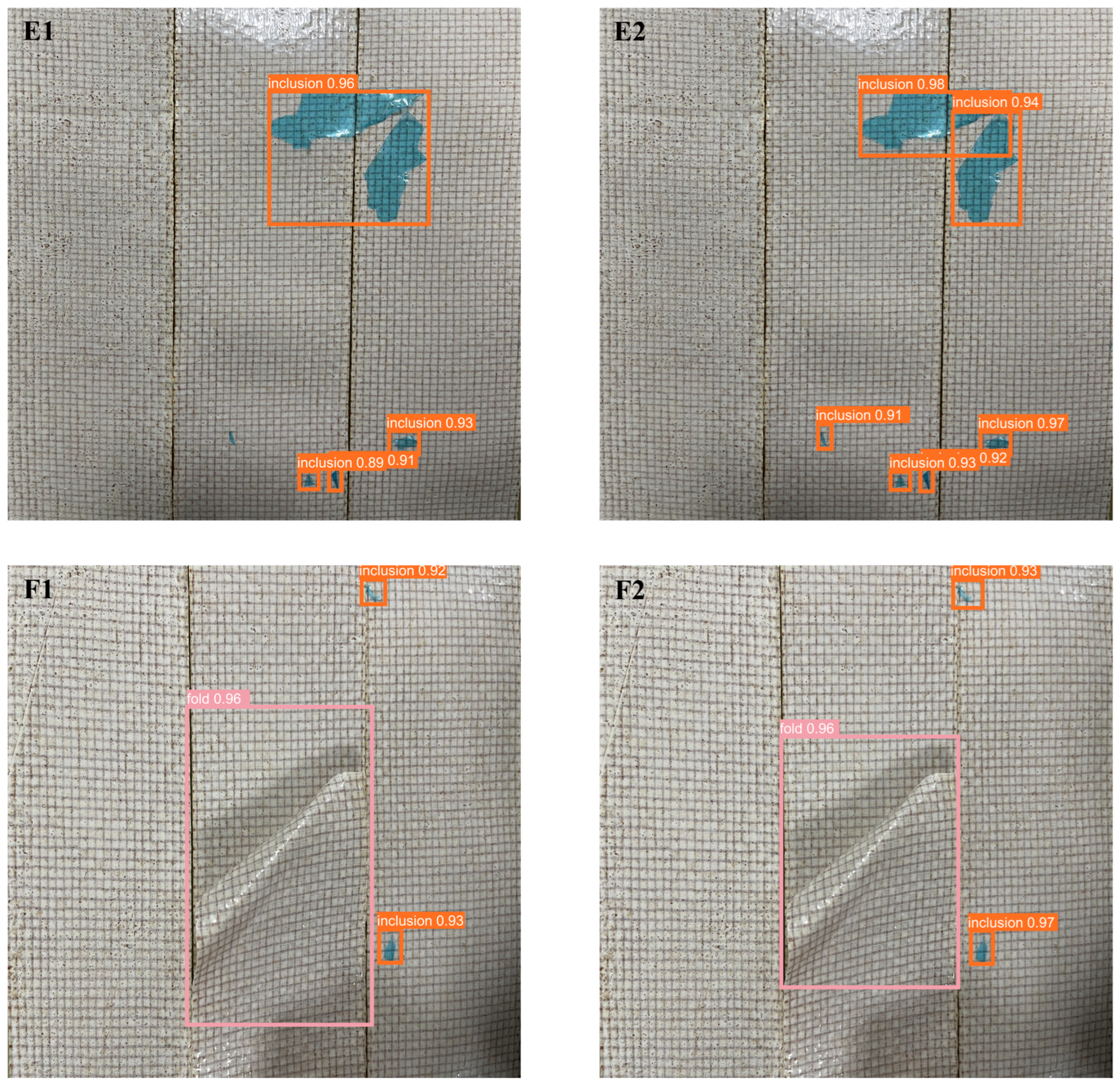

4.4.3. Application Results and Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Name | Configuration/Version |
|---|---|
| Operating system | Windows 10, 64-bit |
| CPU | Intel(R) Core(TM) i7-10875H |
| GPU | NVIDIA GeForce GTX 1650 Ti |
| Memory | 32 GB |
| GPU memory | 4 GB |
| IDE | PyCharm 2020.1 |
| Deep learning framework | PyTorch 1.10.1 |
| CUDA | CUDA 10.2.89 |
| cuDNN | cuDNN 8.3.3 |
| Python version | Python 3.9 |

| Parameter | Setting |
|---|---|
| Initial learning rate | 0.01 |
| Epochs | 300 |
| Batch size | 3 |
| Momentum | 0.937 |
| Weight decay coefficient | 0.0005 |
| Input image size | 640 × 640 |
| Number of classes (nc) | 3 |
| Optimizer | SGD |
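To make the training settings above easier to reproduce, the following sketch expresses them as a Python dictionary and maps them onto the hyperparameter keys (`lr0`, `momentum`, `weight_decay`) and command-line flags (`--epochs`, `--batch-size`, `--imgsz`, `--optimizer`) used by the public Ultralytics YOLOv5 training script. Whether the authors used that script, and which version, is not stated here, so the mapping and the helper names `to_hyp` and `to_cli_args` are assumptions.

```python
# Training settings copied from the parameter table.
TRAIN_SETTINGS = {
    "initial_learning_rate": 0.01,
    "epochs": 300,
    "batch_size": 3,
    "momentum": 0.937,
    "weight_decay": 0.0005,
    "image_size": 640,
    "num_classes": 3,
    "optimizer": "SGD",
}


def to_hyp(settings):
    """Map the optimizer settings to YOLOv5-style hyperparameter keys (assumed)."""
    return {
        "lr0": settings["initial_learning_rate"],
        "momentum": settings["momentum"],
        "weight_decay": settings["weight_decay"],
    }


def to_cli_args(settings):
    """Build a YOLOv5-style argument list (flag names are an assumption)."""
    return [
        "--epochs", str(settings["epochs"]),
        "--batch-size", str(settings["batch_size"]),
        "--imgsz", str(settings["image_size"]),
        "--optimizer", settings["optimizer"],
    ]
```

The number of classes is normally set in the dataset or model configuration file rather than on the command line, so it is kept in the dictionary but not emitted as a flag.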


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wen, L.; Li, S.; Ren, J. Surface Defect Detection for Automated Tape Laying and Winding Based on Improved YOLOv5. Materials 2023, 16, 5291. https://doi.org/10.3390/ma16155291
