An Example of Using Low-Cost LiDAR Technology for 3D Modeling and Assessment of Degradation of Heritage Structures and Buildings

Abstract

1. Introduction

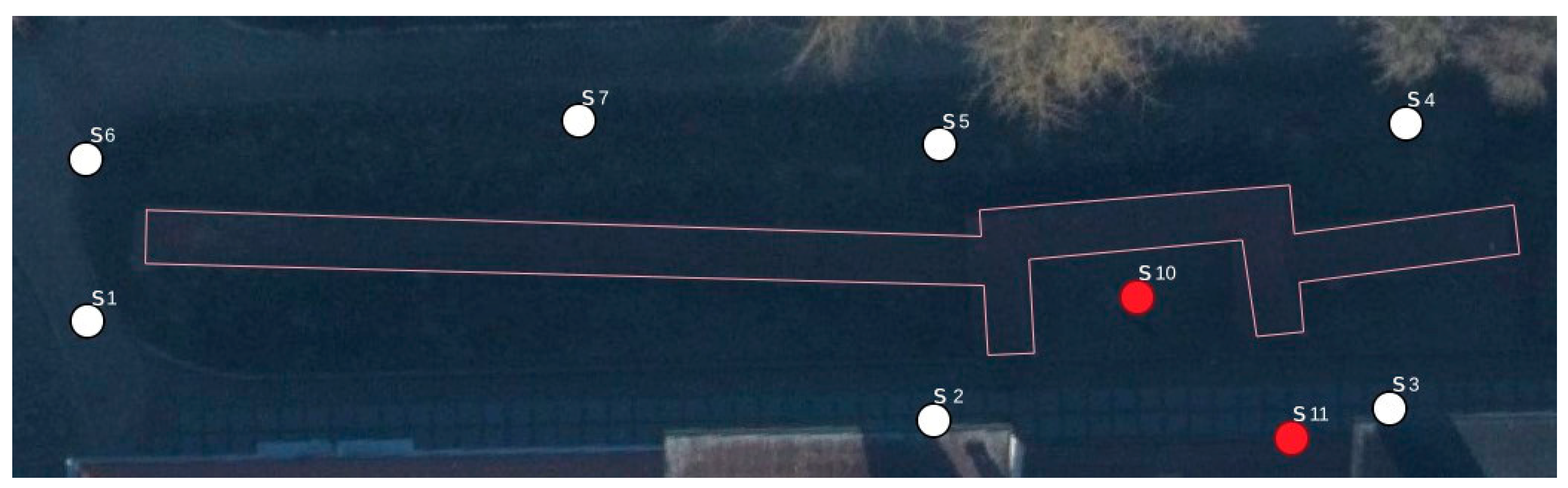

2. Research Area

3. Methodology

3.1. Measurement Equipment Used

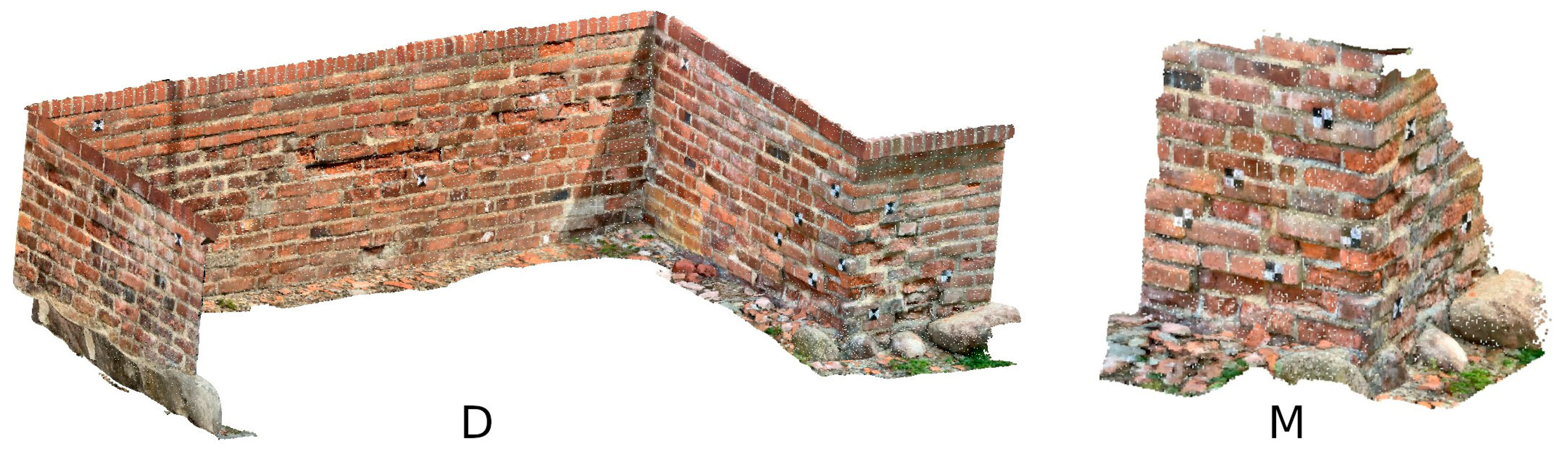

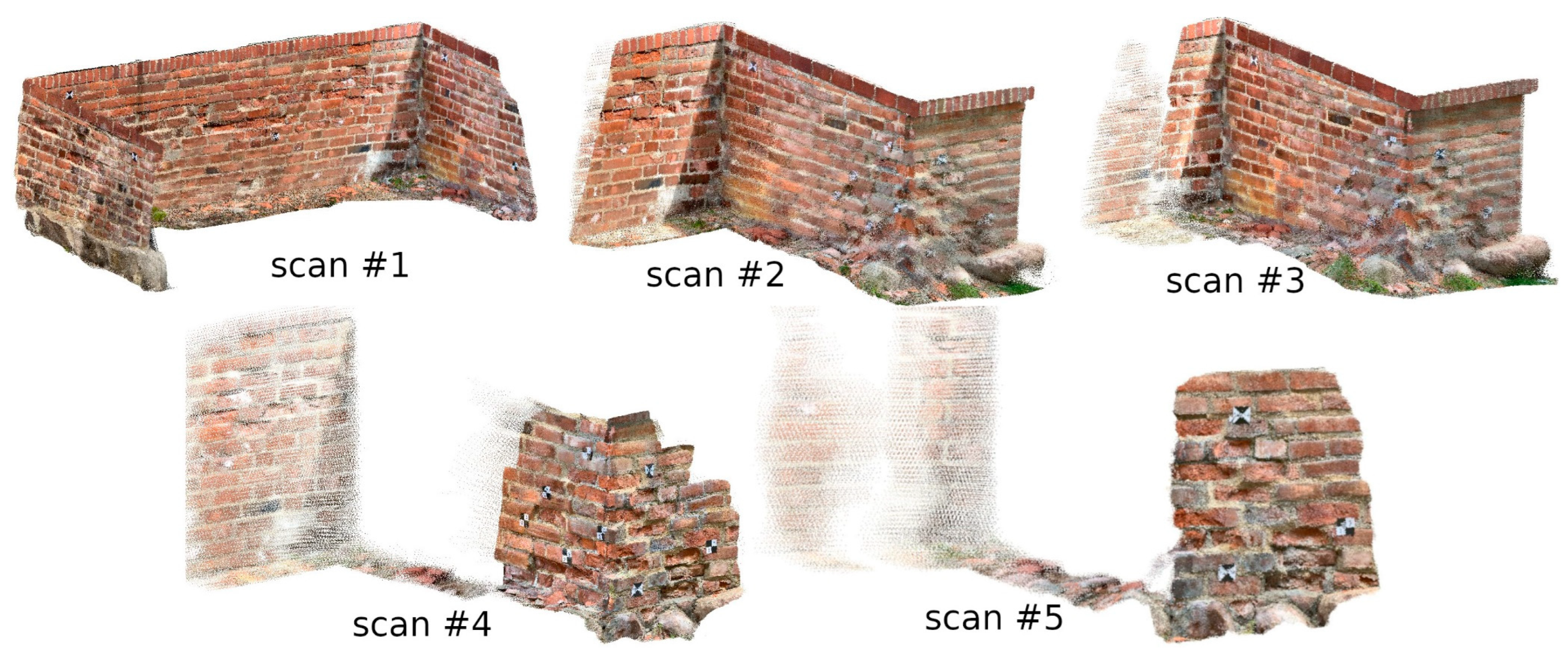

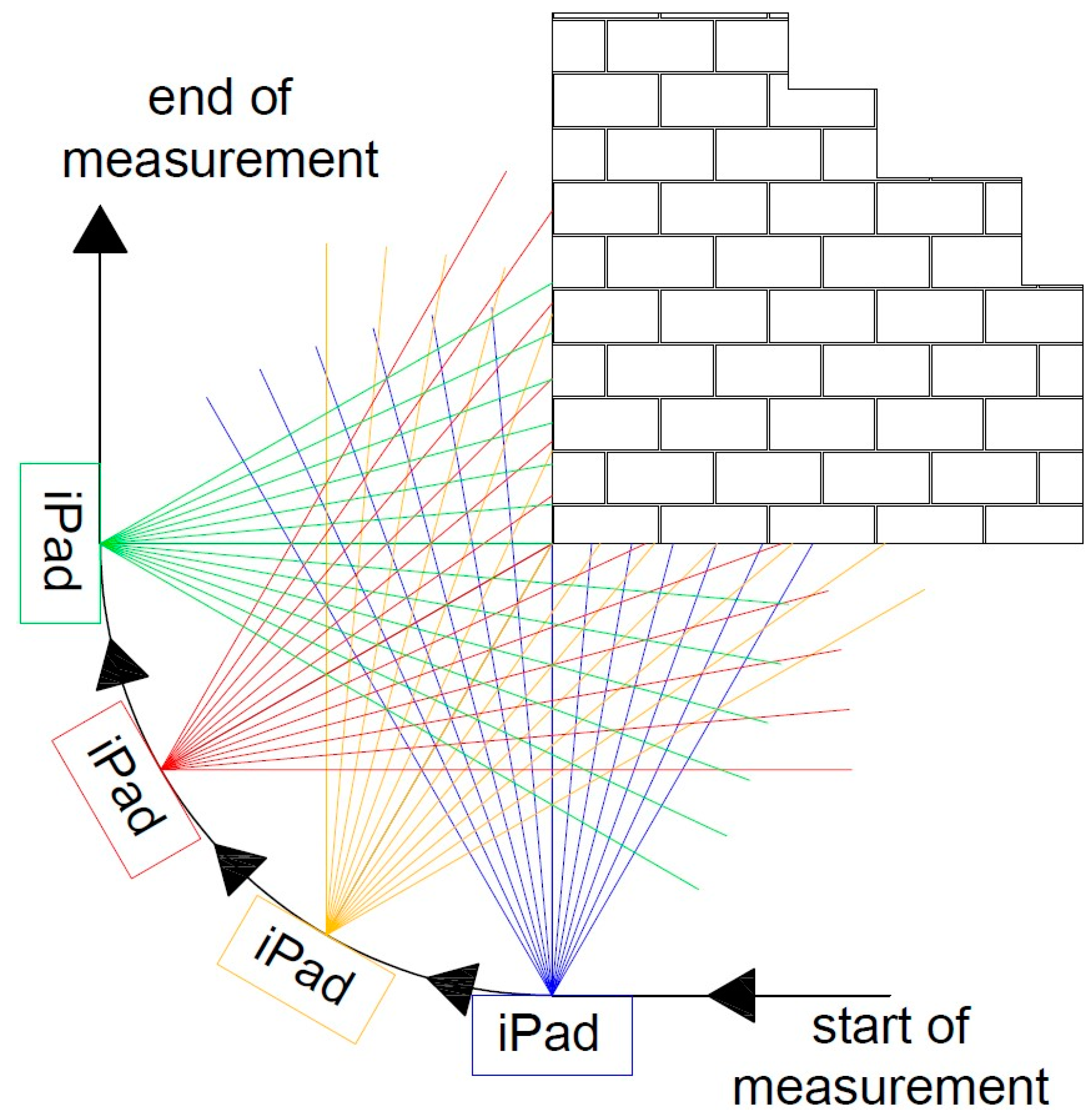

3.2. Measurements

4. Results

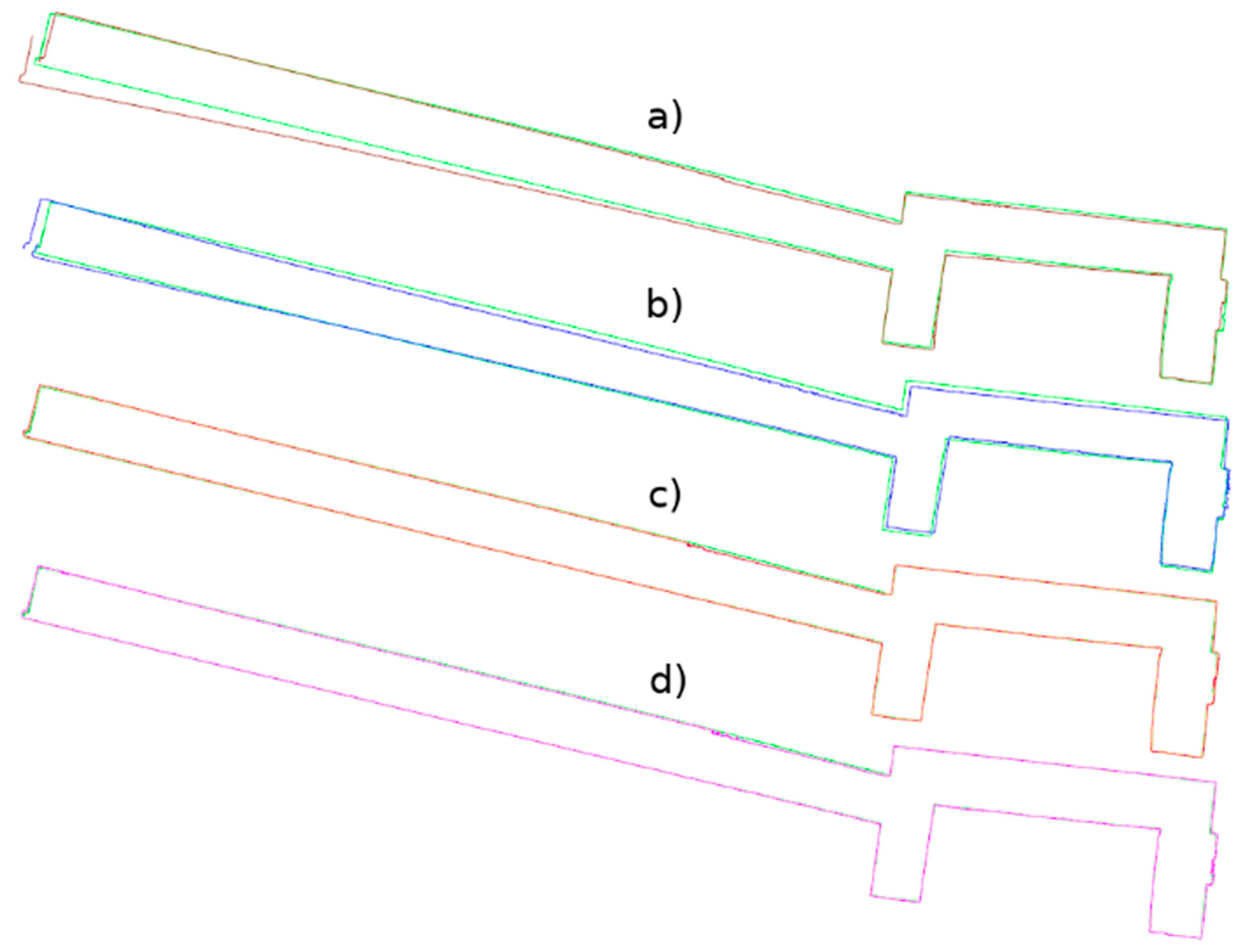

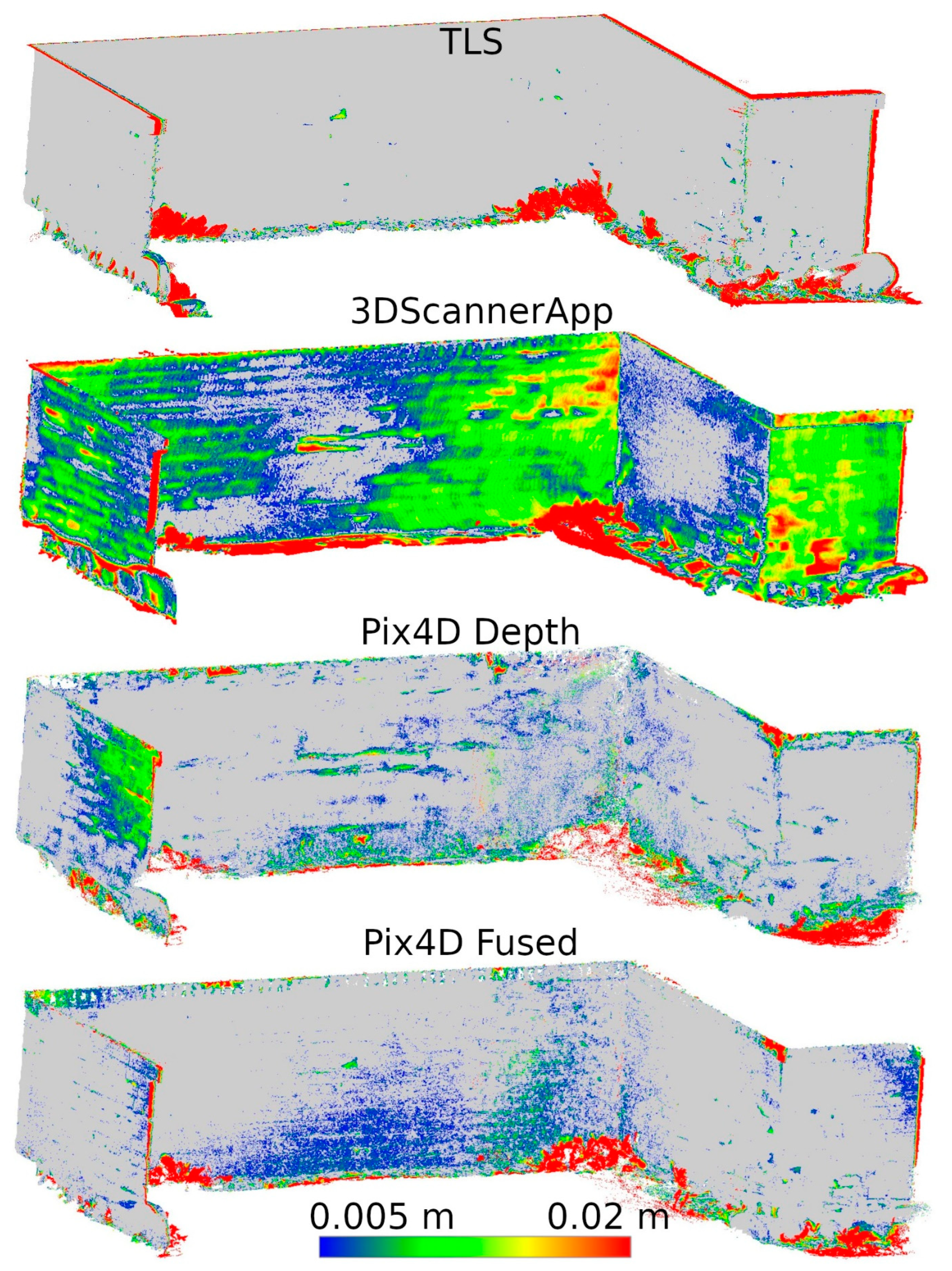

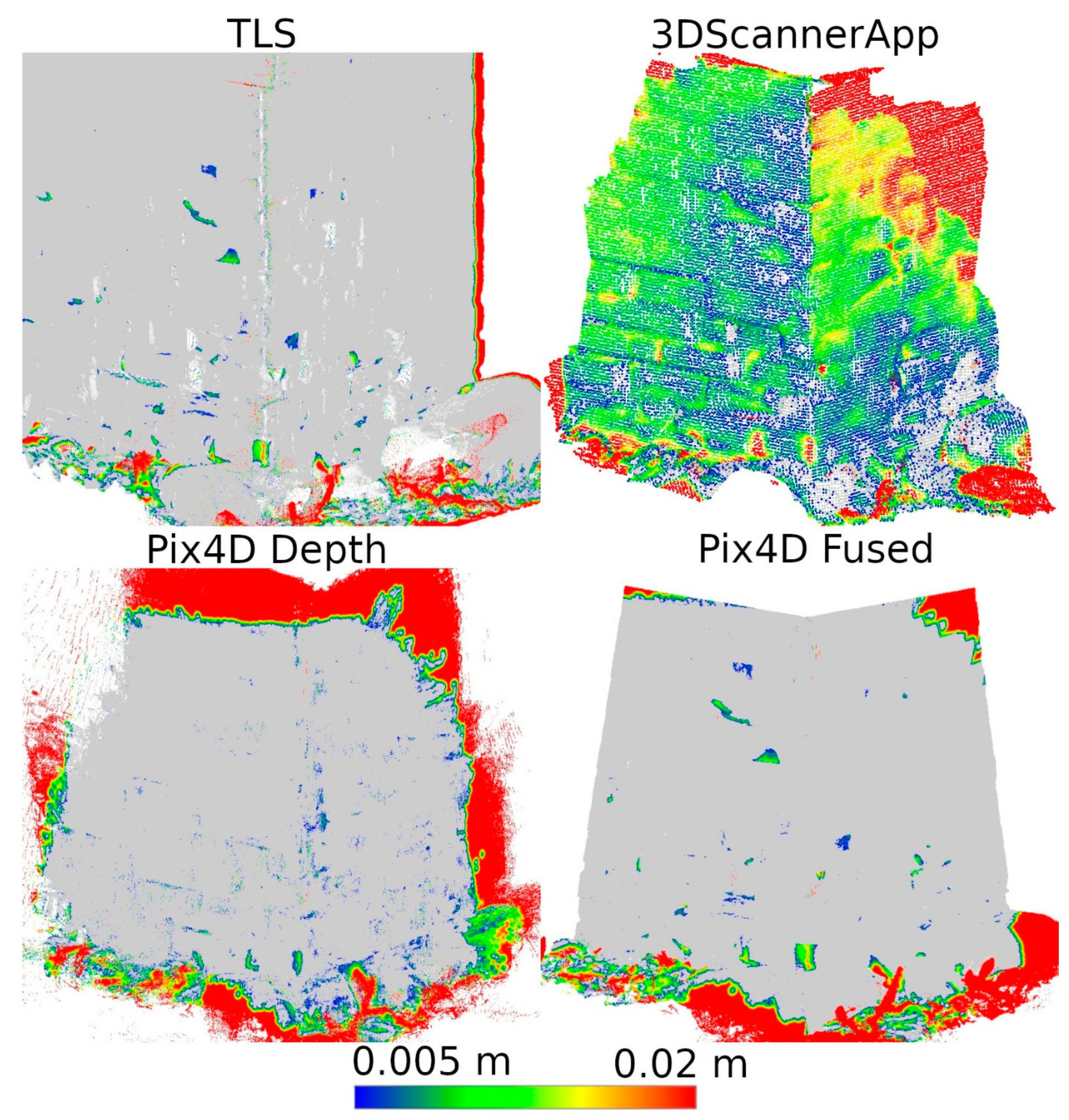

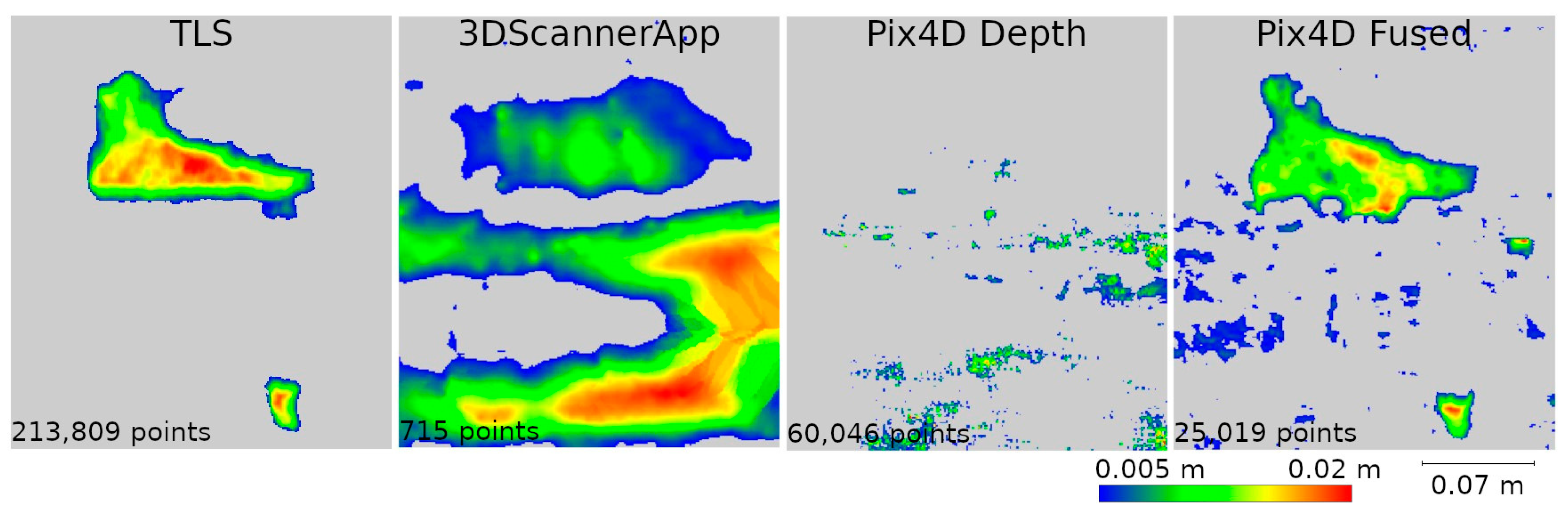

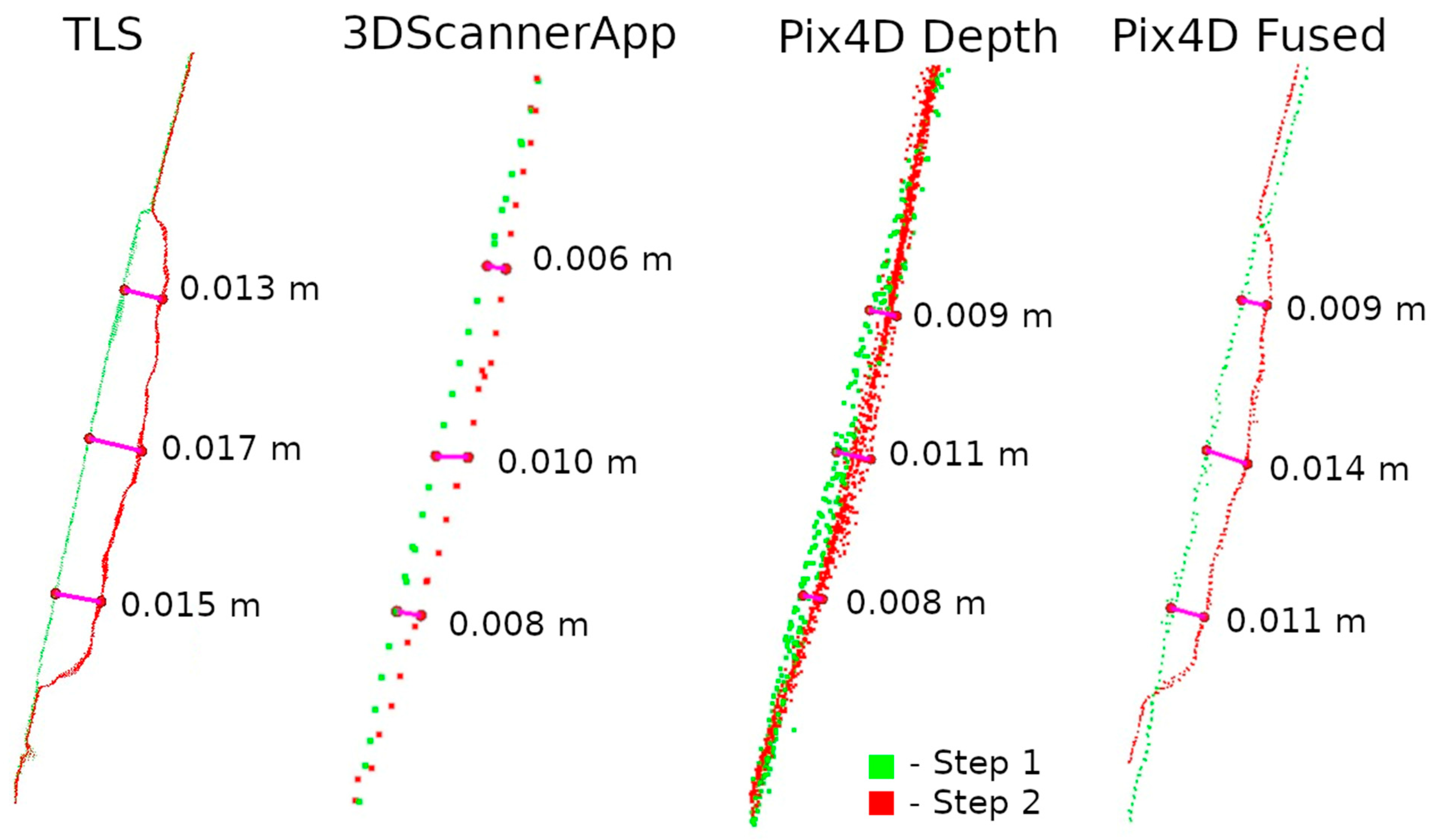

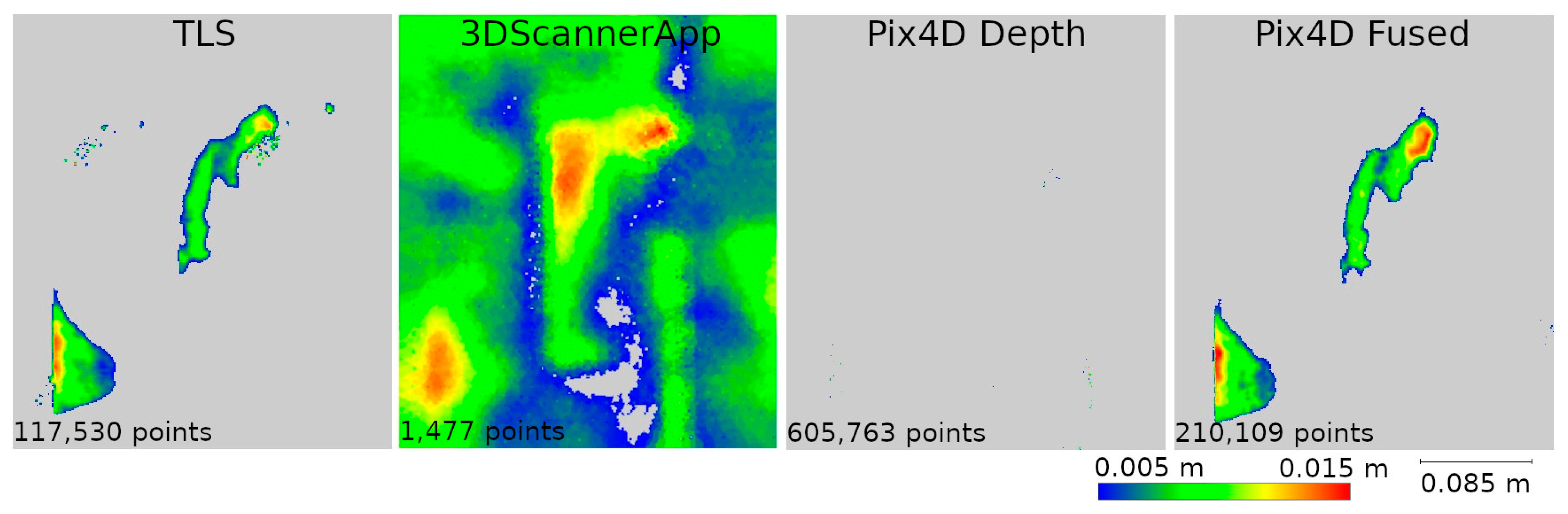

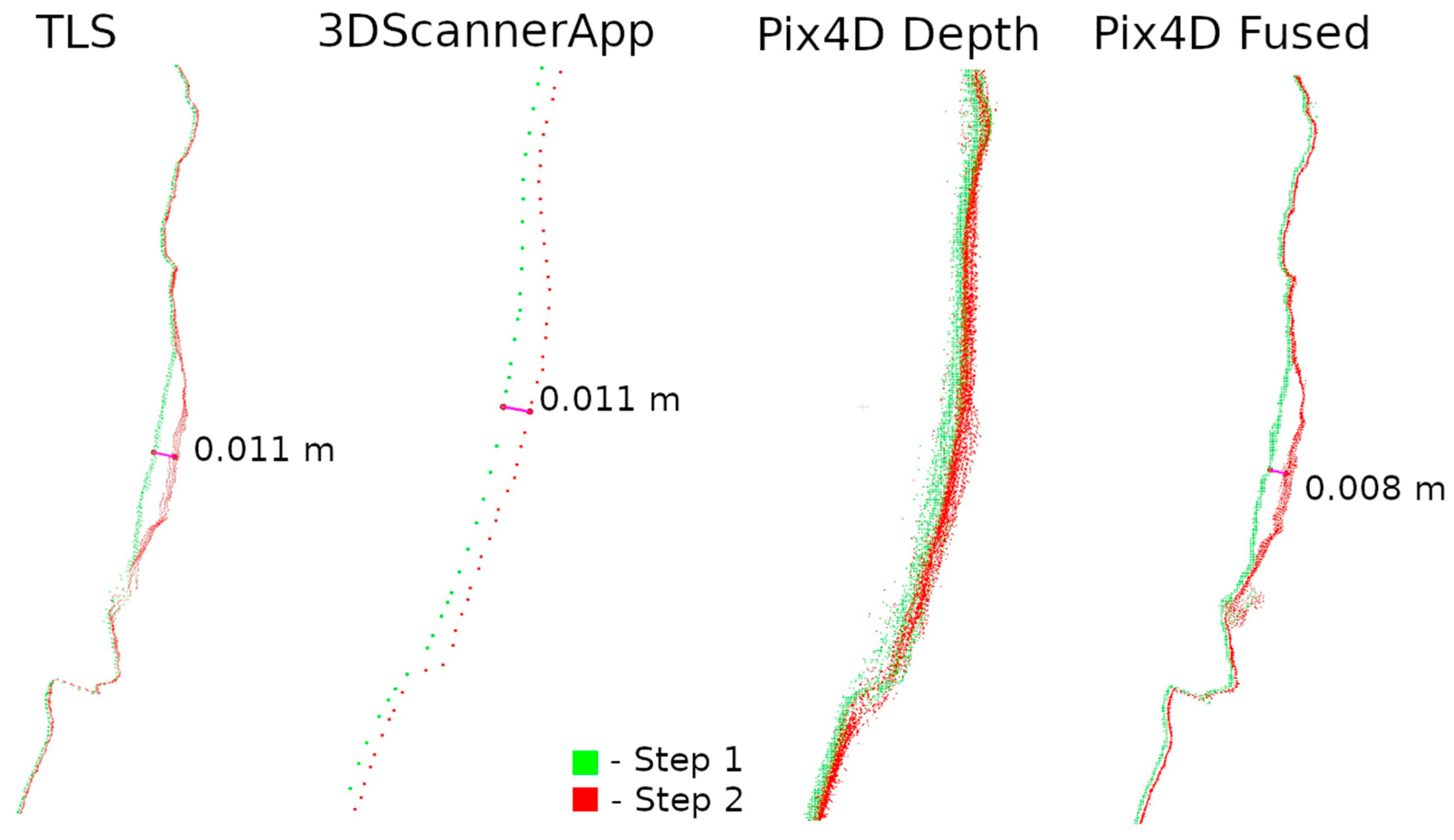

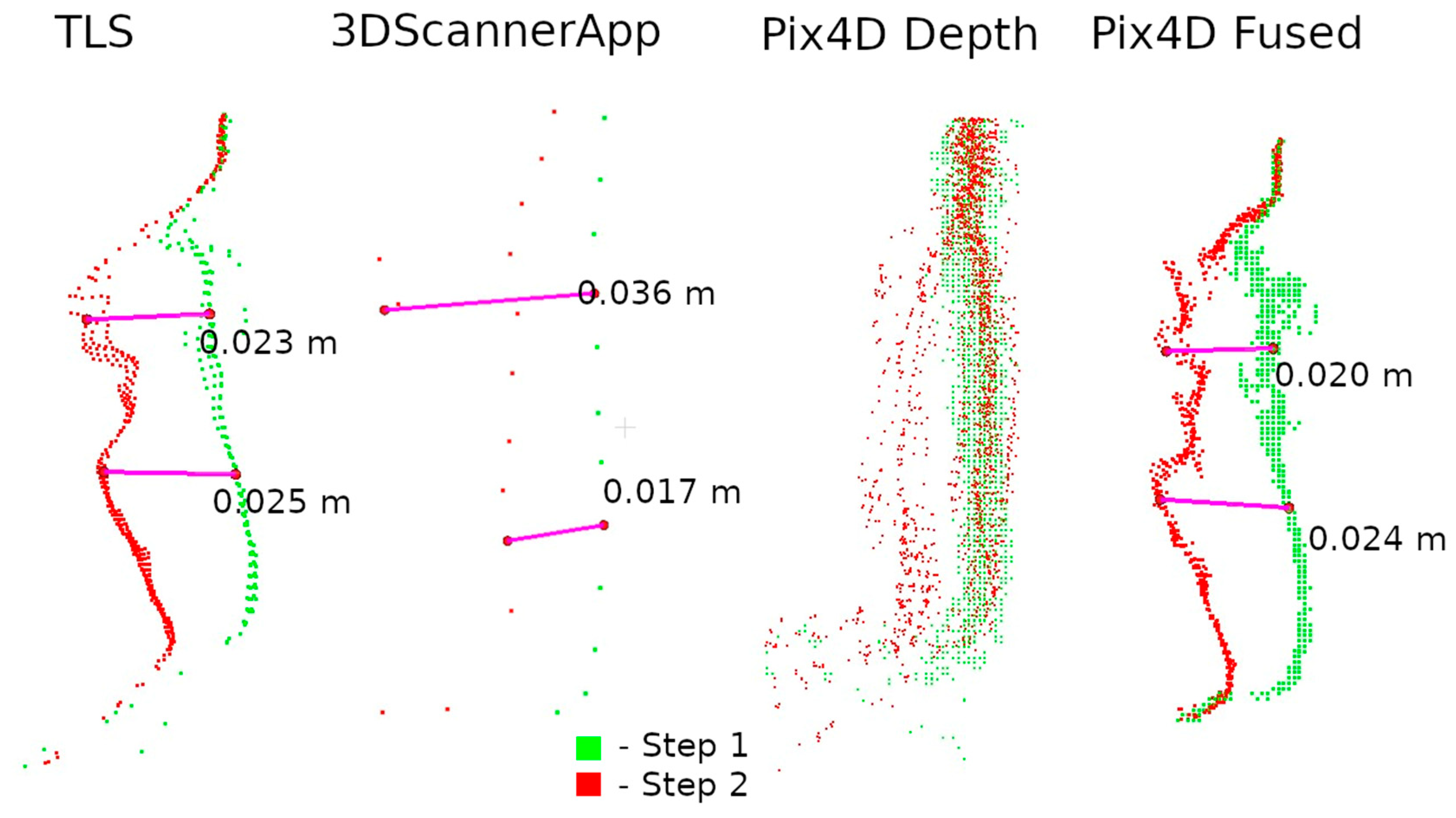

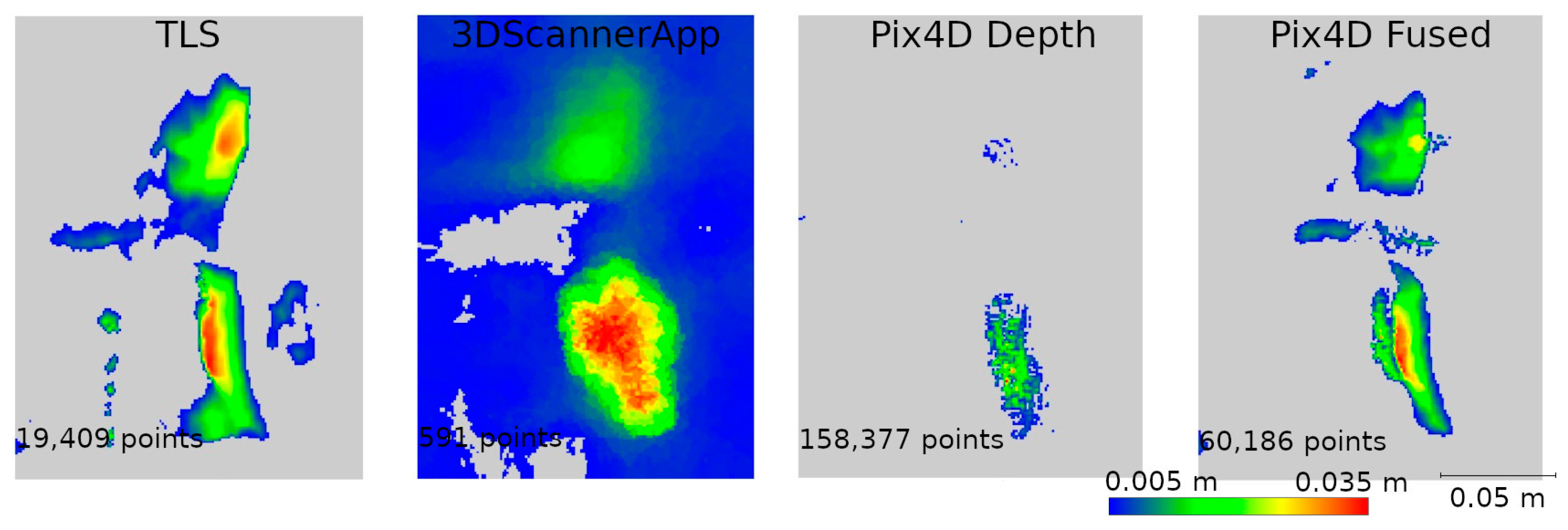

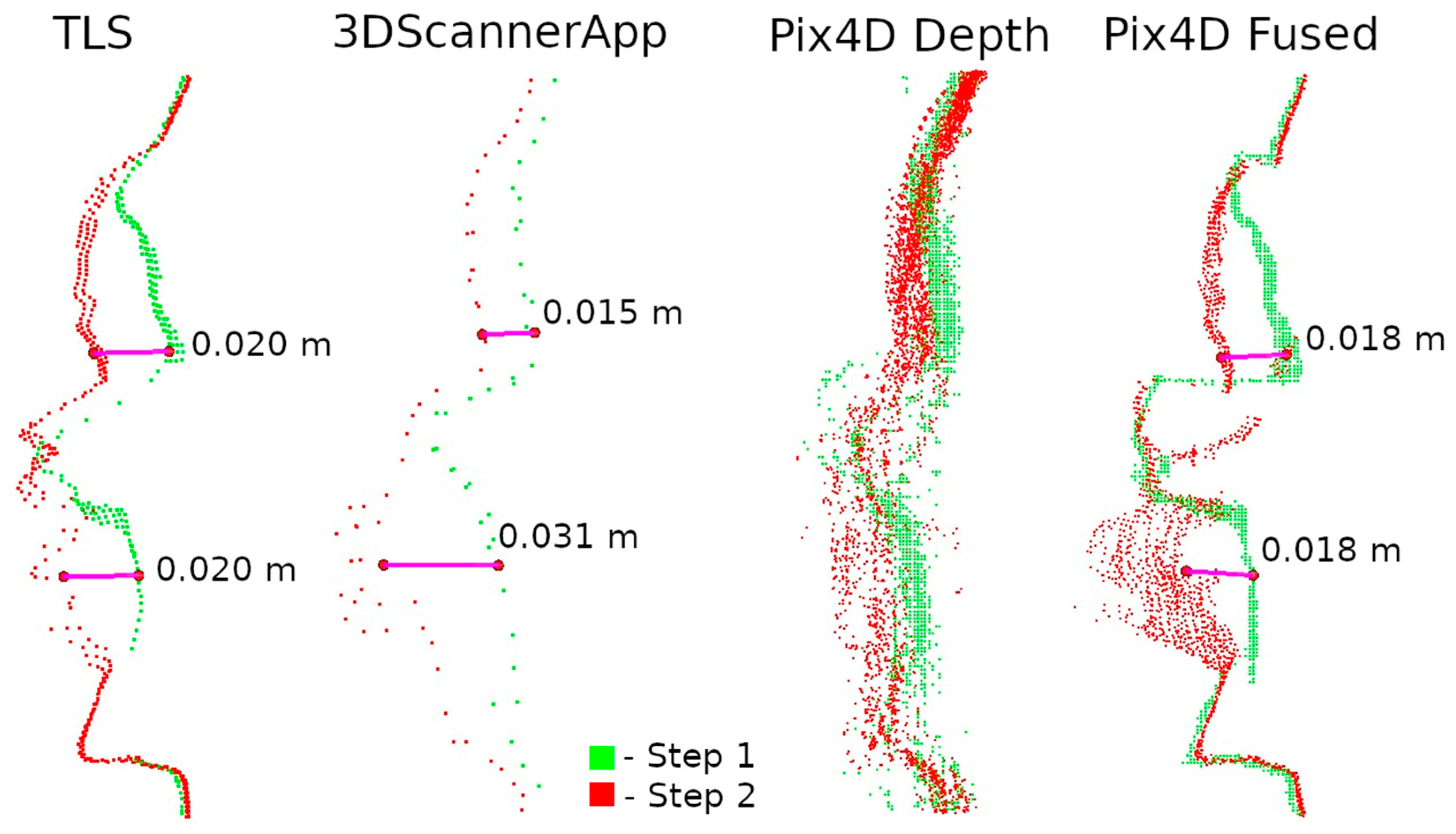

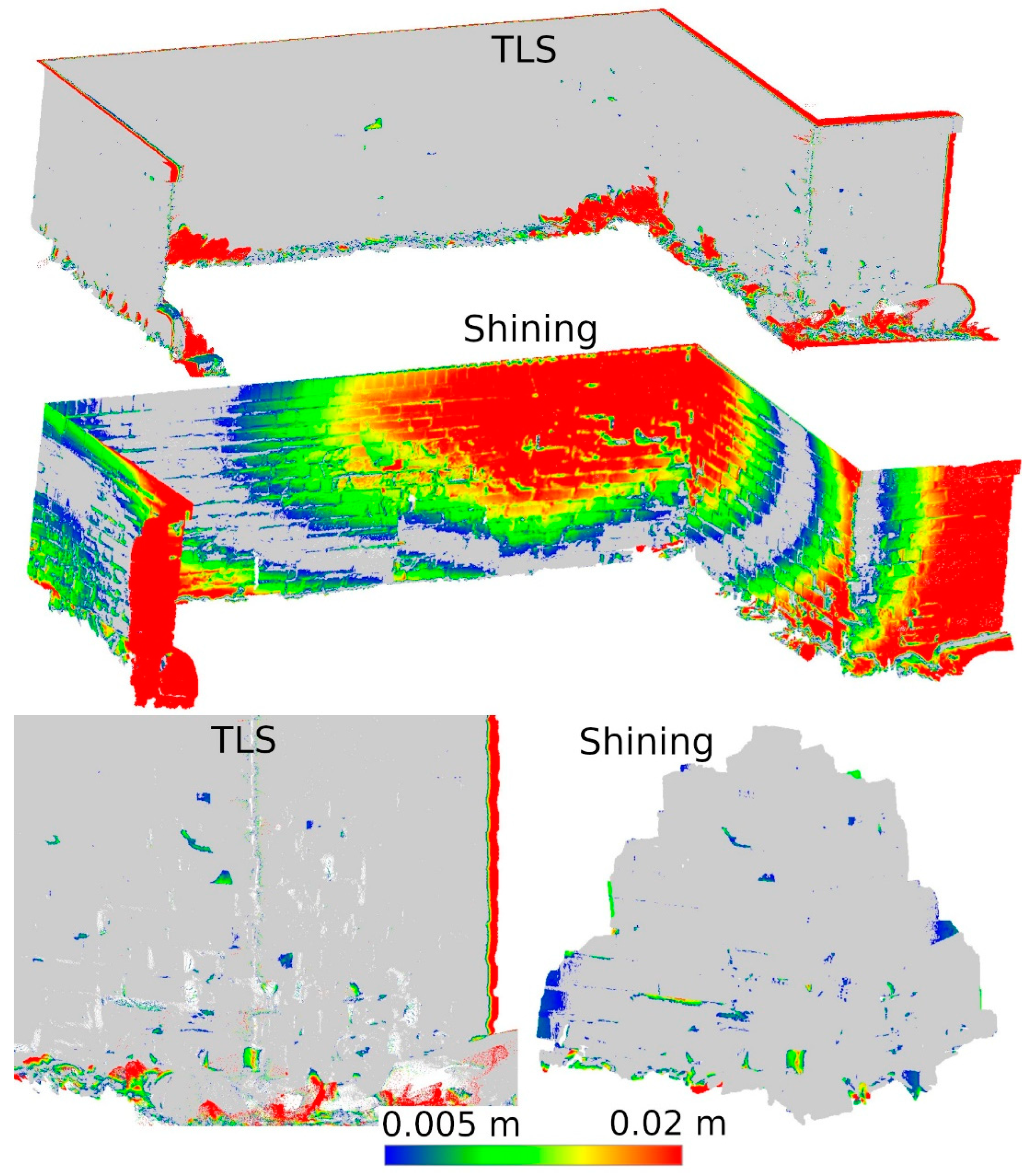

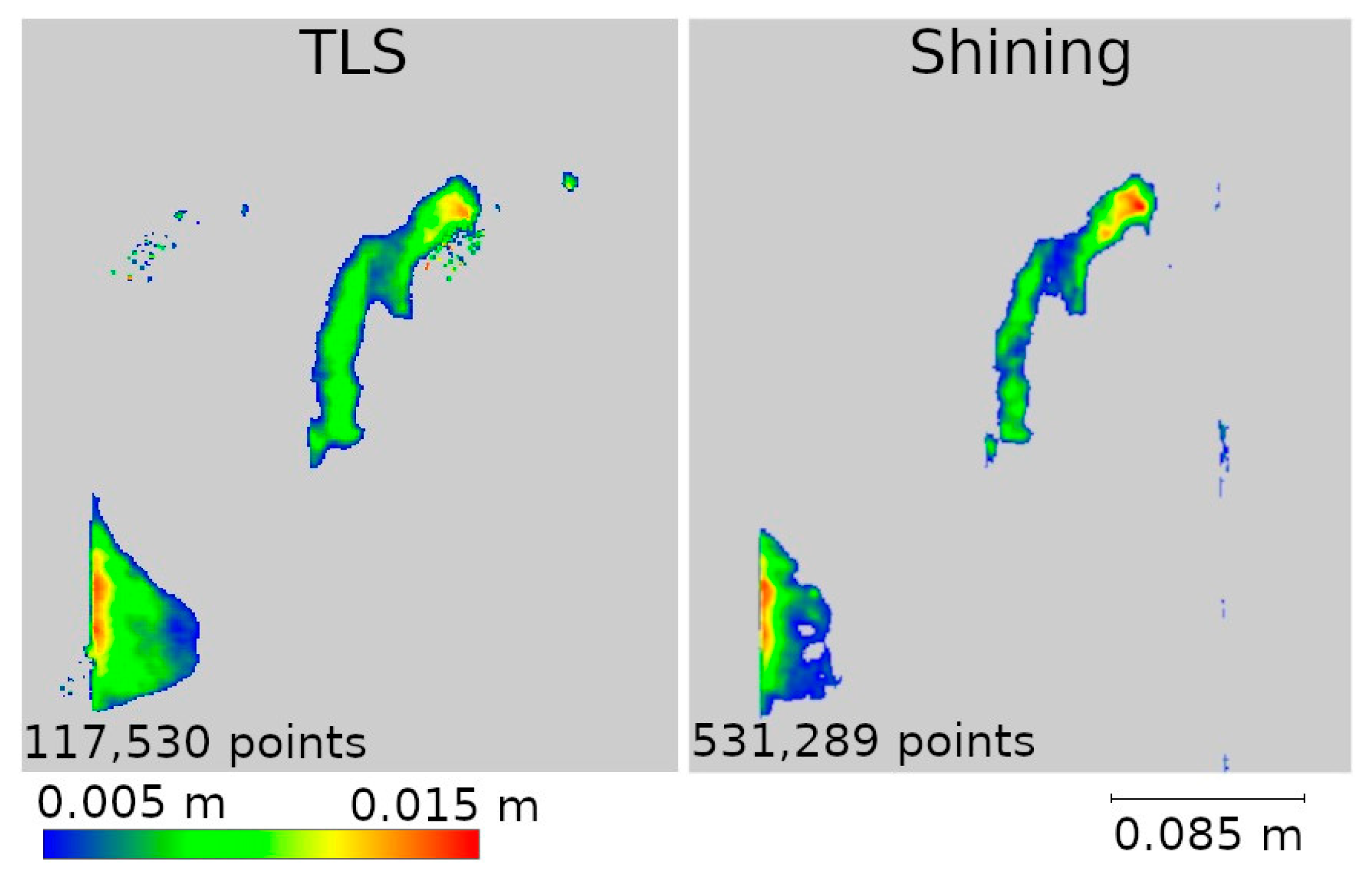

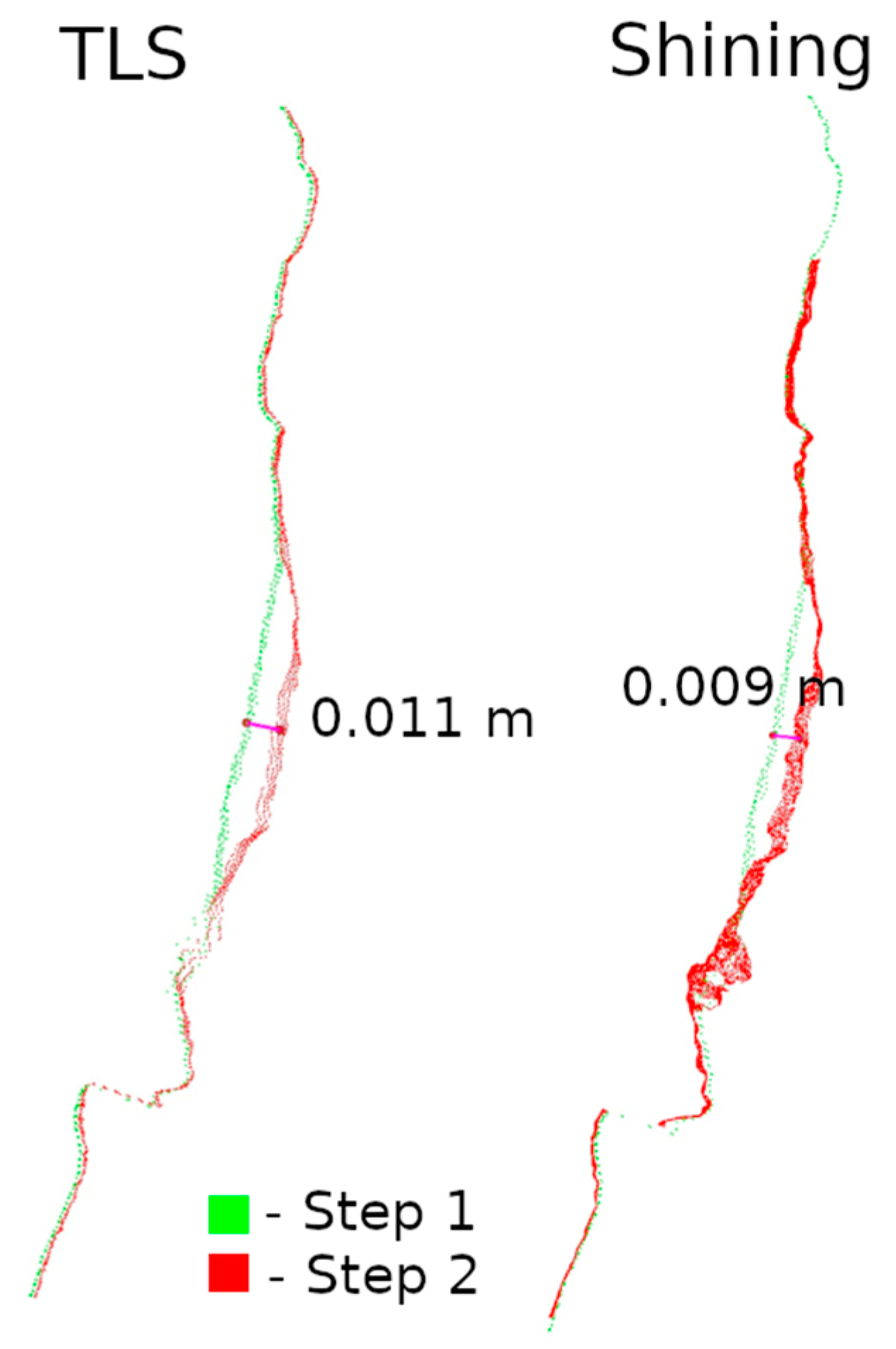

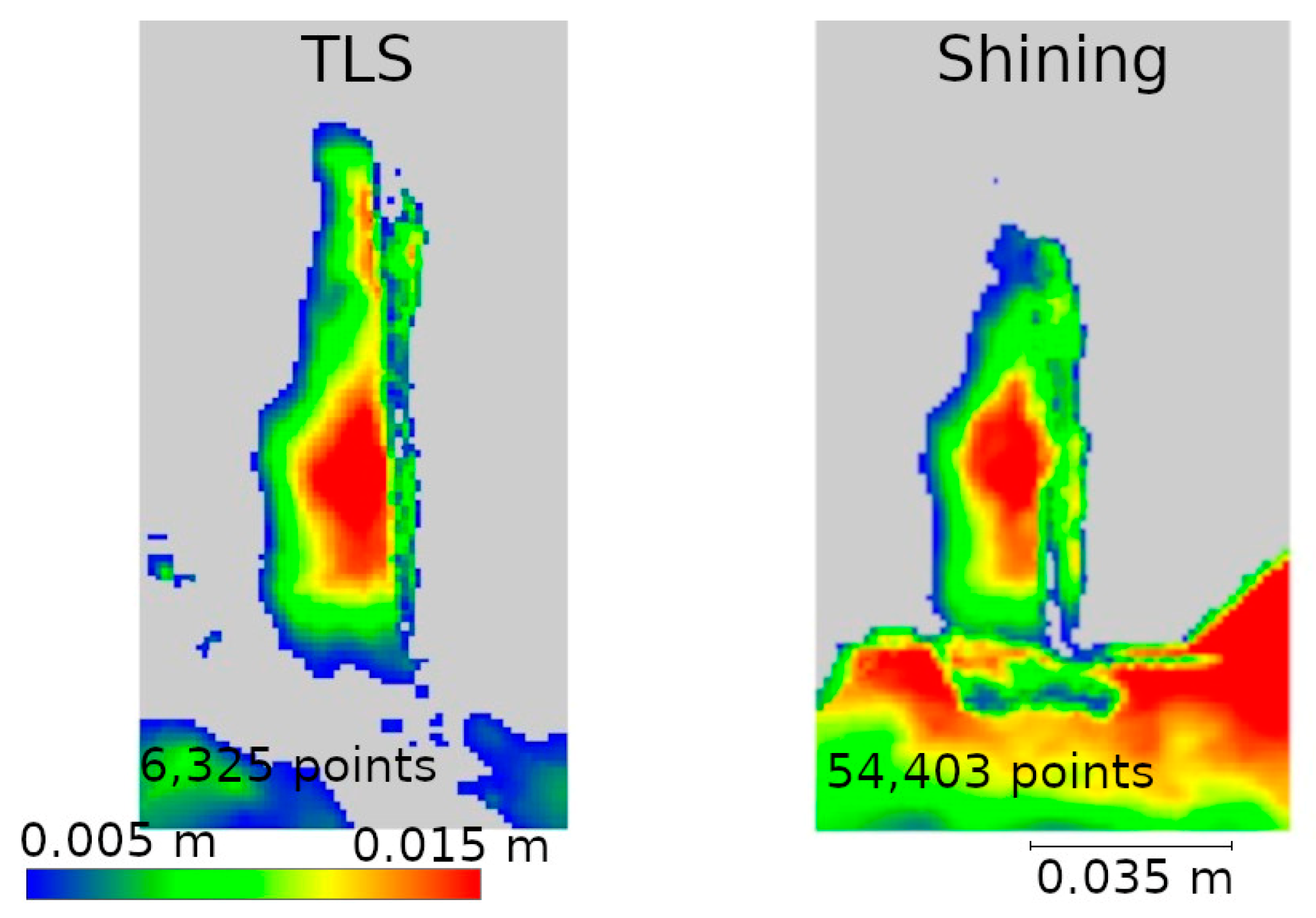

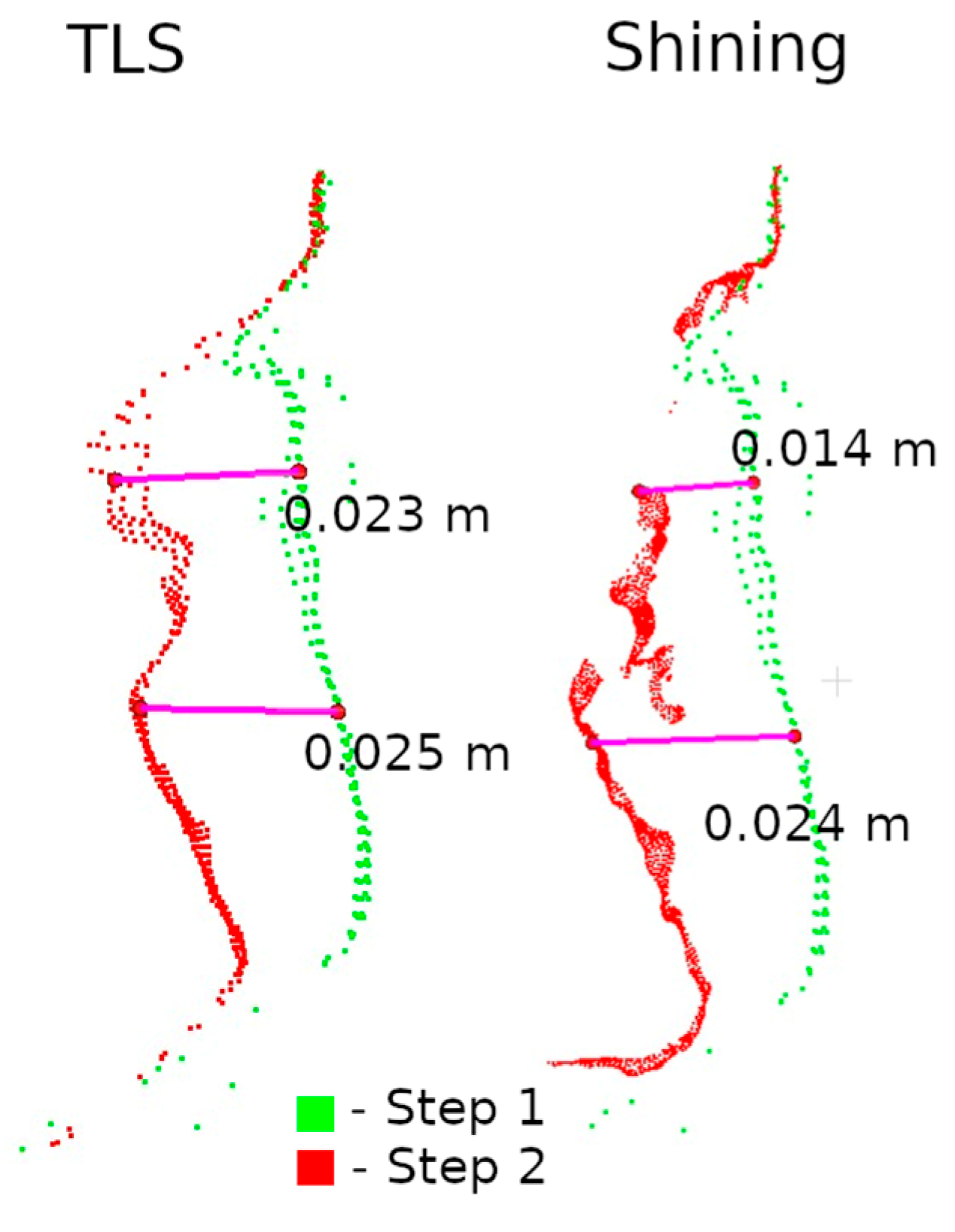

4.1. Stage 1

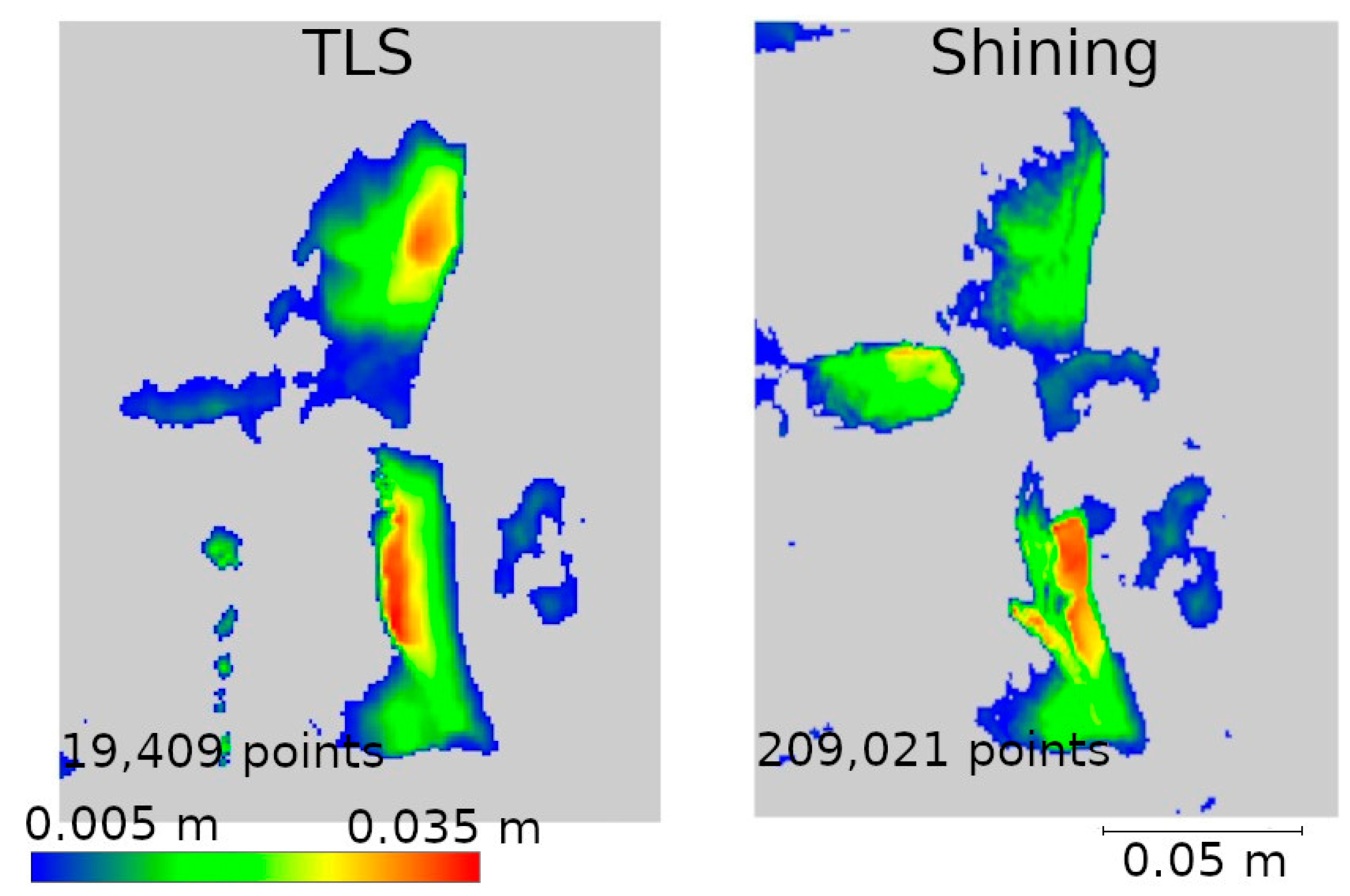

4.2. Stage 2

5. Discussion

- The size of the measured object;

- The shape of the measured object;

- The distance from which the measurement is made;

- The application used.

6. Conclusions

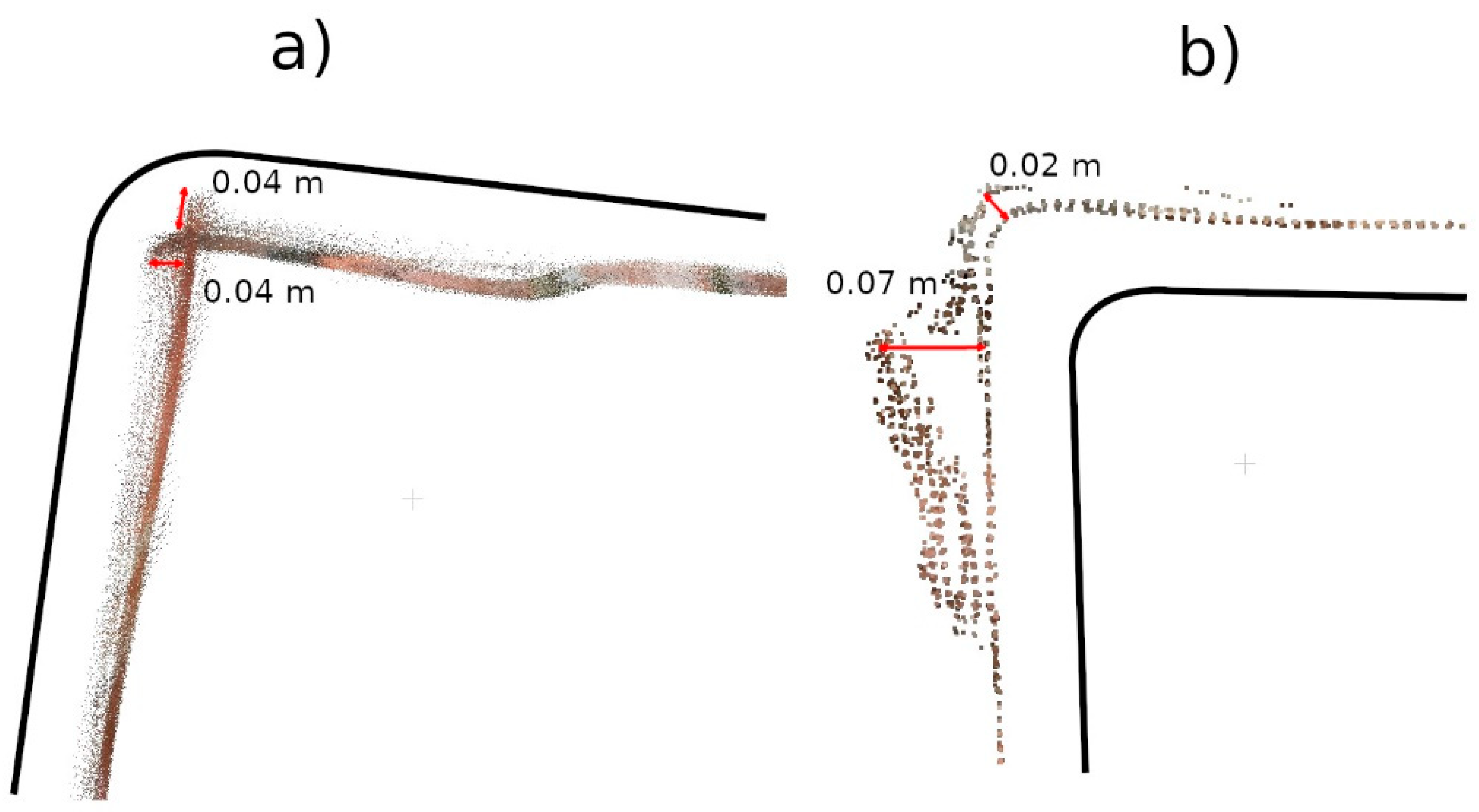

- Low-cost LiDAR scanners can be successfully used to detect cavities in historic architectural structures, enabling their continuous monitoring. The low cost and ease of use of this technology allow repeated measurements even at short time intervals. However, the effectiveness of the technology depends strongly on the size of the measured area. This work showed that it gives the best results on an area of about 1.5 m², allowing the detection of cavities at least 2 cm wide and at least 1 cm deep.

- The most precise results were obtained with the more advanced measurement methods. Both the data acquired with the Pix4DCatch application and subsequently processed in the Pix4Dmatic desktop application, and the data from Shining 3D’s FreeScan handheld scanner, showed an accuracy comparable to that of TLS. This demonstrates the significant development of LiDAR technology, which makes it possible to obtain accurate point clouds at a lower cost. However, free apps and mobile devices still cannot match the precision of TLS scanners.

- Owing to their mobility, the handheld scanner and the Pix4DCatch app prove to be a better solution when the inventory covers only a small part of the monument. In such applications, their mobility and relatively high precision make them more practical than stationary TLS systems.
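The size limits quoted in the first conclusion can be turned into a quick planning check. The sketch below is only an illustration: it treats the reported values (cavities at least 2 cm wide and 1 cm deep, best results on about 1.5 m²) as hard cut-offs, which is an assumption, and the names `Cavity`, `likely_detectable`, and `patches_needed` are hypothetical helpers, not part of any tool used in the study.

```python
import math
from dataclasses import dataclass

# Values quoted in the conclusions. Treating them as strict limits is an
# assumption made for this illustration only.
MIN_WIDTH_CM = 2.0
MIN_DEPTH_CM = 1.0
BEST_AREA_M2 = 1.5


@dataclass
class Cavity:
    """Hypothetical description of a cavity in a wall surface."""
    width_cm: float
    depth_cm: float


def likely_detectable(cavity: Cavity) -> bool:
    """Return True if the cavity meets both reported size limits."""
    return cavity.width_cm >= MIN_WIDTH_CM and cavity.depth_cm >= MIN_DEPTH_CM


def patches_needed(wall_area_m2: float, patch_area_m2: float = BEST_AREA_M2) -> int:
    """Number of scan patches of about 1.5 m² needed to cover a wall area.

    Overlap between neighbouring patches is ignored, so this is a lower bound.
    """
    if wall_area_m2 <= 0 or patch_area_m2 <= 0:
        raise ValueError("areas must be positive")
    return math.ceil(wall_area_m2 / patch_area_m2)


if __name__ == "__main__":
    print(likely_detectable(Cavity(width_cm=2.5, depth_cm=1.2)))
    print(likely_detectable(Cavity(width_cm=1.5, depth_cm=3.0)))
    print(patches_needed(4.0))
```

Such a check would only help decide whether a low-cost scan is worth attempting; it says nothing about the accuracy actually achieved on a given surface.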

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| | <1 cm | 1–2 cm | 2–3 cm | 3–4 cm | 4–5 cm | 5–6 cm | 6–7 cm | 7–8 cm | 8–9 cm | 9–10 cm | 10–15 cm | 15–20 cm | 20–25 cm | 25–30 cm | 30–35 cm | 35–40 cm | 40–45 cm | >45 cm |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 3dScannerApp | 27.72% | 18.79% | 9.88% | 9.06% | 7.73% | 7.79% | 3.71% | 2.02% | 1.77% | 1.35% | 2.54% | 1.75% | 1.36% | 1.20% | 1.03% | 1.07% | 0.97% | 0.25% |
| Pix4D Captured | 26.50% | 16.71% | 11.39% | 8.93% | 8.03% | 6.14% | 5.22% | 4.58% | 2.89% | 1.78% | 5.89% | 1.00% | 0.46% | 0.21% | 0.12% | 0.06% | 0.05% | 0.03% |
| Pix4D Depth | 50.03% | 36.51% | 8.93% | 0.68% | 0.32% | 0.27% | 0.20% | 0.21% | 0.20% | 0.19% | 0.68% | 0.48% | 0.39% | 0.33% | 0.28% | 0.16% | 0.10% | 0.06% |
| Pix4D Fused | 52.45% | 31.57% | 10.52% | 1.30% | 0.51% | 0.39% | 0.32% | 0.25% | 0.21% | 0.17% | 0.62% | 0.44% | 0.35% | 0.27% | 0.29% | 0.15% | 0.10% | 0.07% |

| | <1 cm | <2 cm | <3 cm | <4 cm | <5 cm | <6 cm | <7 cm | <8 cm | <9 cm | <10 cm | <15 cm | <20 cm | <25 cm | <30 cm | <35 cm | <40 cm | <45 cm | >45 cm |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 3dScannerApp | 27.72% | 46.52% | 56.40% | 65.45% | 73.19% | 80.98% | 84.69% | 86.71% | 88.49% | 89.83% | 92.38% | 94.13% | 95.48% | 96.68% | 97.71% | 98.78% | 99.75% | 100.00% |
| Pix4D Captured | 26.50% | 43.20% | 54.59% | 63.52% | 71.55% | 77.69% | 82.92% | 87.50% | 90.38% | 92.16% | 98.05% | 99.05% | 99.51% | 99.73% | 99.85% | 99.91% | 99.97% | 100.00% |
| Pix4D Depth | 50.03% | 86.53% | 95.46% | 96.14% | 96.46% | 96.73% | 96.93% | 97.14% | 97.34% | 97.52% | 98.20% | 98.68% | 99.08% | 99.40% | 99.68% | 99.84% | 99.94% | 100.00% |
| Pix4D Fused | 52.45% | 84.02% | 94.54% | 95.84% | 96.35% | 96.74% | 97.06% | 97.31% | 97.52% | 97.70% | 98.32% | 98.76% | 99.11% | 99.38% | 99.67% | 99.82% | 99.93% | 100.00% |
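The cumulative percentages appear to be running sums of the per-bin percentages in the first table of this appendix. The following minimal sketch checks that reading for the 3dScannerApp row; the variable and function names are illustrative, and the small differences it reports are most likely rounding in the published values.

```python
from itertools import accumulate

# Per-bin shares (%) for the 3dScannerApp row, copied from the binned table.
scanner_app_bins = [
    27.72, 18.79, 9.88, 9.06, 7.73, 7.79, 3.71, 2.02, 1.77,
    1.35, 2.54, 1.75, 1.36, 1.20, 1.03, 1.07, 0.97, 0.25,
]

# Cumulative shares (%) for the same row, copied from the cumulative table.
scanner_app_published = [
    27.72, 46.52, 56.40, 65.45, 73.19, 80.98, 84.69, 86.71, 88.49,
    89.83, 92.38, 94.13, 95.48, 96.68, 97.71, 98.78, 99.75, 100.00,
]


def cumulative_shares(bins):
    """Running sum of per-bin percentages, rounded to two decimals."""
    return [round(total, 2) for total in accumulate(bins)]


cumulative = cumulative_shares(scanner_app_bins)

# Largest gap between the recomputed and the published cumulative values.
max_gap = max(abs(a - b) for a, b in zip(cumulative, scanner_app_published))

if __name__ == "__main__":
    print(cumulative)
    print(f"largest difference from the published row: {max_gap:.2f} percentage points")
```

The recomputed row agrees with the published one to within a few hundredths of a percentage point, which is consistent with the cumulative table having been derived from unrounded bin shares.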

| | <1 mm | <2 mm | <3 mm | <4 mm | <5 mm | <6 mm | <7 mm | <8 mm | <9 mm | <10 mm | <15 mm | <20 mm | <25 mm | <30 mm | <35 mm | <40 mm | <45 mm | >45 mm |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 3DScannerApp D | 3.48% | 12.04% | 21.48% | 29.32% | 37.54% | 43.81% | 50.46% | 55.86% | 61.37% | 65.38% | 81.69% | 93.50% | 99.29% | 99.61% | 99.76% | 99.87% | 99.94% | 100.00% |
| 3DScannerApp M | 10.41% | 37.82% | 56.76% | 71.16% | 80.86% | 86.73% | 90.76% | 93.40% | 94.93% | 96.04% | 98.30% | 99.15% | 99.52% | 99.72% | 99.88% | 99.98% | 100.00% | 100.00% |
| Pix4D Captured D | 1.91% | 5.93% | 10.79% | 14.31% | 19.01% | 22.64% | 27.80% | 31.80% | 37.30% | 41.57% | 66.95% | 84.44% | 93.39% | 96.53% | 97.62% | 97.94% | 98.13% | 100.00% |
| Pix4D Captured M | 9.12% | 34.76% | 52.82% | 67.91% | 78.00% | 85.12% | 90.31% | 93.48% | 95.61% | 97.09% | 99.36% | 99.79% | 99.93% | 99.98% | 100.00% | 100.00% | 100.00% | 100.00% |
| Pix4D Depth D | 14.78% | 40.32% | 60.95% | 73.61% | 83.29% | 88.81% | 92.91% | 95.20% | 96.87% | 97.81% | 99.55% | 99.87% | 99.94% | 99.98% | 99.99% | 100.00% | 100.00% | 100.00% |
| Pix4D Depth M | 16.34% | 52.32% | 74.49% | 86.19% | 92.94% | 96.31% | 97.85% | 98.74% | 99.21% | 99.45% | 99.87% | 99.96% | 99.99% | 100.00% | 100.00% | 100.00% | 100.00% | 100.00% |
| Pix4D Fused D | 35.86% | 76.47% | 90.92% | 95.59% | 97.42% | 98.29% | 98.75% | 99.04% | 99.22% | 99.35% | 99.67% | 99.83% | 99.93% | 99.97% | 99.99% | 99.99% | 100.00% | 100.00% |
| Pix4D Fused M | 40.72% | 90.79% | 96.73% | 97.99% | 98.55% | 98.89% | 99.12% | 99.28% | 99.40% | 99.49% | 99.78% | 99.93% | 99.98% | 100.00% | 100.00% | 100.00% | 100.00% | 100.00% |
| Sitescape 1 | 3.13% | 9.22% | 14.21% | 18.65% | 22.63% | 26.17% | 29.31% | 32.09% | 35.23% | 37.60% | 51.49% | 67.43% | 85.61% | 97.06% | 99.48% | 99.78% | 99.87% | 100.00% |
| Sitescape 2 | 0.28% | 3.73% | 7.38% | 11.12% | 15.11% | 19.44% | 24.12% | 29.17% | 34.55% | 40.17% | 65.58% | 84.09% | 91.48% | 95.53% | 97.11% | 98.01% | 98.45% | 100.00% |
| Sitescape 3 | 1.35% | 4.71% | 7.94% | 11.20% | 14.56% | 19.82% | 23.49% | 27.28% | 31.19% | 35.23% | 57.86% | 75.88% | 88.21% | 95.38% | 97.94% | 98.81% | 99.20% | 100.00% |
| Sitescape 4 | 3.49% | 9.76% | 14.99% | 19.89% | 24.76% | 29.85% | 34.92% | 39.53% | 43.80% | 48.04% | 73.59% | 90.02% | 97.53% | 98.71% | 98.94% | 99.07% | 99.22% | 100.00% |
| Sitescape 5 | 3.09% | 12.83% | 22.95% | 34.12% | 50.18% | 62.06% | 72.90% | 81.75% | 89.42% | 92.88% | 98.66% | 99.52% | 99.78% | 99.89% | 99.94% | 99.96% | 99.98% | 100.00% |

| | <1 mm | <2 mm | <3 mm | <4 mm | <5 mm | <6 mm | <7 mm | <8 mm | <9 mm | <10 mm | <15 mm | <20 mm | <25 mm | <30 mm | <35 mm | <40 mm | <45 mm | >45 mm |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Shining D | 2.62% | 6.37% | 9.99% | 17.29% | 20.95% | 24.58% | 31.66% | 35.02% | 38.28% | 44.39% | 59.98% | 76.60% | 87.45% | 94.00% | 96.41% | 97.55% | 97.80% | 100.00% |
| Shining M | 24.64% | 59.53% | 76.59% | 85.07% | 89.06% | 92.03% | 94.04% | 95.36% | 96.53% | 97.30% | 98.69% | 99.17% | 99.57% | 99.81% | 99.90% | 99.93% | 99.95% | 100.00% |
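Rows of this kind can be produced from a list of per-point deviations. The sketch below assumes that each percentage is the share of points whose absolute deviation from a reference surface is below the column threshold; this is an interpretation, since the tables carry no caption here. The sample distances are invented purely for illustration and are not measurements from the study, and `cumulative_share` is a hypothetical helper name.

```python
from bisect import bisect_left

# Column thresholds (mm) matching the "<1 mm" to "<45 mm" headers above.
THRESHOLDS_MM = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 15, 20, 25, 30, 35, 40, 45]


def cumulative_share(distances_mm, thresholds_mm=THRESHOLDS_MM):
    """Percentage of points with |distance| strictly below each threshold."""
    if not distances_mm:
        raise ValueError("at least one distance is required")
    ordered = sorted(abs(d) for d in distances_mm)
    total = len(ordered)
    # bisect_left returns how many sorted values are strictly below the threshold.
    return [100.0 * bisect_left(ordered, t) / total for t in thresholds_mm]


# Invented per-point deviations in mm (illustrative values only).
sample_distances_mm = [0.4, -1.2, 2.5, 3.1, -7.8, 12.0, 18.5, 50.0]

shares = cumulative_share(sample_distances_mm)

if __name__ == "__main__":
    for threshold, share in zip(THRESHOLDS_MM, shares):
        print(f"<{threshold} mm: {share:.2f}%")
```

Sorting once and using a binary search per threshold keeps the computation cheap even for clouds with millions of points; a final 100% column would simply cover every point, including those beyond the last threshold.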


| Data | Number of Points |
|---|---|
| TLS | 191 |
| 3DScannerApp D | 3.5 |
| 3DScannerApp M | 4 |
| Pix4D Captured D | 4 |
| Pix4D Captured M | 62 |
| Pix4D Depth D | 86 |
| Pix4D Depth M | 1564 |
| Pix4D Fused D | 45 |
| Pix4D Fused M | 555 |
| Sitescape 1 | 258 |
| Sitescape 2 | 298 |
| Sitescape 3 | 215 |
| Sitescape 4 | 1508.8 |
| Sitescape 5 | 1224 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kędziorski, P.; Jagoda, M.; Tysiąc, P.; Katzer, J. An Example of Using Low-Cost LiDAR Technology for 3D Modeling and Assessment of Degradation of Heritage Structures and Buildings. Materials 2024, 17, 5445. https://doi.org/10.3390/ma17225445
